Scraping and storing data using Amazon Web Services (AWS)

My Problem

Task:

Collect data from WMATA API every 10 seconds for a while... months

Requirements:

- don't burn out laptop

- store data somewhere more accessible than hard drive

- ... but somewhere more suitable than Github

- little impedance to data science workflow

- free

- scalable

problem | solution | what | why | how

- access WMATA API with Python

- parse JSON and save as .csv

- ... all using my laptop

from python_wmata import Wmata

apikey = 'xxxxxxxxxyyyyyyyyzzzzzzz'
api = Wmata(apikey)
stopid = '1003043'
buspred = api.bus_prediction(stopid)

>>> buspred['StopName']
u'New Hampshire Ave + 7th St'

>>> buspred['Predictions'][1]
{u'TripID': u'6783517', u'VehicleID': u'6493', u'DirectionNum': u'1', u'RouteID': u'64', u'DirectionText': u'South to Federal Triangle', u'Minutes': 10}
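The "parse JSON and save as .csv" step could look roughly like the sketch below. It is an illustration rather than the original scraper: the function name `predictions_to_csv` and the column order are assumptions, and the sample dictionary only mirrors the response shown above. It is written for Python 3.

```python
import csv
import io

# Column order is an assumption; the keys match the prediction shown above.
FIELDS = ["RouteID", "DirectionNum", "DirectionText", "Minutes", "VehicleID", "TripID"]


def predictions_to_csv(buspred, out):
    """Write one CSV row per prediction to the file-like object `out`."""
    writer = csv.DictWriter(out, fieldnames=["StopName"] + FIELDS)
    writer.writeheader()
    for pred in buspred.get("Predictions", []):
        row = {"StopName": buspred.get("StopName", "")}
        row.update((name, pred.get(name, "")) for name in FIELDS)
        writer.writerow(row)


# Hypothetical sample shaped like the API response above.
sample = {
    "StopName": "New Hampshire Ave + 7th St",
    "Predictions": [
        {
            "TripID": "6783517",
            "VehicleID": "6493",
            "DirectionNum": "1",
            "RouteID": "64",
            "DirectionText": "South to Federal Triangle",
            "Minutes": 10,
        }
    ],
}

buffer = io.StringIO()
predictions_to_csv(sample, buffer)
csv_text = buffer.getvalue()
```

To write to disk instead, pass a file opened with `open(path, "w", newline="")` in place of the in-memory buffer.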


laptop | shiny | aws

Solution 1.0: laptop

Solution 1.1: shiny server

- runs 24/7

- re-code API calls in R

- use Shiny Server to collect and store data

- write to .csv or database

- feels like a hack

- only R

- works on ERI Shiny server, but not shinyapps.io


Solution 1.better: AWS

- runs 24/7 on EC2 Linux instance and writes to SimpleDB

- doesn't burn up or crash my laptop

- can run multiple scrapers using the same instance

- can be more dangerous ... in a good way

- scale

- maintain one version of code that runs on EC2 and locally

- configure EC2 instance w/ any software or packages

- free, for a while

- overhead


## Python code deployed to EC2 to collect and store WMATA bus data
import datetime
import time
import boto.sdb
from pytz import timezone
from python_wmata import Wmata

def buspred2simpledb(wmata, stopID, dom, freq=10, mins=10, wordy=True):
    ## poll the API every `freq` seconds for `mins` minutes
    stime = datetime.datetime.now(timezone('EST'))
    while datetime.datetime.now(timezone('EST')) < stime + datetime.timedelta(minutes=mins):
        time.sleep(freq)
        try:
            buspred = wmata.bus_prediction(stopID)
            for items in buspred['Predictions']:
                thetime = str(datetime.datetime.now(timezone('EST')))
                items.update({'time': thetime, 'stop': stopID})
                itemname = items['VehicleID'] + '_' + str(items['Minutes']) + '_' + thetime
                dom.put_attributes(itemname, items)  ## actually writing to simpledb domain
                if wordy:
                    print(items)
        except Exception as err:
            ## log the error and keep polling
            print([str(datetime.datetime.now()), 'some error...', str(err)])

## establish connection to WMATA API
api = Wmata('xxxyyyzzzmyWMATAkey')

## establish connection to AWS SimpleDB
conn = boto.sdb.connect_to_region(
    'us-east-1',
    aws_access_key_id='xxxyyyzzzzTHISisYOURaccessKEY',
    aws_secret_access_key='xxxyyyzzzzTHISisYOURsecretKEY'
)

## connect to simpleDB domain wmata2
mydom = conn.get_domain('wmata2')

## Letting it rip...scrape
buspred2simpledb(api, stopID='1003043', dom=mydom, freq=5, mins=60*24, wordy=True)
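Because the same code is meant to run both locally and on EC2, it can help to try the item-building step without touching AWS. The sketch below is one way to do that, under assumptions: `build_item` and `FakeDomain` are hypothetical names introduced here, and `FakeDomain` only imitates the `put_attributes(item_name, attributes)` call used above.

```python
import datetime


def build_item(prediction, stop_id, now):
    """Return (item_name, attributes) for one bus prediction."""
    stamp = str(now)
    attributes = dict(prediction)  # copy so the API response is not mutated
    attributes["time"] = stamp
    attributes["stop"] = stop_id
    item_name = "{}_{}_{}".format(attributes["VehicleID"], attributes["Minutes"], stamp)
    return item_name, attributes


class FakeDomain(object):
    """Hypothetical stand-in for a SimpleDB domain, for local dry runs only."""

    def __init__(self):
        self.items = {}

    def put_attributes(self, item_name, attributes):
        self.items[item_name] = attributes


# Hypothetical prediction with the same keys the scraper relies on.
prediction = {"VehicleID": "6493", "Minutes": 10, "RouteID": "64"}
now = datetime.datetime(2014, 9, 17, 8, 30, 43)

dom = FakeDomain()
name, attrs = build_item(prediction, "1003043", now)
dom.put_attributes(name, attrs)
```

Swapping `FakeDomain()` for the real domain object should leave the rest of the code unchanged, which is the point of keeping the write behind a single method call.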


Solution 1.better: AWS

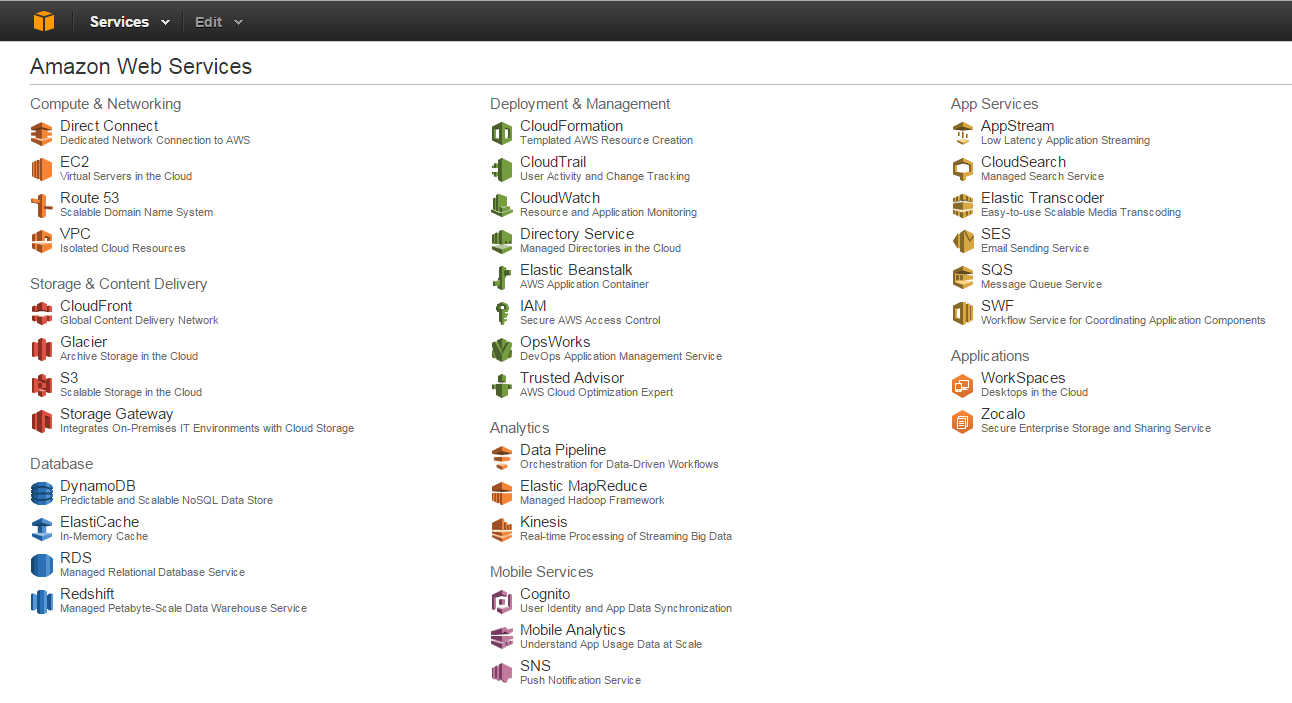

What is AWS

Simple DB

...a lot of things


everything | EC2 | S3 | SimpleDB | RDS

What is AWS

Elastic Compute Cloud (EC2)

- where you run your code

- pre-configured instances (virtual machines)

- Ubuntu, Linux, Windows, ...

- 1 year free


What is AWS

Elastic Compute Cloud (EC2)

- customize with software and packages (Python, R, etc)

- save image configuration & stop when not using it

- run Rstudio server or other web-based software from browser


What is AWS

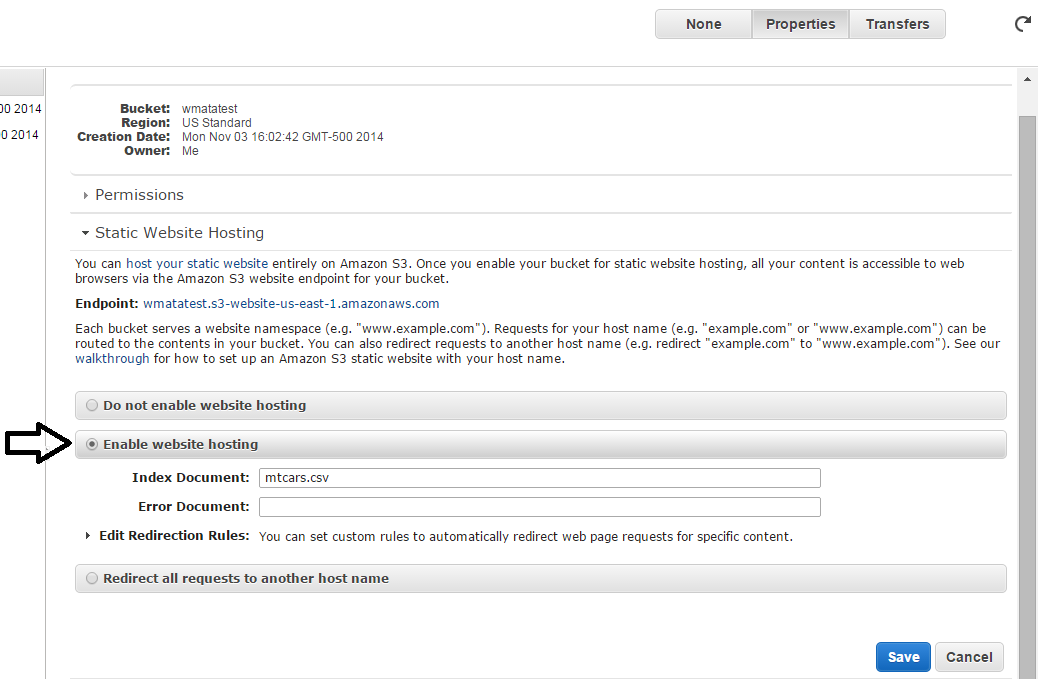

Simple Storage Service (S3)

- file system where you store stuff

- like Dropbox... which was itself built on S3

- this presentation stored on S3

- files accessible from a URL on the web

- 5GB free

## move data from AWS S3 to laptop using AWS CLI

aws s3 cp s3://yourbucket/scraped_data.csv C:/Users/abrooks/Documents/scraped_data.csv

## read data from file in R

df <- read.csv('http://wmatatest.s3-website-us-east-1.amazonaws.com/bus64_17Sep2014.txt', sep='|')

## an image from this presentation hosted on S3

https://s3.amazonaws.com/media-p.slid.es/uploads/ajb073/images/793870/awsservices.png

What is AWS

SimpleDB

- lightweight non-relational database

- SQL < SimpleDB < NoSQL

- flexible schema

- automatically indexes

- "SQLish" queries from API

- ... made easy with packages in Python (boto) and R

- ... even Chrome sdbNavigator extension

- easy to set up, easy to write into

- working with the API without a package is nontrivial

- extracting data wholesale into a table-like object is clunky
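As an illustration of the "SQLish" queries, the sketch below builds a select string that could be passed to boto's `dom.select(...)`. The helper names `quote_value` and `build_select` are hypothetical. It assumes attribute names are plain identifiers (letters, digits, underscores) and relies on SimpleDB storing every value as a string, so numbers are quoted too.

```python
def quote_value(value):
    """Quote a value for a SimpleDB select; embedded single quotes are doubled."""
    return "'" + str(value).replace("'", "''") + "'"


def build_select(domain, **equals):
    """Build "select * from `domain` where attr = 'value' and ..."."""
    query = "select * from `{}`".format(domain.replace("`", "``"))
    # Sorting keeps the generated query stable from run to run.
    conditions = [
        "{} = {}".format(attr, quote_value(val))
        for attr, val in sorted(equals.items())
    ]
    if conditions:
        query += " where " + " and ".join(conditions)
    return query


query_all = build_select("wmata2")
query_route = build_select("wmata2", RouteID="64", stop="1003043")
```

Since values are compared as strings, range conditions on numbers such as `Minutes` would need extra care (for example zero-padding when writing); this sketch only covers equality.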


What is AWS

Relational Database Service (RDS)

- traditional relational databases in the cloud: MySQL, Oracle, Microsoft SQL Server, or PostgreSQL

- tested:

  - Creating SQL Server instance: easy

  - Connecting through SQL Server Management Studio: easy

  - Inserting and extracting data: hard

  - API calls for data: not possible


Good for

standard data science configurations

- useful for communicating our tech specs to clients

- clients can use config files for EC2 instances to outfit the same system in their environment

- OR use pre-configured EC2 instances

- smoother model deployment

- scale up resources on demand

- host websites, web apps, software

- schedule refreshes and data extracts

- less time waiting for IT to approve software


standardization & deployment | personal projects

Good for

personal projects

- EC2 and S3 take the load off your laptop

- run your code overnight, over days, weeks, ...

- you can make mistakes

- just start another instance

- won't take down the company server

- learn more

- store your collected data publicly on S3 (or not)

- relatively simple set-up for the ~intermediate techie

- scale up projects as you need more resources


How to do it

- set up AWS account

  - free, but need credit card

  - can use your regular amazon.com account

  - start with EC2

  - then S3

- download (if on Windows)

  - PuTTY:

    - PuTTYgen to generate keys

    - PuTTY to connect to instance

    - PSCP to move files from laptop to EC2

  - Amazon EC2 CLI tools

    - makes communication easy from laptop to AWS

first things first


start | EC2 | S3 | SimpleDB

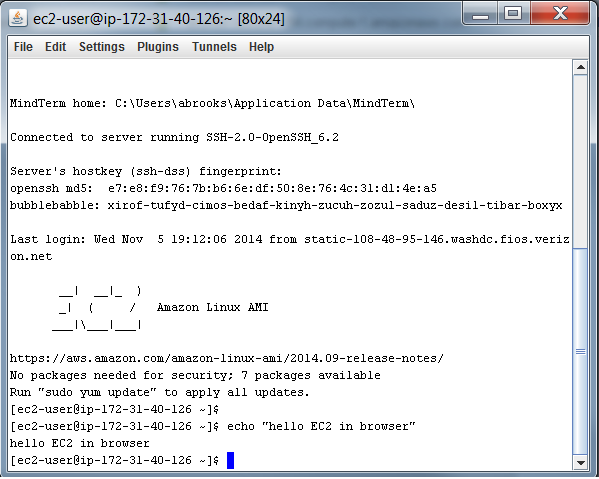

How to do it

- launch an instance

- connect to an instance

- authorize inbound SSH traffic to instance

- setting up keys is the hardest part

- only need one key pair (.pem)

- use PuTTYgen to convert .pem to .ppk

- same key can be used to launch multiple instances

EC2: getting started


How to do it

EC2: move code and data

- develop and test code locally

- push to EC2 with PuTTY PSCP

- move output from EC2 to laptop

## move your python script from laptop to EC2.

## directories with spaces need to be quoted with ""

## at the Windows command prompt...

pscp -r -i C:\yourfolder\yourAWSkey.ppk C:\yourfolder\yourscript.py ec2-user@ec2-xx-xxx-xx-xxx.compute-1.amazonaws.com:/home/ec2-user/yourscript.py
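If the transfer is scripted from Python rather than typed at the prompt, passing the arguments as a list sidesteps the quoting issue with spaces in directory names. This is a sketch under assumptions: `pscp_command` and `upload` are hypothetical helpers, the paths are placeholders, and `pscp` must be on the PATH for `upload` to work.

```python
import subprocess


def pscp_command(key_file, local_path, host, remote_path, user="ec2-user"):
    """Argument list equivalent to: pscp -i key_file local_path user@host:remote_path"""
    return ["pscp", "-i", key_file, local_path, "{}@{}:{}".format(user, host, remote_path)]


def upload(key_file, local_path, host, remote_path):
    """Run pscp; each list element is passed as one argument, spaces included."""
    return subprocess.call(pscp_command(key_file, local_path, host, remote_path))


# Placeholder paths with a space in the folder name; no manual quoting needed.
cmd = pscp_command(
    r"C:\my folder\yourAWSkey.ppk",
    r"C:\my folder\yourscript.py",
    "ec2-xx-xxx-xx-xxx.compute-1.amazonaws.com",
    "/home/ec2-user/yourscript.py",
)
```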


How to do it

EC2: using screen

## in your EC2 Linux instance...
## create a new screen and run script
screen
python noisy_script1.py

## press ctrl+a, then d, to detach from the screen (the script keeps running)

## list screens
screen -list

## get back into a screen by its ID (1001 here)
screen -r 1001


How to do it

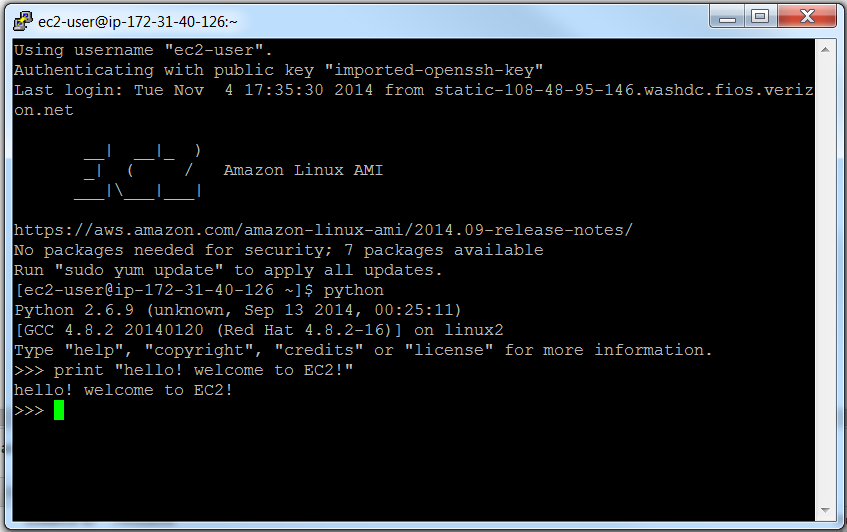

EC2: configure instance with Python packages

- Python comes loaded on EC2 Linux instance

- install packages at the Linux command line

- OR save configuration as a .sh file and use to configure new instances if you frequently spin up new instances

## at the EC2 Linux command line...

## get pip

wget https://bootstrap.pypa.io/get-pip.py

## run pip install script

sudo python get-pip.py

## install pytz package

sudo pip install pytz

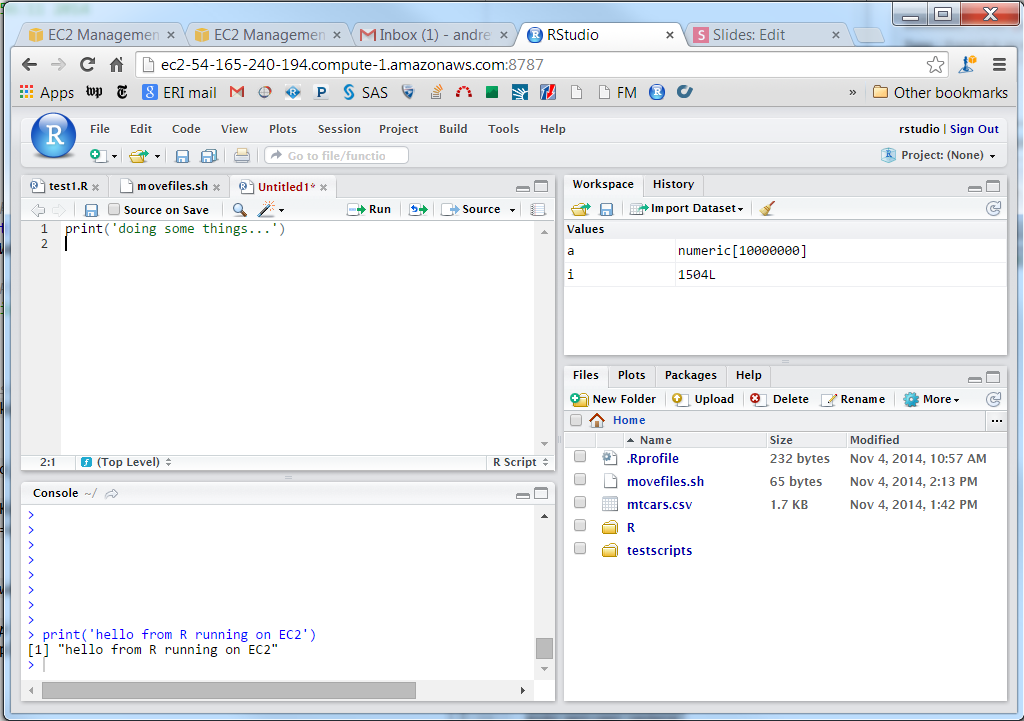

How to do it

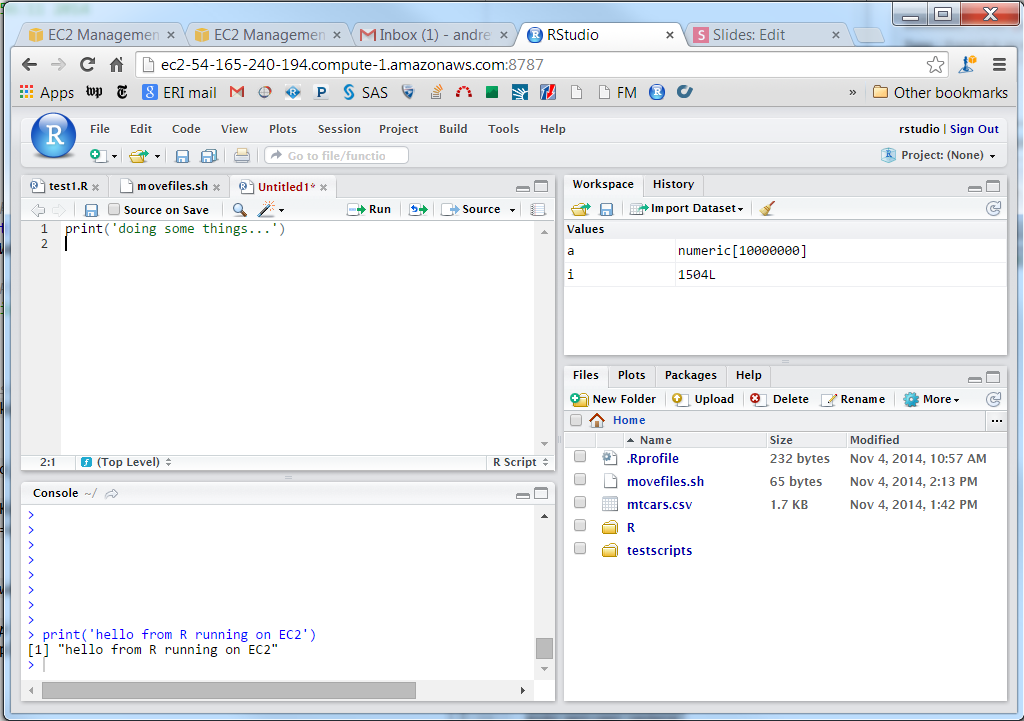

EC2: configure instance with your favorite tools

- Configure an EC2 instance with RStudio

- run interactively from web browser or in EC2 shell

- http://ec2-xx-xxx-xxx-xxx.compute-1.amazonaws.com:8787/

# this tutorial shows how to install RStudio on an Ubuntu server

# http://randyzwitch.com/r-amazon-ec2/

# at the EC2 Ubuntu instance command line...

#Create a user, home directory and set password

sudo useradd rstudio

sudo mkdir /home/rstudio

sudo passwd rstudio

sudo chmod -R 0777 /home/rstudio

#Update all files from the default state

sudo apt-get update

sudo apt-get upgrade

#Add CRAN mirror to custom sources.list file using vi

sudo vi /etc/apt/sources.list.d/sources.list

#Add the following line (or your favorite CRAN mirror).

# press i to enter insert mode so vi lets you type

# press esc, then ZZ, to save the file and exit

deb http://cran.rstudio.com/bin/linux/ubuntu precise/

#Update files to use CRAN mirror

#Don't worry about error message

sudo apt-get update

#Install latest version of R

#Install without verification

sudo apt-get install r-base


How to do it

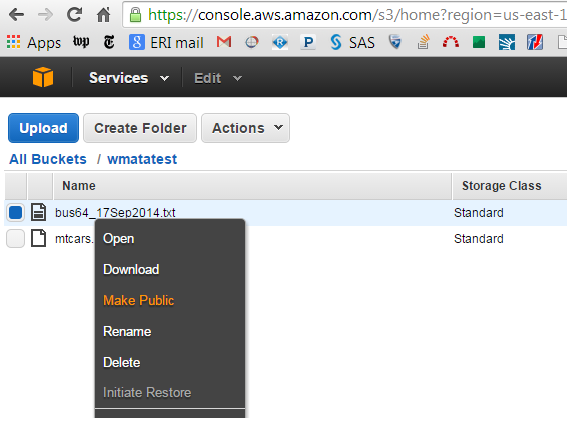

S3: getting started

- sign up for S3 account

- create a bucket

- add objects to bucket using online AWS interface


How to do it

## R code
df <- read.csv('http://wmatatest.s3-website-us-east-1.amazonaws.com/bus64_17Sep2014.txt', sep='|')
head(df)

                        time Minutes VehicleID             DirectionText RouteID  TripID
1 2014-09-17 08:30:43.537000       7      2145 South to Federal Triangle      64 6783581
2 2014-09-17 08:30:43.537000      20      7209 South to Federal Triangle      64 6783582
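The same pipe-delimited layout can be read in Python with the standard `csv` module. The sketch below works on an inline sample so it runs without network access. It assumes the file starts with a header row naming the columns shown in the `head(df)` output, which is a guess about the file's exact layout, and `read_pipe_delimited` is a hypothetical helper name.

```python
import csv
import io

# Inline sample; the header row is an assumption about the file's layout.
sample_text = (
    "time|Minutes|VehicleID|DirectionText|RouteID|TripID\n"
    "2014-09-17 08:30:43.537000|7|2145|South to Federal Triangle|64|6783581\n"
    "2014-09-17 08:30:43.537000|20|7209|South to Federal Triangle|64|6783582\n"
)


def read_pipe_delimited(text):
    """Parse pipe-delimited text into a list of dicts, one per row."""
    reader = csv.DictReader(io.StringIO(text), delimiter="|")
    rows = []
    for row in reader:
        row["Minutes"] = int(row["Minutes"])  # everything else stays a string
        rows.append(row)
    return rows


rows = read_pipe_delimited(sample_text)
```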


How to do it

S3: move files to/from S3 and EC2/laptop

- install AWS command line tools

- configure AWS command line interface

- AWS S3 web interface

- S3Browser - user friendly GUI, like WinSCP

### at the windows command prompt (or EC2 instance command line)

aws s3 cp mtcars.csv s3://yourbucket/mtcars.csv

### R code to configure RStudio Ubuntu instance to enable system calls from within R.

# only need to do this once

Sys.setenv(AWS_ACCESS_KEY_ID = 'xxXXyyYYzzZZYourAWSaccessKEY')

Sys.setenv(AWS_SECRET_ACCESS_KEY = 'xxxxYYYYYYYZZZZZZourAWSprivateKEY')

# move data to S3 storage

system('aws s3 cp mtcars.csv s3://wmatatest/mtcars.csv --region us-east-1')
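A Python counterpart to the R `Sys.setenv` plus `system` approach might look like the sketch below. The helper names are hypothetical; the only things taken from the slides are the `aws s3 cp ... --region` command and the two credential environment variables. Running `copy_to_s3` requires the AWS CLI to be installed and on the PATH.

```python
import os
import subprocess


def s3_cp_command(source, destination, region="us-east-1"):
    """Argument list for: aws s3 cp source destination --region region"""
    return ["aws", "s3", "cp", source, destination, "--region", region]


def env_with_keys(access_key, secret_key, base_env=None):
    """Copy of the environment with the two AWS credential variables set."""
    env = dict(os.environ if base_env is None else base_env)
    env["AWS_ACCESS_KEY_ID"] = access_key
    env["AWS_SECRET_ACCESS_KEY"] = secret_key
    return env


def copy_to_s3(local_path, bucket, key, access_key, secret_key):
    """Run the copy with credentials passed only to the child process."""
    cmd = s3_cp_command(local_path, "s3://{}/{}".format(bucket, key))
    return subprocess.call(cmd, env=env_with_keys(access_key, secret_key))


cmd = s3_cp_command("mtcars.csv", "s3://wmatatest/mtcars.csv")
env = env_with_keys("placeholder-access-key", "placeholder-secret-key", base_env={})
```

Passing the keys through `env` keeps them out of the command line and out of the parent process's environment.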


How to do it

S3: access your data/files from R

- connecting directly to S3 from R is not well supported

- copying files (data) from S3 to your local computer is easy, but kind of defeats the purpose of S3

- ability to access data directly from URL

- let me know if you find better methods!


How to do it

SimpleDB: getting started

- Sign up for SimpleDB & get keys

- GUIs

- I found scratchpad buggy

- better luck with the sdbNavigator Chrome extension

- GUI useful to get started and explore, but most work will be done using boto in Python, or similar.


How to do it

SimpleDB : access with Python

- install boto Python package

- boto simpledb tutorial

- create domains (tables)

- add items (attributes/rows/records)

- retrieving data

- easy to write into

- clunky to extract from in bulk

import boto.sdb

conn = boto.sdb.connect_to_region(

'us-east-1',

aws_access_key_id='xxxxyyyzzzzYourAWSaccessKEY',

aws_secret_access_key='xxxxyyyyzzzzYourAWSsecretKEY'

)

## establish connection to domain

dom = conn.get_domain('wmata2')

## select all items/rows from domain (table)

query = 'select * from `wmata2`'

rs = dom.select(query)

## rs is a cursor: items are only fetched as you iterate over it, which is slow (45 seconds for 27,000 items).
mins = []
for i in rs:
    mins.append(i['Minutes'])
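To get from the result set to something table-like, one option is to pull a fixed set of columns out of every item in a single pass. The sketch below assumes each item behaves like a dict (as far as I know, boto's SimpleDB items are dict subclasses); `items_to_rows` is a hypothetical helper and the sample items are made up to match the attributes written by the scraper.

```python
def items_to_rows(items, columns, missing=""):
    """Return a header row followed by one row per item, in `columns` order."""
    rows = [list(columns)]
    for item in items:
        # SimpleDB has a flexible schema, so an attribute may be absent.
        rows.append([item.get(col, missing) for col in columns])
    return rows


# Made-up items standing in for the result of dom.select(query).
fake_results = [
    {"VehicleID": "2145", "Minutes": "7", "RouteID": "64"},
    {"VehicleID": "7209", "Minutes": "20"},
]

table = items_to_rows(fake_results, ["VehicleID", "Minutes", "RouteID"])
```

The resulting list of rows can be handed to `csv.writer(...).writerows(table)`. It still iterates over every item, so it does not remove the speed cost noted above.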


How to do it

SimpleDB: access with R

- R support is limited:

- awsConnect - supported on Linux/OS X only

- RAmazonDBREST - no luck

- AWS.tools - no luck on Windows

- Could access API directly without package... a non-trivial task


Documentation

- official Amazon documentation is comprehensive and helpful

- Stack Overflow and blogs found through Googling helped fill gaps in the documentation specific to me and my computer

- HTML slides made with slides.com, the online editor for reveal.js... coincidentally also hosted on AWS S3