Cassandra @Appdata

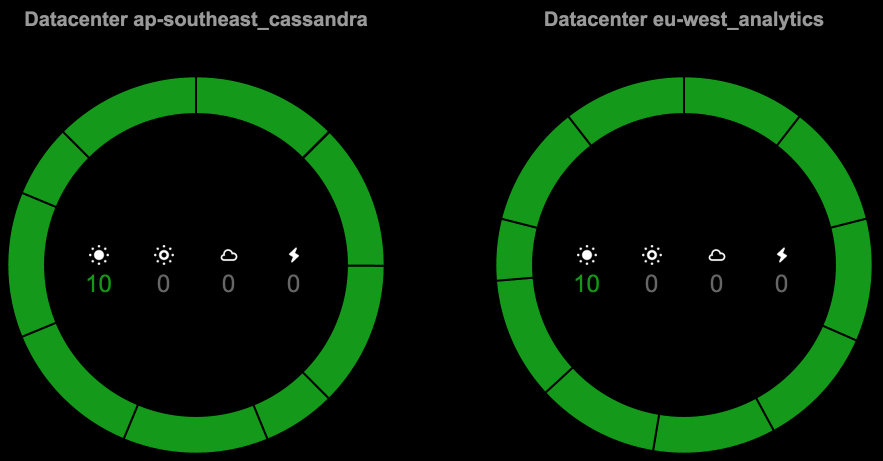

geography

cluster setup

Node: AWS c3.2xlarge | 15 GB RAM, 8 Cores | 2x60GB SSD drives

CoreOS, Docker, fleet/etcd

Java HEAP = 8GB | Commit log dir & Data dir @ separate phisical disks

Keyspace:

CREATE KEYSPACE packetdata WITH replication = {'class': 'NetworkTopologyStrategy', 'eu-west_analytics': 2, 'ap-southeast_cassandra' : 2};

PERFORMANCE benchmarks

[LIVE demo]

Results:

op rate : 5271

partition rate : 5271

row rate : 134434

latency mean : 51.3

latency median : 20.2

latency 95th percentile : 175.4

latency 99th percentile : 231.5

latency 99.9th percentile : 255.1

latency max : 2393.6

total gc count : 907

total gc mb : 601200

total gc time (s) : 233

avg gc time(ms) : 257

stdev gc time(ms) : 1333

Total operation time : 00:00:59

Improvement over 181 threadCount: 6%

Sleeping for 15s

Running with 406 threadCount

Running [insert] with 406 threads 1 minutes

probelms being solved

For our specific case of two regions (Europe and Asia) next parameters are appropriate and proved to be working:

- etcd `peer-heartbeat-interval: 1000`

- etcd `peer-election-timeout: 4000`

- fleet `etcd-request-timeout: 15`

[Cassandra timeout during write query at consistency ONE (1 replica were required but only 0 acknowledged the write)] Check the output of `cassandra-stress` for the line like [Using data-center name 'eu-west_analytics' for DCAwareRoundRobinPolicy]

Use `-node whitelist 52.16.151.33,52.16.119.105,52.16.53.144` [CASSANDRA-8313]

CASCADING CLUSTER FAILURE on container restart: SSTables went out of sync -> (re)Synchronization carousel RESOLVED: using host FS for storing commit logs and

Agents: until OpsCenter not created its specific keyspace agents fail to publish JMX data into it and OpsCenter fails to communicate with them. RESOLVED: Ansible Agents Installation (demo)

broadcast_address | broadcast_rpc_address | listen_address | rpc_address

SST compaction overhead -> switch to using SSD drives (default: slow EBS)

next steps

-

Spark JobServer

- Security (C* | Spark | etcd)

- Cassandra CF tuning

CREATE TABLE packets (

owner uuid,

...

PRIMARY KEY ((owner, ...), ...)

) WITH

bloom_filter_fp_chance=0.100000 AND

caching='KEYS_ONLY' AND

comment='' AND

dclocal_read_repair_chance=0.000000 AND

gc_grace_seconds=864000 AND

index_interval=128 AND

read_repair_chance=0.100000 AND

replicate_on_write='true' AND

default_time_to_live=0 AND

speculative_retry='99.0PERCENTILE' AND

memtable_flush_period_in_ms=0 AND

compaction={'class': 'SizeTieredCompactionStrategy'} AND

compression={'sstable_compression': 'LZ4Compressor'};