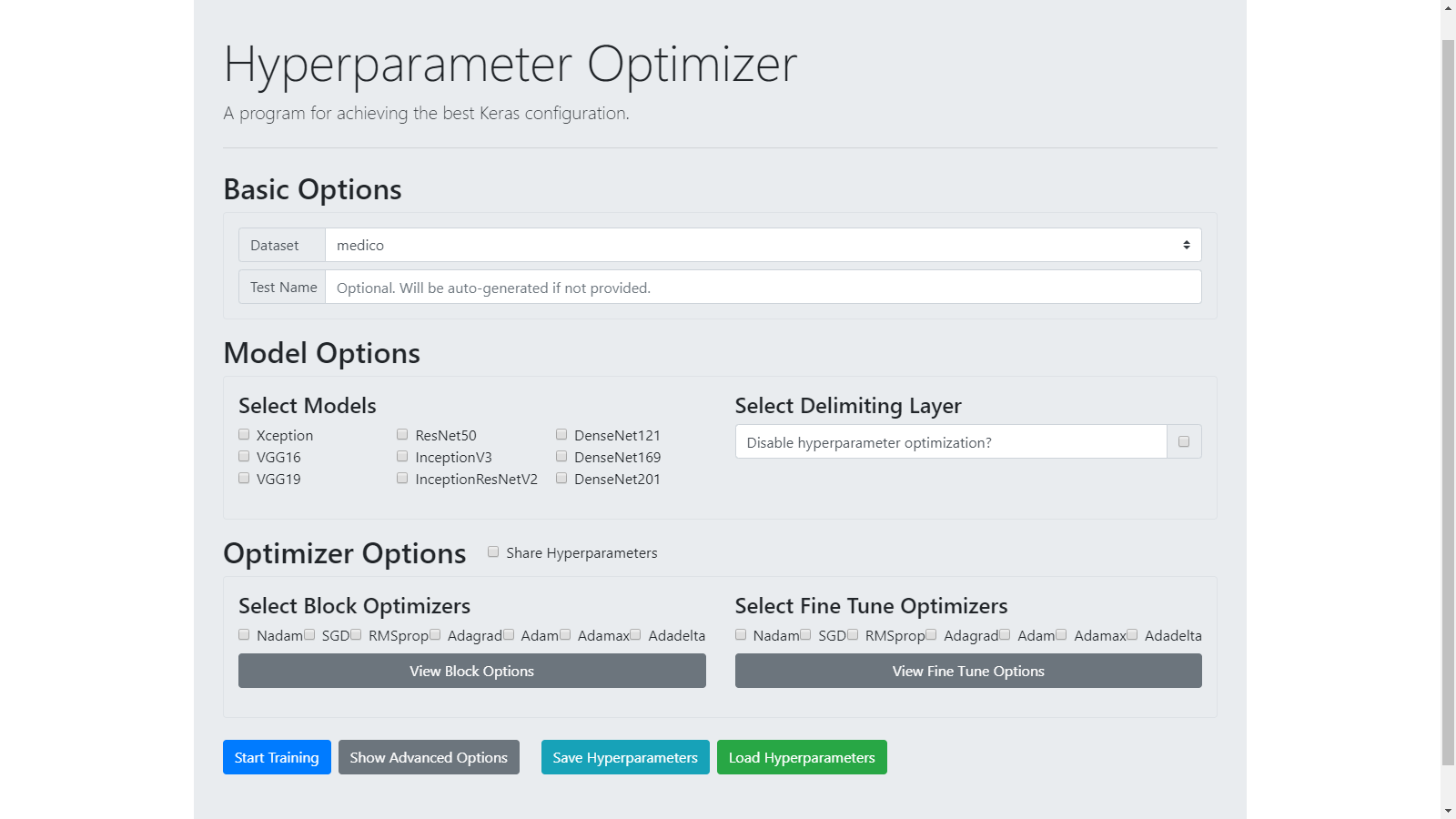

Automatic Hyperparameter Optimization in Keras for the MediaEval 2018 Medico Multimedia Task

Rune Johan Borgli

Overview

We use a work-in-progress system for automatic hyperparameter optimization called Saga.

We use transfer learning with models pre-trained on ImageNet.

We use Bayesian optimization for the hyperparameter optimization.

We make changes to the dataset to try to improve performance.
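The slides do not show how Saga performs Bayesian optimization, so the following is only a generic sketch of the idea: fit a Gaussian-process surrogate to the scores observed so far, then pick the next hyperparameter value by maximizing expected improvement. The objective `toy_score`, the search range for the learning rate, and all function names are hypothetical stand-ins; in practice each evaluation would be a full training run that returns a validation score.

```python
import math
import numpy as np


def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two 1-D arrays of points."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)


def gp_posterior(x_obs, y_obs, x_new, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    k_obs = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    k_cross = rbf_kernel(x_obs, x_new)
    mean = k_cross.T @ np.linalg.solve(k_obs, y_obs)
    var = 1.0 - np.sum(k_cross * np.linalg.solve(k_obs, k_cross), axis=0)
    return mean, np.sqrt(np.clip(var, 1e-12, None))


_erf = np.vectorize(math.erf)


def expected_improvement(mean, std, best):
    """Expected improvement over the best observed score (maximization)."""
    z = (mean - best) / std
    cdf = 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (mean - best) * cdf + std * pdf


def toy_score(log_lr):
    """Hypothetical stand-in for a validation score; peaks at lr = 1e-3."""
    return -(log_lr + 3.0) ** 2


def to_log_lr(u):
    """Map a unit-interval value to log10(lr) in the assumed range [-6, -1]."""
    return -6.0 + 5.0 * u


def optimize(n_iter=10):
    candidates = np.linspace(0.0, 1.0, 201)
    x_obs = np.array([0.0, 0.5, 1.0])  # fixed initial design
    y_obs = np.array([toy_score(to_log_lr(u)) for u in x_obs])
    for _ in range(n_iter):
        # Standardize scores so the zero-mean, unit-variance prior fits.
        y_norm = (y_obs - y_obs.mean()) / y_obs.std()
        mean, std = gp_posterior(x_obs, y_norm, candidates)
        ei = expected_improvement(mean, std, y_norm.max())
        u_next = candidates[np.argmax(ei)]
        x_obs = np.append(x_obs, u_next)
        y_obs = np.append(y_obs, toy_score(to_log_lr(u_next)))
    best = np.argmax(y_obs)
    return to_log_lr(x_obs[best]), y_obs[best]


best_log_lr, best_score = optimize()
```

The appeal of this approach for hyperparameter search is that each evaluation is expensive, so spending a little computation on a surrogate model to choose the next trial can save many training runs compared with grid or random search.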

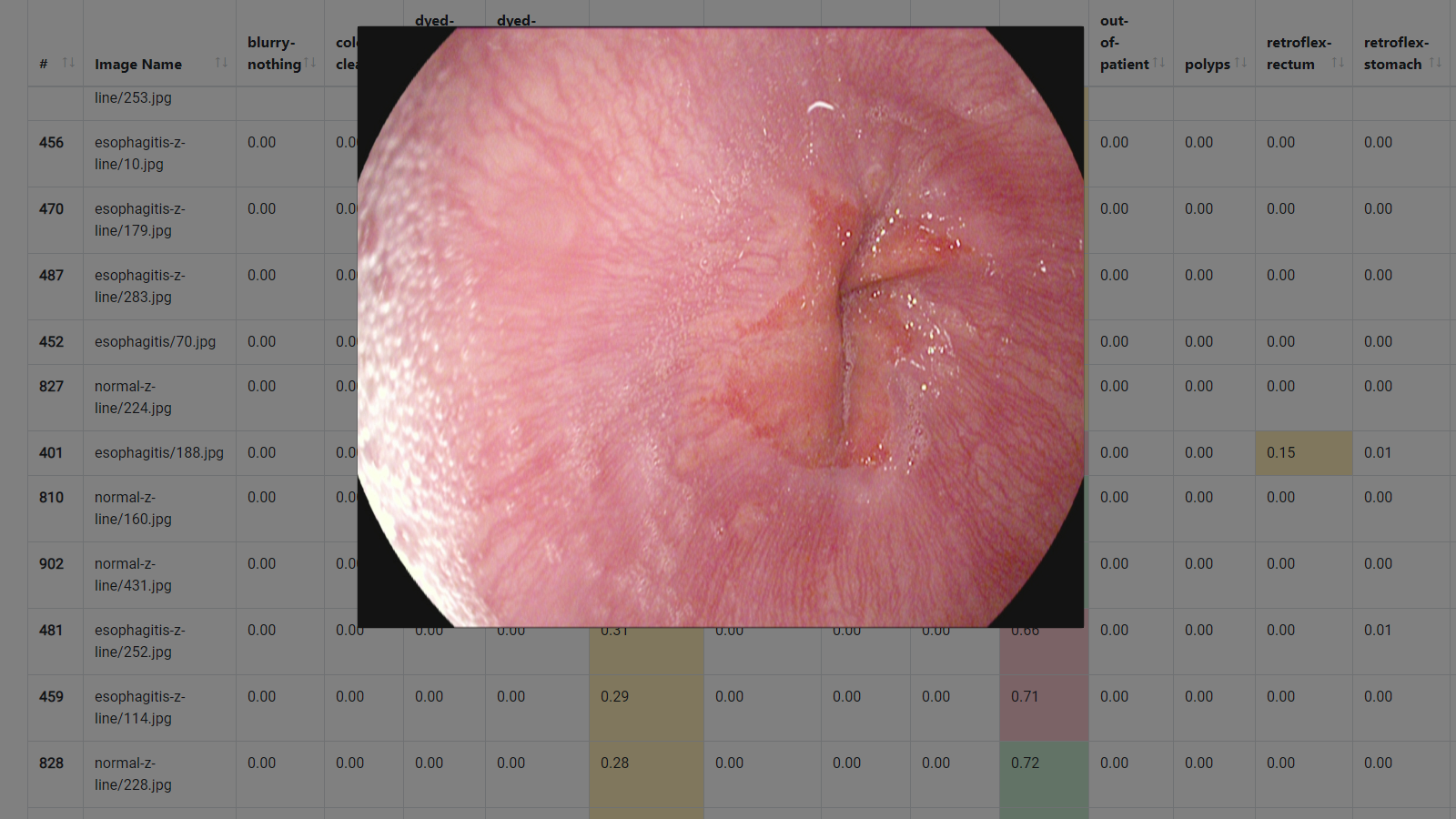

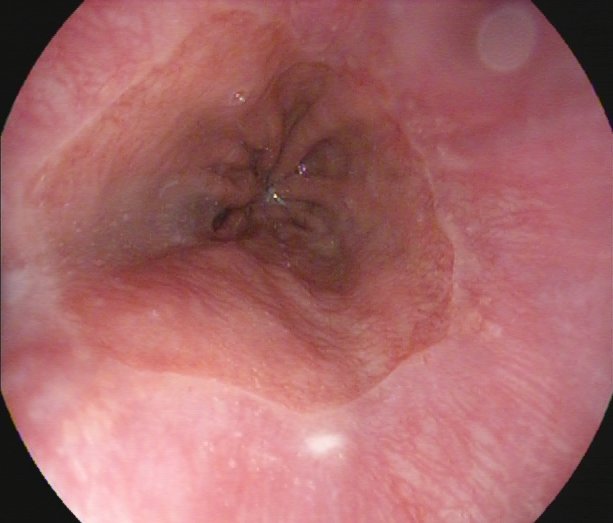

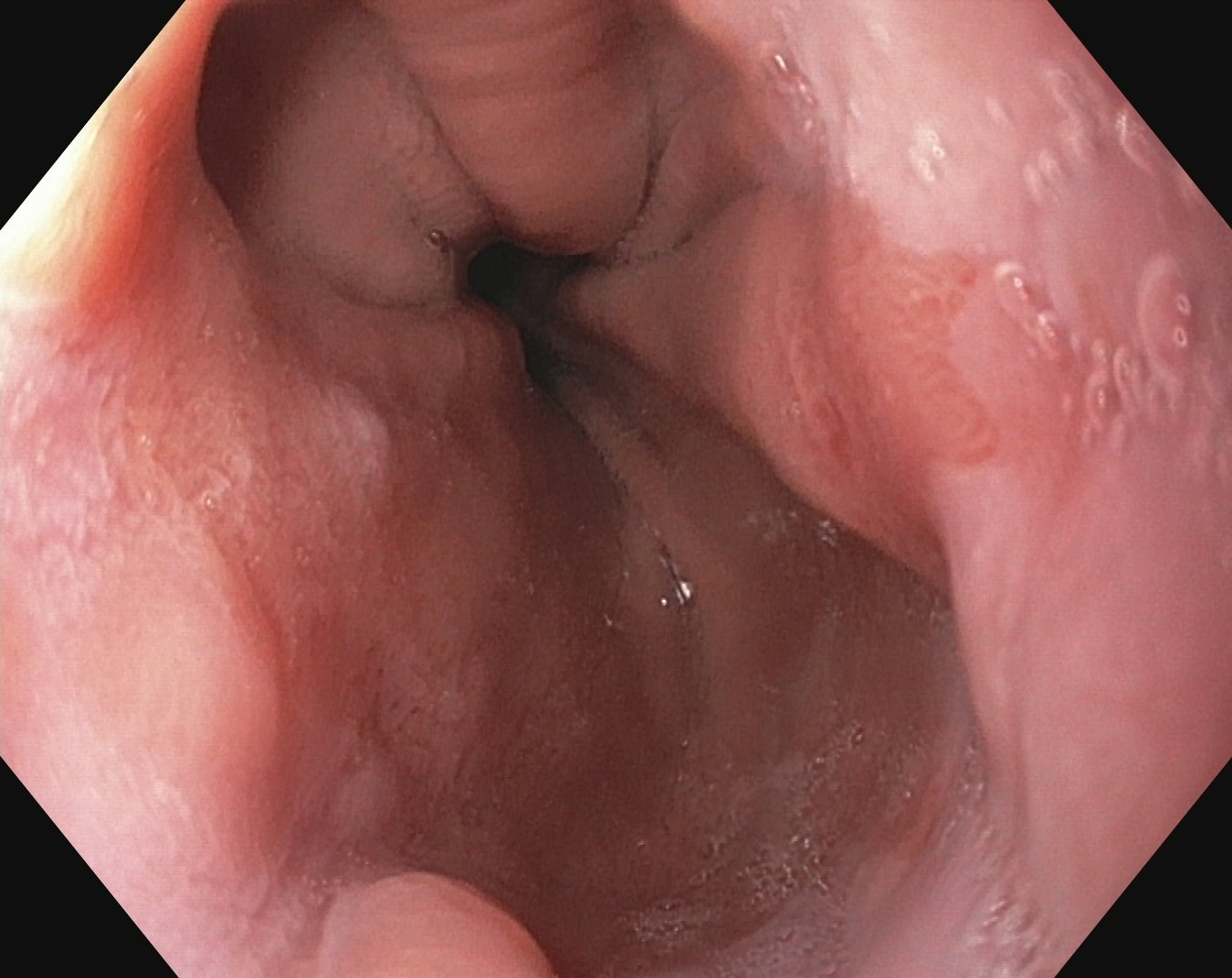

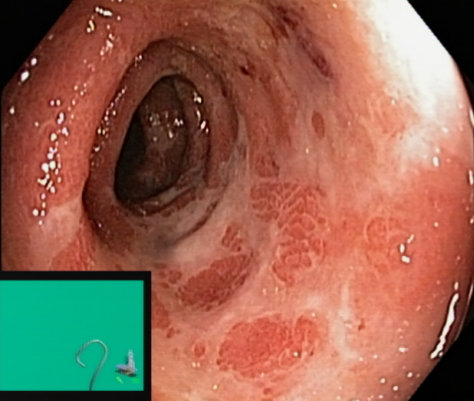

Dataset observations

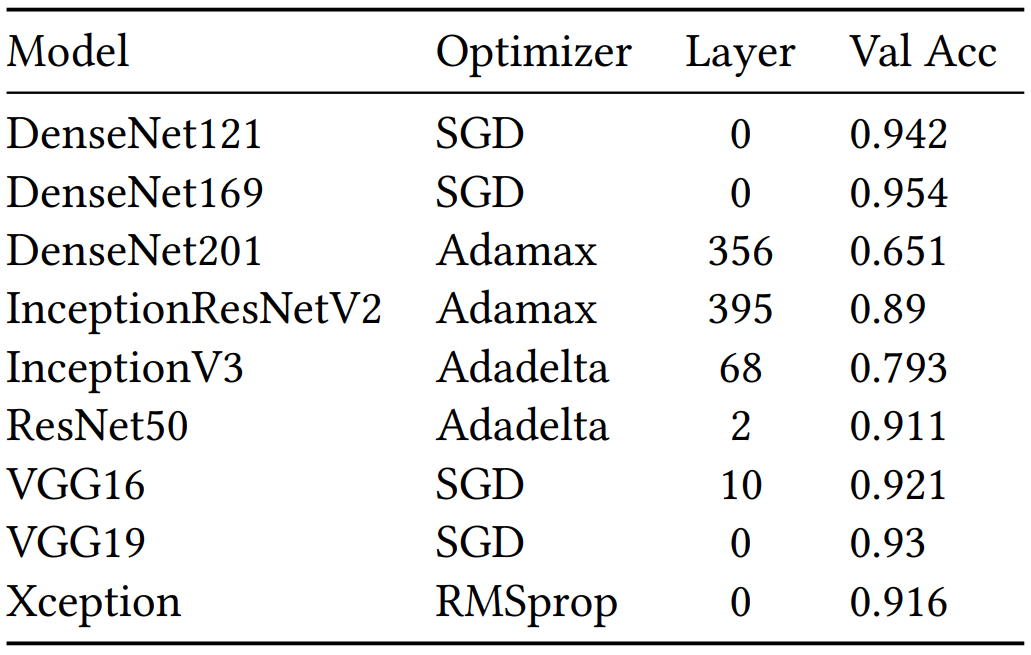

Best result for different models

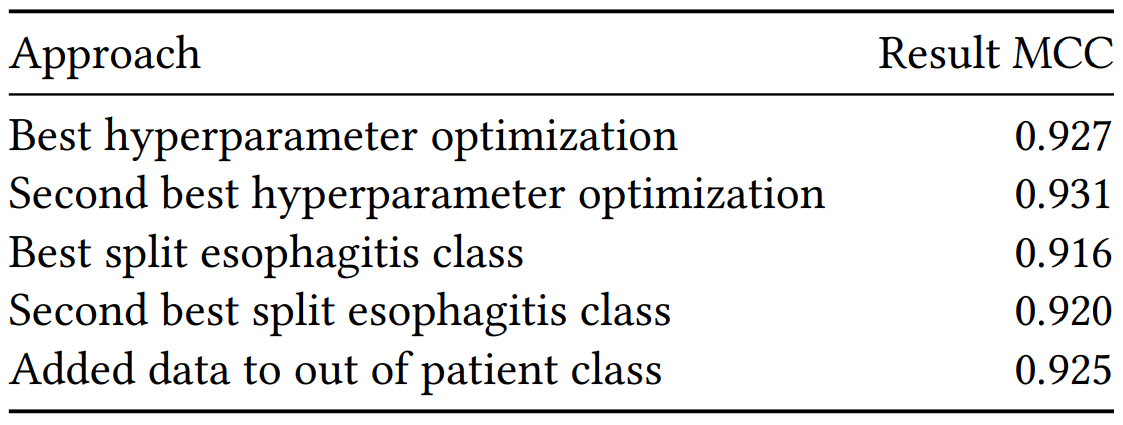

Results

| Model | Optimizer | LR Block | Layer | LR TUNE |
| --- | --- | --- | --- | --- |
| DenseNet169 | SGD | 0.00005 | 0 | 0.004 |

Best found hyperparameters
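The hyperparameters above can be read as a two-stage transfer-learning schedule: first train a new classification block on top of a frozen, ImageNet-pre-trained DenseNet169 using the block learning rate, then unfreeze the network from the given layer index onward and fine-tune with the tuning learning rate. That reading is an assumption, as are the function names, the 224x224 input size, and the single softmax layer used as the classification block; the slides do not show Saga's actual code. A minimal Keras sketch under those assumptions:

```python
import tensorflow as tf

NUM_CLASSES = 16  # classes (A) to (P) in the confusion-matrix legend


def build_model(weights="imagenet", input_shape=(224, 224, 3)):
    """DenseNet169 backbone with a new softmax classification block."""
    base = tf.keras.applications.DenseNet169(
        include_top=False,
        weights=weights,
        input_shape=input_shape,
        pooling="avg",
    )
    outputs = tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")(base.output)
    return tf.keras.Model(base.input, outputs), base


def compile_block_stage(model, base, lr=0.00005):
    """Stage 1 (assumed meaning of 'LR Block'): train only the new block."""
    base.trainable = False
    model.compile(
        optimizer=tf.keras.optimizers.SGD(learning_rate=lr),
        loss="categorical_crossentropy",  # assumes one-hot labels
        metrics=["accuracy"],
    )


def compile_tune_stage(model, base, first_layer=0, lr=0.004):
    """Stage 2 (assumed meaning of 'Layer' and 'LR TUNE'): fine-tune the
    backbone from layer index first_layer onward; 0 unfreezes everything."""
    base.trainable = True
    for layer in base.layers[:first_layer]:
        layer.trainable = False
    # Recompile so the changed trainable flags take effect.
    model.compile(
        optimizer=tf.keras.optimizers.SGD(learning_rate=lr),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
```

A typical use would be to call `build_model()`, then `compile_block_stage(...)` followed by `model.fit(...)`, and finally `compile_tune_stage(...)` followed by a second `model.fit(...)`.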

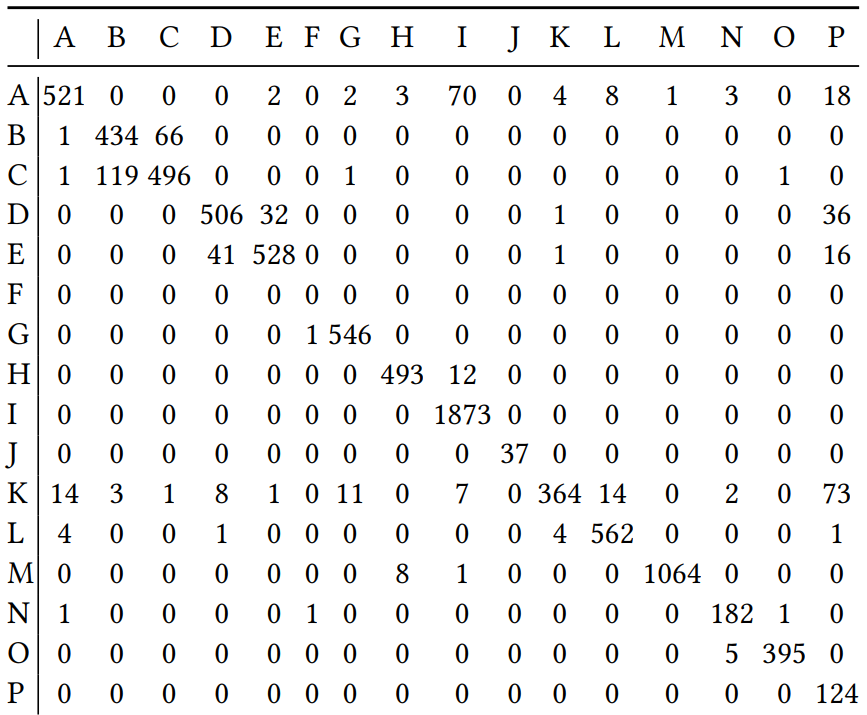

Best result confusion matrix

(A) Ulcerative colitis, (B) Esophagitis, (C) Normal z-line, (D) Dyed lifted polyps, (E) Dyed resection margins, (F) Out of patient, (G) Normal pylorus, (H) Stool inclusions, (I) Stool plenty, (J) Blurry nothing, (K) Polyps, (L) Normal cecum, (M) Colon clear, (N) Retroflex rectum, (O) Retroflex stomach, and (P) Instruments

Summary

Automatic hyperparameter optimization achieves good results.

Creating the optimal model is not the whole picture. We must also improve the dataset.

Future work should include preprocessing and k-fold cross-validation.
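As an illustration of the k-fold cross-validation mentioned above, the sketch below splits sample indices into k folds and yields each fold once as the validation set. The function name `k_fold_indices` and its parameters are hypothetical; a real pipeline would likely also stratify the folds by class, which this sketch does not do.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, val_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        val = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val


splits = list(k_fold_indices(n_samples=10, k=5))
```

Each candidate hyperparameter configuration would then be trained k times, once per split, and scored by the mean validation result, which gives a less noisy estimate than a single train/validation split.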