KVM

Kernel-based Virtual Machine

Internals, code and more

Who am I

I'm a Computer Security Enthusiast.

An enthusiastic Fedora developer.

#Web #Dev technology and bleeding edge tech.

I like beer, whisky, music, movies, ...

Plan

Help students find where to start reading the QEMU and qemu-kvm source code

- What is behind KVM

- QEMU and KVM architecture overview

- KVM internals

- A very short introduction to libvirt

KVM in 5 seconds

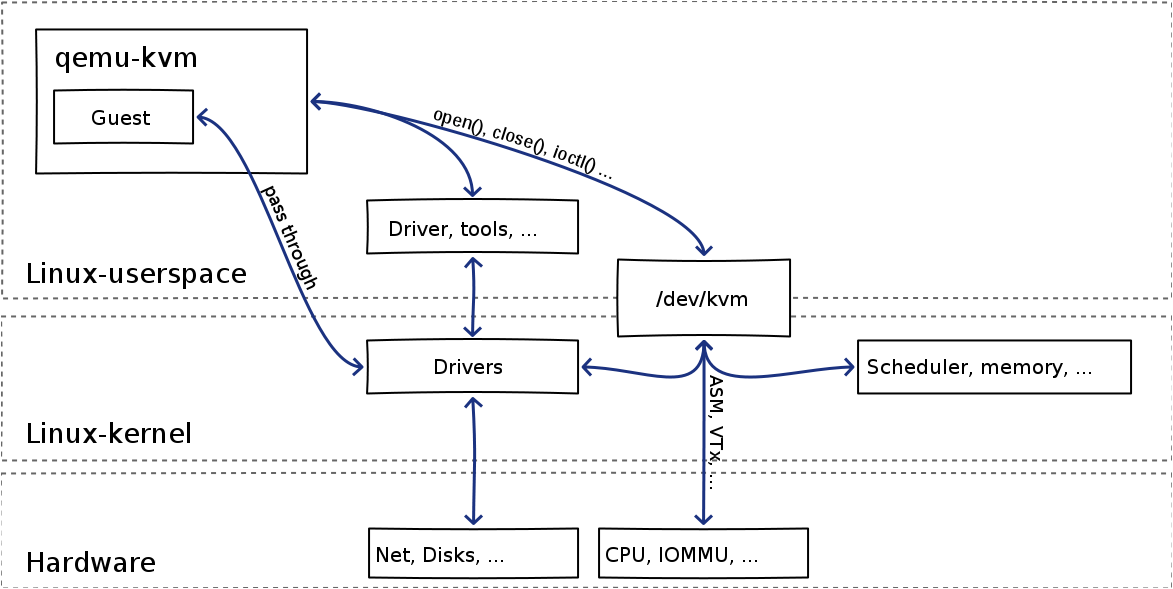

- Introduced to make VT-x/AMD-V available to user space

- Exposes virtualization features securely through a single interface: /dev/kvm

- Available since Linux 2.6.20 (merged in December 2006, released in February 2007)

- Clean and efficient development: 3 months from first LKML posting to merge

- 100% orthogonal to core kernel

KVM is not KVM

First of all there is QEMU, then KVM, then libvirt, then the whole ecosystem.

Painting is an illusion, a piece of magic, so what you see is not what you see.

Philip Guston

At the beginning, QEMU

- Running a guest involves executing guest code

- Handling timers

- Processing I/O

- Responding to monitor commands.

- Doing all these things at once without pausing guest execution

Deal with events

There are two popular architectures for programs that need to respond to events from multiple sources

Parallel architecture

Splits work into processes or threads that can execute simultaneously.

Event-driven architecture

Event-driven architecture reacts to events by running a main loop that dispatches to event handlers.

Threading and event-driven model of QEMU

QEMU uses a hybrid architecture

The hard must become habit. The habit must become easy. The easy must become beautiful.

Doug Henning (Canadian magician)

QEMU: the event loop

- Event-driven architecture is centered around the event loop, which dispatches events to handler functions. QEMU's main event loop is main_loop_wait().

- Waits for file descriptors to become readable or writable. File descriptors are critical because files, sockets, pipes, and various other resources are all file descriptors.

- Runs expired timers.

- Runs bottom-halves (BHs), which are used to avoid reentrancy and overflowing the call stack.
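The loop described above can be sketched in a few lines of C. This is only an illustration and not QEMU's code: the names `event_loop`, `loop_add_fd`, `loop_add_bh`, `loop_schedule_bh`, `loop_iterate` and the two example callbacks are hypothetical, it is built on plain `poll()`, and timers are left out to keep it short.

```c
#include <assert.h>
#include <poll.h>
#include <unistd.h>

#define MAX_HANDLERS 8

typedef void (*handler_fn)(void *opaque);

struct fd_handler  { int fd; handler_fn on_readable; void *opaque; };
struct bottom_half { handler_fn fn; void *opaque; int scheduled; };

struct event_loop {
    struct fd_handler  fds[MAX_HANDLERS];
    int                nfds;
    struct bottom_half bhs[MAX_HANDLERS];
    int                nbhs;
};

/* Register a callback to run whenever fd becomes readable. */
static void loop_add_fd(struct event_loop *l, int fd, handler_fn fn, void *opaque)
{
    assert(l->nfds < MAX_HANDLERS);
    l->fds[l->nfds++] = (struct fd_handler){ fd, fn, opaque };
}

/* Create a bottom half; the returned index is used to schedule it later. */
static int loop_add_bh(struct event_loop *l, handler_fn fn, void *opaque)
{
    assert(l->nbhs < MAX_HANDLERS);
    l->bhs[l->nbhs] = (struct bottom_half){ fn, opaque, 0 };
    return l->nbhs++;
}

static void loop_schedule_bh(struct event_loop *l, int bh)
{
    l->bhs[bh].scheduled = 1;
}

/* One loop iteration: wait for fds, dispatch their callbacks, then run
 * the scheduled bottom halves. Returns the number of callbacks invoked. */
static int loop_iterate(struct event_loop *l, int timeout_ms)
{
    struct pollfd pfds[MAX_HANDLERS];
    int ran = 0;

    for (int i = 0; i < l->nfds; i++)
        pfds[i] = (struct pollfd){ .fd = l->fds[i].fd, .events = POLLIN };

    /* Do not sleep in poll() if a bottom half is already waiting. */
    for (int i = 0; i < l->nbhs; i++)
        if (l->bhs[i].scheduled)
            timeout_ms = 0;

    if (poll(pfds, l->nfds, timeout_ms) > 0) {
        for (int i = 0; i < l->nfds; i++) {
            if (pfds[i].revents & POLLIN) {
                l->fds[i].on_readable(l->fds[i].opaque);
                ran++;
            }
        }
    }
    for (int i = 0; i < l->nbhs; i++) {
        if (l->bhs[i].scheduled) {
            l->bhs[i].scheduled = 0;
            l->bhs[i].fn(l->bhs[i].opaque);
            ran++;
        }
    }
    return ran;
}

/* Example callbacks: consume one byte from a pipe, and count invocations. */
struct pipe_reader { int fd; int bytes_seen; };

static void pipe_reader_cb(void *opaque)
{
    struct pipe_reader *r = opaque;
    char c;
    if (read(r->fd, &c, 1) == 1)
        r->bytes_seen++;
}

static void count_cb(void *opaque)
{
    (*(int *)opaque)++;
}
```

Every callback runs to completion on the single loop thread, which is why the handlers need no locking, and also why a handler that blocks would stall everything else.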

When a file descriptor becomes ready, a timer expires, or a BH is scheduled, the event loop invokes a callback.

- No other core code is executing at the same time, so synchronization is not necessary

- Callbacks execute sequentially and atomically

- Only 1 thread of control is needed at any given time

- No blocking system calls or long-running computations should be performed

- Avoid spending an unbounded amount of time in a callback

- If you do not follow this advice, the guest will pause and the monitor will become unresponsive

QEMU threads

To help the event_loop

Offload what needs to be offloaded

- There are system calls which have no non-blocking equivalent.

- Sometimes long-running computations flood the CPU and can't easily be broken up into callbacks.

- In these cases dedicated worker threads can be used to carefully move these tasks out of core QEMU.

- One example of worker threads is vnc-jobs.c

- When a worker thread needs to notify core QEMU, a pipe or a qemu_eventfd() file descriptor is added to the event loop.
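The notification pattern from the last bullet can be sketched as follows. This is a minimal illustration with a plain `pipe()` and pthreads, not QEMU's implementation: `struct job`, `worker_main` and `run_offloaded` are hypothetical names, and the "long computation" is just a sum.

```c
#include <assert.h>
#include <poll.h>
#include <pthread.h>
#include <stddef.h>
#include <unistd.h>

struct job {
    int           notify_fd;  /* write end of the pipe the main loop watches */
    unsigned long input;
    unsigned long result;
};

/* Worker thread: do the long computation, then wake up the main loop. */
static void *worker_main(void *opaque)
{
    struct job *job = opaque;
    unsigned long sum = 0;
    char token = 1;

    assert(job != NULL);
    for (unsigned long i = 1; i <= job->input; i++)
        sum += i;
    job->result = sum;

    /* One byte on the pipe is the "I am done" notification. */
    if (write(job->notify_fd, &token, 1) != 1)
        job->result = 0;
    return NULL;
}

/* Main-loop side: start the job, wait for the notification through
 * poll(), then collect the result. Returns 0 on failure. */
static unsigned long run_offloaded(unsigned long input)
{
    int fds[2];
    pthread_t tid;
    unsigned long result = 0;
    int notified = 0;
    char token;

    if (pipe(fds) != 0)
        return 0;

    struct job job = { .notify_fd = fds[1], .input = input, .result = 0 };
    if (pthread_create(&tid, NULL, worker_main, &job) == 0) {
        struct pollfd pfd = { .fd = fds[0], .events = POLLIN };
        /* In a real event loop this fd sits next to all the other fds. */
        if (poll(&pfd, 1, -1) == 1 && read(fds[0], &token, 1) == 1)
            notified = 1;
        pthread_join(tid, NULL);
        if (notified)
            result = job.result;
    }
    close(fds[0]);
    close(fds[1]);
    return result;
}
```

The main thread never waits on the computation itself, only on a file descriptor, so the same wait can serve every other event source in the loop.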

executing guest code

There are two mechanisms for executing guest code: Tiny Code Generator (TCG) and KVM

Executing guest code in QEMU is very simple: it uses threads.

Exactly 1 thread per vCPU.

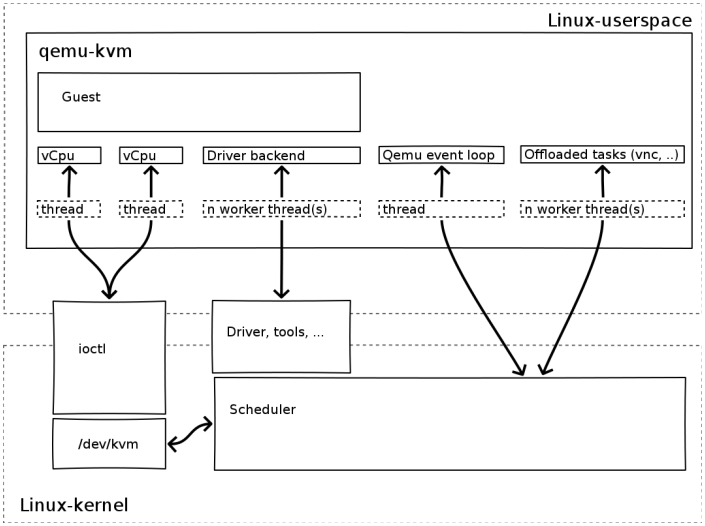

Summary of QEMU processing

- 1 process per guest

- 1 thread for the main event_loop()

- 1 thread per vCPU

- As many threads as (reasonably) needed for offloaded tasks
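As a rough sketch of this thread layout, the snippet below spawns one thread per vCPU from a single process while the calling thread plays the role of the main loop thread. The names `vcpu_thread` and `run_vcpus` are hypothetical, and the vCPU body is a stand-in: with KVM, that is where the `ioctl(KVM_RUN)` loop would live.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>

#define MAX_VCPUS 16

static atomic_int vcpus_started;

/* Stand-in for the per-vCPU loop: a real one would run guest code here. */
static void *vcpu_thread(void *opaque)
{
    (void)opaque;
    atomic_fetch_add(&vcpus_started, 1);
    return NULL;
}

/* Spawn one thread per vCPU and wait for all of them.
 * Returns how many vCPU threads actually ran. */
static int run_vcpus(int n)
{
    pthread_t tids[MAX_VCPUS];
    int created = 0;

    assert(n > 0 && n <= MAX_VCPUS);
    atomic_store(&vcpus_started, 0);

    for (int i = 0; i < n; i++) {
        if (pthread_create(&tids[i], NULL, vcpu_thread, NULL) != 0)
            break;
        created++;
    }
    /* The calling thread stands for the main event loop thread. */
    for (int i = 0; i < created; i++)
        pthread_join(tids[i], NULL);

    return atomic_load(&vcpus_started);
}
```

Because vCPUs are ordinary threads, the host scheduler, `top`, `taskset` and cgroups all apply to them without any special support.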

All the existing Linux strengths at our disposal

Memory (huge pages, KSM), I/O, scheduler, energy management, device hotplug, networking, security, the whole Linux software world, ...

qemu guest memory

Guest RAM is allocated at QEMU startup

This mapped memory is "really" allocated by the process (with malloc())
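A minimal sketch of such an allocation is shown below. Note the substitution: it uses an anonymous `mmap()` rather than `malloc()`, on the assumption that page-aligned, zero-filled memory is what one wants to hand to KVM later. `guest_ram_alloc` and `guest_ram_free` are hypothetical helper names.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/mman.h>

/* Reserve `size` bytes of zero-filled, page-aligned memory to back
 * guest RAM. Returns NULL on failure. */
static uint8_t *guest_ram_alloc(size_t size)
{
    assert(size > 0);
    void *p = mmap(NULL, size, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    return p == MAP_FAILED ? NULL : p;
}

/* Release memory obtained from guest_ram_alloc(). */
static void guest_ram_free(uint8_t *ram, size_t size)
{
    if (ram != NULL)
        munmap(ram, size);
}
```

Since this is ordinary process memory, the host kernel only backs pages with physical memory when they are first touched, and features such as KSM and huge pages apply to it like to any other mapping.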

but wait

Where is the x86 virtualization?

reminder

why x86 virt is a pain...

- No hardware provisions for virtualization (before VT-x/AMD-V)

- Instructions behave differently depending on privilege context

- Architecture not built for trap and emulate

- CISC is ... CISC

A complete theoretical virtualization course: CS 686: Special Topic: Intel EM64T and VT Extensions (Spring 2007)

reminder

how Intel VT-x helps

Guest SW <-> VMM Transitions

Virtual-machine control structure

Keep that in mind for later in the presentation...

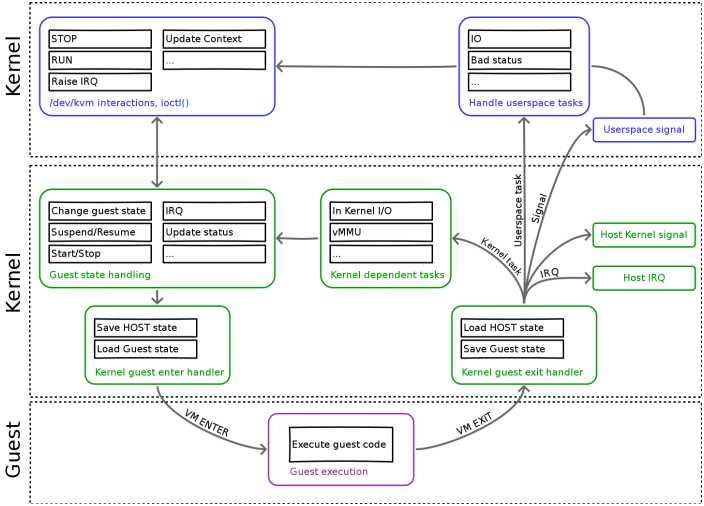

KVM virtualisation

KVM is a virtualization feature in the Linux kernel that lets you safely execute guest code directly on the host CPU

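    kvm_fd  = open("/dev/kvm", O_RDWR);
    vm_fd   = ioctl(kvm_fd, KVM_CREATE_VM, 0);
    vcpu_fd = ioctl(vm_fd, KVM_CREATE_VCPU, 0);
    for (;;) {
        ioctl(vcpu_fd, KVM_RUN, 0);
        switch (exit_reason) {   /* read from the vCPU's shared kvm_run area */
        case KVM_EXIT_IO:  /* ... */ break;
        case KVM_EXIT_HLT: /* ... */ break;
        }
    }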

It's DEMO time

What you need:

- A bit of C ...

- A touch of ASM

- Makefile

- gcc

QEMU / KVM / CPU / TIME interactions

Light vs Heavy exit

causes of VM Exits

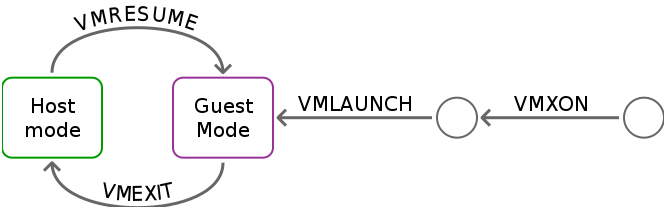

VM Entry:

- Transition from VMM to Guest

- Enters VMX non-root operation

- Loads Guest state and Exit criteria from VMCS

- VMLAUNCH instruction used on initial entry

- VMRESUME instruction used on subsequent entries

VM Exit:

- Transition from Guest to VMM (VT-x has no VMEXIT instruction: exits are triggered by events and by certain guest instructions)

- Enters VMX root operation

- Saves Guest state in VMCS

- Loads VMM state from VMCS

start a kvm vm in reality

A bit more complicated than before:

- KVM_CREATE_VM: the new VM has no virtual CPUs and no memory

- KVM_SET_USER_MEMORY_REGION: map userspace memory for the VM

- KVM_CREATE_IRQCHIP / ..._PIT, KVM_CREATE_VCPU: create hardware components and map them to VT-x functionality

- KVM_SET_REGS / ..._SREGS / ..._FPU / ..., KVM_SET_CPUID / ..._MSRS / ..._VCPU_EVENTS / ..., KVM_SET_LAPIC: hardware configuration

- KVM_RUN: start the VM
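The sequence above can be tried with a very small program. The sketch below is not the demo from the talk: it assumes an x86 host with `<linux/kvm.h>` available, skips the IRQ chip, PIT, CPUID and LAPIC steps, and uses a hypothetical function name `run_tiny_guest`. The guest is nine hand-assembled bytes of 16-bit real-mode code that add two registers and write the resulting digit to I/O port 0x3f8.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <fcntl.h>
#include <linux/kvm.h>
#include <stdint.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <unistd.h>

#define GUEST_MEM_SIZE 0x1000
#define GUEST_LOAD_ADDR 0x1000

/* Runs a tiny real-mode guest computing 2 + 2. Returns the byte the guest
 * wrote to port 0x3f8, or -1 if KVM is unavailable or a step fails. */
static int run_tiny_guest(void)
{
    static const uint8_t code[] = {
        0xba, 0xf8, 0x03,   /* mov $0x3f8, %dx */
        0x00, 0xd8,         /* add %bl, %al    */
        0x04, '0',          /* add $'0', %al   */
        0xee,               /* out %al, (%dx)  */
        0xf4,               /* hlt             */
    };
    int kvm = -1, vm = -1, vcpu = -1, run_size = 0, result = -1;
    uint8_t *mem = MAP_FAILED;
    struct kvm_run *run = MAP_FAILED;
    struct kvm_userspace_memory_region region;
    struct kvm_sregs sregs;
    struct kvm_regs regs;

    assert(sizeof(code) <= GUEST_MEM_SIZE);

    kvm = open("/dev/kvm", O_RDWR | O_CLOEXEC);
    if (kvm < 0) goto out;
    vm = ioctl(kvm, KVM_CREATE_VM, 0UL);              /* no vCPU, no memory yet */
    if (vm < 0) goto out;

    /* One page of guest RAM, backed by ordinary process memory. */
    mem = mmap(NULL, GUEST_MEM_SIZE, PROT_READ | PROT_WRITE,
               MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (mem == MAP_FAILED) goto out;
    memcpy(mem, code, sizeof(code));
    memset(&region, 0, sizeof(region));
    region.slot = 0;
    region.guest_phys_addr = GUEST_LOAD_ADDR;
    region.memory_size = GUEST_MEM_SIZE;
    region.userspace_addr = (uint64_t)(uintptr_t)mem;
    if (ioctl(vm, KVM_SET_USER_MEMORY_REGION, &region) < 0) goto out;

    vcpu = ioctl(vm, KVM_CREATE_VCPU, 0UL);
    if (vcpu < 0) goto out;
    run_size = ioctl(kvm, KVM_GET_VCPU_MMAP_SIZE, 0UL);
    if (run_size <= 0) goto out;
    run = mmap(NULL, run_size, PROT_READ | PROT_WRITE, MAP_SHARED, vcpu, 0);
    if (run == MAP_FAILED) goto out;

    /* Flat real mode: code segment based at 0, execution starts at 0x1000. */
    if (ioctl(vcpu, KVM_GET_SREGS, &sregs) < 0) goto out;
    sregs.cs.base = 0;
    sregs.cs.selector = 0;
    if (ioctl(vcpu, KVM_SET_SREGS, &sregs) < 0) goto out;
    memset(&regs, 0, sizeof(regs));
    regs.rip = GUEST_LOAD_ADDR;
    regs.rax = 2;
    regs.rbx = 2;
    regs.rflags = 0x2;                                /* bit 1 is always set */
    if (ioctl(vcpu, KVM_SET_REGS, &regs) < 0) goto out;

    for (;;) {
        if (ioctl(vcpu, KVM_RUN, 0UL) < 0) break;
        if (run->exit_reason == KVM_EXIT_IO &&
            run->io.direction == KVM_EXIT_IO_OUT && run->io.port == 0x3f8)
            result = *((uint8_t *)run + run->io.data_offset);
        else
            break;               /* KVM_EXIT_HLT or anything unexpected */
    }
out:
    if (run != MAP_FAILED) munmap(run, run_size);
    if (mem != MAP_FAILED) munmap(mem, GUEST_MEM_SIZE);
    if (vcpu >= 0) close(vcpu);
    if (vm >= 0) close(vm);
    if (kvm >= 0) close(kvm);
    return result;
}
```

On a host where the caller may open /dev/kvm, the function should return the character '4'; elsewhere it returns -1. Each exit handled in the loop is a "heavy" exit in the sense used earlier, since control comes all the way back to user space.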

start a vm in qemu-kvm

/usr/bin/qemu-kvm -S -M pc-0.13 -enable-kvm -m 512 -smp 2,sockets=2,cores=1,threads=1 -name test -uuid e9b4c7be-d60a-c16e-92c3-166421b4daca -nodefconfig -nodefaults -chardev socket,id=monitor,path=/var/lib/libvirt/qemu/test.monitor,server,nowait -mon chardev=monitor,mode=readline -rtc base=utc -boot c -drive file=/var/lib/libvirt/images/test.img,if=none,id=drive-virtio-disk0,boot=on,format=raw -device virtio-blk-pci,bus=pci.0,addr=0x5,drive=drive-virtio-disk0,id=virtio-disk0 -drive if=none,media=cdrom,id=drive-ide0-1-0,readonly=on,format=raw -device ide-drive,bus=ide.1,unit=0,drive=drive-ide0-1-0,id=ide0-1-0 -device virtio-net-pci,vlan=0,id=net0,mac=52:54:00:cc:1c:10,bus=pci.0,addr=0x3 -net tap,fd=59,vlan=0,name=hostnet0 -chardev pty,id=serial0 -device isa-serial,chardev=serial0 -usb -device usb-tablet,id=input0 -vnc 127.0.0.1:0 -vga cirrus -device AC97,id=sound0,bus=pci.0,addr=0x4 -device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x6

Now you really know why tools are great

KVM processing

What about passthrough, paravirt and virtio?

Reduce VM exits or make them lightweight

Improve I/O throughput & latency (less emulation)

Compensate for virtualization effects

Enable direct host-guest interaction

VIRTIO device

- Network

- Block

- Serial I/O (console, host-guest channel, ...)

- Memory balloon

- File system (9P)

- SCSI

Based on generic RX/TX buffers

Logic is distributed between the guest driver (aka virtual device) and the QEMU backend (and a kernel backend in some cases)
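To give a feel for the "generic buffer" idea, here is a deliberately simplified ring shared between a producer (the guest driver) and a consumer (the backend). It is an assumption-laden toy, not the real virtqueue layout, which uses a descriptor table plus separate available and used rings and needs memory barriers. All names (`struct ring`, `ring_post`, `ring_consume`) are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define RING_SIZE 4      /* must be a power of two */
#define BUF_SIZE  64

struct ring {
    uint8_t  data[RING_SIZE][BUF_SIZE];
    uint32_t len[RING_SIZE];
    uint16_t avail_idx;  /* advanced by the producer (guest driver) */
    uint16_t used_idx;   /* advanced by the consumer (backend)      */
};

/* Driver side: post one buffer.
 * Returns 0 on success, -1 if the ring is full or the buffer too large. */
static int ring_post(struct ring *r, const void *buf, uint32_t len)
{
    assert(r != NULL && buf != NULL);
    if (len > BUF_SIZE || (uint16_t)(r->avail_idx - r->used_idx) == RING_SIZE)
        return -1;
    uint16_t slot = r->avail_idx % RING_SIZE;
    memcpy(r->data[slot], buf, len);
    r->len[slot] = len;
    r->avail_idx++;
    return 0;
}

/* Backend side: consume the oldest posted buffer.
 * Returns its length, or -1 if the ring is empty or `out` is too small. */
static int ring_consume(struct ring *r, void *out, uint32_t cap)
{
    assert(r != NULL && out != NULL);
    if (r->avail_idx == r->used_idx)
        return -1;
    uint16_t slot = r->used_idx % RING_SIZE;
    uint32_t len = r->len[slot];
    if (len > cap)
        return -1;
    memcpy(out, r->data[slot], len);
    r->used_idx++;
    return (int)len;
}
```

The same structure serves both directions: a network device, for instance, would use one ring for TX and another for RX, with the roles of producer and consumer swapped.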

Virtio device

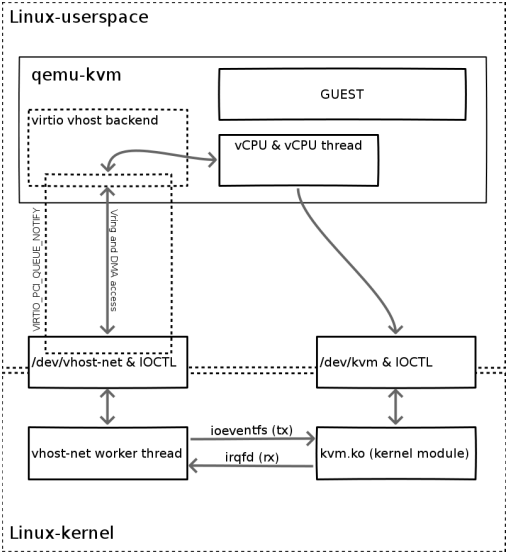

vhost example

- High throughput

- Low latency guest networking

Normally the QEMU userspace process emulates I/O accesses from the guest.

Vhost puts virtio emulation code into the kernel

- Don't forget vhost-blk and vhost-scsi

- vhost-net driver creates a /dev/vhost-net character device on the host

- QEMU is launched with -netdev tap,vhost=on and opens /dev/vhost-net

- vhost driver creates a kernel thread called vhost-$pid, where $pid is the PID of the QEMU process

- Job of the worker thread is to handle I/O events and perform the device emulation.

- vhost architecture is not directly linked to KVM

- Uses ioeventfd and irqfd
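Both ioeventfd and irqfd are built on the Linux `eventfd` primitive, so its semantics are worth seeing in isolation. The sketch below only demonstrates the counter behaviour of an eventfd; it does not register anything with KVM (that is done with the KVM_IOEVENTFD and KVM_IRQFD ioctls), and `notify_kick` and `notify_drain` are hypothetical helper names.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Signal the eventfd once (a "kick"). Returns 0 on success, -1 on error. */
static int notify_kick(int efd)
{
    uint64_t one = 1;
    assert(efd >= 0);
    return write(efd, &one, sizeof one) == (ssize_t)sizeof one ? 0 : -1;
}

/* Collect pending kicks: returns how many were signalled since the last
 * call and resets the counter. With EFD_NONBLOCK, returns -1 when
 * nothing is pending. */
static int64_t notify_drain(int efd)
{
    uint64_t count = 0;
    assert(efd >= 0);
    if (read(efd, &count, sizeof count) != (ssize_t)sizeof count)
        return -1;
    return (int64_t)count;
}
```

Several kicks coalesce into a single wakeup, which suits a worker that processes every pending buffer each time it runs. The descriptor is created with `eventfd(0, EFD_NONBLOCK)` and can be polled like any other fd.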

vhost

Kernel code:

- drivers/vhost/vhost.c - common vhost driver code

- drivers/vhost/net.c - vhost-net driver

- virt/kvm/eventfd.c - ioeventfd and irqfd

The QEMU userspace code shows how to initialize the vhost instance:

- hw/vhost.c - common vhost initialization code

- hw/vhost_net.c - vhost-net initialization

Who is using KVM

LIBVIRT

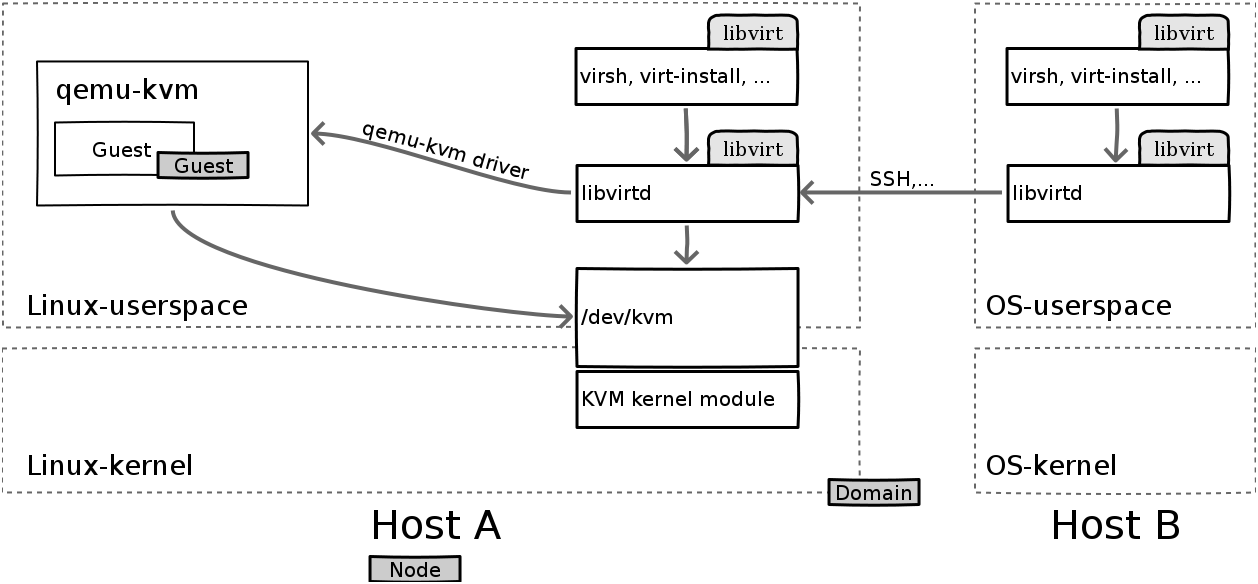

very small introduction

- Virtualization library: manages guests on one or many nodes

- Shares the application stack between hypervisors

- Long-term stability and compatibility of API and ABI

- Provides security and remote access “out of the box”

- Expands to management APIs (node, storage, network)

Questions?