State of the art in benchmarking BigData systems

Frans Ojala: frans.ojala@helsinki.fi

Helsinki University

A standard or point of reference against which things may be compared.

A problem designed to evaluate the performance of a computer system.

Benchmark

/ˈbɛn(t)ʃmɑːk/

- Oxford dictionary

Benchmark

Premise 1: we need compute power, storage, network bandwidth, etc., and all of it costs money.

- How can we get the best system for the capital we have?

Premise 2: different vendors have different systems that have different properties.

- How can we compare the systems?

Why should we benchmark?

- CPU speed

- Disk I/O

- Memory I/O

- Network speed, latency

- Database throughput

- Application throughput

- Holistic system performance on load

Benchmark

What should be benchmarked?

Easy! Build an application that mimics the desired load and build a scoreboard!

Benchmark

How should we benchmark?
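The "mimic the load and build a scoreboard" idea can be sketched in a few lines. This is only a minimal illustration: `sort_workload` is a hypothetical stand-in for the desired load, and the names `run_benchmark` and `scoreboard` are made up for this example.

```python
import statistics
import time


def run_benchmark(workload, repetitions=5, warmup=1):
    """Time `workload()` several times and return the per-run durations in seconds."""
    for _ in range(warmup):
        workload()  # warm-up runs are executed but not measured
    durations = []
    for _ in range(repetitions):
        start = time.perf_counter()
        workload()
        durations.append(time.perf_counter() - start)
    return durations


def sort_workload():
    """Hypothetical stand-in for 'the desired load': sort 100k scrambled integers."""
    data = [(i * 7919) % 100_003 for i in range(100_000)]
    data.sort()


def scoreboard(results):
    """Rank named systems by median run time, fastest first."""
    return sorted(results, key=lambda name: statistics.median(results[name]))


if __name__ == "__main__":
    timings = {"this-machine": run_benchmark(sort_workload)}
    for name in scoreboard(timings):
        print(name, f"{statistics.median(timings[name]):.4f} s")
```

Even this toy version raises the questions the rest of the talk deals with: which workload, which data, and which number to report.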

Overview

- Introduction and motivation

- A brief history of benchmarking

- Case example in Cloud benchmarking: BigBench

- Case example in ranking service providers: SMICloud

- Summary of key concepts

- Industry standards

A brief history of benchmarking

HPC benchmarks

- HPCC (HPC Challenge) benchmark suite

- Floating point rate, double precision rate, memory bandwidth and latency, network capacity, random memory updates, discrete Fourier transform. LINPACK.

- Metrics are MFLOPS, Gb/s etc.

- SPEC benchmark suite

- A perspective that addresses some problems of single-kernel benchmarks such as LINPACK.

- Measures elapsed time, claims to be fairer overall.

- Large set of programs to measure different aspects of HPC systems.

- TPC benchmarks

- Cover many aspects of traditional computing, such as typical business order-entry environment, data integration, decision support and others.

Database benchmarks

- TPC-benchmarks (-A, -C, -D)

- Many solutions for business and e-commerce

- Mainly geared toward databases and transactions

- Wisconsin benchmark

- 'De facto' database benchmark for a long time

- Structured data

- XGDBench

- New benchmark for graph stores

- Addresses exascale cloud problems

- MAG: simulates real-world data

Cloud benchmarks

BigBench

- A result of the first Workshop on Big Data Benchmarking (WBDB).

- TPCx-BB uses BigBench for its BigData benchmark.

- 3 V's: Volume, Variety, Velocity.

- Arguably also 'Value'.

- End-to-end benchmark system:

- Fictitious retailer as basis.

- Data model (3V).

- Data generator.

- Workload description (in English).

- Requires more work and industry input.

BigBench

Data Model

Ghazal, Ahmad, et al. "BigBench: towards an industry standard benchmark for big data analytics." Proceedings of the 2013 ACM SIGMOD international conference on Management of data. ACM, 2013.

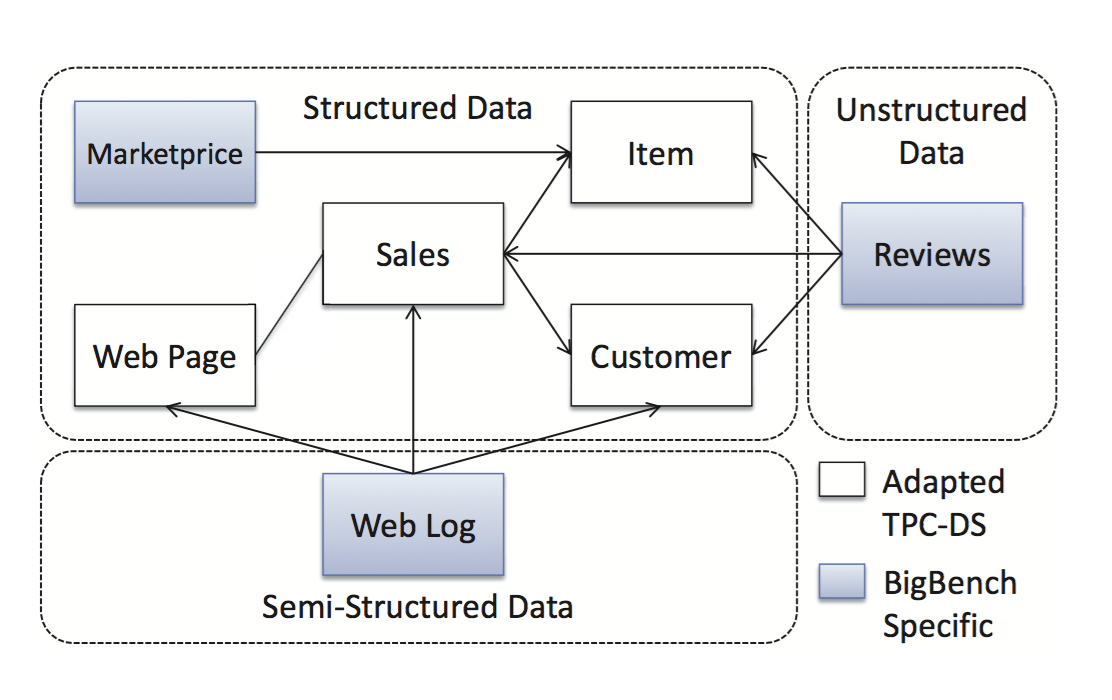

- Structured:

- TPC-DS adaptation

- Additionally a table for current competitor prices

- Relational data

- Semi-structured:

- Web-page navigation sequence

- Repeat customers and guests

- Key-value data

- Unstructured:

- User product reviews

BigBench

Data Generator

Rabl, Tilmann, et al. "A data generator for cloud-scale benchmarking." Performance Evaluation, Measurement and Characterization of Complex Systems. Springer Berlin Heidelberg, 2010. 41-56.

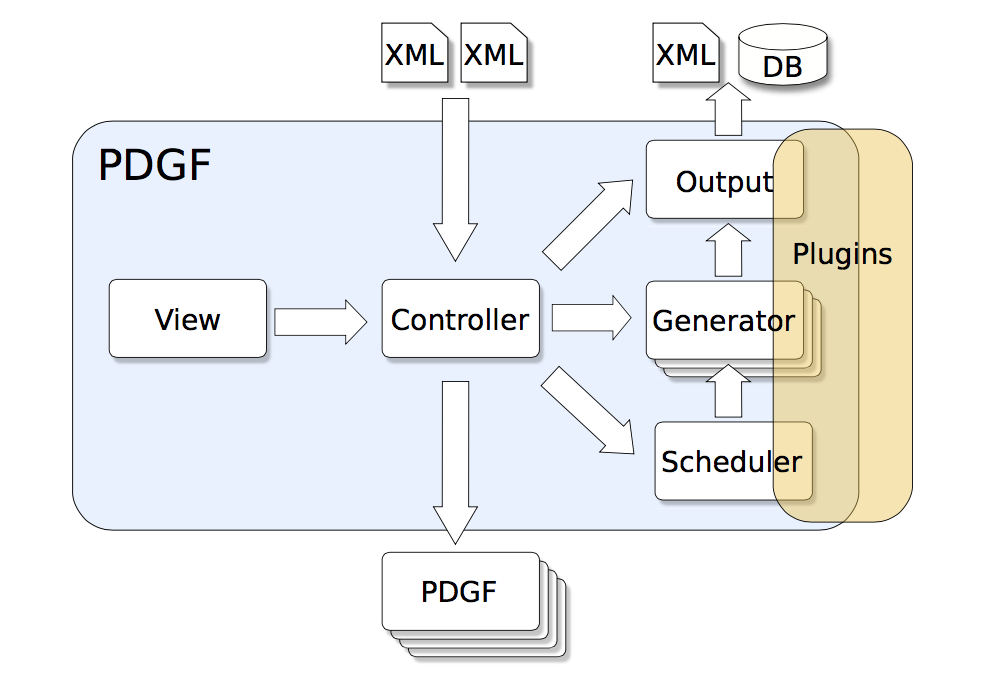

- Based on PDGF and its extension:

- Can generate large amounts of data for an arbitrary schema.

- Structured data from original PDGF.

- Semi-structured and unstructured data needs extensions.

- TextGen:

- Uses Markov chains to extract key words from input text.

- Builds a dictionary and uses its contents to build synthetic reviews.
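To make the TextGen idea concrete, here is a generic first-order, word-level Markov chain. It is not TextGen's actual implementation; the function names and the sample text are assumptions chosen for illustration only.

```python
import random
from collections import defaultdict


def build_dictionary(text):
    """Map each word to the list of words observed directly after it."""
    words = text.split()
    transitions = defaultdict(list)
    for current, following in zip(words, words[1:]):
        transitions[current].append(following)
    return dict(transitions)


def generate_review(transitions, start, length, seed=0):
    """Walk the chain from `start`, emitting at most `length` words."""
    rng = random.Random(seed)  # seeded so the same review can be regenerated
    word, output = start, [start]
    while len(output) < length and word in transitions:
        word = rng.choice(transitions[word])
        output.append(word)
    return " ".join(output)


# Hypothetical input text standing in for real product reviews.
sample = "great product works well . great price and works as described ."
chain = build_dictionary(sample)
print(generate_review(chain, start="great", length=8))
```

Because repeated successors are kept in the lists, frequent word pairs in the input are proportionally more likely in the synthetic output.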

BigBench

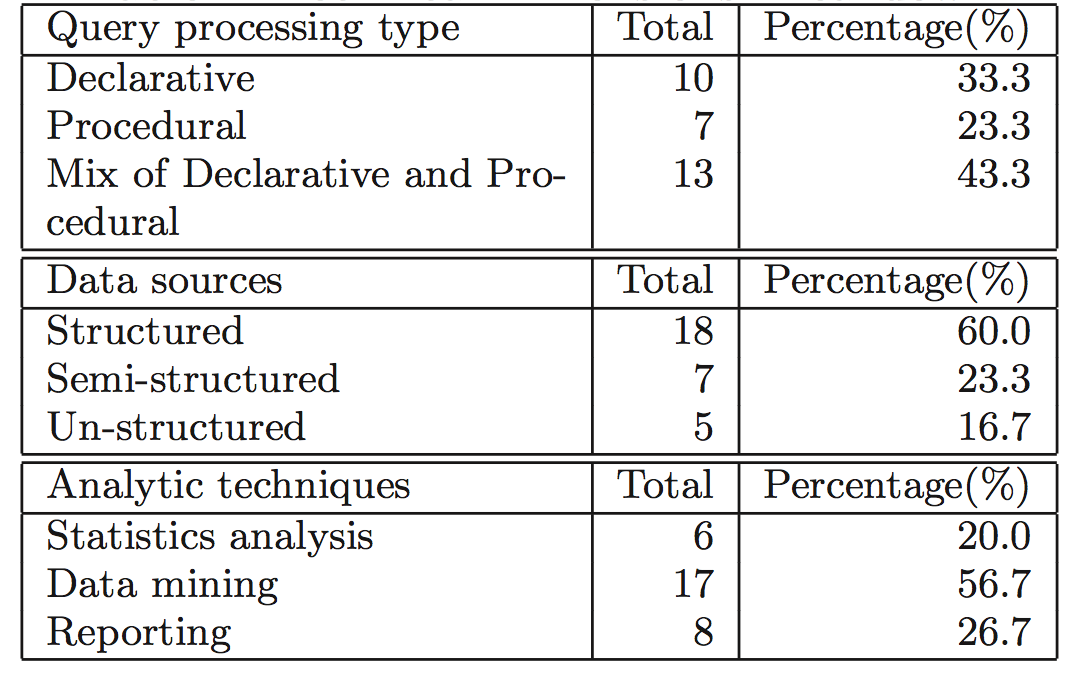

Workload queries

- Workloads constructed from the enterprise point of view:

- Marketing

- Merchandising

- Operations

- Supply chain

- New business models

- Also from a technical point of view:

- Use multiple data types in queries

- Declarative (SQL) and procedural (MR)

- Algorithmic data processing
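The difference between the declarative and the procedural style can be shown on one toy aggregation. This sketch is not a BigBench query: the `sales` table, its columns, and the data are hypothetical, SQLite stands in for a SQL engine, and the MapReduce part is a single-process imitation of the map, shuffle and reduce steps.

```python
import sqlite3
from collections import defaultdict
from itertools import chain

# Hypothetical toy data: (product category, sale amount)
SALES = [("books", 12.0), ("toys", 5.0), ("books", 8.0), ("toys", 7.5), ("garden", 20.0)]


def revenue_sql(rows):
    """Declarative: state what is wanted and let the engine decide how."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE sales (category TEXT, amount REAL)")
    con.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    result = dict(con.execute("SELECT category, SUM(amount) FROM sales GROUP BY category"))
    con.close()
    return result


def map_phase(row):
    """Map: emit one (key, value) pair per input record."""
    category, amount = row
    yield category, amount


def reduce_phase(key, values):
    """Reduce: combine all values that share a key."""
    return key, sum(values)


def revenue_mapreduce(rows):
    """Procedural: spell out map, shuffle (group by key) and reduce."""
    groups = defaultdict(list)
    for key, value in chain.from_iterable(map_phase(r) for r in rows):
        groups[key].append(value)
    return dict(reduce_phase(k, v) for k, v in groups.items())


print(revenue_sql(SALES))
print(revenue_mapreduce(SALES))
```

Both functions return the same totals; a benchmark that covers both styles exercises the query planner in one case and the user-written processing code in the other.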

Ghazal, Ahmad, et al. "BigBench: towards an industry standard benchmark for big data analytics." Proceedings of the 2013 ACM SIGMOD international conference on Management of data. ACM, 2013.

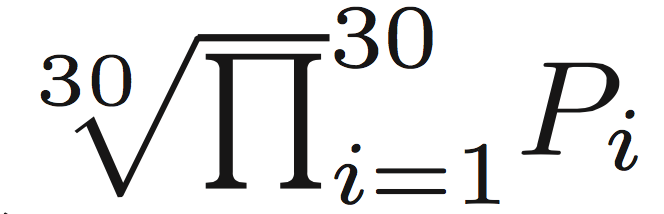

Proposed metric:

BigBench

Ghazal, Ahmad, et al. "BigBench: towards an industry standard benchmark for big data analytics." Proceedings of the 2013 ACM SIGMOD international conference on Management of data. ACM, 2013.

TPCx-BB: http://www.tpc.org/tpcx-bb/default.asp

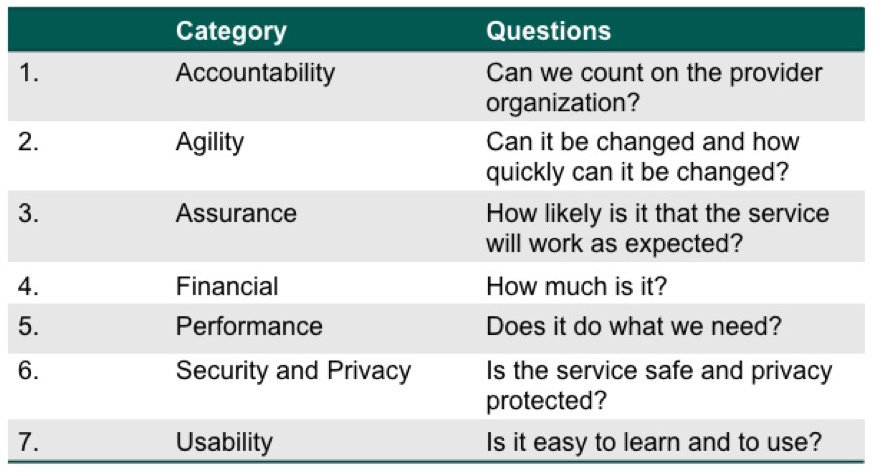

Ranking service providers

SMICloud

- Framework that builds on the Service Measurement Index (SMI) of the Cloud Service Measurement Index Consortium (CSMIC) to compare cloud providers.

- Offers evaluation based on user QoS requirements.

- How to measure various SMI attributes?

- How to rank service providers based on SMI attributes?

- Some SMI attributes are not easily quantified.

- Which SMI attributes to include?

- More SMI attributes complicate Multi-Criteria Decision Making.

- Analytic Hierarchy Process (AHP) to assign weights to SMI attributes.

- Decision support tool!
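How AHP turns pairwise judgements into weights can be sketched as follows. This is a simplified illustration, not the SMICloud ranking mechanism itself: it uses the row geometric-mean approximation of the AHP priority vector, and the attributes, judgements, provider names and scores are all hypothetical.

```python
import math


def ahp_weights(pairwise):
    """Approximate AHP priority weights with the row geometric-mean method."""
    geo_means = [math.prod(row) ** (1.0 / len(row)) for row in pairwise]
    total = sum(geo_means)
    return [g / total for g in geo_means]


def rank_providers(scores, weights):
    """Order providers by weighted sum of already normalised attribute scores."""
    totals = {
        name: sum(w * s for w, s in zip(weights, values))
        for name, values in scores.items()
    }
    return sorted(totals, key=totals.get, reverse=True)


# Hypothetical judgements for (cost, availability, response time):
# entry [i][j] says how much more important attribute i is than attribute j.
PAIRWISE = [
    [1.0, 2.0, 6.0],
    [1 / 2, 1.0, 3.0],
    [1 / 6, 1 / 3, 1.0],
]

# Hypothetical normalised scores per provider, higher is better.
PROVIDERS = {"provider_a": [0.9, 0.5, 0.4], "provider_b": [0.5, 0.9, 0.9]}

weights = ahp_weights(PAIRWISE)
print([round(w, 3) for w in weights])
print(rank_providers(PROVIDERS, weights))
```

The judgement matrix above is perfectly consistent, so the approximation recovers the weights exactly; with inconsistent judgements the result is only an estimate of the principal eigenvector.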

SMICloud

Service Measurement Index

http://www.csmic.org

- Business-relevant KPIs based on ISO standards.

- Holistic view of the QoS requirements of a cloud customer.

- Catalogues service providers

- Monitors service providers

- Brokers with cloud customers

SMICloud

Key Performance Indicators

- Service response time

- Sustainability

- Suitability

- Accuracy

- Transparency

- Interoperability

- Availability

- Reliability

- Stability

- Cost

- Adaptability

- Elasticity

- Usability

- Throughput and efficiency

- Scalability

SMICloud

Garg, Saurabh Kumar, Steve Versteeg, and Rajkumar Buyya. "A framework for ranking of cloud computing services." Future Generation Computer Systems 29.4 (2013): 1012-1023.

SMICloud: http://www.csmic.org

Summary of key concepts

Data generation

- Data used in benchmarking must be generated

- Network bandwidth insufficient

- Storage systems insufficient

- Empirical data not tailorable

- Methods

- On-the-fly

- A priori

- Hybrid

- Generator must support wide data dynamics

- Able to address "4 V's"
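The on-the-fly and a priori methods can be contrasted with a small sketch. It assumes a deterministic design in which every row is derived from a seed and its row id, so any worker can regenerate any partition locally instead of reading it over the network. The schema, the seed mixing and all names are hypothetical and do not reproduce any particular generator's API.

```python
import random


def generate_row(table_seed, row_id):
    """Derive one synthetic row purely from (seed, row id)."""
    rng = random.Random(table_seed * 1_000_003 + row_id)
    return {
        "customer_id": row_id,
        "age": rng.randint(18, 90),
        "basket_value": round(rng.uniform(1.0, 500.0), 2),
    }


def stream_rows(table_seed, start, stop):
    """On-the-fly: yield rows lazily so nothing has to be stored or shipped."""
    for row_id in range(start, stop):
        yield generate_row(table_seed, row_id)


def materialise_rows(table_seed, start, stop):
    """A priori: build the whole partition up front, e.g. to write it to disk."""
    return list(stream_rows(table_seed, start, stop))


# Two workers generating disjoint partitions reproduce the full table.
worker_1 = list(stream_rows(42, 0, 10))
worker_2 = list(stream_rows(42, 10, 20))
print(worker_1 + worker_2 == materialise_rows(42, 0, 20))
```

A hybrid approach would materialise only the parts of the data that are expensive to compute and stream the rest.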

Workloads

- Multiple types of workloads must be supported

- Premade suites

- Custom designed

- Platform independent

- Should support at least the most common APIs

- MapReduce, Spark, MongoDB, Cassandra...

- Enables more workloads

- Possible to holistically evaluate system

Metrics

- Systems have multiple dimensions

- Dimensions vary between applications

- Must support reporting of various properties

- No single number can represent a system

- Users have different needs

- Need to be able to rank providers accordingly

- Needs multidimensional metrics

- Quality of Service is important in the Cloud

- No single number can represent a system holistically
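A small worked example shows why a single number is not enough. All systems, dimensions, scores and user profiles below are hypothetical, and scores are assumed to be normalised so that higher is better.

```python
import math

# Hypothetical normalised scores per dimension (higher is better).
SYSTEMS = {
    "system_a": {"throughput": 0.9, "latency": 0.3, "cost": 0.6},
    "system_b": {"throughput": 0.4, "latency": 0.9, "cost": 0.5},
}

# Hypothetical user profiles: how much each dimension matters to them.
USERS = {
    "batch_analytics": {"throughput": 0.7, "latency": 0.1, "cost": 0.2},
    "interactive_web": {"throughput": 0.1, "latency": 0.7, "cost": 0.2},
}


def single_number(system):
    """Collapse all dimensions into one unweighted average."""
    return sum(system.values()) / len(system)


def score(system, weights):
    """Weighted score of one system for one user profile."""
    return sum(weights[dim] * system[dim] for dim in weights)


def best_for(weights, systems):
    """Pick the system with the highest weighted score for this user."""
    return max(systems, key=lambda name: score(systems[name], weights))


for name, system in SYSTEMS.items():
    print(name, "average:", round(single_number(system), 3))
for user, weights in USERS.items():
    print(user, "->", best_for(weights, SYSTEMS))
```

Both systems have the same average, yet each user profile prefers a different one, which is the case a multidimensional metric has to capture.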

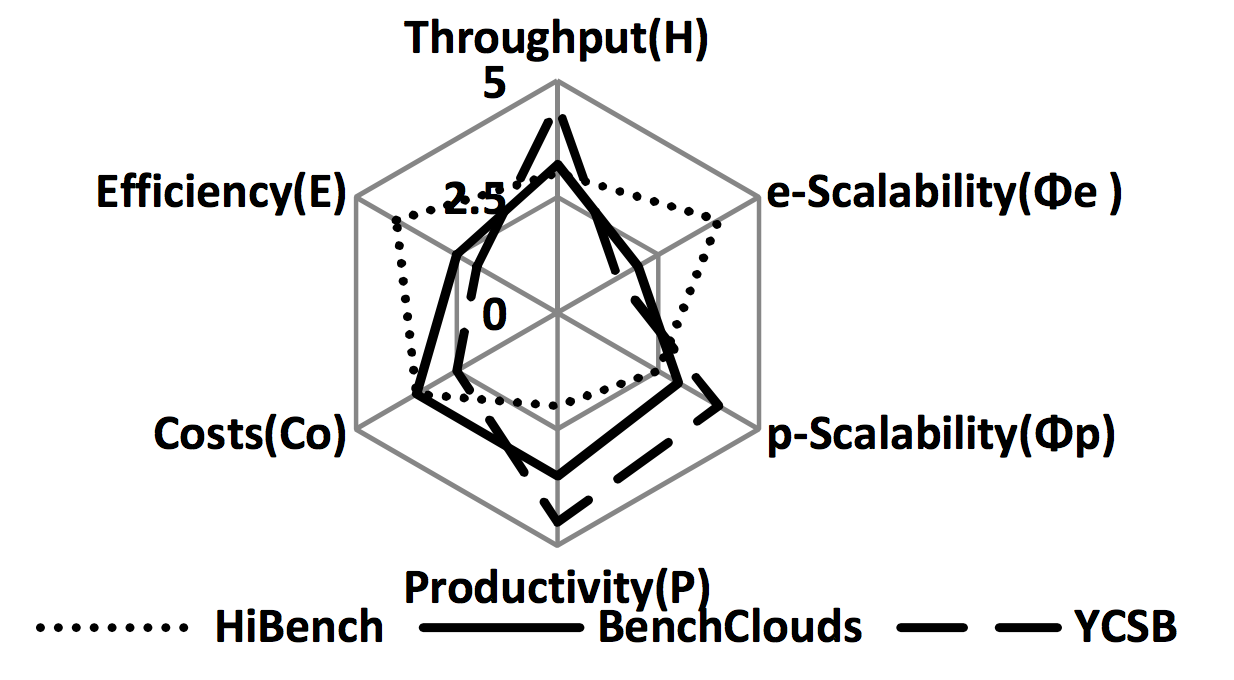

Differences between benchmarks

Hwang, Kai, et al. "Cloud Performance Modeling with Benchmark Evaluation of Elastic Scaling Strategies." Parallel and Distributed Systems, IEEE Transactions on 27.1 (2016): 130-143.

- Different benchmarks can achieve varying results

- Implementation details

- Programming model

- Programming language

- Data

- Workloads

Industry standard

(Does not exist yet)

Industry standards

- TPCx-HS, TPCx-BB

- TPC has had several industry standard benchmarks

- PARSEC 2.0

- Version 1.0 was a de facto benchmark, now obsolete

- Has major overhauls, BigData approach, but still lacking

- CloudHarmony

- Provides ranking services of different cloud providers

- Based on CloudBench, CloudProbe

- CloudSpectator

- Based on UnixBench, Phoronix Test Suite, others

- Current top Google result for 'Cloud benchmarking'

Sources


- BigBench

- Ghazal, Ahmad, et al. "BigBench: towards an industry standard benchmark for big data analytics." Proceedings of the 2013 ACM SIGMOD international conference on Management of data. ACM, 2013.

- SMICloud

- Garg, Saurabh Kumar, Steve Versteeg, and Rajkumar Buyya. "SMICloud: a framework for comparing and ranking cloud services." Utility and Cloud Computing (UCC), 2011 Fourth IEEE International Conference on. IEEE, 2011.

- TPC, see associated documentation

- http://www.tpc.org

- Others: ask, and I shall provide

Questions?

Comments?

More details on benchmarks

HPCC

- Linpack TPP benchmark measures floating point rate of execution for linear equations.

- Floating point rate of execution of double precision real matrix-matrix multiplication.

- Synthetic benchmark program to measure sustainable memory bandwidth and the corresponding computation rate for simple vector kernel.

- Parallel matrix transpose for measuring network capacity.

- Measures the rate of integer random updates of memory (GUPS).

- Measures the floating point rate of execution of double precision complex one-dimensional Discrete Fourier Transform (DFT).

- Communication bandwidth and latency.

SPEC CPU

Dixit, Kaivalya M. "Overview of the SPEC Benchmarks." (1993): 489-521.

LINPACK

- Collection of subroutines written in Fortran to solve systems of linear equations (matrix operations).

- Measures double precision floating point performance.

- Widely used; it is the standard benchmark for ranking the TOP500 supercomputers.
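The measurement principle can be sketched in pure Python: solve a dense linear system, time it, and divide a nominal operation count by the elapsed time. This is not the LINPACK code, which is Fortran and far faster. The function names are made up, and the operation count 2/3 n^3 + 2 n^2 is the nominal figure conventionally used for this kind of solve.

```python
import random
import time


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (on copies)."""
    n = len(b)
    a = [row[:] for row in a]
    b = b[:]
    for k in range(n):
        # Partial pivoting: bring the largest remaining entry of column k to row k.
        pivot = max(range(k, n), key=lambda i: abs(a[i][k]))
        a[k], a[pivot] = a[pivot], a[k]
        b[k], b[pivot] = b[pivot], b[k]
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
            b[i] -= factor * b[k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - tail) / a[i][i]
    return x


def linpack_style_mflops(n=100, seed=0):
    """Return (nominal MFLOPS, max error) for one random n-by-n solve."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [sum(row) for row in a]  # chosen so the exact solution is all ones
    start = time.perf_counter()
    x = solve(a, b)
    elapsed = time.perf_counter() - start
    nominal_flops = (2.0 / 3.0) * n**3 + 2.0 * n**2
    return nominal_flops / elapsed / 1e6, max(abs(v - 1.0) for v in x)


if __name__ == "__main__":
    rate, error = linpack_style_mflops()
    print(f"{rate:.2f} MFLOPS (nominal), max error {error:.2e}")
```

Checking the error alongside the rate matters: a fast run that returns a wrong solution is not a valid result.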

LINPACK problems

- Small, fit in cache

- Obsolete instruction mix

- Uncontrolled source code

- Prone to compiler tricks

- Short runtimes on modern machines

- Single-number performance characterization with a single benchmark

- Difficult to reproduce results (short runtime and low-precision UNIX timer)

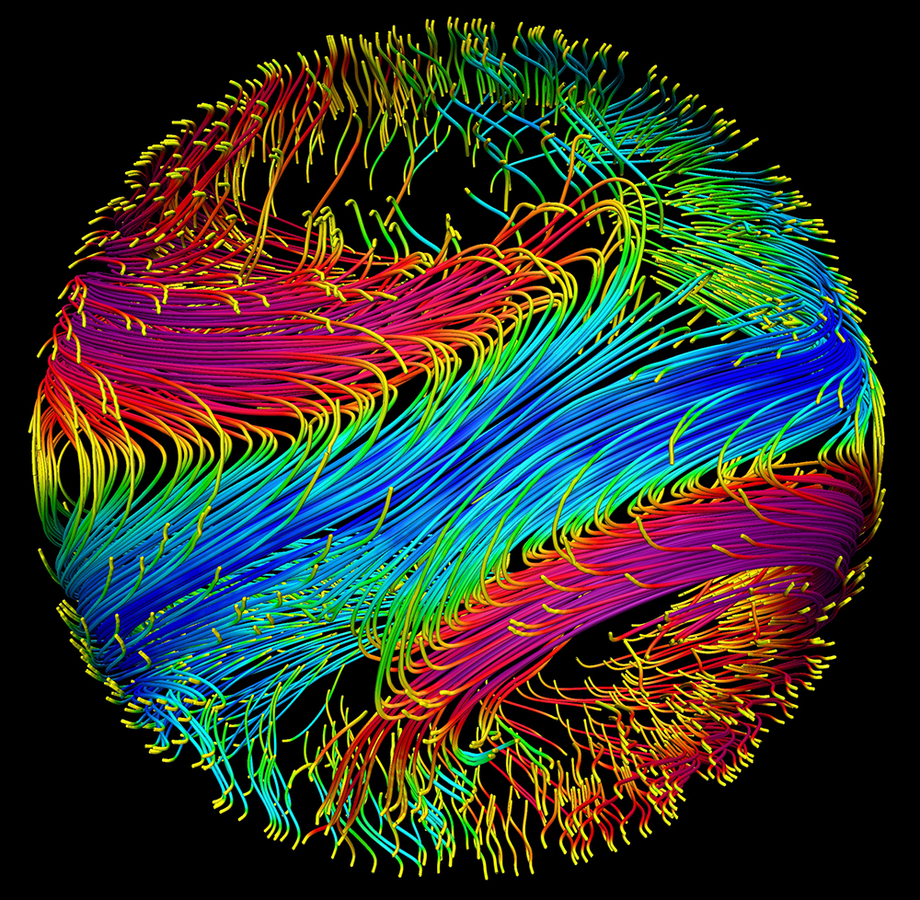

NAS parallel benchmarks

- Small set of programs designed to help evaluate the performance of parallel supercomputers.

- Derived from computational fluid dynamics (CFD) applications; the original "pencil-and-paper" specification (NPB 1) consists of five kernels and three pseudo-applications.

- Has been extended to include new benchmarks for unstructured adaptive mesh, parallel I/O, multi-zone applications, and computational grids.

Courtesy of NASA, NAS: https://www.nas.nasa.gov/publications/gallery.html

More cloud benchmarks

- AMP benchmarks

- LinkBench

- Cloud Suite

- CloudCmp

- CloudBench

- Cloudstone

- C-SMART

- ... the list goes on