Deploying Machine Learning Models to Production

Nawfal Tachfine

Data Scientist

Typical Data Science Workflow

formulate problem

get data

build and clean dataset

study dataset

train model

+ feature selection

+ algorithm selection

+ hyperparameter optimization

Then what?

Enter Industrialization...

goal = "automatically serve predictions to any given information system"

philosophy = "start from final use case and work your way back to data-prep"

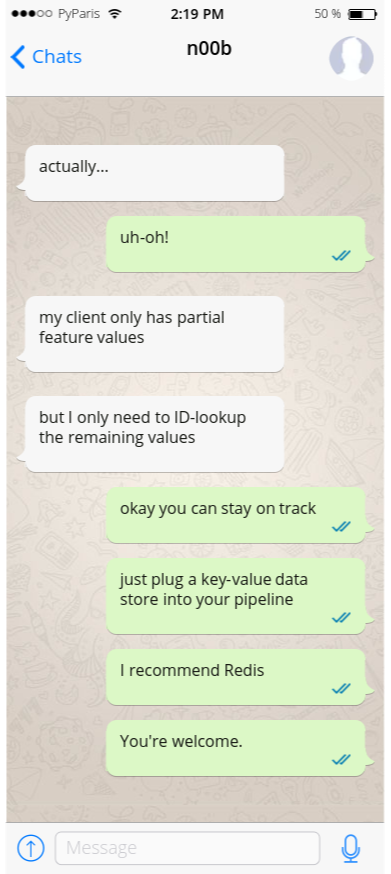

potential_constraints = [
    data_sources,
    data_enrichment,
    model_stability,
    scalability,
    resilience,
    resources
]

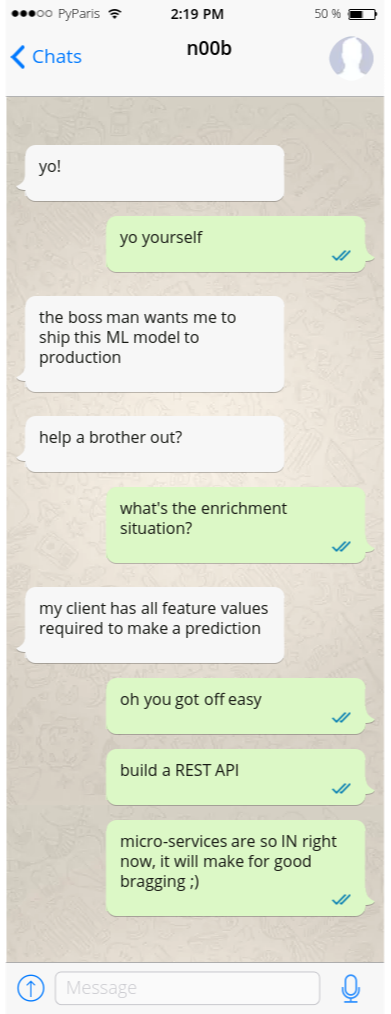

The Data Dependency Problem

Design Patterns

- Think about your production use-case as early as possible.
- Your data pre-processing pipelines must be deterministic and reproduce the same conditions as in training.
  - make your transformations robust against abnormal values
  - variable order matters (cf. scikit-learn)
- Log as much as you can.
- Write clean code: your models will evolve (re-training, new features, etc.)
- Keep things simple: stability trumps complexity.
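The pre-processing points above (determinism, robustness to abnormal values, fixed variable order, logging) can be sketched as follows. This is only an illustration under stated assumptions: the feature names, bounds, and default values are hypothetical and would in practice come from the training set.

```python
import logging
import math

logger = logging.getLogger("preprocessing")

# Hypothetical schema: the order must match the column order used at training time.
FEATURE_ORDER = ["length", "diameter", "height"]
# Hypothetical valid ranges and fallback values, assumed to be computed on the training set.
BOUNDS = {"length": (0.0, 1.0), "diameter": (0.0, 1.0), "height": (0.0, 0.5)}
DEFAULTS = {"length": 0.5, "diameter": 0.4, "height": 0.14}


def prepare_record(record):
    """Turn one input dict into a feature row with a fixed column order."""
    row = []
    for name in FEATURE_ORDER:
        raw = record.get(name)
        try:
            value = float(raw)
        except (TypeError, ValueError):
            value = math.nan
        if math.isnan(value):
            # Missing or unparseable input: fall back to a fixed default and log it.
            logger.warning("missing or invalid %s=%r, using default", name, raw)
            value = DEFAULTS[name]
        low, high = BOUNDS[name]
        clipped = min(max(value, low), high)
        if clipped != value:
            logger.warning("%s=%s out of range, clipped to %s", name, value, clipped)
        row.append(clipped)
    return row
```

Because the function reads features by name in a fixed order and uses no randomness or external state, the same input always yields the same row, regardless of the key order in the incoming record.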

Building a Prediction API

- Web app framework: Flask
  - simple,
  - lightweight,
  - powerful

This approach is based on serialization and can be used for models trained with libraries other than scikit-learn.
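As a rough sketch of what serialization means here, the example below saves a fitted object to disk and loads it back with the standard-library `pickle` module. `MeanModel` is a hypothetical stand-in for a real trained model, and the file name is an assumption; any picklable object exposing `predict` could be handled the same way.

```python
import pickle
import tempfile
from pathlib import Path


class MeanModel:
    """Hypothetical stand-in for a trained model; it always predicts the training mean."""

    def fit(self, rows, targets):
        self.mean_ = sum(targets) / len(targets)
        return self

    def predict(self, rows):
        return [self.mean_ for _ in rows]


def save_model(model, path):
    with open(path, "wb") as f:
        pickle.dump(model, f)


def load_model(path):
    # Only unpickle files you trust: pickle can execute arbitrary code on load.
    with open(path, "rb") as f:
        return pickle.load(f)


# Training side: fit once, then write the model to disk.
model_path = Path(tempfile.mkdtemp()) / "model.pkl"
save_model(MeanModel().fit([[0.3], [0.5]], [9, 11]), model_path)

# Serving side: load the file once at startup and reuse it for every request.
model = load_model(model_path)
predictions = model.predict([[0.4], [0.6]])
```

Loading the model once at startup, rather than on every request, keeps prediction latency low. The serving environment needs the same library versions as the training environment for the loaded object to behave identically.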

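from flask import Flask, jsonify, request

app = Flask(__name__)

# `model` (the deserialized model) and `prepare` (the pre-processing
# function) are assumed to be defined or loaded before this point.

@app.route('/api/v1.0/aballone', methods=['POST'])
def index():
    query = request.get_json()['inputs']
    data = prepare(query)
    output = model.predict(data)
    # Assuming predict() returns a NumPy array: convert it to a plain list,
    # because NumPy arrays are not JSON-serializable.
    return jsonify({'outputs': output.tolist()})

if __name__ == '__main__':
    app.run(host='0.0.0.0')

Toolbox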


- I/O: JSON
  - universal support
- Virtualization: Docker
  - no more dependency problems,
  - run (almost) anywhere
- Deployment: AWS Elastic Beanstalk
  - (very) easy to use,
  - (relatively) cheap,
  - provides auto-scaling and load-balancing.
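To illustrate the JSON I/O contract listed above, here is a minimal client sketch using only the standard library. The URL (Flask's default port on localhost) and the shape of each input record are assumptions; the `inputs` and `outputs` keys follow the endpoint shown earlier.

```python
import json
import urllib.request

# Hypothetical endpoint URL; replace with wherever the API is actually deployed.
API_URL = "http://localhost:5000/api/v1.0/aballone"


def build_request(records, url=API_URL):
    """Build a POST request whose JSON body carries the records under 'inputs'."""
    body = json.dumps({"inputs": records}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def get_predictions(records, url=API_URL, timeout=10):
    """Send the records and return the list stored under 'outputs'."""
    with urllib.request.urlopen(build_request(records, url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["outputs"]


# Building the request does not touch the network; get_predictions() does.
request = build_request([{"length": 0.45, "diameter": 0.35, "height": 0.1}])
```

Since the contract is plain JSON over HTTP, the same call can be made from any language or information system, which is the point of choosing JSON for I/O.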


Demo Time!