Learning the Language of Chemistry

How Deep Learning Is Shaping the Drug Discovery Process

- Applied Scientist @ Microsoft

- Ex-NVIDIA

- Ex-USC-INI

- DL Admirer

Traditional Drug Discovery Process

- Target Validation and Lead Selection

- Lead Optimization

- Multiple Testing Phases

- Final Drug

Traditional Drug Discovery Process

- Takes 10+ years to develop a single drug!

- Even lower-bound estimates put the cost of developing a drug at roughly $2B!

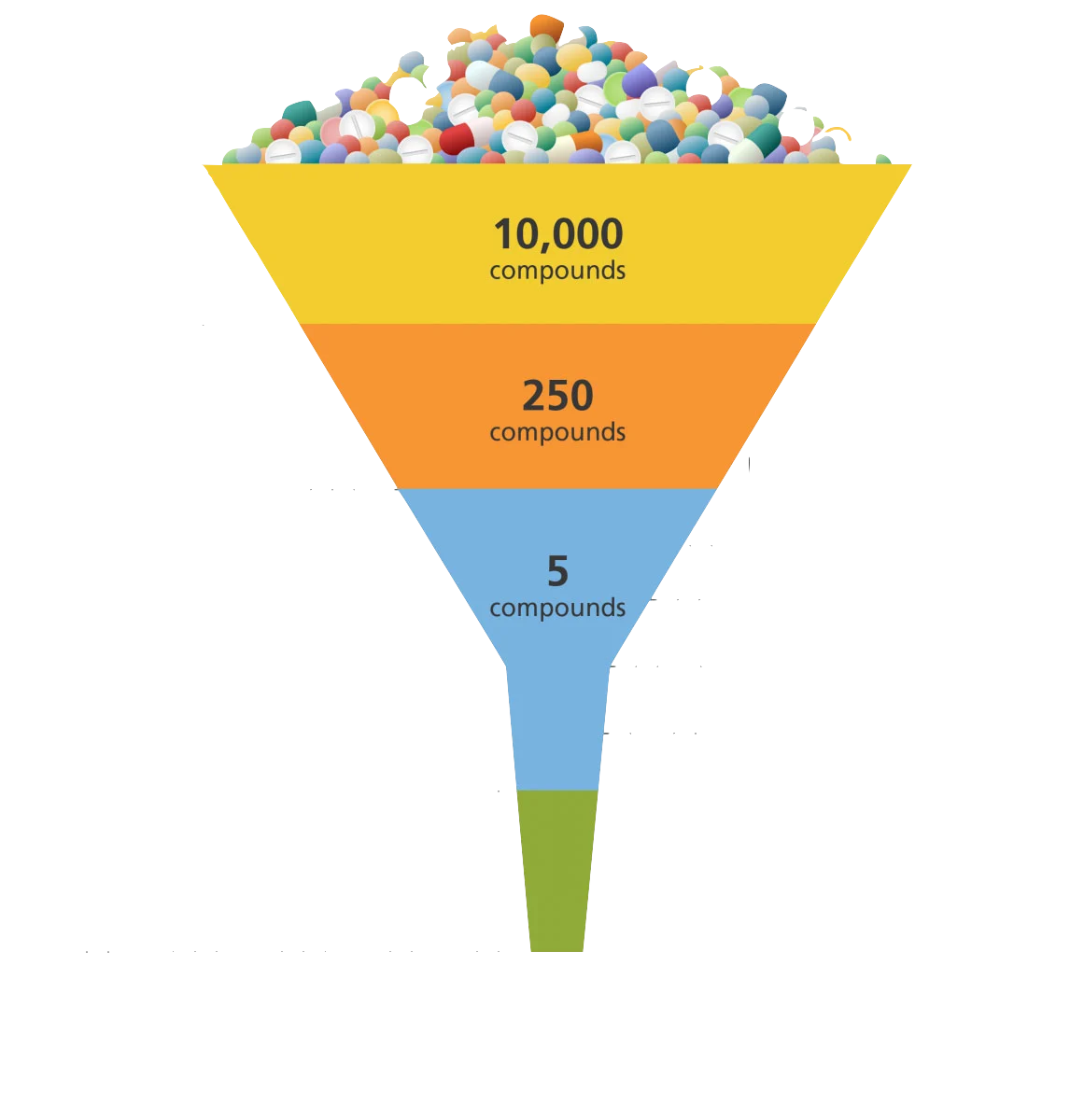

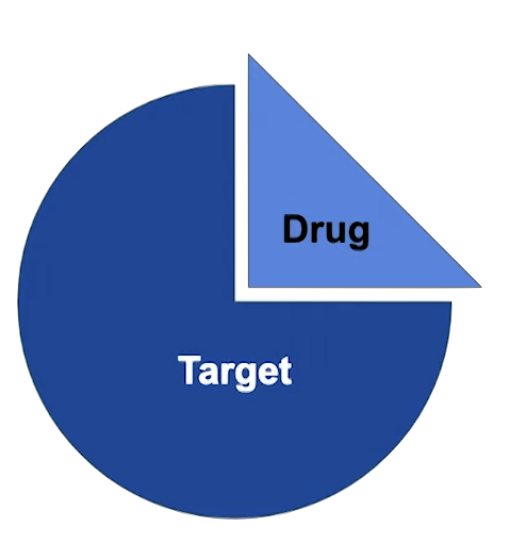

Drug Discovery 101

-

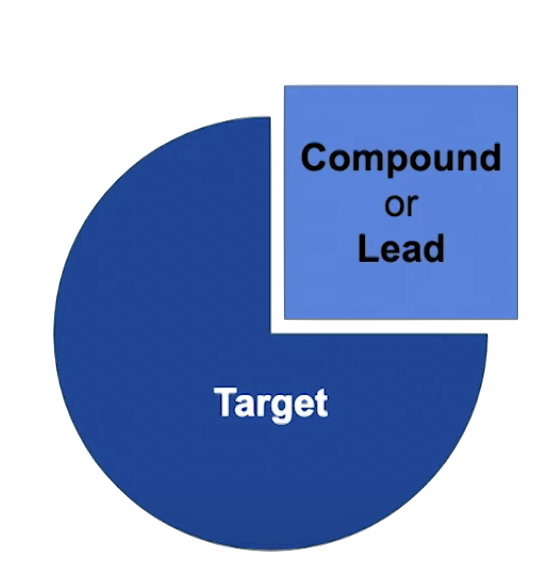

Target : A compound that is associated with a disease and it is the desired therapy destination.

-

Lead : A molecule that shows remedial promise but it is not fully optimized.

-

Drug : An optimized lead leading to desired results.

Drug Discovery 101

- Exploring the universe of possible compounds leads to a combinatorial explosion! (10^20 to 10^60 molecules)

- What we want is targeted, directed exploration.

- What if we had a magic box that could generate novel molecules with the desired properties?

Cue Deep Learning!
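A back-of-the-envelope calculation shows why brute-force enumeration is hopeless. This minimal sketch assumes a hypothetical (and generous) throughput of 10^9 molecules evaluated per second; the molecule counts are the range quoted above.

```python
# Back-of-the-envelope estimate of brute-force enumeration time.
# ASSUMPTION: a hypothetical throughput of 1e9 molecules evaluated per second.
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # ~3.156e7 seconds


def years_to_enumerate(n_molecules: float, per_second: float = 1e9) -> float:
    """Years needed to evaluate every molecule once at a fixed throughput."""
    return n_molecules / per_second / SECONDS_PER_YEAR


for size in (1e20, 1e60):  # the range of chemical-space sizes quoted above
    print(f"{size:.0e} molecules -> {years_to_enumerate(size):.1e} years")
```

Even at that rate, 10^20 molecules take roughly 3,000 years and 10^60 take about 3 × 10^43 years, hence the need for directed exploration.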

NLP In Generative Chemistry

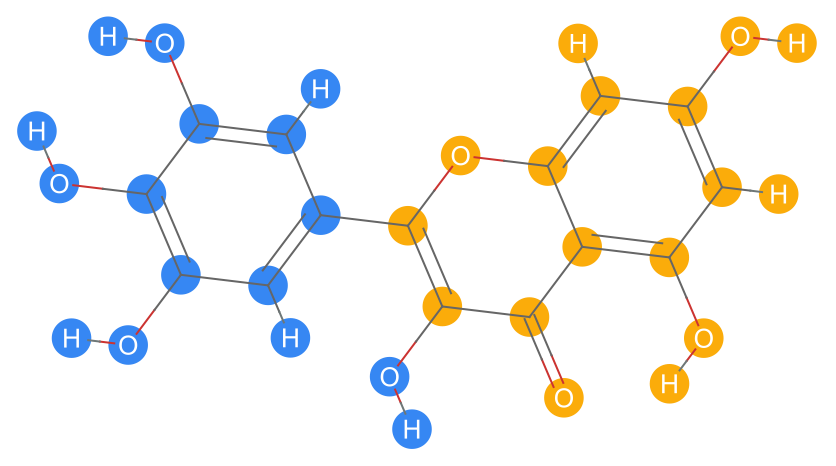

Different representations

-

But how do you represent a molecule to train these models?

-

Molecules are usually represented in two primary formats:

-

Graphs

-

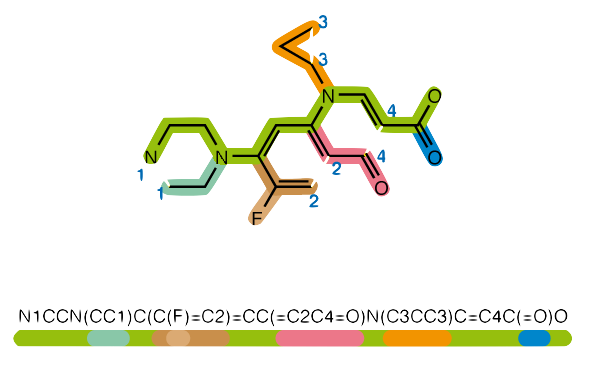

SMILES

-

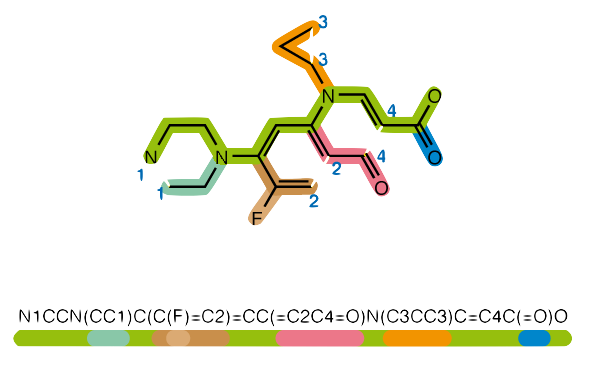
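To feed SMILES strings to an NLP model, each string is first split into tokens. Below is a minimal sketch using a simplified regular expression; it is an assumption for illustration, not MegaMolBART's actual tokenizer. Bracket atoms such as `[Na+]`, the two-letter atoms `Cl` and `Br`, `%NN` ring-closure labels, and every other single character each become one token.

```python
import re

# Simplified SMILES token pattern (ASSUMPTION: illustrative, not exhaustive).
# Alternation order matters: bracket atoms and two-letter atoms are tried
# before the catch-all single character ".".
SMILES_TOKEN = re.compile(r"\[[^\]]+\]|Br|Cl|%\d{2}|.")


def tokenize(smiles: str) -> list[str]:
    """Split a SMILES string into model tokens (lossless: tokens rejoin to the input)."""
    return SMILES_TOKEN.findall(smiles)


print(tokenize("CC(=O)Oc1ccccc1C(=O)O"))  # aspirin: one token per character
print(tokenize("[Na+].[Cl-]"))            # ['[Na+]', '.', '[Cl-]']
print(tokenize("ClCCBr"))                 # ['Cl', 'C', 'C', 'Br']
```

Treating `Cl` as a single token matters: a character-level split would produce `C` followed by `l`, which the model would have to learn to recombine.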

Different representations

- SMILES stands for Simplified Molecular Input Line-Entry System.

- A textual representation obtained from a depth-first traversal of the molecular graph.

- Chemical rules (e.g., atom valences, matched ring-closure digits, and balanced branch parentheses) determine whether a string is valid.
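The depth-first idea can be sketched in a few lines. This is a minimal, hypothetical example for acyclic molecules only (no rings, charges, or aromaticity), with acetic acid as a toy graph; real toolkits handle far more cases.

```python
# Hypothetical toy molecular graph (heavy atoms only): acetic acid.
# Bond orders: 1 = single, 2 = double. Rings, charges, and aromaticity are
# deliberately left out to keep the traversal easy to follow.
ACETIC_ACID_ATOMS = ["C", "C", "O", "O"]
ACETIC_ACID_BONDS = {(0, 1): 1, (1, 2): 2, (1, 3): 1}

BOND_SYMBOL = {1: "", 2: "=", 3: "#"}  # single bonds are implicit in SMILES


def to_smiles(atoms, bonds, start=0):
    """Write an acyclic molecular graph as SMILES via depth-first traversal."""
    neighbors = {i: [] for i in range(len(atoms))}
    for (a, b), order in bonds.items():
        neighbors[a].append((b, order))
        neighbors[b].append((a, order))
    visited = set()

    def visit(i):
        visited.add(i)
        # In a tree, the unvisited neighbors are exactly this atom's children.
        children = [(j, o) for j, o in neighbors[i] if j not in visited]
        branches = [BOND_SYMBOL[o] + visit(j) for j, o in children]
        # Every branch except the last is wrapped in parentheses.
        side = "".join(f"({b})" for b in branches[:-1])
        return atoms[i] + side + "".join(branches[-1:])

    return visit(start)


print(to_smiles(ACETIC_ACID_ATOMS, ACETIC_ACID_BONDS))           # CC(=O)O
print(to_smiles(ACETIC_ACID_ATOMS, ACETIC_ACID_BONDS, start=2))  # O=C(C)O
```

Starting the traversal at a different atom yields a different but equivalent string, which is why one molecule has many valid SMILES and why toolkits define a canonical form.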

Transformers to the Rescue

- Transformers have revolutionized NLP tasks.

- Championed self-supervised learning.

- State of the art (SOTA) in:

  - Machine Translation

  - Question-Answering

  - Summarization

  - So many other tasks!

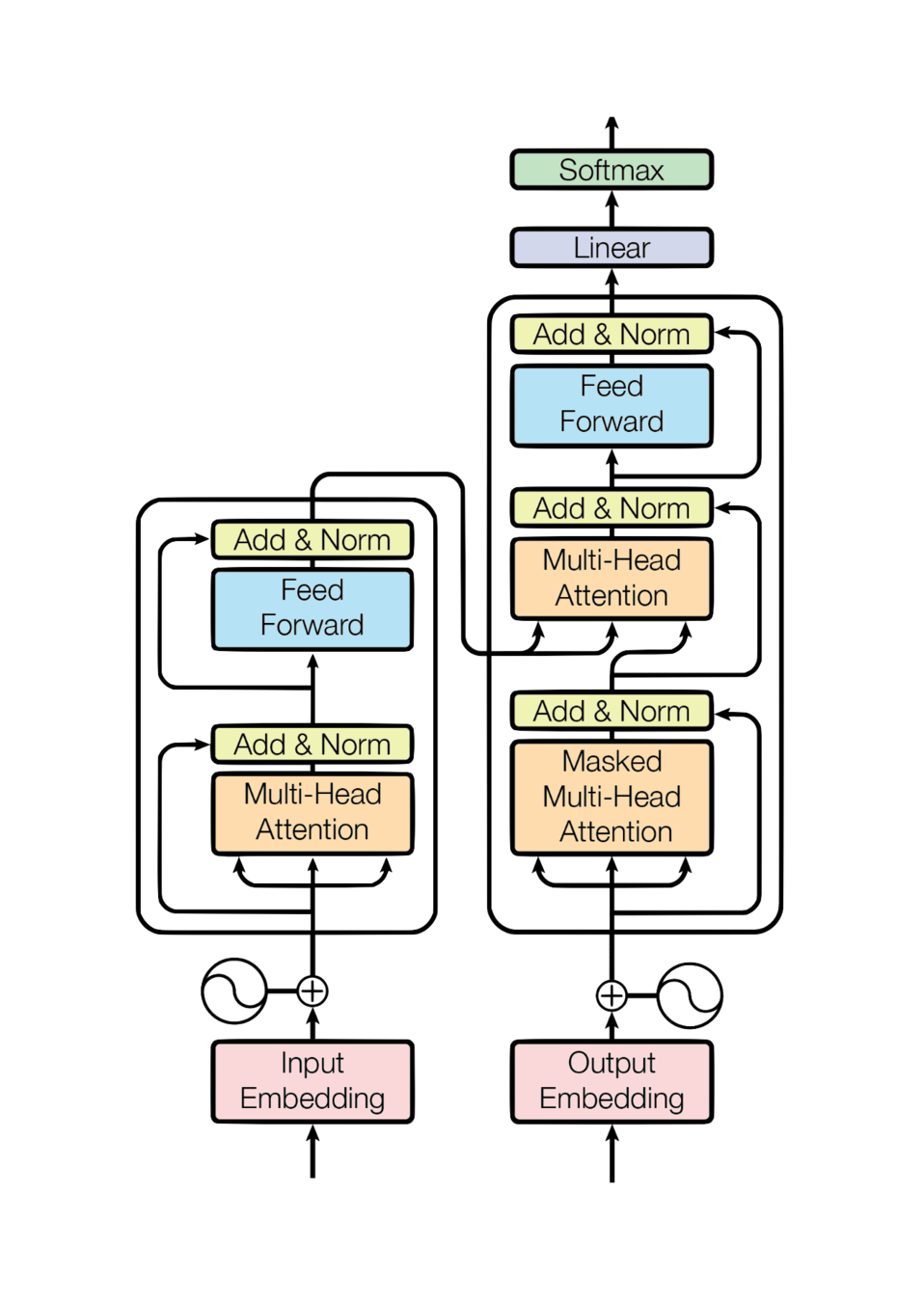

Transformers to the Rescue

-

The famous Transformer architecture!

-

The daunting model has two broad parts:

-

Encoder : Learn the latent embeddings.

-

Decoder : Generate output auto-regressively.

-

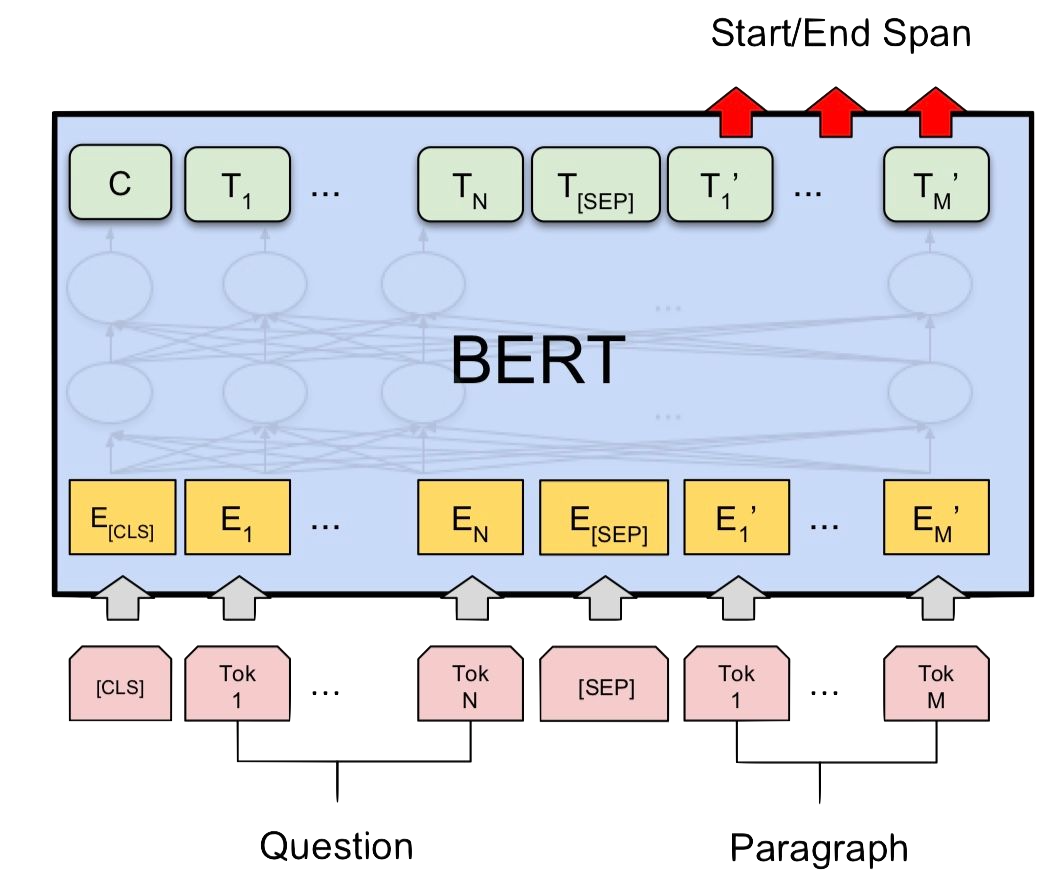
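Both parts are built around scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, from the original Transformer. The minimal pure-Python sketch below treats Q, K, and V as lists of per-token vectors; the optional boolean mask is the hook decoders use to hide positions. It omits multiple heads, learned projections, and batching.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V, mask=None):
    """Scaled dot-product attention over lists of vectors (one per token).

    mask[i][j] == False blocks query i from attending to key j; every row
    must allow at least one key.
    """
    d_k = len(K[0])
    out = []
    for i, q in enumerate(Q):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        if mask is not None:
            scores = [s if mask[i][j] else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)  # how much query i attends to each key
        out.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return out


# Identical keys give uniform weights, so the output is the mean of V.
print(attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[1.0, 0.0], [3.0, 2.0]]))
```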

Transformers to the Rescue

Encoder Only

- BERT-based models.

- Uses bidirectional context (tokens on both sides of a position) for more effective learning.

- Proposed the Masked Language Modeling (MLM) pretraining objective.
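MLM hides some input tokens and trains the encoder to recover them from both sides of the context. The sketch below follows BERT's published scheme for the selected positions (80% become `[MASK]`, 10% a random token, 10% unchanged). Selecting each position independently with probability `mask_prob` is a simplification, and the toy vocabulary is hypothetical.

```python
import random


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """BERT-style MLM corruption. Returns (model inputs, labels).

    labels[i] holds the original token where the loss is computed,
    and None where the position is not scored.
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must predict the original token here
            r = rng.random()
            if r < 0.8:
                inputs.append("[MASK]")          # 80%: replace with the mask token
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                inputs.append(tok)                # 10%: keep the token unchanged
        else:
            labels.append(None)
            inputs.append(tok)
    return inputs, labels


smiles_tokens = list("CC(=O)Oc1ccccc1C(=O)O")  # aspirin, one token per character
print(mask_tokens(smiles_tokens, vocab=["C", "O", "N", "c", "1", "(", ")", "="]))
```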

Transformers to the Rescue

Decoder Only

- GPT-based models.

- Unidirectional, autoregressive model: each token is predicted only from the tokens before it.

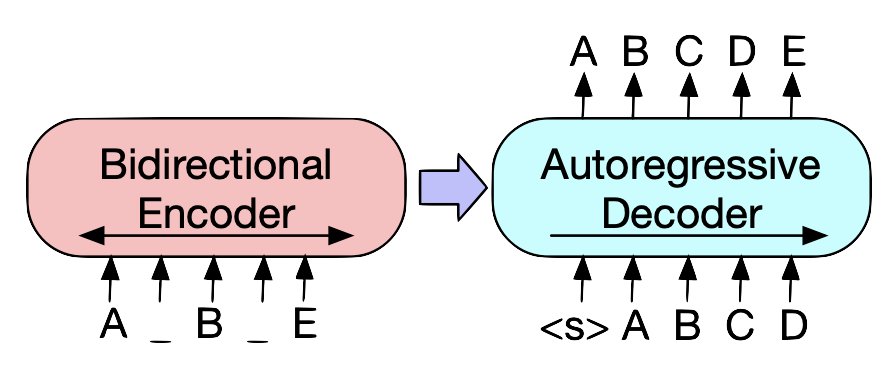
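Two pieces capture "unidirectional and autoregressive": a causal mask that lets position i attend only to positions up to i, and a decoding loop that appends one token at a time. In this minimal sketch, `next_token` is a hypothetical stand-in for a trained model, and `<s>`/`</s>` are assumed start and end tokens.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def generate(next_token, bos="<s>", eos="</s>", max_len=20):
    """Greedy autoregressive decoding: each step sees only the prefix so far."""
    seq = [bos]
    while len(seq) < max_len:
        tok = next_token(seq)  # the model conditions on tokens generated so far
        seq.append(tok)
        if tok == eos:
            break
    return seq


# Hypothetical "model" that always continues the prefix toward ethanol (CCO).
target = ["C", "C", "O", "</s>"]
print(causal_mask(3))
print(generate(lambda prefix: target[len(prefix) - 1]))  # ['<s>', 'C', 'C', 'O', '</s>']
```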

Transformers to the Rescue

Encoder-Decoder

Best of both worlds!

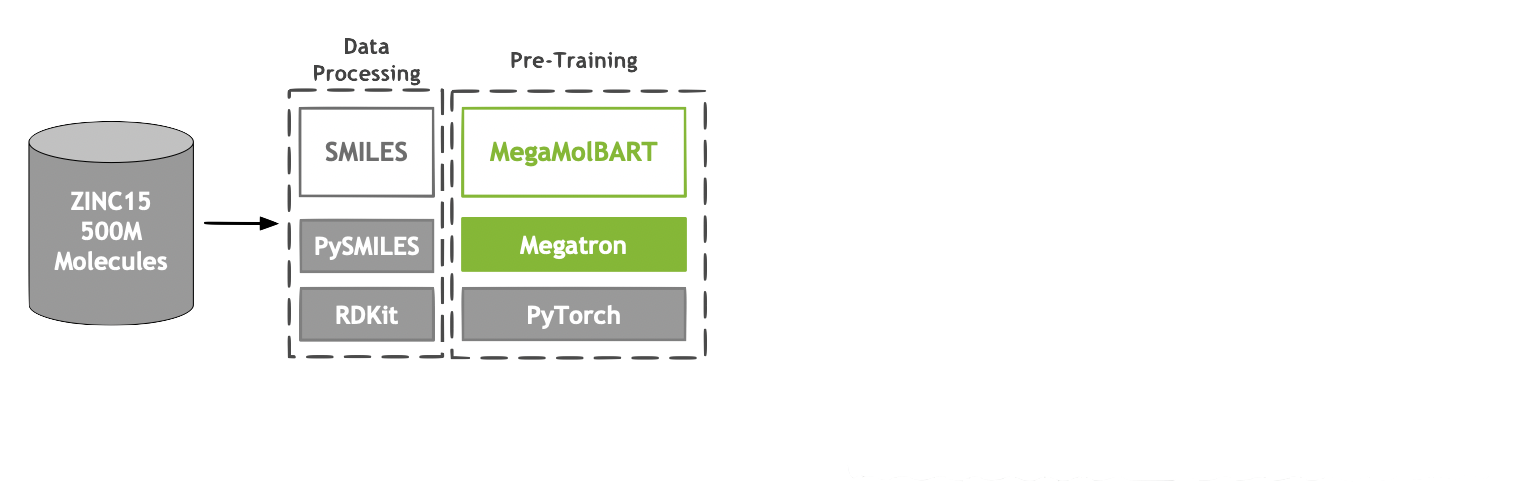

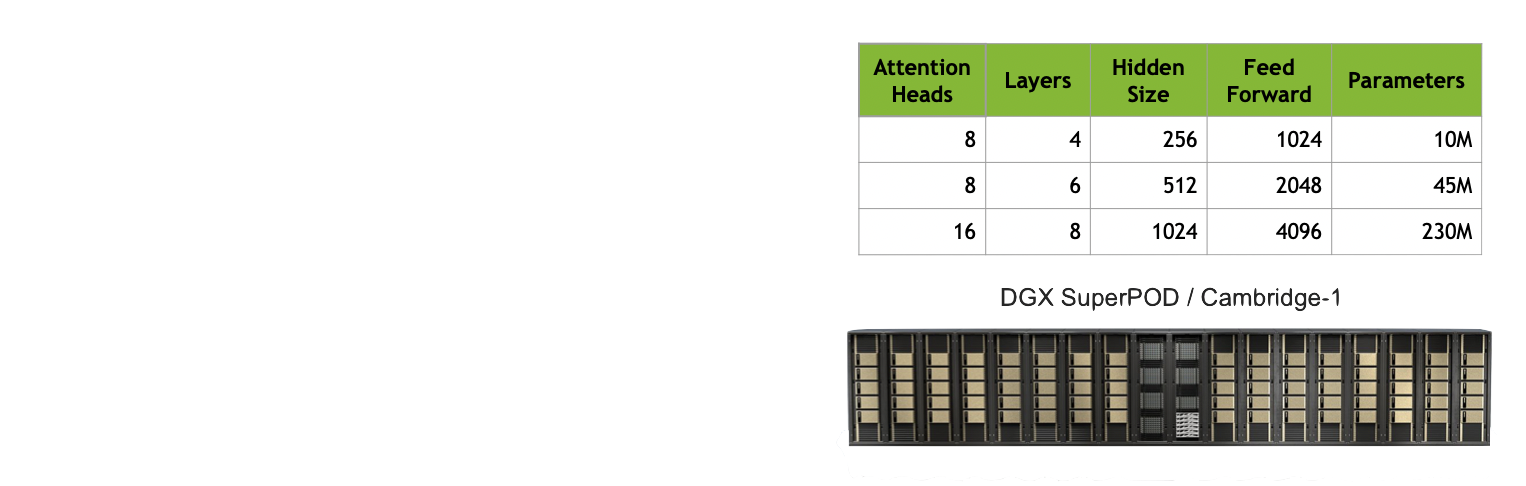

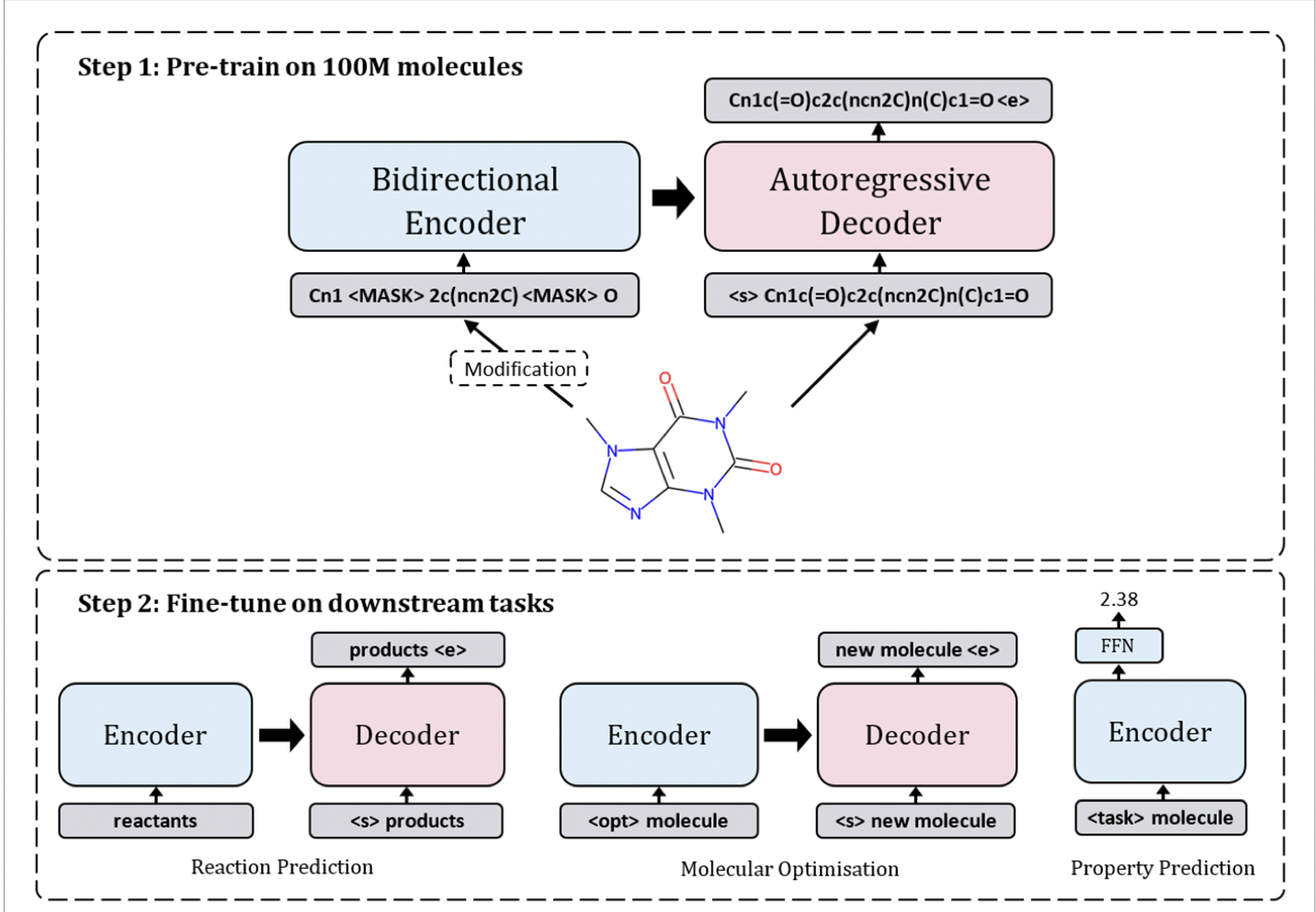

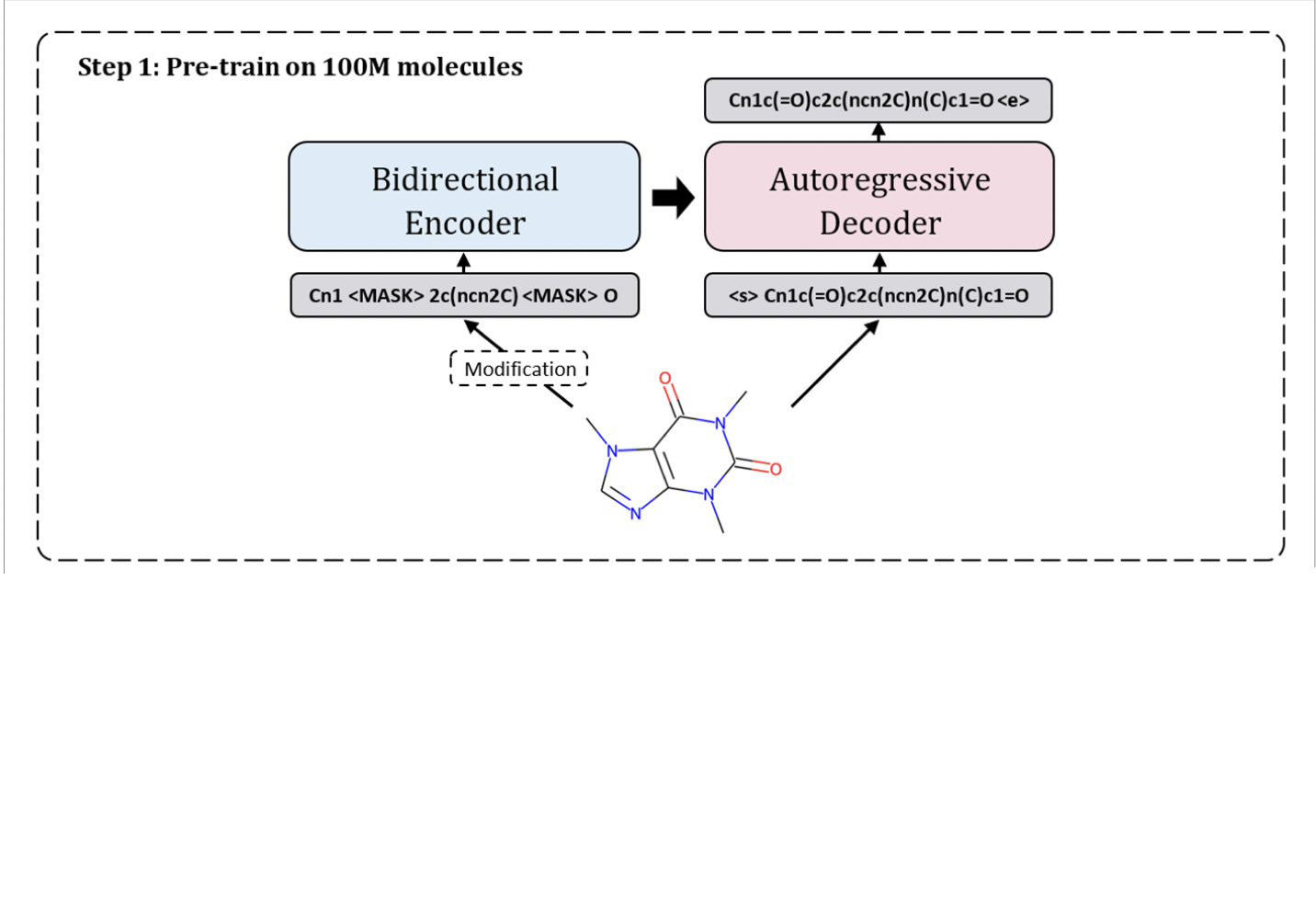

Mega-Mol-BART

- Developed at NVIDIA in collaboration with AstraZeneca.

- Uses the Megatron framework (Mega).

- Trained on ~500M molecules (Mol).

- Uses the Bidirectional and Autoregressive Transformer architecture (BART).

Mega-Mol-BART

-

500M molecules sampled randomly from ZINC15

-

BART

-

Developed by FAIR.

-

Span Masking.

-

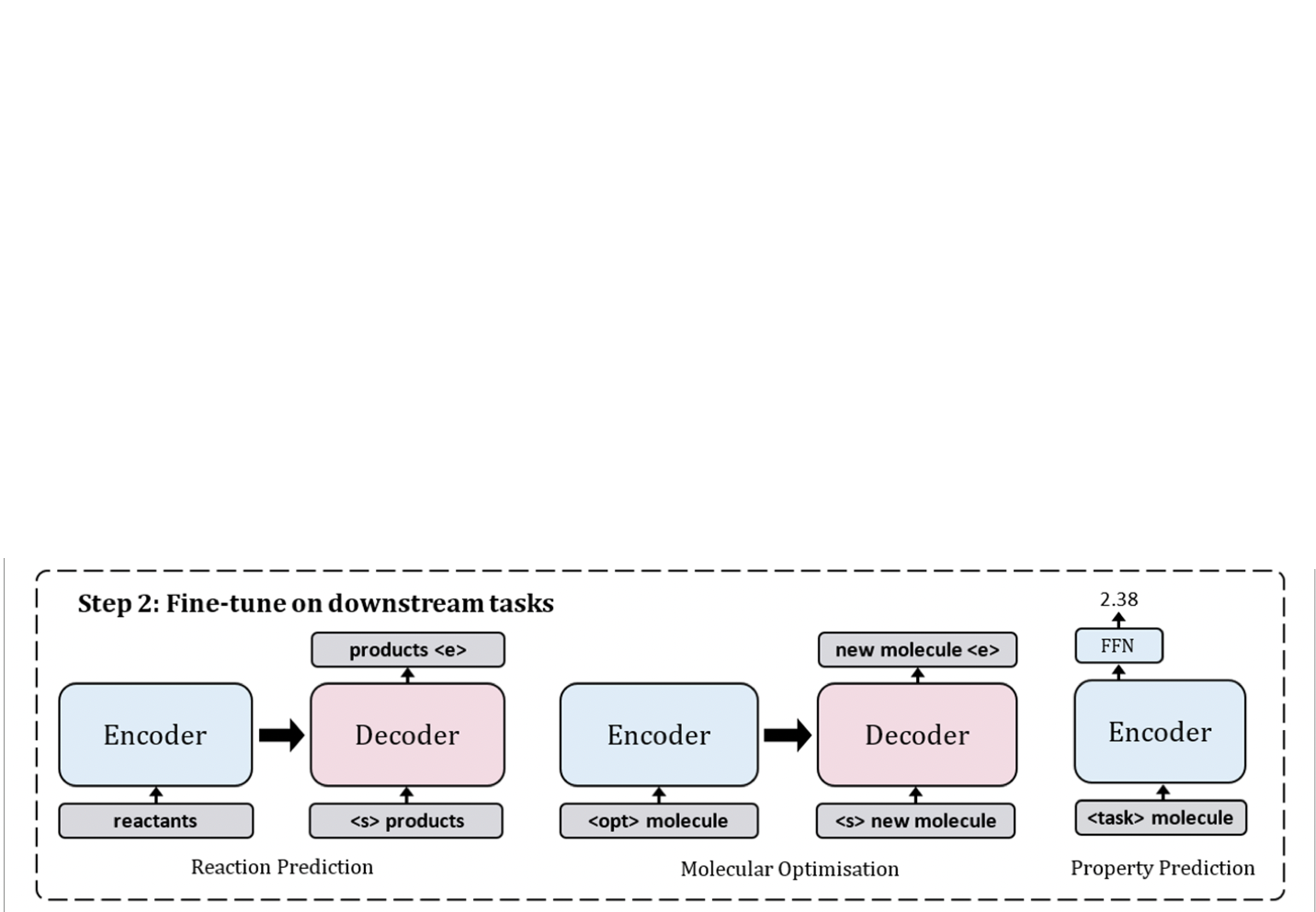
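BART's span masking (called text infilling in the BART paper) replaces whole spans of tokens with a single mask token, with span lengths drawn from a Poisson distribution (λ = 3 in that paper). The decoder must then reconstruct the full sequence, including how many tokens each mask hid. This is a simplified sketch: the per-position span-start rule and the default `mask_ratio=0.3` are assumptions, and MegaMolBART's actual masking configuration is not shown on the slides.

```python
import math
import random


def poisson(rng, lam):
    """Sample from Poisson(lam) with Knuth's method (fine for small lam)."""
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def text_infill(tokens, mask_ratio=0.3, lam=3.0, seed=0, mask="<mask>"):
    """Simplified BART-style span masking: each span becomes ONE mask token."""
    rng = random.Random(seed)
    budget = round(len(tokens) * mask_ratio)  # max number of tokens to hide
    out, i = [], 0
    while i < len(tokens):
        if budget > 0 and rng.random() < mask_ratio:
            span = min(poisson(rng, lam), budget, len(tokens) - i)
            out.append(mask)
            if span == 0:
                # Zero-length span: insert a mask without hiding anything.
                out.append(tokens[i])
                i += 1
            else:
                i += span
                budget -= span
        else:
            out.append(tokens[i])
            i += 1
    return out


print(text_infill(list("CC(=O)Oc1ccccc1C(=O)O"), seed=1))
```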


Results

Usage:

- SMILES strings sampled around a single input SMILES molecule.

- SMILES strings sampled at regularly spaced intervals between two input molecules.
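The slides do not say how this sampling is implemented. A common approach in latent-variable generative models, assumed here, is to work on the latent embeddings: perturb one molecule's embedding with Gaussian noise (first use), or take evenly spaced points on the line between two embeddings (second use), then decode each point back to SMILES. The model's encode/decode steps are not shown, and the vectors below are hypothetical stand-ins.

```python
import random


def sample_around(z, n, sigma=1.0, seed=0):
    """n perturbed copies of latent vector z (isotropic Gaussian noise)."""
    rng = random.Random(seed)
    return [[x + rng.gauss(0.0, sigma) for x in z] for _ in range(n)]


def interpolate(z_a, z_b, n_points):
    """n_points regularly spaced latents from z_a to z_b, endpoints included."""
    if n_points < 2:
        raise ValueError("need at least the two endpoints")
    steps = [k / (n_points - 1) for k in range(n_points)]
    return [[(1 - t) * a + t * b for a, b in zip(z_a, z_b)] for t in steps]


# Hypothetical 2-D latents; real embeddings are much higher-dimensional.
print(sample_around([0.0, 1.0], n=3, sigma=0.1))
print(interpolate([0.0, 0.0], [1.0, 2.0], 3))  # [[0.0, 0.0], [0.5, 1.0], [1.0, 2.0]]
```

Each resulting latent would then be passed through the decoder; a smaller `sigma` keeps samples closer to the input molecule.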

Results:

- Validity: 0.98

- Uniqueness: 0.32

- Novelty: 0.36
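The slides do not give the exact metric definitions behind these numbers. This sketch uses one common convention: validity is the share of generated strings that parse, uniqueness is the share of valid strings that are distinct, and novelty is the share of distinct valid strings absent from the training set. `is_valid` is a caller-supplied check; with RDKit, for example, it could be `Chem.MolFromSmiles(s) is not None`. The balanced-parentheses check in the example is only a toy stand-in, and SMILES should ideally be canonicalized before comparison.

```python
def generation_metrics(generated, training_set, is_valid):
    """Return (validity, uniqueness, novelty) for a list of generated SMILES."""
    valid = [s for s in generated if is_valid(s)]
    unique = set(valid)
    novel = unique - set(training_set)
    validity = len(valid) / len(generated)
    uniqueness = len(unique) / len(valid) if valid else 0.0
    novelty = len(novel) / len(unique) if unique else 0.0
    return validity, uniqueness, novelty


def toy_is_valid(s):
    # Toy stand-in for a real chemistry parser: balanced parentheses only.
    return s.count("(") == s.count(")")


generated = ["CCO", "CCO", "CC(", "c1ccccc1", "CCN"]
print(generation_metrics(generated, training_set=["CCO"], is_valid=toy_is_valid))
```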

Results

Future Direction

- Train even bigger models.

- Expand to more downstream fine-tuning tasks.

- BioNeMo!

Questions?

BabootaRahul

rahulbaboota