Deep Learning in NLP

- Powered by Python

About me

- Technical Lead and Chief Deep Learning Engineer at Neuron

- Google Summer of Code Intern'14

What is...

- Machine Learning?

- Natural Language Processing?

- Neural Networks?

- Deep Learning?

Common NLP Tasks

- Language Modelling

- Sentiment Analysis

- Named Entity Recognition

- Topic Modelling

- Semantic Proximity

- Text Summarization

- Machine Translation

- Speech Recognition

The Old Way of doing things...

- Bag of words

- This movie wasn't particularly funny or entertaining -> [movie, funny, entertaining]

- n-grams (next slide)

- Regex Patterns, etc.
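The bag-of-words and n-gram ideas above can be sketched in a few lines of plain Python. This is only an illustration: the stop-word list and the helper names `tokenize`, `bag_of_words` and `ngrams` are assumptions made for this example, not part of any library.

```python
import re

# Hypothetical, hand-picked stop-word list for this example only.
STOP_WORDS = {"this", "wasn't", "particularly", "or"}

def tokenize(text):
    """Lowercase the text and split it into word tokens (apostrophes kept)."""
    return re.findall(r"[a-z']+", text.lower())

def bag_of_words(text):
    """Return the non-stop-word tokens; their order carries no meaning here."""
    return [tok for tok in tokenize(text) if tok not in STOP_WORDS]

def ngrams(tokens, n):
    """Return every contiguous window of n tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

sentence = "This movie wasn't particularly funny or entertaining"
print(bag_of_words(sentence))
# ['movie', 'funny', 'entertaining']
print(ngrams(tokenize(sentence), 2)[:3])
# [('this', 'movie'), ('movie', "wasn't"), ("wasn't", 'particularly')]
```

Note that the bag-of-words output drops "wasn't", so the negation that flips the sentiment of the sentence is lost, while the bigrams still keep it next to its neighbours.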

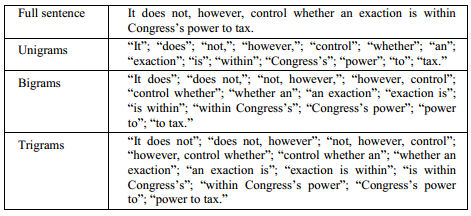

n-grams example

Suggested Reading:

n-grams / Naive Bayes

Pros and Cons of Bag-of-words and n-gram models

- Pro: easy to build (hypothesize and code)

- Pro: require relatively little data to train

- Pro: fast to train

- Con: ignore the order of the words (bag-of-words)

- Con: ignore the structure of natural language

- Con: usually assume that a word depends only on the last few words

- Con: can't handle long-term dependencies in text
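The word-order limitation listed above is easy to see on a toy pair of sentences. The sketch below uses only the standard library; the helper names `bow_counts` and `bigrams` are made up for this example.

```python
from collections import Counter

def bow_counts(text):
    """Map each lowercase token to its count; positions are discarded."""
    return Counter(text.lower().split())

def bigrams(text):
    """Return the list of adjacent token pairs, in order."""
    tokens = text.lower().split()
    return list(zip(tokens, tokens[1:]))

s1 = "the dog bit the man"
s2 = "the man bit the dog"

print(bow_counts(s1) == bow_counts(s2))   # True: opposite meanings, same bag
print(bigrams(s1) == bigrams(s2))         # False: bigrams keep local order
```

Bigrams recover some order, but only within a two-word window, which is why longer-range dependencies remain out of reach for these models.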

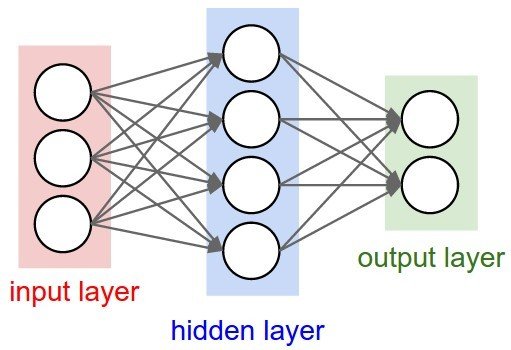

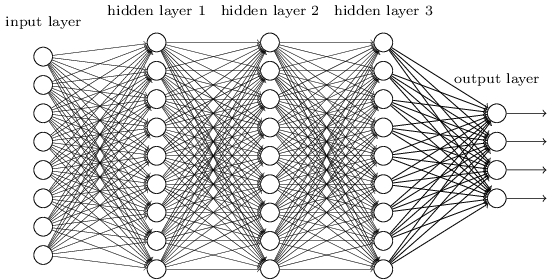

Neural Networks

...Neural Networks

a beautiful, highly flexible and generic biologically-inspired programming paradigm which enables a computer to learn from observational data

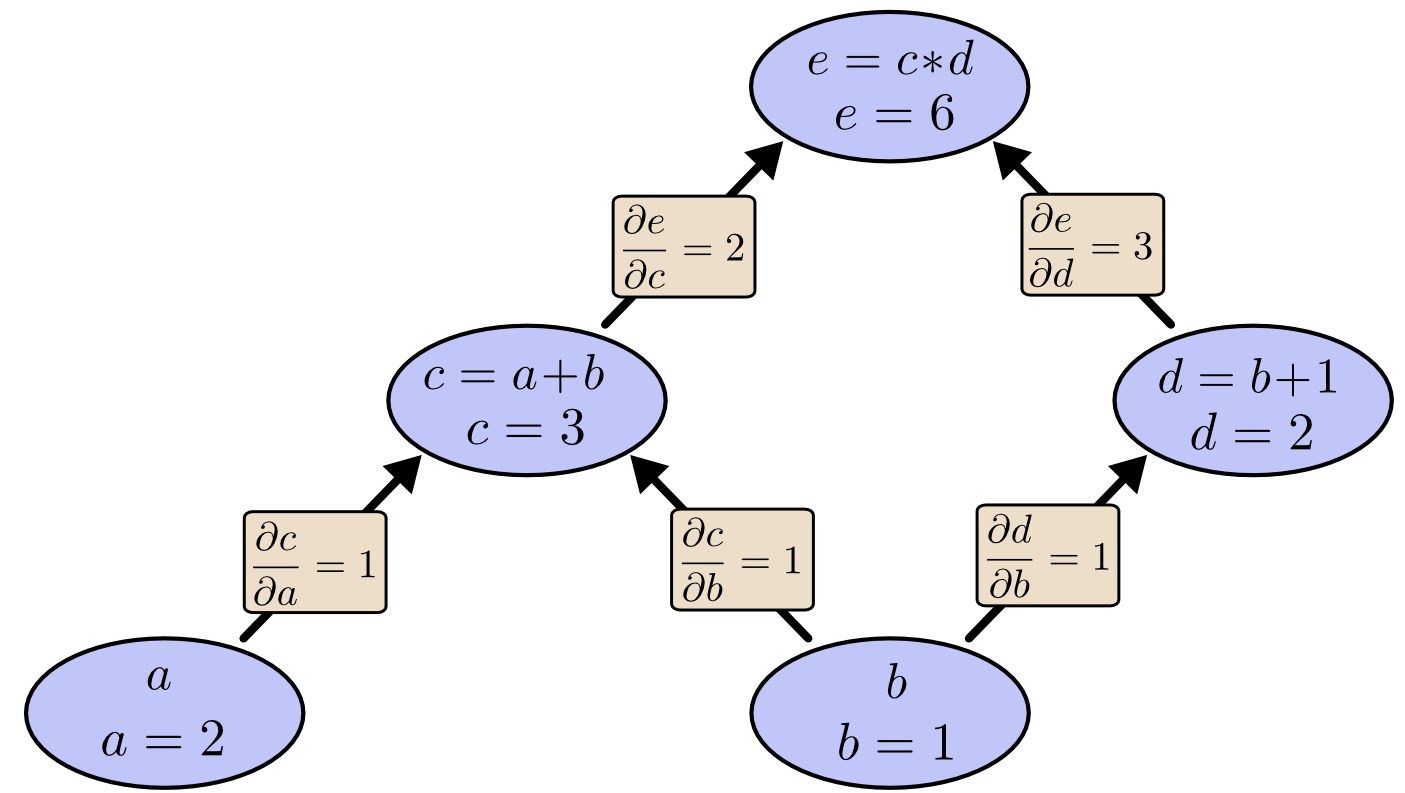

Backpropagation

Suggested Reading:

Neural Networks

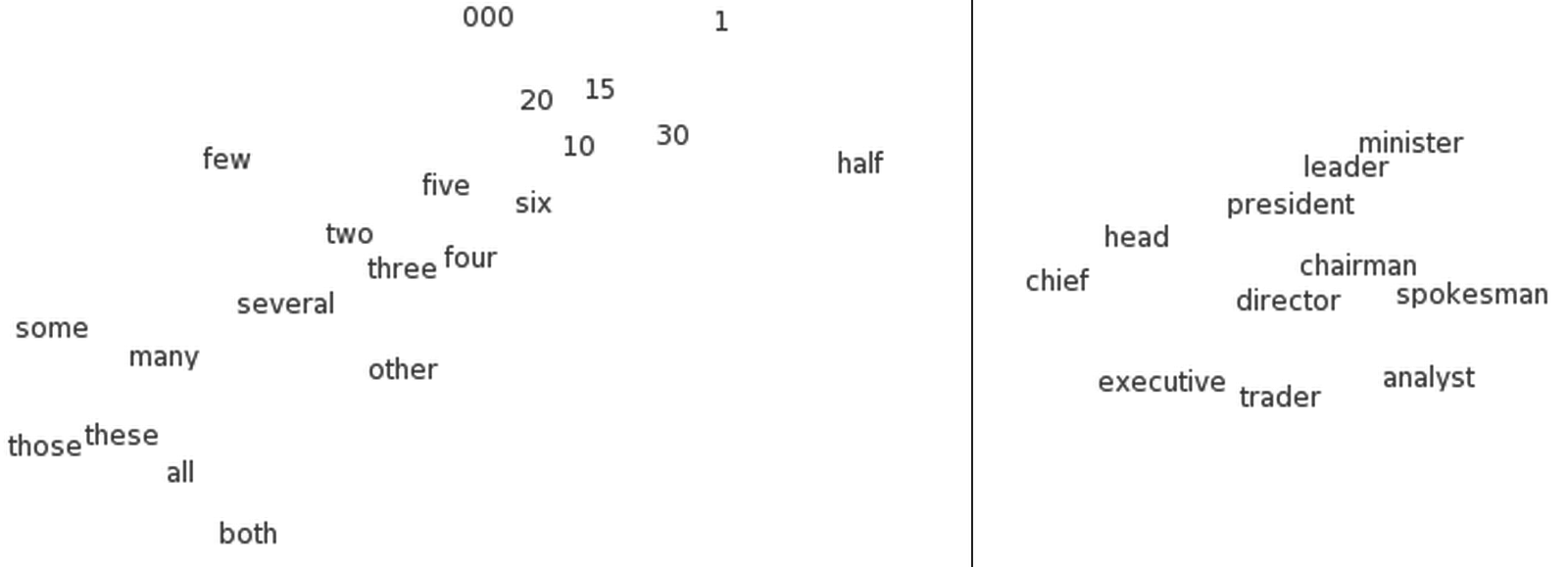

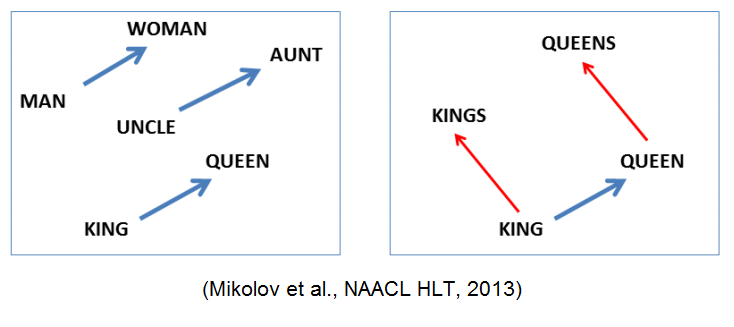

Word Vector Embeddings

Vector Space Models

- Create a d-dimensional space in which each word is represented by a point (a vector)

- Words that occur in similar contexts tend to cluster together

- Capture semantic relations between words

- Directions in the space often correspond to characteristics of natural language such as plural, past tense, gender, etc.
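A minimal sketch of how such a space can be queried, assuming cosine similarity as the closeness measure. The 3-dimensional vectors below are invented by hand purely for illustration (real embeddings are learned and have hundreds of dimensions), and `cosine` and `analogy` are hypothetical helper names.

```python
import math

# Hypothetical toy embeddings, hand-written for illustration only.
vectors = {
    "king":  [0.8, 0.9, 0.1],
    "queen": [0.8, 0.1, 0.9],
    "man":   [0.2, 0.9, 0.1],
    "woman": [0.2, 0.1, 0.9],
    "apple": [0.1, 0.5, 0.5],
}

def cosine(u, v):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(analogy("king", "man", "woman"))   # queen
```

With these toy numbers the second and third coordinates act like a "gender" direction, so subtracting `man` and adding `woman` moves `king` onto `queen`.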

Introduction to Gensim

- A Python library that provides modules for word2vec, LDA, doc2vec, etc.

- Options for training word vectors using skip-gram and CBOW

- Built-in scripts to train word vectors on Wikipedia dumps

...Gensim

from gensim.models import Word2Vec

# `sentences` is an iterable of tokenized sentences; gensim >= 4.0 uses vector_size (formerly size)
model = Word2Vec(sentences, vector_size=100, window=5, min_count=5, workers=4)

model.save(fname)

model = Word2Vec.load(fname) # you can continue training with the loaded model!

# in gensim >= 4.0 the word-vector queries live on model.wv
model.wv.most_similar(positive=['woman', 'king'], negative=['man'])

==> [('queen', 0.50882536), ...]

model.wv.doesnt_match("breakfast cereal dinner lunch".split())

==> 'cereal'

model.wv.similarity('woman', 'man')

==> 0.73723527

model.wv['computer'] # raw numpy vector of a word

==> array([-0.00449447, -0.00310097, 0.02421786, ...], dtype=float32)

Suggested Reading:

Word Vectors

Introduction to Theano

Theano is a Python library that allows you to define, optimize, and evaluate mathematical expressions involving multi-dimensional arrays efficiently.

- Built on top of NumPy

- Symbolic Expressions

- Automatic Differentiation

- Built-in support for GPU computation

- Python Interface

- Shared Variables

Theano Syntax

import numpy

import theano

import theano.tensor as T

from theano import pp

x = T.dscalar('x')

y = x ** 2

gy = T.grad(y, x)

f = theano.function([x], gy)

f(4)

# array(8.0)

Suggested Reading:

Theano

Deep Neural Networks

Suggested Reading:

Deep Learning Models

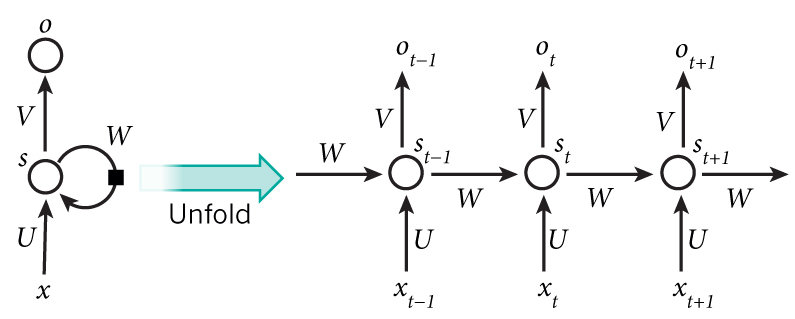

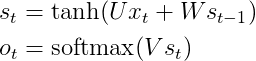

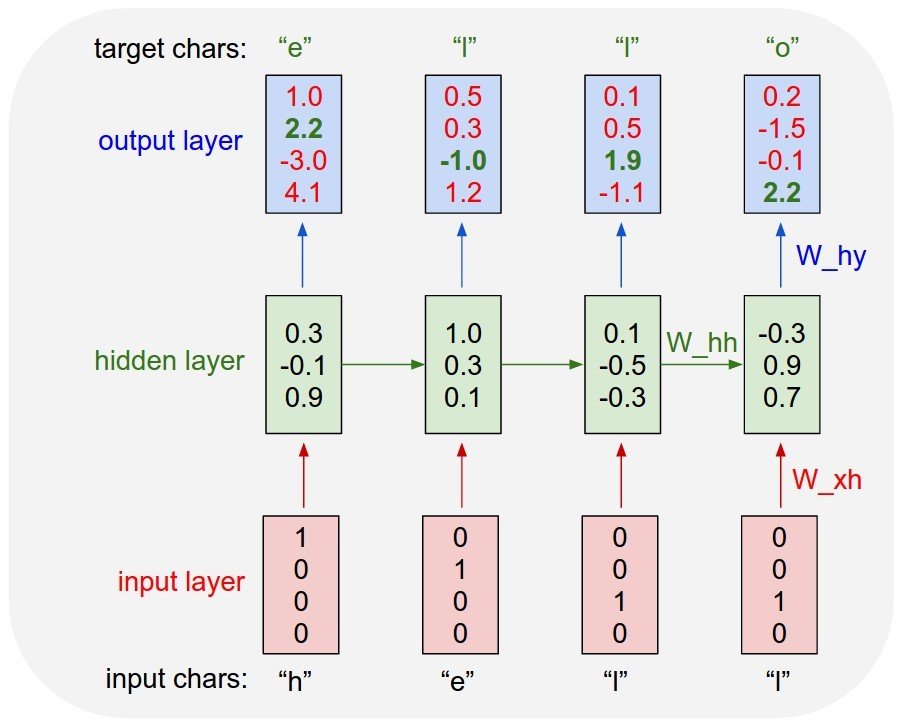

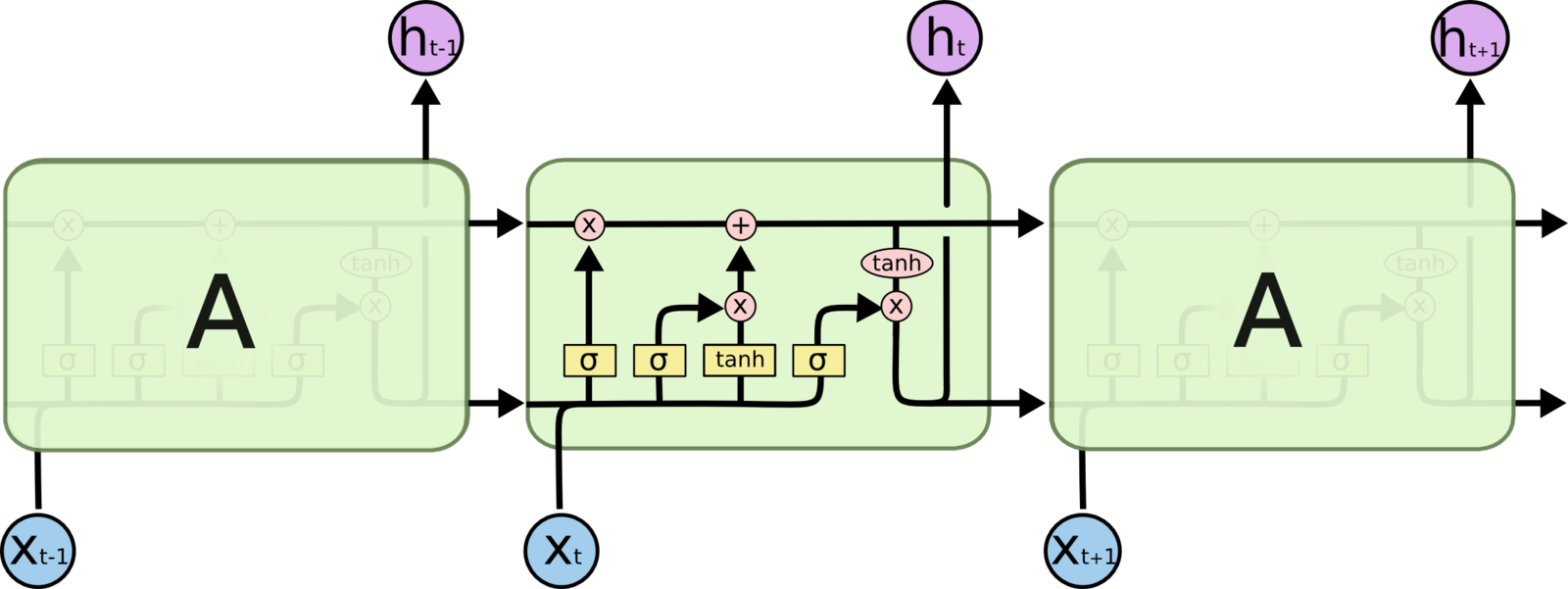

Recurrent Neural Networks

...RNNs

- particularly useful for sequential data (text, audio, etc.)

- "recurrent" because they perform the same operation on every element of the input sequence, with each step building on the result of the previous steps

- take the dependencies between input elements (words) into account, unlike the bag-of-words model

- common RNNs are 5-1000 layers deep when unrolled over time
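The "same operation on each element" idea can be sketched as a forward pass of a vanilla (Elman-style) RNN in plain Python. This is a simplified illustration, not the model from the slides: the function name `rnn_forward`, the weight names and the tiny weight values are all assumptions.

```python
import math

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence and return the hidden state at each step.

    The same W_xh, W_hh and b_h are reused at every time step.
    """
    hidden_size = len(b_h)
    h = [0.0] * hidden_size            # initial hidden state
    states = []
    for x in inputs:                   # one step per sequence element
        # h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h), using the previous h
        h = [
            math.tanh(
                sum(W_xh[i][j] * x[j] for j in range(len(x)))
                + sum(W_hh[i][k] * h[k] for k in range(hidden_size))
                + b_h[i]
            )
            for i in range(hidden_size)
        ]
        states.append(h)
    return states

# Hypothetical weights for a 2-input, 2-unit network.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b_h = [0.0, 0.0]

seq_a = [[1.0, 0.0], [0.0, 1.0]]
seq_b = [[0.0, 1.0], [1.0, 0.0]]       # same elements, reversed order

final_a = rnn_forward(seq_a, W_xh, W_hh, b_h)[-1]
final_b = rnn_forward(seq_b, W_xh, W_hh, b_h)[-1]
print(final_a != final_b)              # True: the order of the inputs matters
```

Unlike a bag-of-words representation, the final hidden state differs when the same inputs arrive in a different order.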

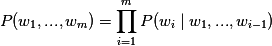

Language Modelling

Probability of a sentence consisting of m words

=

Product of the conditional probabilities of each word given (all) previous words

$$P(w_1, \dots, w_m) = \prod_{i=1}^{m} P(w_i \mid w_1, \dots, w_{i-1})$$
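As a rough illustration of this product of conditional probabilities, the sketch below scores a sentence with a count-based bigram model, which approximates "all previous words" by just the previous word. The three-sentence corpus, the `<s>` start marker and the function name `sentence_probability` are assumptions made for the example.

```python
from collections import Counter

# Hypothetical toy corpus.
corpus = [
    "the cat sat",
    "the cat ran",
    "the dog sat",
]

# Count how often each word appears as a context and how often each pair occurs.
context_counts = Counter()
bigram_counts = Counter()
for line in corpus:
    tokens = ["<s>"] + line.split()    # <s> marks the start of a sentence
    for prev, word in zip(tokens, tokens[1:]):
        context_counts[prev] += 1
        bigram_counts[(prev, word)] += 1

def sentence_probability(sentence):
    """Multiply P(word | previous word) over the sentence (bigram approximation)."""
    tokens = ["<s>"] + sentence.split()
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        if context_counts[prev] == 0:  # unseen context: no estimate available
            return 0.0
        prob *= bigram_counts[(prev, word)] / context_counts[prev]
    return prob

print(sentence_probability("the cat sat"))   # 3/3 * 2/3 * 1/2, about 0.333
print(sentence_probability("the dog ran"))   # 0.0, "dog ran" never occurs
```

An RNN language model replaces the count-based estimate with a learned one that can, in principle, condition on the whole history rather than a single previous word.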

LM with RNN

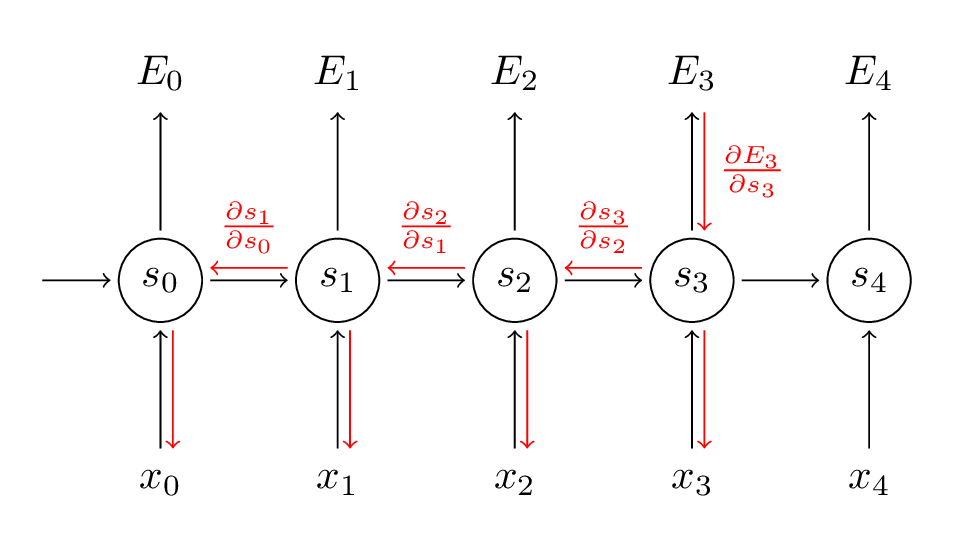

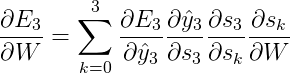

Backpropagation Through Time

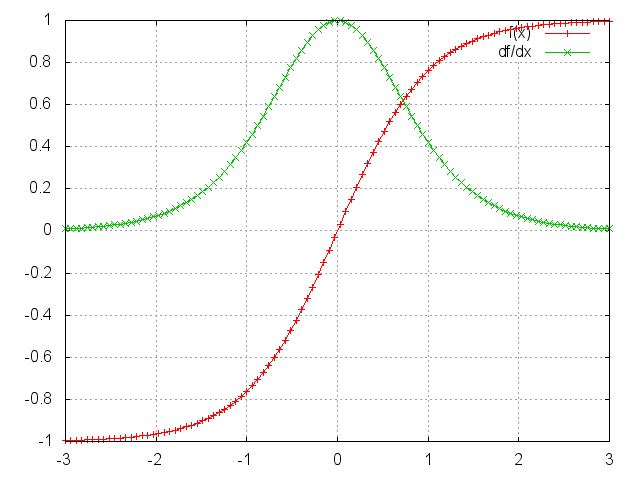

Vanishing Gradient Problem

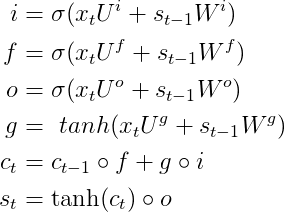
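A rough numerical illustration of the vanishing gradient problem, assuming a scalar RNN with no input, h_t = tanh(w * h_{t-1}). The function name `gradient_through_time` and the values of `w` and `h0` are chosen only for this sketch.

```python
import math

def gradient_through_time(w, steps, h0=0.5):
    """Return |d h_t / d h_0| for t = 1..steps in the scalar RNN h_t = tanh(w * h_{t-1})."""
    h, grad, history = h0, 1.0, []
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d tanh(w * h_prev) / d h_prev = w * (1 - tanh(w * h_prev)^2)
        grad *= w * (1.0 - h * h)
        history.append(abs(grad))
    return history

grads = gradient_through_time(w=0.9, steps=50)
print(grads[0], grads[-1])   # the last value is far smaller than the first
```

Every step multiplies the gradient by a factor of at most 0.9 here, so the contribution of early time steps shrinks geometrically with the length of the sequence.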

Long Short-Term Memory

LSTM Equations
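For reference, one commonly used formulation of the LSTM cell (without peephole connections) is given below. The notation is an assumption and may differ from the original slide: $x_t$ is the input, $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid and $\odot$ element-wise multiplication.

```latex
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)} \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{(candidate cell state)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

The additive update of $c_t$ is what lets gradients flow across many time steps without shrinking as quickly as in a vanilla RNN.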

Suggested Reading:

RNN

Email: rishy.s13@gmail.com

Github: https://github.com/rishy

Linkedin: https://www.linkedin.com/in/rishabhshukla1