Message Passing Interface (MPI)

Hagiu Bogdan

Spac Valentin

What is MPI?

A standardized and portable message-passing system designed by a group of researchers from academia and industry to function on a wide variety of parallel computers.

There are several well-tested and efficient implementations of MPI, including some that are free or in the public domain, with bindings available for Fortran, C, C++ and Java.

MPI - General Information

- Function names start with MPI_ to distinguish them from application code;

- MPI defines its own data types to abstract away machine-dependent representations;

- Two types of communication: point-to-point and collective communication

MPI - Getting started

- The first step is to include the MPI header file (mpi.h);

- The MPI environment must be initialized with: MPI_Init(int* argc, char*** argv);

- MPI_Finalize() is used to clean up the MPI environment;

Point to point communication

- MPI_Send(void* data, int count, MPI_Datatype datatype, int destination, int tag, MPI_Comm communicator)

- Blocking send: the call does not return until the message has been handed off and the send buffer can safely be reused

Point to point communication

- MPI_Recv(void* data, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm communicator, MPI_Status* status)

- The source, tag and communicator must match those of the sender for the message to be received

- Wildcards can be used in place of source and tag: MPI_ANY_SOURCE and MPI_ANY_TAG

Point to point communication

- A receive operation completes when the message has been copied into the local variables

- A send operation completes when the message has been handed over to MPI for sending, so that the send buffer can be reused

Point to point communication

- A blocking send can be paired with a non-blocking receive, and vice versa

- The non-blocking calls look very similar: int MPI_Isend(void *buf, int count, MPI_Datatype dtype, int dest, int tag, MPI_Comm comm, MPI_Request *request);

Point to point communication

- Different data types (e.g. integers, floats, characters, doubles) can be sent together in one message using MPI_Pack, which copies the values into an intermediate buffer that is then sent

- int MPI_Pack(void *inbuf, int incount, MPI_Datatype datatype, void *outbuf, int outsize, int *position, MPI_Comm comm)

- MPI_Send(buffer, count, MPI_PACKED, dest, tag, MPI_COMM_WORLD)

Collective Communication

- Works like point-to-point communication, except that all processes in the communicator take part;

- MPI_Barrier(comm) blocks until every process in the communicator has called it, synchronizing all of them.

- The broadcast operation MPI_Bcast copies a data value from one process to all the others.

Types of Collective Communication

Collective Communication

- Many more functions are available to take the hard work off your hands.

- MPI_Allreduce, MPI_Gatherv, MPI_Scan, MPI_Reduce_scatter ...

- Check out the API documentation

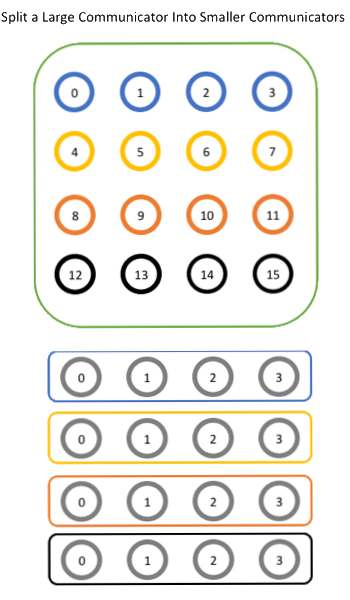

Communicators

- Communicators group processes

- The basic communicator MPI_COMM_WORLD is defined for all processes

- You can create your own communicators to group processes, so that messages are sent to only a subset of all processes

The end

\[^_^]/