Experience Optimization using Contextual Bandits

Trey Causey

zulily

@treycausey

s/clos/experiment/g

Moe: "You could flash-fry a buffalo in 40 seconds."

Homer: "Awwww, but I want it now!"

Experiments

- High-power experiments may take too long to collect enough samples

- Can't adjust to rapidly changing content

- Experiment's lifetime == content's lifetime

- May not want to select a single treatment for everyone
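To make "too long" concrete: a standard approximation for the per-arm sample size of a two-sided test comparing two conversion rates is n = (z₁₋α/₂ + z₁₋β)² · [p₁(1 − p₁) + p₂(1 − p₂)] / (p₂ − p₁)². The Python sketch below evaluates it; the function name and the example rates are illustrative assumptions, not zulily numbers.

```python
import math
from statistics import NormalDist


def samples_per_arm(p_control: float, p_treatment: float,
                    alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate per-arm sample size for a two-sided two-proportion z-test
    (unpooled-variance approximation)."""
    z = NormalDist()  # standard normal
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = z.inv_cdf(power)           # quantile for the desired power
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    delta = p_treatment - p_control     # minimum effect we want to detect
    return math.ceil((z_alpha + z_beta) ** 2 * variance / delta ** 2)
```

With a hypothetical 3% baseline and a 10% relative lift (3.0% to 3.3%), 80% power and α = 0.05, this comes to roughly 53,000 users per arm, and raising the power only increases it. Content that lives for a few days can expire before such a test finishes.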

Multi-armed bandits

- Reinforcement learning

- Exploration vs. exploitation
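One widely used bandit policy that balances exploration and exploitation is Thompson sampling. The slides don't say which policy was used, so this is only an illustration: a minimal Python sketch for binary rewards (e.g. click or no click) with a uniform Beta(1, 1) prior per arm. The class and method names are hypothetical.

```python
import random
from typing import Optional


class BetaBernoulliThompson:
    """Thompson sampling for arms with binary rewards (e.g. click / no click)."""

    def __init__(self, n_arms: int, seed: Optional[int] = None):
        # Beta(1, 1), i.e. uniform, prior on each arm's success probability.
        self.alpha = [1.0] * n_arms
        self.beta = [1.0] * n_arms
        self.rng = random.Random(seed)

    def select_arm(self) -> int:
        # Sample a plausible success rate per arm from its posterior and play the
        # highest sample: uncertain arms sometimes win the draw (exploration),
        # arms with strong evidence usually do (exploitation).
        draws = [self.rng.betavariate(a, b) for a, b in zip(self.alpha, self.beta)]
        return max(range(len(draws)), key=draws.__getitem__)

    def update(self, arm: int, reward: int) -> None:
        # Conjugate posterior update for a Bernoulli reward.
        if reward:
            self.alpha[arm] += 1
        else:
            self.beta[arm] += 1
```

There is no fixed end date: as evidence accumulates, the posteriors narrow and traffic shifts toward the best arm on its own.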

Contextual bandits

- Incorporate features of user and content

- The best experience for you, right now

- ... even if that changes.
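Contextual bandits condition each choice on a feature vector describing the user and the content. A well-known example is disjoint LinUCB, which keeps a ridge-regression reward model per arm and adds an upper-confidence bonus. The Python sketch below assumes that algorithm (not necessarily the one used in this talk) and a fixed-length context vector; it uses the Sherman-Morrison identity so each update costs O(d²) with no explicit matrix inverse. All names are hypothetical.

```python
import math
from typing import List, Sequence


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def _matvec(m: List[List[float]], v: Sequence[float]) -> List[float]:
    return [_dot(row, v) for row in m]


class LinUCB:
    """Disjoint LinUCB: a ridge-regression reward model per arm plus a confidence bonus."""

    def __init__(self, n_arms: int, n_features: int, alpha: float = 1.0):
        self.alpha = alpha  # width of the confidence bonus (exploration strength)
        # Per arm: A^-1 starts as the identity (ridge penalty 1) and b = X^T r starts at 0.
        self.a_inv = [[[float(i == j) for j in range(n_features)] for i in range(n_features)]
                      for _ in range(n_arms)]
        self.b = [[0.0] * n_features for _ in range(n_arms)]

    def select_arm(self, x: Sequence[float]) -> int:
        scores = []
        for a_inv, b in zip(self.a_inv, self.b):
            theta = _matvec(a_inv, b)                      # ridge estimate A^-1 b
            bonus = math.sqrt(_dot(x, _matvec(a_inv, x)))  # uncertainty along x
            scores.append(_dot(theta, x) + self.alpha * bonus)
        return max(range(len(scores)), key=scores.__getitem__)

    def update(self, arm: int, x: Sequence[float], reward: float) -> None:
        a_inv = self.a_inv[arm]
        ax = _matvec(a_inv, x)  # A^-1 x; A^-1 stays symmetric, so x^T A^-1 = (A^-1 x)^T
        denom = 1.0 + _dot(x, ax)
        # Sherman-Morrison: (A + x x^T)^-1 = A^-1 - (A^-1 x)(A^-1 x)^T / (1 + x^T A^-1 x)
        for i in range(len(ax)):
            for j in range(len(ax)):
                a_inv[i][j] -= ax[i] * ax[j] / denom
        for i, xi in enumerate(x):
            self.b[arm][i] += reward * xi
```

Because the score depends on x, two users can be shown different experiences at the same moment, and because the models keep updating, the choice for a given kind of user can change as behavior shifts.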

Development

- Developed in Python

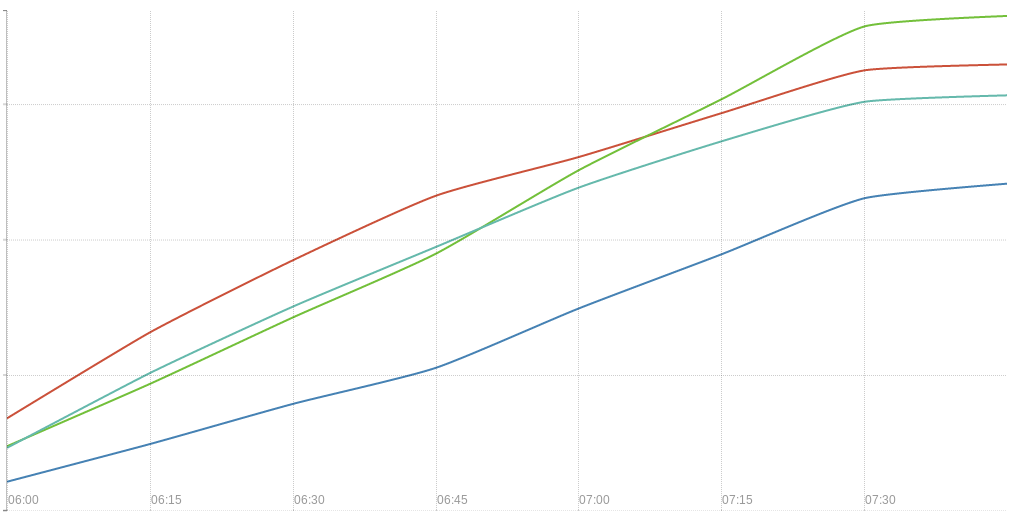

- Tested with Monte Carlo simulation
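The slides don't detail the Monte Carlo setup. A minimal sketch of the general idea in Python: simulate many runs against known, made-up conversion rates and average the cumulative regret, i.e. the expected reward lost relative to always showing the best arm. The epsilon-greedy policy and the uniform-split baseline are hypothetical stand-ins for whatever policy is actually under test.

```python
import random
import statistics
from typing import Callable, Sequence


class EpsilonGreedy:
    """Hypothetical policy under test: explore uniformly with probability epsilon."""

    def __init__(self, n_arms: int, epsilon: float, rng: random.Random):
        self.epsilon = epsilon
        self.rng = rng
        self.pulls = [0] * n_arms
        self.rewards = [0.0] * n_arms

    def select_arm(self) -> int:
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.pulls))
        # Exploit the best observed mean; arms never played score +inf so each is tried once.
        means = [r / n if n else float("inf") for r, n in zip(self.rewards, self.pulls)]
        return max(range(len(means)), key=means.__getitem__)

    def update(self, arm: int, reward: float) -> None:
        self.pulls[arm] += 1
        self.rewards[arm] += reward


class UniformRandom:
    """Baseline that splits traffic evenly across arms, like a fixed A/B/n test."""

    def __init__(self, n_arms: int, rng: random.Random):
        self.n_arms = n_arms
        self.rng = rng

    def select_arm(self) -> int:
        return self.rng.randrange(self.n_arms)

    def update(self, arm: int, reward: float) -> None:
        pass  # never learns


def mean_regret(make_policy: Callable, true_rates: Sequence[float],
                horizon: int, n_runs: int, seed: int = 0) -> float:
    """Monte Carlo estimate of expected cumulative regret after `horizon` trials."""
    env_rng = random.Random(seed)
    best = max(true_rates)
    totals = []
    for _ in range(n_runs):
        policy = make_policy(len(true_rates), random.Random(env_rng.randrange(2**32)))
        regret = 0.0
        for _ in range(horizon):
            arm = policy.select_arm()
            reward = 1.0 if env_rng.random() < true_rates[arm] else 0.0
            policy.update(arm, reward)
            # Score the choice by expected reward lost, which removes reward noise.
            regret += best - true_rates[arm]
        totals.append(regret)
    return statistics.mean(totals)
```

For example, `mean_regret(UniformRandom, [0.1, 0.2, 0.3], 2000, 100)` should land near 2000 × 0.1 = 200, the cost of an even three-way split; a policy that learns, such as `lambda n, r: EpsilonGreedy(n, 0.1, r)`, should come in lower.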

Implementation

- Capacity

Has to handle thousands of trials/second

- Latency

Called on page load to determine which experience to show

- Implementation

In production in Java
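The slides don't describe how the Java service meets these capacity and latency constraints. One common pattern, sketched here in Python purely as an assumption: keep the page-load path to a few dot products over precomputed per-arm weights, log each decision with the probability the policy had of making it, and train new weights off the request path. `PolicySnapshot`, `DecisionService` and `refresh` are hypothetical names.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class PolicySnapshot:
    """Hypothetical immutable model: per-arm linear weights trained off the request path."""
    weights: Tuple[Tuple[float, ...], ...]
    epsilon: float  # fraction of traffic explored uniformly at random


class DecisionService:
    """Hypothetical page-load path: O(arms x features) work per decision, no training."""

    def __init__(self, snapshot: PolicySnapshot, seed: Optional[int] = None):
        self._snapshot = snapshot
        self._rng = random.Random(seed)
        # (context, chosen arm, propensity) tuples for an offline trainer to consume.
        self.log: List[Tuple[Tuple[float, ...], int, float]] = []

    def choose(self, x: Sequence[float]) -> int:
        snap = self._snapshot  # read the reference once so one decision uses one model
        n_arms = len(snap.weights)
        scores = [sum(w * xi for w, xi in zip(ws, x)) for ws in snap.weights]
        greedy = max(range(n_arms), key=scores.__getitem__)
        if self._rng.random() < snap.epsilon:
            arm = self._rng.randrange(n_arms)
        else:
            arm = greedy
        # Probability that this policy picks `arm` for this context.
        propensity = snap.epsilon / n_arms + ((1.0 - snap.epsilon) if arm == greedy else 0.0)
        self.log.append((tuple(x), arm, propensity))
        return arm

    def refresh(self, snapshot: PolicySnapshot) -> None:
        # Publish weights trained elsewhere; decisions already in progress keep the old one.
        self._snapshot = snapshot
```

Swapping in a whole immutable snapshot means a decision never mixes old and new weights, and the logged propensities allow a candidate policy to be evaluated offline (e.g. by inverse propensity scoring) before it goes live.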