Mechanisms of Action (MoA) Prediction

Andrey Lukyanenko

Available data

- 23814 rows in the training data

- 772 gene-expression columns, 100 cell-viability columns

- Treatment duration and dose

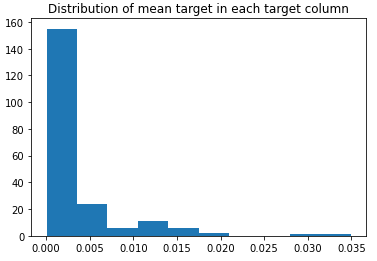

- 206 binary target variables

- 402 additional (non-scored) binary target variables

- Data anonymization

- Data normalization

- A control group in the data
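Control samples receive no compound, so all of their targets are zero. A common way to exploit this is to overwrite their predictions with zeros (they are also often dropped from training). The sketch below assumes a NumPy prediction matrix and a `cp_type` array in which the value `ctl_vehicle` marks control rows; the function name `zero_out_controls` is hypothetical.

```python
import numpy as np

def zero_out_controls(preds: np.ndarray, cp_type: np.ndarray) -> np.ndarray:
    """Return a copy of preds with every control row set to 0.

    preds   : (n_samples, n_targets) predicted probabilities
    cp_type : (n_samples,) strings; 'ctl_vehicle' is assumed to mark controls
    """
    out = preds.copy()
    out[cp_type == "ctl_vehicle"] = 0.0
    return out

# Usage: 3 samples, 2 targets; the middle sample is a control.
preds = np.array([[0.2, 0.7], [0.1, 0.3], [0.05, 0.9]])
cp_type = np.array(["trt_cp", "ctl_vehicle", "trt_cp"])
print(zero_out_controls(preds, cp_type))
```

The input array is left untouched, so the override can be applied as the last post-processing step (after any clipping).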

Multilabel multioutput

- One model per target variable (206 models)

- One model for all target variables

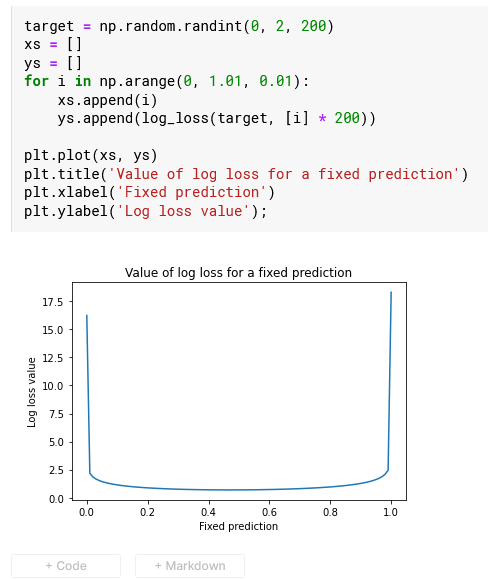

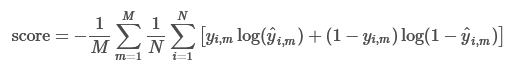
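The two strategies differ in whether parameters are shared between targets. As a minimal sketch (NumPy, chosen only for illustration; the actual solutions used neural-network frameworks), the per-target approach fits an independent logistic regression for each column, while the single-model approach fits one network whose hidden layer is shared by all K sigmoid outputs. All function names and hyperparameters here are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_per_target(X, Y, lr=0.1, epochs=200):
    """Strategy 1: an independent logistic regression for every target column."""
    n, d = X.shape
    models = []
    for k in range(Y.shape[1]):
        w, b = np.zeros(d), 0.0
        for _ in range(epochs):
            grad = sigmoid(X @ w + b) - Y[:, k]    # d(BCE)/d(logit) per sample
            w -= lr * (X.T @ grad) / n
            b -= lr * grad.mean()
        models.append((w, b))
    return models

def predict_per_target(models, X):
    return np.column_stack([sigmoid(X @ w + b) for w, b in models])

def fit_joint(X, Y, hidden=16, lr=0.1, epochs=200, seed=0):
    """Strategy 2: one network, shared ReLU hidden layer, one sigmoid output per target."""
    rng = np.random.default_rng(seed)
    d, K = X.shape[1], Y.shape[1]
    W1, b1 = rng.normal(0, 0.1, (d, hidden)), np.zeros(hidden)
    W2, b2 = rng.normal(0, 0.1, (hidden, K)), np.zeros(K)
    for _ in range(epochs):
        H = np.maximum(0, X @ W1 + b1)           # representation shared by all targets
        P = sigmoid(H @ W2 + b2)                 # (n, K) probabilities
        G = (P - Y) / Y.size                     # grad of mean BCE w.r.t. the logits
        dH = (G @ W2.T) * (H > 0)                # backprop through ReLU (uses W2 before update)
        W2 -= lr * (H.T @ G); b2 -= lr * G.sum(axis=0)
        W1 -= lr * (X.T @ dH); b1 -= lr * dH.sum(axis=0)
    return W1, b1, W2, b2

def predict_joint(params, X):
    W1, b1, W2, b2 = params
    return sigmoid(np.maximum(0, X @ W1 + b1) @ W2 + b2)

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
Y = (X[:, :3] > 0).astype(float)                 # 3 toy binary targets
print(predict_per_target(fit_per_target(X, Y), X).shape)  # (100, 3)
print(predict_joint(fit_joint(X, Y), X).shape)            # (100, 3)
```

With 206 targets, the first strategy means 206 training runs; the second trains once and lets rare targets borrow the representation learned from frequent ones.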

Competition metric
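The competition was scored with the mean columnwise log loss: for each of the M target columns, the binary log loss over the N samples is computed, and these M values are averaged, i.e. score = -(1/M) Σ_m (1/N) Σ_i [y_im log p_im + (1 - y_im) log(1 - p_im)]. A NumPy sketch of that formula; the clipping bound `eps` is an assumed value that only keeps the logarithms finite.

```python
import numpy as np

def mean_columnwise_log_loss(y_true, y_pred, eps=1e-15):
    """Binary log loss of every target column, averaged over the columns.

    y_true, y_pred : arrays of shape (n_samples, n_targets)
    eps            : clipping bound that keeps log() finite (assumed value)
    """
    p = np.clip(y_pred, eps, 1 - eps)
    per_element = y_true * np.log(p) + (1 - y_true) * np.log(1 - p)
    per_column = -per_element.mean(axis=0)   # log loss of each target over the samples
    return per_column.mean()                 # average over the targets (206 in MoA)

y_true = np.array([[1, 0], [0, 0]])
y_pred = np.array([[0.9, 0.1], [0.2, 0.1]])
print(round(mean_columnwise_log_loss(y_true, y_pred), 4))  # 0.1348
```

Because log loss grows without bound as a wrong prediction approaches 0 or 1, a single overconfident mistake can dominate a column, which is what motivates clipping predictions.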

Main approaches

- MultilabelStratifiedKFold

- 206 models (one per target)

- MLP-style architectures

- ResNet-style architectures

- Transformer-style architectures

- TabNet

Features

- Feature normalization

- PCA

- Cluster features

- Row-wise statistics

- Feature transformations

- VarianceThreshold
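Several of these steps can be combined in one pipeline. The sketch below is a hypothetical NumPy version: a variance filter in the spirit of scikit-learn's `VarianceThreshold`, z-score normalization, PCA components computed separately for the gene and cell blocks, and row-wise statistics per block. Cluster features and other transformations are left out, and all parameter values are illustrative rather than tuned.

```python
import numpy as np

def variance_filter(A, threshold):
    # Same idea as sklearn's VarianceThreshold: drop (near-)constant columns.
    return A[:, A.var(axis=0) > threshold]

def zscore(A):
    # Column-wise standardisation; the small constant avoids division by zero.
    return (A - A.mean(axis=0)) / (A.std(axis=0) + 1e-8)

def pca(A, k):
    # Projections of the centred data onto its first k principal directions (via SVD).
    A_c = A - A.mean(axis=0)
    _, _, Vt = np.linalg.svd(A_c, full_matrices=False)
    return A_c @ Vt[:k].T

def row_stats(A):
    # Per-sample summaries of one block of columns: mean, std, min, max.
    return np.column_stack([A.mean(axis=1), A.std(axis=1), A.min(axis=1), A.max(axis=1)])

def build_features(X_genes, X_cells, k_genes=10, k_cells=5, var_threshold=0.5):
    """Hypothetical pipeline; the blocks correspond to the 772 gene and 100 cell columns."""
    base = zscore(variance_filter(np.hstack([X_genes, X_cells]), var_threshold))
    return np.hstack([
        base,
        pca(X_genes, k_genes), pca(X_cells, k_cells),
        row_stats(X_genes), row_stats(X_cells),
    ])

rng = np.random.default_rng(0)
X_genes = rng.normal(size=(50, 772))
X_cells = rng.normal(size=(50, 100))
X_genes[:, :3] = 1.0          # three constant columns, removed by the variance filter
feats = build_features(X_genes, X_cells)
print(feats.shape)
```

In a real cross-validation setup, the filter, scaler and PCA would be fitted on the training folds only and then applied to the validation fold and test set.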

Tricks

- Pre-train on the 402 additional targets, then fine-tune on the 206 scored ones

- Clipping predictions

- Blends, blends, blends

- Optimize everything
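Clipping and blending can both be tuned on out-of-fold (OOF) predictions. A minimal sketch, assuming OOF predictions from two models are available as NumPy arrays: predictions are clipped to `[lo, 1 - lo]`, and a single blending weight is chosen by grid search on the columnwise log loss (redefined here so the block is self-contained). The clipping bound, the grid and the toy data are hypothetical.

```python
import numpy as np

def columnwise_log_loss(y, p, eps=1e-15):
    p = np.clip(p, eps, 1 - eps)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p)).mean(axis=0).mean()

def clip_preds(p, lo=1e-3):
    # Bound confident predictions: a wrong 0.0 or 1.0 is penalised very heavily by log loss.
    return np.clip(p, lo, 1 - lo)

def best_blend_weight(y, p_a, p_b, grid=np.linspace(0, 1, 21)):
    # Try w * p_a + (1 - w) * p_b for each w; keep the one with the lowest OOF loss.
    scores = [columnwise_log_loss(y, w * p_a + (1 - w) * p_b) for w in grid]
    i = int(np.argmin(scores))
    return grid[i], scores[i]

rng = np.random.default_rng(0)
y = (rng.random((200, 5)) < 0.1).astype(float)           # sparse toy targets
p_good = 0.7 * y + 0.05 + 0.05 * rng.random(y.shape)     # informative model
p_noisy = rng.random(y.shape)                            # uninformative model
w, score = best_blend_weight(y, clip_preds(p_good), clip_preds(p_noisy))
print(w, score)
```

With more models, the same idea extends to optimizing a weight vector, e.g. with a general-purpose optimizer instead of a grid.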

Label smoothing
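For binary (multilabel) targets, the smoothing in `SmoothBCEwLogits` maps each target y to y·(1 - s) + 0.5·s, so 1 becomes 1 - s/2 and 0 becomes s/2. The loss is then minimized at that softened probability instead of at an infinitely large logit. A NumPy illustration; `bce_with_logits` is a hypothetical helper implementing the standard numerically stable form of binary cross-entropy on logits.

```python
import numpy as np

def smooth_targets(y, smoothing):
    # Same transform as SmoothBCEwLogits._smooth: pull 0/1 labels towards 0.5.
    assert 0 <= smoothing < 1
    return y * (1.0 - smoothing) + 0.5 * smoothing

def bce_with_logits(logits, targets):
    # Mean binary cross-entropy from raw logits: max(x, 0) - x*t + log(1 + exp(-|x|)).
    return np.mean(np.maximum(logits, 0) - logits * targets + np.log1p(np.exp(-np.abs(logits))))

print(smooth_targets(np.array([0.0, 1.0]), 0.1))     # 0 -> 0.05, 1 -> 0.95

t = smooth_targets(np.array([1.0]), 0.001)
best_logit = np.log(t / (1 - t))                     # inverse sigmoid of the smoothed target
print(bce_with_logits(best_logit, t) < bce_with_logits(np.array([20.0]), t))  # True
```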

```python
import torch
import torch.nn as nn


class LabelSmoothingLoss(nn.Module):
    """Cross-entropy with label smoothing for single-label multiclass targets.

    `target` holds class indices; the true class gets probability 1 - smoothing,
    and the remaining `classes - 1` classes share `smoothing` equally.
    """

    def __init__(self, classes, smoothing=0.0, dim=-1):
        super().__init__()
        self.confidence = 1.0 - smoothing
        self.smoothing = smoothing
        self.cls = classes
        self.dim = dim

    def forward(self, pred, target):
        pred = pred.log_softmax(dim=self.dim)
        with torch.no_grad():
            # Build the smoothed target distribution (no gradient needed).
            true_dist = torch.zeros_like(pred)
            true_dist.fill_(self.smoothing / (self.cls - 1))
            true_dist.scatter_(1, target.unsqueeze(1), self.confidence)
        return torch.mean(torch.sum(-true_dist * pred, dim=self.dim))
```

```python
import torch
import torch.nn.functional as F
from torch.nn.modules.loss import _WeightedLoss


class SmoothBCEwLogits(_WeightedLoss):
    """BCE-with-logits for multilabel targets with label smoothing (0 -> s/2, 1 -> 1 - s/2)."""

    def __init__(self, weight=None, reduction='mean', smoothing=0.0):
        super().__init__(weight=weight, reduction=reduction)  # sets self.weight and self.reduction
        self.smoothing = smoothing

    @staticmethod
    def _smooth(targets: torch.Tensor, n_labels: int, smoothing=0.0):
        assert 0 <= smoothing < 1
        with torch.no_grad():
            targets = targets * (1.0 - smoothing) + 0.5 * smoothing
        return targets

    def forward(self, inputs, targets):
        targets = SmoothBCEwLogits._smooth(targets, inputs.size(-1), self.smoothing)
        # reduction='none' so that the reduction below is actually applied
        # (the default 'mean' would already collapse the loss to a scalar).
        loss = F.binary_cross_entropy_with_logits(inputs, targets, self.weight, reduction='none')
        if self.reduction == 'sum':
            loss = loss.sum()
        elif self.reduction == 'mean':
            loss = loss.mean()
        return loss
```

Layer recalibration

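```python
import torch


def recalibrate_layer(self, layer):
    # Method of the model class. `layer` is a layer wrapped with torch.nn.utils.weight_norm,
    # which stores its weight as weight_g * weight_v / ||weight_v||.
    # If weight_v contains NaNs, replace them with zeros and add a small constant
    # so that ||weight_v|| cannot be exactly zero.
    # Note: this creates new Parameter objects, so an existing optimizer still holds the old ones.
    if torch.isnan(layer.weight_v).any():
        print('recalibrate layer.weight_v')
        layer.weight_v = torch.nn.Parameter(torch.where(torch.isnan(layer.weight_v),
                                                        torch.zeros_like(layer.weight_v),
                                                        layer.weight_v))
        layer.weight_v = torch.nn.Parameter(layer.weight_v + 1e-7)

    # Same repair for layer.weight (computed from weight_g and weight_v).
    if torch.isnan(layer.weight).any():
        print('recalibrate layer.weight')
        layer.weight = torch.where(torch.isnan(layer.weight),
                                   torch.zeros_like(layer.weight),
                                   layer.weight)
        layer.weight += 1e-7
```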
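Why the repair helps can be seen from the weight-norm reparametrization itself: each row of the effective weight is w = g · v / ‖v‖. If a row of v is NaN, or exactly zero (0 / 0), the whole row of w becomes NaN. A NumPy sketch of the reparametrization (per output row, matching the default for a linear layer) and of the same NaN-to-zero-plus-epsilon fix; both function names are hypothetical.

```python
import numpy as np

def weight_norm(v, g):
    # Effective weight of a weight-normalised layer: each row of v rescaled to length g.
    return g * v / np.linalg.norm(v, axis=1, keepdims=True)

def recalibrate(v, eps=1e-7):
    # Mirror of recalibrate_layer: NaN -> 0, then add eps so no row of v has zero norm.
    return np.where(np.isnan(v), 0.0, v) + eps

v = np.array([[np.nan, np.nan], [3.0, 4.0]])
g = np.array([[1.0], [2.0]])
print(np.isnan(weight_norm(v, g)).any())               # True
print(np.isnan(weight_norm(recalibrate(v), g)).any())  # False
```

After the repair, each row of the effective weight again has length g, so the layer keeps working, although the repaired row carries no learned direction.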