Language as Robot Middleware

Andy Zeng

CCI Seminar

Robot Manipulation

Amazon Picking Challenge

arc.cs.princeton.edu

Team MIT-Princeton

excel at simple things, adapt to hard things

How to endow robots with "intuition" and "commonsense"?

Robotics as a platform to build intelligent machines

SHRDLU, Terry Winograd, MIT 1968

person: "will you please stack up both of the red blocks and either a green cube or a pyramid"

robot: "ok."

person: "what does the box contain?"

robot: "the blue pyramid and the blue block."

person: "can the table pick up blocks?"

robot: "no."

person: "why did you do that?"

robot: "because you asked me to."

Robot butlers?

Representation is a key part of the puzzle

semantic?

compact?

compositional?

general?

interpretable?

Perception

Actions

what is the right representation?

On the hunt for the "best" state representation

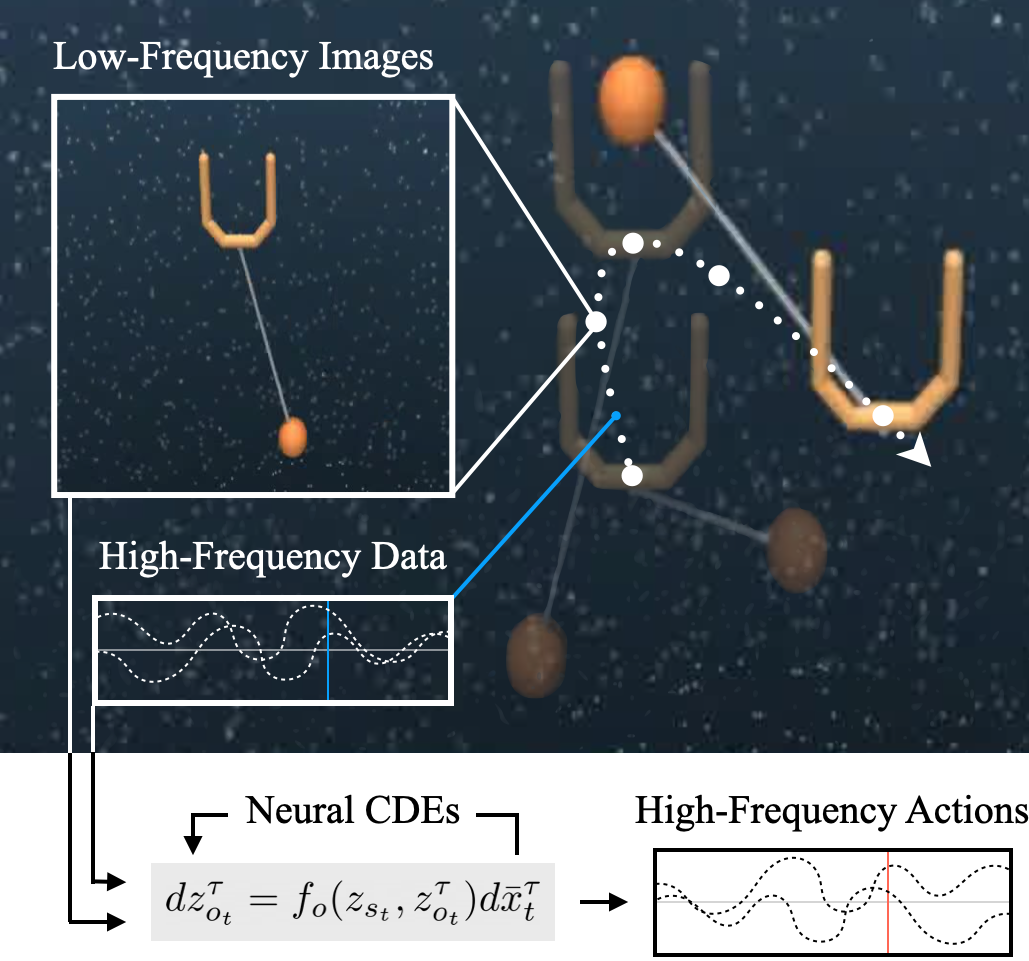

Haochen Shi and Huazhe Xu et al., RSS 2022

Learned Visual Representations

NeRF Representations

3D Reconstructions

how to represent:

Dynamics Representations

Danny Driess and Ingmar Schubert et al., arxiv 2022

Ben Mildenhall, Pratul Srinivasan, Matthew Tancik et al., ECCV 2020

Self-supervised Representations

Richard Newcombe et al., ISMAR 2011

Misha Laskin and Aravind Srinivas et al., ICML 2020

Continuous-Time Representations

Sumeet Singh et al., IROS 2022

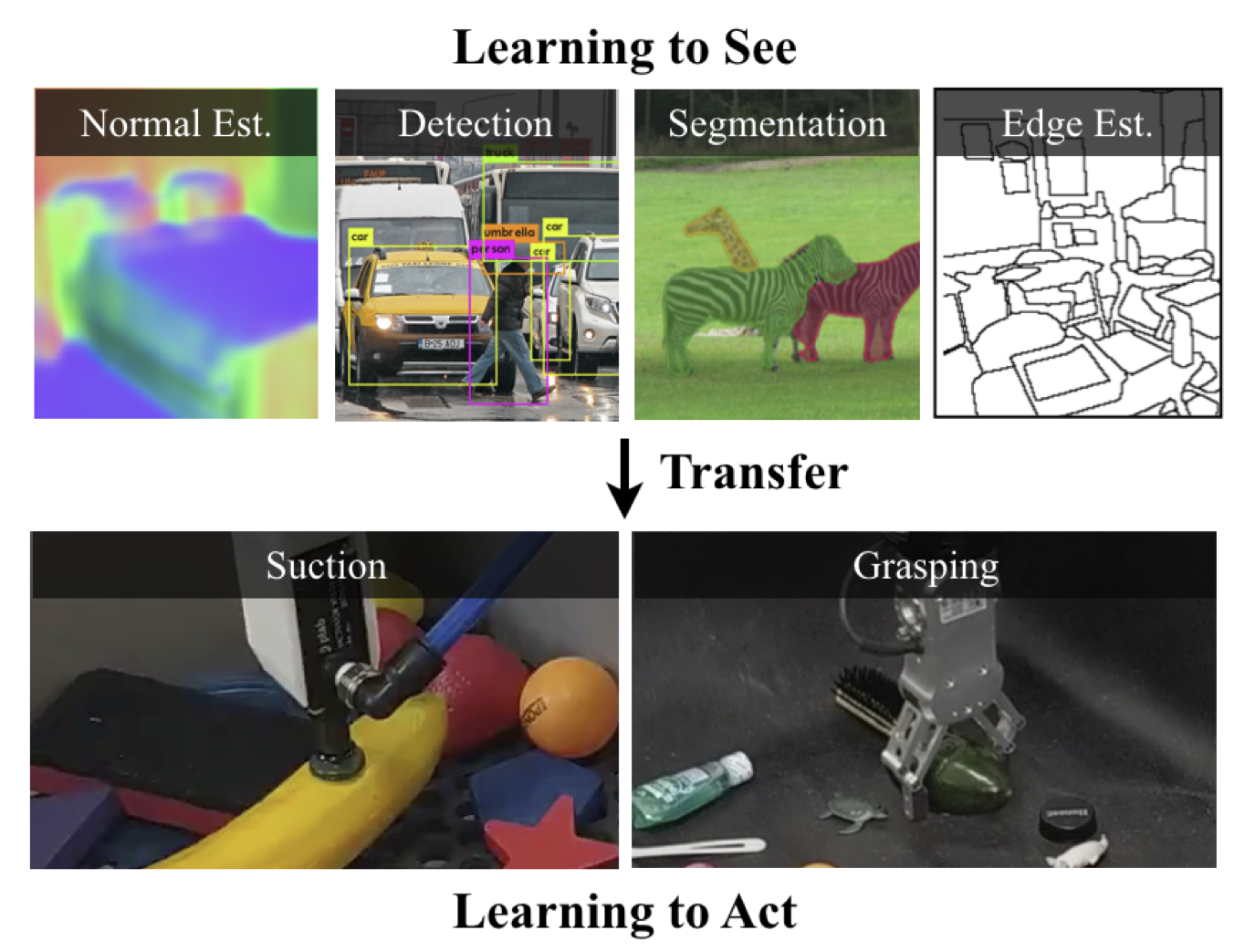

Pretrained Representations

Lin Yen-Chen et al., ICRA 2020

Cross-embodied Representations

Kevin Zakka et al., CoRL 2021

semantic?

compact?

compositional?

general?

interpretable?

Object-centric Representations

Andy Zeng, Peter Yu, et al., ICRA 2017

On the hunt for the "best" state representation

how to represent:

semantic?

compact?

compositional?

general?

interpretable?

what about language?

On the hunt for the "best" state representation

how to represent:

semantic? ✓

compact? ✓

compositional? ✓

general? ✓

interpretable? ✓

what about language?

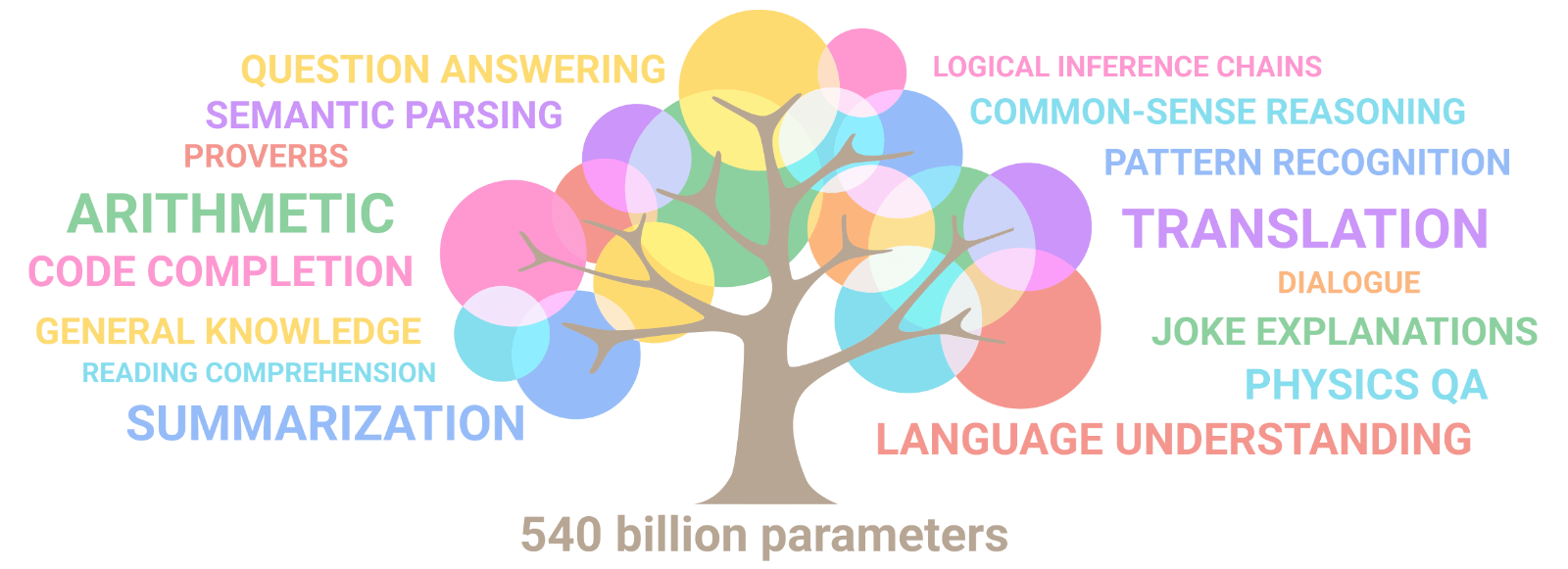

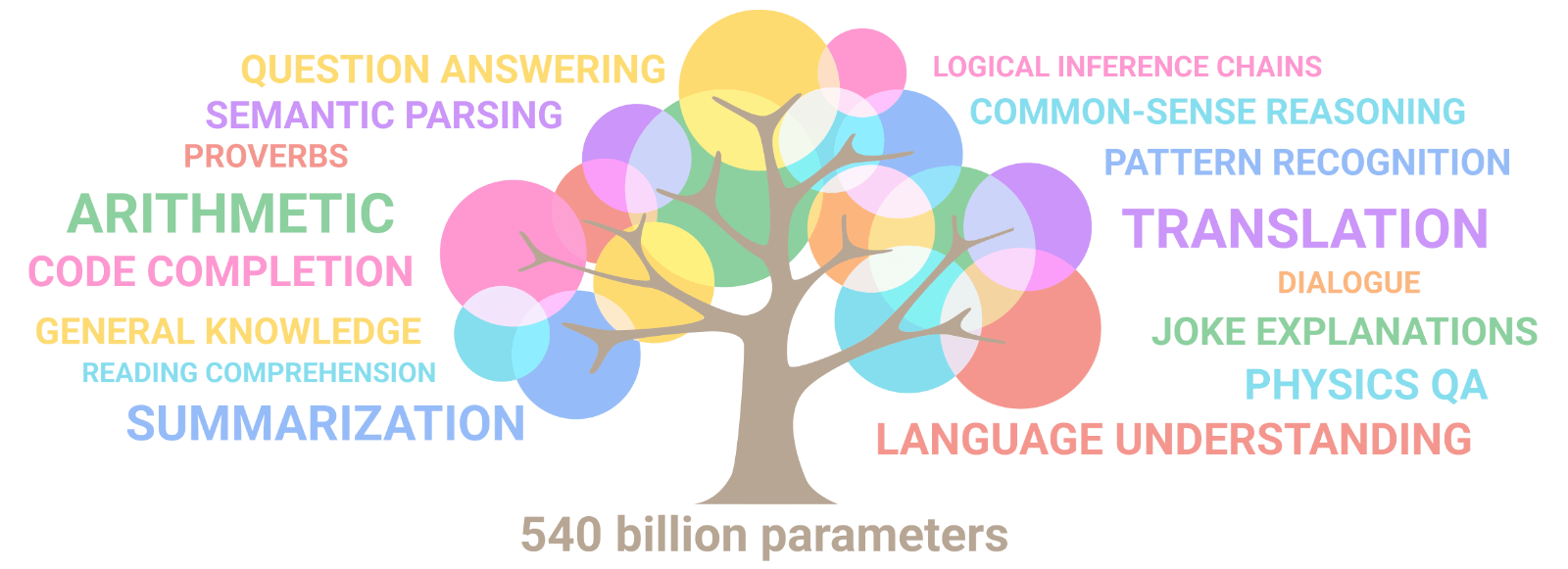

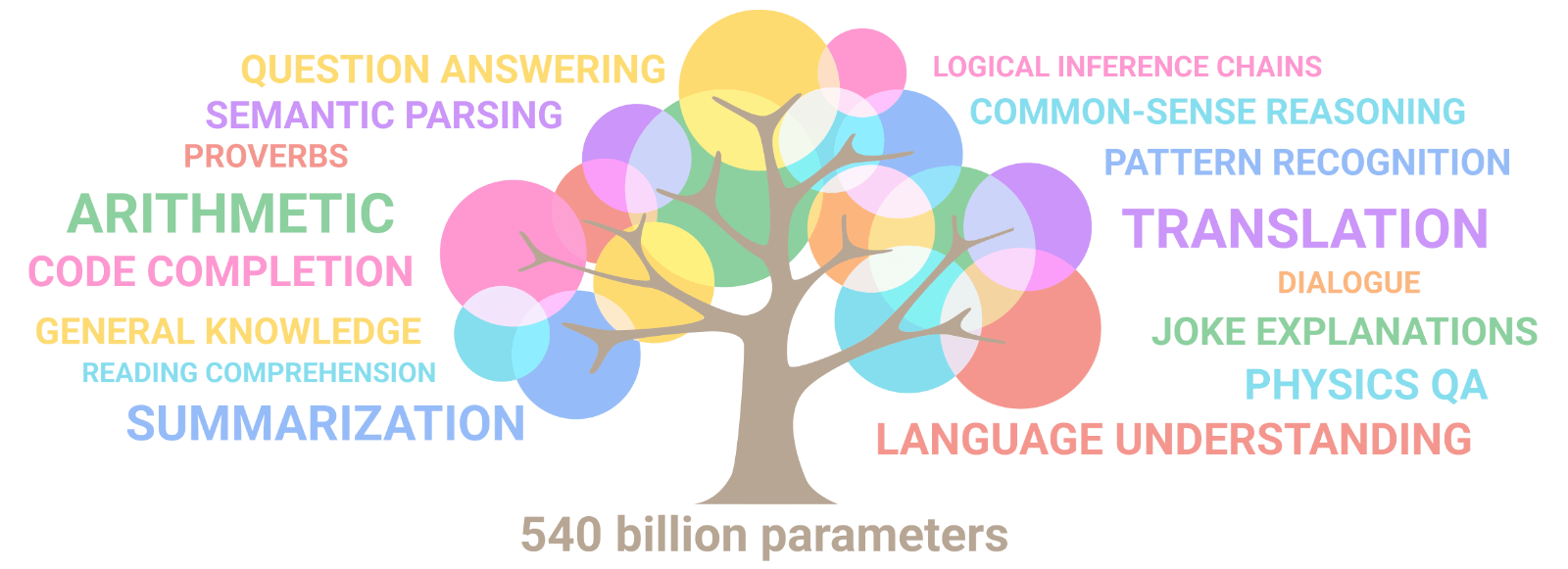

advent of large language models

Aakanksha Chowdhery, Sharan Narang, Jacob Devlin et al., "PaLM", 2022

maybe this was the multi-task representation we've been looking for all along?

Mohit Shridhar et al., "CLIPort", CoRL 2021

Does multi-task learning result in positive transfer of representations?

Past couple of years of research suggest: it's complicated

In computer vision...

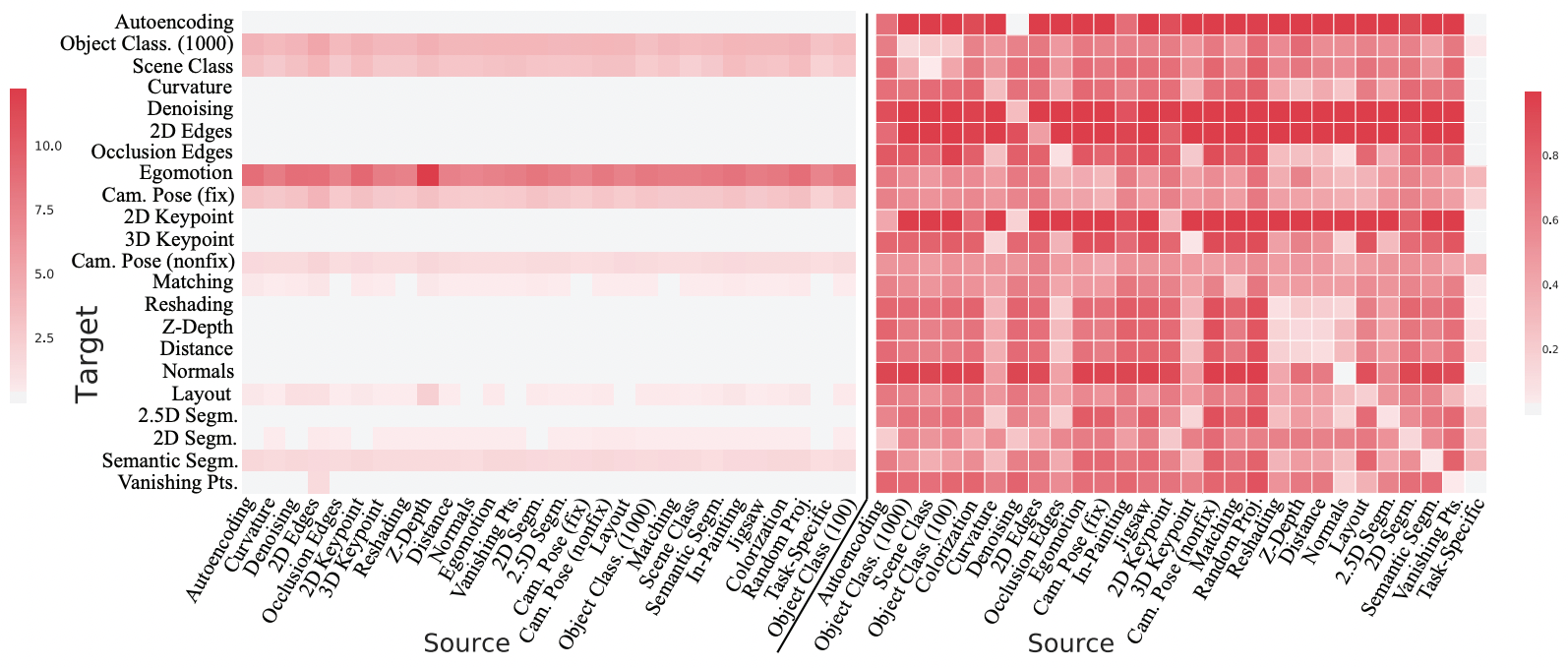

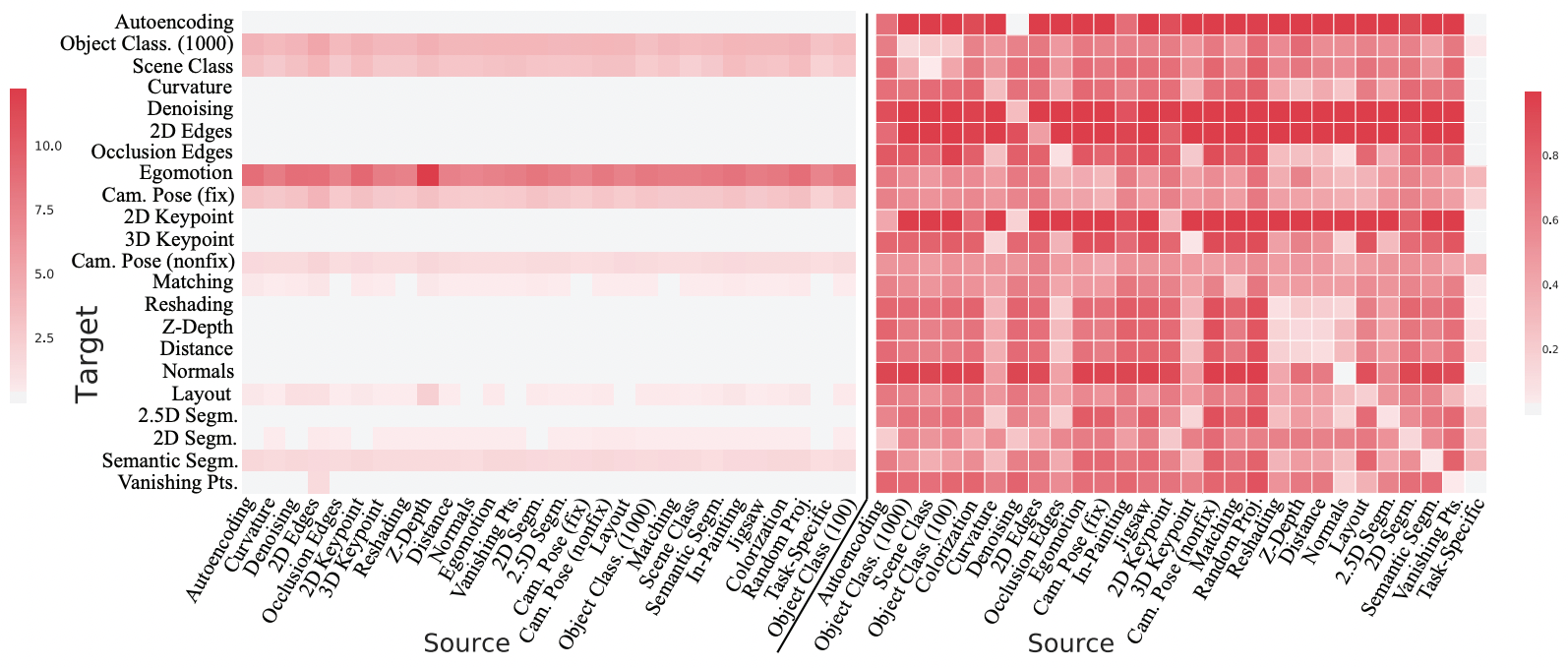

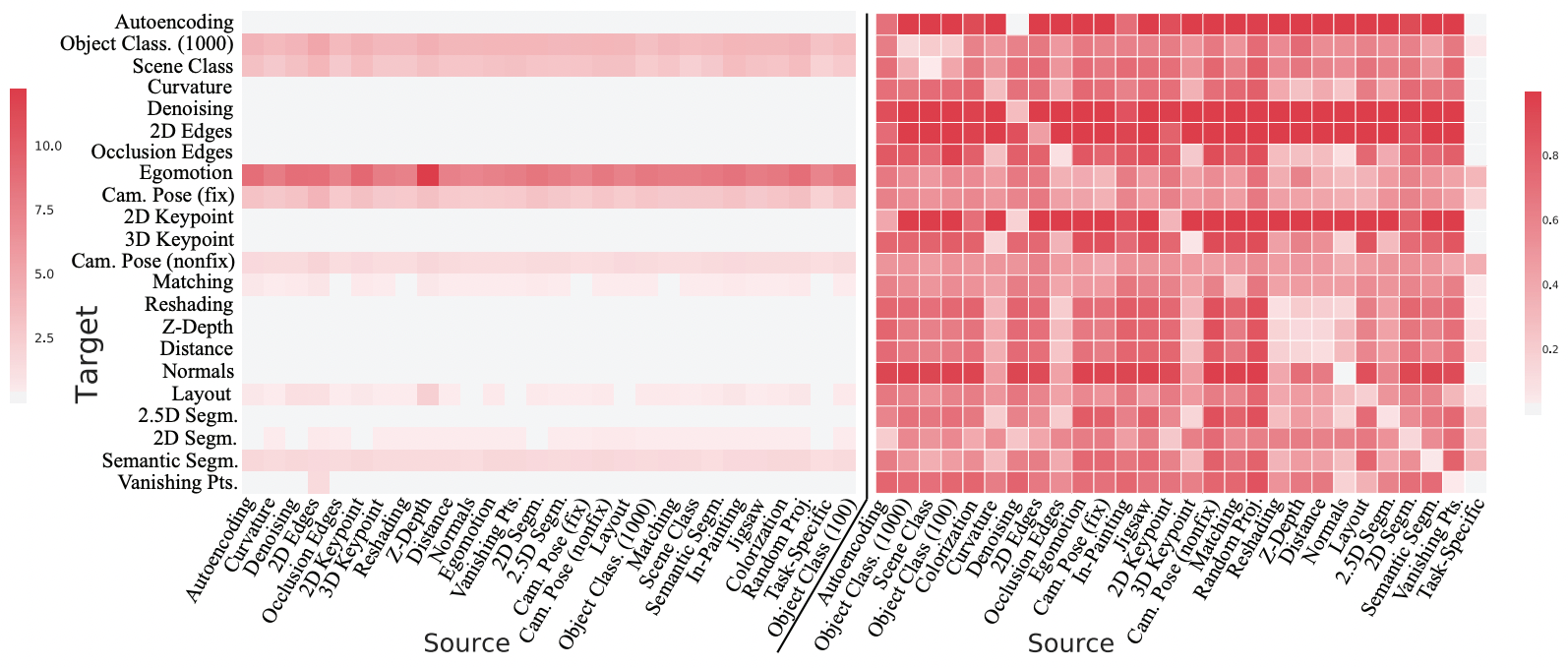

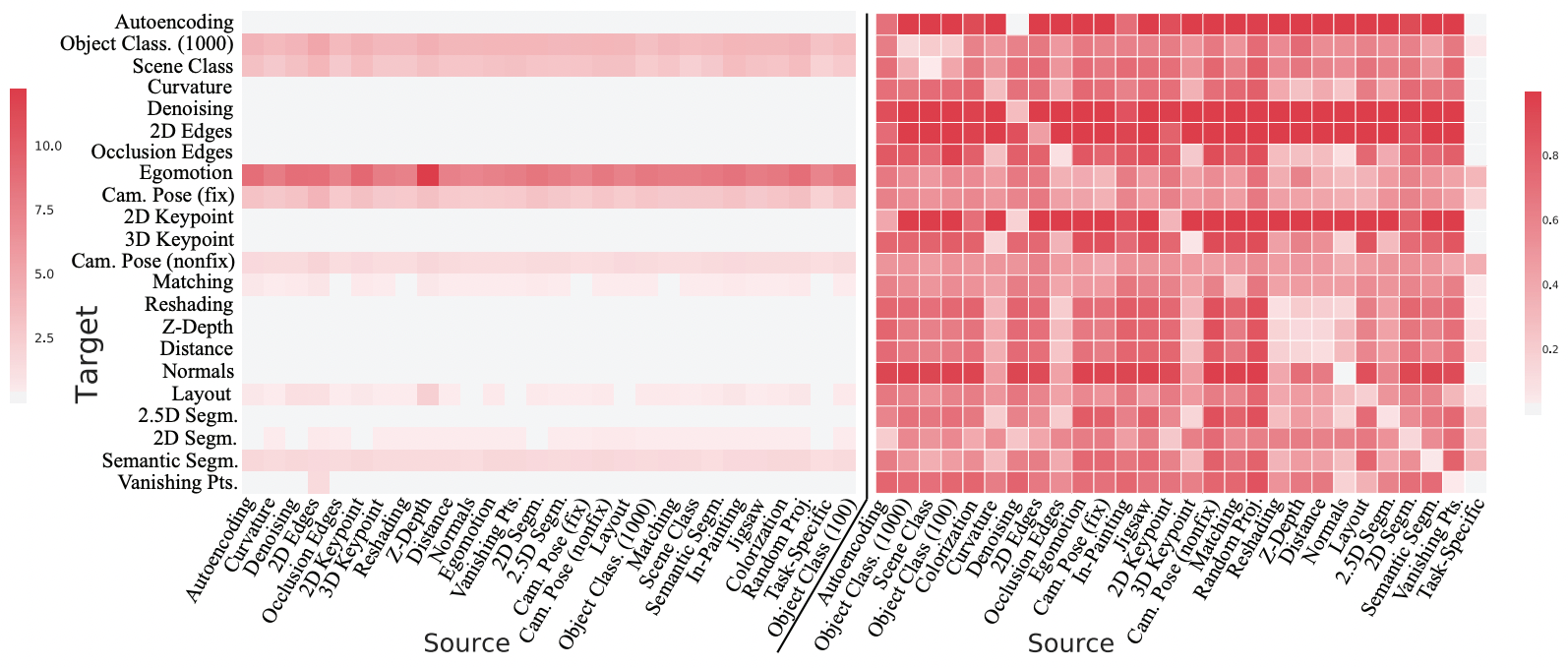

Amir Zamir, Alexander Sax, William Shen, et al., "Taskonomy", CVPR 2018

Recent work in multi-task learning...

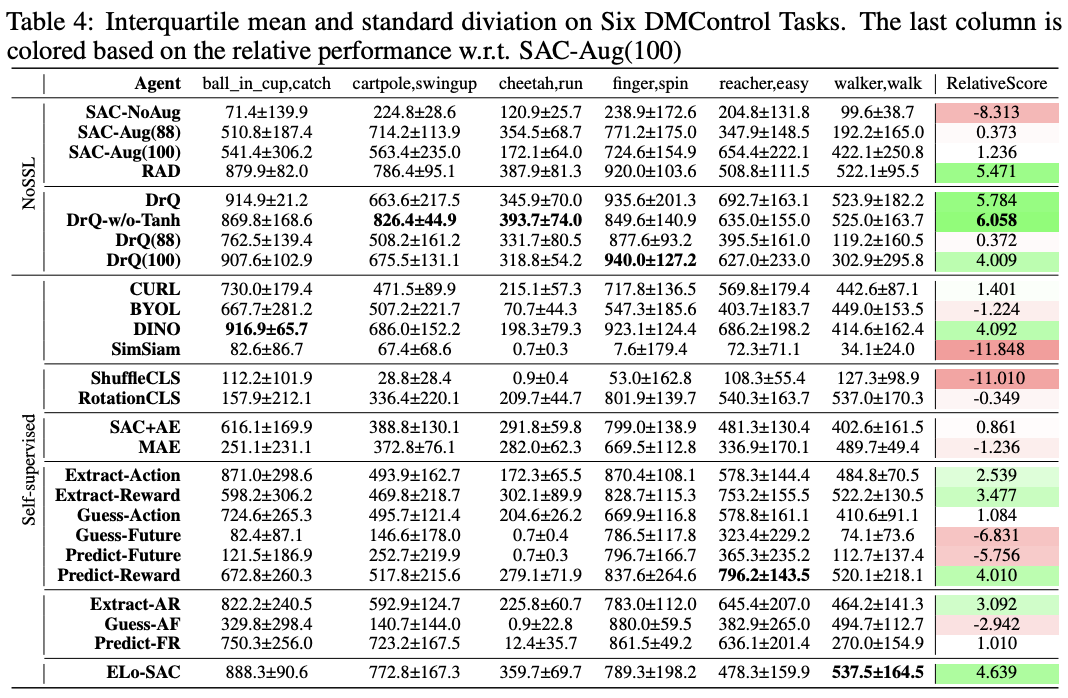

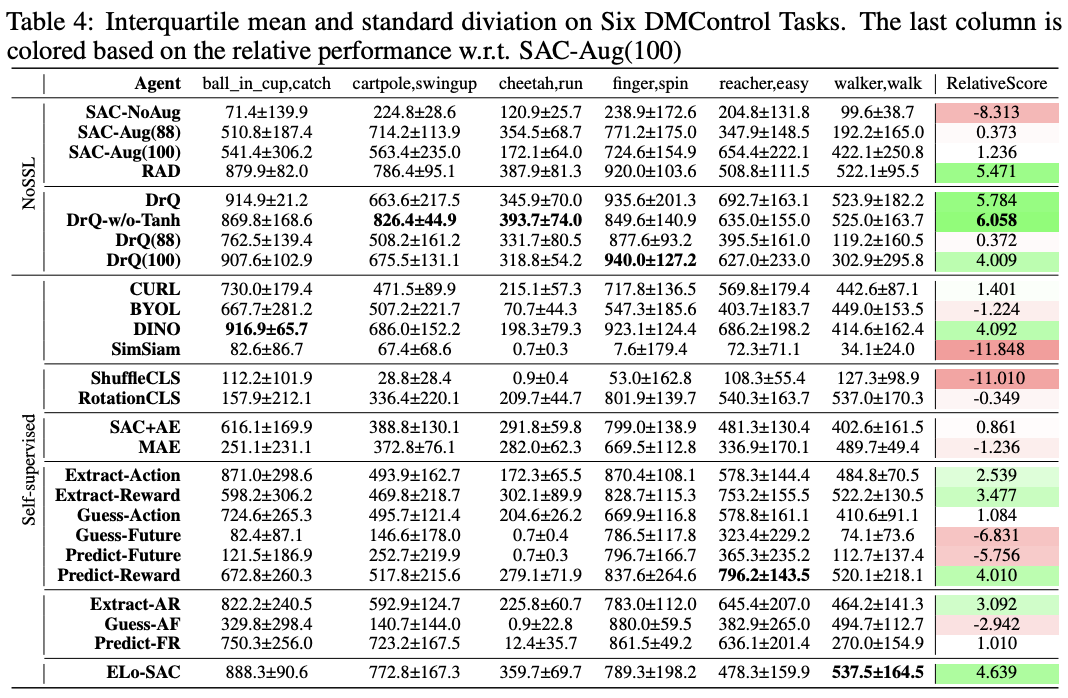

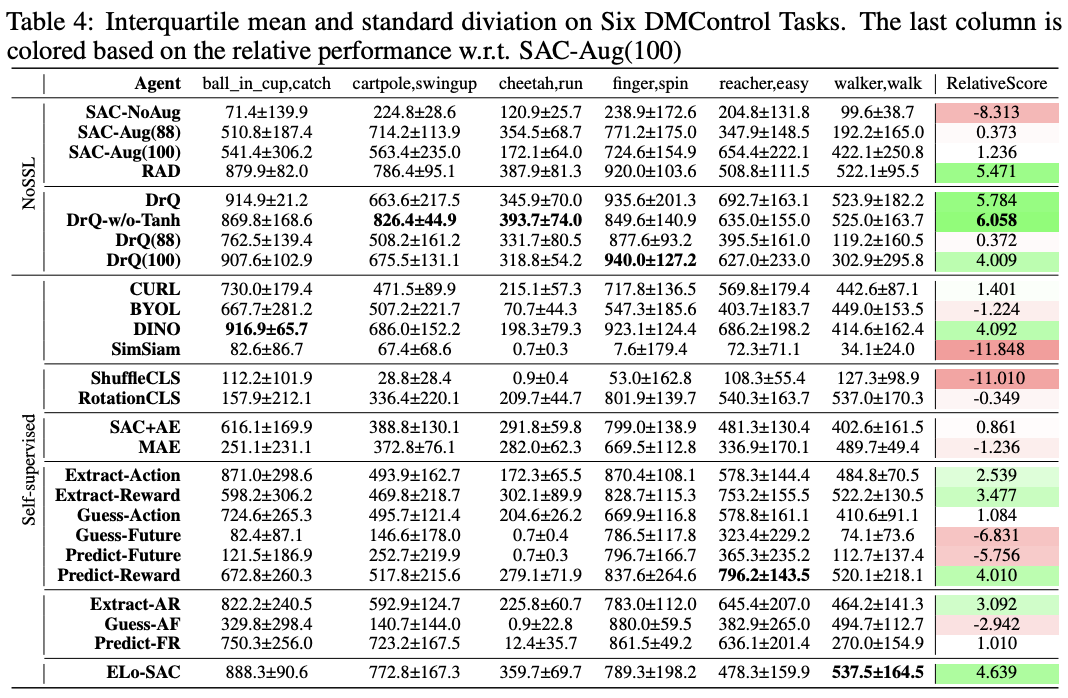

Xiang Li et al., "Does Self-supervised Learning Really Improve Reinforcement Learning from Pixels?", 2022

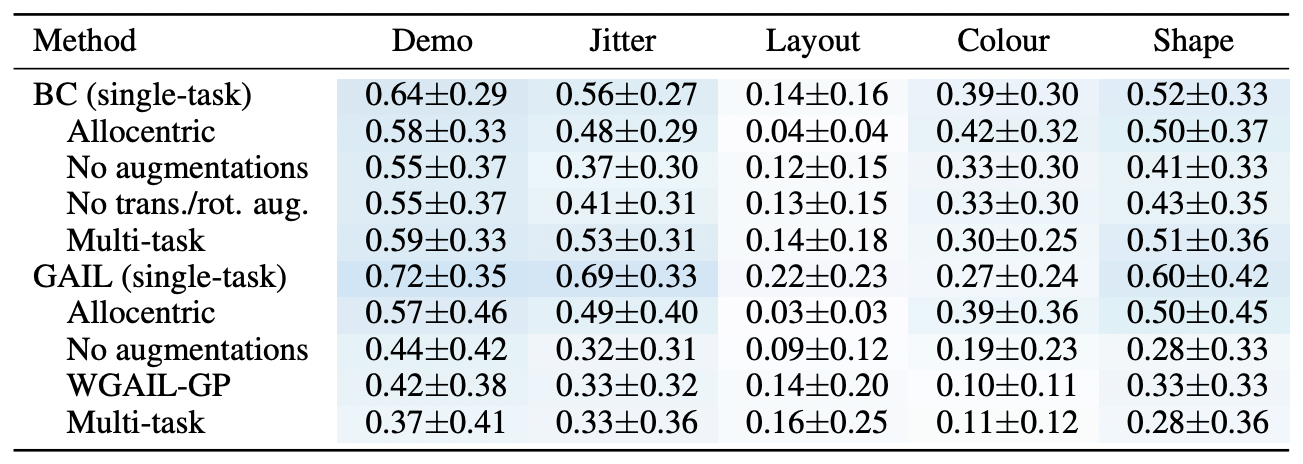

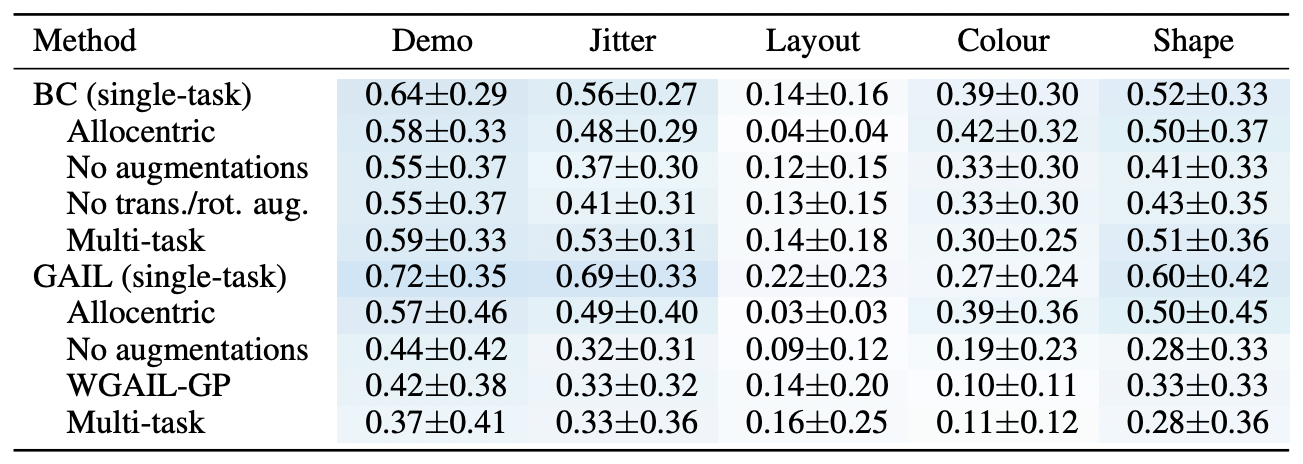

In robot learning...

Sam Toyer, et al., "MAGICAL", NeurIPS 2020

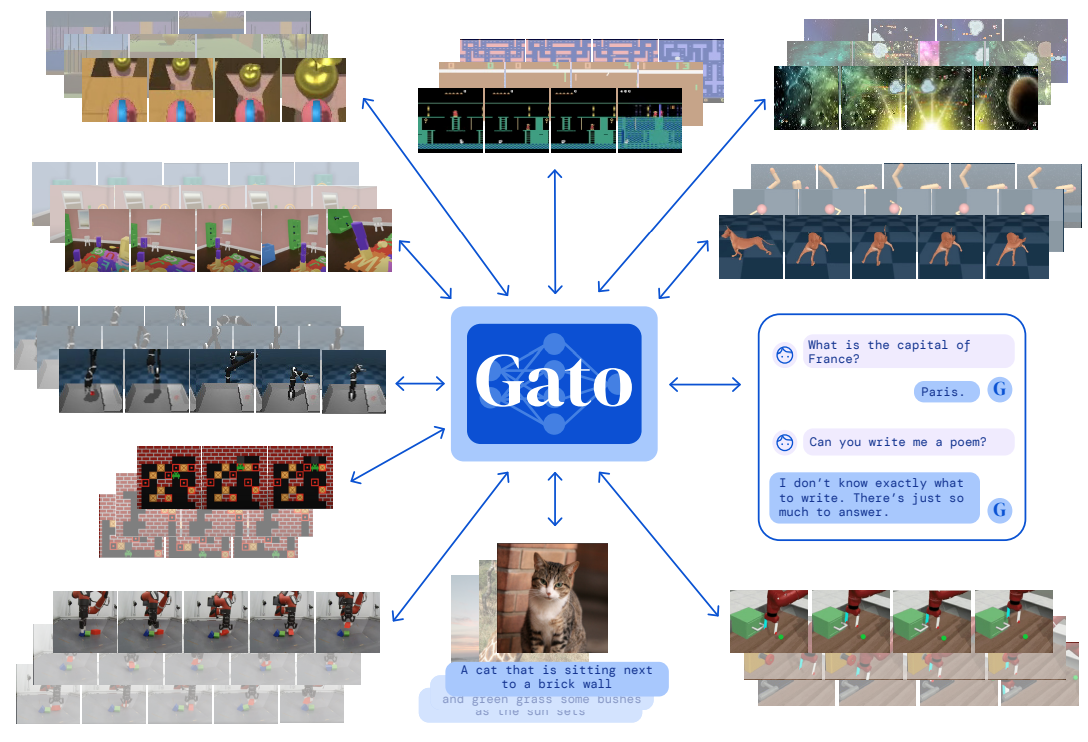

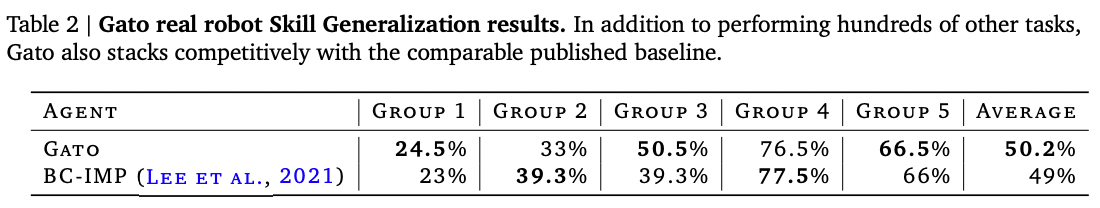

Scott Reed, Konrad Zolna, Emilio Parisotto, et al., "A Generalist Agent", 2022

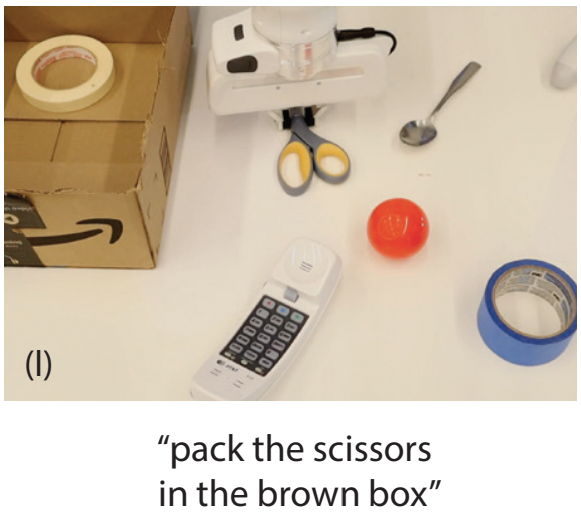

CLIPort

Multi-task learning + grounding in language seems more likely to lead to positive transfer

Mohit Shridhar, Lucas Manuelli, Dieter Fox, "CLIPort: What and Where Pathways for Robotic Manipulation", CoRL 2021

How do we use "language" as a state representation?

Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language

https://socraticmodels.github.io

Open research problem! but here's one way to do it...

Visual Language Model

CLIP, ALIGN, LiT, SimVLM, ViLD, MDETR

Human input (task)

Large Language Model for Planning (e.g. SayCan)

Language-conditioned Policies

Do As I Can, Not As I Say: Grounding Language in Robotic Affordances

https://say-can.github.io/
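
As described in the SayCan paper, each candidate skill is scored by multiplying the language model's estimate that the skill is a useful next step by an affordance estimate that the skill can succeed from the current state. The sketch below illustrates only that combination rule; the function name `select_skill`, the skill strings, and all numbers are hypothetical and not taken from the SayCan codebase.

```python
from typing import Dict


def select_skill(llm_scores: Dict[str, float], affordances: Dict[str, float]) -> str:
    """Return the skill with the highest (LLM score * affordance score)."""
    combined = {
        skill: llm_scores[skill] * affordances.get(skill, 0.0)
        for skill in llm_scores
    }
    return max(combined, key=combined.get)


# Hypothetical scores for an instruction like "I spilled my drink, can you help?"
# The LLM prefers picking up the sponge, but no sponge is in view, so the
# affordance model rates that skill as unlikely to succeed right now.
llm_scores = {"pick up the sponge": 0.50, "find a sponge": 0.35, "pick up the apple": 0.15}
affordances = {"pick up the sponge": 0.05, "find a sponge": 0.90, "pick up the apple": 0.80}

chosen = select_skill(llm_scores, affordances)
print(chosen)
```

With these made-up numbers the product favors "find a sponge": the language model says what would help, and the affordance term says what is currently possible.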

Live demo

Describing the visual world with language

(and using for feedback too!)
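
One way to picture language as a state representation with feedback: perception outputs are written as text, appended to a running prompt, and the outcome of each action is appended as text too. The sketch below only builds that prompt; the visual-language model and the language model are left out, and the prompt layout, `build_prompt`, and `add_feedback` are assumptions made for illustration.

```python
from typing import List


def add_feedback(history: List[str], action: str, success: bool) -> None:
    """Record an executed action and its outcome as plain text lines."""
    history.append(f"Robot action: {action}")
    history.append(f"Success: {success}")


def build_prompt(task: str, scene_objects: List[str], history: List[str]) -> str:
    """Assemble the text a language model would see as the current state."""
    lines = [f"Task: {task}", "Scene: " + ", ".join(scene_objects)]
    lines.extend(history)
    lines.append("Robot action:")  # the language model would complete this line
    return "\n".join(lines)


history: List[str] = []
add_feedback(history, "pick up the coke can", False)  # hypothetical failed grasp
prompt = build_prompt("bring me a drink", ["coke can", "apple", "sponge"], history)
print(prompt)
```

Because the state is just text, a failed attempt becomes one more line of context for the next planning step.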

Some limits of "language" as intermediate representation?

- loses spatial precision

- highly multimodal (lots of different ways to say the same thing)

- not as information-rich as in-domain representations (e.g. images)

Perception wishlist item #1: hierarchical high-res image description?

Office space > Conference room > Desk, Chairs > Coke bottle > Bottle label > Nutrition facts > Nutrition values

w/ spatial info?
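
A hierarchical description with spatial info could be held in a small tree, where each node carries a label and an optional bounding box and the whole tree renders to indented text. This is only a sketch of the wishlist item: the `Region` class, the pixel-box convention, the nesting, and the coordinates are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# (x_min, y_min, x_max, y_max) in pixels: an assumed convention
Box = Tuple[int, int, int, int]


@dataclass
class Region:
    label: str
    box: Optional[Box] = None
    children: List["Region"] = field(default_factory=list)


def describe(region: Region, depth: int = 0) -> List[str]:
    """Render the tree as indented text, coarse to fine, with boxes if known."""
    where = f" at {region.box}" if region.box is not None else ""
    lines = ["  " * depth + region.label + where]
    for child in region.children:
        lines.extend(describe(child, depth + 1))
    return lines


scene = Region("office space", children=[
    Region("conference room", children=[
        Region("desk", box=(40, 300, 900, 700), children=[
            Region("coke bottle", box=(410, 220, 470, 400), children=[
                Region("bottle label", box=(415, 280, 465, 350)),
            ]),
        ]),
        Region("chairs", box=(0, 350, 1000, 800)),
    ]),
])

description = describe(scene)
print("\n".join(description))
```

The text output stays compact at the top of the hierarchy while deeper levels recover some of the spatial precision that a single caption loses.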

Some limits of "language" as intermediate representation?

- Only for high level? what about control?

Perception

Planning

Control

Socratic Models

Inner Monologue

ALM + LLM + VLM

SayCan

Wenlong Huang et al., 2022

LLM

Imitation? RL?

Intuition and commonsense is not just a high-level thing

Seems to be stored in the depths of language models... how to extract it?

applies to low-level behaviors too

- spatial: "move a little bit to the left"

- temporal: "move faster"

- functional: "balance yourself"

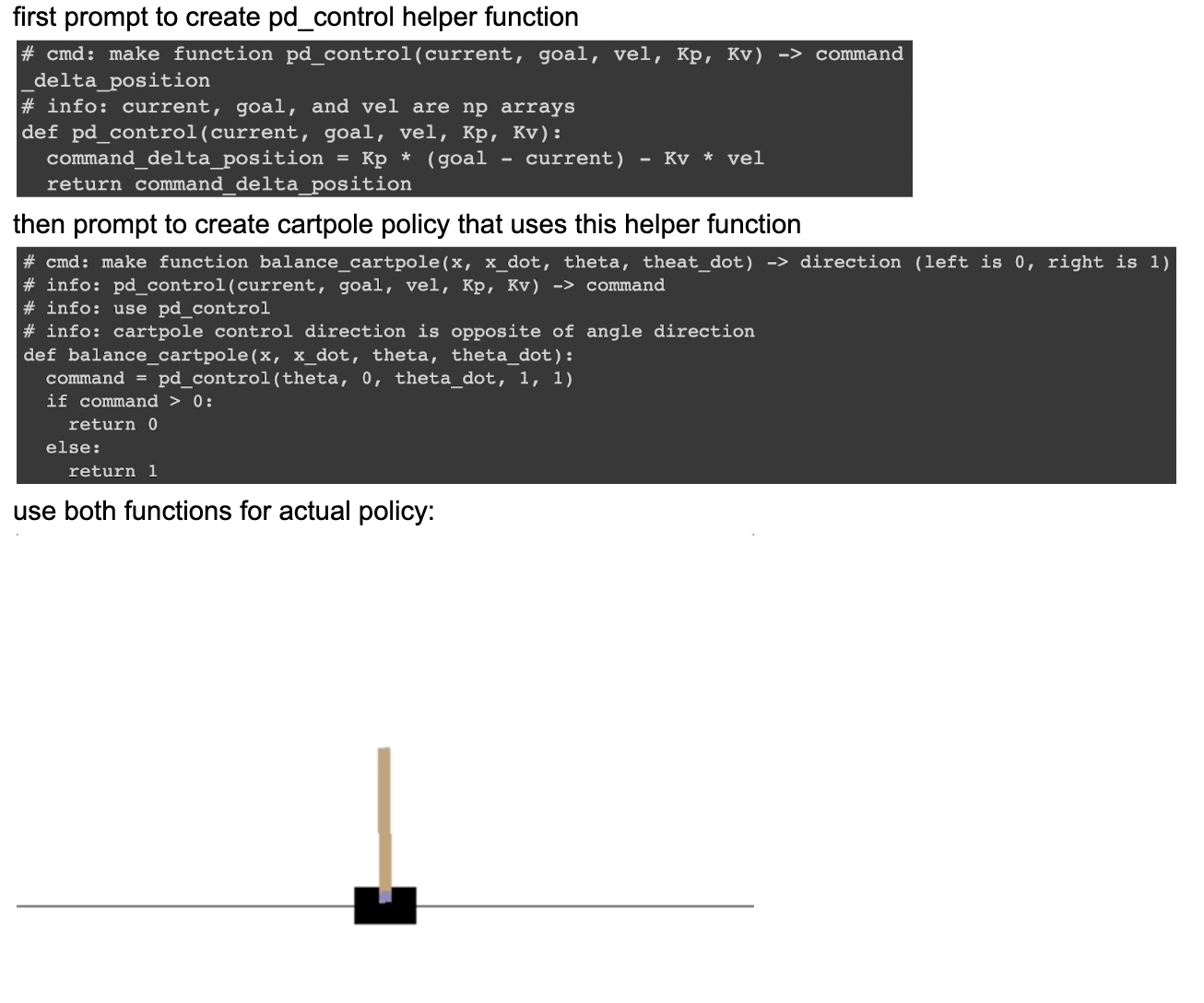

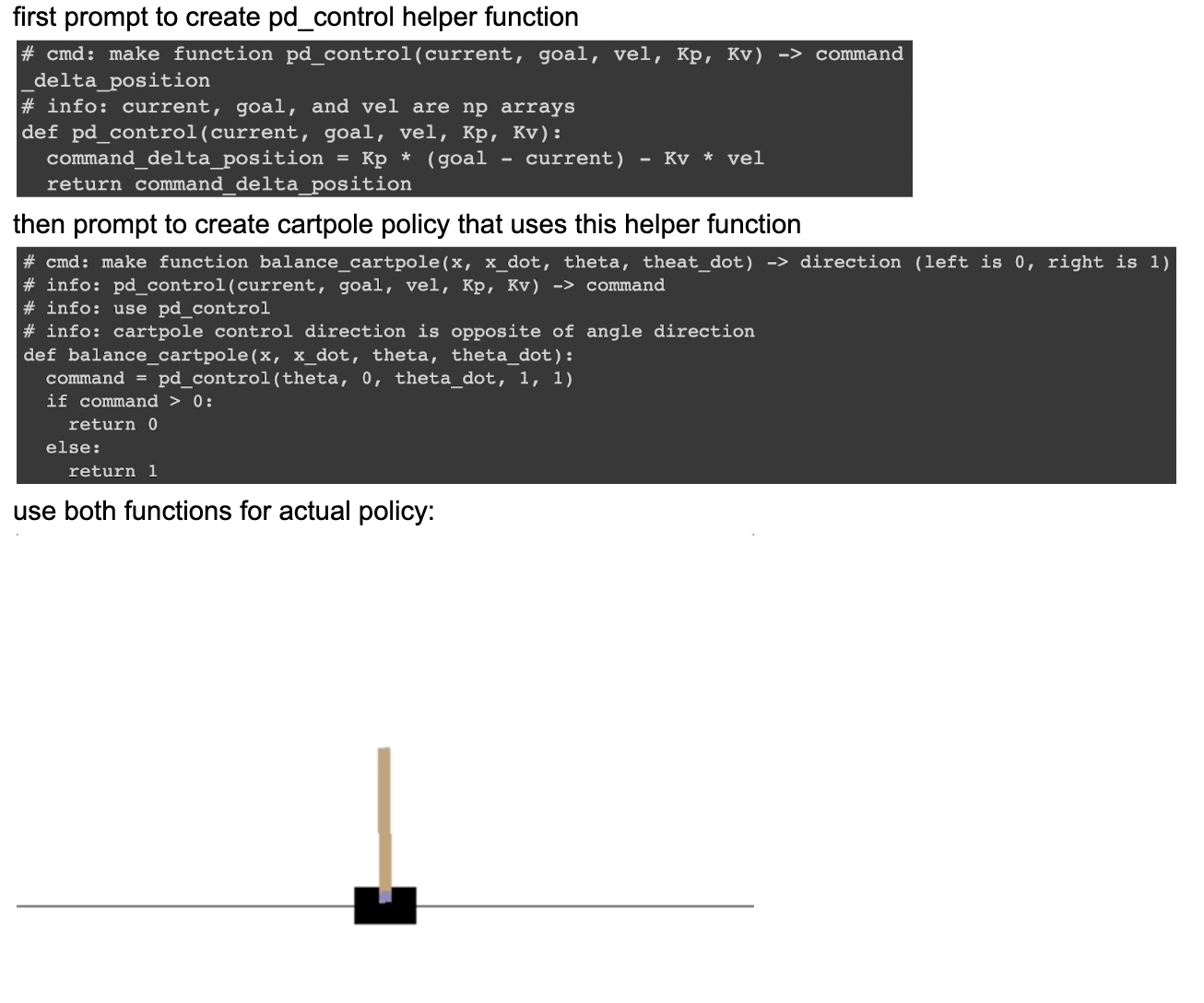

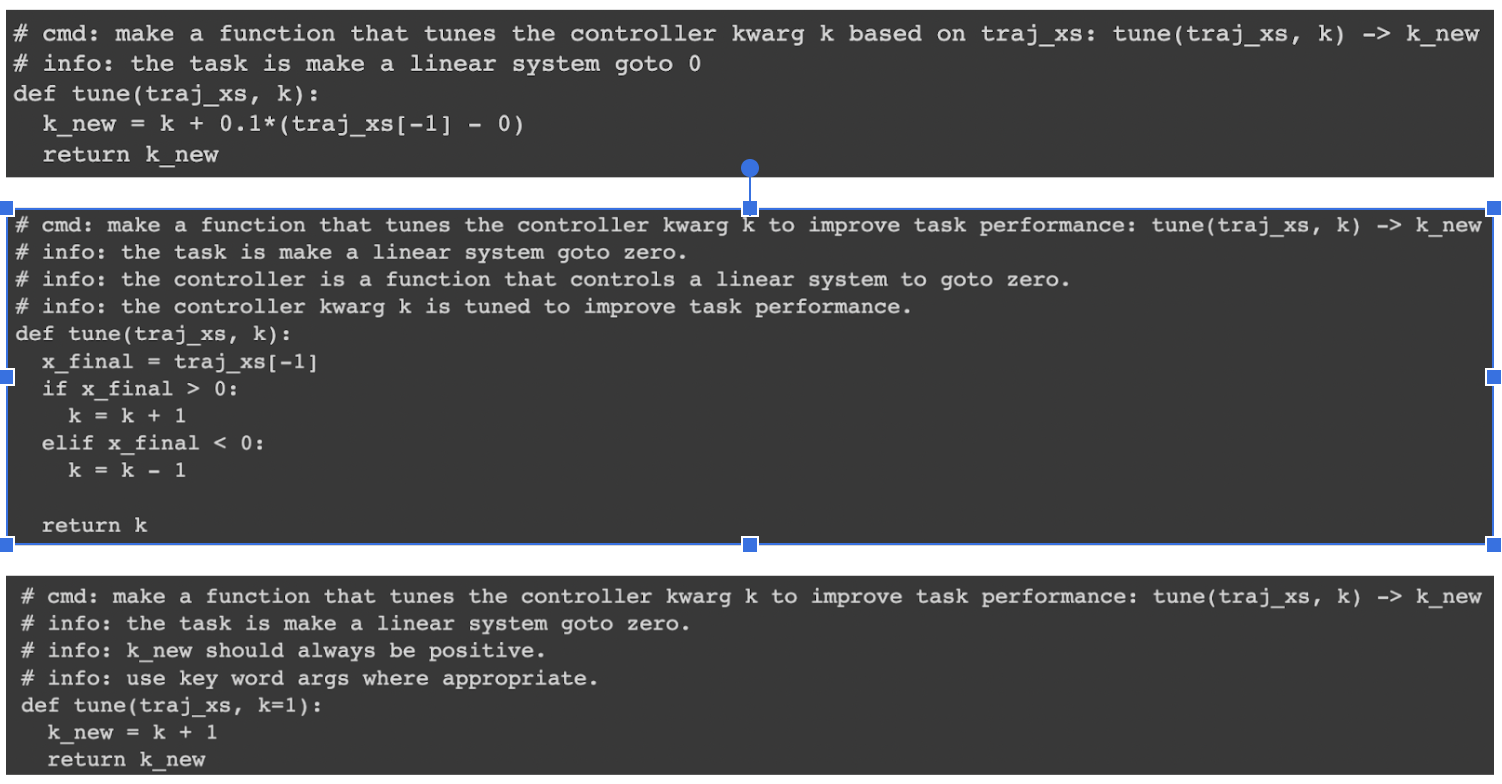

Can language models do control too?

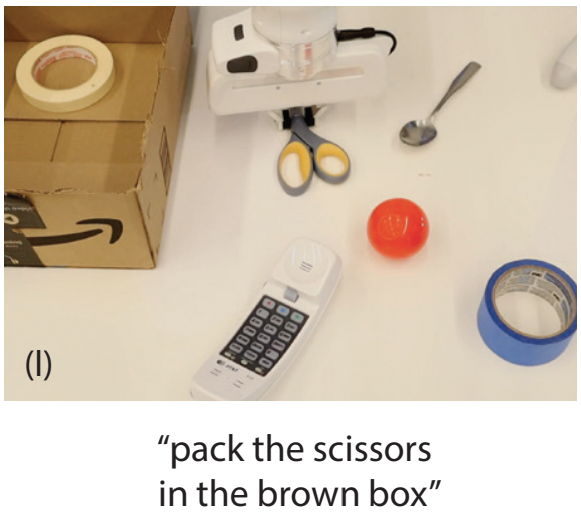

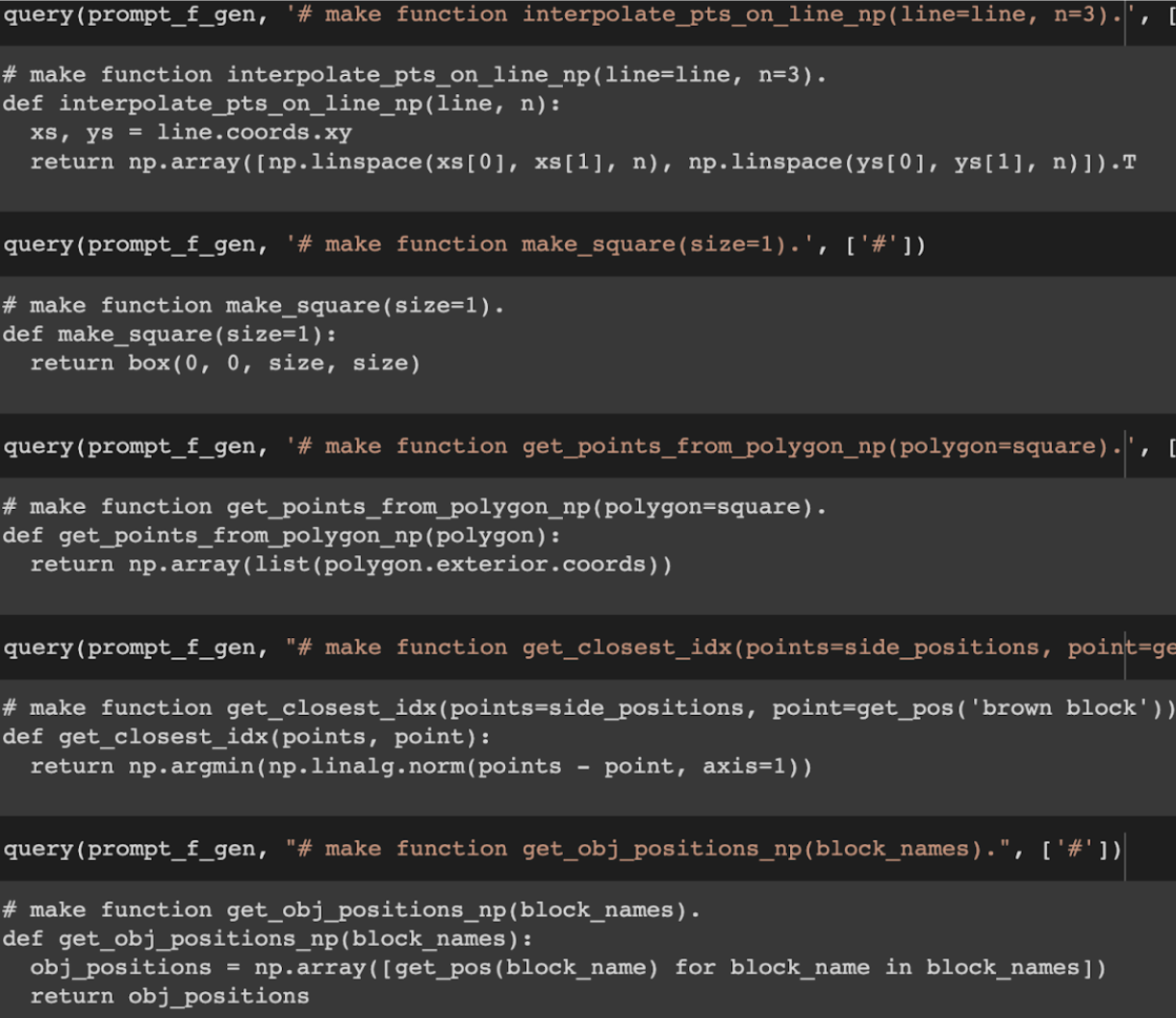

Jacky Liang, "Code as Policies"

Turns out they've read lots of robot Python code and robotics textbooks too

LLMs can write robot code!

write a PD controller

write an impedance controller

use NumPy, SciPy code...

more examples...

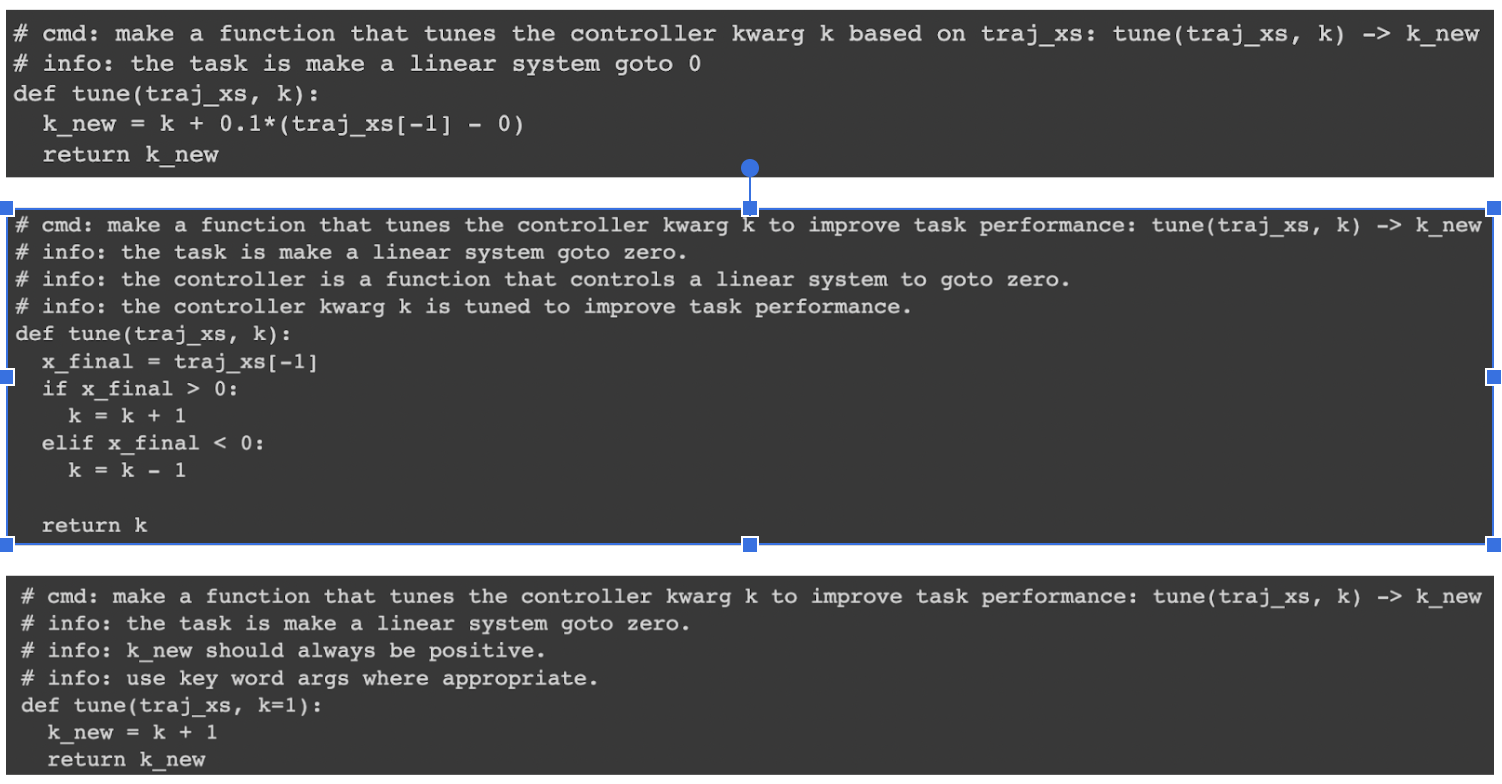
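
For a sense of what "write a PD controller" asks for, here is a minimal hand-written sketch, not output from any language model and not code from "Code as Policies". It assumes a 1-D unit point mass, and the gains, time step, and function names are hypothetical choices.

```python
def pd_control(position: float, velocity: float, target: float,
               kp: float, kd: float) -> float:
    """PD law: proportional term on position error, damping term on velocity."""
    return kp * (target - position) - kd * velocity


def simulate(start: float, target: float, kp: float = 20.0, kd: float = 9.0,
             dt: float = 0.01, steps: int = 1000) -> float:
    """Drive a unit point mass toward `target` and return its final position."""
    position, velocity = float(start), 0.0
    for _ in range(steps):
        accel = pd_control(position, velocity, target, kp, kd)  # unit mass: force equals acceleration
        velocity += accel * dt      # semi-implicit Euler: update velocity first
        position += velocity * dt   # then position, using the new velocity
    return position


final_position = simulate(start=0.0, target=1.0)
print(final_position)
```

With these gains the closed loop is overdamped, so the mass settles at the target without overshoot over the simulated 10 seconds.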

Can language models do control too?

Jacky Liang, "Code as Policies"

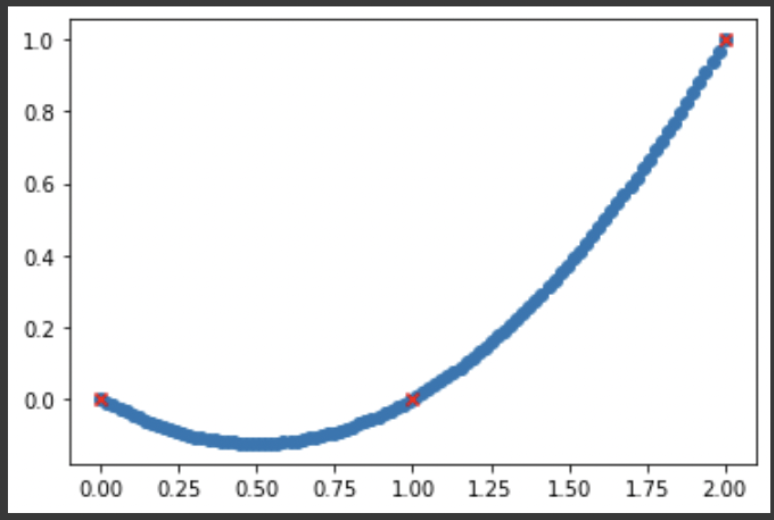

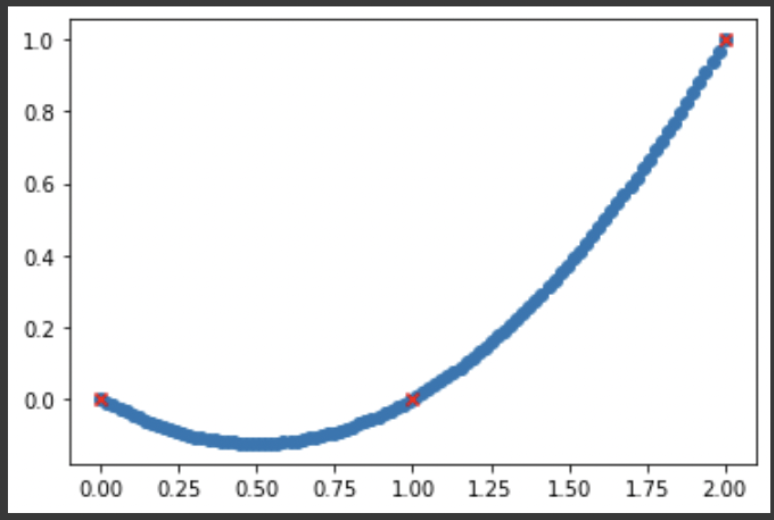

LLMs can generate (and adjust) continuous control trajectories...

... but no autonomous feedback loop...
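
As an illustration of adjusting a trajectory from a language command, the sketch below hard-codes two edits matching the earlier examples "move a little bit to the left" and "move faster". The waypoint format, the assumption that +y is the robot's left, the offset, and the speed factor are all hypothetical; nothing here observes the result, which is the missing feedback loop noted above.

```python
from typing import List, Tuple

Waypoint = Tuple[float, float, float]  # (time in seconds, x, y): an assumed format


def shift_left(trajectory: List[Waypoint], offset: float = 0.05) -> List[Waypoint]:
    """'move a little bit to the left': add an offset along +y (assumed to be left)."""
    return [(t, x, y + offset) for t, x, y in trajectory]


def speed_up(trajectory: List[Waypoint], factor: float = 2.0) -> List[Waypoint]:
    """'move faster': keep the path, compress the timestamps by `factor`."""
    return [(t / factor, x, y) for t, x, y in trajectory]


trajectory = [(0.0, 0.0, 0.0), (1.0, 0.1, 0.0), (2.0, 0.2, 0.0)]
adjusted = speed_up(shift_left(trajectory))
print(adjusted)
```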

Perception wishlist item #2:

a perception model that can describe robot trajectories

Perception wishlist:

- hierarchical super high-res image description?

- open-vocab is great, but can we get generative?

- a visual-language model that is spatially grounded in 3D

- a perception model that can describe robot trajectories

- a foundation model for sounds

- not just speech, but also "robot noises"

As shared protocol for collaboration

Robot Operating System (ROS)

Perception

Planning

Control

Since 2007: A common protocol for individual modules to "talk to each other"
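
The "common protocol" idea can be sketched as a toy publish/subscribe bus where perception, planning, and control exchange messages on named topics. This is not the ROS API; the `Bus` class and topic names are hypothetical, and the messages here are plain natural-language strings rather than typed messages.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class Bus:
    """Minimal in-process publish/subscribe bus with string messages."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[str], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: str) -> None:
        for callback in list(self._subscribers[topic]):
            callback(message)


bus = Bus()
log: List[str] = []

# Planning listens to perception's scene text and publishes a plan step.
bus.subscribe("scene", lambda text: bus.publish("plan", f"pick up the {text.split(', ')[0]}"))
# Control listens to plan steps; here it only records what it would execute.
bus.subscribe("plan", lambda text: log.append(f"executing: {text}"))

# Perception publishes a scene description as plain text.
bus.publish("scene", "coke can, apple, sponge")
print(log)
```

Each module only needs to agree on the topic name and on reading text, which is the sense in which language can play the role of middleware.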

"Language" as the glue for robots & AI

Language

Perception

Planning

Control

Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language

https://socraticmodels.github.io

We have some reason to believe that

"the structure of language is the structure of generalization"

To understand language is to understand generalization

https://evjang.com/2021/12/17/lang-generalization.html

Sapir–Whorf hypothesis

Discover new modes of collaboration

Towards grounding everything in language

Language

Perception

Planning

Control

Humans

A path not just for general robots, but for human-centered robots!

go/languagein-actionsout

Thank you!

Pete Florence

Tom Funkhouser

Adrian Wong

Kaylee Burns

Jake Varley

Erwin Coumans

Alberto Rodriguez

Johnny Lee

Vikas Sindhwani

Ken Goldberg

Stefan Welker

Corey Lynch

Laura Downs

Jonathan Tompson

Shuran Song

Vincent Vanhoucke

Kevin Zakka

Michael Ryoo

Travis Armstrong

Maria Attarian

Jonathan Chien

Brian Ichter

Krzysztof Choromanski

Phillip Isola

Tsung-Yi Lin

Ayzaan Wahid

Igor Mordatch

Oscar Ramirez

Federico Tombari

Daniel Seita

Lin Yen-Chen

Adi Ganapathi