From Words to Actions

Andy Zeng

RSS 2023 Workshop on Interdisciplinary Exploration of Generalizable Manipulation Policy Learning: Paradigms and Debates

Manipulation

TossingBot, Transporter Nets, Implicit Behavior Cloning

Interact with the physical world to learn bottom-up commonsense, i.e. "how the world works", with machine learning from pixels

On the quest for shared priors

Interact with the physical world to learn bottom-up commonsense, i.e. "how the world works", with machine learning

# Tasks

Data

MARS Reach arm farm '21

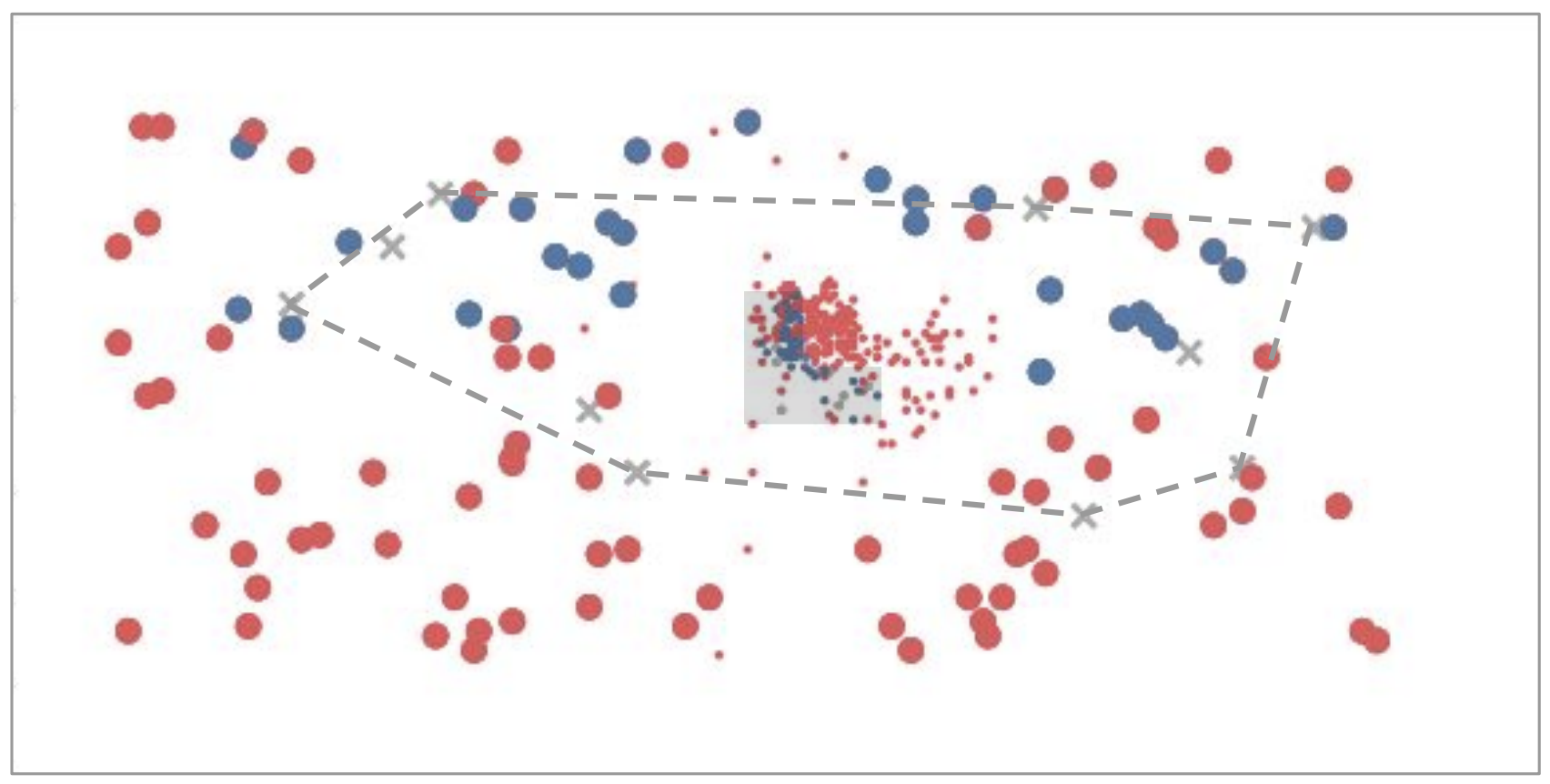

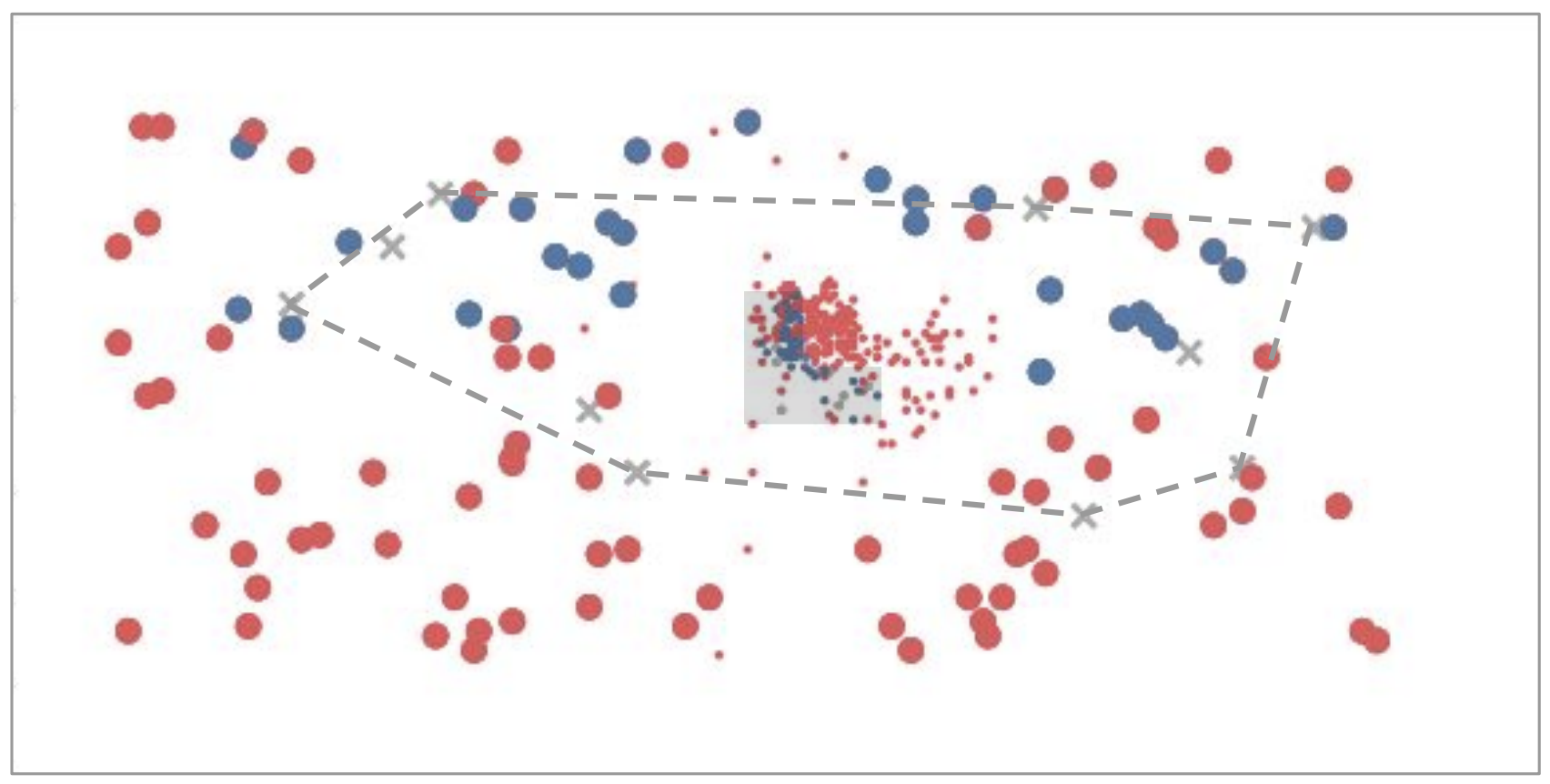

Expectation

Reality

Complexity in environment, embodiment, contact, etc.

Machine learning is a box

Interpolation

Extrapolation

adapted from Tomás Lozano-Pérez

Internet

Meanwhile in NLP...

Large Language Models

Large Language Models?

Books

Recipes

Code

News

Articles

Dialogue

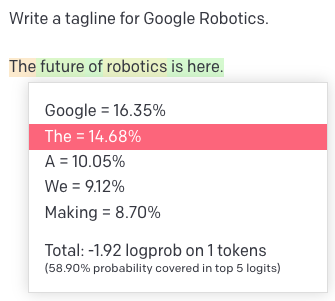

Demo

Quick Primer on Language Models

Tokens (inputs & outputs)

Transformers (models)

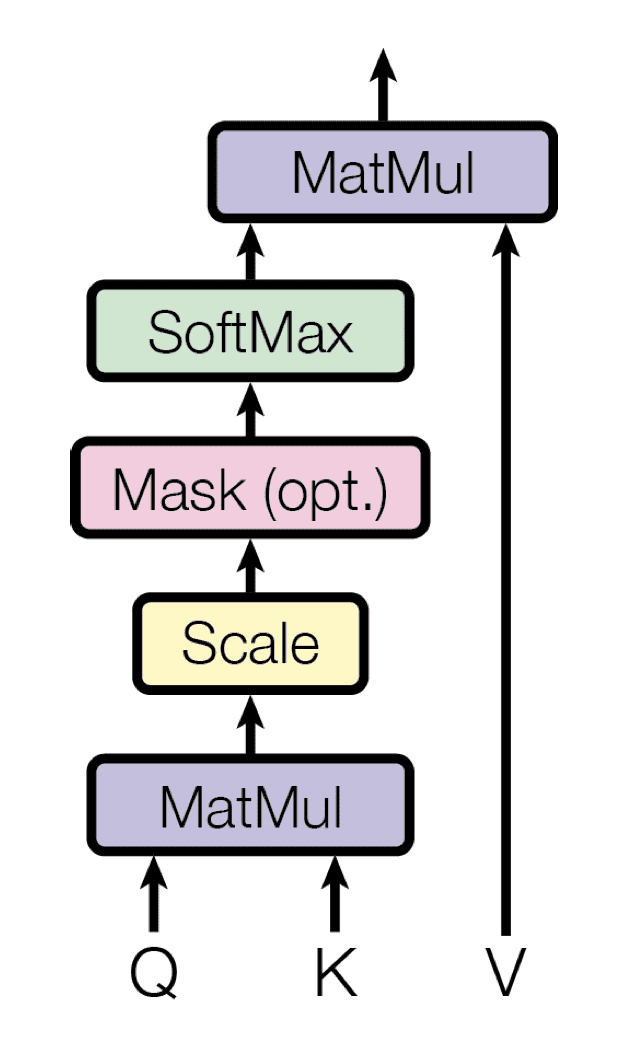

Self-Attention

Pieces of words (BPE encoding)

per word: big, bigger, biggest, small, smaller, smallest

per token: big, er, est, small
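The word-versus-token split above can be sketched in a few lines. This is a minimal illustration, not a real BPE implementation: the vocabulary is the four pieces from the slide, segmentation is a greedy longest match, and the single-character fallback stands in for the base characters a real BPE vocabulary would contain. The names `VOCAB` and `segment` are hypothetical.

```python
# Hypothetical subword vocabulary taken from the slide's "per token" list.
VOCAB = {"big", "small", "er", "est"}


def segment(word, vocab=VOCAB):
    """Greedily split a word into the longest known pieces, left to right."""
    tokens, i = [], 0
    while i < len(word):
        # Try the longest candidate first, then shrink.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                break
        else:
            # No known piece starts here: fall back to a single character.
            j = i + 1
        tokens.append(word[i:j])
        i = j
    return tokens


for w in ["small", "smaller", "smallest", "bigger"]:
    print(w, "->", segment(w))
```

With the literal spelling, "bigger" leaves a stray "g" between "big" and "er"; the slide's four-token list is the idealized version of the same idea, where a handful of pieces covers many surface words.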

Attention Is All You Need, NeurIPS 2017

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, Illia Polosukhin
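As a rough sketch of the self-attention step named in the primer: each position scores itself against the earlier positions, turns the scores into weights with a softmax, and returns a weighted mix of the values. The version below is deliberately simplified and is an assumption-laden illustration: it takes queries, keys, and values directly (the learned projections are omitted), uses a single head, and applies the causal mask used by autoregressive language models. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    queries, keys, values: lists of equal length, one vector per token.
    Position t may only attend to positions 0..t.
    """
    d = len(keys[0])
    outputs = []
    for t, q in enumerate(queries):
        scores = [
            sum(a * b for a, b in zip(q, keys[s])) / math.sqrt(d)
            for s in range(t + 1)
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * values[s][c] for s, w in enumerate(weights))
            for c in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


x = [[1.0, 0.0], [0.0, 1.0]]
print(causal_self_attention(x, x, x))
```

The first token can only see itself, so its output is its own value vector; the second token blends both, with more weight on the key it matches best.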

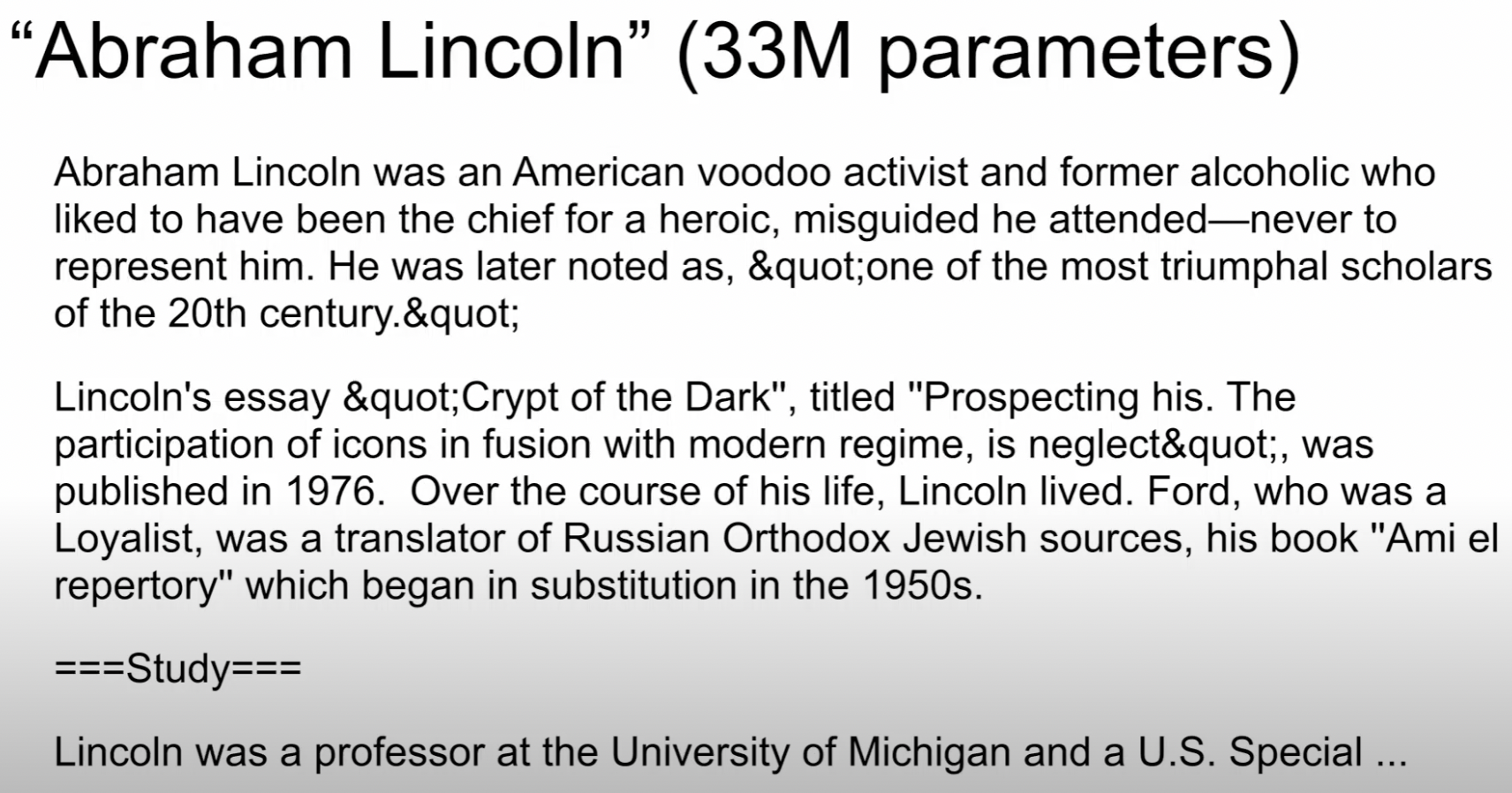

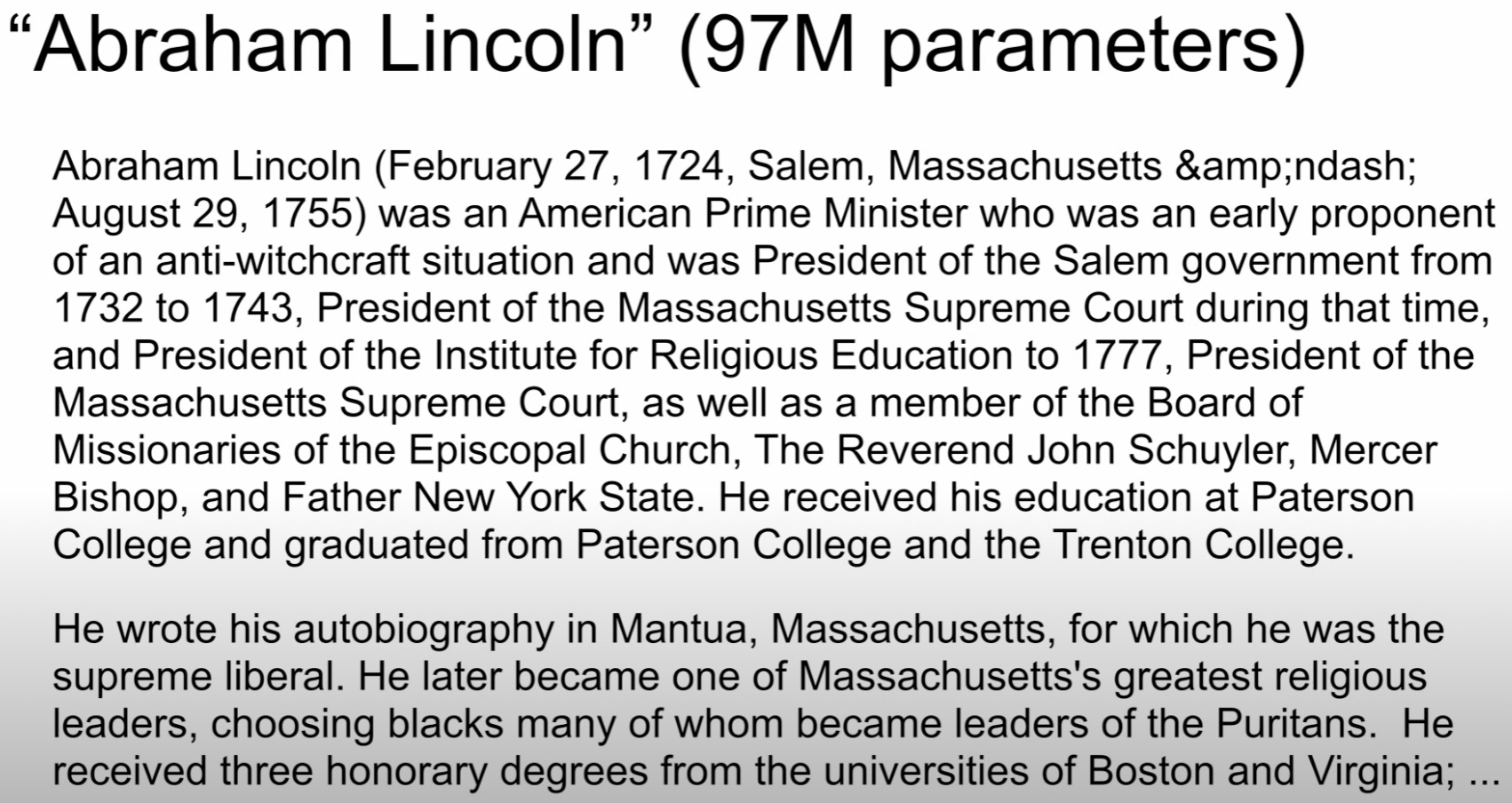

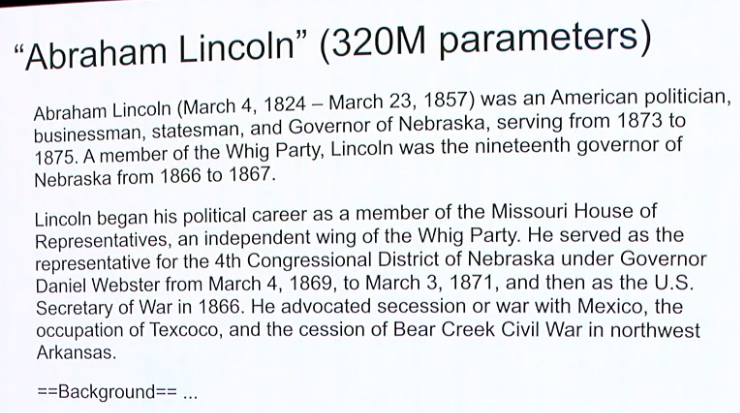

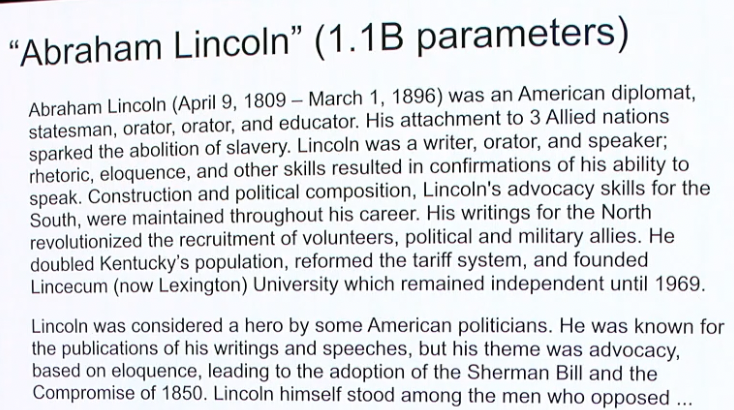

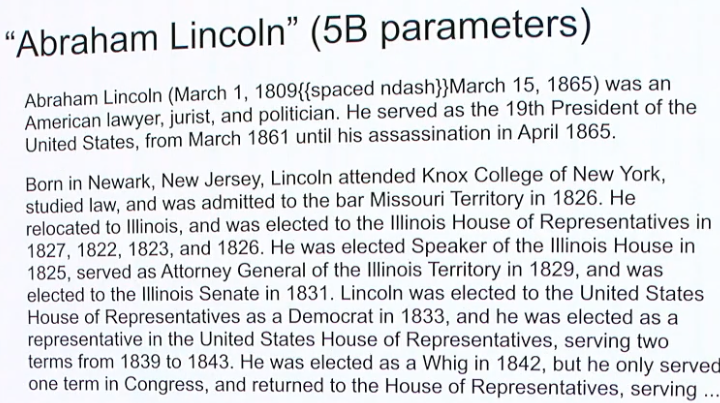

Bigger is Better

Neural Language Models: Bigger is Better, WeCNLP 2018

Noam Shazeer

Robot Planning

Visual Commonsense

Robot Programming

Socratic Models

Code as Policies

PaLM-SayCan

Demo

Somewhere in the space of interpolation

Lives

Socratic Models & PaLM-SayCan

One way to use Foundation Models with "language as middleware"

Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language

https://socraticmodels.github.io

Visual Language Model

CLIP, ALIGN, LiT, SimVLM, ViLD, MDETR

Human input (task)

Large Language Models for High-Level Planning

Language-conditioned Policies

Do As I Can, Not As I Say: Grounding Language in Robotic Affordances say-can.github.io

Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents wenlong.page/language-planner
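The SayCan idea of grounding a language model's plan in affordances can be sketched as a product of two scores per candidate skill: how useful the language model thinks the skill is for the instruction, and how likely the robot is to succeed at it from the current state. The skill names and all numbers below are made up for illustration, and `select_skill` is a hypothetical helper, not the project's code.

```python
def select_skill(llm_scores, affordances):
    """Pick the skill with the highest (LLM score x affordance) product.

    llm_scores:  skill -> probability the skill helps with the instruction
    affordances: skill -> estimated probability the skill succeeds right now
    """
    combined = {
        skill: llm_scores[skill] * affordances.get(skill, 0.0)
        for skill in llm_scores
    }
    best = max(combined, key=combined.get)
    return best, combined


# Illustrative, made-up scores for an instruction like "bring me a snack".
llm_scores = {
    "pick up the sponge": 0.5,
    "pick up the apple": 0.3,
    "go to the table": 0.2,
}
affordances = {
    "pick up the sponge": 0.1,  # no sponge in view
    "pick up the apple": 0.8,
    "go to the table": 0.9,
}

best, combined = select_skill(llm_scores, affordances)
print(best)
```

In this toy case the language model alone would prefer the sponge, but the low affordance score vetoes it and the apple is selected instead.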

Socratic Models: Robot Pick-and-Place Demo

For each step, predict pick & place:

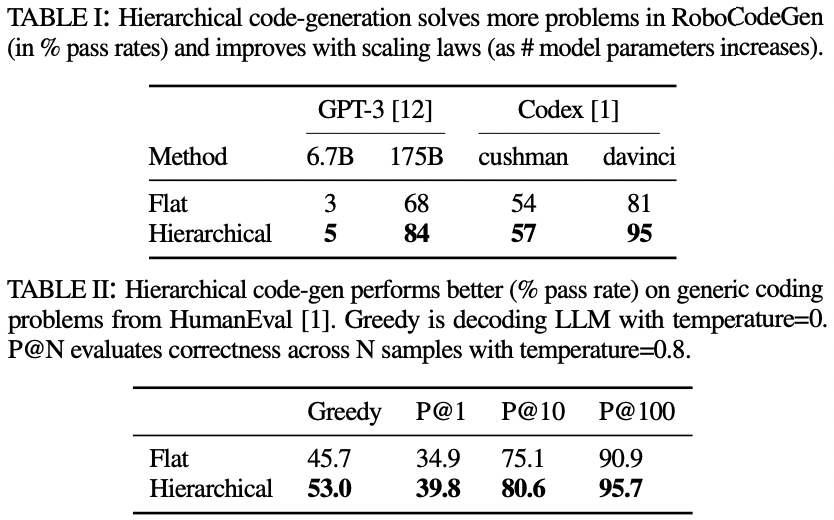
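One way to picture "language as middleware" in the pick-and-place demo: a visual language model's detections are written into a text prompt, a language model proposes steps in plain language, and each step is parsed back into a pick target and a place target for a language-conditioned policy. The sketch below assumes a prompt layout and a step phrasing ("Pick the X and place it on the Y.") purely for illustration; `build_prompt` and `parse_step` are hypothetical names, and the model calls themselves are left out.

```python
import re


def build_prompt(detected_objects, task):
    """Turn detections plus the human task into a text prompt (assumed layout)."""
    return f"objects = [{', '.join(detected_objects)}]\n# {task}\nStep 1."


# Assumed step phrasing; a real system would have to handle more variation.
STEP_PATTERN = r"pick the (.+?) and place it (?:on|in) the (.+?)\.?"


def parse_step(step_text):
    """Extract (pick, place) object names from one planned step, or None."""
    match = re.fullmatch(STEP_PATTERN, step_text.strip(), flags=re.IGNORECASE)
    if match is None:
        return None
    return match.group(1), match.group(2)


prompt = build_prompt(["blue block", "red bowl"], "put the blocks in the bowls")
print(prompt)
print(parse_step("Pick the blue block and place it on the red bowl."))
```

The only interface between the components is text, which is what lets separately trained models be swapped in without joint finetuning.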

Language models can write code

Code as a medium to express more complex plans

Jacky Liang, Wenlong Huang, Fei Xia, Peng Xu, Karol Hausman, Brian Ichter, Pete Florence, Andy Zeng

code-as-policies.github.io

Code as Policies: Language Model Programs for Embodied Control
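A minimal sketch of the "language model programs" idea: the model's output is a short Python program that calls perception and control primitives, and the robot stack executes it. Everything here is a stand-in: the scene, the two primitives `get_obj_pos` and `put_first_on_second`, and the hard-coded `GENERATED_CODE` string (which takes the place of an actual language model response) are assumptions for illustration.

```python
# Hypothetical scene: object name -> (x, y) position on the table.
POSITIONS = {"red block": (0.1, 0.2), "blue bowl": (0.4, 0.5)}
actions = []  # log of executed pick-and-place commands


def get_obj_pos(name):
    """Perception primitive (stub): look up an object's position."""
    return POSITIONS[name]


def put_first_on_second(pick_pos, place_pos):
    """Control primitive (stub): record the motion instead of moving a robot."""
    actions.append((pick_pos, place_pos))


# Stand-in for code a language model might write for
# "put the red block in the blue bowl".
GENERATED_CODE = """
pick = get_obj_pos("red block")
place = get_obj_pos("blue bowl")
put_first_on_second(pick, place)
"""


def run_policy(code):
    """Execute generated code with only the robot API in scope."""
    api = {
        "get_obj_pos": get_obj_pos,
        "put_first_on_second": put_first_on_second,
    }
    # Emptying __builtins__ narrows what the code can reach; it is NOT a sandbox.
    exec(code, {"__builtins__": {}}, api)


run_policy(GENERATED_CODE)
print(actions)
```

Exposing a small, named API keeps the generated program short and lets the same prompt pattern be reused across tasks; real deployments would need proper isolation and safety checks before executing model-written code.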

Live Demo

In-context learning is supervised meta learning

Trained with autoregressive models via "packing"

Better with non-recurrent autoregressive sequence models

Transformers at a certain scale can generalize to unseen tasks

"Data Distributional Properties Drive Emergent In-Context Learning in Transformers" Chan et al., NeurIPS '22

"General-Purpose In-Context Learning by Meta-Learning Transformers" Kirsch et al., NeurIPS '22

"What Can Transformers Learn In-Context? A Case Study of Simple Function Classes" Garg et al., '22
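One reading of "packing", assumed here, is sequence packing: tokenized examples are concatenated with a separator and cut into fixed-length training sequences, so a single sequence often holds several examples and later ones are predicted with earlier ones in context. The sketch below uses made-up token IDs and a hypothetical `pack` helper; it drops any trailing partial chunk for simplicity.

```python
def pack(documents, seq_len, eos=0):
    """Concatenate token lists with an EOS separator and cut fixed-length chunks.

    documents: list of token-ID lists
    seq_len:   length of each packed training sequence
    eos:       separator token ID (assumed to be 0 here)
    """
    stream = []
    for doc in documents:
        stream.extend(doc)
        stream.append(eos)
    # Keep only full-length chunks; the remainder is discarded in this sketch.
    return [
        stream[i:i + seq_len]
        for i in range(0, len(stream) - seq_len + 1, seq_len)
    ]


docs = [[1, 2, 3], [4, 5], [6]]
packed = pack(docs, seq_len=4)
print(packed)
```

Here the second packed sequence spans two examples, which is the kind of setting in which next-token prediction can reward using earlier examples as context.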

use NumPy, SciPy code...

Linguistic Patterns

PaLM-SayCan, Socratic Models, Inner Monologue, Code as Policies

There may be more we can extract from language models

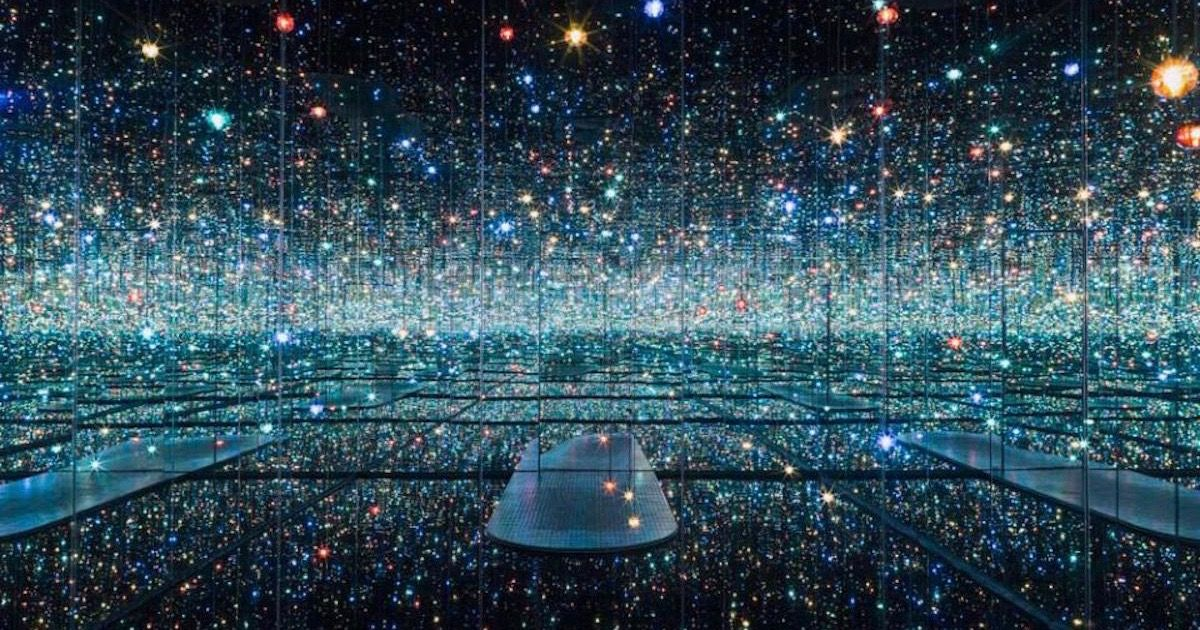

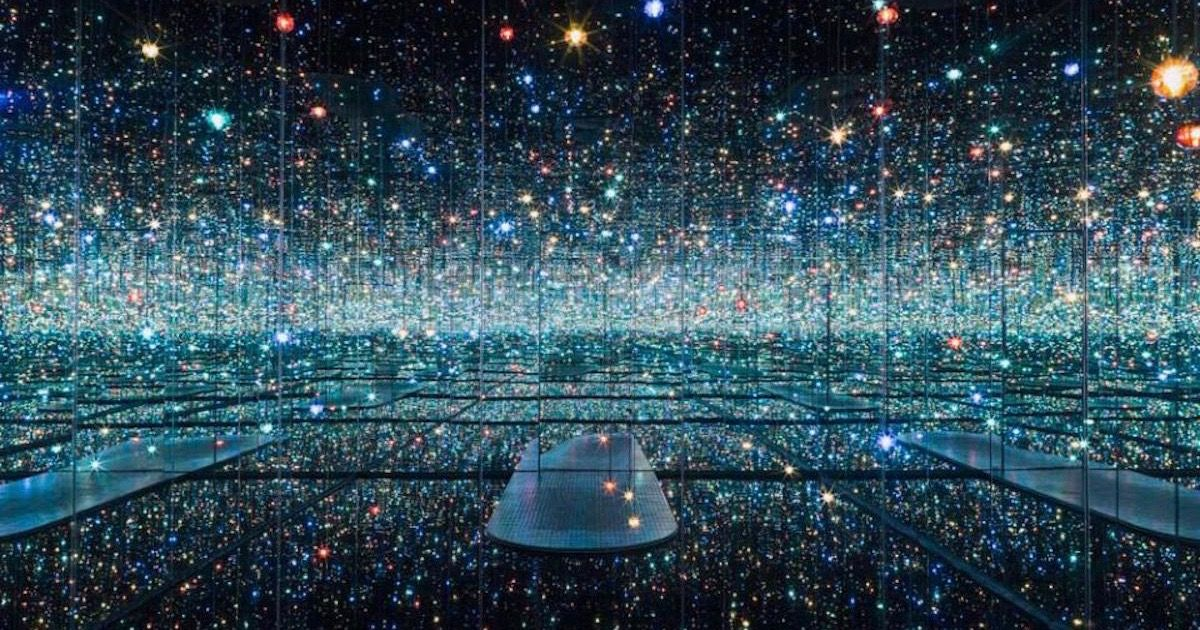

Linguistic Patterns

Non-Linguistic Patterns

General Pattern Machines?

Research topic wishlist

- Why/where/when do these patterns emerge from in-context learning?

- Is this unique to the Transformer architecture?

- Do patterns emerge from pretraining in other domains?

- Can this be a mechanism for positive transfer between modalities?

- Which patterns can't be captured with in-context training on Internet data?

- How can we leverage these capabilities more in robotics?

looking for help from the community

Thank you!

Pete Florence

Johnny Lee

Vikas Sindhwani

Vincent Vanhoucke

Kevin Zakka

Michael Ryoo

Maria Attarian

Brian Ichter

Krzysztof Choromanski

Federico Tombari

Jacky Liang

Aveek Purohit

Wenlong Huang

Fei Xia

Peng Xu

Karol Hausman

Suvir Mirchandani

Dorsa Sadigh

and many others!