Moving Beyond the Limits of LLMs by Understanding Their Inner Workings, Multi-Agent Systems & Complex Reasoning

Armağan Amcalar

Dubai AI Week

April 22, 2025

WHO AM I?

AUTHORED ON GITHUB

Built with AI

dashersw

Ground Rules:

Interrupt me! Raise your hand, ask questions.

No question is silly – others likely have it too.

Let's discuss.

Agenda:

AI/AGI Ground Rules & Industry Path

Mathematical Foundations of LLMs

Human Learning, Society & ML Origins

AGI: Emergence, Not a Leap

LLM Limits & The Path via Multi-Agent Systems

Prompting, Reasoning & Vector Search Deep Dive

How do humans really learn?

How did human society evolve and self-organize?

Where does Machine Learning actually come from? (Hint: Not just stats)

Why is collaboration our superpower?

Intelligence

Adaptation to Environment

Reasoning and Problem-Solving

Learning and Memory

Skilled Use of Knowledge

AUTONOMOUS

Acting independently or without human intervention

AGENT

An entity that perceives its environment, makes decisions, and takes actions

COLLABORATION

Multiple entities working together to achieve a common goal

Multi-agent systems

Operate independently

Interact with their environment

Communicate and cooperate with other agents

Learn and adapt based on their experiences

CAN YOU, OR HOW DO YOU, imitate intelligence with next-token prediction?

LLM HYPE VS REALITY

The Hype: GPT-5 (or the next big model) will be AGI! Superintelligence is imminent!

The Reality: LLMs predict the next word (or token).

- Complex statistical models.

- Fundamentally deterministic: the same input always yields the same token probabilities; randomness is added only when sampling from them.

- Giant mathematical function (multiplications & additions).

- No inherent intelligence, state, reasoning, or true learning mechanism beyond next-token prediction.

The slow typing in ChatGPT? That's how it works, not a UX feature!
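
The token-by-token behavior described above can be sketched with a toy model. This is a minimal illustration, not how a real LLM is implemented: the `NEXT_TOKEN_PROBS` table and its numbers are invented, and a real model conditions on the whole context rather than only the previous token.

```python
# Toy "model": for each token, a fixed probability table over the next token.
# The tokens and probabilities are invented for illustration only.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.3, "ball": 0.2},
    "a": {"cat": 0.4, "dog": 0.6},
    "cat": {"sat": 0.7, "<end>": 0.3},
    "dog": {"sat": 0.2, "ran": 0.8},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
    "ball": {"<end>": 1.0},
}


def generate_greedy(max_tokens: int = 10) -> list[str]:
    """Generate one token at a time, always taking the most probable one."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(probs, key=probs.get)  # no sampling, so no randomness
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the "<start>" marker


print(generate_greedy())  # ['the', 'cat', 'sat']
```

Each token needs its own pass through the table (or, in a real model, through the network), which is why output appears one token at a time. With greedy selection the same input always produces the same output.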

HOW DOES ONE CATCH A BALL?

Visual Processing

Occipital lobe processes visual information.

Movement Planning

Parietal lobe and premotor cortex plan movement.

Execution of Movement

Primary motor cortex sends signals to muscles.

Coordination and Balance

Cerebellum ensures smooth movement.

Sensory Feedback

Somatosensory cortex adjusts grip.

https://training.seer.cancer.gov/module_anatomy/unit5_3_nerve_org1_cns.html

vectorized by Jkwchui, CC BY-SA 3.0, via Wikimedia Commons

Why LLMs Alone Fall Short of AGI

- Intelligence requires distributed, specialized functions (like the human brain).

- LLMs lack mechanisms for:

- True State: Remembering facts across sessions (beyond context window/hacky memory).

- Adaptation: Fundamentally changing based on experience (requires retraining/RLHF).

- Complex Reasoning: Beyond pattern matching in text.

- Planning & Abstraction: Not inherent in next-token prediction.

- Perception & Action: No connection to real-world sensors or actuators (unless specifically added).

EMERGENCE,

Emergent Behavior and Complex Systems

Atoms form molecules, molecules form cells, cells form organisms; each level exhibits new, emergent properties.

GESTALT

The whole is greater than the sum of its parts

GESTALT

individual agents working together can achieve far more than they could separately.

coordinated actions lead to emergent behavior and intelligence.

Multi-agent systems are networks of LLMs working together, each potentially specializing in different tasks or aspects of a problem

Why?

- Division of cognitive labor

- Specialized expertise

- Parallel processing

- Enhanced reliability through cross-checking

Some application areas

- Complex problem solving

- Scientific research assistance

- Business process automation

- Creative collaboration

For autonomous behavior, individual agents need to reason better.

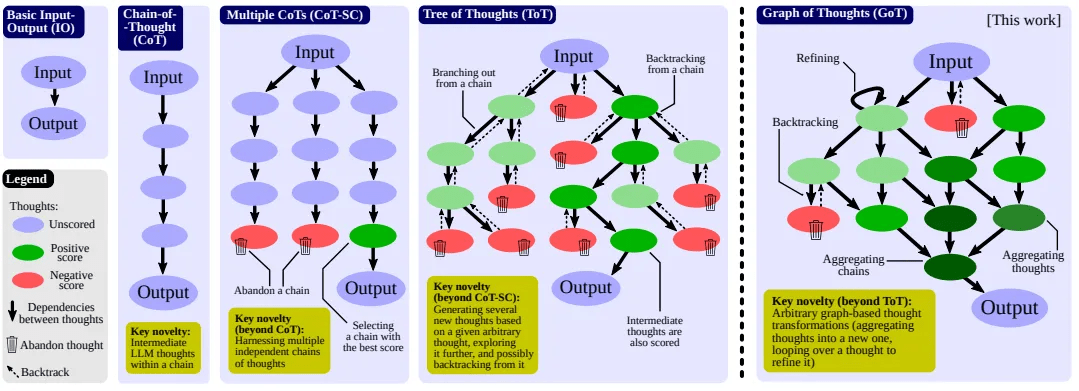

Popular reasoning techniques

Chain of thought

Tree of thought

Graph of thought

Improving agent capabilities

Memory systems

Short-term memory

Long-term memory

Working memory

Self-Reflection

Self-feedback loop for error analysis

Performance evaluation by confidence scoring

Strategy adjustment
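
The self-reflection steps above can be sketched as a small loop. This is a minimal sketch under stated assumptions: `generate` and `critique` are hypothetical callables standing in for LLM calls, the confidence threshold is arbitrary, and the toy stubs exist only to make the example runnable.

```python
from typing import Callable, Optional

# Hypothetical signatures: generate(task, feedback) -> answer,
# critique(task, answer) -> (confidence between 0 and 1, feedback text).
Generate = Callable[[str, Optional[str]], str]
Critique = Callable[[str, str], tuple[float, str]]


def reflect_and_revise(task: str, generate: Generate, critique: Critique,
                       threshold: float = 0.8, max_rounds: int = 3):
    """Draft an answer, score it, and revise until confidence is high enough."""
    answer = generate(task, None)
    confidence, feedback = critique(task, answer)   # error analysis + scoring
    rounds = 0
    while confidence < threshold and rounds < max_rounds:
        answer = generate(task, feedback)           # strategy adjustment
        confidence, feedback = critique(task, answer)
        rounds += 1
    return answer, confidence, rounds


# Toy stubs (assumptions): the first draft is wrong, the revision is right.
def toy_generate(task: str, feedback: Optional[str]) -> str:
    return "2 + 2 = 5" if feedback is None else "2 + 2 = 4"


def toy_critique(task: str, answer: str) -> tuple[float, str]:
    if answer.endswith("= 4"):
        return 0.95, ""
    return 0.2, "Check the arithmetic."


print(reflect_and_revise("What is 2 + 2?", toy_generate, toy_critique))
# ('2 + 2 = 4', 0.95, 1)
```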

Finally... Multi-agent Systems

DELEGATION

Task planning and decomposition

Agent selection

Progress monitoring

Result integration

Coordination patterns

Central coordinator (e.g., a project manager)

Peer-to-peer network

Hierarchical network

Things to consider

Retry after errors

Fallback strategies

Exception reporting
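
A central coordinator that handles the concerns listed above might look roughly like this. It is a sketch, not a reference design: agents are modeled as plain functions, `AgentError`, `flaky_expert`, and `generalist` are hypothetical names, and task decomposition is assumed to have already produced the list of subtasks.

```python
from typing import Callable

Agent = Callable[[str], str]  # assumption: an agent maps a task to a result


class AgentError(Exception):
    """Raised by an agent that cannot complete its task."""


def run_with_retry(agent: Agent, task: str, max_attempts: int = 2) -> str:
    """Retry after errors, then give up and re-raise."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return agent(task)
        except AgentError as error:
            last_error = error
    raise AgentError(f"gave up after {max_attempts} attempts: {last_error}") from last_error


def coordinate(subtasks: list[str], primary: Agent, fallback: Agent):
    """Delegate each subtask, fall back on failure, and report exceptions."""
    results, exceptions = [], []
    for task in subtasks:
        try:
            results.append(run_with_retry(primary, task))
        except AgentError as error:
            exceptions.append(f"{task}: {error}")   # exception reporting
            results.append(fallback(task))          # fallback strategy
    return results, exceptions


# Toy agents (assumptions) so the sketch runs without any LLM.
def flaky_expert(task: str) -> str:
    if "legal" in task:
        raise AgentError("outside my domain")
    return f"expert answer for {task}"


def generalist(task: str) -> str:
    return f"generalist answer for {task}"


results, exceptions = coordinate(["summarize report", "legal review"],
                                 flaky_expert, generalist)
print(results)
print(exceptions)
```

The coordinator keeps going when one agent fails, so a single failure degrades one result instead of aborting the whole plan.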

Types of Agents

- Expert agents (domain-specific knowledge)

- Critics (validation and verification)

- Coordinators (task management)

- Memory agents (information management)

More advanced topics

Memory, insights, habits

What-if questions

What if English isn't the best language to prompt these agents?

Beyond English: The Language of Prompts

- Problem: Natural language (like English) is ambiguous. LLMs struggle with implicit meaning. ("Shower gel" is a good example).

- Better: Languages with more explicit structure and meaning encoded in tokens.

  - Agglutinative languages (Turkish, Korean): suffixes add clear meaning.

  - Programming / Markup Languages: JSON, Mermaid, PlantUML.

Another example

Legolas is an elven prince of the Woodland Realm, a master of archery and close combat, renowned for his agility, keen senses, and unwavering loyalty as a member of the Fellowship of the Ring.

{
  "name": "Legolas",
  "race": "Elf",
  "title": "Prince of the Woodland Realm",
  "skills": ["archery", "agility", "keen senses", "combat"],
  "affiliations": ["Fellowship of the Ring"],
  "weapon": "Bow and arrow",
  "notable_traits": ["immortal", "sharp eyesight", "swift and silent"]
}

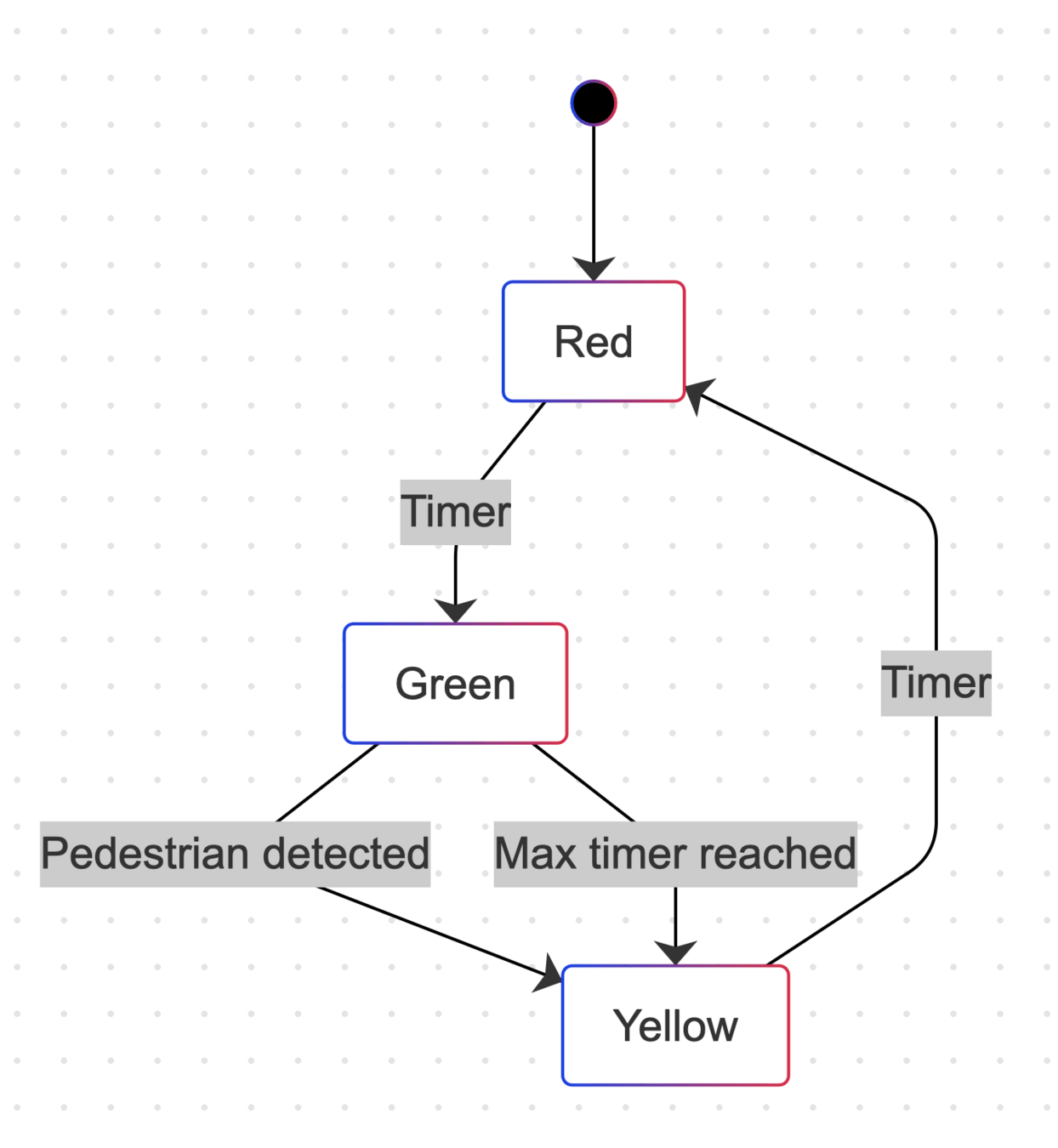

Another example

The traffic light cycles through Red → Green → Yellow → Red, but with a twist during the Green state. If a pedestrian presses the button (or is detected), the light will switch to Yellow earlier to allow crossing. If no one is there, it stays Green for the full duration.

stateDiagram-v2
    [*] --> Red
    Red --> Green: Timer
    Green --> Yellow: Pedestrian detected
    Green --> Yellow: Max timer reached
    Yellow --> Red: Timer
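
One way to see why the diagram is less ambiguous than the paragraph: it translates line by line into a transition table that a program can execute. The sketch below is an illustration only; the event identifiers (`timer`, `pedestrian_detected`, `max_timer_reached`) are assumed names derived from the diagram labels.

```python
# Each (state, event) pair maps to exactly one next state, mirroring the diagram.
TRANSITIONS = {
    ("Red", "timer"): "Green",
    ("Green", "pedestrian_detected"): "Yellow",
    ("Green", "max_timer_reached"): "Yellow",
    ("Yellow", "timer"): "Red",
}


def next_state(state: str, event: str) -> str:
    """Return the next state; events with no matching transition are ignored."""
    return TRANSITIONS.get((state, event), state)


def run(events: list[str], state: str = "Red") -> list[str]:
    """Apply events in order and return every state visited."""
    history = [state]
    for event in events:
        state = next_state(state, event)
        history.append(state)
    return history


print(run(["timer", "pedestrian_detected", "timer"]))
# ['Red', 'Green', 'Yellow', 'Red']
```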

Mathematical Foundations: Vectors & Embeddings

- How do LLMs understand words? They don't. They use math.

- Embeddings: Representing words/tokens/text as numerical vectors in high-dimensional space (e.g., 1536 dimensions).

- Each dimension captures some aspect of meaning (abstractly).

- Vectors: Have magnitude (length) and direction.

- Goal: Similar concepts/words have similar vectors (close in the space).

- Allows "Word Arithmetic":

  - vector(King) - vector(Man) + vector(Woman) ≈ vector(Queen)
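
The word arithmetic above can be reproduced with hand-made vectors. This is a toy sketch: the three dimensions (roughly "royalty", "maleness", "femaleness") and every number are invented for illustration, whereas real embeddings are learned and have hundreds or thousands of dimensions.

```python
import math

# Invented 3-dimensional "embeddings": [royalty, maleness, femaleness].
VECTORS = {
    "king": [0.9, 0.9, 0.1],
    "man": [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "queen": [0.9, 0.1, 0.9],
    "apple": [0.0, 0.2, 0.2],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def analogy(a: str, b: str, c: str) -> str:
    """Return the word whose vector is closest to vector(a) - vector(b) + vector(c)."""
    target = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [word for word in VECTORS if word not in (a, b, c)]
    return max(candidates, key=lambda word: cosine_similarity(target, VECTORS[word]))


print(analogy("king", "man", "woman"))  # queen
```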

How LLMs Predict & How Vector Search Works

Prediction:

- The model processes the entire input sequence and condenses it into a single vector at the sequence's end point.

- From that vector it computes a probability for every token in its vocabulary, and the next token is picked from the most probable candidates.

- Parameters (top-p, temperature) control the "search radius" for the next token, i.e. how many candidates stay in play (determinism vs. randomness).

Vector Search:

- Store embeddings of documents/profiles in a database.

- Embed the search query into a vector.

- Find vectors in the database closest (most similar) to the query vector.

- Similarity metrics: Euclidean distance, Cosine similarity (angle).

- Result: A ranked list based on similarity, not exact match.
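
The vector search steps above can be sketched end to end in a few lines. This is a toy under stated assumptions: `embed` is a hypothetical bag-of-words counter over a tiny fixed vocabulary standing in for a real embedding model, and a Python list stands in for the vector database.

```python
import math

# Tiny fixed vocabulary; each term is one dimension of the toy embedding.
TERMS = ["python", "backend", "design", "figma", "sales", "api"]


def embed(text: str) -> list[float]:
    """Toy embedding (assumption): count how often each known term appears."""
    words = text.lower().split()
    return [float(words.count(term)) for term in TERMS]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0   # zero vectors match nothing


DOCUMENTS = [
    "python backend engineer building api services",
    "product design lead working in figma",
    "enterprise sales manager",
]

# Step 1: store an embedding for every document.
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]


def search(query: str, top_k: int = 2) -> list[tuple[float, str]]:
    """Steps 2 and 3: embed the query, then rank documents by similarity."""
    query_vector = embed(query)
    scored = [(cosine_similarity(query_vector, vector), doc) for doc, vector in INDEX]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


for score, doc in search("backend api developer"):
    print(f"{score:.3f}  {doc}")
```

The query shares no exact phrase with the top document, yet it ranks first because their vectors point in a similar direction.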

The Resolution Problem & Neol's Approach

- Problem: Embedding long text into a fixed-size vector loses resolution/nuance. Meaning gets compressed and blurred. (Long text vs. short text example).

- RAG (Retrieval Augmented Generation): Standard approach. Chunk documents, find relevant chunks via vector search, feed chunks to LLM to answer query. Limitations: Chunking is arbitrary, context loss, poor for summarization/holistic understanding.

- Neol's Approach (GARAG - Generation Augmented RAG):

  - Generate structured data (Dimensions: industry, skills, role, etc.) from both query and profiles using LLMs.

  - Augment the raw data with this meaningful, structured information.

  - Retrieve by performing vector search within these aligned dimensions.

- Result: More accurate, context-aware search that leverages LLM intelligence before the search, mitigating resolution loss.
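
The source describes this approach only at the level of the steps above, so the following is a rough sketch of the idea rather than Neol's implementation. Hand-written dictionaries stand in for the LLM-generated dimensions, a bag-of-words `embed` stands in for a real embedding model, and averaging the per-dimension scores is an assumed way to combine them.

```python
import math
from collections import Counter

DIMENSIONS = ("industry", "skills", "role")

# Assumption: in a real pipeline an LLM would generate these structured
# dimensions from raw profile text and from the free-text query.
PROFILES = {
    "alice": {"industry": "fintech payments",
              "skills": "python data pipelines",
              "role": "data engineer"},
    "bob": {"industry": "fintech banking",
            "skills": "figma user research",
            "role": "product designer"},
}
QUERY = {"industry": "fintech", "skills": "python pipelines", "role": "engineer"}


def embed(text: str) -> Counter:
    """Toy embedding (assumption): word counts as a sparse vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def score(query_dims: dict, profile_dims: dict) -> float:
    """Compare like with like: one similarity per dimension, then average."""
    sims = [cosine(embed(query_dims[d]), embed(profile_dims[d])) for d in DIMENSIONS]
    return sum(sims) / len(sims)


ranked = sorted(PROFILES, key=lambda name: score(QUERY, PROFILES[name]), reverse=True)
print(ranked)  # ['alice', 'bob']
```

Because each dimension is a short, focused text, each comparison works on a small vector problem instead of one long document squeezed into a single embedding.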

Key Takeaways

- AGI will likely be an emergent property of complex systems, not a single algorithm leap.

- LLMs are powerful tools (next-token predictors), but have fundamental limitations for AGI.

- Multi-Agent Systems (MAS) offer a promising architecture for building more capable, adaptable AI.

- Understanding how LLMs work (vectors, probability, limitations) is key to unlocking their true potential.

- Effective AI requires structure: specialized agents, codified reasoning (steps, flowcharts), smart prompting (language matters!).

- Generation Augmented Retrieval (GAR) can overcome key limitations of standard vector search.

- True innovation often lies in fusing domain expertise with deep technical understanding.

THANK YOU!

Armağan Amcalar

dashersw