The Impact of Memory Allocators on Performance

whoami

- AI on Embedded Systems, Web dev, ...

- Got into Rust 2 years ago with Advent of Code (AoC)

- ODMantic (a Python ORM for MongoDB)

Arthur Pastel

Software Engineer

🇫🇷 🥖 🥐

@art049 on

Building

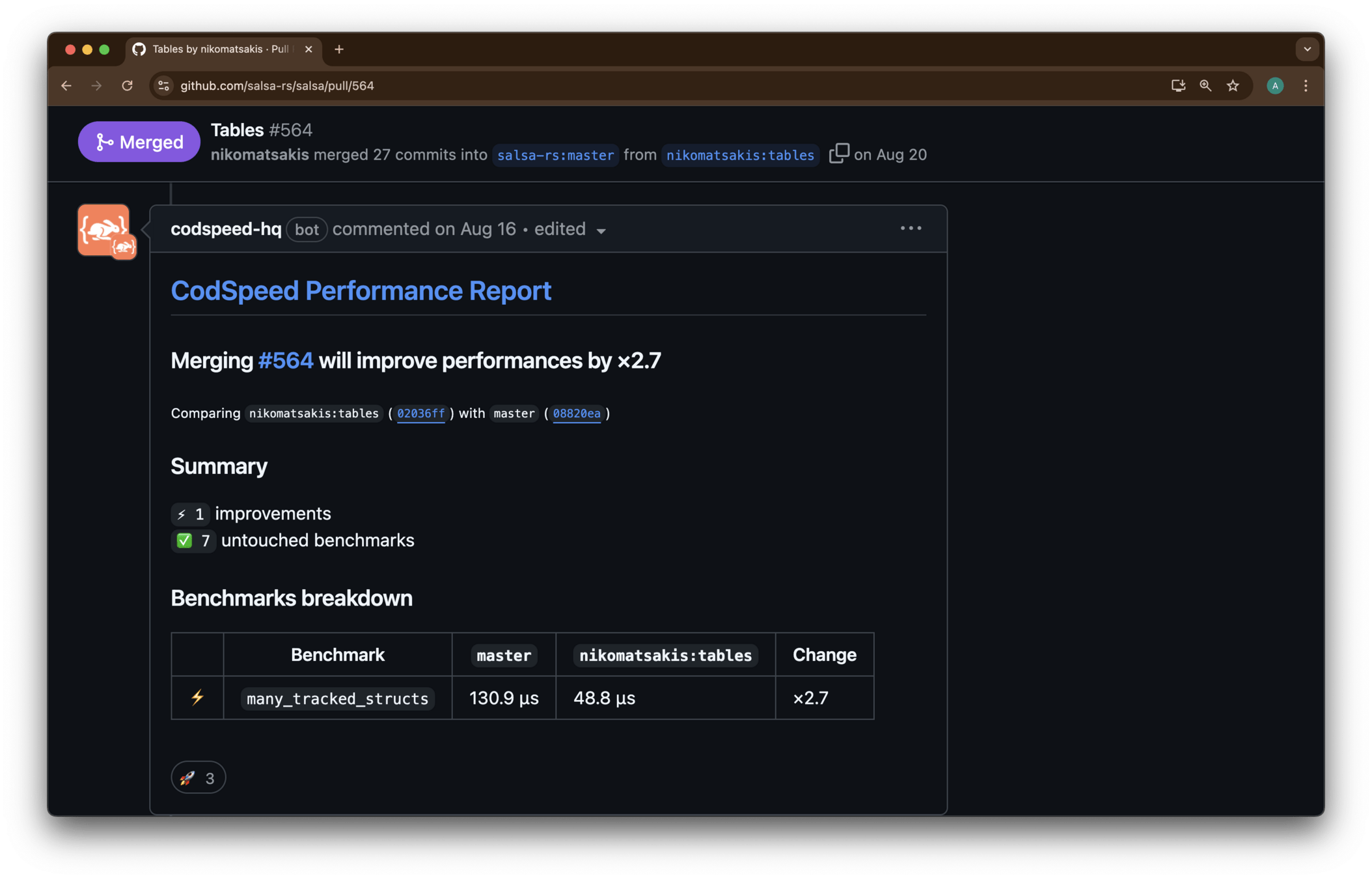

- Consistent performance measurement

- Integrated with existing benchmarking libraries

- Runs on VMs (even GitHub runners)

- Performance reports in PRs

Used by Rust projects:

Why do we need to allocate memory again?

A dummy allocator

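use std::io::{Read, Write};
use std::ptr::addr_of;

const POOL_SIZE: usize = 32;

static mut MEMORY_POOL: [u8; POOL_SIZE] = [0; POOL_SIZE]; // Statically allocated buffer

fn read_line_to_static_memory_pool() -> &'static str {
    let mut buffer = [0; 1]; // Temporary buffer to read one byte at a time
    let mut i = 0;
    // Stop once the pool is full: longer input is truncated instead of overflowing
    while i < POOL_SIZE {
        let n = std::io::stdin().read(&mut buffer).unwrap();
        if n == 0 || buffer[0] == b'\n' {
            break; // End of input or end of line
        }
        // Single-threaded demo: nothing else touches MEMORY_POOL concurrently
        unsafe { MEMORY_POOL[i] = buffer[0] };
        i += 1;
    }
    // Every call overwrites the pool, so previously returned strings change too
    let pool: &'static [u8; POOL_SIZE] = unsafe { &*addr_of!(MEMORY_POOL) };
    // Validate the bytes: stdin (or a truncated multi-byte char) may not be valid UTF-8
    std::str::from_utf8(&pool[..i]).expect("input is not valid UTF-8")
}

fn main() {
    print!("Enter your address: ");
    std::io::stdout().flush().unwrap();
    let address = read_line_to_static_memory_pool();
    println!("You entered: {}", address);
}

Usage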

But...

Dynamic data

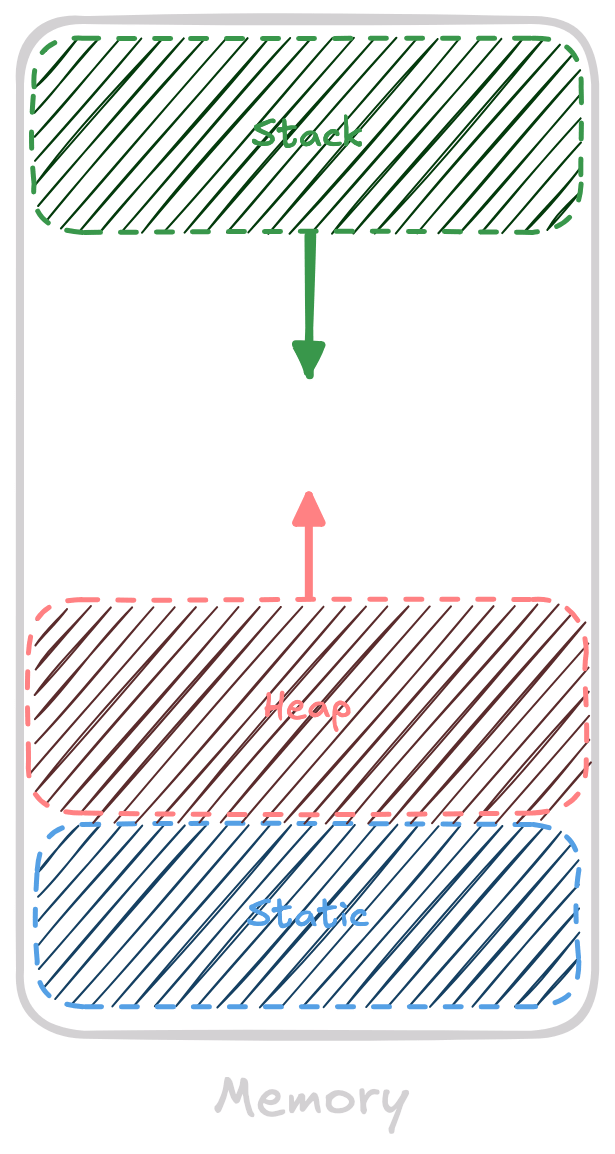

Types of memory


Static Memory

- Allocated at compile time, exists during the whole execution

- Rust -> static / static mut (⚠️) → 'static lifetime

- ❗ const ≠ static → a const is inlined at every use and has no fixed address in memory

- Amazing performance since there is no runtime allocation overhead

- Limited flexibility (size known at compile time), and static mut can lead to data races
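Since the data-race risk comes from static mut, here is a minimal sketch of the usual safe alternative, assuming a shared counter is all the program needs: an atomic static that any thread can update through a shared reference, with no unsafe code. COUNTER and count_concurrently are hypothetical names chosen for the illustration.

```rust
use std::sync::atomic::{AtomicU64, Ordering};
use std::thread;

// Lives for the whole execution like any static, but needs no `static mut`:
// AtomicU64 allows mutation through a shared reference without data races.
static COUNTER: AtomicU64 = AtomicU64::new(0);

/// Spawns `threads` threads that each increment COUNTER `per_thread` times,
/// then returns the final value.
fn count_concurrently(threads: u64, per_thread: u64) -> u64 {
    COUNTER.store(0, Ordering::SeqCst);
    thread::scope(|s| {
        for _ in 0..threads {
            s.spawn(|| {
                for _ in 0..per_thread {
                    COUNTER.fetch_add(1, Ordering::Relaxed);
                }
            });
        }
    }); // all scoped threads are joined here
    COUNTER.load(Ordering::SeqCst)
}

fn main() {
    println!("Final count: {}", count_concurrently(4, 1_000));
}
```

With static mut, the same loop would be an unsynchronized read-modify-write and increments could be lost.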

static ANSWER: u64 = 42;

Stack Memory

- Allocated at runtime, in the frame of the function being executed (the frame size is known at compile time)

- Rust -> let / let mut, (de)allocated on scope change

- Great memory access performance: good locality, and (de)allocating just moves the stack pointer

- Only valid within the allocation scope. Size must be known at compile time.

- The stack cannot grow indefinitely (stack overflow)

let answer = 42;

Stack Memory limit

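use libc::{getrlimit, rlimit, RLIMIT_STACK};
use std::io;

fn main() -> io::Result<()> {
    let mut limit = rlimit { rlim_cur: 0, rlim_max: 0 };
    // getrlimit returns 0 on success and -1 on error (with errno set)
    if unsafe { getrlimit(RLIMIT_STACK, &mut limit) } != 0 {
        let err = io::Error::last_os_error(); // read errno before doing any other I/O
        eprintln!("Failed to get stack size limit");
        return Err(err);
    }
    println!("Current stack size limit: {} bytes", limit.rlim_cur);
    println!("Maximum stack size limit: {} bytes", limit.rlim_max);
    large_stack();
    Ok(())
}

fn large_stack() {
    // Attempt to allocate a 16 MiB array on the stack: with the usual 8 MiB
    // default limit on Linux, this overflows the stack and the program aborts
    let large_array: [u8; 16 * 1024 * 1024] = [0; 16 * 1024 * 1024];
    // black_box stops the optimizer from removing the unused array in release builds
    std::hint::black_box(&large_array);
    println!("Successfully allocated a 16MB array on the stack");
}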
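When a large stack frame is really needed, one option is to run that code on a thread created with a bigger stack through std::thread::Builder::stack_size (the other is to move the buffer to the heap). A minimal sketch of the first option, assuming a 64 MiB stack leaves enough headroom for the 16 MiB array; large_stack_sum and sum_on_big_stack are hypothetical names.

```rust
use std::hint::black_box;
use std::thread;

const ARRAY_SIZE: usize = 16 * 1024 * 1024; // 16 MiB, as in large_stack

/// Puts a 16 MiB array on the current thread's stack and sums its bytes.
fn large_stack_sum() -> u64 {
    let large_array = [1u8; ARRAY_SIZE];
    // black_box keeps the optimizer from replacing the array with a constant
    black_box(&large_array).iter().map(|&b| u64::from(b)).sum()
}

/// Runs large_stack_sum on a new thread whose stack is `stack_bytes` large.
fn sum_on_big_stack(stack_bytes: usize) -> u64 {
    thread::Builder::new()
        .stack_size(stack_bytes)
        .spawn(large_stack_sum)
        .expect("failed to spawn thread")
        .join()
        .expect("thread panicked")
}

fn main() {
    let sum = sum_on_big_stack(64 * 1024 * 1024);
    println!("Summed {sum} bytes on a 64 MiB thread stack");
}
```

The main thread's limit (what getrlimit reports) is unchanged; only the spawned thread gets the larger stack.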


Heap Memory

- Allocated during runtime by the Allocator/OS

- Rust -> String, Box, Vec, ... (with a pointer still stored on the stack)

- Extremely flexible: sizes are decided at runtime and limited only by available memory

- Allocation is costly and needs bookkeeping

let s = String::from("hello world");

let answer = Box::new(42);

let v = Vec::from([1, 2, 3]);

Recap: Static vs Stack vs Heap

| | Static | Stack | Heap |
|---|---|---|---|
| Rust | static | let | String, Box, Vec, ... |
| Allocation performance | No overhead | Fast | Slow |
| Flexibility | Compile-time | Compile-time | Runtime |
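The Rust row of the table can be checked directly: a heap-backed type only keeps a pointer (plus length and capacity for Vec and String) on the stack, whatever the size of its heap data. A small sketch, assuming a 64-bit target when reading the printed sizes; heap_buffer_len is a hypothetical helper that puts the same 16 MiB buffer from the stack limit example on the heap instead.

```rust
use std::mem::size_of;

/// Allocates a 16 MiB zeroed buffer on the heap: only the Vec's three-word
/// header (pointer, capacity, length) sits on the stack.
fn heap_buffer_len() -> usize {
    let buffer = vec![0u8; 16 * 1024 * 1024];
    buffer.len()
}

fn main() {
    // Stack footprint of each handle, independent of how much heap data it owns
    println!("Box<u64>: {} bytes", size_of::<Box<u64>>());
    println!("Vec<u8>:  {} bytes", size_of::<Vec<u8>>());
    println!("String:   {} bytes", size_of::<String>());
    println!("Heap buffer of {} bytes allocated", heap_buffer_len());
}
```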

Allocating on the Heap with the Allocator

Remember learning C?

#include <stdio.h>
#include <stdlib.h> // stdlib.h declares malloc() and free()

int main(void)
{
    int *pt; // declare a pointer to int
    // malloc() takes the size of the block in bytes
    pt = malloc(sizeof(int));
    // malloc() returns NULL when the allocation fails
    if (pt != NULL)
    {
        printf("Memory is created using the malloc() function\n");
        free(pt); // every successful malloc() needs a matching free()
    }
    else
        printf("memory is not created\n");
    return 0;
}

void *malloc(size_t size);
void free(void *_Nullable ptr);
void *calloc(size_t nmemb, size_t size);
void *realloc(void *_Nullable ptr, size_t size);

libc spec of an allocator
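For comparison, here is a sketch of the same malloc/free round trip in Rust with std::alloc::alloc and std::alloc::dealloc, which go through the global allocator. One visible difference: unlike free, dealloc must be given the same Layout that was used to allocate. malloc_like_roundtrip is a hypothetical name.

```rust
use std::alloc::{alloc, dealloc, Layout};

/// Allocates room for one i32 on the heap, writes `value`, reads it back,
/// and frees the block. Returns None if the allocation fails.
fn malloc_like_roundtrip(value: i32) -> Option<i32> {
    let layout = Layout::new::<i32>(); // like sizeof(int), plus the alignment
    unsafe {
        let pt = alloc(layout) as *mut i32; // like malloc(sizeof(int))
        if pt.is_null() {
            return None; // like malloc returning NULL
        }
        pt.write(value);
        let read_back = pt.read();
        dealloc(pt as *mut u8, layout); // like free(pt), but it needs the layout too
        Some(read_back)
    }
}

fn main() {
    match malloc_like_roundtrip(42) {
        Some(v) => println!("Memory is created using alloc(), value = {v}"),
        None => println!("memory is not created"),
    }
}
```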

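Is malloc a system call? No.

But it uses a few of them:

- brk/sbrk -> moves the program break (the end of the process's data segment), growing or shrinking the heap

- mmap -> creates a new mapping in the virtual address space

glibc's malloc grows its main heap with brk, and uses mmap for large requests (above M_MMAP_THRESHOLD, 128 KiB by default) and for the arenas of other threads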

Using a custom allocator in Rust

The global allocator: std::alloc::GlobalAlloc

pub unsafe trait GlobalAlloc {
    unsafe fn alloc(&self, layout: Layout) -> *mut u8;
    unsafe fn dealloc(&self, ptr: *mut u8, layout: Layout);
}

pub unsafe trait GlobalAlloc {
    unsafe fn alloc(&self, layout: Layout) -> *mut u8;
    unsafe fn dealloc(&self, ptr: *mut u8, layout: Layout);

    // Provided methods
    unsafe fn alloc_zeroed(&self, layout: Layout) -> *mut u8 { ... }
    unsafe fn realloc(
        &self,
        ptr: *mut u8,
        layout: Layout,
        new_size: usize,
    ) -> *mut u8 { ... }
}


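#[global_allocator]
static ALLOCATOR: SimpleAlloc = SimpleAlloc::new();

A libc::malloc based allocator

use std::alloc::{GlobalAlloc, Layout};
use std::os::raw::c_void;
use libc::{free, malloc, posix_memalign, realloc};

// glibc's malloc returns 16-byte aligned memory on x86_64 and aarch64
const MALLOC_ALIGN: usize = 16;

pub struct GlibcMallocAlloc;

unsafe impl GlobalAlloc for GlibcMallocAlloc {
    unsafe fn alloc(&self, layout: Layout) -> *mut u8 {
        if layout.align() <= MALLOC_ALIGN {
            return malloc(layout.size()).cast::<u8>();
        }
        // malloc ignores layout.align(): over-aligned types need posix_memalign
        let mut ptr: *mut c_void = std::ptr::null_mut();
        if posix_memalign(&mut ptr, layout.align(), layout.size()) != 0 {
            return std::ptr::null_mut();
        }
        ptr.cast::<u8>()
    }

    unsafe fn dealloc(&self, ptr: *mut u8, _layout: Layout) {
        free(ptr.cast::<c_void>()) // free() accepts blocks from malloc and posix_memalign
    }

    unsafe fn realloc(&self, ptr: *mut u8, layout: Layout, new_size: usize) -> *mut u8 {
        if layout.align() <= MALLOC_ALIGN {
            return realloc(ptr.cast::<c_void>(), new_size).cast::<u8>();
        }
        // realloc() does not preserve over-alignment: allocate, copy, then free
        let new_ptr = self.alloc(Layout::from_size_align_unchecked(new_size, layout.align()));
        if !new_ptr.is_null() {
            std::ptr::copy_nonoverlapping(ptr, new_ptr, layout.size().min(new_size));
            self.dealloc(ptr, layout);
        }
        new_ptr
    }
}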
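A wrapper around std::alloc::System is a common way to observe what the global allocator is doing without depending on libc. The sketch below (CountingAlloc, allocation_count and allocated_bytes are hypothetical names) counts allocation calls and requested bytes, then forwards to the system allocator; realloc and alloc_zeroed are left to the provided defaults, which go through self.alloc, so they are counted too.

```rust
use std::alloc::{GlobalAlloc, Layout, System};
use std::hint::black_box;
use std::sync::atomic::{AtomicUsize, Ordering};

/// Forwards to the system allocator while counting calls and requested bytes.
pub struct CountingAlloc {
    allocations: AtomicUsize,
    bytes: AtomicUsize,
}

unsafe impl GlobalAlloc for CountingAlloc {
    unsafe fn alloc(&self, layout: Layout) -> *mut u8 {
        self.allocations.fetch_add(1, Ordering::Relaxed);
        self.bytes.fetch_add(layout.size(), Ordering::Relaxed);
        unsafe { System.alloc(layout) }
    }

    unsafe fn dealloc(&self, ptr: *mut u8, layout: Layout) {
        unsafe { System.dealloc(ptr, layout) }
    }
    // alloc_zeroed and realloc use the provided defaults, which call self.alloc
}

// Every Box, Vec, String, ... in the program now goes through ALLOCATOR
#[global_allocator]
static ALLOCATOR: CountingAlloc = CountingAlloc {
    allocations: AtomicUsize::new(0),
    bytes: AtomicUsize::new(0),
};

fn allocation_count() -> usize {
    ALLOCATOR.allocations.load(Ordering::Relaxed)
}

fn allocated_bytes() -> usize {
    ALLOCATOR.bytes.load(Ordering::Relaxed)
}

fn main() {
    let (calls, bytes) = (allocation_count(), allocated_bytes());
    let v: Vec<u8> = Vec::with_capacity(4096);
    black_box(&v); // keep the allocation from being optimized away
    println!(
        "Vec::with_capacity(4096): {} allocation(s), {} bytes",
        allocation_count() - calls,
        allocated_bytes() - bytes
    );
}
```

The counters only grow, so with other threads allocating at the same time a measured difference is an upper bound for the code under observation.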

The new std::alloc::Allocator API (unstable)

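// Nightly only: needs #![feature(allocator_api)]
let mut vec = Vec::new_in(MyAllocator);
let mut vec = Vec::with_capacity_in(10, MyAllocator);
vec.allocator(); // Gets a reference to the Vec's allocator
Vec::from([1, 2, 3]).to_vec_in(MyAllocator); // Clones the elements into a new Vec that uses MyAllocator

std::alloc::Allocator API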

struct Layout {
    size: usize,
    align: usize,
}

pub unsafe trait Allocator {
    fn allocate(&self, layout: Layout) -> Result<NonNull<[u8]>, AllocError>;
    unsafe fn deallocate(&self, ptr: NonNull<u8>, layout: Layout);

    // Provided methods
    fn allocate_zeroed(
        &self,
        layout: Layout,
    ) -> Result<NonNull<[u8]>, AllocError> { ... }
    unsafe fn grow(
        &self,
        ptr: NonNull<u8>,
        old_layout: Layout,
        new_layout: Layout,
    ) -> Result<NonNull<[u8]>, AllocError> { ... }
    unsafe fn grow_zeroed(
        &self,
        ptr: NonNull<u8>,
        old_layout: Layout,
        new_layout: Layout,
    ) -> Result<NonNull<[u8]>, AllocError> { ... }
    unsafe fn shrink(
        &self,
        ptr: NonNull<u8>,
        old_layout: Layout,
        new_layout: Layout,
    ) -> Result<NonNull<[u8]>, AllocError> { ... }
    fn by_ref(&self) -> &Self
    where
        Self: Sized { ... }
}
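The simplified Layout struct above carries the same two pieces of information as the real std::alloc::Layout, which keeps its fields private and exposes them through size() and align(). A short sketch of its stable constructors; the values in the comments hold on common 64-bit targets.

```rust
use std::alloc::Layout;

fn main() {
    // Size and alignment taken from a type
    let one_u64 = Layout::new::<u64>();
    // An array of 10 u32: 40 bytes, aligned like a single u32
    let ten_u32 = Layout::array::<u32>(10).expect("size overflow");
    // Custom layouts are validated: the alignment must be a power of two
    let invalid = Layout::from_size_align(10, 3);
    // pad_to_align rounds the size up to a multiple of the alignment
    let padded = Layout::from_size_align(13, 8).unwrap().pad_to_align();

    println!("u64: size {}, align {}", one_u64.size(), one_u64.align());
    println!("[u32; 10]: size {}, align {}", ten_u32.size(), ten_u32.align());
    println!("align 3 rejected: {}", invalid.is_err());
    println!("13 bytes padded to align 8: {}", padded.size());
}
```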

Building a simple bump allocator

- A kind of arena allocator

- Allocates only by moving a pointer forward by the allocated size (plus alignment padding)

- Deallocates everything at once, when the allocator is destroyed
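The three points above fit in a few lines of safe, stable Rust. The sketch below is a hypothetical Bump type, not the ArenaAllocator used in this talk: it hands out offsets into an owned buffer instead of pointers, so it needs neither unsafe code nor the unstable Allocator API. Alignment is applied to offsets, which only matches real addresses if the start of the buffer is itself suitably aligned.

```rust
use std::alloc::Layout;

/// Hypothetical minimal bump allocator: hands out offsets into an owned buffer.
struct Bump {
    buf: Vec<u8>,  // backing memory, allocated once up front
    offset: usize, // the bump pointer: first free byte
}

impl Bump {
    fn with_capacity(size: usize) -> Self {
        Bump { buf: vec![0; size], offset: 0 }
    }

    /// Reserves a block for `layout` and returns its offset, or None when full.
    fn alloc(&mut self, layout: Layout) -> Option<usize> {
        let start = align_up(self.offset, layout.align());
        let end = start.checked_add(layout.size())?;
        if end > self.buf.len() {
            return None;
        }
        self.offset = end; // allocating only moves the pointer forward
        Some(start)
    }

    /// Frees every block at once by moving the pointer back to the start.
    fn reset(&mut self) {
        self.offset = 0;
    }
}

/// Rounds `offset` up to a multiple of `align` (a power of two).
fn align_up(offset: usize, align: usize) -> usize {
    (offset + align - 1) & !(align - 1)
}

fn main() {
    let mut arena = Bump::with_capacity(64);
    let small = arena.alloc(Layout::from_size_align(3, 1).unwrap());
    let aligned = arena.alloc(Layout::from_size_align(8, 8).unwrap());
    println!("3-byte block at {small:?}, 8-byte aligned block at {aligned:?}");
    arena.reset();
}
```

There is no per-block free at all, which is what makes allocation so cheap: the only bookkeeping is one integer.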


pub struct ArenaAllocator {

arena: *mut u8,

size: usize,

offset: Cell<usize>,

}

unsafe impl Allocator for ArenaAllocator {

fn allocate(&self, layout: Layout) -> Result<NonNull<[u8]>, AllocError> {

let size = layout.size();

let align = layout.align();

let ptr_offset = align_up(self.offset.get(), align);

let new_offset = ptr_offset + size;

if new_offset > self.size {

return Err(AllocError);

}

let ptr = unsafe { self.arena.add(ptr_offset) };

self.offset.set(new_offset);

Ok(NonNull::slice_from_raw_parts(

NonNull::new(ptr).unwrap(),

size,

))

}

unsafe fn deallocate(&self, _ptr: NonNull<u8>, _layout: Layout) {

println!("Not deallocating memory in arena");

}

}

/// Aligns the given offset to the given alignment.

fn align_up(offset: usize, align: usize) -> usize {

(offset + align - 1) & !(align - 1)

}

impl ArenaAllocator {

pub fn from_ptr(ptr: *mut [u8]) -> Self {

ArenaAllocator {

arena: ptr as *mut u8,

size: ptr.len(),

offset: Cell::new(0),

}

}

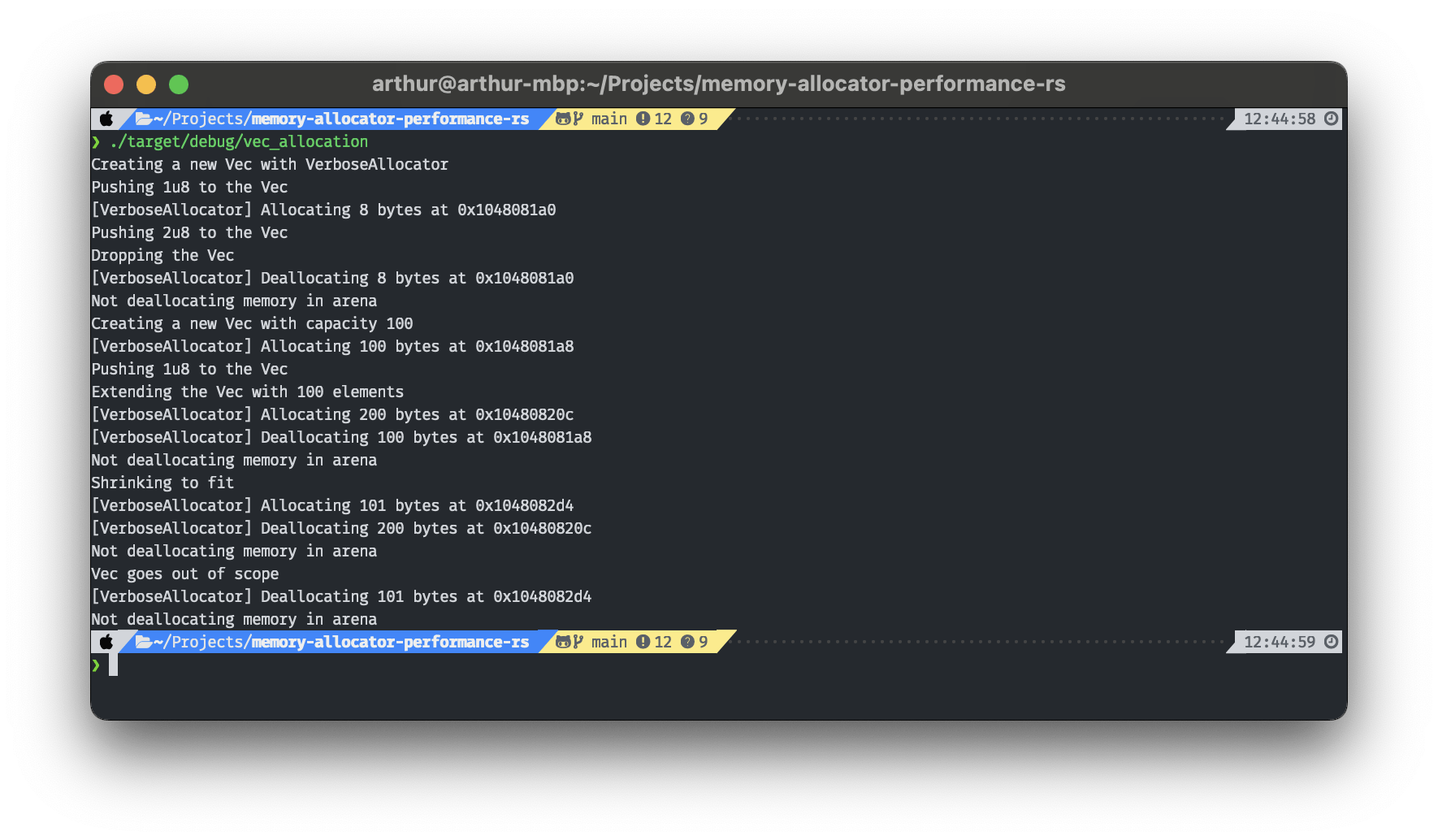

}Testing the bump allocator

#![feature(allocator_api)]

use std::ptr::addr_of_mut;

use memory_allocator_performance_rs::ArenaAllocator;
use memory_allocator_performance_rs::VerboseAllocator;

// 1 KiB of static memory backing the arena
static mut STATIC_ARENA_MEM: [u8; 1024] = [0; 1024];

fn main() {
    let allocator = VerboseAllocator::new(ArenaAllocator::from_ptr(unsafe {
        addr_of_mut!(STATIC_ARENA_MEM)
    }));

    println!("Creating a new Vec with VerboseAllocator");
    let mut v = Vec::new_in(&allocator);
    println!("Pushing 1u8 to the Vec");
    v.push(1u8);
    println!("Pushing 2u8 to the Vec");
    v.push(2u8);
    println!("Dropping the Vec");
    drop(v);

    println!("Creating a new Vec with capacity 100");
    let mut v = Vec::with_capacity_in(100, &allocator);
    println!("Pushing 1u8 to the Vec");
    v.push(1u8);
    println!("Extending the Vec with 100 elements");
    v.extend((0..100).map(|x| x as u8));
    println!("Shrinking to fit");
    v.shrink_to_fit();
    println!("Vec goes out of scope");
}


Challenges in memory allocation

- Memory footprint

- Fragmentation

- Predictability

Production-grade allocators

jemalloc

jemalloc is a general purpose malloc implementation that emphasizes fragmentation avoidance and scalable concurrency support. jemalloc first came into use as the FreeBSD libc allocator in 2005, and since then it has found its way into numerous applications that rely on its predictable behavior.

mimalloc is a compact general purpose allocator with excellent performance.

bumpalo

A fast bump allocation arena for Rust

Measuring allocation performance

Measurement Setup

- CodSpeed Wall Time Runner
  - Bare metal machine
  - 16-core ARM64 CPU
  - 32GB of RAM

- Benchmarks
  - With criterion.rs
  - Using the Allocator API

glibc_malloc benchmarks

- Allocate 1600 chunks of chunk_size bytes

- Free half of those chunks in FIFO order

- Free the other half in LIFO order

We'll measure that with chunk_size = 16, 32, 64, 128, 256
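The body of this benchmark can be sketched on stable Rust as below. It is a hypothetical reconstruction, not the code behind the measurements: it times one run with std::time::Instant instead of criterion.rs, goes through the GlobalAlloc trait on std::alloc::System instead of the Allocator API, assumes an alignment of 8, and reads "FIFO" as freeing the first half oldest-first and "LIFO" as freeing the second half newest-first.

```rust
use std::alloc::{GlobalAlloc, Layout, System};
use std::time::Instant;

/// Allocates `n` chunks of `chunk_size` bytes, frees the first half in
/// allocation order (FIFO) and the second half in reverse order (LIFO).
/// Returns the total number of bytes that went through the allocator.
fn alloc_free_pattern(allocator: &impl GlobalAlloc, n: usize, chunk_size: usize) -> usize {
    assert!(chunk_size > 0, "GlobalAlloc::alloc requires a non-zero size");
    let layout = Layout::from_size_align(chunk_size, 8).expect("invalid layout");
    let chunks: Vec<*mut u8> = (0..n)
        .map(|_| {
            let ptr = unsafe { allocator.alloc(layout) };
            assert!(!ptr.is_null(), "allocation failed");
            ptr
        })
        .collect();
    let (first_half, second_half) = chunks.split_at(n / 2);
    // FIFO: free the oldest chunks first
    for &ptr in first_half {
        unsafe { allocator.dealloc(ptr, layout) };
    }
    // LIFO: free the newest chunks first
    for &ptr in second_half.iter().rev() {
        unsafe { allocator.dealloc(ptr, layout) };
    }
    n * chunk_size
}

fn main() {
    for chunk_size in [16, 32, 64, 128, 256] {
        let start = Instant::now();
        alloc_free_pattern(&System, 1600, chunk_size);
        println!("chunk_size = {chunk_size}: {:?}", start.elapsed());
    }
}
```

Taking the allocator as a parameter is what lets the same workload be pointed at glibc's malloc, jemalloc, mimalloc or a bump allocator.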

(Chart: glibc_malloc benchmarks, time results for various chunk sizes, less is better)

glibc_malloc multithreaded benchmarks

- We run the same benchmark on N threads

- Each thread allocates and deallocates 1600 chunks

- All the threads are using the same allocator

- Chunk size fixed to 128 bytes

N = 4, 8, 16
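A sketch of the multithreaded variant, under the same caveats as a reconstruction: each thread allocates its 1600 chunks as boxed slices through the program's global allocator, so all threads share one allocator, then drops half of them oldest-first and the rest newest-first. thread_work and run_threads are hypothetical names.

```rust
use std::collections::VecDeque;
use std::thread;

const CHUNKS_PER_THREAD: usize = 1600;

/// One thread's workload; returns the number of bytes freed.
fn thread_work(chunk_size: usize) -> usize {
    let mut chunks: VecDeque<Box<[u8]>> = (0..CHUNKS_PER_THREAD)
        .map(|_| vec![0u8; chunk_size].into_boxed_slice())
        .collect();
    let mut freed = 0;
    // FIFO: drop the oldest half first
    for _ in 0..CHUNKS_PER_THREAD / 2 {
        if let Some(chunk) = chunks.pop_front() {
            freed += chunk.len(); // chunk is dropped (freed) at the end of this block
        }
    }
    // LIFO: drop the remaining chunks newest-first
    while let Some(chunk) = chunks.pop_back() {
        freed += chunk.len();
    }
    freed
}

/// Runs the workload on `n_threads` threads sharing the global allocator.
fn run_threads(n_threads: usize, chunk_size: usize) -> usize {
    thread::scope(|s| {
        let handles: Vec<_> = (0..n_threads)
            .map(|_| s.spawn(move || thread_work(chunk_size)))
            .collect();
        handles.into_iter().map(|h| h.join().expect("worker panicked")).sum()
    })
}

fn main() {
    for n_threads in [4, 8, 16] {
        let start = std::time::Instant::now();
        let bytes = run_threads(n_threads, 128);
        println!("{n_threads} threads: {bytes} bytes in {:?}", start.elapsed());
    }
}
```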

(Chart: glibc_malloc multithreaded benchmarks, time results for various numbers of threads, less is better)

Large blocks benchmarks

Repeat 1000 times (with a fixed seed):

- Allocate a block of memory with a random size between 5MB and 25MB

- Keep at most 20 allocated blocks alive at once: when 20 are already live, the new block replaces an existing one picked at random
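A sketch of this workload, assuming a hand-written xorshift64 generator in place of a seeded RNG crate (so a fixed seed yields the same sequence on every run) and taking 1 MB as 1024 × 1024 bytes. Blocks are reserved with Vec::with_capacity and never written, so the run exercises the allocator rather than page faults. XorShift64 and large_blocks are hypothetical names.

```rust
/// Minimal xorshift64 generator: deterministic for a given non-zero seed.
struct XorShift64(u64);

impl XorShift64 {
    fn new(seed: u64) -> Self {
        assert!(seed != 0, "xorshift needs a non-zero seed");
        XorShift64(seed)
    }

    fn next_u64(&mut self) -> u64 {
        let mut x = self.0;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.0 = x;
        x
    }

    /// Value in [lo, hi); the slight modulo bias does not matter here.
    fn range(&mut self, lo: usize, hi: usize) -> usize {
        lo + (self.next_u64() % (hi - lo) as u64) as usize
    }
}

/// Runs the workload and returns the capacities of the blocks alive at the end.
fn large_blocks(iterations: usize, max_live: usize, min_size: usize, max_size: usize, seed: u64) -> Vec<usize> {
    let mut rng = XorShift64::new(seed);
    let mut live: Vec<Vec<u8>> = Vec::with_capacity(max_live);
    for _ in 0..iterations {
        // Reserve the block without writing to it
        let block = Vec::with_capacity(rng.range(min_size, max_size));
        if live.len() < max_live {
            live.push(block);
        } else {
            let victim = rng.range(0, max_live);
            live[victim] = block; // the replaced block is freed here
        }
    }
    live.iter().map(|b| b.capacity()).collect()
}

fn main() {
    const MB: usize = 1024 * 1024;
    let live = large_blocks(1000, 20, 5 * MB, 25 * MB, 42);
    println!("{} blocks alive at the end", live.len());
}
```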

(Chart: large allocation benchmarks, time results, less is better)

Conclusion

- Use bump allocators when dealing with many short-lived small objects

- Use mimalloc when the only constraint is performance

- mimalloc is non-deterministic, which shows up as measurement noise

Some memory performance tricks

Using SmallVecs

use smallvec::SmallVec;

let mut v = SmallVec::<[u8; 4]>::new(); // initialize an empty vector

// The vector can hold up to 4 items without spilling onto the heap.

v.extend(0..4);

assert_eq!(v.len(), 4);

assert!(!v.spilled());

// Pushing another element will force the buffer to spill:

v.push(4);

assert_eq!(v.len(), 5);

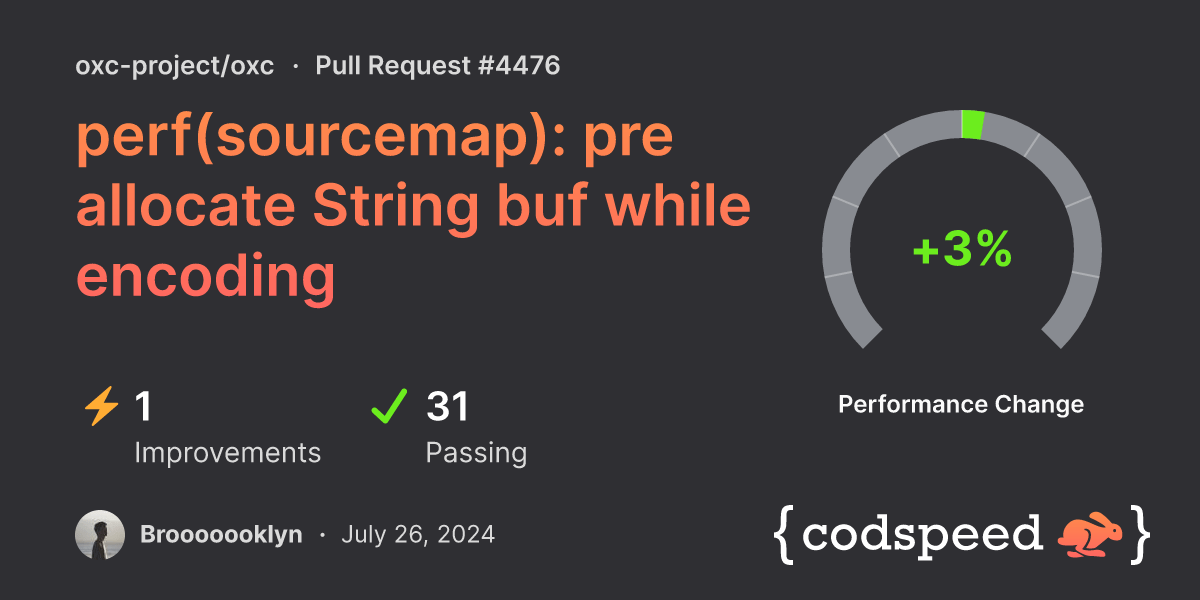

assert!(v.spilled());Pre-allocating to avoid reallocs

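- Avoid growing strings or vectors piece by piece: each time the capacity is exceeded, the buffer is reallocated and its contents may be copied

- Use with_capacity when the final size is known or can be estimated

- Example on oxc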
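The effect of with_capacity is easy to observe by watching capacity() while pushing. A sketch with a hypothetical count_growths helper: the exact number of growths for Vec::new() depends on std's growth strategy, an implementation detail, so only the pre-allocated case has a guaranteed answer (Vec::with_capacity(n) gives exactly n slots for non-zero-sized types, and push never reallocates while len < capacity).

```rust
/// Pushes `n` values into `v` and counts how often its buffer had to grow.
/// Each growth means a reallocation (and possibly a copy of every element).
fn count_growths(mut v: Vec<u64>, n: u64) -> usize {
    let mut growths = 0;
    let mut capacity = v.capacity();
    for i in 0..n {
        v.push(i);
        if v.capacity() != capacity {
            growths += 1;
            capacity = v.capacity();
        }
    }
    growths
}

fn main() {
    println!("Vec::new():            {} growths", count_growths(Vec::new(), 1_000));
    println!("Vec::with_capacity(n): {} growths", count_growths(Vec::with_capacity(1_000), 1_000));
}
```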

Thank you !

@art049 on

Drop by our booth 😃

(also, we're hiring systems engineers)