What Going All-Remote Taught Us About Appsec and Testing Shortfalls

Working from Home Theory

- Uninterrupted

- Increased availability due to no commuting

- Relaxed and Happy

Working From Home Pandemic Edition

- Interrupted by spouses, roommates, children

- Unpredictable availability due to conflicting needs

- Not relaxed, not happy

Software Maturity = Process & Automation

The Challenge

- Maintain Application Security

- Maintain Application Quality

- Apply bug fixes

- Don't break things

Code You Write

Code Your Users Install

10X

Application Security For The Code You Write

Application Security For The Code Others Write

YOU will be blamed for any problem

Photo of Titanic

My web apps

- 5-10 direct dependencies

- 5-10 direct dev dependencies

- 100-1000 transitive dependencies

Are you going to be ready?

My dear, here we must run as fast as we can, just to stay in place. And if you wish to go anywhere you must run twice as fast as that.

Lewis Carroll, "Through the Looking-Glass" (paraphrased)

Talking about the need to upgrade dependencies to keep up with security and performance updates

At The Office

(a long time ago, in a galaxy far, far away)

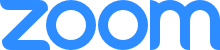

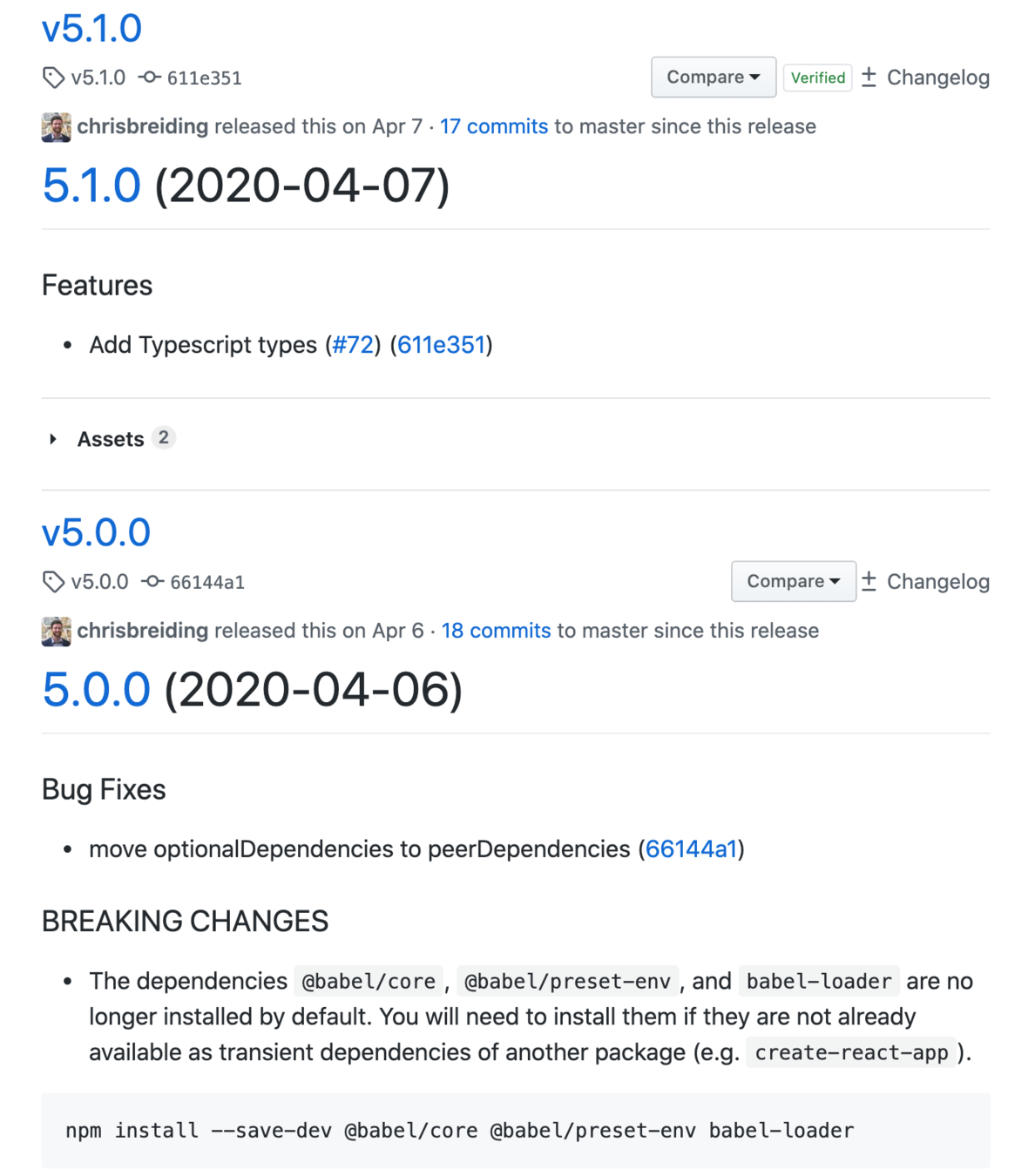

Automatic Dependency Updates

I am not doing this

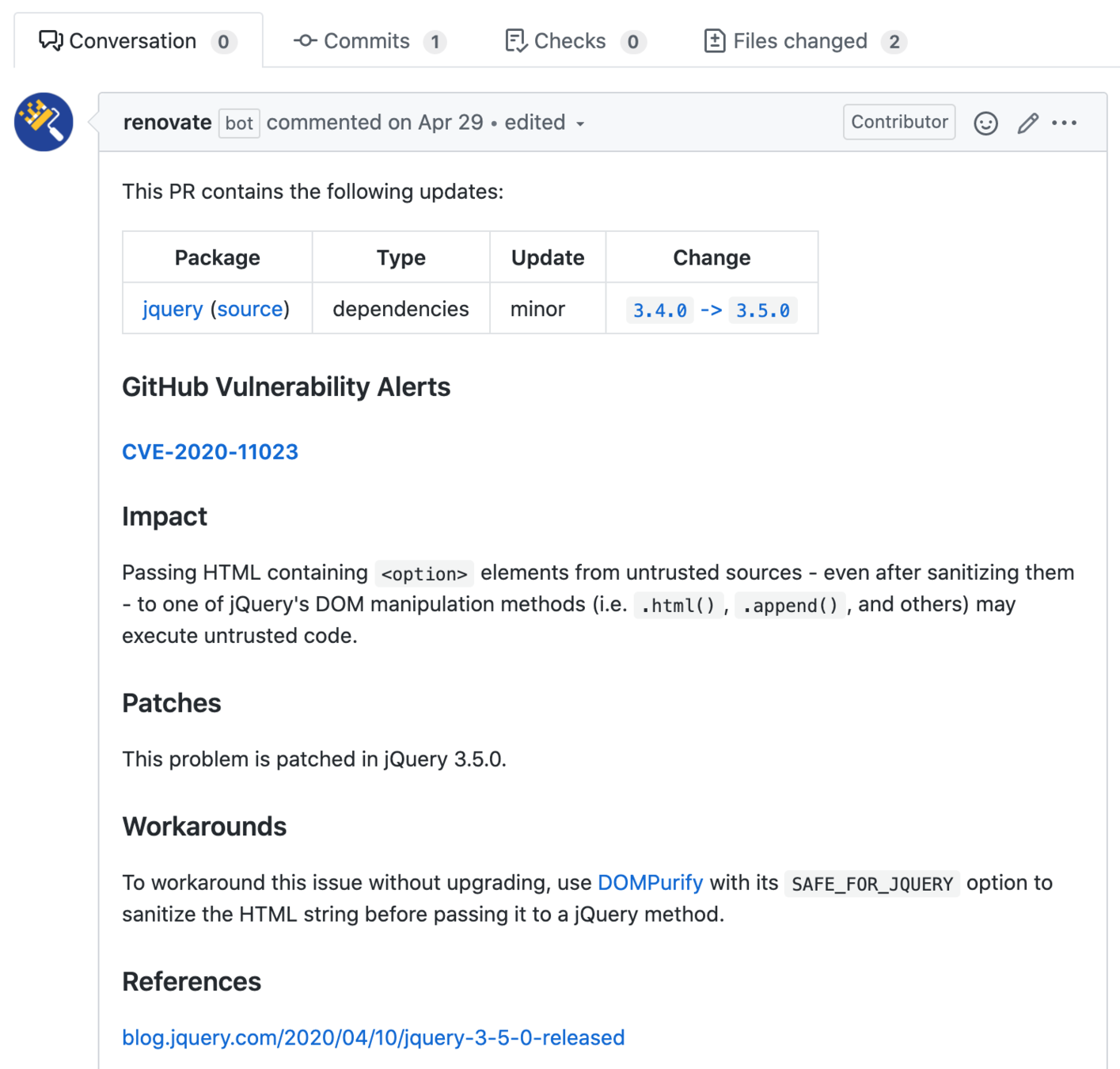

Automatic Dependency Updates

can be overwhelming

-

greenkeeper.io

-

dependabot

-

next-update

-

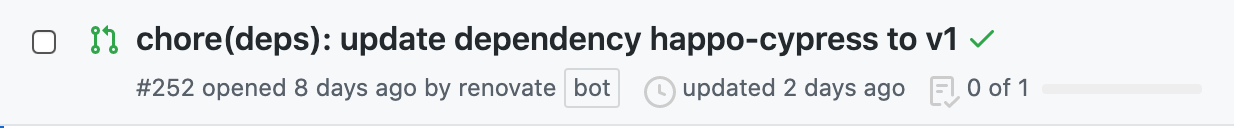

renovate bot

Automatic Dependency Updates

- meaningful

- configurable

- automatic

New feature!

Bug fixes!

No flood

Little or no manual work

Rule: label your most important dependencies

- Your web framework

- Your backend framework

- Your main production libraries

Less important:

- Build tools

- Minor production utilities

Tip: update dependencies on different schedules

prod

dev
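To make "different schedules" concrete, the sketch below splits a parsed `package.json` into production and development groups and lets each group update on its own days. The `SCHEDULES` table and the `groupDependencies` / `dueUpdates` helpers are hypothetical names for illustration, not any bot's API; a real setup would express the cadence in the update bot's own configuration.

```javascript
// Hypothetical cadence per dependency group (an assumption for this sketch):
// production deps may update any day, dev deps only on weekends.
// Values are Date#getDay() numbers: 0 = Sunday ... 6 = Saturday.
const SCHEDULES = {
  prod: [0, 1, 2, 3, 4, 5, 6],
  dev: [0, 6],
}

// Split a parsed package.json object into prod and dev dependency names
function groupDependencies(pkg) {
  return {
    prod: Object.keys(pkg.dependencies || {}),
    dev: Object.keys(pkg.devDependencies || {}),
  }
}

// Names of the dependencies whose group is scheduled for updates on `date`
function dueUpdates(pkg, date) {
  return Object.entries(groupDependencies(pkg))
    .filter(([group]) => SCHEDULES[group].includes(date.getDay()))
    .flatMap(([, names]) => names)
}

const pkg = {
  dependencies: { react: '^16.13.1', 'react-dom': '^16.13.1' },
  devDependencies: { cypress: '^4.5.0', eslint: '^7.0.0' },
}

console.log(dueUpdates(pkg, new Date(2020, 4, 13))) // Wednesday: [ 'react', 'react-dom' ]
console.log(dueUpdates(pkg, new Date(2020, 4, 16))) // Saturday: all four packages
```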

Tips & Tricks

Disable upgrades for all packages except a few production dependencies

renovate.json

```json
{
  "extends": ["config:base"],
  "enabledManagers": ["npm"],
  "packageRules": [
    {
      "packagePatterns": ["*"],
      "excludePackagePatterns": ["^react$", "^react-dom$"],
      "enabled": false
    }
  ]
}
```

My users care about these libraries.

I have automatic tests for these dependencies: if the tests pass, I can merge the update with confidence.
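A rough model of how a rule like the one above decides which packages keep their updates. It assumes, as Renovate documents for its `*PackagePatterns` options, that entries are regular expressions, and it treats `"*"` as "every package". The helpers `matchesAny` and `isUpdateEnabled` are hypothetical and much simpler than Renovate's real matching logic.

```javascript
// Simplified model of one Renovate packageRule (NOT Renovate's source code).
// "*" is treated as "match every package"; any other entry is a regular
// expression tested against the package name, so it matches substrings
// unless anchored with ^ and $.
function matchesAny(name, patterns) {
  return patterns.some((p) => p === '*' || new RegExp(p).test(name))
}

// Returns whether updates stay enabled for `name` under this rule
function isUpdateEnabled(name, rule) {
  const applies =
    matchesAny(name, rule.packagePatterns) &&
    !matchesAny(name, rule.excludePackagePatterns || [])
  // when the rule applies it sets "enabled"; otherwise updates stay on
  return applies ? rule.enabled : true
}

const loose = { packagePatterns: ['*'], excludePackagePatterns: ['react', 'react-dom'], enabled: false }
const strict = { packagePatterns: ['*'], excludePackagePatterns: ['^react$', '^react-dom$'], enabled: false }

for (const name of ['react', 'react-dom', 'react-redux', 'cypress']) {
  console.log(name, isUpdateEnabled(name, loose), isUpdateEnabled(name, strict))
}
// react true true
// react-dom true true
// react-redux true false
// cypress false false
```

Because the patterns are unanchored regular expressions, `react` also matches `react-redux`; anchoring with `^...$` limits the exception to the exact package names.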

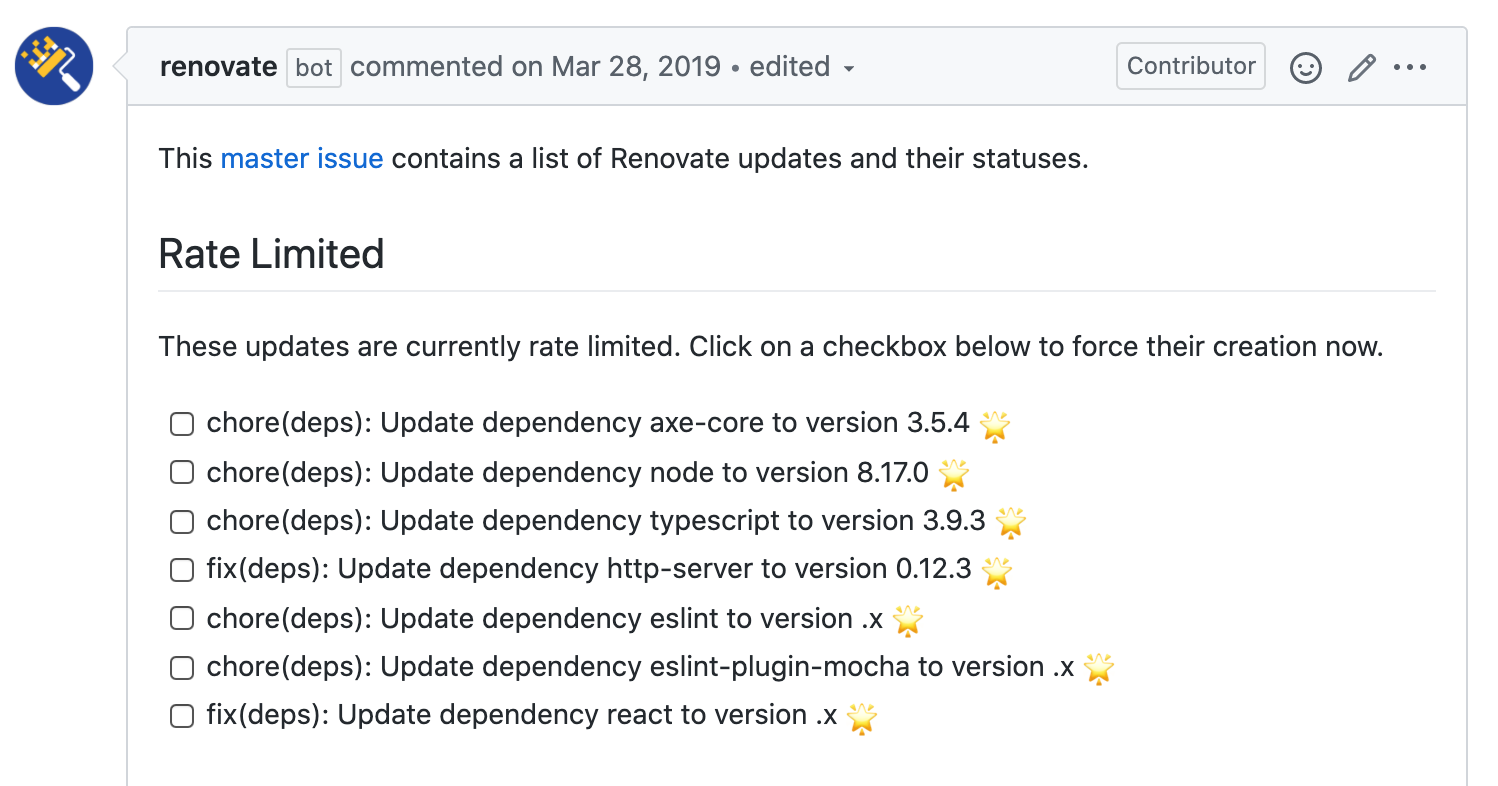

Dependency Upgrades: use a review checklist


Not everyone follows semantic release 😞

semantic release

Commit subject convention

patch: a bug was fixed 🐞

minor: a new feature was added 🎉

major: breaking API change 👀

Semantic release tools inspect the commits since the last release to decide the next version

major.minor.patch
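The version bump can be derived mechanically from the commit subjects. This is a minimal sketch assuming Conventional Commits-style prefixes (`fix:` for a patch, `feat:` for a minor release, and a `!` after the type for a breaking change); it is not semantic-release's implementation, which, for example, also reads `BREAKING CHANGE` notes from the commit body.

```javascript
// Commit subject patterns (assumed Conventional Commits style, optional scope)
const BREAKING = /^\w+(\([^)]*\))?!:/ // e.g. "feat!: ..." or "fix(api)!: ..."
const FEATURE = /^feat(\([^)]*\))?:/ // e.g. "feat: ..." or "feat(ui): ..."
const FIX = /^fix(\([^)]*\))?:/ // e.g. "fix: ..."

// The biggest change among the commits decides the release type
function releaseType(subjects) {
  if (subjects.some((s) => BREAKING.test(s))) return 'major'
  if (subjects.some((s) => FEATURE.test(s))) return 'minor'
  if (subjects.some((s) => FIX.test(s))) return 'patch'
  return null // nothing worth releasing (docs, chores, ...)
}

// Compute the next "major.minor.patch" version string
function nextVersion(current, subjects) {
  const [major, minor, patch] = current.split('.').map(Number)
  switch (releaseType(subjects)) {
    case 'major':
      return `${major + 1}.0.0`
    case 'minor':
      return `${major}.${minor + 1}.0`
    case 'patch':
      return `${major}.${minor}.${patch + 1}`
    default:
      return current
  }
}

console.log(nextVersion('1.4.2', ['fix: handle empty list'])) // 1.4.3
console.log(nextVersion('1.4.2', ['fix: typo', 'feat: add due dates'])) // 1.5.0
console.log(nextVersion('1.4.2', ['feat!: drop Node 8 support'])) // 2.0.0
```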

SemVer = meaningful changelog

upgrade requires effort (maybe)

new feature!

Move Fast

- production dependencies

- development dependencies

- "trusted" dependencies (usually our own)

prod

dev

trusted

How much effort does it take?

Goal:

- meaningful

- configurable

- automatic

Little or no manual work

renovate.json

```json
{
  "extends": ["config:base"],
  "automerge": true,
  "major": {
    "automerge": false
  }
}
```
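What the `"major": { "automerge": false }` override amounts to, sketched as plain functions. `updateType` and `canAutomerge` are hypothetical helpers that only handle plain `major.minor.patch` strings (no pre-release tags); the `checksPassed` argument stands in for the commit checks that, as we understand Renovate's default behavior, must pass before it automerges.

```javascript
// Classify an upgrade between two plain "major.minor.patch" versions
function updateType(from, to) {
  const [a, b] = [from, to].map((v) => v.split('.').map(Number))
  if (b[0] !== a[0]) return 'major'
  if (b[1] !== a[1]) return 'minor'
  if (b[2] !== a[2]) return 'patch'
  return 'none'
}

// Automerge every passing upgrade except major ones (hypothetical helper)
function canAutomerge(from, to, checksPassed) {
  return checksPassed && updateType(from, to) !== 'major'
}

console.log(canAutomerge('4.5.0', '4.6.0', true)) // true
console.log(canAutomerge('4.5.0', '5.0.0', true)) // false: major upgrade
console.log(canAutomerge('4.5.0', '4.5.1', false)) // false: checks failed
```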

Do you trust your tests enough to automerge dependency upgrades?

Tip: do not "jump" to a new major version right away

🛑 X.Y.Z -> (X + 1).0.0

✅ X.Y.Z -> (X + 1).0.1

✅ X.Y.Z -> (X + 1).1.0
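The rule can be written as a small predicate. `isSafeMajorJump` is a hypothetical helper, not a Renovate option: it rejects the very first release of a new major version and accepts the upgrade once a follow-up patch or minor release exists, on the assumption that early bugs in a new major tend to get fixed quickly.

```javascript
// Hypothetical policy: skip X.Y.Z -> (X + 1).0.0, allow (X + 1).0.1 or (X + 1).1.0
function isSafeMajorJump(from, to) {
  const [fromMajor] = from.split('.').map(Number)
  const [toMajor, toMinor, toPatch] = to.split('.').map(Number)
  if (toMajor <= fromMajor) return true // not a major upgrade at all
  // a new major is acceptable once it has at least one follow-up release
  return toMinor > 0 || toPatch > 0
}

console.log(isSafeMajorJump('2.7.1', '3.0.0')) // false
console.log(isSafeMajorJump('2.7.1', '3.0.1')) // true
console.log(isSafeMajorJump('2.7.1', '3.1.0')) // true
```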

Do you trust your tests to find possible mistakes:

- Code syntax, types

- Logical

- Visual style

- A11y

- Compiling and bundling

- Security

- Performance

- Deployment

Do you trust your tests to find possible mistakes:

- Code syntax, types

Static code analysis tools (linters)
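A minimal linter setup, as a sketch. It assumes `eslint` and `eslint-plugin-cypress` are installed as dev dependencies and uses the legacy `.eslintrc.js` format; ESLint 9 defaults to the flat `eslint.config.js` format instead, so adjust accordingly.

```javascript
// .eslintrc.js (legacy "eslintrc" config format)
module.exports = {
  // eslint:recommended includes rules such as no-undef and no-unused-vars,
  // which catch misspelled or leftover variables before any test runs
  extends: ['eslint:recommended', 'plugin:cypress/recommended'],
  parserOptions: { ecmaVersion: 2020, sourceType: 'module' },
  env: { browser: true, node: true },
}
```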

Do you trust your tests to find possible mistakes:

- Logical

Cover your code in meaningful tests

$ npm i -D cypress

```javascript
describe('Todo App', () => {
  it('completes an item', () => {
    cy.visit('http://localhost:8080')
    // there are several existing todos
    cy.get('.todo').should('have.length', 3)
  })
})
```

Cypress: a free, open-source end-to-end test runner for anything that runs in the browser

```javascript
describe('Todo App', () => {
  it('completes an item', () => {
    // base url is stored in "cypress.json" file
    cy.visit('/')
    // there are several existing todos
    cy.get('.todo').should('have.length', 3)

    cy.log('**adding a todo**')
    cy.get('.input').type('write tests{enter}')
    cy.get('.todo').should('have.length', 4)

    cy.log('**completing a todo**')
    cy.contains('.todo', 'write tests').contains('button', 'Complete').click()
    cy.contains('.todo', 'write tests')
      .should('have.css', 'text-decoration', 'line-through solid rgb(74, 74, 74)')

    cy.log('**removing a todo**')
    // due to quarantine, we have to delete an item
    // without completing it
    cy.contains('.todo', 'Meet friend for lunch').contains('button', 'x').click()
    cy.contains('.todo', 'Meet friend for lunch').should('not.exist')
  })
})
```

What should I test?

1. Carefully collect user stories and feature requirements

2. Write matching tests

Keep updating user stories and tests to keep them in sync

HARD

Keeping track via code coverage

Are we testing all features?

- Feature A: User can add todo items

- Feature B: User can complete todo items

- Feature C: User can delete todo items

[Figure: the app's source code, drawn as blocks of lines]

[Figure: the same source code next to the tests `it('adds todos', () => { ... })` and `it('completes todos', () => { ... })`]

[Figure: the source code highlighted by coverage after running the two tests]

green: lines executed during the tests

red: lines NOT executed during the tests

[Figure: adding the test `it('deletes todos', () => { ... })` for Feature C]

[Figure: all three tests next to the fully covered source code]

Code coverage from tests indirectly measures which implemented features are tested
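A toy model of the idea on these slides: each test reports which source lines it executed, and combined coverage is the union of those lines divided by the total. The 10-line app and the line numbers below are made up for illustration; tools such as Istanbul track statements, branches, and functions as well as lines.

```javascript
// Combined line coverage = |union of executed lines| / total lines
function combinedCoverage(totalLines, ...executedPerTest) {
  const covered = new Set()
  for (const lines of executedPerTest) {
    for (const line of lines) covered.add(line)
  }
  return covered.size / totalLines
}

// Hypothetical 10-line app: lines 1-2 shared setup, 3-4 adding,
// 5-7 completing, 8-10 deleting todos
const addsTodos = [1, 2, 3, 4]
const completesTodos = [1, 2, 5, 6, 7]
const deletesTodos = [1, 2, 8, 9, 10]

console.log(combinedCoverage(10, addsTodos)) // 0.4
console.log(combinedCoverage(10, addsTodos, completesTodos)) // 0.7
console.log(combinedCoverage(10, addsTodos, completesTodos, deletesTodos)) // 1
```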

⚠️ 100% Code Coverage ≠ 0🐞

[Figure: every source line covered by the three tests]

- Unrealistic tests; only a subset of inputs

- Code does not implement the feature correctly
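A made-up example of why full coverage does not mean zero bugs: the hypothetical `itemsLeftLabel` helper below is fully covered by a single test with the input 3, yet it is wrong for an input that test never tried.

```javascript
// Hypothetical helper with a bug: it always uses the plural "items"
function itemsLeftLabel(count) {
  return `${count} items left`
}

// This single check executes every line of itemsLeftLabel (100% line coverage)
console.log(itemsLeftLabel(3) === '3 items left') // true
// ...but an input the test never tried exposes the bug
console.log(itemsLeftLabel(1)) // 1 items left
```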

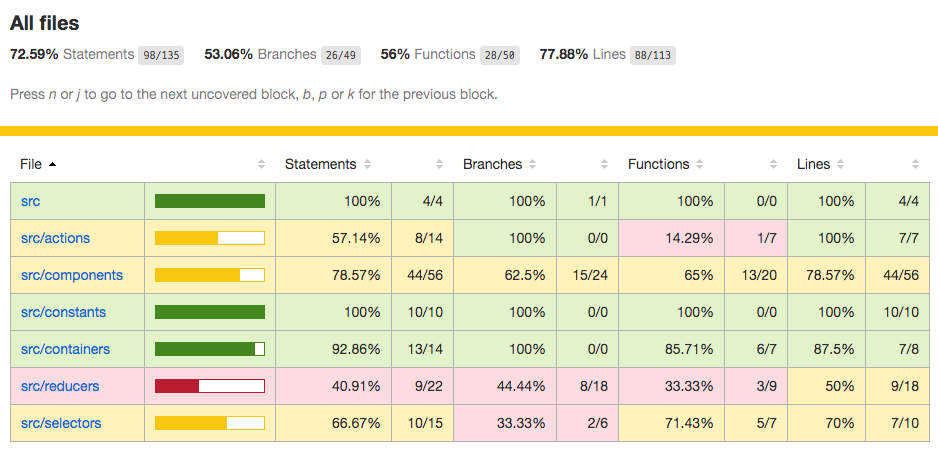

Code Coverage with @cypress/code-coverage

- Instrument code (YOU) using the Istanbul / nyc library

- Cypress does the rest
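Wiring the plugin in, following the setup described in the `@cypress/code-coverage` README for Cypress versions that still use a plugins file (Cypress 10 and later register the task inside `setupNodeEvents` in the config file instead). Instrumenting the application code is a separate step, commonly done with `babel-plugin-istanbul`. The two snippets below live in two different files.

```javascript
// cypress/support/index.js
import '@cypress/code-coverage/support'

// cypress/plugins/index.js (a separate file)
module.exports = (on, config) => {
  // registers the tasks that collect and merge coverage after the tests
  require('@cypress/code-coverage/task')(on, config)
  // return the config object so any changes made by the plugin are kept
  return config
}
```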

```javascript
it('adds todos', () => {
  cy.visit('/')
  cy.get('.new-todo')
    .type('write code{enter}')
    .type('write tests{enter}')
    .type('deploy{enter}')
  cy.get('.todo').should('have.length', 3)
})
```

E2E tests are extremely effective at covering a lot of app code

Nice job, @cypress/code-coverage plugin

Cypress E2E, component, and unit tests produce a combined code coverage report

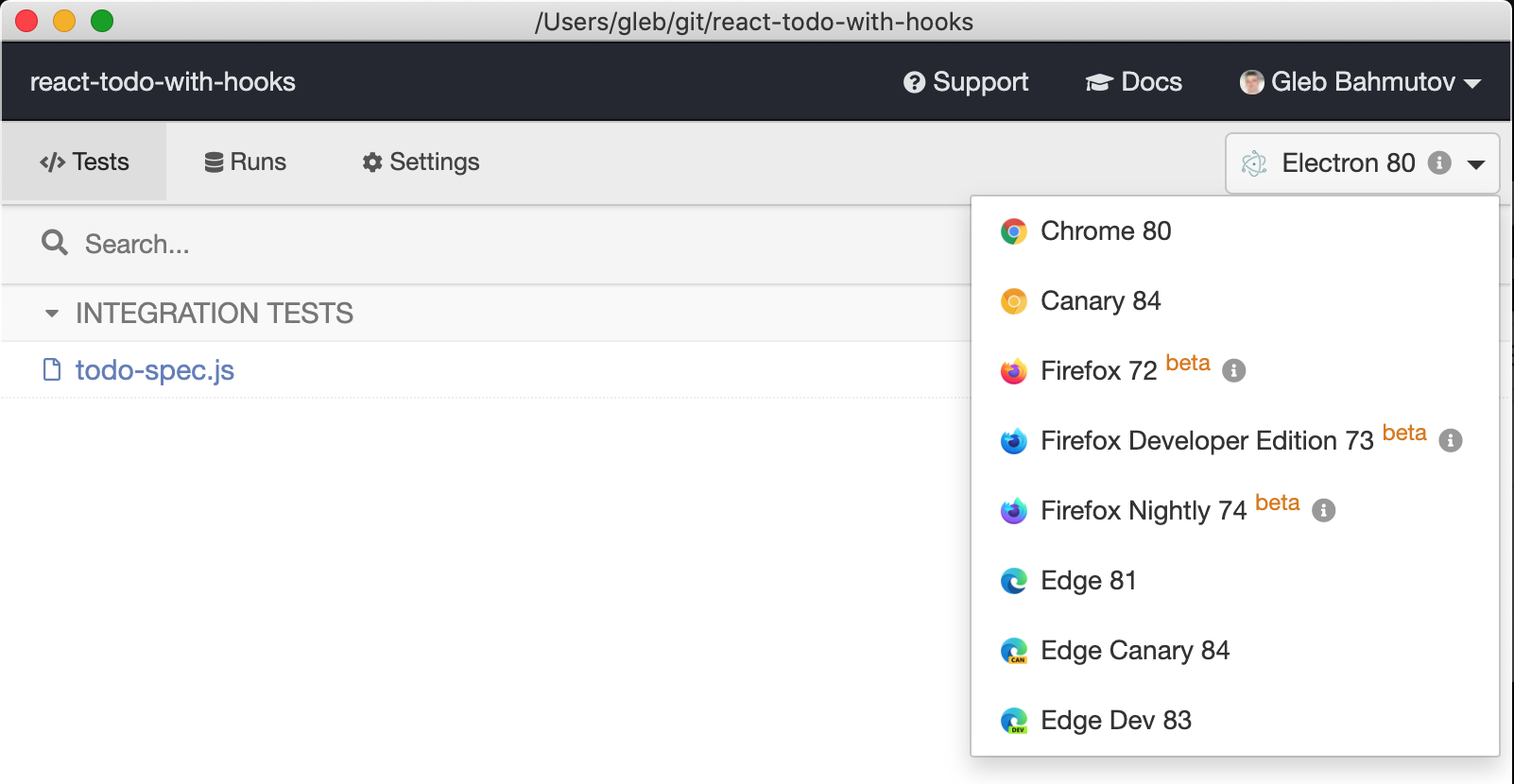

Do you trust your tests to find possible mistakes:

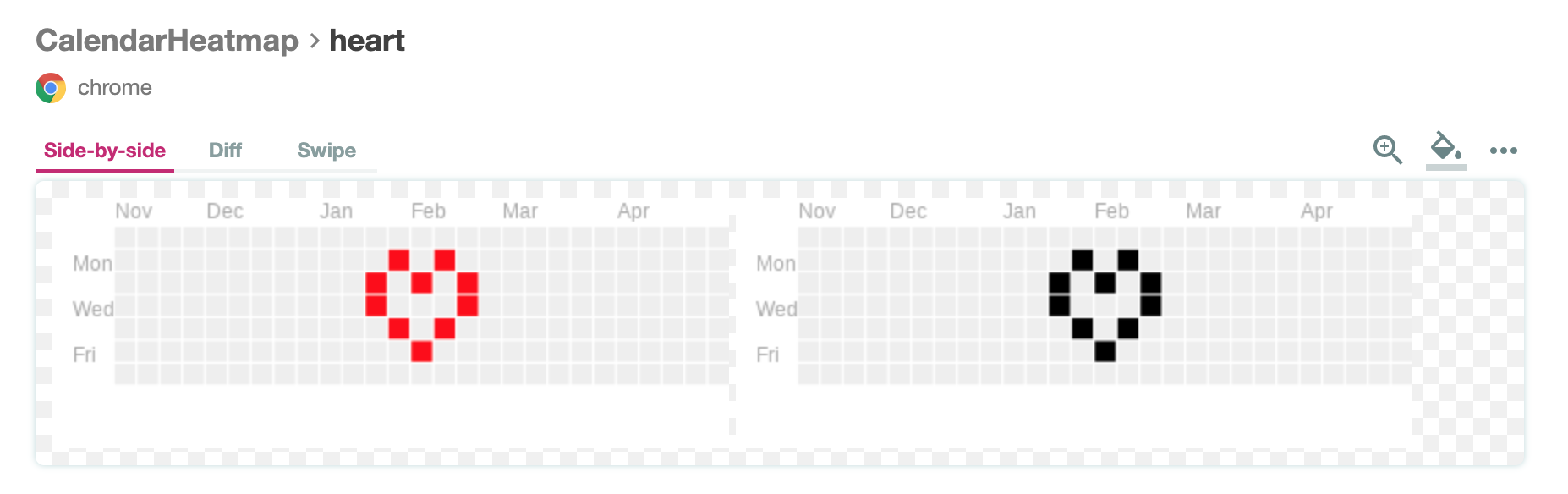

- Visual style

Use image diffing tests

```javascript
it('looks the same', () => {
  // visit the page
  // interact like a user
  // now we can do visual diffing
  cy.get('.react-calendar-heatmap').happoScreenshot({
    component: 'CalendarHeatmap',
  })
})
```

Visual review against baseline image


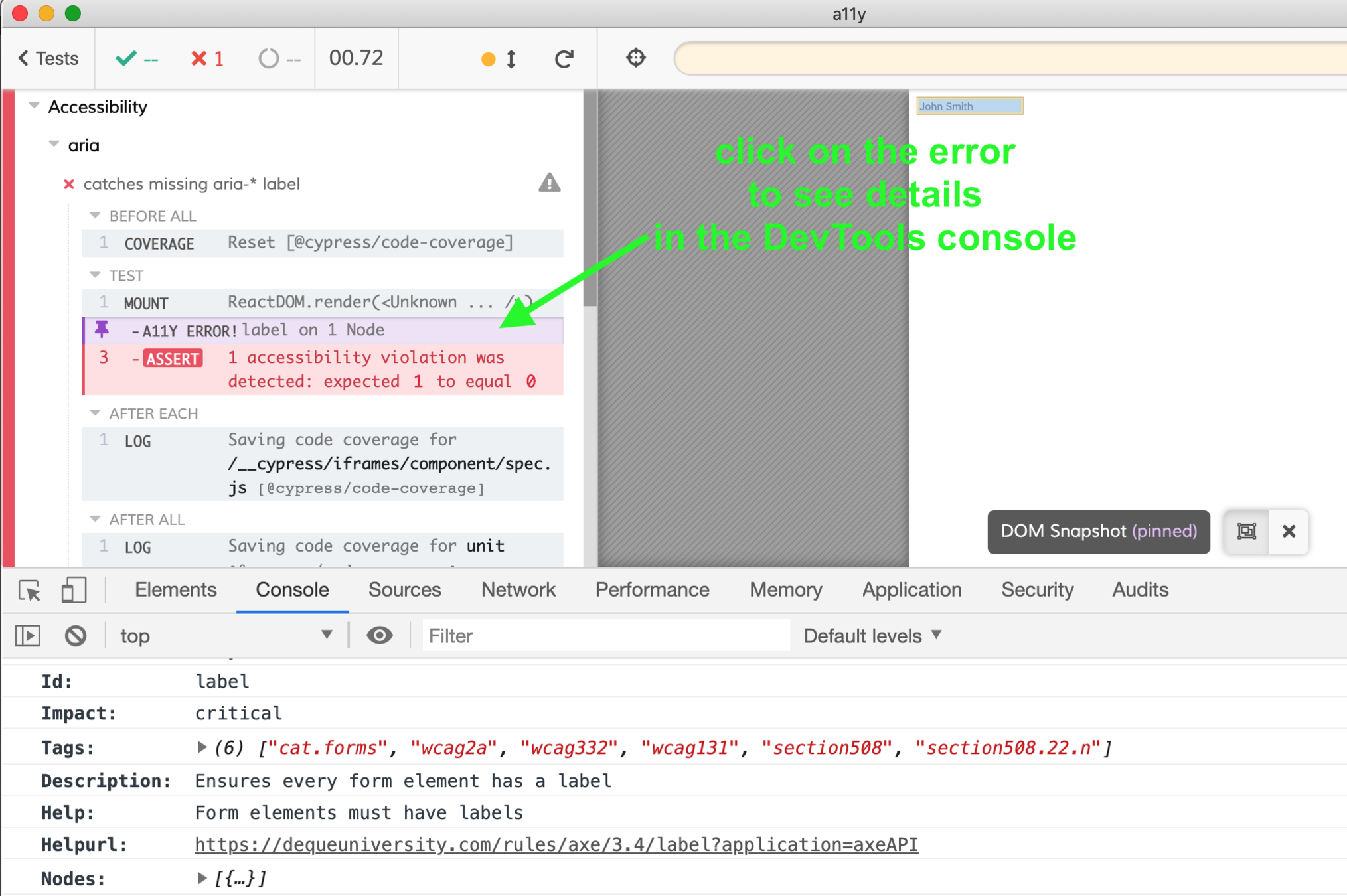
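Under the hood, an image diffing tool compares a new screenshot against the approved baseline. The sketch below is a deliberately naive version, not Happo's algorithm: it compares two same-sized RGBA pixel buffers and returns the fraction of pixels that changed by more than a `tolerance`; a test might then pass only if that fraction stays below some threshold of your choosing.

```javascript
// Fraction of pixels whose color differs by more than `tolerance`
// in any RGBA channel (naive sketch; buffers hold 4 bytes per pixel)
function diffRatio(baseline, current, tolerance = 0) {
  if (baseline.length !== current.length) throw new Error('image sizes differ')
  let changed = 0
  for (let i = 0; i < baseline.length; i += 4) {
    for (let c = 0; c < 4; c++) {
      if (Math.abs(baseline[i + c] - current[i + c]) > tolerance) {
        changed++
        break // count each pixel at most once
      }
    }
  }
  return changed / (baseline.length / 4)
}

// Two hypothetical 2x1 images: the second pixel turned from black to red
const baseline = Uint8ClampedArray.from([255, 255, 255, 255, 0, 0, 0, 255])
const current = Uint8ClampedArray.from([255, 255, 255, 255, 255, 0, 0, 255])

console.log(diffRatio(baseline, current)) // 0.5
console.log(diffRatio(baseline, baseline)) // 0
```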

Do you trust your tests to find possible mistakes:

- A11y

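Use an accessibility testing plugin

```javascript
it('catches missing aria-* label', () => {
  // https://github.com/avanslaars/cypress-axe
  cy.visit('/')
  // inject axe-core after cy.visit: loading a new page removes it
  cy.injectAxe()
  // check only <input> elements against WCAG 2.0 Level A rules
  cy.checkA11y('input', {
    runOnly: {
      type: 'tag',
      values: ['wcag2a'],
    },
  })
})
```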
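For intuition about what a rule like "inputs need an accessible name" checks, here is a toy version operating on plain objects instead of the DOM. It is far simpler than axe-core (it ignores, for example, labels that wrap the input), and `inputsWithoutName` plus the object shape are hypothetical.

```javascript
// Toy check: an input has an accessible name if it has aria-label,
// aria-labelledby, or a <label for="..."> whose "for" matches its id
function inputsWithoutName(inputs, labelFors) {
  return inputs
    .filter((input) => !input.ariaLabel && !input.ariaLabelledby && !labelFors.includes(input.id))
    .map((input) => input.id)
}

const inputs = [
  { id: 'new-todo', ariaLabel: 'New todo' },
  { id: 'search' },
  { id: 'due-date' },
]

// "due-date" has a <label for="due-date">, "search" has no name at all
console.log(inputsWithoutName(inputs, ['due-date'])) // [ 'search' ]
```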

Do you trust your tests to find possible mistakes:

- Code syntax, types

- Logical

- Visual style

- A11y

- Compiling and bundling

- Security

- Performance

- Deployment


End-to-end tests

I feel safe automatically updating dependencies if all commit checks pass

- high code coverage

- visual snapshots
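The conditions above can be read as a single merge gate. This is a hypothetical sketch: the field names and the 90% coverage threshold are illustrative assumptions, not settings of any particular bot or CI system.

```javascript
// Illustrative threshold (assumption): require at least 90% coverage
const MIN_COVERAGE = 0.9

// Automerge only when every signal from the commit checks is green
function safeToAutomerge({ checksPassed, coverage, visualDiffsApproved, updateType }) {
  return (
    checksPassed &&
    coverage >= MIN_COVERAGE &&
    visualDiffsApproved &&
    updateType !== 'major' // major upgrades still get a human review
  )
}

console.log(safeToAutomerge({ checksPassed: true, coverage: 0.95, visualDiffsApproved: true, updateType: 'minor' })) // true
console.log(safeToAutomerge({ checksPassed: true, coverage: 0.6, visualDiffsApproved: true, updateType: 'patch' })) // false
```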

Good tests ➡ confidence ➡ ability to move fast ➡ staying fully patched & safe

Q&A