Well Tested Software

Gleb Bahmutov, PhD

Tuesday, Nov 20, 2018 12:50

🔊 Dr Gleb Bahmutov PhD

C / C++ / C# / Java / CoffeeScript / JavaScript / Node / Angular / Vue / Cycle.js / functional

these slides

14 people. Atlanta, Philly, Boston, LA, Chicago

Fast, easy and reliable testing for anything that runs in a browser

I have Cypress.io stickers

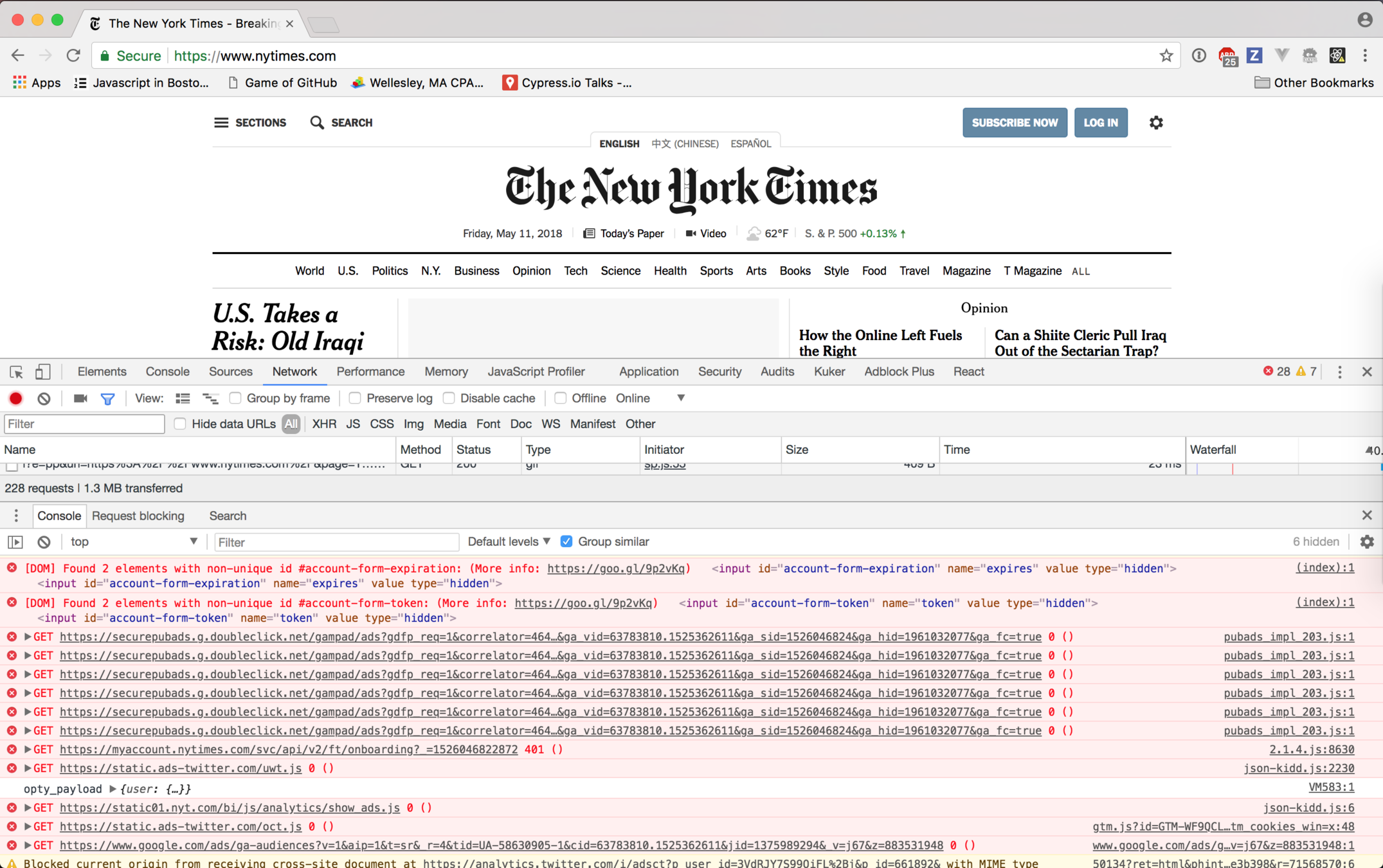

Every working website looks the same. Every broken web app is broken in its own way.

Leo Tolstoy, "Anna Karenina"

(paraphrasing)

I love good software

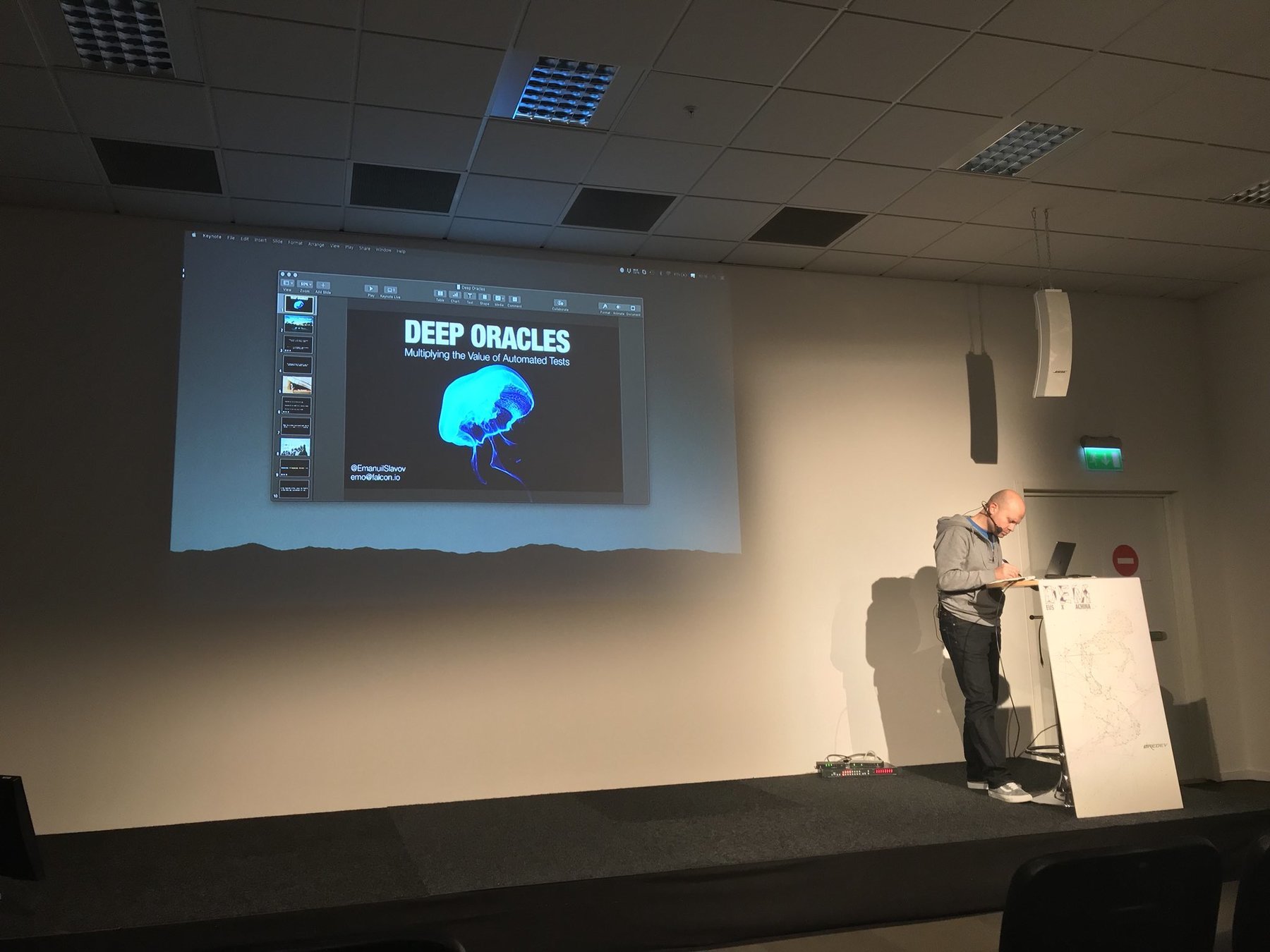

Deep Oracles

Testing in Devops for Engineers

We are going to need some tests

80 blog posts

E2E

integration

unit

Smallest pieces

E2E

integration

unit

Tape, QUnit, Mocha, Ava, Jest

Delightful JavaScript Testing

    $ npm install --save-dev jest
    $ node_modules/.bin/jest

    /* eslint-env jest */
    describe('add', () => {
      const add = require('.').add
      it('adds numbers', () => {
        expect(add(1, 2)).toBe(3)
      })
    })

Unit Tests

Short: setup and assertion

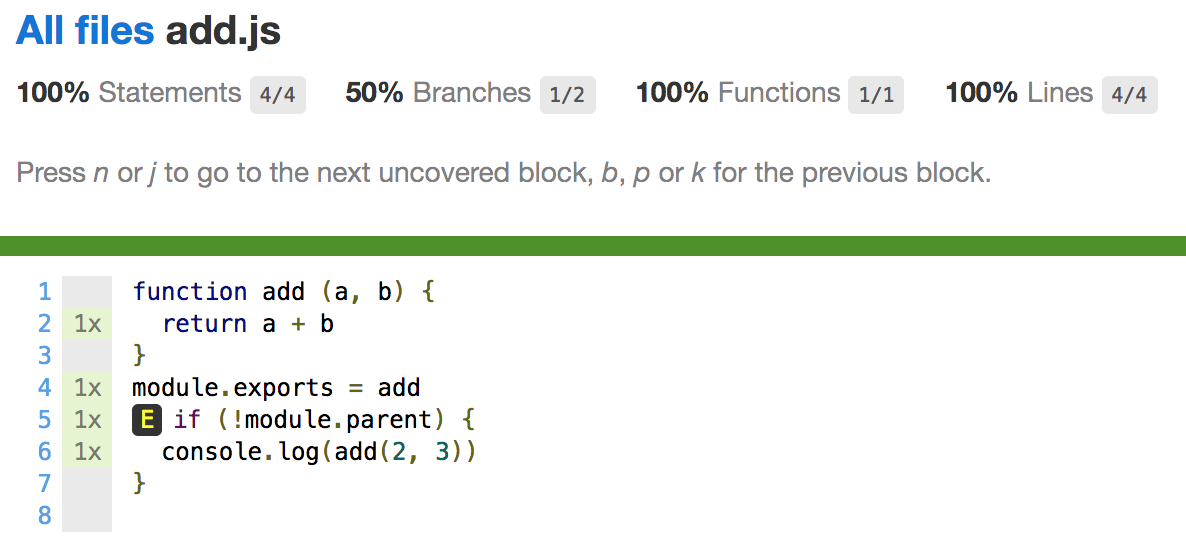

Code coverage

Focus on the code

E2E

integration

unit

Web application

- Open real browser

- Load actual app

- Interact with app like a real user

- See if it works

E2E

integration

unit

Really important to users

Really important to developers

When should I write an end-to-end test?

planning

coding

deploying

staging / QA

production

E2E

E2E

Users

planning

coding

deploying

staging / QA

production

💵

E2E

E2E

Users

💵

🐞$0

💵 💵 💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰 💵 💵

planning

coding

deploying

staging / QA

production

💵

E2E

E2E

Users

💵

🐞$0

💵 💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰

Answer: E2E

💵

💵

💵

Test the software the way

the user would use it

    it('changes the URL when "awesome" is clicked', () => {
      cy.visit('/my/resource/path')
      cy.get('.awesome-selector')
        .click()
      cy.url()
        .should('include', '/my/resource/path#awesomeness')
    })

Declarative Syntax

there are no async / awaits or promise chains

Tests should read naturally
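One way to see why such a test needs no `await`: commands can be queued first and executed later by the runner. The sketch below is a toy queue written only for illustration; `createToyQueue`, `pending` and `run` are hypothetical names, and this is not how Cypress is implemented internally.

```javascript
// Toy command queue (hypothetical, not the Cypress implementation).
// Each "command" only records what it would do; nothing runs until run().
function createToyQueue () {
  const commands = []
  const log = []
  const api = {
    visit (url) {
      commands.push(() => log.push(`visit ${url}`))
      return api
    },
    get (selector) {
      commands.push(() => log.push(`get ${selector}`))
      return api
    },
    click () {
      commands.push(() => log.push('click'))
      return api
    },
    // number of commands waiting to be executed
    pending () {
      return commands.length
    },
    // the "runner": executes queued commands in order, returns the log
    run () {
      commands.forEach((command) => command())
      return log
    }
  }
  return api
}

const toyUser = createToyQueue()
toyUser.visit('/my/resource/path')
toyUser.get('.awesome-selector').click()

const pendingBeforeRun = toyUser.pending()
const executed = toyUser.run()
console.log(pendingBeforeRun, executed)
```

The test body reads top to bottom like instructions, while the order of execution is owned by the queue rather than by promise chains in the test.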

    const puppeteer = require('puppeteer');

    (async () => {
      const browser = await puppeteer.launch();
      const page = await browser.newPage();
      await page.goto('https://example.com');
      await page.screenshot({path: 'example.png'});
      await browser.close();
    })();

Puppeteer

    test('My Test', async t => {
      await t
        .setNativeDialogHandler(() => true)
        .click('#populate')
        .click('#submit-button');

      const location = await t.eval(() => window.location);

      await t.expect(location.pathname)
        .eql('/testcafe/example/thank-you.html');
    });

TestCafe

    it('changes the URL when "awesome" is clicked', () => {
      const user = cy
      user.visit('/my/resource/path')
      user.get('.awesome-selector')
        .click()
      user.url()
        .should('include', '/my/resource/path#awesomeness')
    })

Cypress is like a real user

Kent C Dodds https://testingjavascript.com/

Dear user,

- open url localhost:3000/my/resource/path

- click on button "foo"

- check if url includes /my/resource/path#awesomeness

more details: "End-to-end Testing Is Hard - But It Doesn't Have to Be" ReactiveConf 2018 https://www.youtube.com/watch?v=swpz0H0u13k

Unit vs E2E

unit test:

- focus on code

- short

- black box

end-to-end test:

- focus on feature

- long

- external effects

When should I write a unit test vs end-to-end test?

My code is called by other code

    // math.js
    export const add = (a, b) => a + b

    // the calling code
    import {add} from './math'
    add(2, 3)

Unit Test

My code is called by the user

E2E Test

unit test:

- you are mocking way too much

end-to-end test:

(enzyme, js-dom)

unit test

end-to-end test:

- The test does not behave like a user

unit test:

- focus on X

- gives you confidence

- runs locally and on CI

end-to-end test:

- focus on X

- gives you confidence

- runs locally and on CI

commonalities

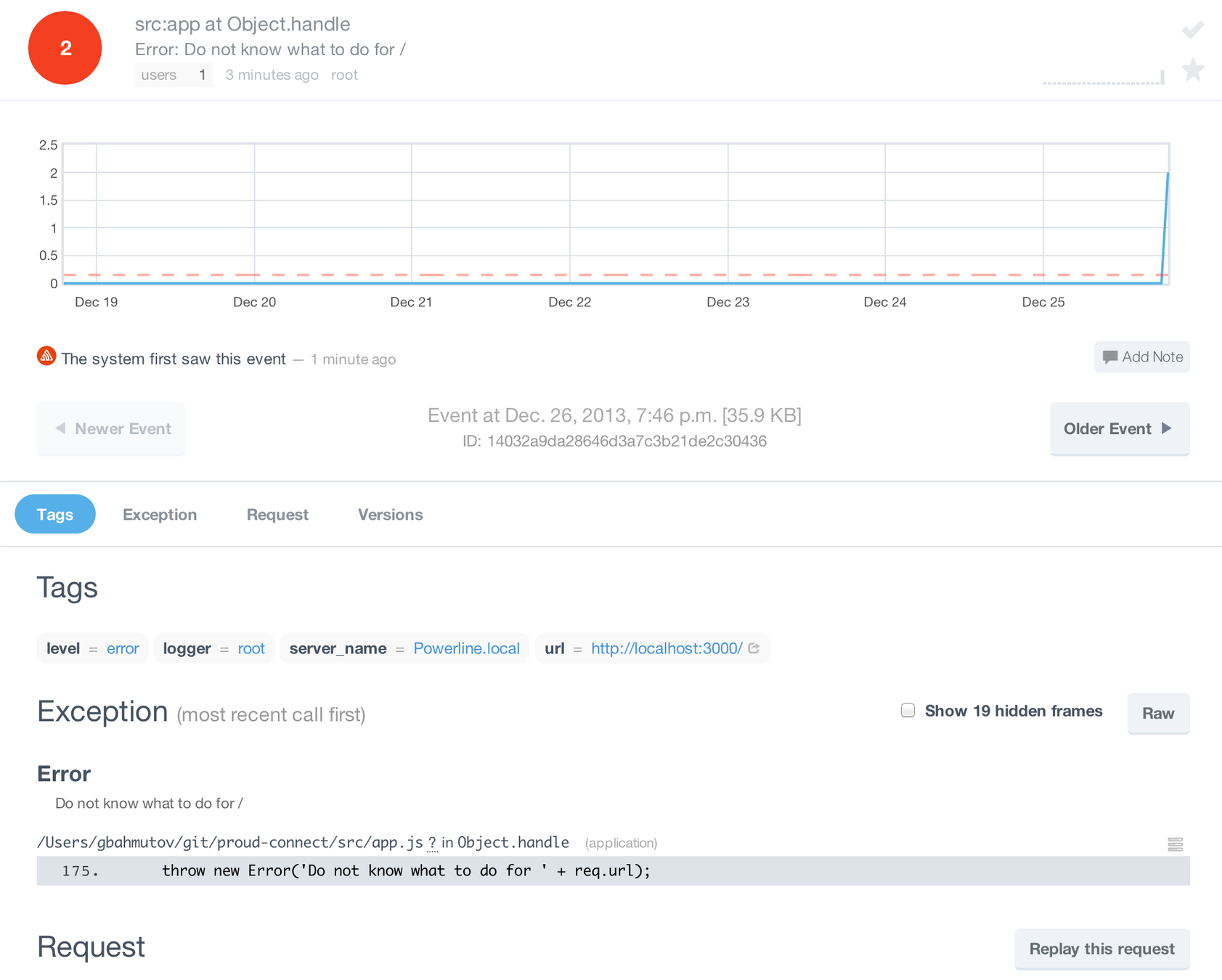

Crashes will happen

image source: http://ktla.com/2016/04/02/small-plane-crashes-into-suv-on-15-freeway-in-san-diego-county/

undefined is not a function

Sentry.io crash service

    npm install raven --save

    if (process.env.NODE_ENV === 'production') {
      var raven = require('raven');
      var SENTRY_DSN = 'https://<DSN>@app.getsentry.com/...';
      var client = new raven.Client(SENTRY_DSN);
      client.patchGlobal();
    }
    foo.bar // this Error will be reported

Stack traces

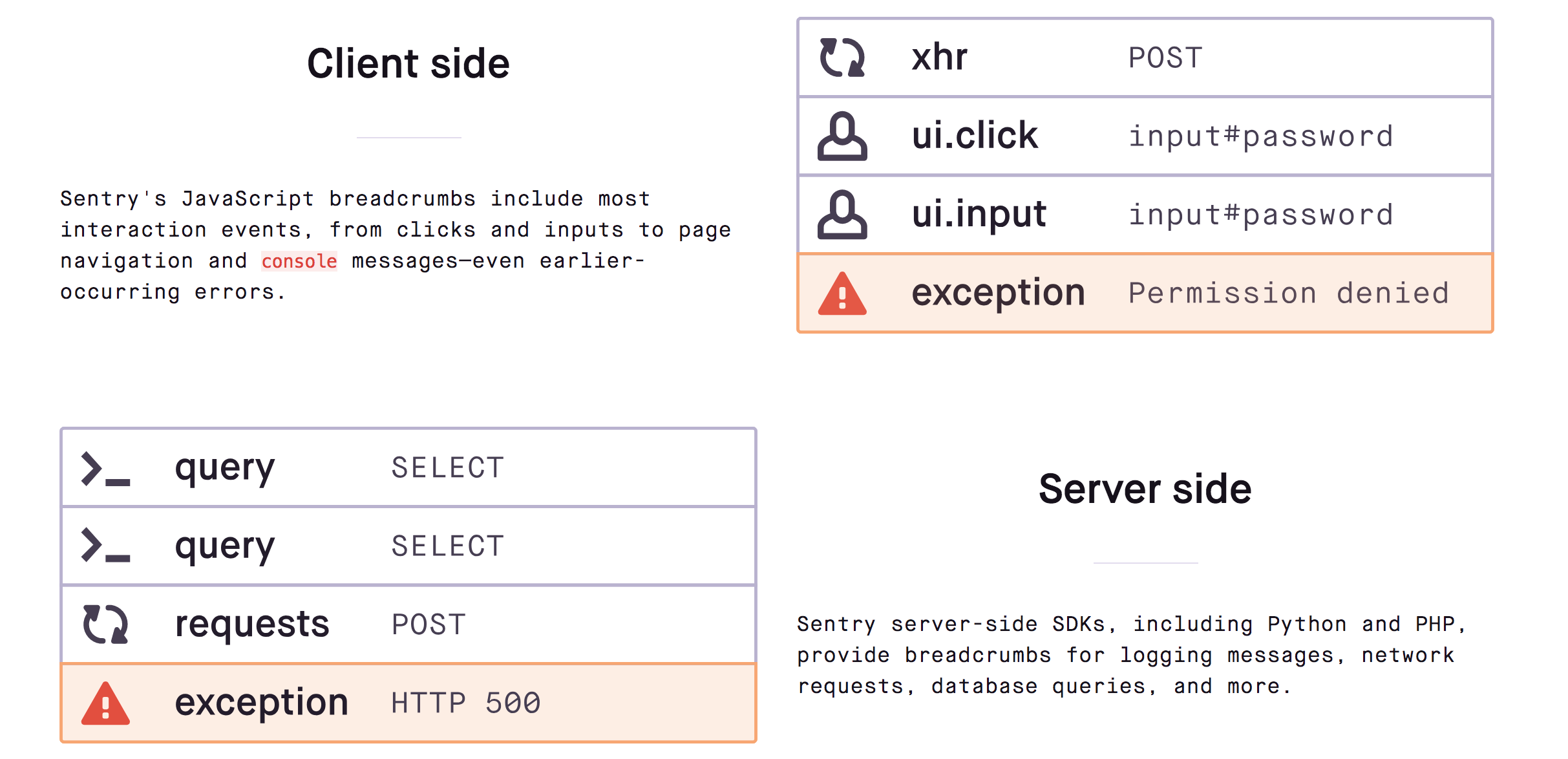

Breadcrumbs

Keep your test balance

unit / E2E tests for happy path

error handling

tests based on crashes

50%

20%

30%

Writing tests

Why this does not work:

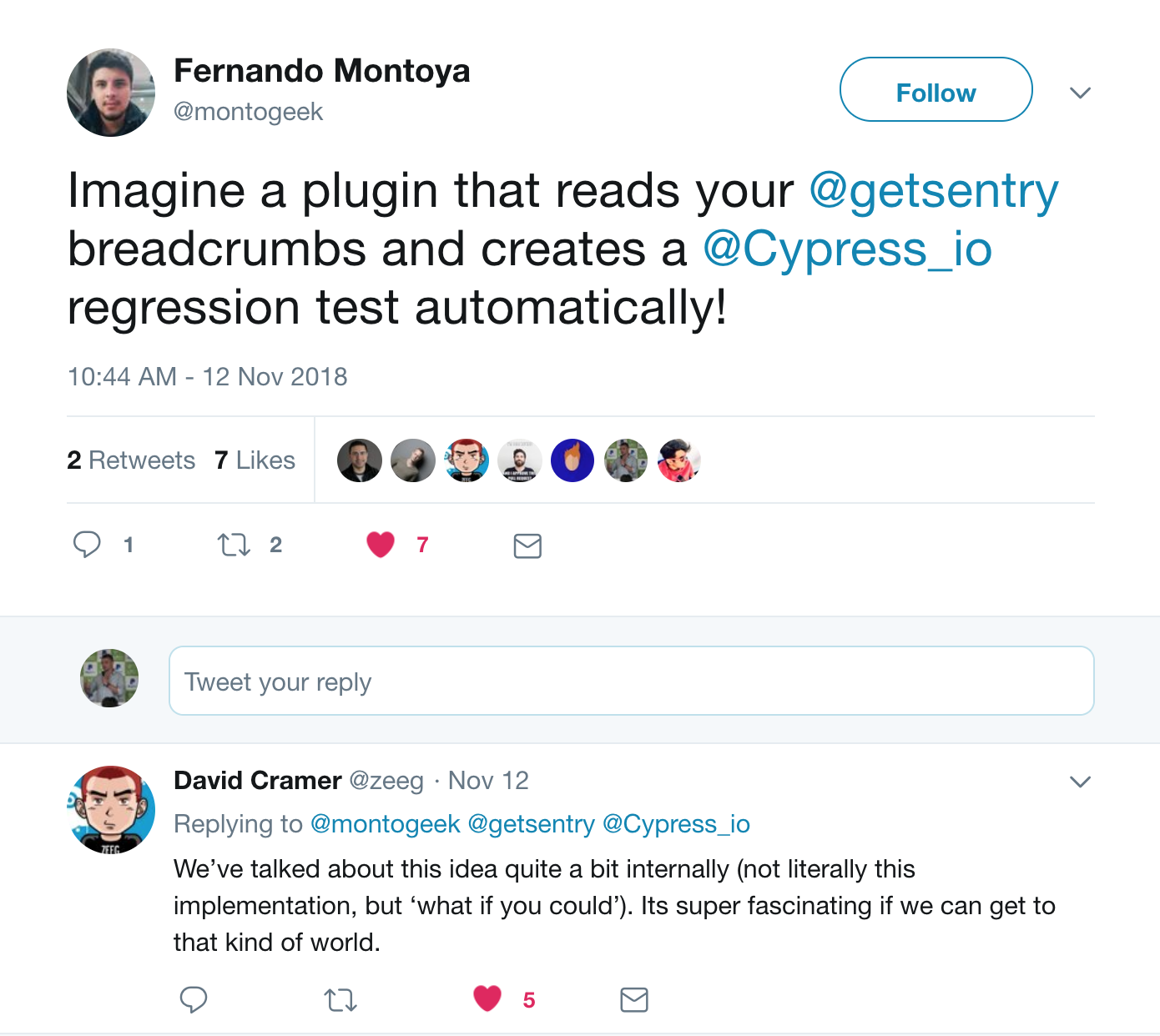

Generate test from crash breadcrumbs ...

Record user actions and generate a test...

Good GitHub issue:

What did you do?

What did you expect to happen?

What has happened?

(repeat)

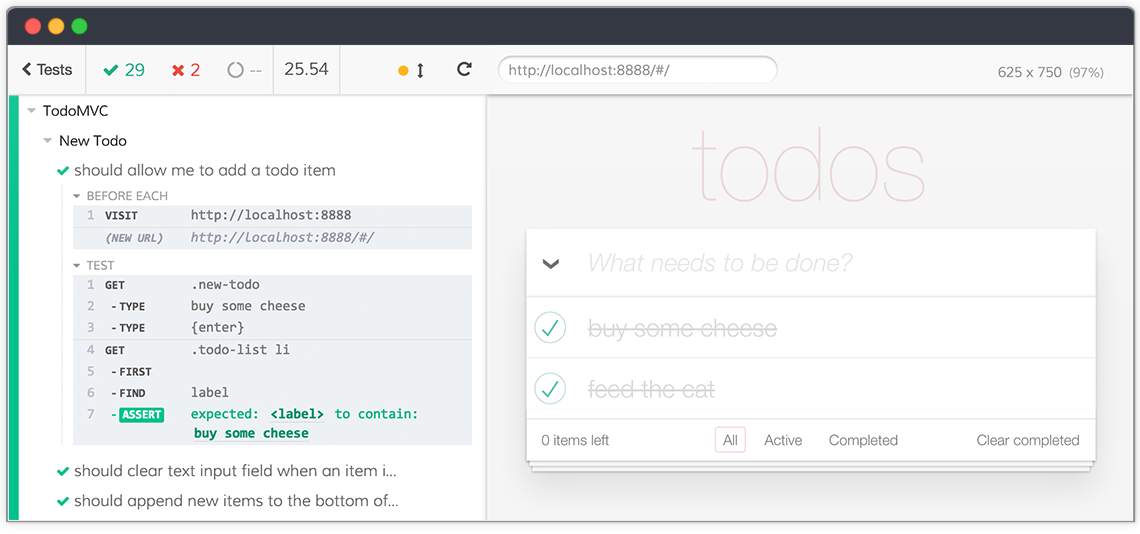

    it('adds 2 todos', () => {
      cy.visit('http://localhost:3000')
      cy.get('.new-todo')
        .type('learn testing{enter}')
      cy.get('.todo-list li')
        .should('have.length', 1)
      cy.get('.new-todo')
        .type('be cool{enter}')
      cy.get('.todo-list li')
        .should('have.length', 2)
    })

command (action)

assertion

command (action)

assertion

Good Test


Easy to record

user actions


How to generate assertions from user recording?!

If you have ideas, let me know

@bahmutov
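To make the open question concrete, here is a minimal sketch that turns a recording of user actions into Cypress test source. The recording format (`type`, `selector`, `text`, `url` fields) and the helper names `toCommand` and `generateTest` are assumptions made up for this example. The commands are easy to produce; the assertion after each command is exactly the part the generator cannot fill in.

```javascript
// Hypothetical recording of user actions (format invented for this sketch)
const recorded = [
  { type: 'visit', url: 'http://localhost:3000' },
  { type: 'type', selector: '.new-todo', text: 'learn testing{enter}' },
  { type: 'type', selector: '.new-todo', text: 'be cool{enter}' }
]

// translate a single recorded action into one line of Cypress code
const toCommand = (action) => {
  switch (action.type) {
    case 'visit':
      return `cy.visit(${JSON.stringify(action.url)})`
    case 'type':
      return `cy.get(${JSON.stringify(action.selector)}).type(${JSON.stringify(action.text)})`
    case 'click':
      return `cy.get(${JSON.stringify(action.selector)}).click()`
    default:
      throw new Error(`unknown action type ${action.type}`)
  }
}

// build the source of a test: every command is followed by a placeholder,
// because the recording does not say what the user expected to see
const generateTest = (name, actions) => {
  const lines = [`it(${JSON.stringify(name)}, () => {`]
  actions.forEach((action) => {
    lines.push(`  ${toCommand(action)}`)
    lines.push('  // TODO: assertion?')
  })
  lines.push('})')
  return lines.join('\n')
}

const generated = generateTest('adds 2 todos', recorded)
console.log(generated)
```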

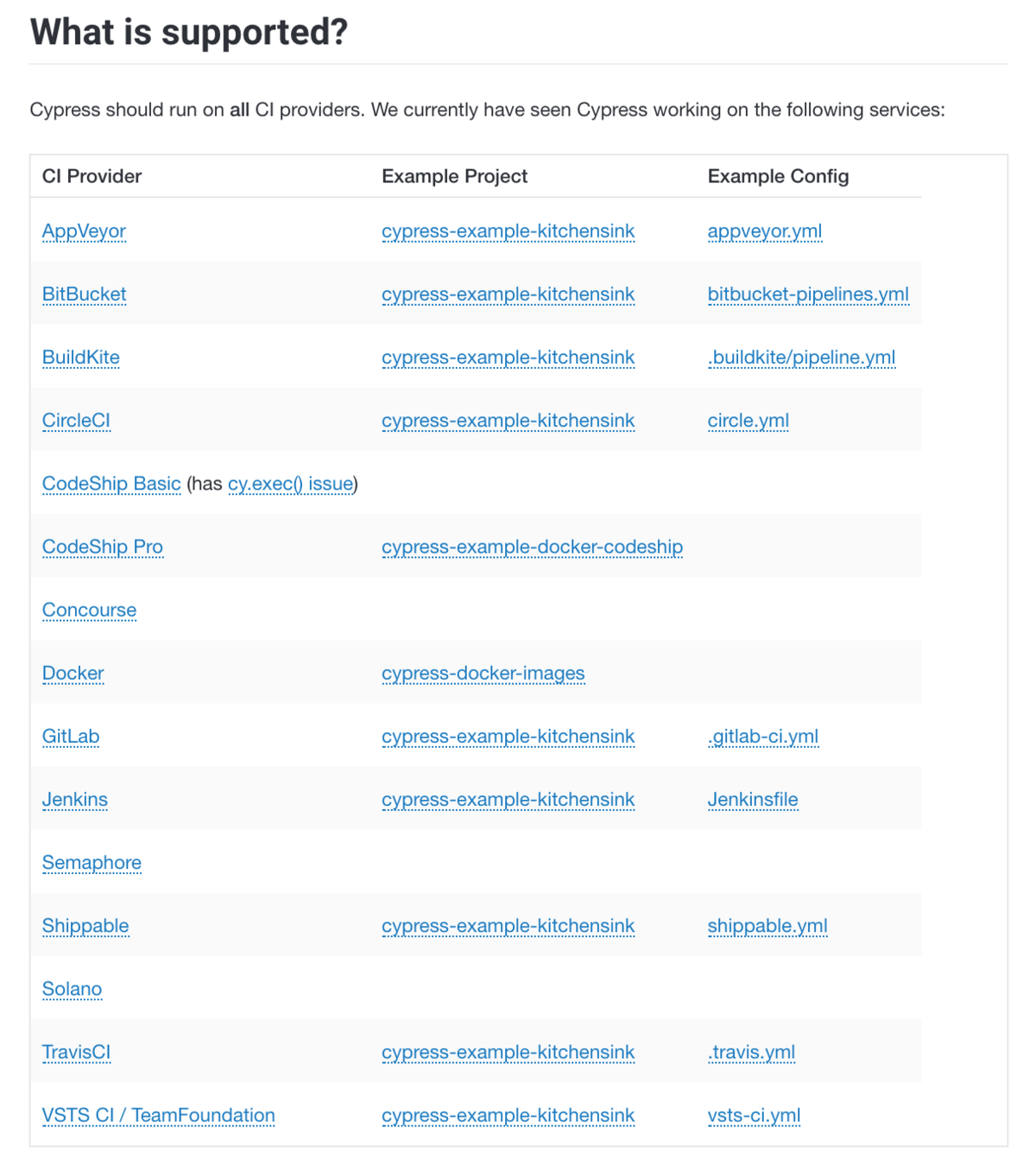

Good news about Continuous Integration

Making it easy for users is not easy

    version: 2
    jobs:
      test:
        docker:
          - image: cypress/base:10
        steps:
          - checkout
          # restore folders with npm dependencies and Cypress binary
          - restore_cache:
              keys:
                - cache-{{ checksum "package.json" }}
          # install npm dependencies and Cypress binary
          # if they were cached, this step is super quick
          - run:
              name: Install dependencies
              command: npm ci
          - run: npm run cy:verify
          # save npm dependencies and Cypress binary for future runs
          - save_cache:
              key: cache-{{ checksum "package.json" }}
              paths:
                - ~/.npm
                - ~/.cache
          # start server before starting tests
          - run:
              command: npm start
              background: true
          - run: npm run e2e:record
    workflows:
      version: 2
      build:
        jobs:
          - test

Docker image

typical CI config file

Highlighted in turn in this config: Docker image, caching, install, app and tests

    defaults: &defaults
      working_directory: ~/app
      docker:
        - image: cypress/browsers:chrome67

    version: 2
    jobs:
      build:
        <<: *defaults
        steps:
          - checkout
          # find compatible cache from previous build,
          # it should have same dependencies installed from package.json checksum
          - restore_cache:
              keys:
                - cache-{{ .Branch }}-{{ checksum "package.json" }}
          - run:
              name: Install Dependencies
              command: npm ci
          # run verify and then save cache.
          # this ensures that the Cypress verified status is cached too
          - run: npm run cy:verify
          # save new cache folder if needed
          - save_cache:
              key: cache-{{ .Branch }}-{{ checksum "package.json" }}
              paths:
                - ~/.npm
                - ~/.cache
          - run: npm run types
          - run: npm run stop-only
          # all other test jobs will run AFTER this build job finishes
          # to avoid reinstalling dependencies, we persist the source folder "app"
          # and the Cypress binary to workspace, which is the fastest way
          # for Circle jobs to pass files
          - persist_to_workspace:
              root: ~/
              paths:
                - app
                - .cache/Cypress

      4x-electron:
        <<: *defaults
        # tell CircleCI to execute this job on 4 machines simultaneously
        parallelism: 4
        steps:
          - attach_workspace:
              at: ~/
          - run:
              command: npm start
              background: true
          # runs Cypress test in load balancing (parallel) mode
          # and groups them in Cypress Dashboard under name "4x-electron"
          - run: npm run e2e:record -- --parallel --group $CIRCLE_JOB

    workflows:
      version: 2
      # this workflow has 4 jobs to show case Cypress --parallel and --group flags
      # "build" installs NPM dependencies so other jobs don't have to
      #   └ "1x-electron" runs all specs just like Cypress pre-3.1.0 runs them
      #   └ "4x-electron" job load balances all specs across 4 CI machines
      #   └ "2x-chrome" load balances all specs across 2 CI machines and uses Chrome browser
      build_and_test:
        jobs:
          - build
          # this group "4x-electron" will load balance all specs
          # across 4 CI machines
          - 4x-electron:
              requires:
                - build

Parallel config is ... more complicated

typical CI config file
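What "load balancing all specs across N machines" means can be sketched with a simple greedy split. This is only an illustration of the idea: the Cypress Dashboard's actual balancing strategy is not described in these slides, and the spec names, durations and the `balance` helper below are all made up.

```javascript
// Hypothetical spec files with previously measured durations (seconds)
const specs = [
  { name: 'actions.spec.js', seconds: 60 },
  { name: 'network.spec.js', seconds: 30 },
  { name: 'todo.spec.js', seconds: 20 },
  { name: 'window.spec.js', seconds: 10 }
]

// Greedy split: take the longest remaining spec and give it
// to the machine with the smallest total so far.
// An assumption for illustration, not the Dashboard's algorithm.
function balance (specList, machines) {
  const buckets = Array.from({ length: machines }, () => ({ total: 0, specs: [] }))
  const longestFirst = [...specList].sort((a, b) => b.seconds - a.seconds)
  for (const spec of longestFirst) {
    const leastBusy = buckets.reduce((min, b) => (b.total < min.total ? b : min))
    leastBusy.specs.push(spec.name)
    leastBusy.total += spec.seconds
  }
  return buckets
}

const twoMachines = balance(specs, 2)
console.log(twoMachines)
```

With these made-up numbers both machines end up with 60 seconds of work, instead of one machine running all 120 seconds serially.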

    # first, install Cypress, then run all tests (in parallel)
    stages:
      - build
      - test

    # to cache both npm modules and Cypress binary we use environment variables
    # to point at the folders we can list as paths in "cache" job settings
    variables:
      npm_config_cache: "$CI_PROJECT_DIR/.npm"
      CYPRESS_CACHE_FOLDER: "$CI_PROJECT_DIR/cache/Cypress"

    # cache using branch name
    # https://gitlab.com/help/ci/caching/index.md
    cache:
      key: ${CI_COMMIT_REF_SLUG}
      paths:
        - .npm
        - cache/Cypress
        - node_modules

    # this job installs NPM dependencies and Cypress
    install:
      image: cypress/base:10
      stage: build
      script:
        - npm ci
        - $(npm bin)/print-env CI
        - npm run cy:verify

    # all jobs that actually run tests can use the same definition
    .job_template: &job
      image: cypress/base:10
      stage: test
      script:
        # print CI environment variables for reference
        - $(npm bin)/print-env CI
        # start the server in the background
        - npm run start:ci &
        # run Cypress test in load balancing mode, pass id to tie jobs together
        - npm run e2e:record -- --parallel --ci-build-id $CI_PIPELINE_ID --group electrons

    # actual job definitions
    # all steps are the same, they come from the template above
    electrons-1:
      <<: *job
    electrons-2:
      <<: *job
    electrons-3:
      <<: *job
    electrons-4:
      <<: *job
    electrons-5:
      <<: *job

    pipeline {

      agent {
        // this image provides everything needed to run Cypress
        docker {
          image 'cypress/base:10'
        }
      }

      stages {
        // first stage installs node dependencies and Cypress binary
        stage('build') {
          steps {
            // there are a few default environment variables on Jenkins
            // on local Jenkins machine (assuming port 8080) see
            // http://localhost:8080/pipeline-syntax/globals#env
            echo "Running build ${env.BUILD_ID} on ${env.JENKINS_URL}"
            sh 'npm ci'
            sh 'npm run cy:verify'
          }
        }

        stage('start local server') {
          steps {
            // start local server in the background
            // we will shut it down in "post" command block
            sh 'nohup npm start &'
          }
        }

        // this stage runs end-to-end tests, and each agent uses the workspace
        // from the previous stage
        stage('cypress parallel tests') {
          environment {
            // we will be recording test results and video on Cypress dashboard
            // to record we need to set an environment variable
            // we can load the record key variable from credentials store
            // see https://jenkins.io/doc/book/using/using-credentials/
            CYPRESS_RECORD_KEY = credentials('cypress-example-kitchensink-record-key')
            // because parallel steps share the workspace they might race to delete
            // screenshots and videos folders. Tell Cypress not to delete these folders
            CYPRESS_trashAssetsBeforeRuns = 'false'
          }

          // https://jenkins.io/doc/book/pipeline/syntax/#parallel
          parallel {
            // start several test jobs in parallel, and they all
            // will use Cypress Dashboard to load balance any found spec files
            stage('tester A') {
              steps {
                echo "Running build ${env.BUILD_ID}"
                sh "npm run e2e:record:parallel"
              }
            }

            // second tester runs the same command
            stage('tester B') {
              steps {
                echo "Running build ${env.BUILD_ID}"
                sh "npm run e2e:record:parallel"
              }
            }
          }
        }
      }

      post {
        // shutdown the server running in the background
        always {
          echo 'Stopping local server'
          sh 'pkill -f http-server'
        }
      }
    }

    language: node_js

    node_js:
      # Node 10.3+ includes npm@6 which has good "npm ci" command
      - 10.8

    cache:
      # cache both npm modules and Cypress binary
      directories:
        - ~/.npm
        - ~/.cache

    install:
      - npm ci
      - npm run cy:verify

    defaults: &defaults
      script:
        # print all Travis environment variables for debugging
        - $(npm bin)/print-env TRAVIS
        - npm start -- --silent &
        - npm run cy:run -- --record --parallel --group $STAGE_NAME
        # after all tests finish running we need
        # to kill all background jobs (like "npm start &")
        - kill $(jobs -p) || true

    jobs:
      include:
        # we have multiple jobs to execute using just a single stage
        # but we can pass group name via environment variable to Cypress test runner
        - stage: test
          env:
            - STAGE_NAME=1x-electron
          <<: *defaults
        # run tests in parallel by including several test jobs with same name variable
        - stage: test
          env:
            - STAGE_NAME=4x-electron
          <<: *defaults
        - stage: test
          env:
            - STAGE_NAME=4x-electron
          <<: *defaults
        - stage: test
          env:
            - STAGE_NAME=4x-electron
          <<: *defaults
        - stage: test
          env:
            - STAGE_NAME=4x-electron
          <<: *defaults

Different CIs

Copy / paste / tweak until CI passes

CircleCI Orbs

Reusable CI configuration code

CircleCI Orbs

    version: 2.1
    orbs:
      cypress: cypress-io/cypress@1.0.0
    workflows:
      build:
        jobs:
          - cypress/run

Versioned namespaced package

"run" command defined in cypress orb

CircleCI Orbs

    version: 2.1
    orbs:
      cypress: cypress-io/cypress@1.0.0
    workflows:
      build:
        jobs:
          - cypress/install
          - cypress/run:
              requires:
                - cypress/install
              record: true
              parallel: true
              parallelism: 10
              start: 'npm start'

Parallel run scenario

Job parameters

Orbs: best thing in CI since Docker

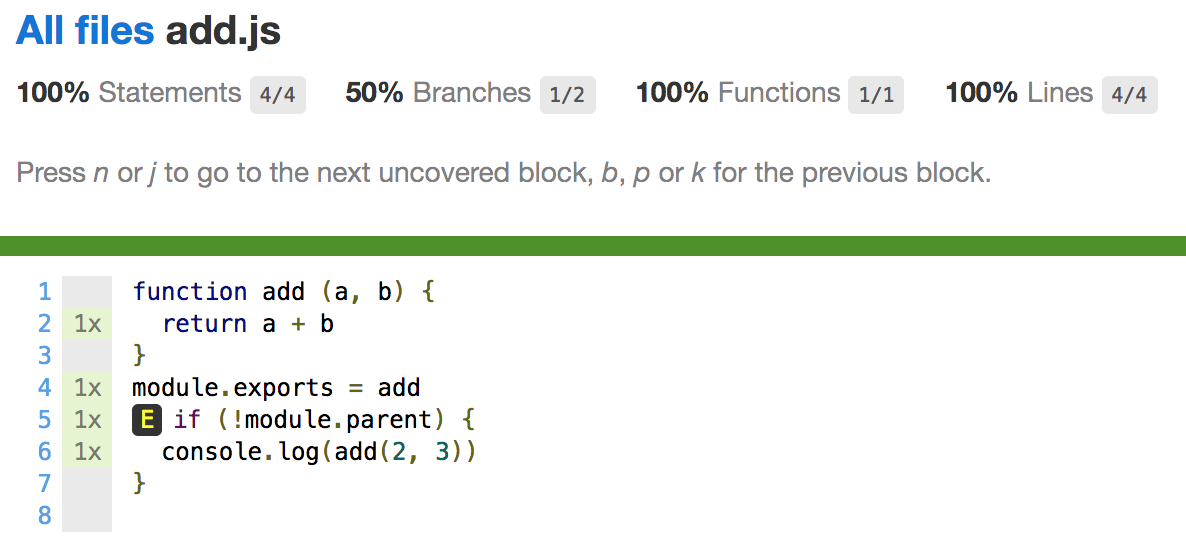

100% code coverage

Tools: Istanbul, NYC

Coverage is hard

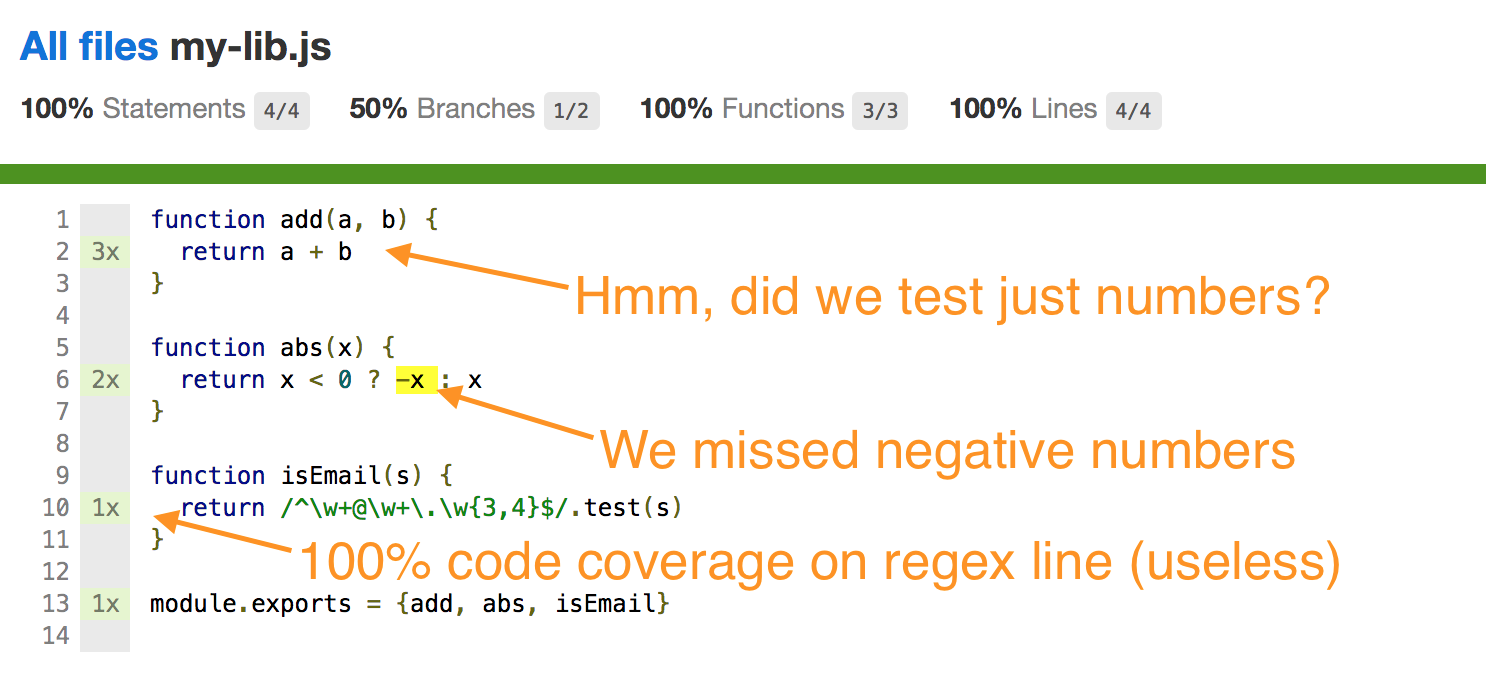

Code coverage

is tricky

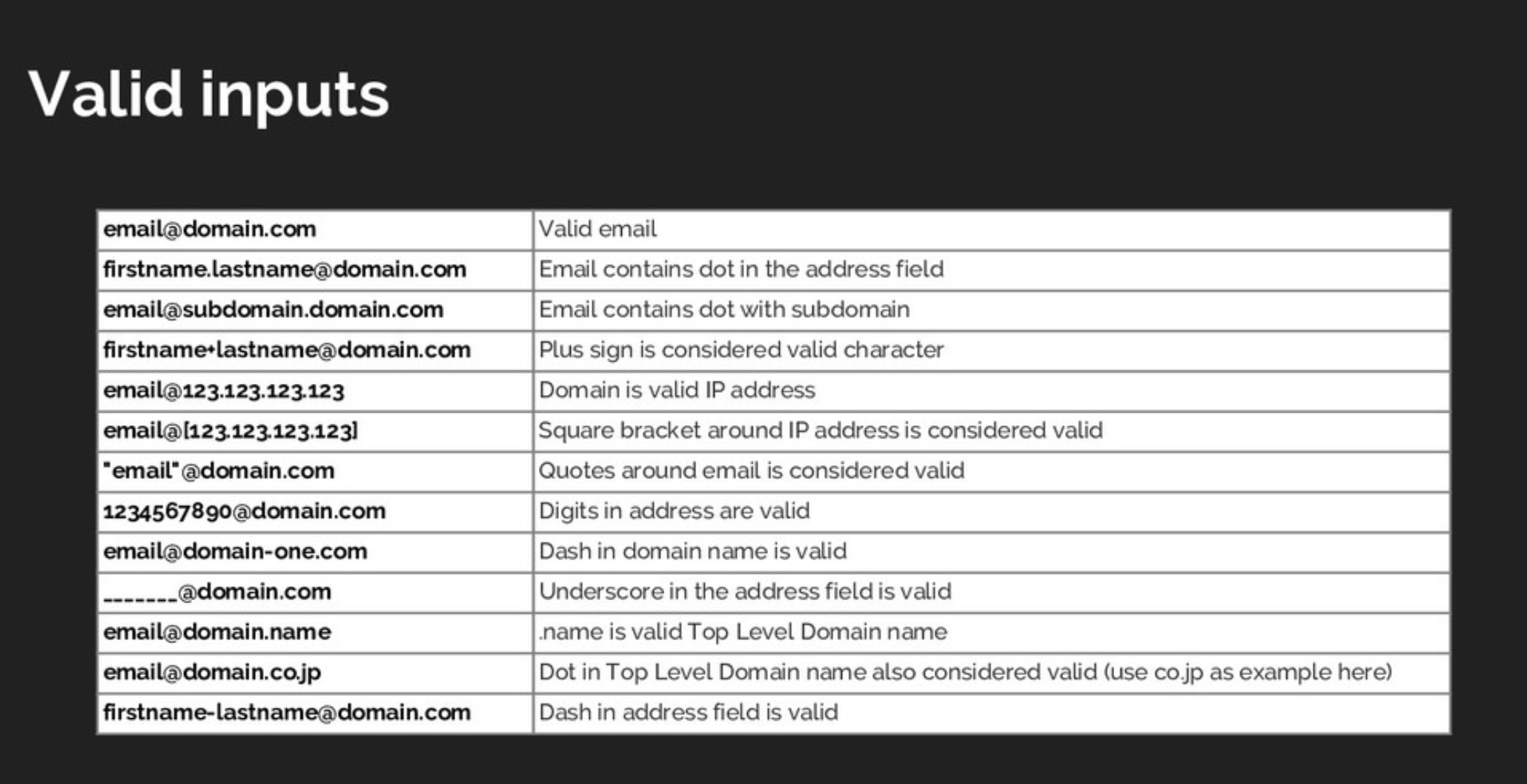

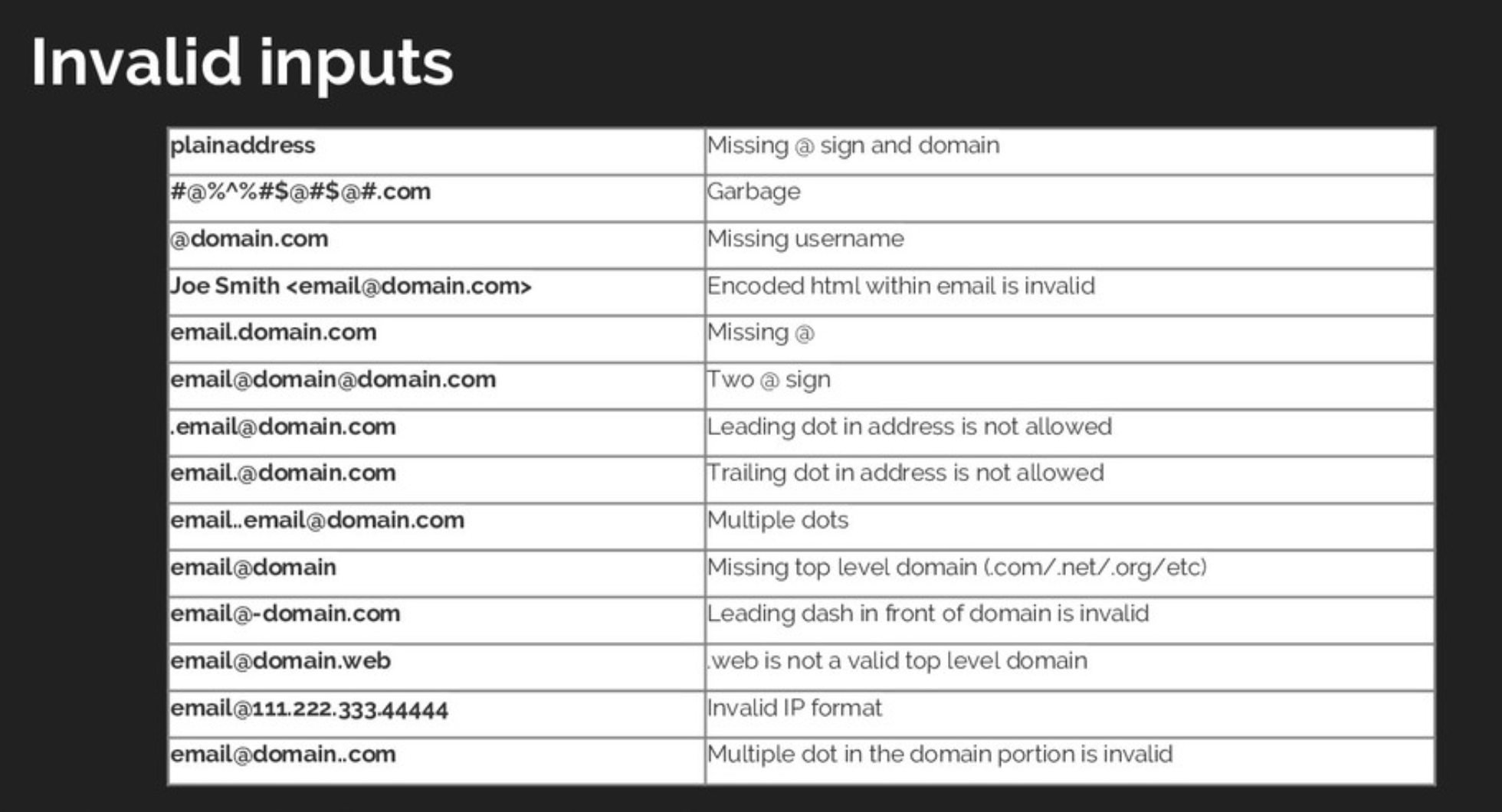

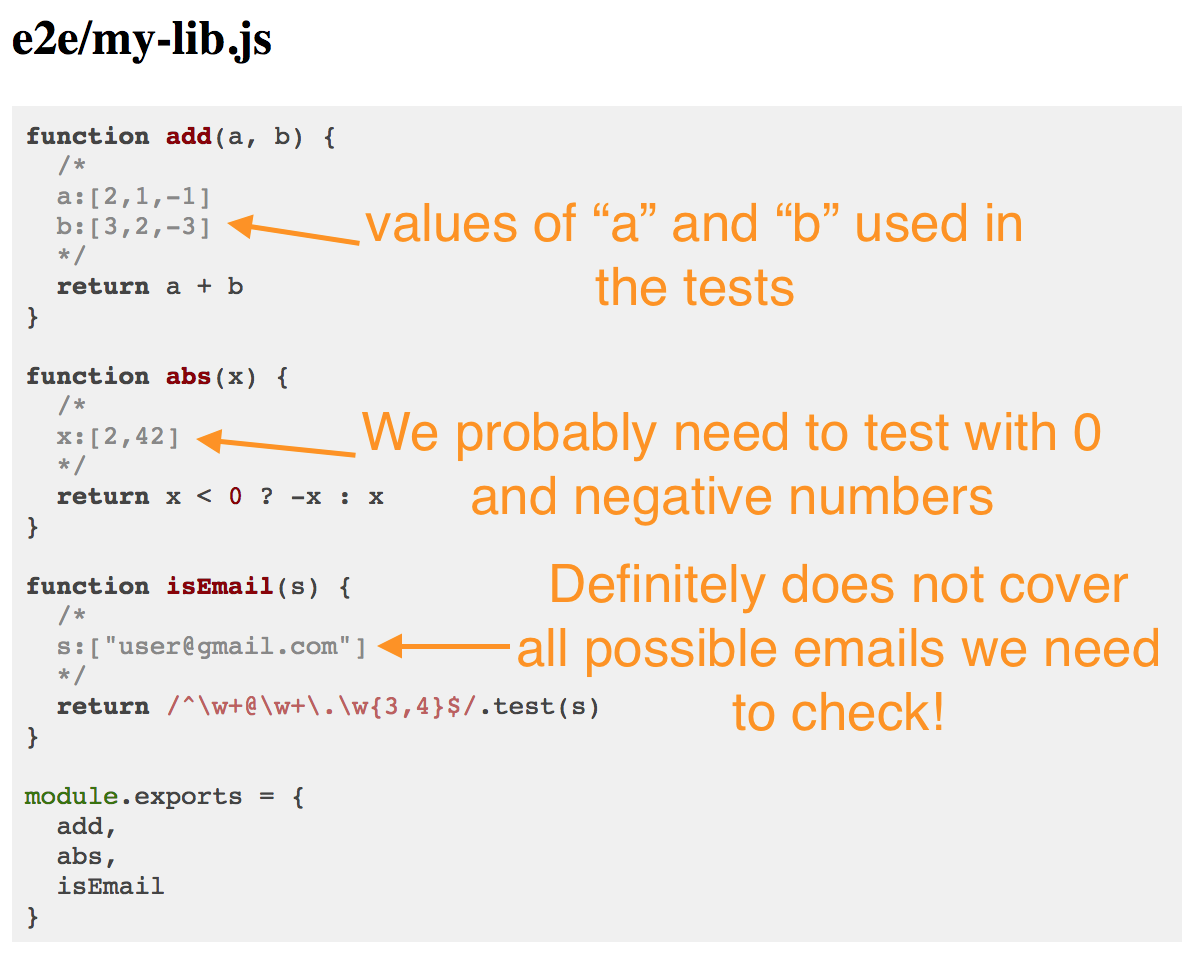

const isEmail = (s) =>

/^\w+@\w+\.\w{3,4}$/.test(s)

// 1 test = 100% code coverage

(?:[a-z0-9!#$%&'*+/=?^_`{|}~-]+(?:\.[a-z0-9!#$%&'*+/=?^_`{|}~-]+)*|"(?:[\x01-\x08\x0b\x0c\x0e-\x1f\x21\x23-\x5b\x5d-\x7f]|\\[\x01-\x09\x0b\x0c\x0e-\x7f])*")@(?:(?:[a-z0-9](?:[a-z0-9-]*[a-z0-9])?\.)+[a-z0-9](?:[a-z0-9-]*[a-z0-9])?|\[(?:(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?|[a-z0-9-]*[a-z0-9]:(?:[\x01-\x08\x0b\x0c\x0e-\x1f\x21-\x5a\x53-\x7f]|\\[\x01-\x09\x0b\x0c\x0e-\x7f])+)\])
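The gap between the two regular expressions above can be made visible by feeding the short `isEmail` a handful of inputs. A single call already executes every line of it, so code coverage reports 100%, yet most of the interesting data is never tried. The sample addresses below are made up for this sketch.

```javascript
const isEmail = (s) => /^\w+@\w+\.\w{3,4}$/.test(s)

// Any single call gives 100% code coverage of isEmail.
// The other inputs add data coverage, not code coverage.
const samples = [
  'me@foo.com', // the one "happy path" test
  'first.last@foo.com', // dot in the local part
  'me@foo.io', // two-letter top-level domain
  'me@foo-bar.com', // hyphen in the domain
  'not an email'
]

const results = samples.map((input) => ({ input, accepted: isEmail(input) }))
console.log(results)
```

Only the first sample is accepted; the next three are plausible addresses that this simple pattern rejects, which a coverage report based on executed lines would never reveal.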

Code coverage

vs

Data coverage

    mocha -r data-cover spec.js

I don't think code coverage is useful for end-to-end tests

You cannot test every part of your car by driving around

user interface

application code

vendor code

polyfills

there is probably a lot of app code unreachable through the UI alone

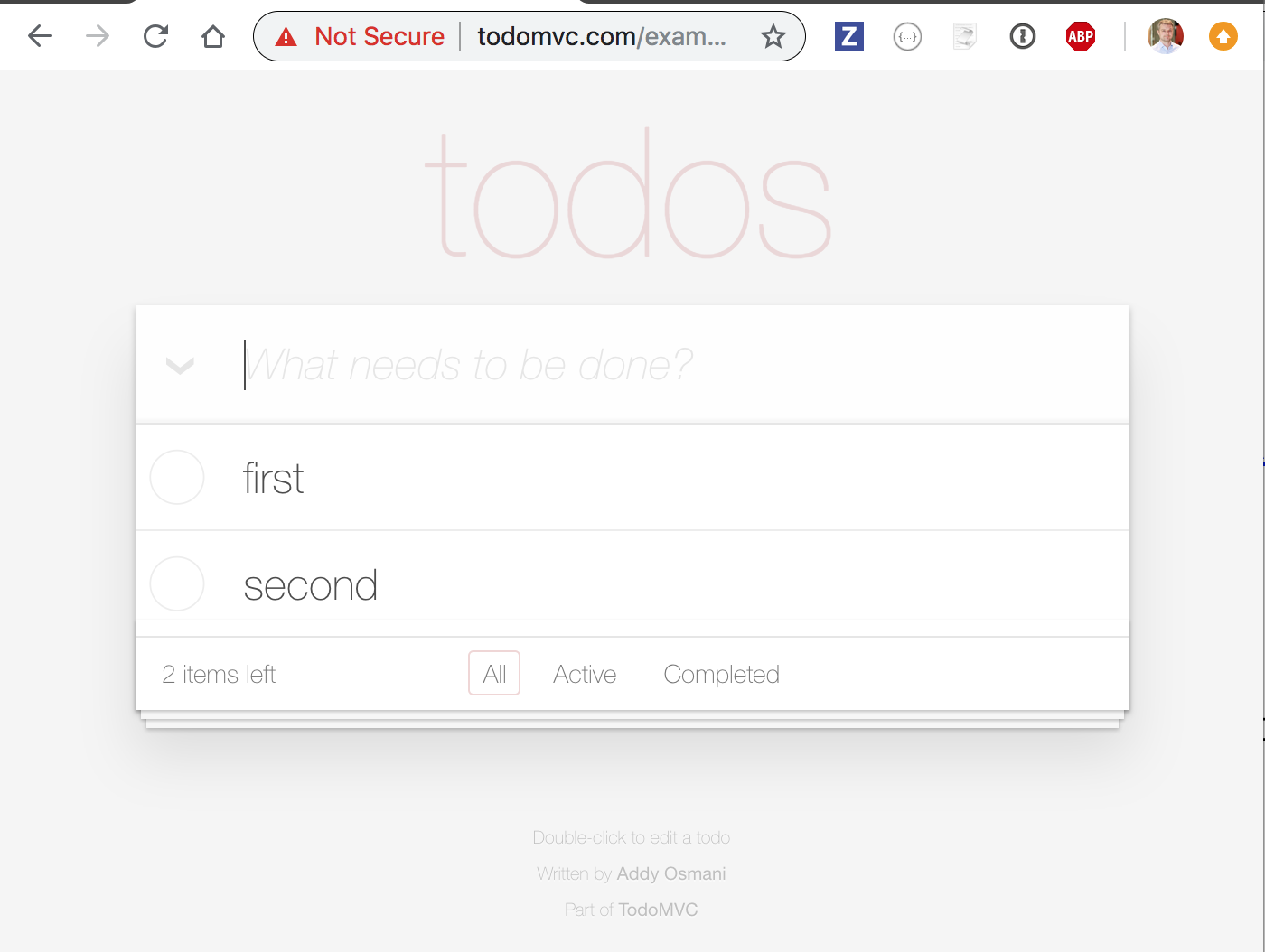

beforeEach(() => {

cy.visit('/')

})

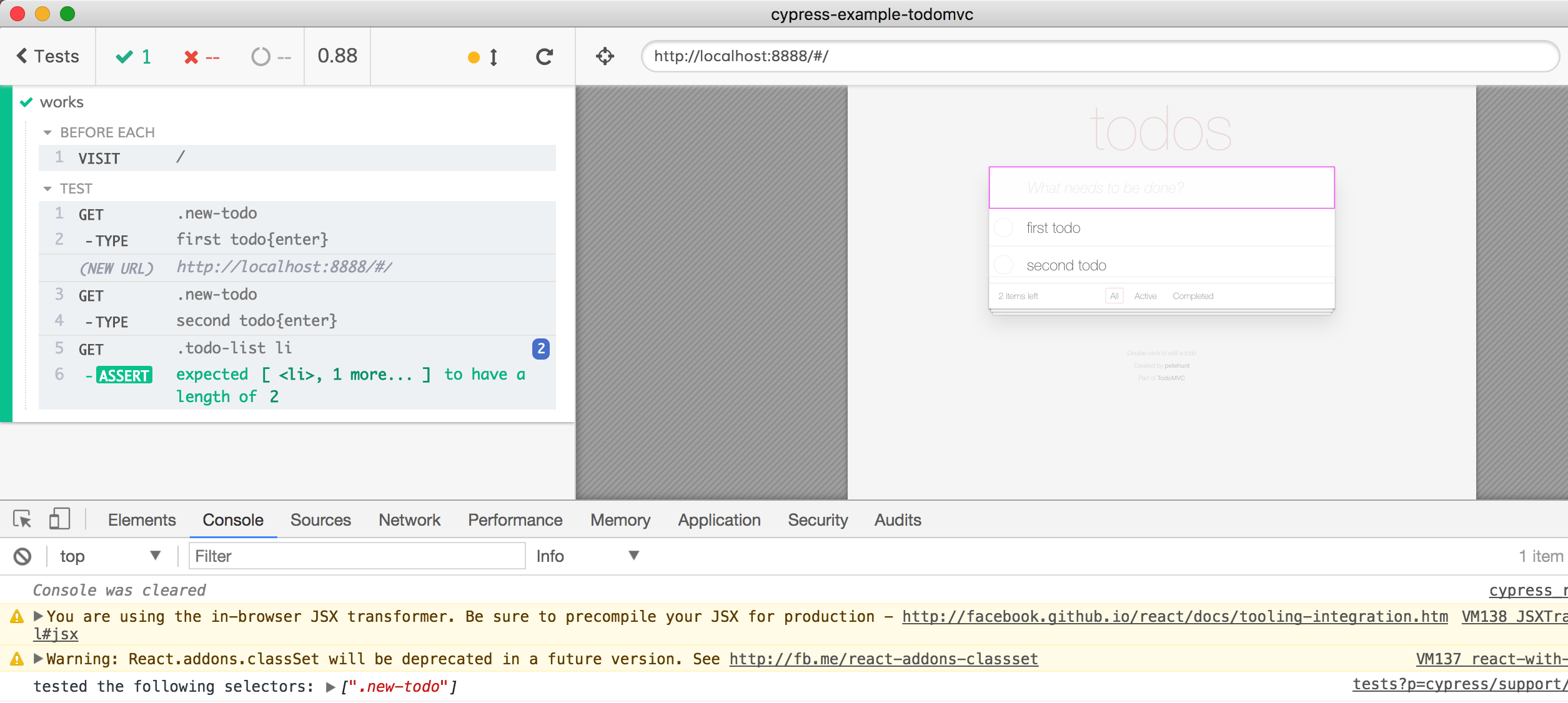

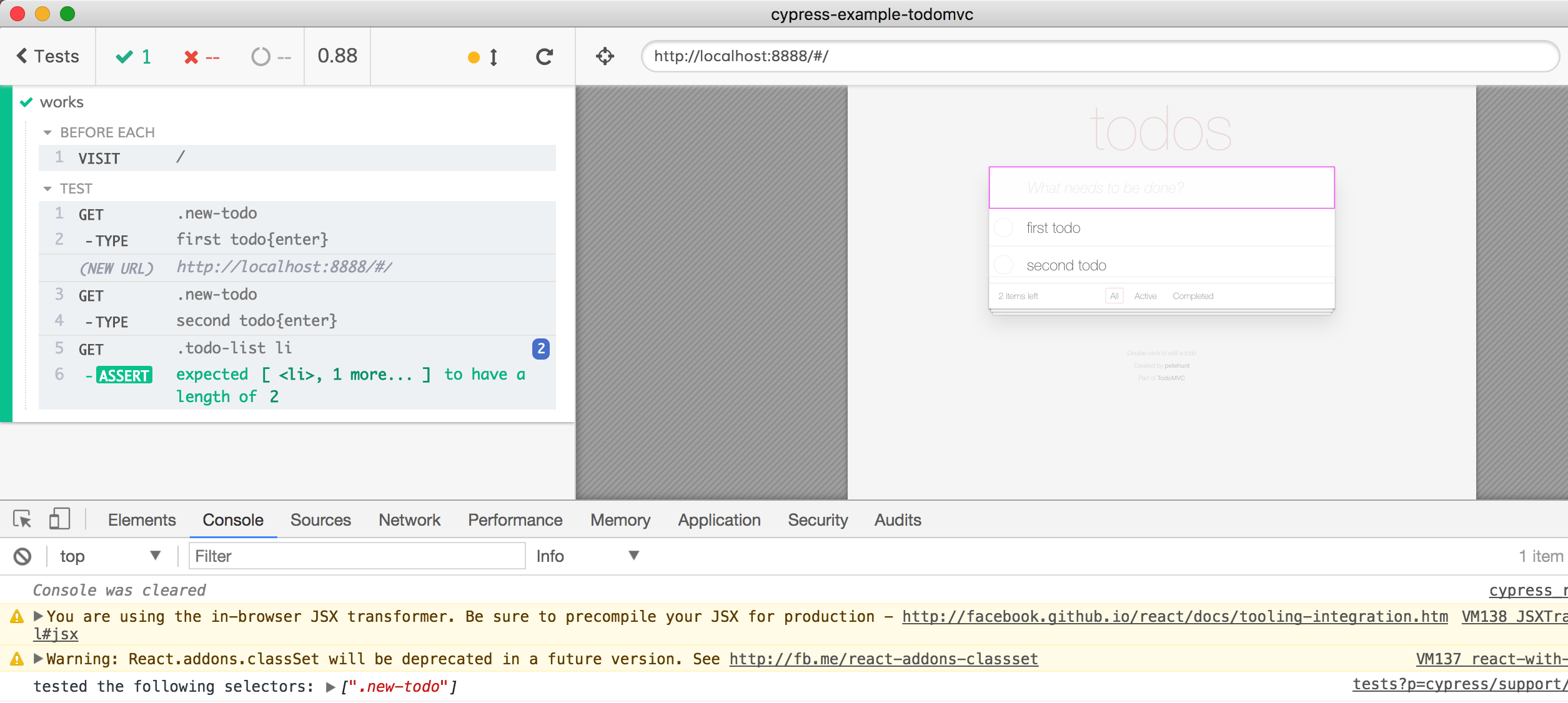

    it('works', function () {
      cy.get('.new-todo').type('first todo{enter}')
      cy.get('.new-todo').type('second todo{enter}')
      cy.get('.todo-list li').should('have.length', 2)
    })

What does this test cover?

    Cypress.Commands.overwrite('type',
      (type, $el, text, options) => {
        rememberSelector($el)
        return type($el, text, options)
      })

Track "cy.type" elements

Highlight tested element

Elements NOT covered by the test

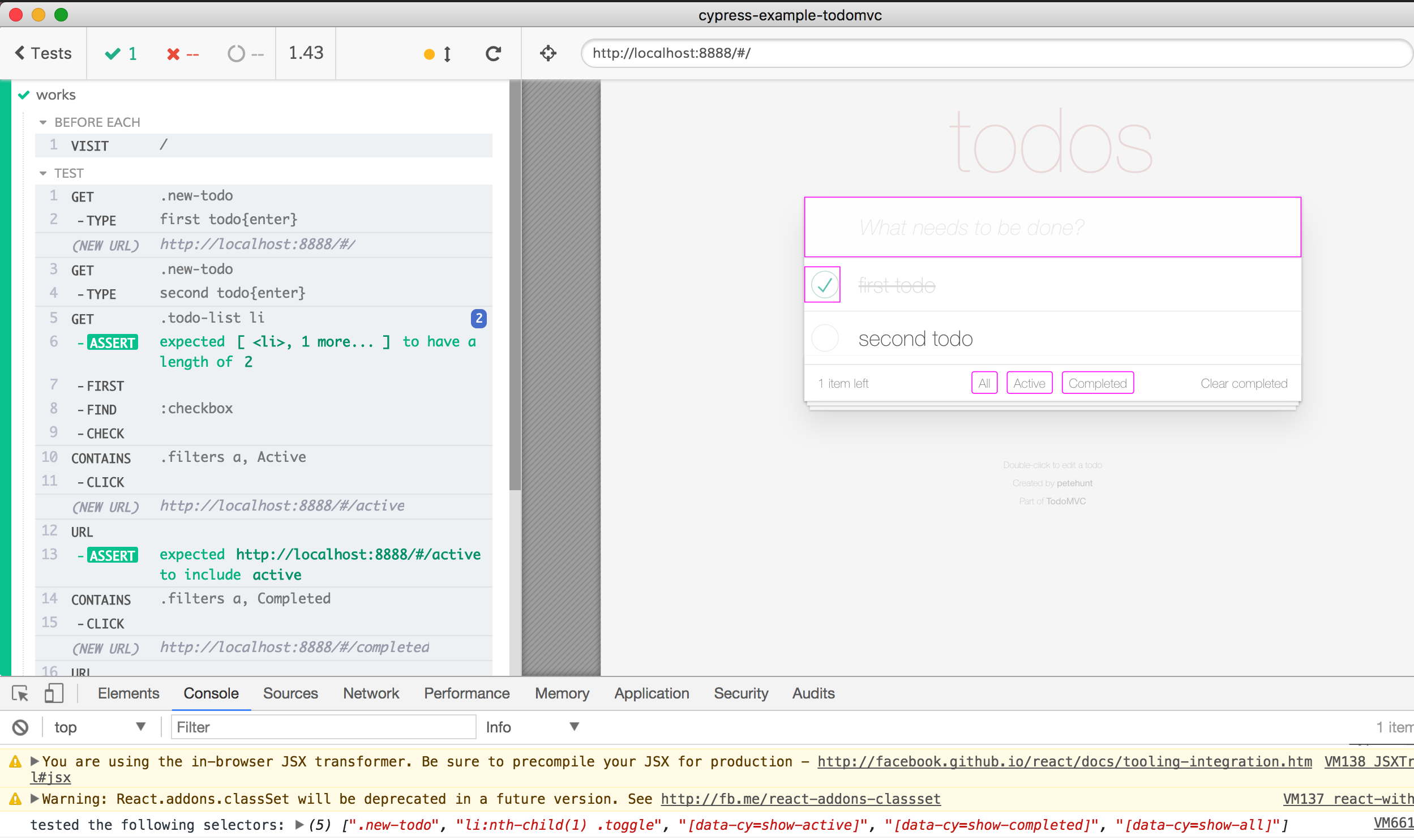
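The bookkeeping behind element coverage can be sketched without a browser. In this sketch `rememberSelector` takes a selector string rather than the jQuery element used on the slide, and the list of interactive selectors as well as the stand-in `type` function are assumptions made for illustration.

```javascript
// Hypothetical list of interactive elements in the TodoMVC page
const interactiveSelectors = ['.new-todo', '.toggle', '.filters a', '.clear-completed']

// selectors the test has interacted with so far
const touched = new Set()
const rememberSelector = (selector) => {
  touched.add(selector)
}

// stand-in for the overwritten "type" command: remember, then act
const type = (selector, text) => {
  rememberSelector(selector)
  // a real command would type the text into the element here
}

// the same interactions as the "works" test
type('.new-todo', 'first todo{enter}')
type('.new-todo', 'second todo{enter}')

const covered = interactiveSelectors.filter((s) => touched.has(s))
const notCovered = interactiveSelectors.filter((s) => !touched.has(s))
const elementCoverage = covered.length / interactiveSelectors.length
console.log({ covered, notCovered, elementCoverage })
```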

    beforeEach(() => {
      cy.visit('/')
    })

    it('works', function () {
      cy.get('.new-todo').type('first todo{enter}')
      cy.get('.new-todo').type('second todo{enter}')
      cy.get('.todo-list li').should('have.length', 2)
        .first().find(':checkbox').check()
      cy.contains('.filters a', 'Active').click()
      cy.url().should('include', 'active')
      cy.contains('.filters a', 'Completed').click()
      cy.url().should('include', 'completed')
      cy.contains('.filters a', 'All').click()
      cy.url().should('include', '#/')
    })

Extend test to cover more elements

The test did not cover "Clear completed" button

Problem: only "check" this box,

but not "uncheck"

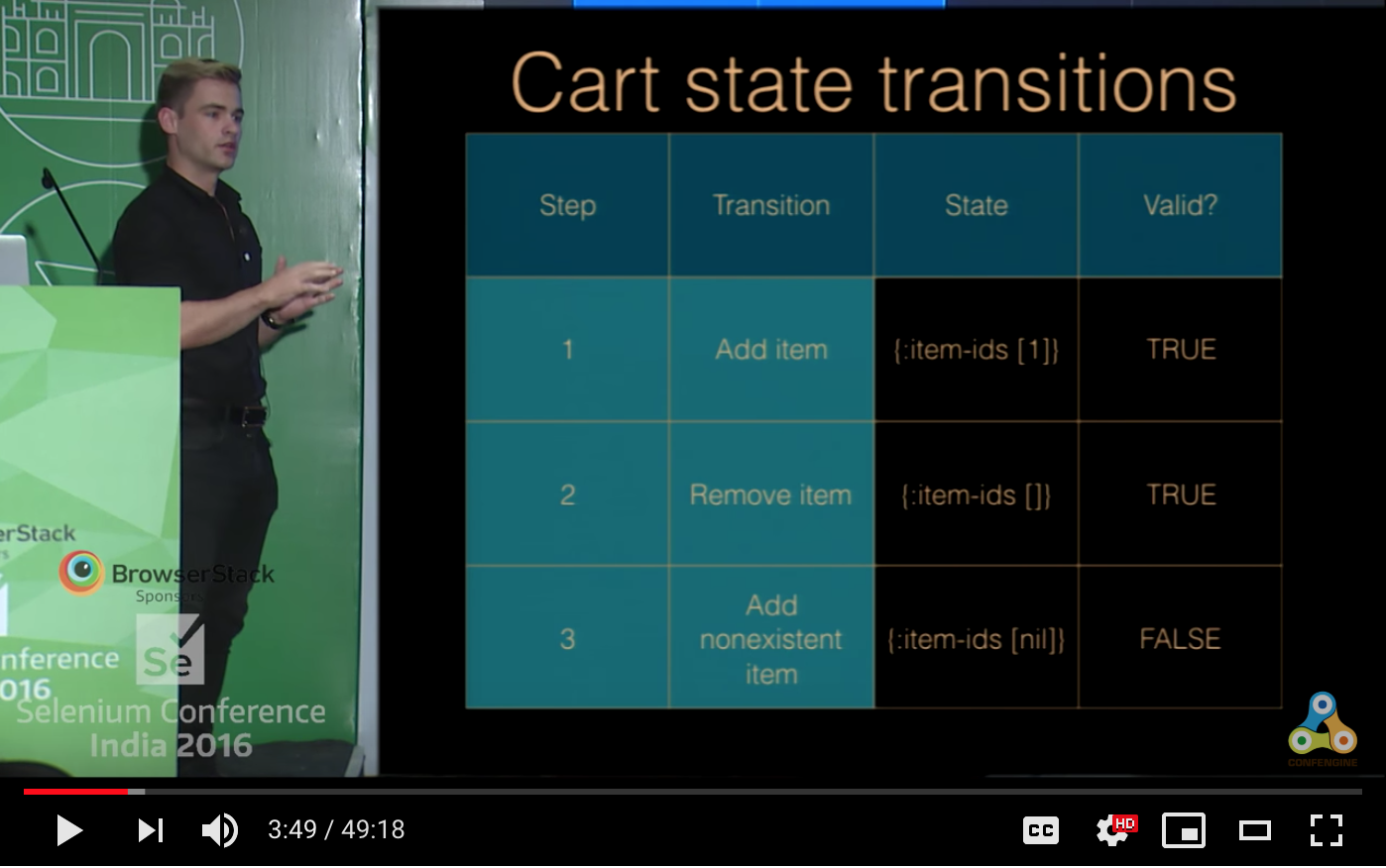

Sean Grove @sgrove

Tests should cover all important states of the app, not UI

UI = f (state)

tests = g (UI)

tests = h (state)

ReactiveConf 2018 – David Khourshid: Reactive State Machines and Statecharts

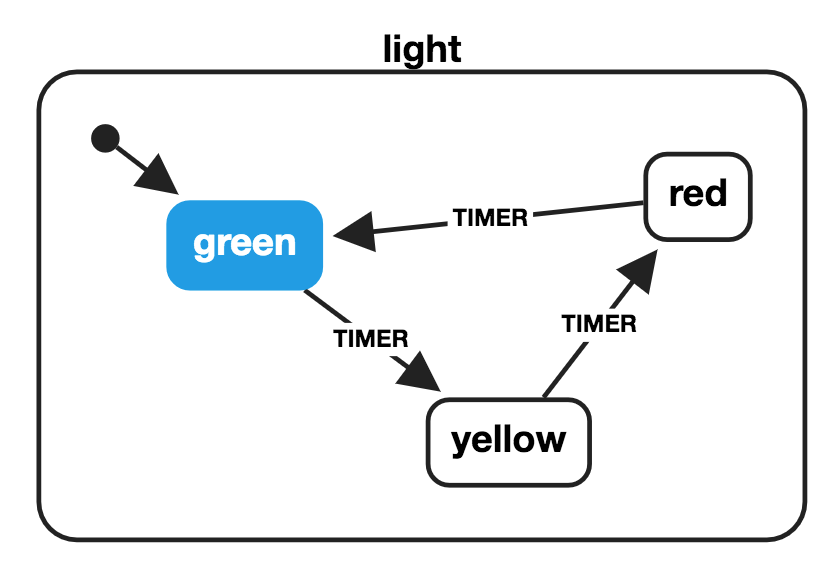

    import { Machine } from 'xstate';

    const lightMachine = Machine({
      id: 'light',
      initial: 'green',
      states: {
        green: {
          on: {
            TIMER: 'yellow',
          }
        },
        yellow: {
          on: {
            TIMER: 'red',
          }
        },
        red: {
          on: {
            TIMER: 'green',
          }
        }
      }
    });

Code in https://github.com/bahmutov/tic-tac-toe by David K

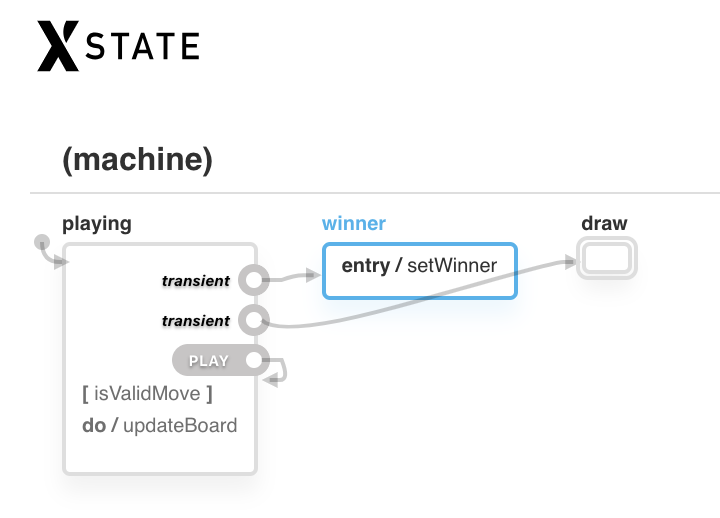

    const ticTacToeMachine = Machine(
      {
        initial: "playing",
        states: {
          playing: {
            on: {
              "": [
                { target: "winner", cond: "checkWin" },
                { target: "draw", cond: "checkDraw" }
              ],
              PLAY: [
                {
                  target: "playing",
                  cond: "isValidMove",
                  actions: "updateBoard"
                }
              ]
            }
          },
          winner: {
            onEntry: "setWinner"
          },
          draw: {
            type: "final"
          }
        }
      }
      ...
    )

    const {
      getShortestValuePaths
    } = require('xstate/lib/graph')
    const ticTacToeMachine = require('./machine')

    const shortestValuePaths = getShortestValuePaths(ticTacToeMachine)
    const winnerXPath = filterWinnerX(shortestValuePaths)
    const winnerOPath = filterWinnerY(shortestValuePaths)
    const draws = filterDraw(shortestValuePaths)

tests = h (state)

    it('player X wins', () => {
      play(winnerXPath)
      cy.contains('h2', 'Player X wins!')
    })

    it('player O wins', () => {
      play(winnerOPath)
      cy.contains('h2', 'Player O wins!')
    })

    draws.forEach((draw, k) => {
      it(`plays to a draw ${k}`, () => {
        const drawPath = shortestValuePaths[draw]
        play(drawPath)
        cy.contains('h2', 'Draw')
      })
    })

tests = h (state)

Cypress running autogenerated tests
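The idea of deriving tests from state can be sketched without xstate: walk a transition table breadth-first and record the shortest sequence of events that reaches each state. The table below mirrors the traffic light machine; `shortestPaths` is a hypothetical helper written for this sketch and is not the xstate graph API.

```javascript
// Plain transition table: state -> event -> next state
// (same shape as the traffic light machine, without xstate)
const lightTransitions = {
  green: { TIMER: 'yellow' },
  yellow: { TIMER: 'red' },
  red: { TIMER: 'green' }
}

// Breadth-first search: the first time a state is reached
// is also the shortest list of events leading to it.
function shortestPaths (transitions, initial) {
  const paths = { [initial]: [] }
  const queue = [initial]
  while (queue.length > 0) {
    const state = queue.shift()
    for (const [event, target] of Object.entries(transitions[state])) {
      if (!(target in paths)) {
        paths[target] = [...paths[state], event]
        queue.push(target)
      }
    }
  }
  return paths
}

const lightPaths = shortestPaths(lightTransitions, 'green')
console.log(lightPaths)
```

Each entry is a candidate test: replay the events through the UI, then assert that the app shows the target state.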

tests:

- code coverage

- data coverage

- element coverage

- state coverage

Well Tested Software

Rethink test coverage

CI configuration: orbs

If it hurts, do more of it

push ups, public speaking, code deploys, end-to-end tests

Well Tested Software