Attention & Transformers

Benjamin Akera

With applications in NLP

NLP

What is it?

Who has used it?

A few examples?

Why I find it exciting

What this talk is Not

A complete introduction to NLP

A survey of every model, aspect, paper, or architecture

A lecture: it should be interactive, so ask questions

What this talk is:

An overview of recent, simple architectural tricks from deep learning that have shaped NLP as we know it today

Interactive

Ask as many questions as you like

Let's Consider: You're at The Border

Erinya lyo ggw'ani

Bakuyita batya?

How???

PART 1

Attention

Attention: What is it?

Scenario: A Party

Relates elements in a source sentence to a target sentence

Attention

Source Sentence: Hello

Target Sentence: Gyebaleko

BERT

T5

ELMo

GPT-3

Neural MT

DALL·E

Sunbird translate

FB Translate

multimodal learning

Speech Recognition

Self Attention

Types of Attention Mechanisms

Multi-Head Attention

Self Attention

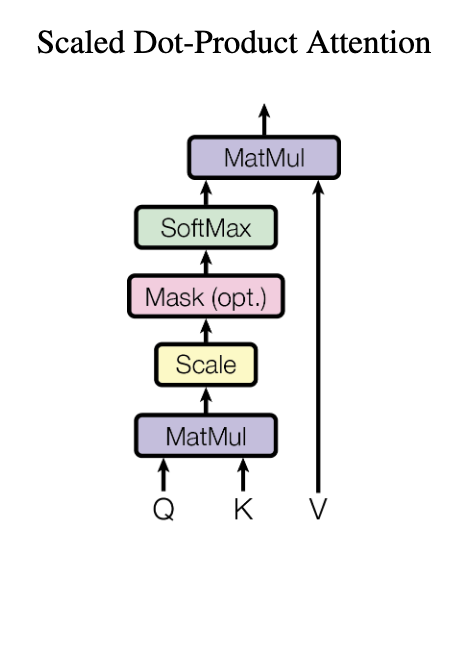

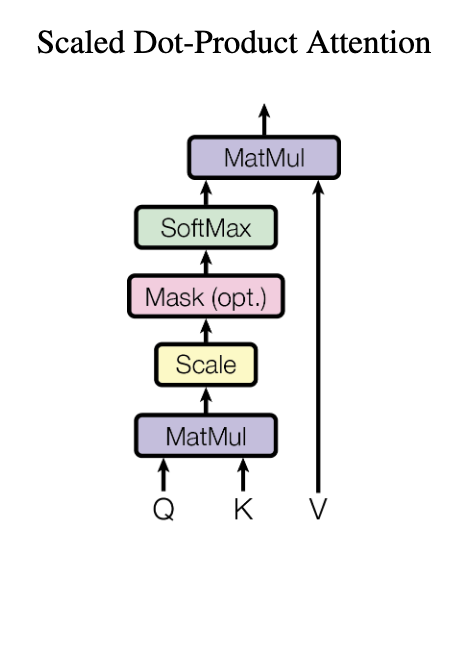

Vaswani et al. (2017) describe an attention function as "mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors."

\( \mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V \)
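A minimal sketch of scaled dot-product attention in plain Python, using lists of row vectors instead of a tensor library. The function names and the toy `Q`, `K`, `V` values are illustrative assumptions, not taken from the talk.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention.

    Q: n_q x d_k, K: n_k x d_k, V: n_k x d_v (lists of rows).
    Returns (output, weights).
    """
    d_k = len(K[0])
    # scores[i][j] = (q_i . k_j) / sqrt(d_k)
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k)
               for k_row in K] for q_row in Q]
    # Each row of weights is a probability distribution over the keys.
    weights = [softmax(row) for row in scores]
    d_v = len(V[0])
    # Each output row is a weighted average of the rows of V.
    output = [[sum(w * v_row[c] for w, v_row in zip(w_row, V)) for c in range(d_v)]
              for w_row in weights]
    return output, weights

# Toy example (assumed values): one query that matches the first key best.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
out, w = attention(Q, K, V)
```

Because the query is closer to the first key, the first value vector receives the larger weight in the output.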

Self Attention

Self-attention is when your source and target sentences are the same

Source Sentence: Gyebaleko nyabo

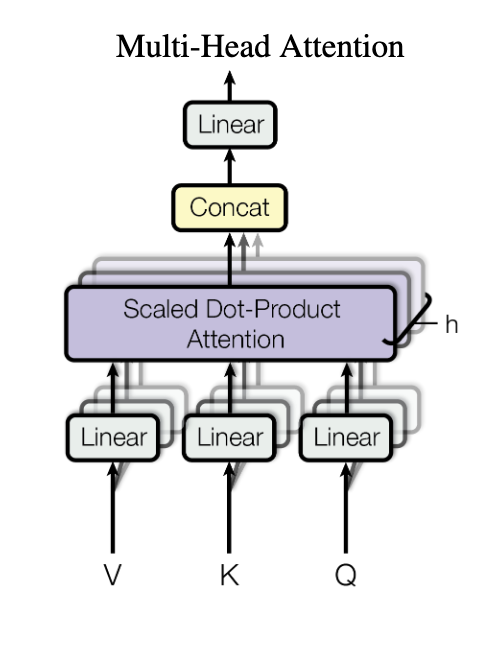
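A small sketch of self-attention as a special case of attention: queries, keys, and values all come from the same sequence. The 2-d embeddings are made-up values, and the learned projections used in a real Transformer are left out to keep the idea visible.

```python
import math

def attention(Q, K, V):
    """Scaled dot-product attention on lists of row vectors."""
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * v[c] for w, v in zip(weights, V))
                    for c in range(len(V[0]))])
    return out

def self_attention(X):
    """Self-attention: the sequence attends to itself (Q = K = V = X)."""
    return attention(X, X, X)

# Hypothetical embeddings for a two-token sentence such as "Gyebaleko nyabo".
X = [[1.0, 0.0], [0.0, 1.0]]
Y = self_attention(X)
```

Each output row mixes information from every token in the same sentence, with the largest share coming from the token itself in this toy case.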

Multi Head attention

In Vaswani et al. (2017), the authors first apply a linear transformation to the input matrices Q, K and V, and then perform attention, i.e. they compute:

\( \mathrm{Attention}(QW^Q, KW^K, VW^V) \)

where \( W^Q \), \( W^K \) and \( W^V \) are learnt parameters.

In multi-head attention, say with 4 heads, the authors apply a separate linear transformation for each head and perform attention 4 times.
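A rough sketch of multi-head attention with 4 heads in plain Python. The random weights stand in for learnt parameters, the sizes are arbitrary, and the output projection `W_O` applied to the concatenated heads follows Vaswani et al. (2017) rather than anything shown on the slide.

```python
import math
import random

def matmul(A, B):
    """Matrix product of two lists-of-rows matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def attention(Q, K, V):
    """Scaled dot-product attention on lists of row vectors."""
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        out.append([sum((e / total) * v[c] for e, v in zip(exps, V))
                    for c in range(len(V[0]))])
    return out

def random_matrix(rows, cols, rng):
    """Stand-in for a learnt weight matrix (assumption: random values)."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(Q, K, V, heads, W_O):
    """heads: list of (W_Q, W_K, W_V) triples, one per head."""
    head_outputs = [attention(matmul(Q, W_Q), matmul(K, W_K), matmul(V, W_V))
                    for W_Q, W_K, W_V in heads]
    # Concatenate the per-token outputs of all heads along the feature axis.
    concat = [sum((h[i] for h in head_outputs), []) for i in range(len(Q))]
    # Mix the heads back into d_model features.
    return matmul(concat, W_O)

rng = random.Random(0)
d_model, n_heads = 8, 4
d_head = d_model // n_heads
heads = [tuple(random_matrix(d_model, d_head, rng) for _ in range(3))
         for _ in range(n_heads)]
W_O = random_matrix(n_heads * d_head, d_model, rng)
X = random_matrix(3, d_model, rng)  # three tokens, used as Q, K and V
Y = multi_head_attention(X, X, X, heads, W_O)
```

Each head has its own projections, so each can learn to track a different relation between tokens.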

Multi headed Attention

Compute several attention heads in parallel

Allows more than one relation

Attention Head 1

Attention Head 2

Attention Head 3

Attention Head 4

1. Oli otya? 2. Erinya lyo lyanni? 3. Ova wa? 4. Bakuyita batya?

1. How are you? 2. What is your name? 3. Where do you come from? 4. What is your name?

Attention Head

PART 2

Transformers

Transformers

What are they?

Transformers?

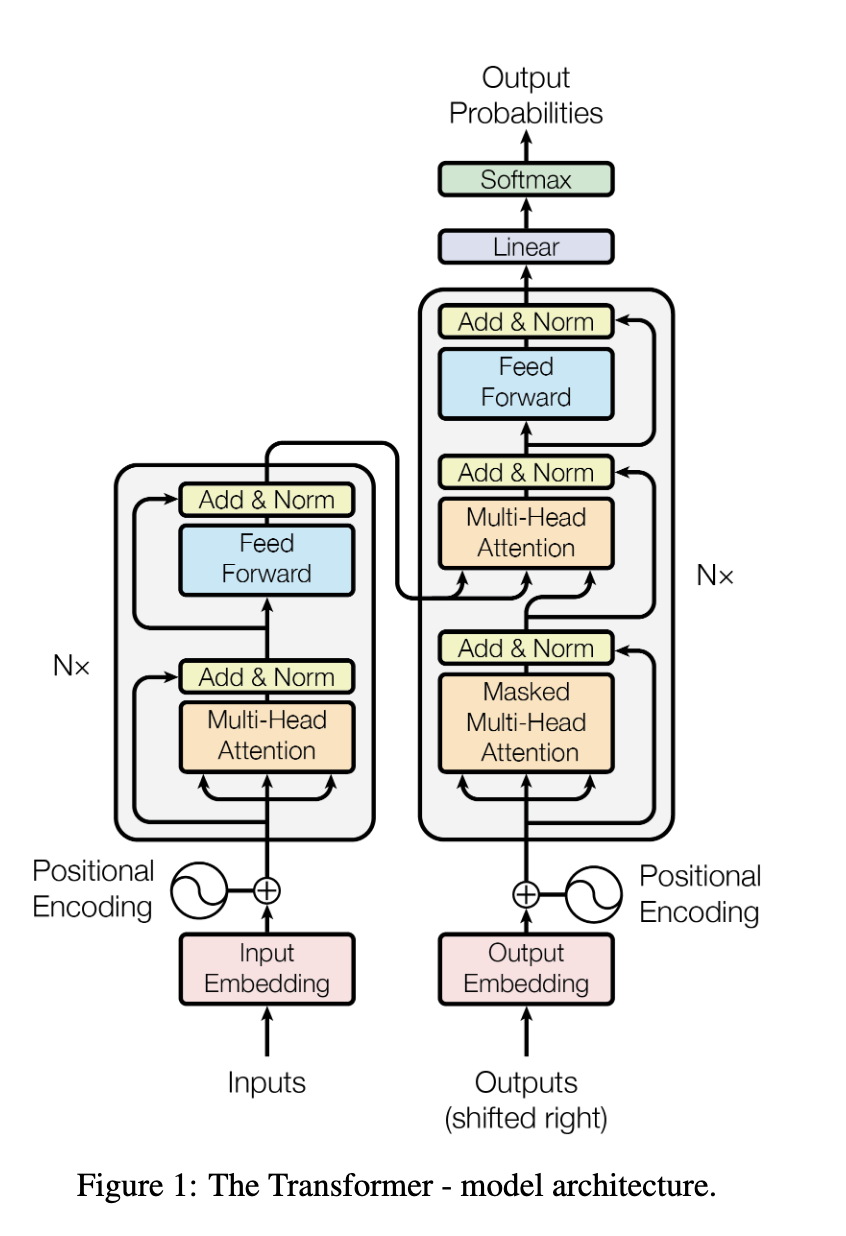

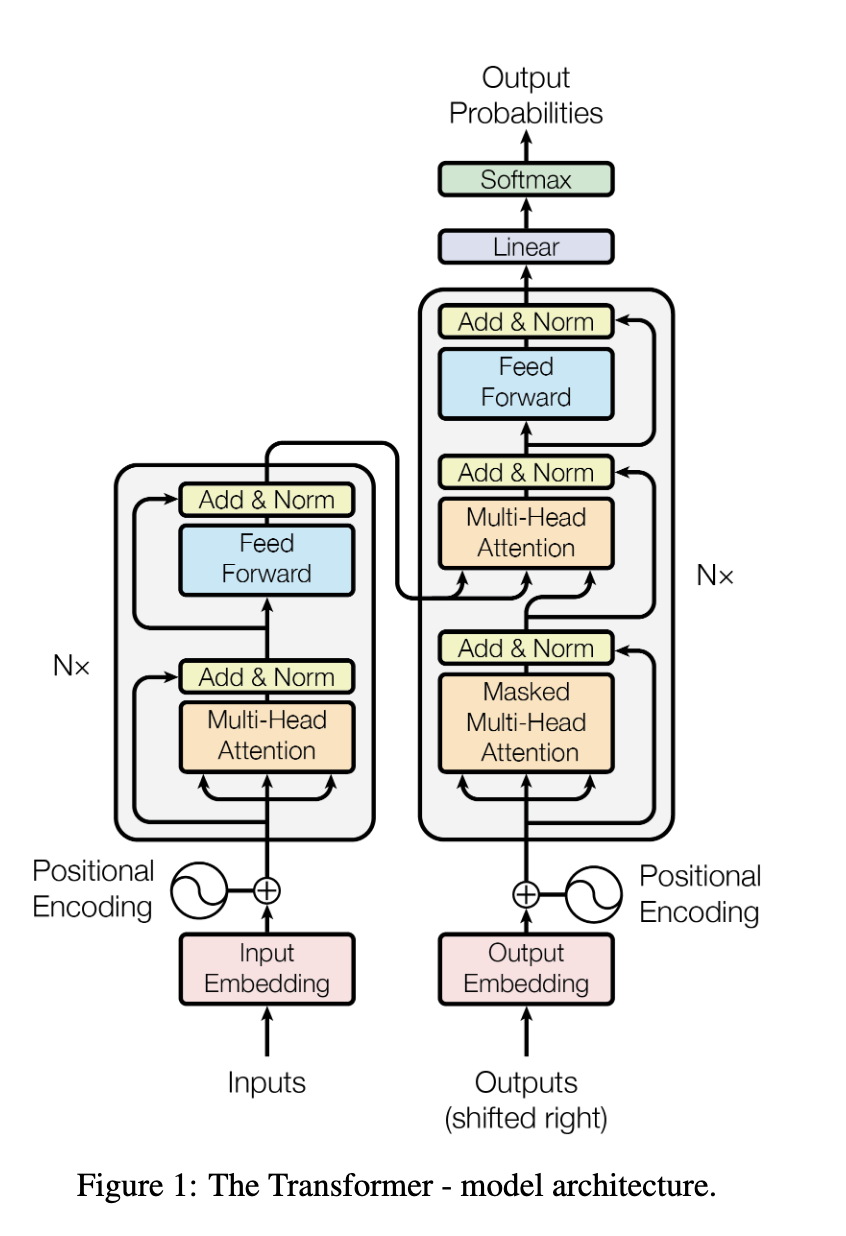

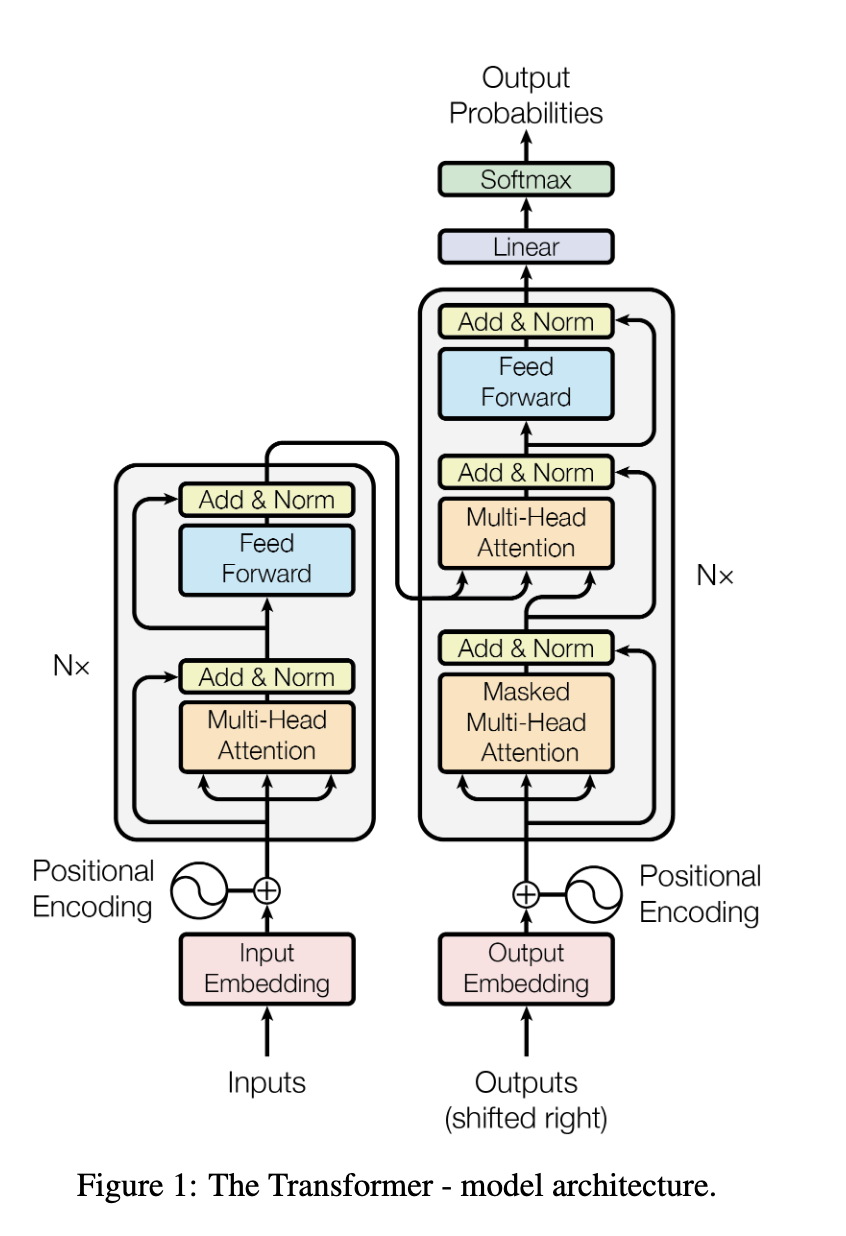

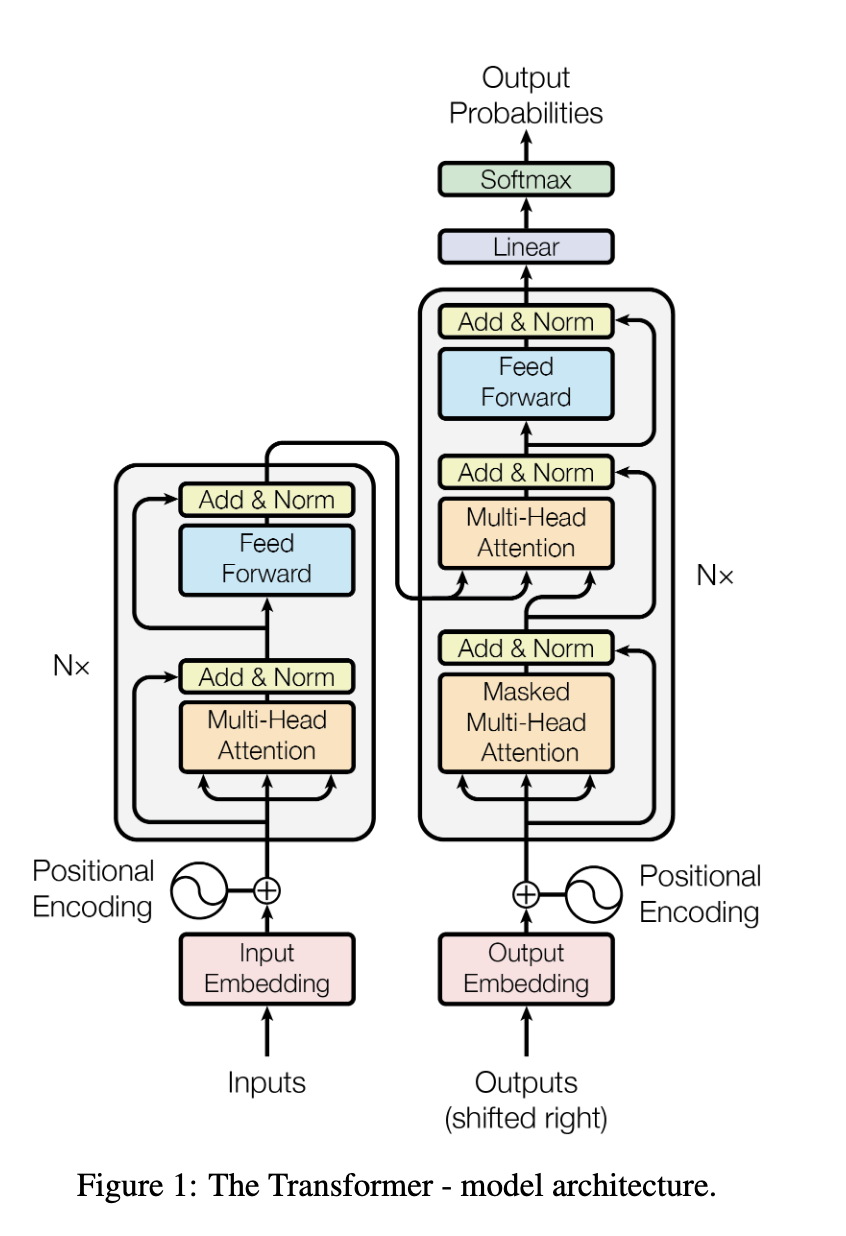

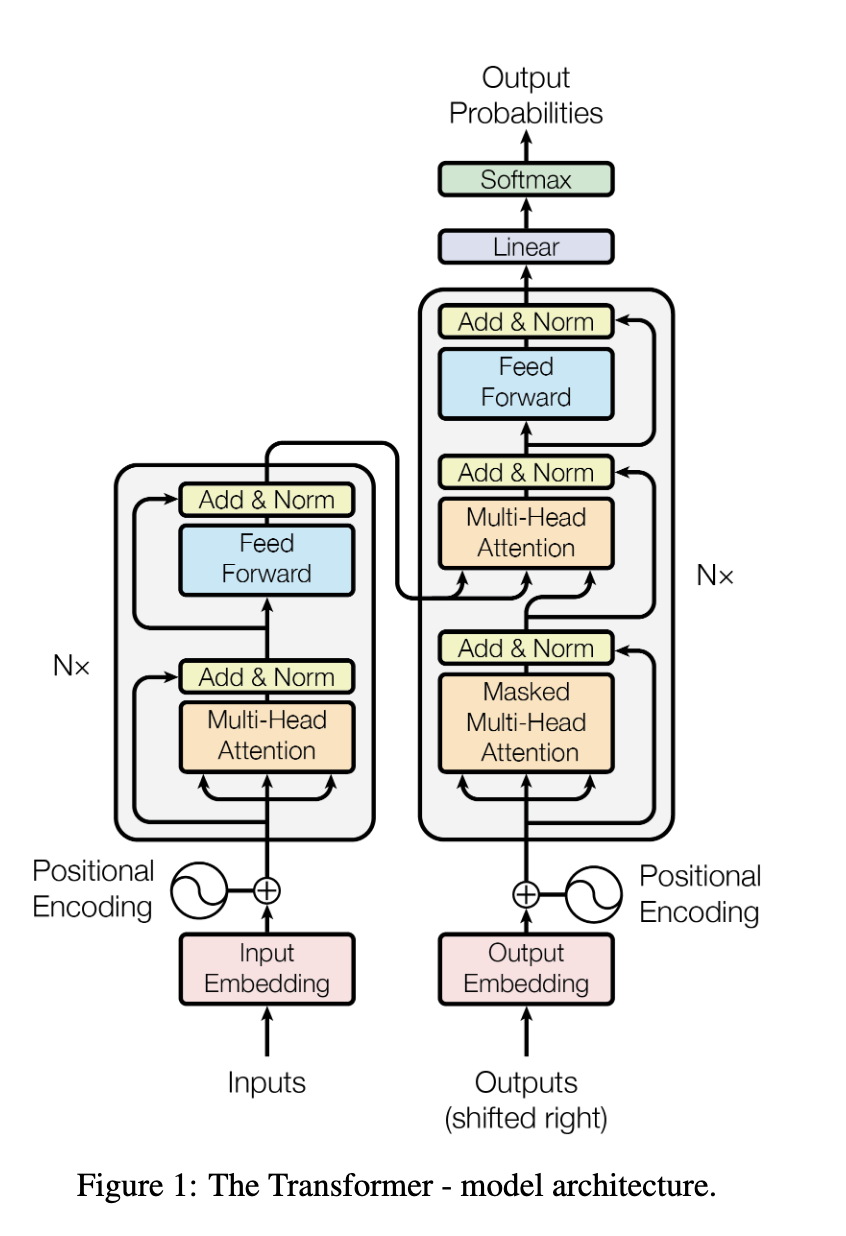

Transformers

- A popular architecture in NLP

- Uses self-attention and multi-head attention

- Combines attention with fast autoregressive decoding

Transformers

Sequence

Attention

Positional Encoding

Decoders

1. Self Attention + Multi-Head attention

Multi-head attention

Self Attention

2. Encoder

Positional Encoding

Positional Encoding

When we compare two elements of a sequence (as in attention), we have no notion of how far apart they are or where one sits relative to the other. Positional encodings add that information:

\( PE_{(pos, 2i)} = \sin(pos / 10000^{2i/d_{model}}) \)

\( PE_{(pos, 2i+1)} = \cos(pos / 10000^{2i/d_{model}}) \)

Using a linear combination of these signals we can “pan” forwards or backwards in the sequence.
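A short sketch of the sinusoidal positional encoding from Vaswani et al. (2017), with sine on even dimensions and cosine on odd ones, plus a check of the "panning" property. The helper names are illustrative and `d_model` is assumed to be even.

```python
import math

def positional_encoding(pos, d_model):
    """Sinusoidal encoding of one position: [sin, cos, sin, cos, ...]."""
    pe = []
    for i in range(d_model // 2):
        angle = pos / (10000 ** (2 * i / d_model))
        pe.extend([math.sin(angle), math.cos(angle)])
    return pe

def shift(pe, k, d_model):
    """Compute PE(pos + k) from PE(pos) alone.

    Each (sin, cos) pair is rotated by an angle that depends only on the
    offset k, so the shift is a linear combination of the existing signals.
    """
    shifted = []
    for i in range(d_model // 2):
        w = 1 / (10000 ** (2 * i / d_model))
        s, c = pe[2 * i], pe[2 * i + 1]
        shifted.append(s * math.cos(w * k) + c * math.sin(w * k))  # sin(w * (pos + k))
        shifted.append(c * math.cos(w * k) - s * math.sin(w * k))  # cos(w * (pos + k))
    return shifted

pe_5 = positional_encoding(5, 8)
pe_8 = positional_encoding(8, 8)
pe_5_shifted = shift(pe_5, 3, 8)  # should match pe_8 up to rounding
```

The rotation used in `shift` does not depend on `pos`, which is why a model can learn to attend by relative offset.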

Decoder

Fast Auto-regressive Decoding

Sequence generation

Learn a map from an input sequence to an output sequence:

\( y_0, \ldots, y_T = f(x_0, \ldots, x_N) \)

In practice, these models tend to look like this:

\( \hat y_0, \ldots, \hat y_T = \mathrm{decoder}(\mathrm{encoder}(x_0, \ldots, x_N)) \)

Fast Auto-regressive Decoding

Autoregressive Decoding

Condition each output on all previously generated outputs

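\( \hat y_0 = \mathrm{decoder}(\mathrm{encoder}(x_0, \ldots, x_N)) \)

\( \hat y_1 = \mathrm{decoder}(\hat y_0, \mathrm{encoder}(x_0, \ldots, x_N)) \)

\( \hat y_2 = \mathrm{decoder}(\hat y_0, \hat y_1, \mathrm{encoder}(x_0, \ldots, x_N)) \)

\( \vdots \)

\( \hat y_{t+1} = \mathrm{decoder}(\hat y_0, \hat y_1, \ldots, \hat y_t, \mathrm{encoder}(x_0, \ldots, x_N)) \)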
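A toy sketch of the autoregressive loop: the input is encoded once, then the decoder is called repeatedly on everything generated so far. The `encoder` and `decoder` here are hypothetical stand-ins (the decoder just emits the source in reverse), not a trained model.

```python
def encoder(source_tokens):
    """Hypothetical stand-in encoder: returns the tokens as the 'memory'."""
    return list(source_tokens)

def decoder(generated, memory):
    """Hypothetical stand-in decoder: emits the source reversed, then <eos>."""
    t = len(generated)
    return memory[-1 - t] if t < len(memory) else "<eos>"

def autoregressive_decode(source_tokens, max_len=20):
    memory = encoder(source_tokens)  # encoded once, reused at every step
    generated = []
    for _ in range(max_len):
        # The next output is conditioned on all previously generated outputs.
        next_token = decoder(generated, memory)
        if next_token == "<eos>":
            break
        generated.append(next_token)
    return generated

result = autoregressive_decode(["what", "is", "your", "name"])
```

Note that the loop needs one decoder call per output token, which is what makes naive autoregressive generation slow.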

Fast Auto-regressive Decoding

Autoregressive Decoding

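At training time, we have access to all the true target outputs, so each step can condition on the true previous targets (teacher forcing) instead of the model's own predictions:

\( \hat y_0 = \mathrm{decoder}(\mathrm{encoder}(x_0, \ldots, x_N)) \)

\( \hat y_1 = \mathrm{decoder}(y_0, \mathrm{encoder}(x_0, \ldots, x_N)) \)

\( \vdots \)

\( \hat y_{t+1} = \mathrm{decoder}(y_0, y_1, \ldots, y_t, \mathrm{encoder}(x_0, \ldots, x_N)) \)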

Question: How do we squeeze all of these into one call of our decoder?
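One ingredient of the answer, sketched under the common "shift right" convention: the decoder input is the true target sequence prefixed with a padding token, so position t sees the targets before t and is trained to predict target t. The function name and the choice of `<pad>` as the start symbol are assumptions for illustration.

```python
def teacher_forcing_pairs(target_tokens, pad="<pad>"):
    """Build (decoder_inputs, labels) for a single decoder call.

    decoder_inputs[t] is the true target at step t - 1 (or pad at t = 0),
    labels[t] is the true target at step t.
    """
    decoder_inputs = [pad] + target_tokens[:-1]
    labels = list(target_tokens)
    return decoder_inputs, labels

target = ["Erinya", "lyo", "ggwe", "ani", "?"]
inputs, labels = teacher_forcing_pairs(target)
# inputs → ["<pad>", "Erinya", "lyo", "ggwe", "ani"]
```

With the inputs laid out this way, every prediction can be computed in one pass, provided attention is prevented from looking at later positions.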

Attention

If we feed all our inputs and targets, it is easy to cheat using Attention

<pad> What is your name?

<pad> Erinya lyo ggwe ani?

[Figure: attention grid over the tokens <PAD>, what, is, your, name, ? and Erinya, lyo, ggwe, ani, ?]

When we apply this triangular mask, the attention can only look into the past instead of being able to cheat and look into the future.
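A minimal sketch of a triangular (causal) mask applied to attention scores before the softmax. The uniform scores are dummy values, and the helper names are illustrative.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, mask):
    """Row-wise softmax where disallowed positions get zero weight."""
    weights = []
    for row, allowed in zip(scores, mask):
        # Future positions are set to -inf so that exp(...) becomes 0.
        masked = [s if ok else -math.inf for s, ok in zip(row, allowed)]
        m = max(masked)  # finite, because the diagonal is always allowed
        exps = [math.exp(s - m) for s in masked]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

# Dummy scores: every pair of positions is equally compatible.
scores = [[0.0] * 4 for _ in range(4)]
weights = masked_softmax(scores, causal_mask(4))
# weights[0] → [1.0, 0.0, 0.0, 0.0]
# weights[3] → [0.25, 0.25, 0.25, 0.25]
```

The first position can only attend to itself, while the last position spreads its attention over the whole past.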

Erinya lyo ggwe [MASK]?

[MASK] is your name?

We call models trained to fill in such masked tokens Masked Language Models
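A tiny sketch of how one masked training example could be built: a token is replaced with `[MASK]` and kept as the label to predict. Real masked language model training usually masks a random subset of tokens; masking one chosen index, and the function name, are simplifications assumed here.

```python
def mask_token(tokens, index, mask="[MASK]"):
    """Return (masked_tokens, label) for one masked position."""
    masked = list(tokens)   # copy so the original sentence is untouched
    label = masked[index]   # the token the model should recover
    masked[index] = mask
    return masked, label

sentence = ["Erinya", "lyo", "ggwe", "ani", "?"]
masked, label = mask_token(sentence, 3)
# masked → ["Erinya", "lyo", "ggwe", "[MASK]", "?"]
# label  → "ani"
```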

PART 3

Applications, Labs, Questions

Neural Machine Translation

Text Generation

Image Classification

Multi-modal learning

....

Applications

Attention

Transformers

Encoder Block

Decoder Block

Positional Encoding

Summary

End to End Neural Machine Translation with Attention

Take Home: Reproduce a model using the SALT dataset

LAB

https://t.ly/O43KV