06 - Open Problems & Wrapping Up, Recap, Career Guidance, etc.

3rd April 2024

Recap

Do our models understand our tasks?

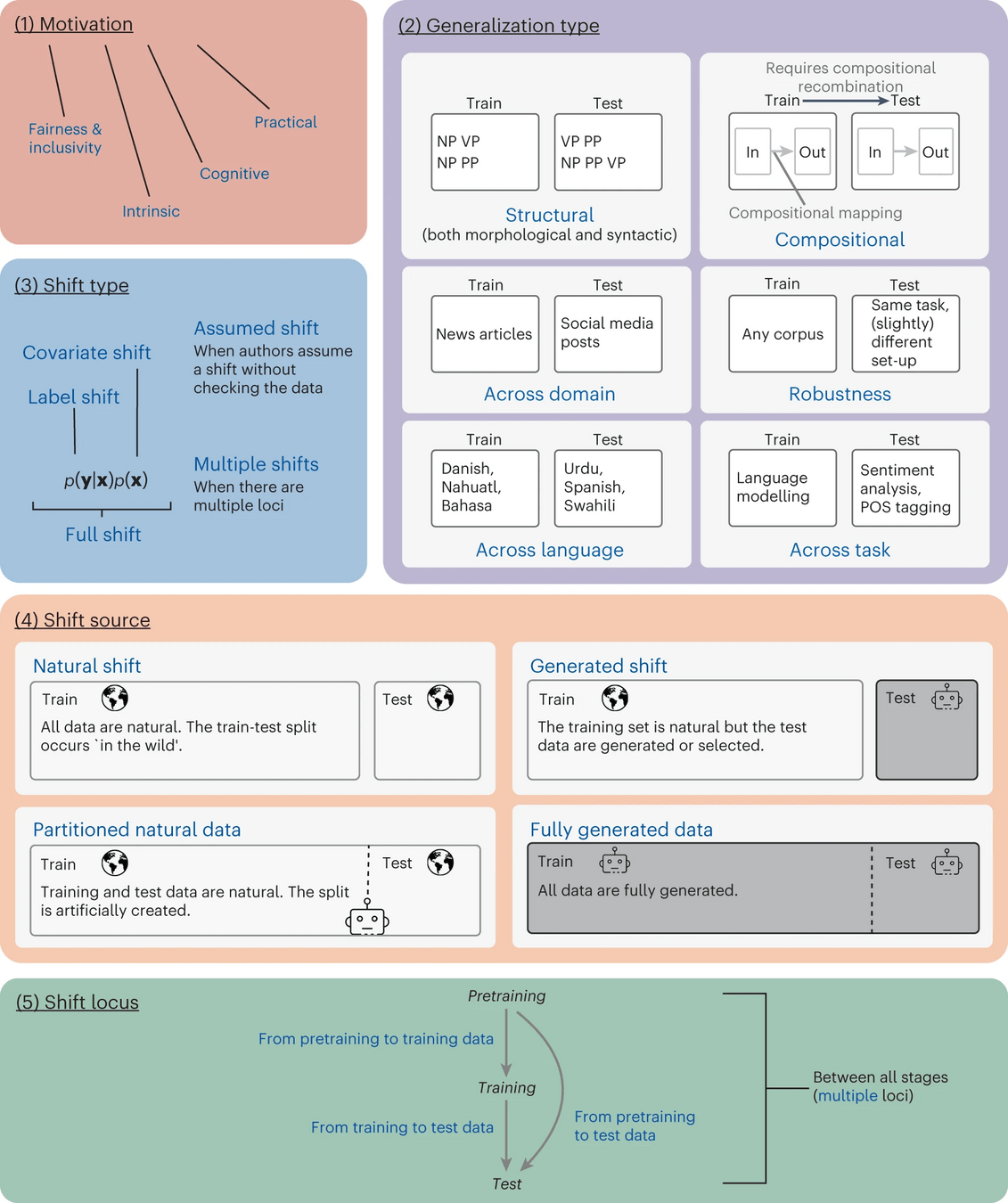

Hupkes, Dieuwke, et al. "A taxonomy and review of generalization research in NLP." Nature Machine Intelligence 5.10 (2023): 1161-1174.

01 - Generalization

How much do models really generalize?

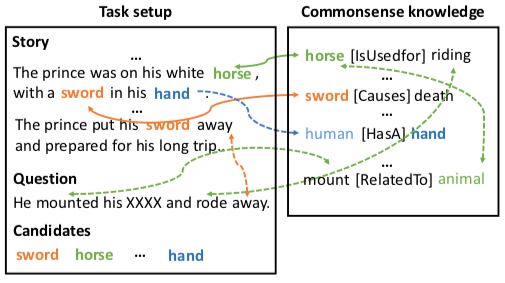

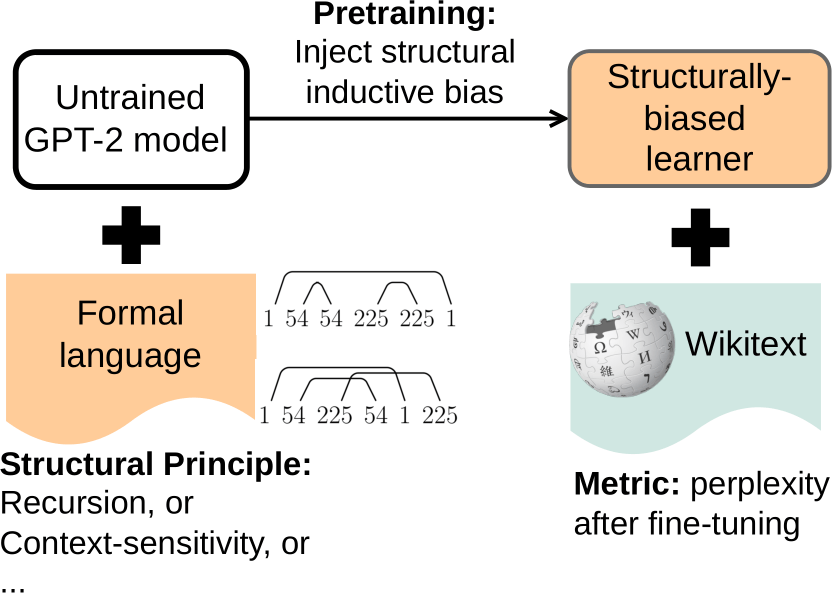

- Clever Inductive Biases

- Common sense reasoning

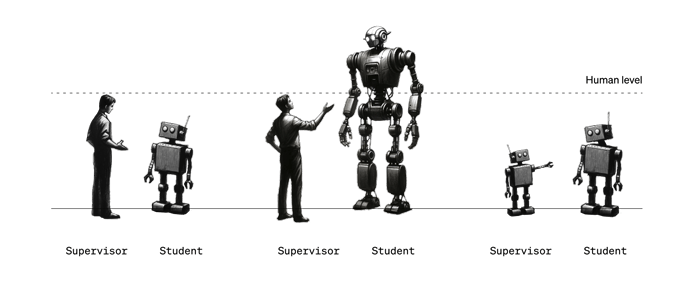

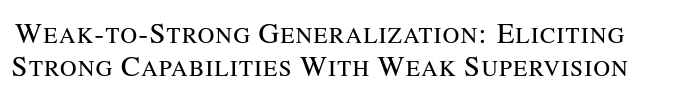

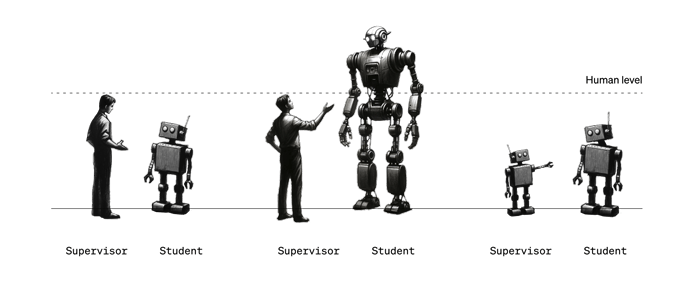

- Supervision + Alignment, using small models to supervise larger models

What makes NLP systems work?

02 - Analysis & Interpretation

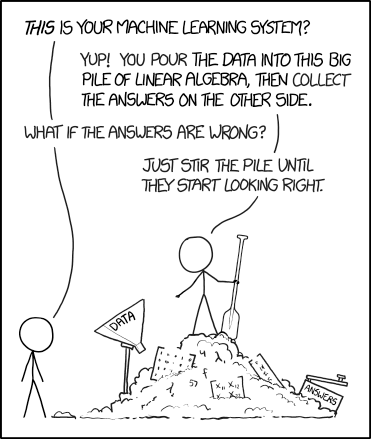

What's going on inside NNs?

input sentence

Output

Black Box Model

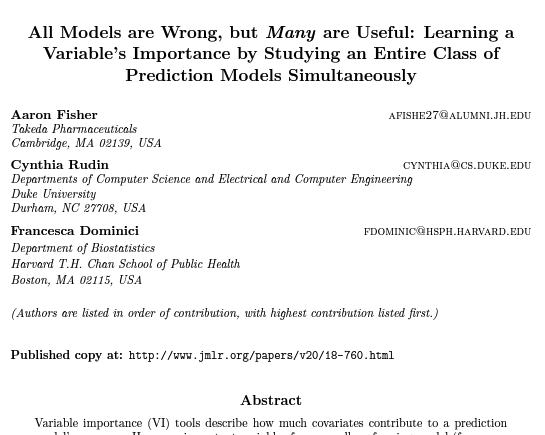

Can we build interpretable, but performant models?

e.g., establishing bounds with Model Class Reliance (MCR):

This article proposes MCR as the upper and lower bounds on how important a set of variables can be to any well-performing model in a class.

Because the bounds hold across the whole model class, MCR gives a more comprehensive and robust measure of importance than traditional importance measures computed for a single model.

More reads: https://rssdss.design.blog/2020/03/31/all-models-are-wrong-but-some-are-completely-wrong/
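The MCR idea above can be made concrete with a small sketch. Everything in it is an illustrative assumption rather than the article's own code: the toy data, the grid of linear models standing in for a "model class", the tolerance `eps`, the use of the best model on the grid as the reference model, and the helper names (`mse`, `switched_mse`, `model_reliance`, `model_class_reliance`). Reliance on a feature is estimated here as the ratio of the loss after swapping that feature's values between rows to the original loss, and MCR is taken as the minimum and maximum of that ratio over all models whose loss is within `eps` of the best one.

```python
import random


def mse(w, X, y):
    """Mean squared error of the linear model y ~ w[0]*x1 + w[1]*x2."""
    return sum((yi - (w[0] * x1 + w[1] * x2)) ** 2
               for (x1, x2), yi in zip(X, y)) / len(y)


def switched_mse(w, X, y):
    """Loss when feature 0 of each row is replaced by feature 0 of every other row."""
    n = len(y)
    total = 0.0
    for i in range(n):
        for j in range(n):
            if i != j:
                pred = w[0] * X[j][0] + w[1] * X[i][1]
                total += (y[i] - pred) ** 2
    return total / (n * (n - 1))


def model_reliance(w, X, y):
    """Ratio of switched loss to original loss; 1.0 means no reliance on feature 0."""
    return switched_mse(w, X, y) / mse(w, X, y)


def model_class_reliance(models, X, y, eps):
    """Min and max reliance over models whose loss is within eps of the best one."""
    losses = [mse(w, X, y) for w in models]
    best = min(losses)  # assumption: best model on the grid is the reference
    good_models = [w for w, loss in zip(models, losses) if loss <= best + eps]
    reliances = [model_reliance(w, X, y) for w in good_models]
    return min(reliances), max(reliances), good_models


# Toy data (assumed): two strongly correlated features, so many different
# weightings of x1 and x2 fit almost equally well.
rng = random.Random(0)
X, y = [], []
for _ in range(40):
    x1 = rng.gauss(0, 1)
    x2 = x1 + rng.gauss(0, 0.1)
    X.append((x1, x2))
    y.append(x1 + x2 + rng.gauss(0, 0.1))

# Hypothetical "model class": linear models with weights on a coarse grid.
grid = [i * 0.25 for i in range(-4, 13)]  # -1.0 to 3.0
models = [(a, b) for a in grid for b in grid]

mcr_minus, mcr_plus, good_models = model_class_reliance(models, X, y, eps=0.02)
print(f"{len(good_models)} well-performing models")
print(f"reliance on x1 ranges from {mcr_minus:.2f} to {mcr_plus:.2f}")
```

Because the two features are nearly interchangeable, the well-performing models disagree on how much they use `x1`, so the interval is wide. A single-model importance score would report only one point inside that interval.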

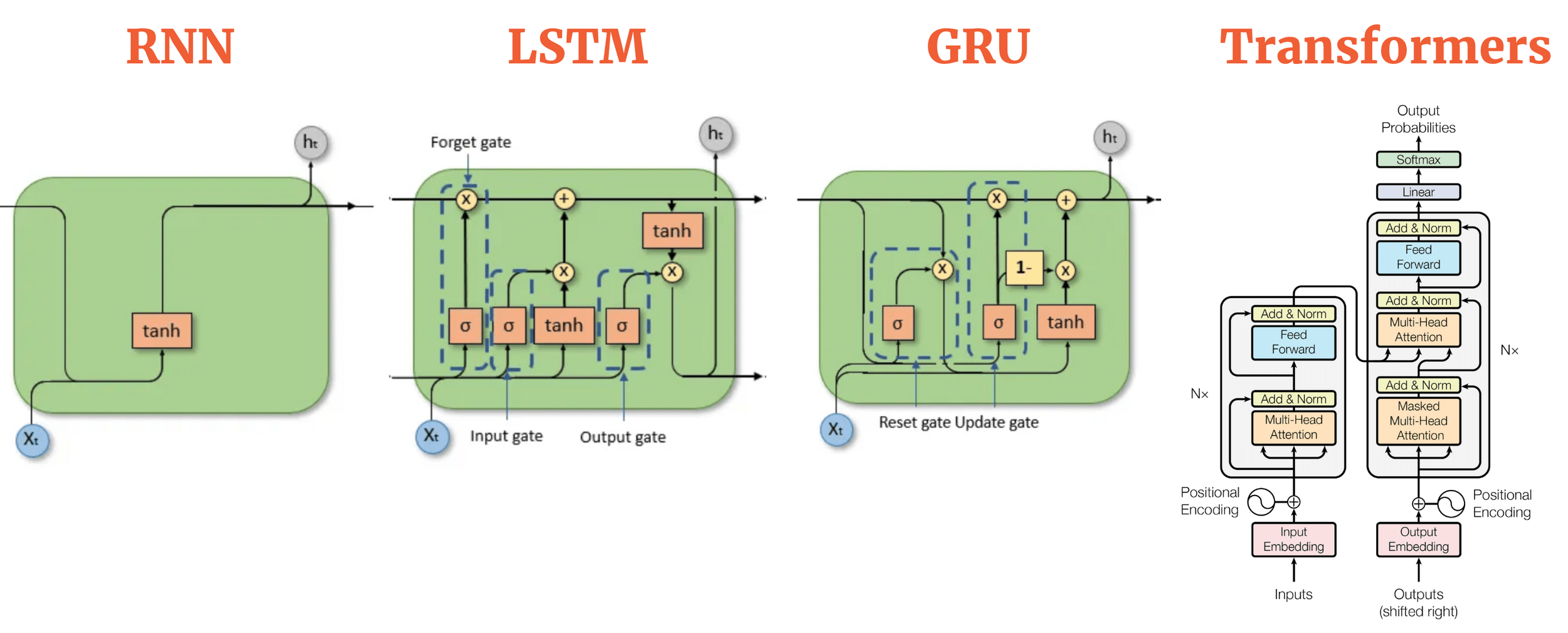

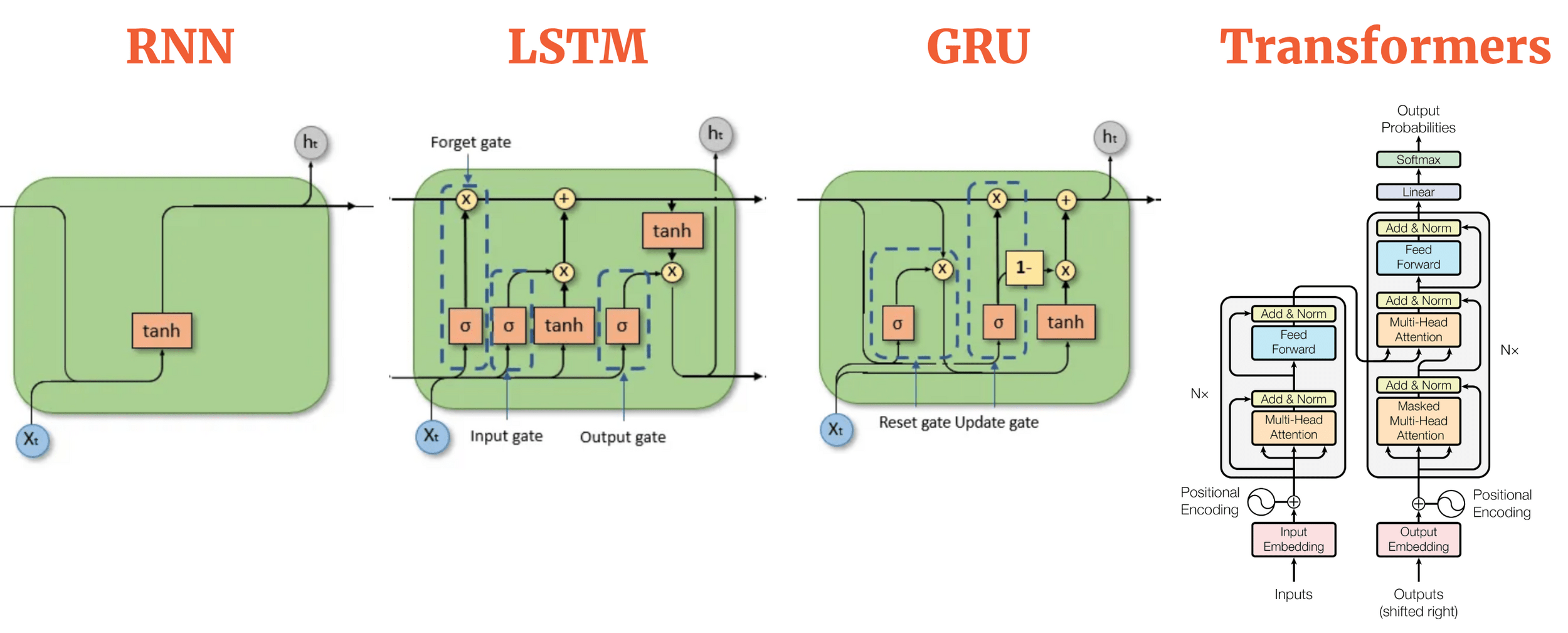

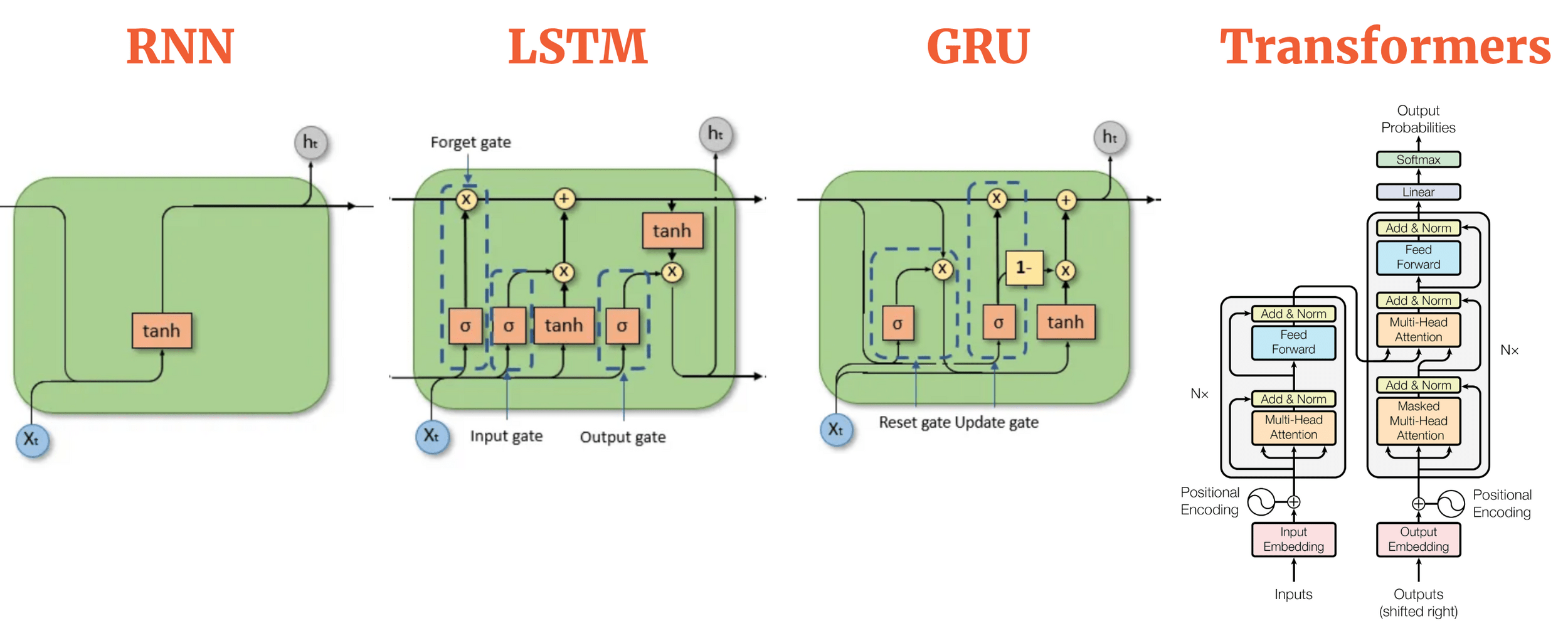

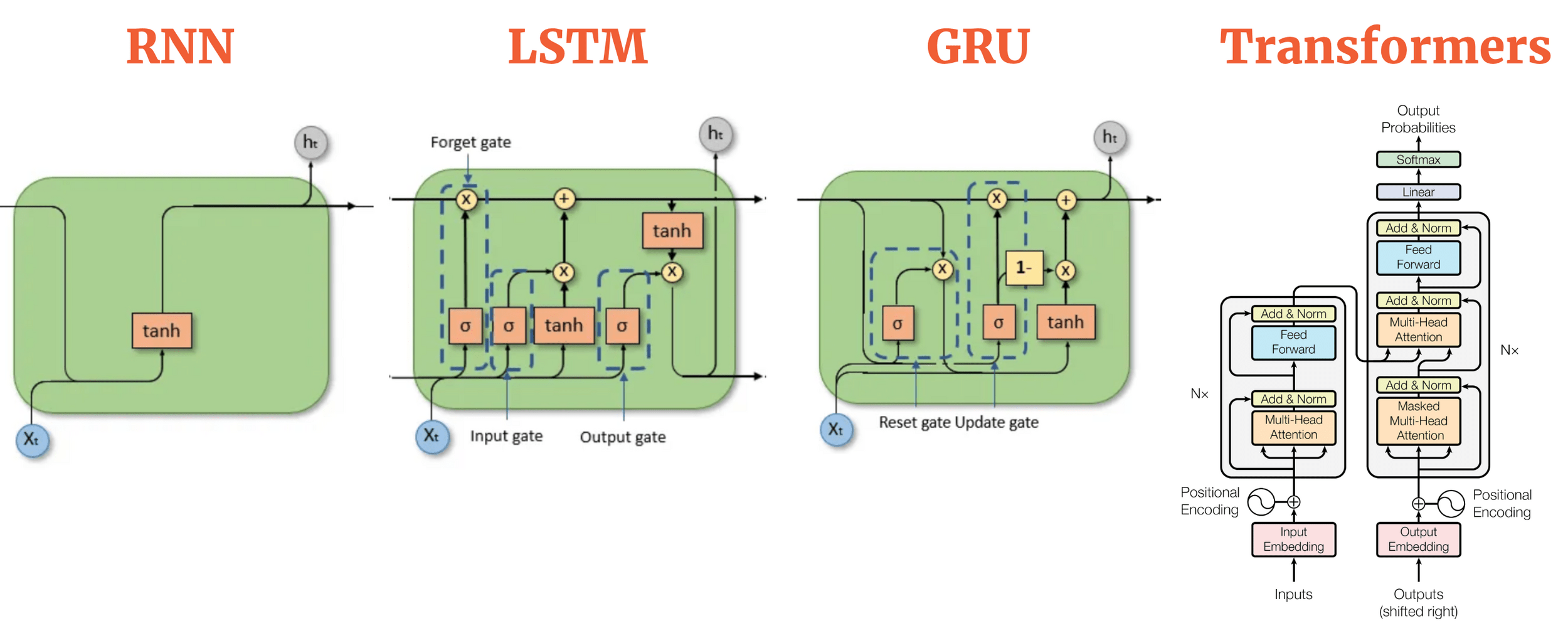

Can understanding help find the next transformer?

- What can't be learned via language model pretraining?

- How are our models affecting people and transferring power?

- What does deep learning struggle to do?

- What do Neural Nets tell us about language?

- What will replace the transformer?

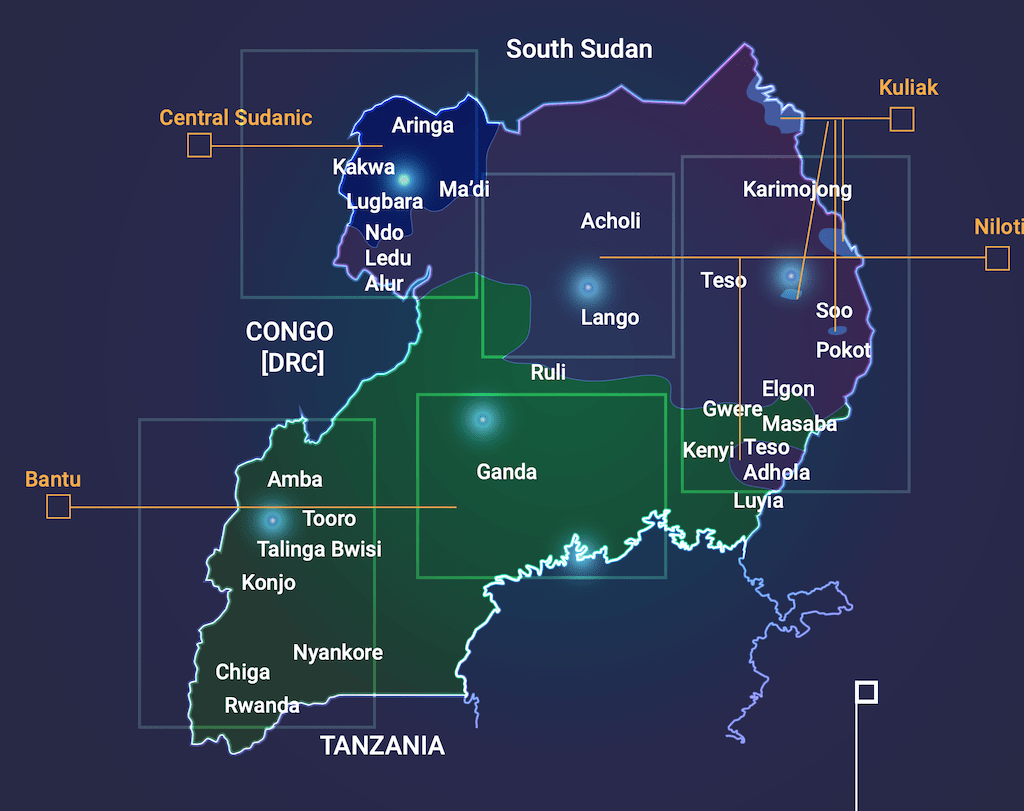

03 - Multilinguality

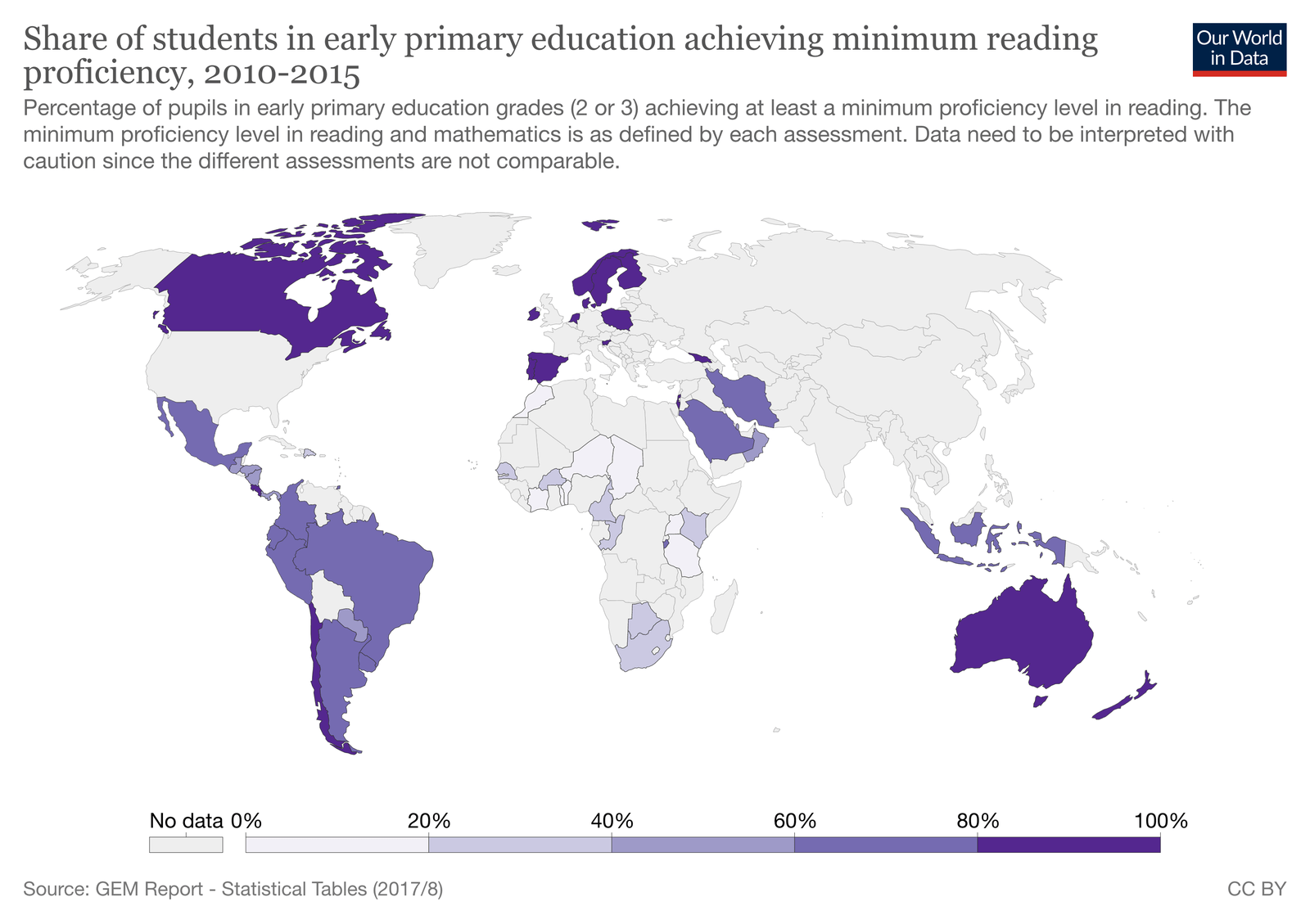

There are significant gaps between high- and low-resource languages.

Can we remove language resource gaps?

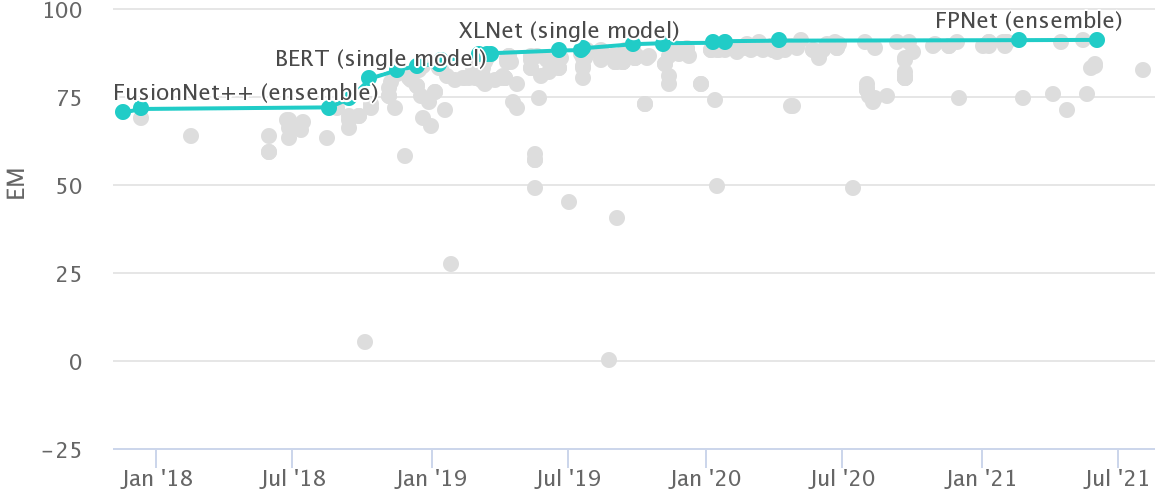

04 - Evaluation and Comparison

Benchmarks, and the way we evaluate, drive the progress of the field.

How do we evaluate things like interpretability?

05 - What is the next transformer?

?

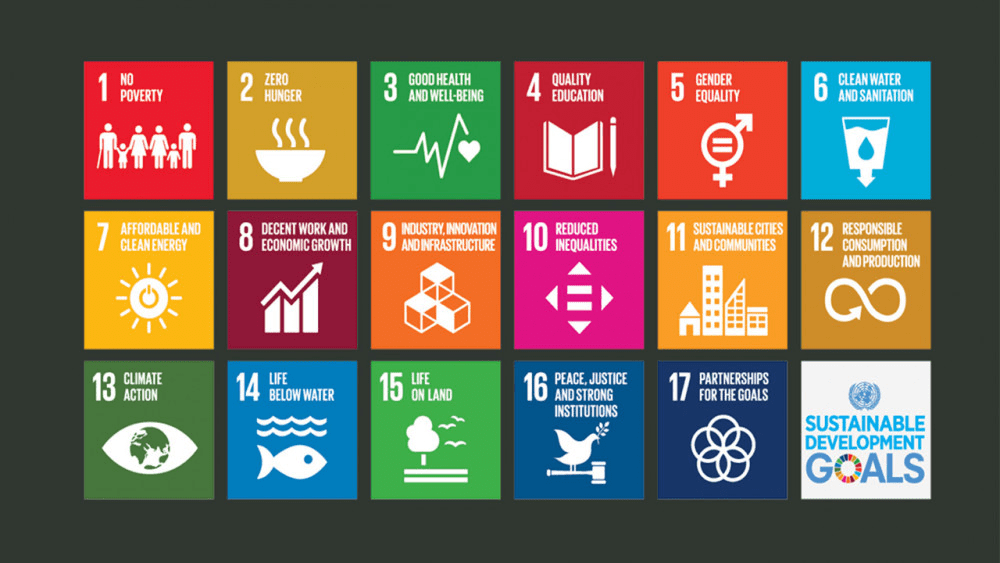

06 - Working in the real world

How do we make NLP systems work in the real world, on real problems?

1. Bio/Clinical NLP

2. Legal Domain

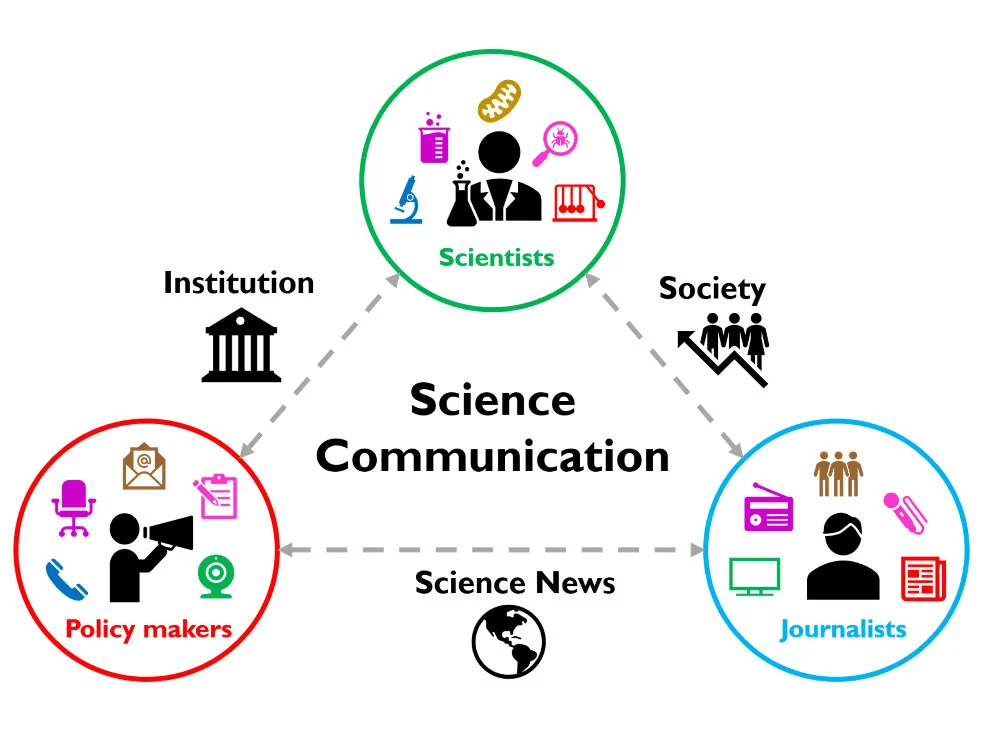

3. Scientific Communication

4. Education

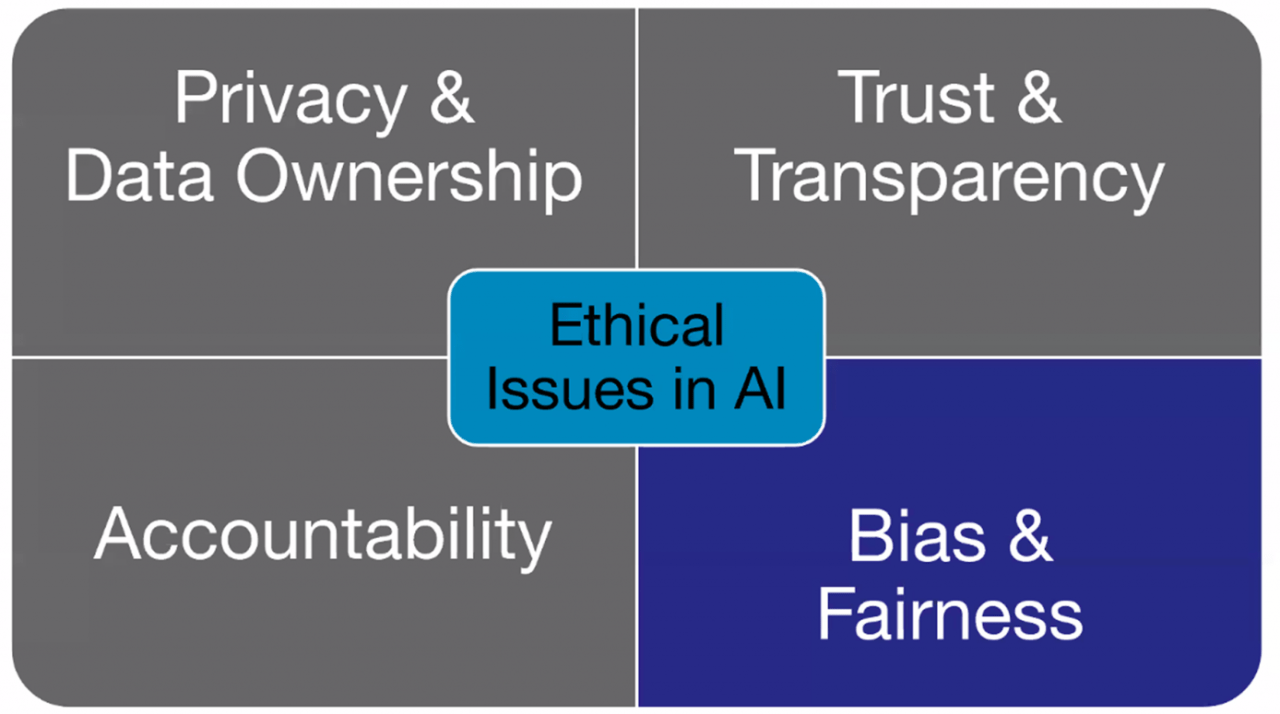

07 - Fairness, Bias, Responsible NLP

... and many others

Wrapping Up

- Key ideas - Distributed representations

- Major opportunities - NLP systems work in ways that support real-world applications

- Many open questions