Machine Learning for Chest X-ray Image Analysis

Benjamin Akera

Sunbird AI

Mila - Quebec AI Inst.

McGill University

27-03-2024

What we shall cover

- Motivation for automating X-ray image processing

- Datasets and methods

- Shortcomings, caveats and considerations

- Code walkthrough

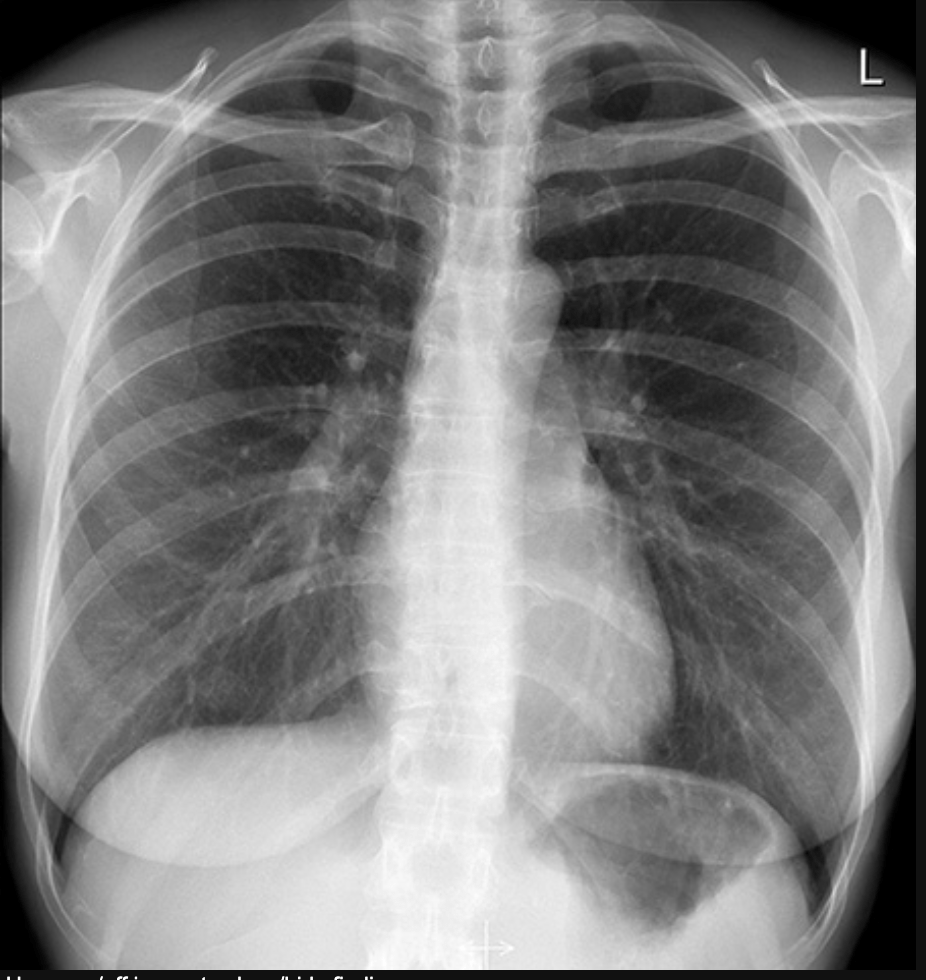

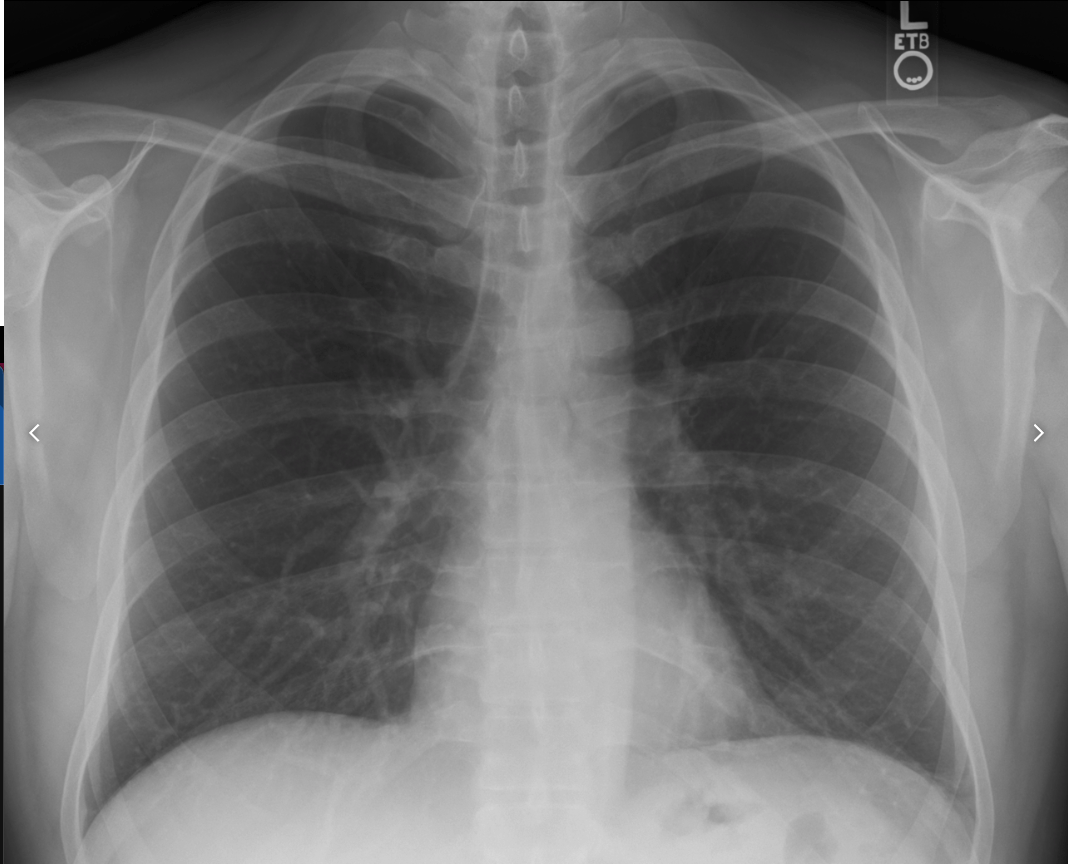

Chest Radiographs

- Commonly called X-Rays

- ("X" stands for "I have no idea what this thing is, but look, I can see through people's skin")

- Visualizes the lungs

- Visualizes the heart

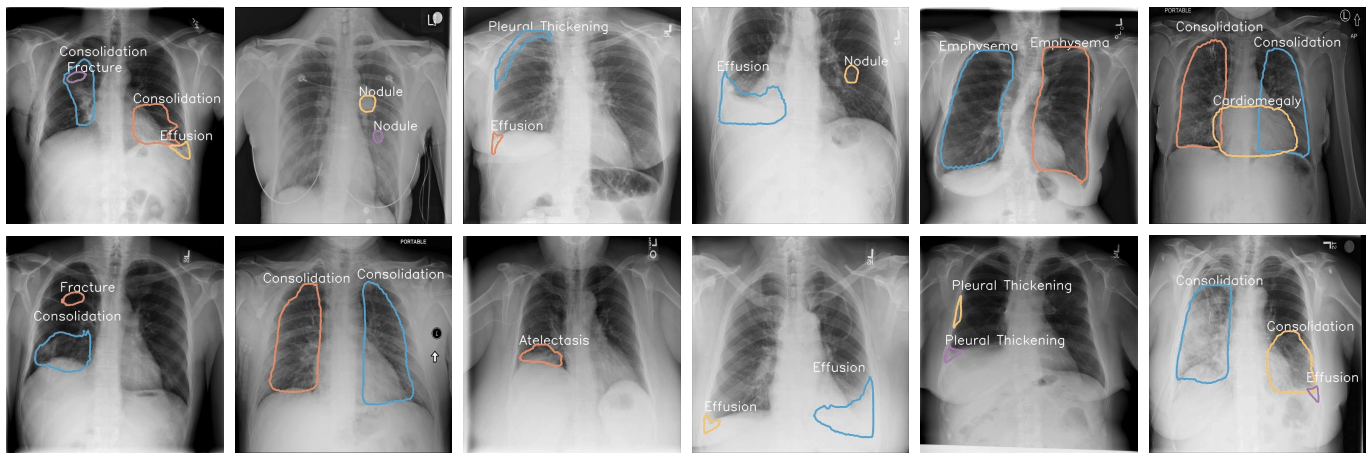

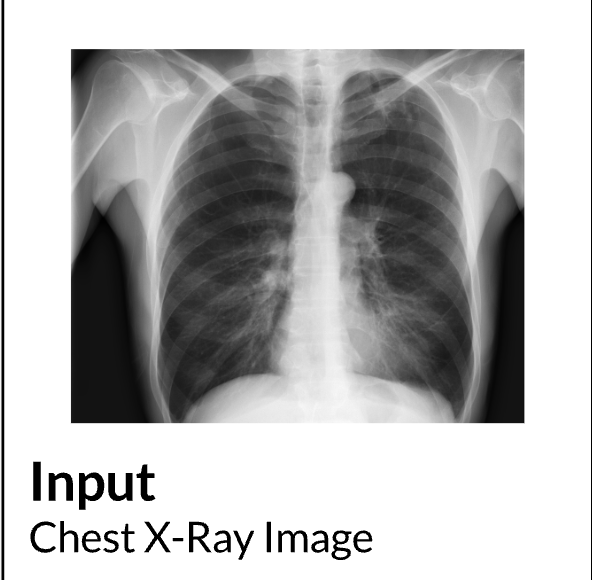

What do we look for in a CXR?

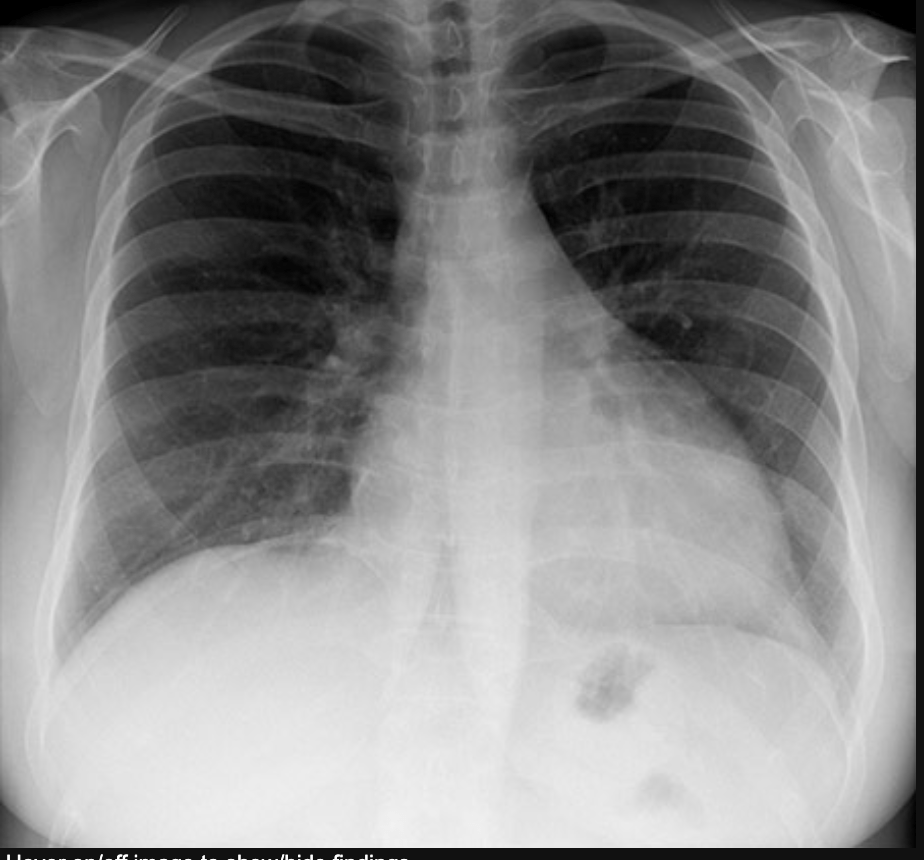

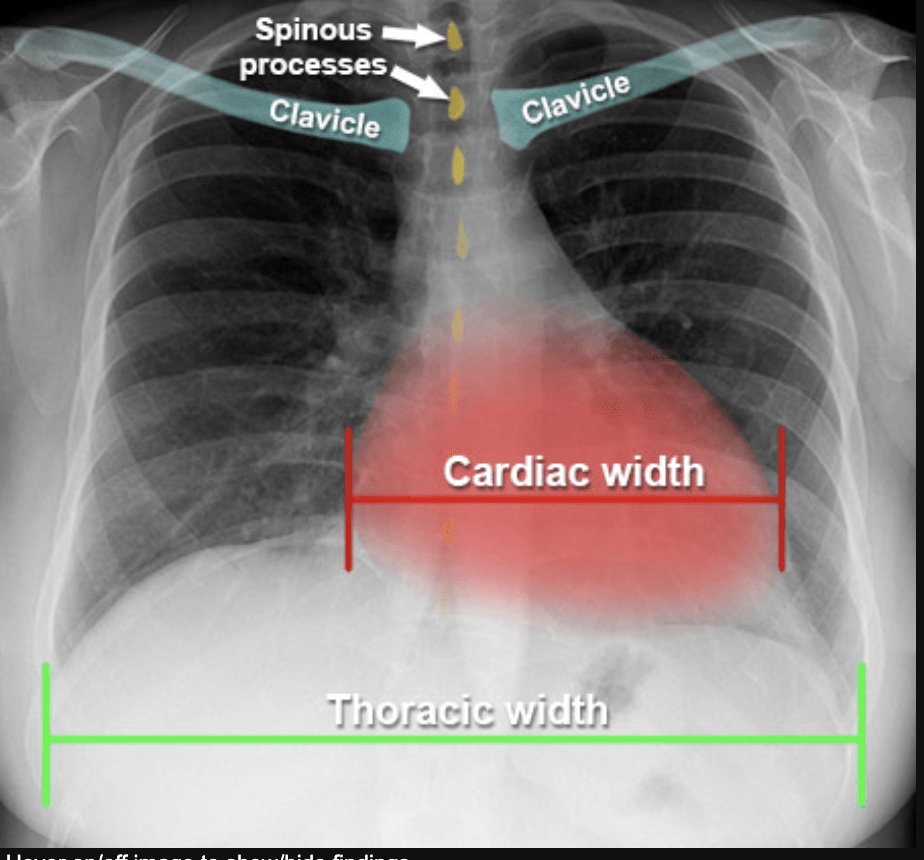

- Let's take this image, for example.

- What do we see in it?

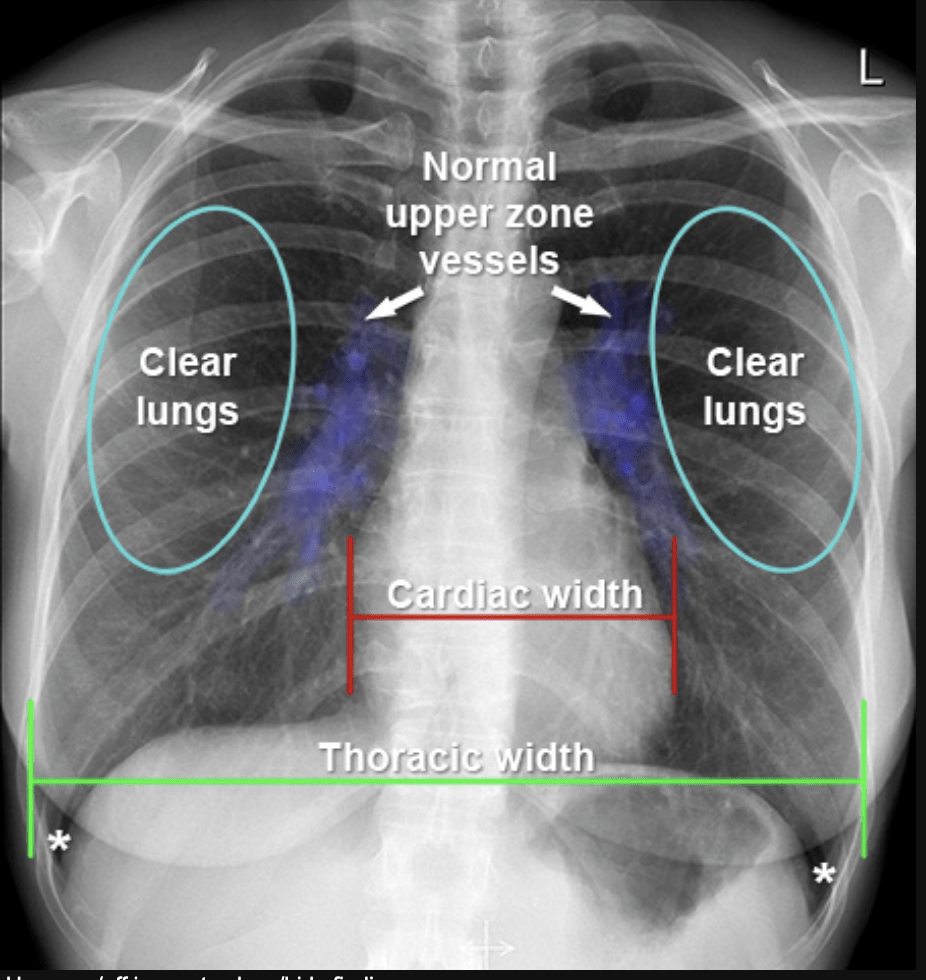

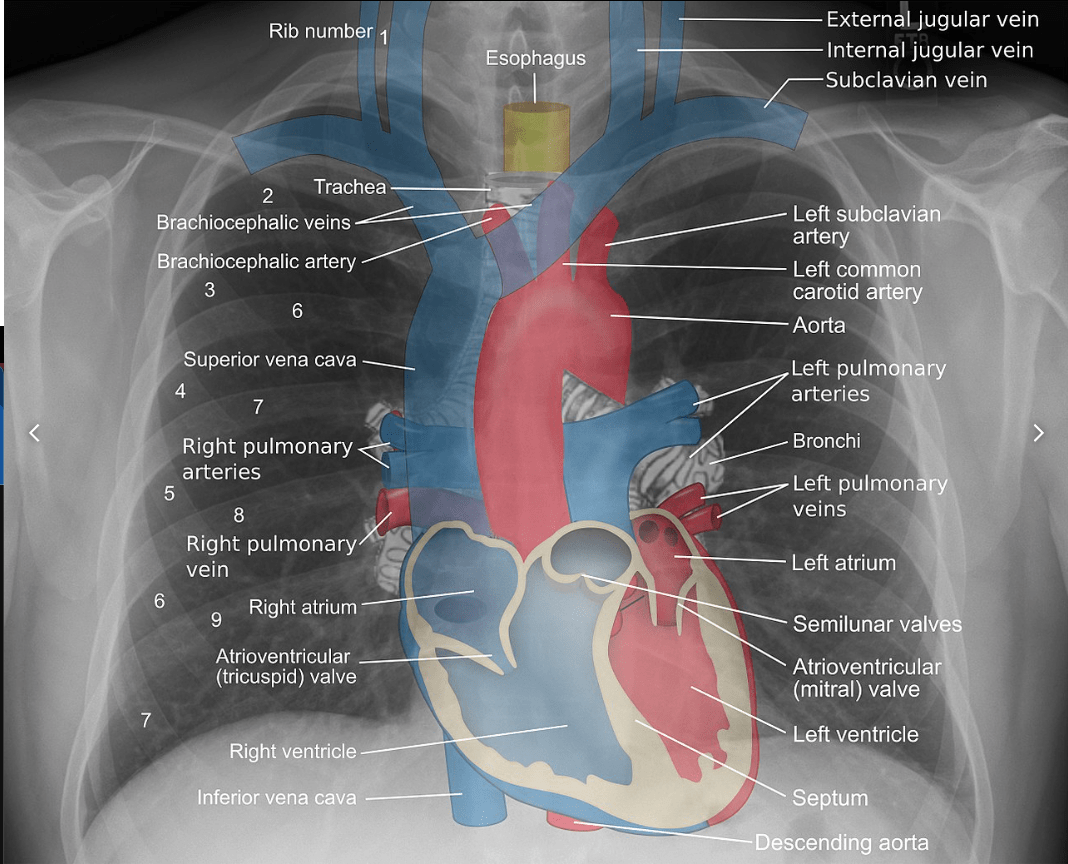

What do we look for in a CXR?

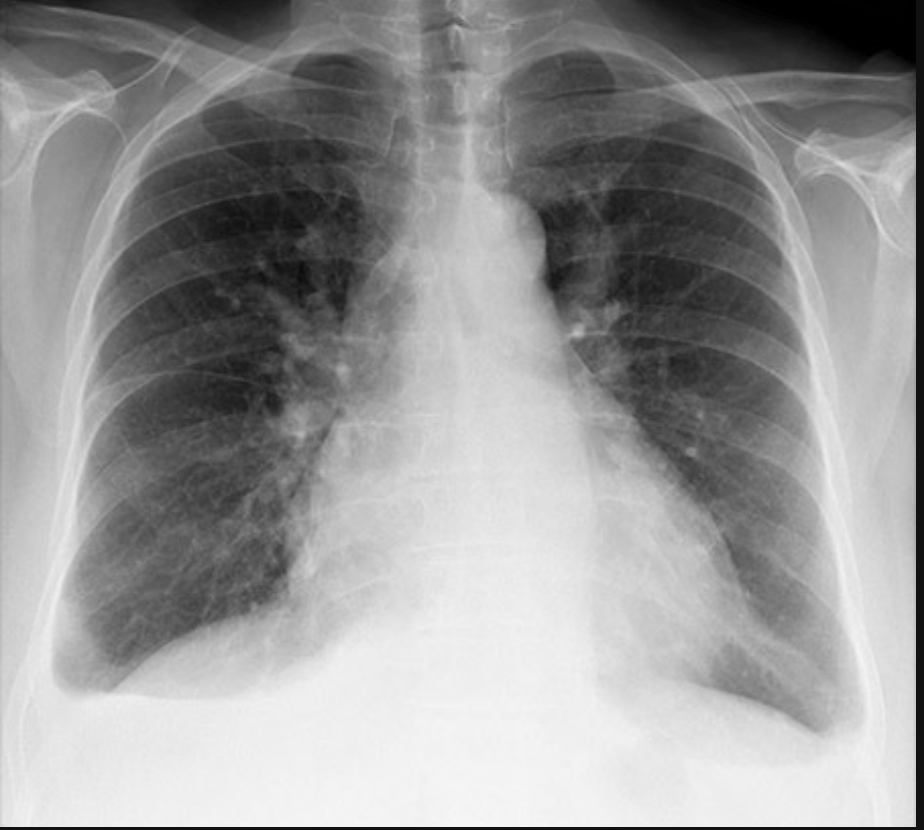

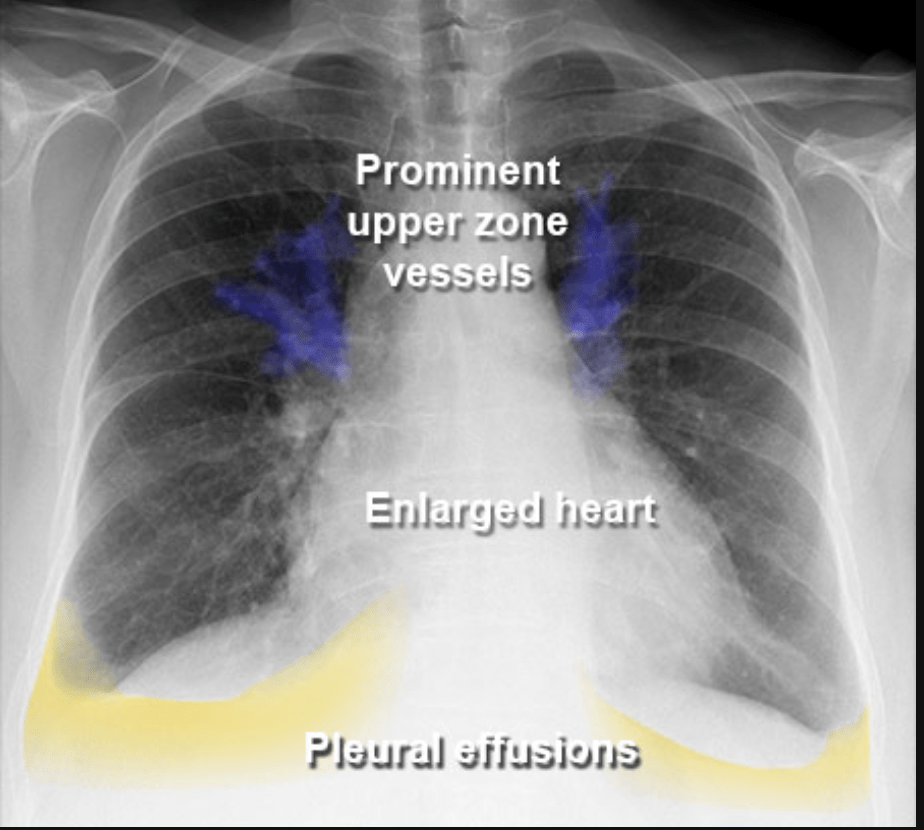

- How about this one?

Cardiomegaly - A big heart

What do we look for in a CXR?

- Water where it is not supposed to be.

Pleural Effusion

Chest Radiographs

Radiologists have a very strong mental model of what a CXR should look like

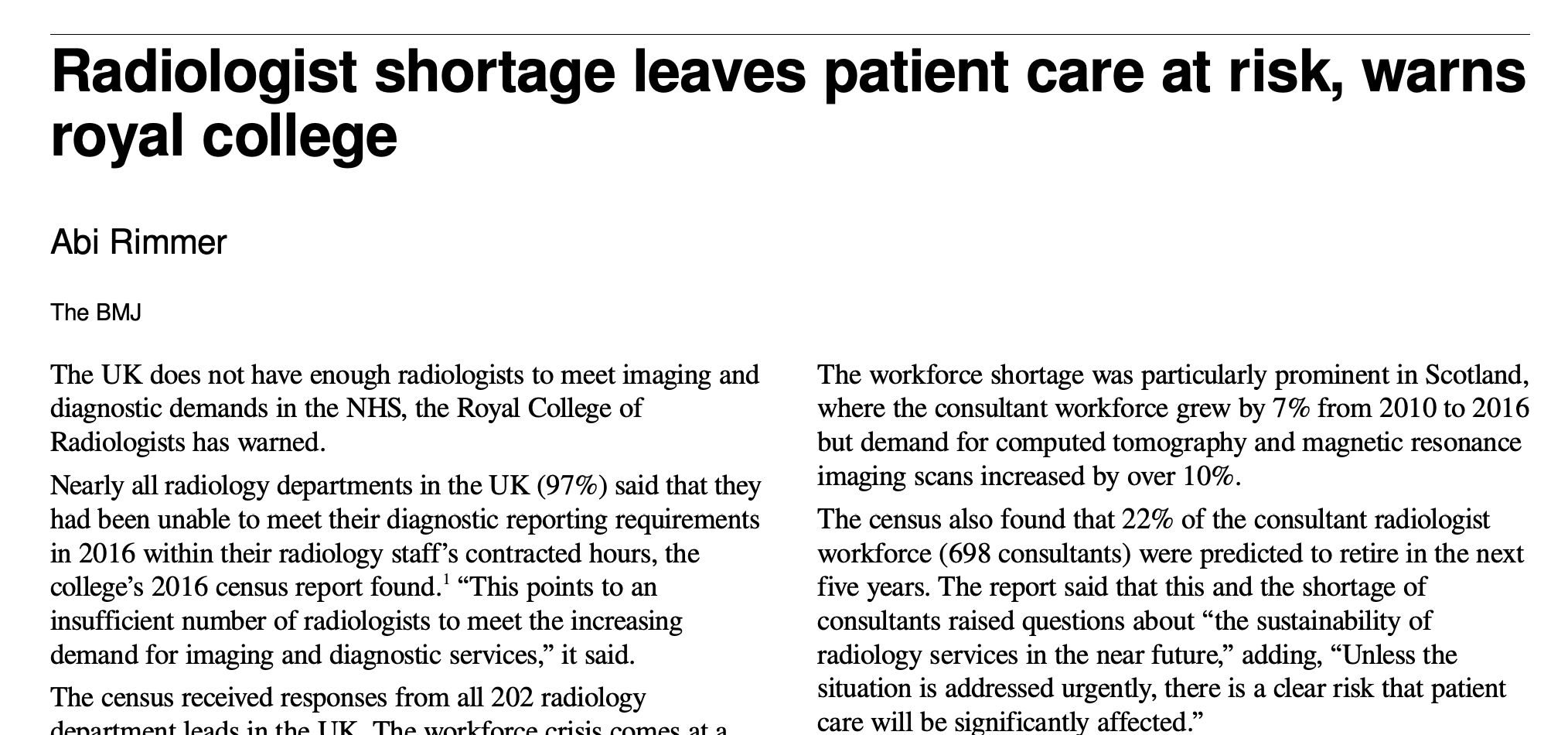

Why should we automate x-ray interpretation?

Delays in medical interpretation are bad

- 12 million people in Rwanda, 11 radiologists

- 4 million people in Liberia, 2 radiologists

- Most radiologists are in urban settings


What do we need to train CXR models?

- Data

- Models

- Domain Expertise

1. Data

Data is the new oil for machine learning

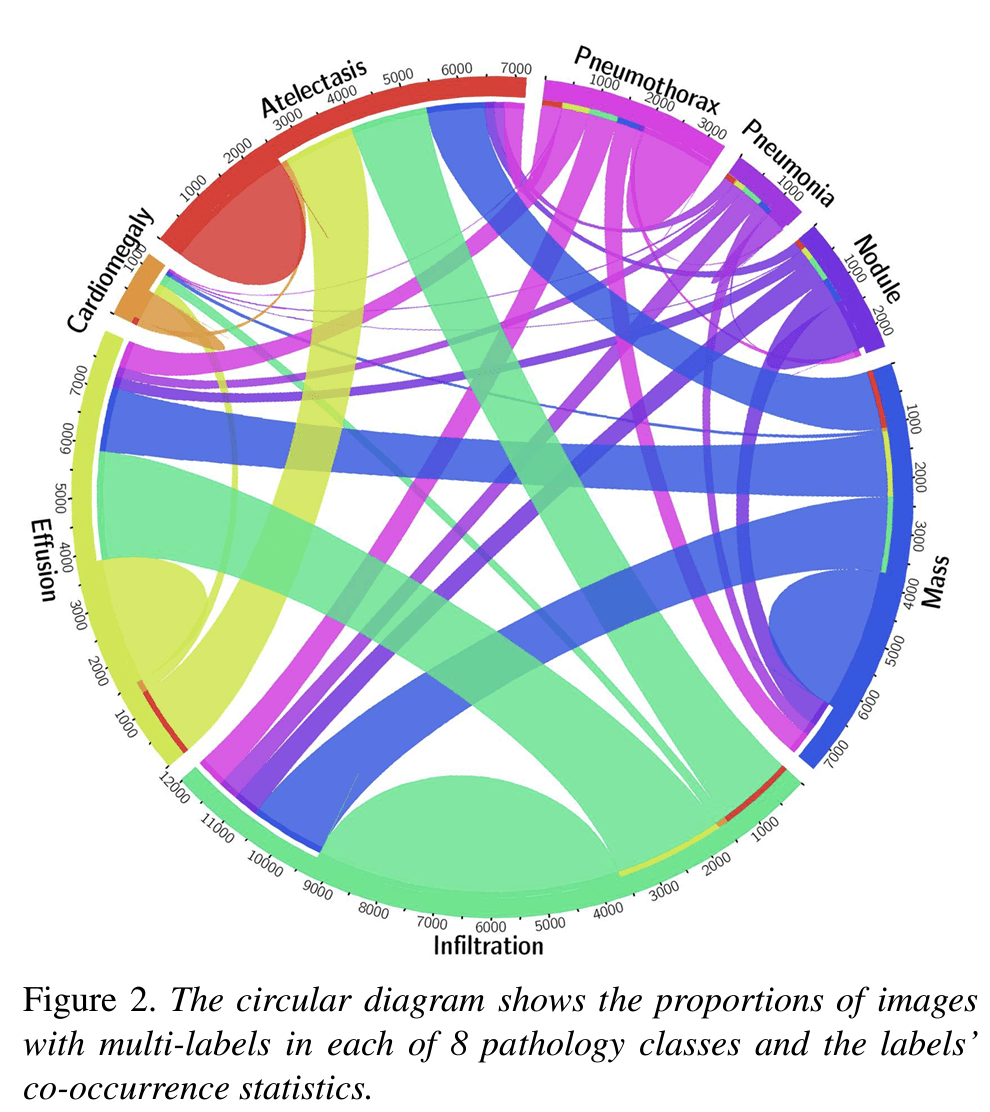

Era of Open Data

- 30,000+ patients

- 100,000+ images

- Each associated with 14 labels

- Derived automatically from free-text reports

- Freely, publicly available

NIH Dataset
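To make "each image has 14 labels" concrete, here is a minimal sketch of turning a label string into a multi-hot vector. It assumes the pipe-separated format used in the NIH metadata file (for example `Cardiomegaly|Effusion`, with `No Finding` for studies with none of the 14 findings). The helper name `encode_labels` is illustrative and not part of any library.

```python
from typing import List

# The 14 finding labels of the NIH ChestX-ray14 dataset.
LABELS = [
    "Atelectasis", "Cardiomegaly", "Effusion", "Infiltration", "Mass",
    "Nodule", "Pneumonia", "Pneumothorax", "Consolidation", "Edema",
    "Emphysema", "Fibrosis", "Pleural_Thickening", "Hernia",
]


def encode_labels(finding_string: str) -> List[int]:
    """Turn e.g. 'Cardiomegaly|Effusion' into a 14-element 0/1 vector.

    Assumption: findings are pipe-separated, and 'No Finding' marks a
    study with none of the 14 labels (it maps to an all-zero vector).
    """
    findings = {f.strip() for f in finding_string.split("|")}
    return [1 if label in findings else 0 for label in LABELS]


example = encode_labels("Cardiomegaly|Effusion")
normal = encode_labels("No Finding")
```

Because several findings can be present at once, this is a multi-label problem: a model is usually trained with one independent output per label rather than a single softmax over classes.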

Era of Open Data

NIH is not the only one

- JSRT Database - 247 DICOMs, with heart and lung segmentations

- Open-I Indiana University CXR - 8,121 DICOMs, 3,996 reports

- ChestX-ray14 - 112,120 PNGs, 14 labels

- MIMIC-CXR-JPG - 369,188 JPGs, 14 labels

- MIMIC-CXR - Same as above, with full-resolution DICOMs and the free-text reports

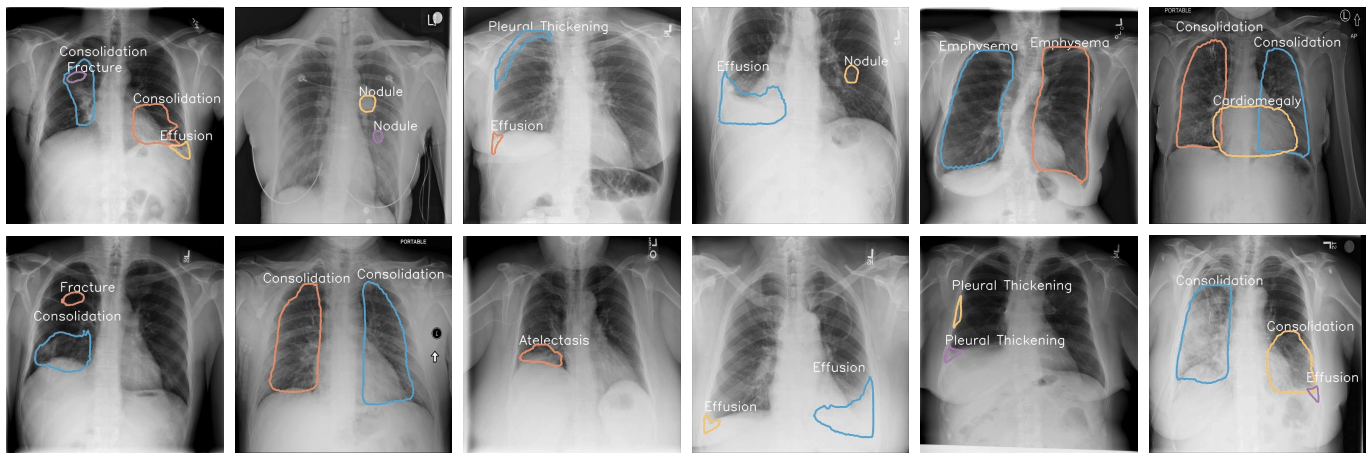

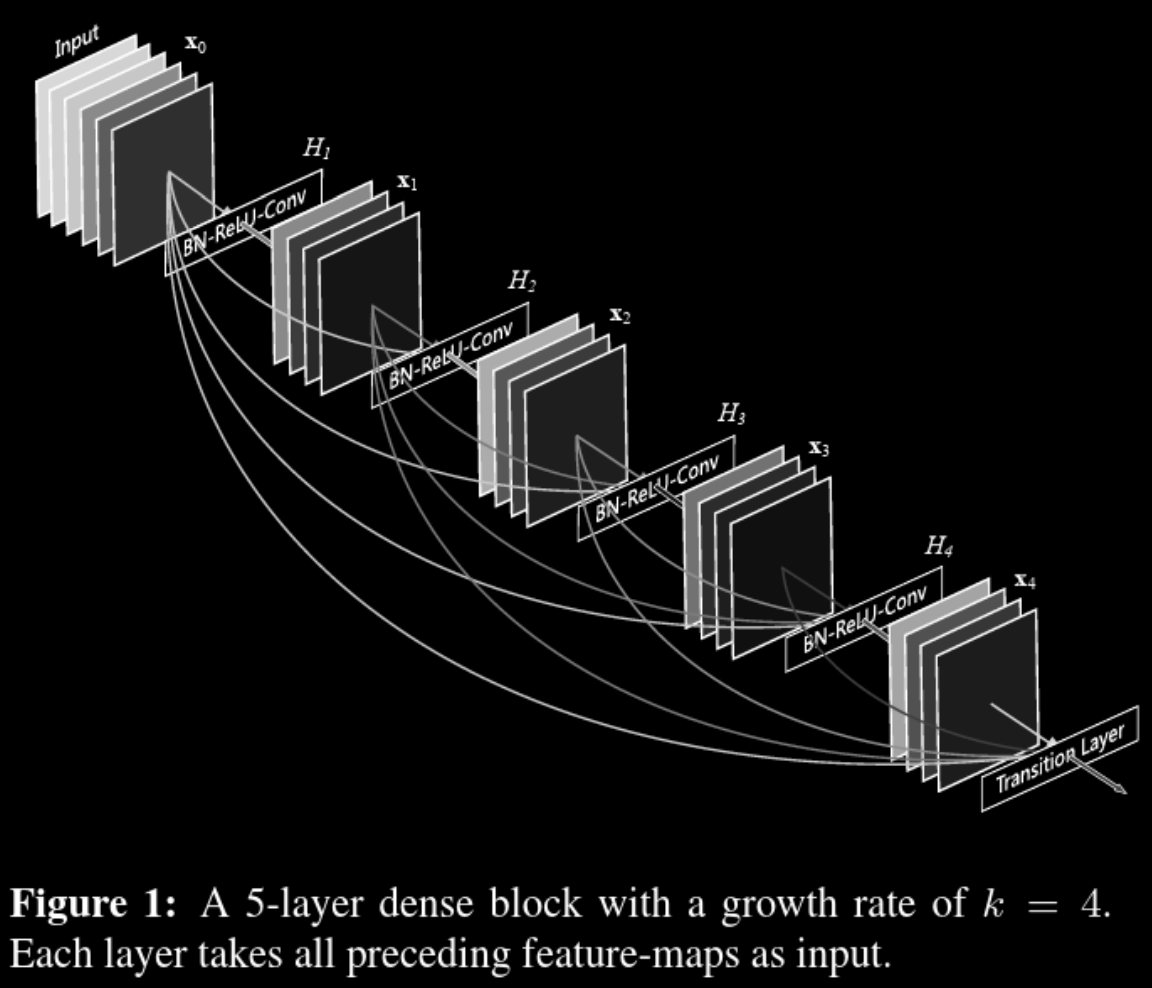

- VinDr-CXR - 18,000 PA views, 28 labels

- CheXpert - 224,316 JPGs, 14 labels

- PadChest - 160,000 PNGs

- RSNA + Kaggle - too many to list

- May overlap with other datasets

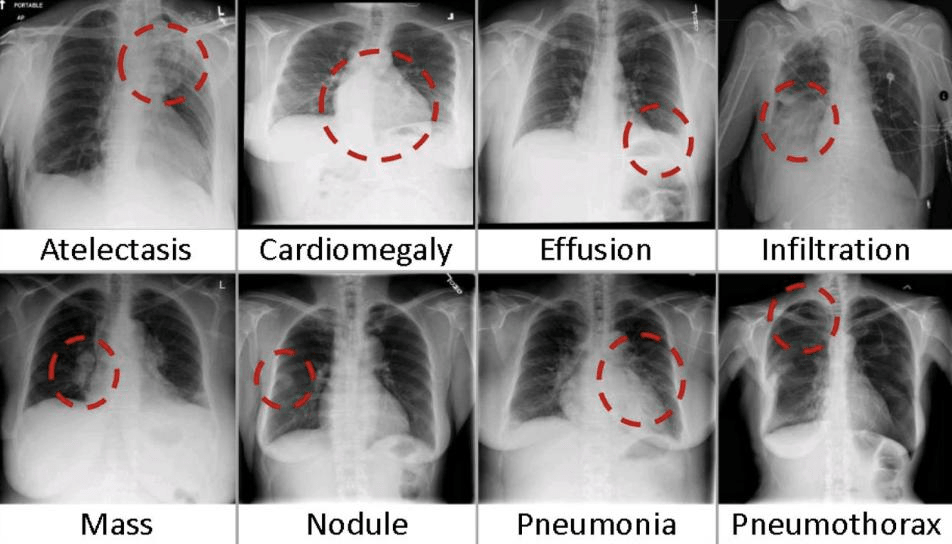

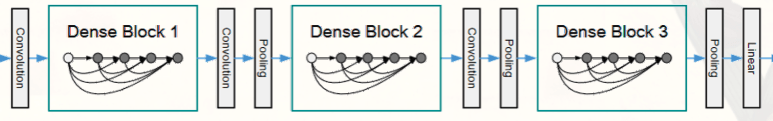

2. Models

Deep Learning Models are already good at Computer Vision

- DenseNet-121

- Shown to perform well in several publications

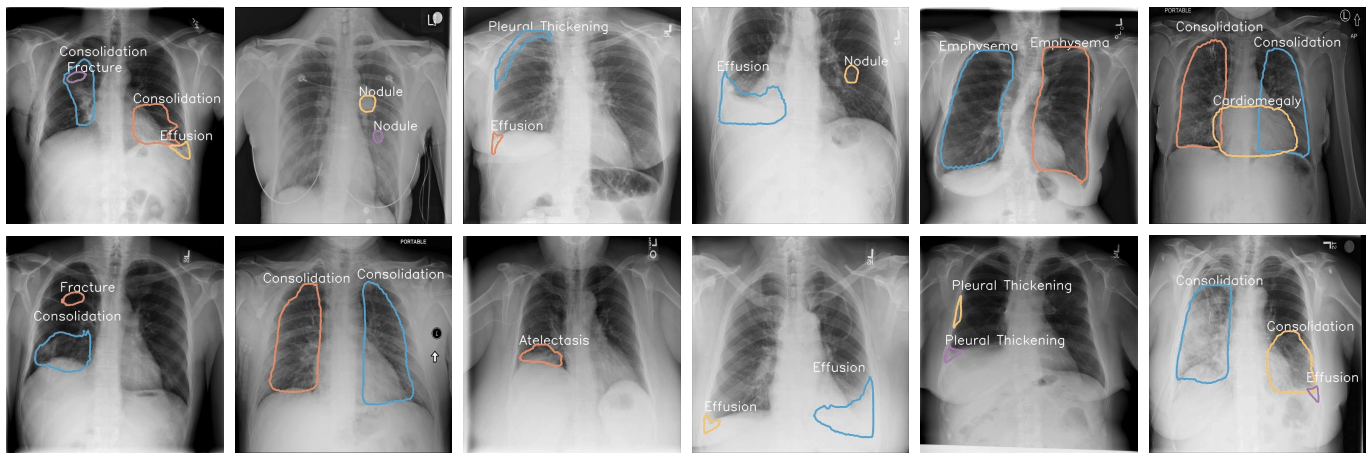

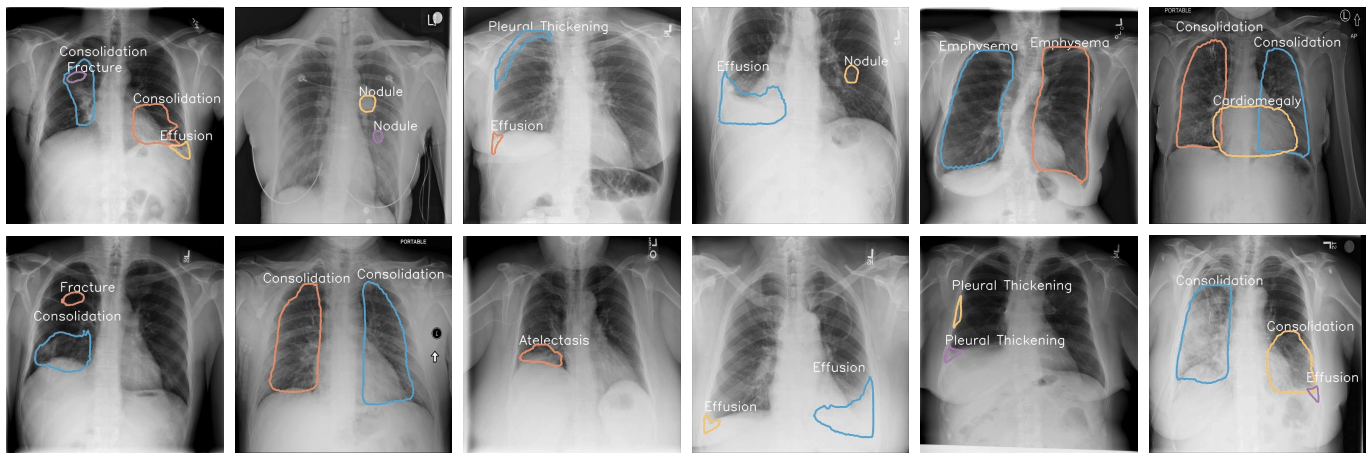

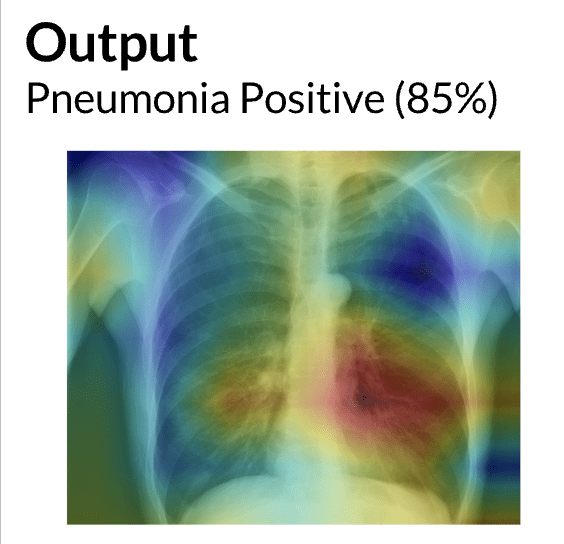

Case Study 01:

- Classify chest X-rays using a large labelled dataset

- Compare to domain experts (radiologists)

- Worked surprisingly well!

Code Walkthrough

Colab

Issue #1: Non-causal Features

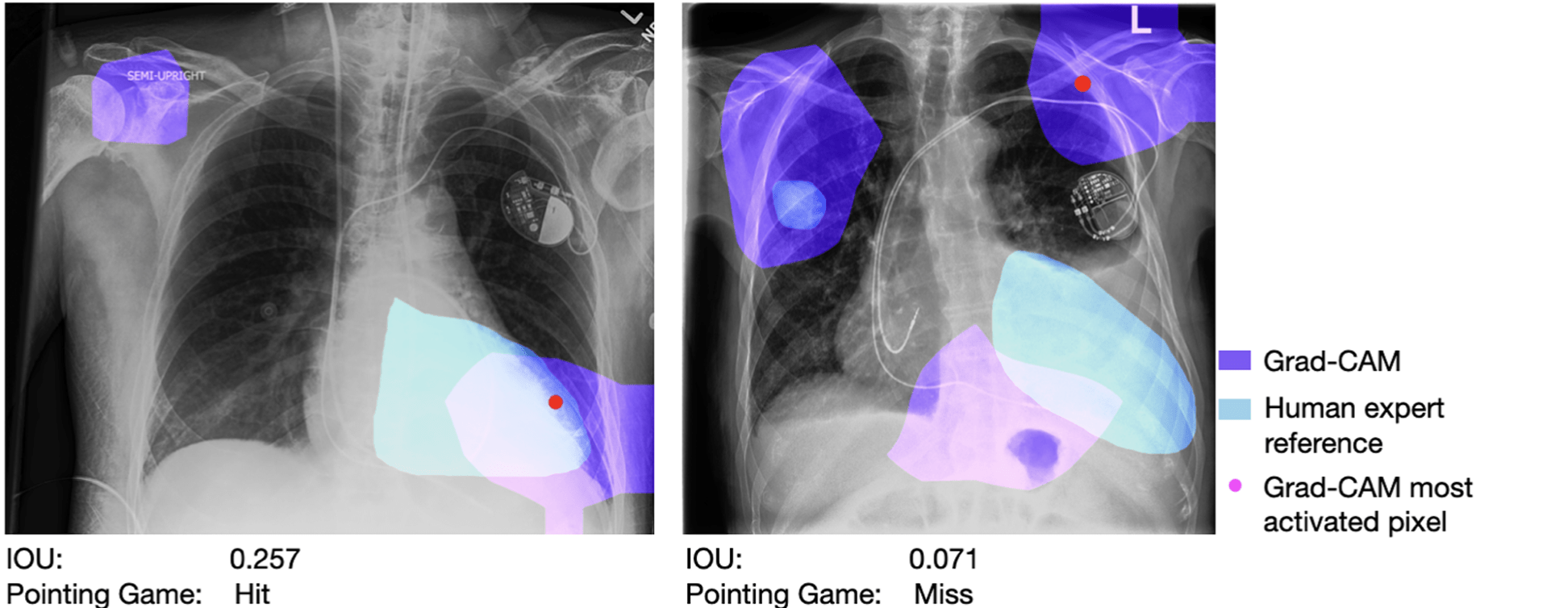

Deep learning saliency maps may not accurately highlight diagnostically relevant regions for medical image interpretation

Saporta, Adriel, et al. "Deep learning saliency maps do not accurately highlight diagnostically relevant regions for medical image interpretation." medRxiv 2021.02.28.21252634 (2021).

Solution 1: Block out non-causal features

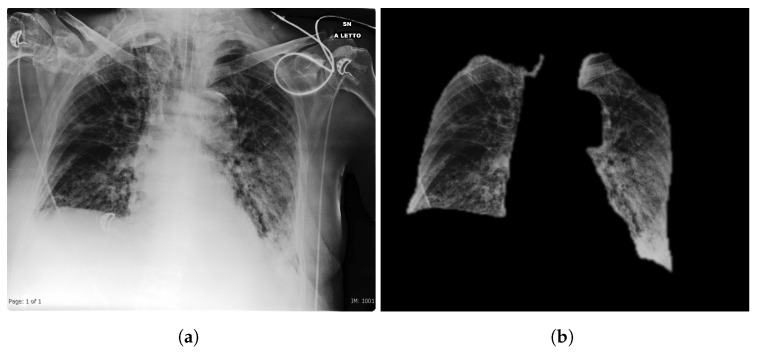

Original image (a) and extracted lung-segmented image (b). Many possible sources of bias, such as written annotations and medical equipment, are naturally removed.

Tartaglione, Enzo, et al. "Unveiling covid-19 from chest x-ray with deep learning: a hurdles race with small data." International Journal of Environmental Research and Public Health 17.18 (2020): 6933
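Once a lung segmentation is available, the masking step itself is simple. A minimal sketch, assuming the image and a binary lung mask (1 inside the lungs, 0 elsewhere) are given as equally sized nested lists. Producing the mask is the hard part and is not shown here. `apply_lung_mask` is an illustrative name, and with NumPy arrays the same operation is an element-wise product.

```python
from typing import List

Image = List[List[float]]


def apply_lung_mask(image: Image, mask: List[List[int]], fill: float = 0.0) -> Image:
    """Keep pixels where mask == 1 and replace everything else with `fill`."""
    same_rows = len(image) == len(mask)
    same_cols = all(len(r) == len(m) for r, m in zip(image, mask))
    if not (same_rows and same_cols):
        raise ValueError("image and mask must have the same shape")
    return [
        [pixel if keep else fill for pixel, keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


# Toy 3x4 "radiograph": the bright 0.9 corners stand in for burned-in text.
image = [
    [0.9, 0.2, 0.3, 0.9],
    [0.1, 0.5, 0.6, 0.1],
    [0.1, 0.4, 0.5, 0.1],
]
# Hypothetical lung mask covering the two middle columns.
mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]
masked = apply_lung_mask(image, mask)
```

After masking, the model can no longer use the corner markers as a shortcut, because those pixels carry the same constant value in every image.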

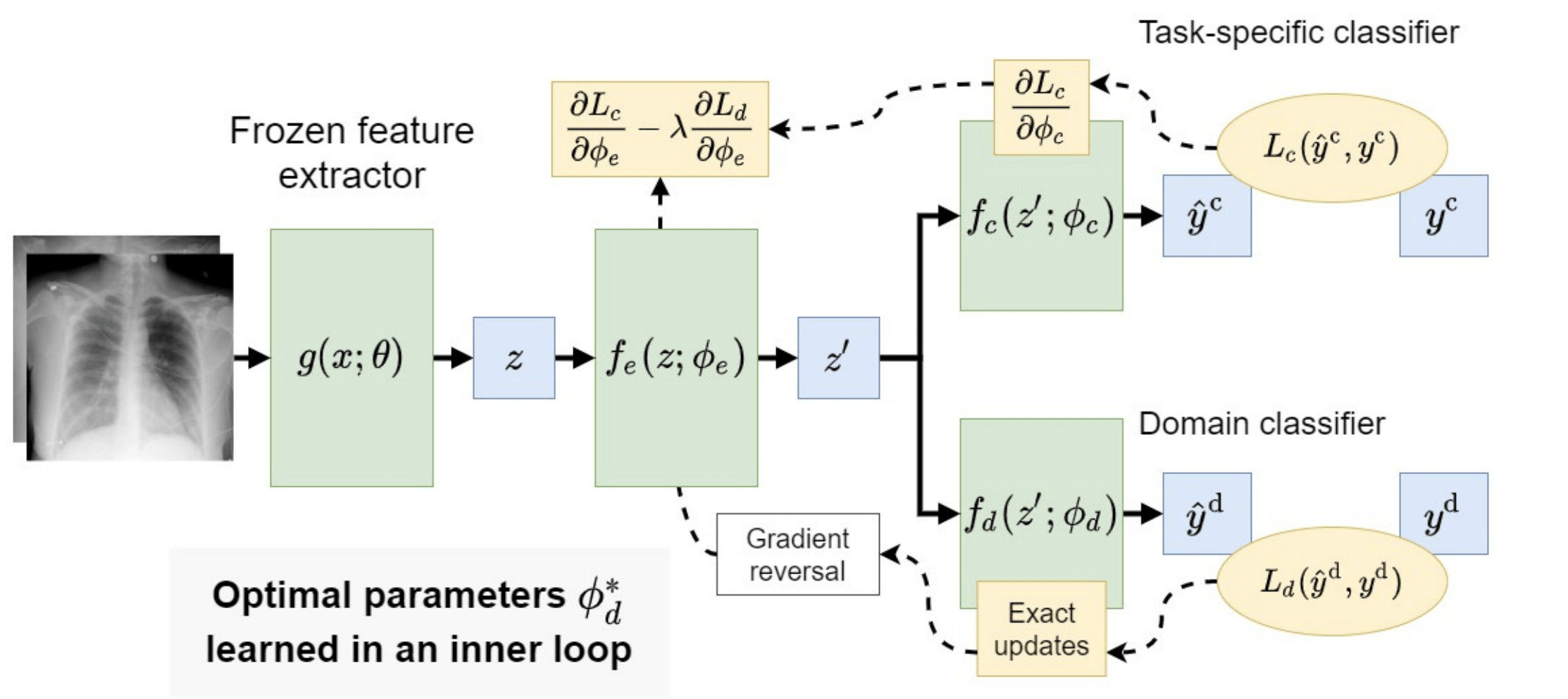

Solution 2: Explicitly model the non-causal factors

Learn a model that simultaneously predicts the class label and the domain label for a given CXR image. The parameters of the model are updated to extract representations that contain information about the class label but not about the domain label.
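One common way to write such an objective is the domain-adversarial formulation. This is a sketch of the general idea, not necessarily the exact loss used in the work cited in this talk. All symbols are assumptions introduced here: $f_\theta$ is the shared feature extractor, $c_\phi$ the class head, $d_\psi$ the domain head, $\ell$ a cross-entropy loss, $y$ the class label, $s$ the domain label (for example, the source hospital or dataset), and $\lambda > 0$ a trade-off weight.

```latex
\min_{\theta,\,\phi}\ \max_{\psi}\quad
\mathbb{E}_{(x,\,y,\,s)}\Big[
  \ell\big(c_\phi(f_\theta(x)),\, y\big)
  \;-\; \lambda\, \ell\big(d_\psi(f_\theta(x)),\, s\big)
\Big]
```

The domain head $d_\psi$ tries to predict $s$, while the feature extractor is pushed to make that prediction hard, so the learned features stay informative about $y$ but carry little information about $s$.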

Issue #2: Noisy Labels

FINDINGS:

Coarse bilateral interstitial opacities are consistent with patient's known interstitial lung disease. There is minimally increased prominence of pulmonary vasculature and heart size compared to prior, possibly secondary to slightly lower lung volumes and/or interval hydration/fluid overload. Mild congestive heart failure cannot be excluded. No pleural effusion or pneumothorax is seen. Underlying interstitial lung disease slightly limits evaluation for pneumonia, but no new large opacities are detected. Aortic calcification is again seen. A nasogastric tube traverses below the diaphragm, distal tip not well seen.

Result: labelled as positive for pneumonia

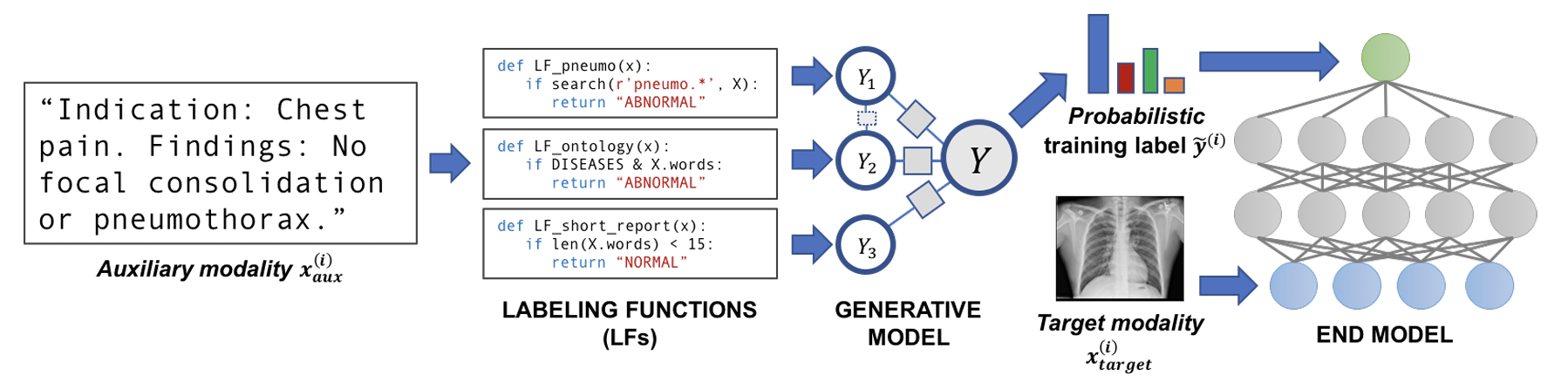

Solution: Learning with Noisy Labels

- Iteratively build labeling functions and use "unlabelled" data

A data programming pipeline uses clinician-written pattern rules on radiology text reports to automatically generate training labels for classifying abnormalities like pneumonia from chest X-rays.
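The idea of labeling functions can be sketched in a few lines. The rules below are illustrative, not clinician-written, and the votes are combined with a simple majority vote. That is a deliberate simplification: data programming as described by Dunnmon et al. learns a model that weights the functions instead. All function names are hypothetical.

```python
import re
from collections import Counter
from typing import List

POSITIVE, NEGATIVE, ABSTAIN = 1, 0, -1


def lf_mentions_pneumonia(report: str) -> int:
    """Naive rule: any mention of pneumonia counts as a positive vote."""
    return POSITIVE if re.search(r"\bpneumonia\b", report, re.I) else ABSTAIN


def lf_negated_pneumonia(report: str) -> int:
    """Vote negative when pneumonia is negated within the same sentence."""
    pattern = r"\b(no|without|negative for)\b[^.]*\bpneumonia\b"
    return NEGATIVE if re.search(pattern, report, re.I) else ABSTAIN


def lf_limited_evaluation(report: str) -> int:
    """Vote negative when the report only says evaluation is limited."""
    pattern = r"\blimits? evaluation for pneumonia\b"
    return NEGATIVE if re.search(pattern, report, re.I) else ABSTAIN


def lf_no_new_opacity(report: str) -> int:
    """Vote negative when the report states there are no new opacities."""
    pattern = r"\bno new (large )?opacit(y|ies)\b"
    return NEGATIVE if re.search(pattern, report, re.I) else ABSTAIN


LABELING_FUNCTIONS = [
    lf_mentions_pneumonia,
    lf_negated_pneumonia,
    lf_limited_evaluation,
    lf_no_new_opacity,
]


def majority_vote(votes: List[int]) -> int:
    """Combine votes, ignoring abstentions; ties and empty votes abstain."""
    counted = Counter(v for v in votes if v != ABSTAIN)
    if not counted:
        return ABSTAIN
    ranked = counted.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]


# Sentence taken from the example report in the "Noisy Labels" slide.
report = (
    "Underlying interstitial lung disease slightly limits evaluation for "
    "pneumonia, but no new large opacities are detected."
)
votes = [lf(report) for lf in LABELING_FUNCTIONS]
label = majority_vote(votes)
```

On this sentence the keyword rule alone votes positive, which is how the mislabel arises. The two context-aware rules outvote it, so the combined label is negative.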

Conclusions

- Chest X-ray image analysis is one part of a bigger workflow, from triage to diagnostics to treatment, and all of these are important stages of clinical care

- Datasets are available, machine learning methods can work

- Always good to know the caveats

- Working with domain experts is key

- Many libraries for X-ray data analysis are out there; TorchXRayVision is only one

- Get familiar with the data generating process

Questions

Acknowledgements

- Material was inspired by Alistair Johnson's presentation from CVPR 2021

- TorchXrayVision Team

References

- Dunnmon, Jared, et al. "Cross-modal data programming enables rapid medical machine learning." arXiv preprint arXiv:1903.11101 (2019).

- Trivedi, Anusua, et al. "Deep learning models for COVID-19 chest x-ray classification: Preventing shortcut learning using feature disentanglement." PLoS ONE 17.10 (2022): e0274098.

- Tartaglione, Enzo, et al. "Unveiling covid-19 from chest x-ray with deep learning: a hurdles race with small data." International Journal of Environmental Research and Public Health 17.18 (2020): 6933.

- Saporta, Adriel, et al. "Deep learning saliency maps do not accurately highlight diagnostically relevant regions for medical image interpretation." medRxiv 2021.02.28.21252634 (2021).