Looking into Solving the Shortest Path Problem (SPP) in Graphs of Convex Sets (GCS) with Distributed Algorithms

Short Talk RLG

October 23rd 2023

Bernhard Paus Graesdal

Disclaimer: Only worked on this for two weeks! Very preliminary

High Level Motivation

1. Solve large GCS instances

2. Leverage parallel computation

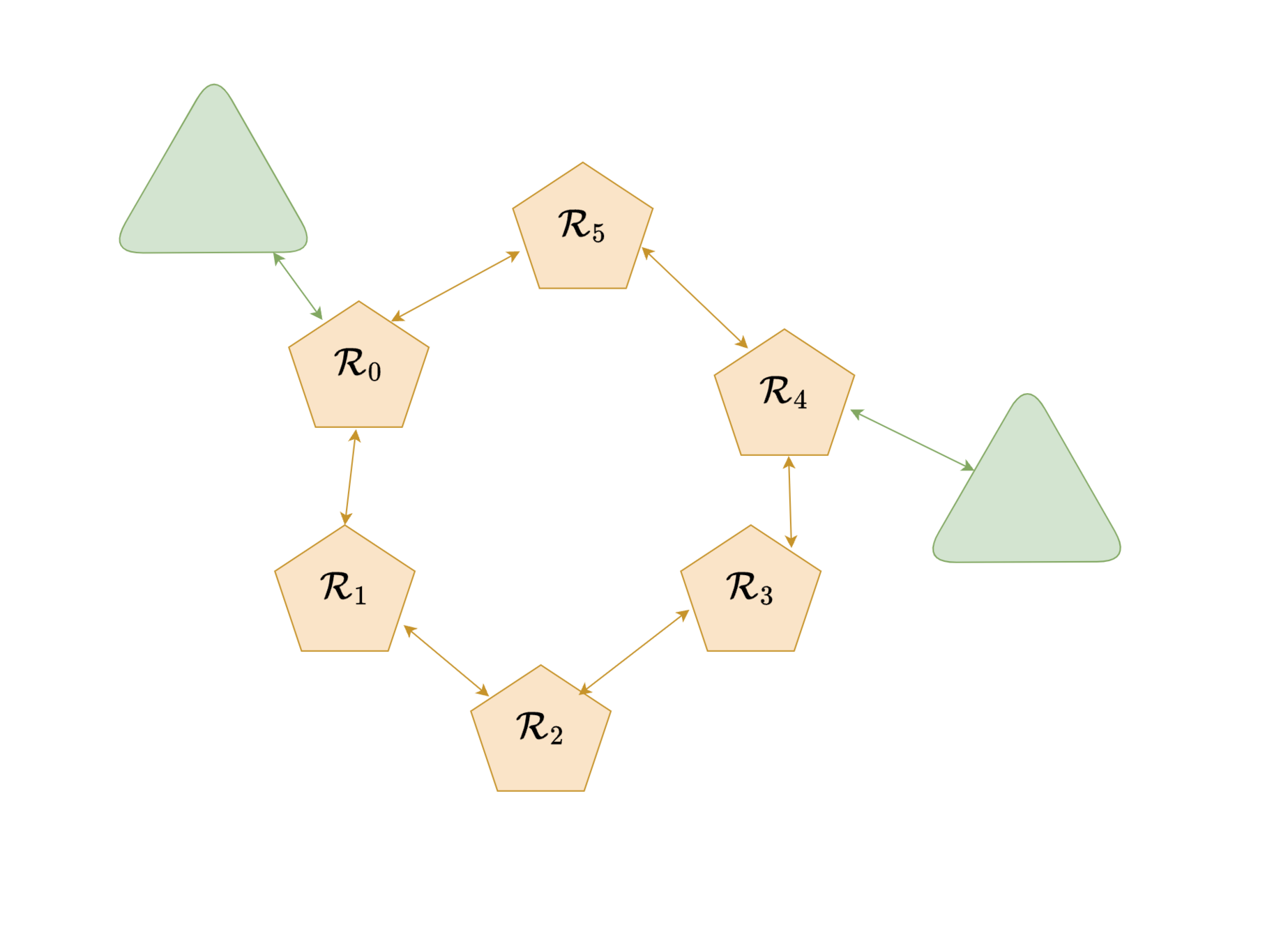

Motivational example: Large contact graphs

- Very large graphs

- Consistent structure for different problems

- Graph is very sparse (each node is only connected to two neighboring nodes)

Agenda

- Background on ADMM

- Two proposed ADMM formulations for GCS

- Some preliminary results

Great reference on ADMM:

[1] S. Boyd et al., “Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers,” Foundations and Trends in Machine Learning, 2010

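ADMM Introduction (1/3)

- General ADMM problem looks like this:

\[ \begin{aligned} \min_{x, z} \quad & f(x) + g(z) \\ \text{s.t.} \quad & Ax + Bz = c \end{aligned} \]

- \(f: \mathbb{R}^n \rightarrow \mathbb{R} \cup \{ \infty \} \) and \(g: \mathbb{R}^m \rightarrow \mathbb{R} \cup \{ \infty \} \) are convex functions

- Note that \(f\) and \(g\) can take the value \(+\infty\) (this is how we encode other constraints)

- \(A \in \mathbb{R}^{l \times n}\), \(B \in \mathbb{R}^{l \times m}\), and \(c \in \mathbb{R}^l\)

- \(x \in \mathbb{R}^n\) and \(z \in \mathbb{R}^m\) are optimization variables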

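ADMM Introduction (2/3)

- Form the Augmented Lagrangian:

\[ L_\rho(x, z, y) = f(x) + g(z) + y^\top (Ax + Bz - c) + \frac{\rho}{2} \|Ax + Bz - c\|_2^2 \]

- \(\rho > 0\) is a penalty parameter

- \(\|\cdot\|_2\) denotes the Euclidean norm

- \(y \in \mathbb{R}^l\) is a vector of dual variables

1. Initialization: Choose initial guesses \(x^{(0)}\), \(z^{(0)}\), and \(y^{(0)}\).

2. Iteration: For \(k = 0, 1, 2, \ldots\) until convergence, update:

\[ \begin{aligned} x^{(k+1)} &= \arg\min_x \; L_\rho(x, z^{(k)}, y^{(k)}) \\ z^{(k+1)} &= \arg\min_z \; L_\rho(x^{(k+1)}, z, y^{(k)}) \\ y^{(k+1)} &= y^{(k)} + \rho \left( Ax^{(k+1)} + Bz^{(k+1)} - c \right) \end{aligned} \]

3. Convergence check: Stop the iterations if the stopping criteria are satisfied.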
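To make the three steps concrete, here is a minimal Python/NumPy sketch of unscaled ADMM on a toy problem that is not from the talk: \( f(x) = \tfrac{1}{2}\|x - a\|_2^2 \), \( g(z) = \kappa \|z\|_1 \), \( A = I \), \( B = -I \), \( c = 0 \). Both subproblems then have closed forms (a scalar division and soft thresholding). The stopping test uses the primal residual \( \|x - z\| \) and the dual residual \( \rho \|z^{(k+1)} - z^{(k)}\| \) in the style of [1]; `kappa`, `tol`, and the function names are illustrative choices.

```python
import numpy as np


def soft_threshold(v, t):
    """Elementwise soft thresholding: the proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def admm_unscaled(a, kappa, rho=1.0, iters=200, tol=1e-8):
    """Unscaled ADMM for  min 0.5*||x - a||^2 + kappa*||z||_1  s.t.  x - z = 0.

    Toy instance with A = I, B = -I, c = 0, so Ax + Bz - c = x - z.
    """
    n = a.shape[0]
    x, z, y = np.zeros(n), np.zeros(n), np.zeros(n)  # x^(0), z^(0), y^(0)
    for _ in range(iters):
        # x-update: minimize f(x) + y^T (x - z) + (rho/2)||x - z||^2 (closed form)
        x = (a - y + rho * z) / (1.0 + rho)
        # z-update: minimize g(z) + y^T (x - z) + (rho/2)||x - z||^2 (soft thresholding)
        z_old = z
        z = soft_threshold(x + y / rho, kappa / rho)
        # dual update: y <- y + rho * (Ax + Bz - c)
        y = y + rho * (x - z)
        # convergence check: primal residual ||x - z||, dual residual rho*||z - z_old||
        if np.linalg.norm(x - z) < tol and rho * np.linalg.norm(z - z_old) < tol:
            break
    return x, z, y
```

For example, with `a = np.array([3.0, -0.5, 1.2])` and `kappa = 1.0`, the iterates approach the soft-thresholded vector \( (2, 0, 0.2) \), which is the exact minimizer of this toy problem.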

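ADMM Introduction (3/3)

Scaled price variables

- Introduce a scaled dual variable \( u := (1/\rho) y\), and reformulate the Augmented Lagrangian (by completing the square) as:

\[ L_\rho(x, z, u) = f(x) + g(z) + \frac{\rho}{2} \|Ax + Bz - c + u\|_2^2 - \frac{\rho}{2} \|u\|_2^2 \]

- This gives simpler update steps:

2. Iteration: For \(k = 0, 1, 2, \ldots\) until convergence, update:

\[ \begin{aligned} x^{(k+1)} &= \arg\min_x \left( f(x) + \frac{\rho}{2} \|Ax + Bz^{(k)} - c + u^{(k)}\|_2^2 \right) \\ z^{(k+1)} &= \arg\min_z \left( g(z) + \frac{\rho}{2} \|Ax^{(k+1)} + Bz - c + u^{(k)}\|_2^2 \right) \\ u^{(k+1)} &= u^{(k)} + Ax^{(k+1)} + Bz^{(k+1)} - c \end{aligned} \]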
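A minimal sketch of the scaled form, on a toy problem that is not from the talk: \( f(x) = \tfrac{1}{2}\|x - a\|_2^2 \), \( g(z) = \kappa \|z\|_1 \), with constraint \( x - z = 0 \). The linear dual term disappears from the subproblems and the dual step becomes a plain accumulation of residuals. The function names and `kappa` are illustrative assumptions.

```python
import numpy as np


def soft_threshold(v, t):
    """Elementwise soft thresholding: the proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def admm_scaled(a, kappa, rho=1.0, iters=300):
    """Scaled-form ADMM for  min 0.5*||x - a||^2 + kappa*||z||_1  s.t.  x - z = 0."""
    n = a.shape[0]
    x, z, u = np.zeros(n), np.zeros(n), np.zeros(n)  # u = y / rho
    for _ in range(iters):
        # x-update: minimize f(x) + (rho/2)||x - z + u||^2 (closed form)
        x = (a + rho * (z - u)) / (1.0 + rho)
        # z-update: minimize g(z) + (rho/2)||x - z + u||^2 (soft thresholding)
        z = soft_threshold(x + u, kappa / rho)
        # scaled dual update: u <- u + (Ax + Bz - c), which is x - z here
        u = u + x - z
    return x, z, u
```

Multiplying the returned `u` by \( \rho \) recovers the unscaled dual \( y \); for this toy problem it converges to \( a - z \), the negative gradient of \( f \) at the solution.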

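Example 1: Constrained Convex Optimization

- Consider the problem:

\[ \min_x \; f(x) \quad \text{s.t.} \quad x \in \mathcal{C} \]

- \( \mathcal{C} \subset \mathbb{R}^n \) a convex set

- \( f: \mathbb{R}^n \rightarrow \mathbb{R} \) a convex function

- Put the problem into ADMM form:

\[ \min_{x, z} \; f(x) + \tilde{I}_\mathcal{C}(z) \quad \text{s.t.} \quad x - z = 0 \]

- \( \tilde{I}_\mathcal{C} \) indicator function for set \( \mathcal{C} \): \( \tilde{I}_\mathcal{C}(z) = 0 \) if \( z \in \mathcal{C} \), and \( +\infty \) otherwise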

Example 1: Constrained Convex Optimization

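Update steps now take the form:

\[ \begin{aligned} x^{(k+1)} &= \arg\min_x \left( f(x) + \frac{\rho}{2} \|x - z^{(k)} + u^{(k)}\|_2^2 \right) \\ z^{(k+1)} &= \Pi_\mathcal{C}\left( x^{(k+1)} + u^{(k)} \right) \\ u^{(k+1)} &= u^{(k)} + x^{(k+1)} - z^{(k+1)} \end{aligned} \]

- x-update is an unconstrained convex optimization problem

- \( \Pi_\mathcal{C} \) is the Euclidean projection onto \( \mathcal{C} \)

- For many sets \( \mathcal{C} \) (e.g., boxes and Euclidean balls) the z-update step has a closed-form solution!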
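A minimal sketch of Example 1, assuming (for illustration only) a quadratic objective \( f(x) = \tfrac{1}{2} x^\top P x + q^\top x \) and a box \( \mathcal{C} = \{ x : \text{lo} \le x \le \text{hi} \} \). The x-update is then a linear solve with \( P + \rho I \), and the projection onto the box is elementwise clipping, an instance where the z-update has a closed form. `admm_box_qp` is a hypothetical name.

```python
import numpy as np


def admm_box_qp(P, q, lo, hi, rho=1.0, iters=500):
    """ADMM for  min 0.5*x^T P x + q^T x  s.t.  lo <= x <= hi  (P positive semidefinite).

    ADMM form: f(x) = 0.5*x^T P x + q^T x, g(z) = indicator of the box, x - z = 0.
    """
    n = q.shape[0]
    x, z, u = np.zeros(n), np.zeros(n), np.zeros(n)
    K = P + rho * np.eye(n)  # x-update system matrix (positive definite since rho > 0)
    for _ in range(iters):
        # x-update: unconstrained QP, solve (P + rho*I) x = rho*(z - u) - q
        x = np.linalg.solve(K, rho * (z - u) - q)
        # z-update: Euclidean projection onto the box is elementwise clipping
        z = np.clip(x + u, lo, hi)
        # scaled dual update
        u = u + x - z
    return z
```

With a diagonal \( P \) the problem separates per coordinate, so the result can be checked by clipping the unconstrained minimizer \( -q_i / P_{ii} \) to the box.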

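Example 2: Global Variable Consensus

- Consider the problem:

\[ \min_z \; \sum_{i=1}^N f_i(z) \]

- \( f_i: \mathbb{R}^n \rightarrow \mathbb{R} \) convex functions

- \( z \in \mathbb{R}^n \)

- Put the problem into ADMM form:

\[ \min_{x_1, \ldots, x_N, z} \; \sum_{i=1}^N f_i(x_i) \quad \text{s.t.} \quad x_i - z = 0, \quad i = 1, \ldots, N \]

- \( x_i \in \mathbb{R}^n \) are "local copies" of \( z \)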

Example 2: Global Variable Consensus

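Update steps now take the form:

\[ \begin{aligned} x_i^{(k+1)} &= \arg\min_{x_i} \left( f_i(x_i) + {y_i^{(k)}}^\top (x_i - z^{(k)}) + \frac{\rho}{2} \|x_i - z^{(k)}\|_2^2 \right) \\ z^{(k+1)} &= \frac{1}{N} \sum_{i=1}^N \left( x_i^{(k+1)} + \frac{1}{\rho} y_i^{(k)} \right) \\ y_i^{(k+1)} &= y_i^{(k)} + \rho \left( x_i^{(k+1)} - z^{(k+1)} \right) \end{aligned} \]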

- x-update is unconstrained convex optimization

- x-update can be solved in parallel

- y- and z-updates are very cheap
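Example 2 can be sketched with least-squares terms \( f_i(z) = \tfrac{1}{2}\|A_i z - b_i\|_2^2 \), which is an illustrative assumption rather than anything from the talk. The sketch uses the unscaled dual variables \( y_i \). The loop over \( i \) in the x-update is where a parallel implementation would dispatch independent jobs; the z- and y-updates are a vector average and \( N \) vector additions.

```python
import numpy as np


def consensus_admm(As, bs, rho=1.0, iters=2000):
    """Global variable consensus ADMM for  min_z sum_i 0.5*||A_i z - b_i||^2.

    Uses unscaled dual variables y_i, one per local copy x_i.
    """
    N, n = len(As), As[0].shape[1]
    z = np.zeros(n)
    xs = [np.zeros(n) for _ in range(N)]
    ys = [np.zeros(n) for _ in range(N)]
    for _ in range(iters):
        # x-updates: N independent small problems (this loop could run in parallel)
        # minimize 0.5||A_i x - b_i||^2 + y_i^T (x - z) + (rho/2)||x - z||^2
        for i in range(N):
            lhs = As[i].T @ As[i] + rho * np.eye(n)
            rhs = As[i].T @ bs[i] - ys[i] + rho * z
            xs[i] = np.linalg.solve(lhs, rhs)
        # z-update: average of the local copies, each shifted by y_i / rho
        z = np.mean([xs[i] + ys[i] / rho for i in range(N)], axis=0)
        # dual updates: one cheap vector update per local copy
        for i in range(N):
            ys[i] = ys[i] + rho * (xs[i] - z)
    return z
```

Since \( \sum_i f_i(z) = \tfrac{1}{2}\|Az - b\|_2^2 \) with the \( A_i \) and \( b_i \) stacked, the result can be checked against an ordinary least-squares solve such as `numpy.linalg.lstsq`.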

Solving GCS with ADMM

Two paths:

1. Solve the non-convex SPP in GCS problem directly

2. Solve the convex relaxation

1. Solve the non-convex problem directly

- Non-convex (naive) formulation of SPP in GCS:

- Bi-convex in \( y_e \) and \( x_v \) (except for integrality on \( y_e \) )

- Let us use ADMM to decompose the problem

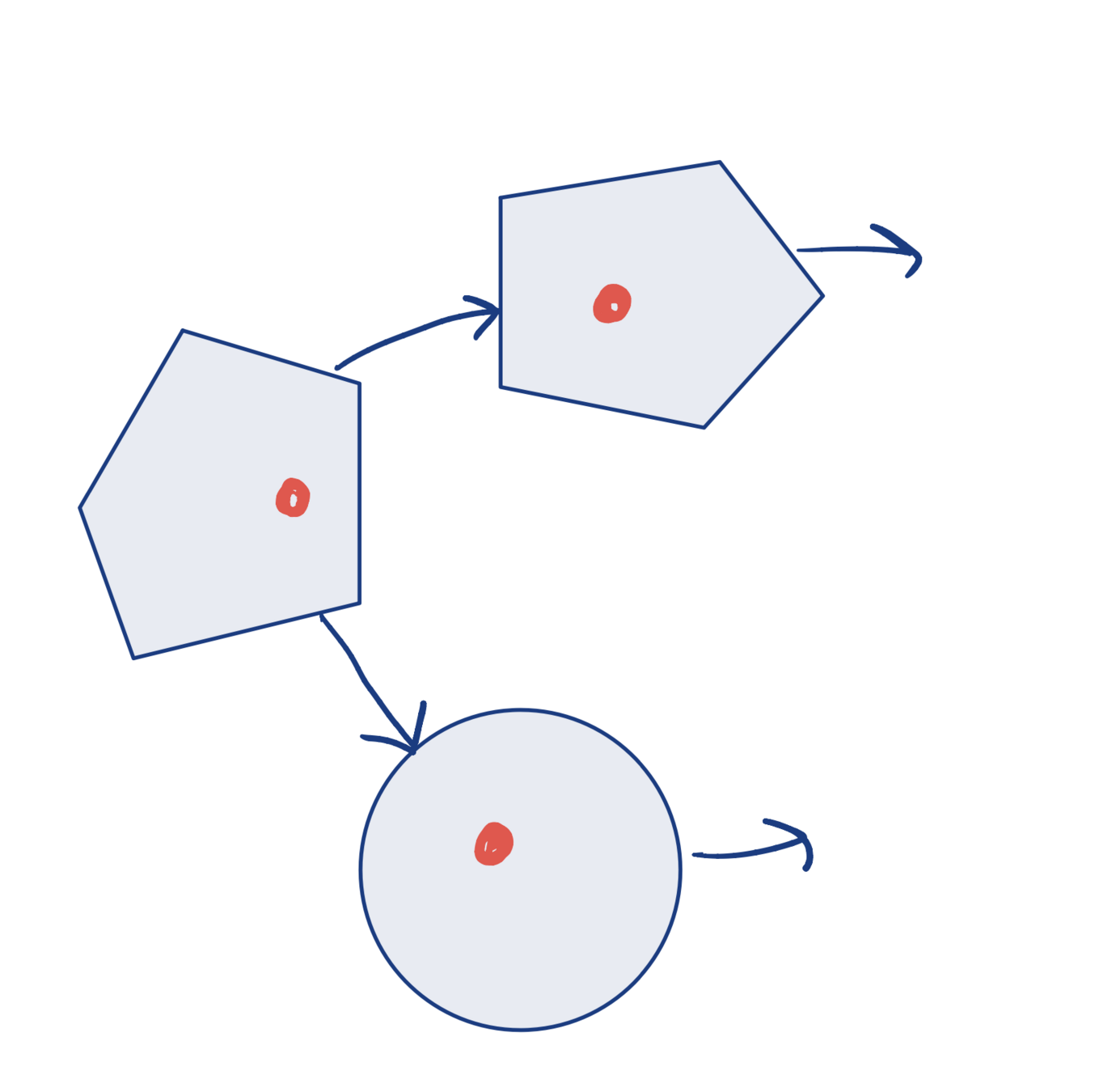

1. Solve the non-convex problem directly

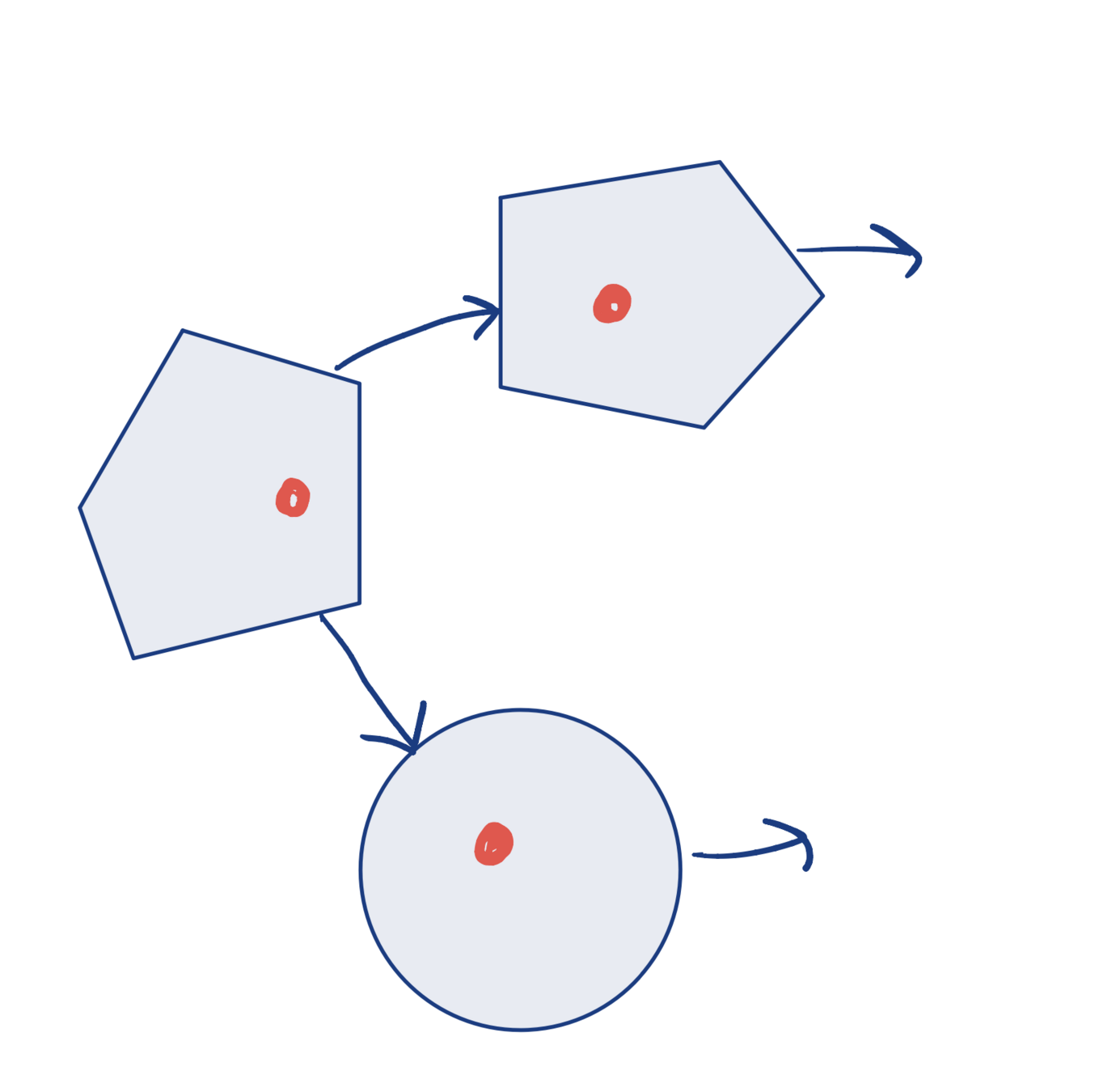

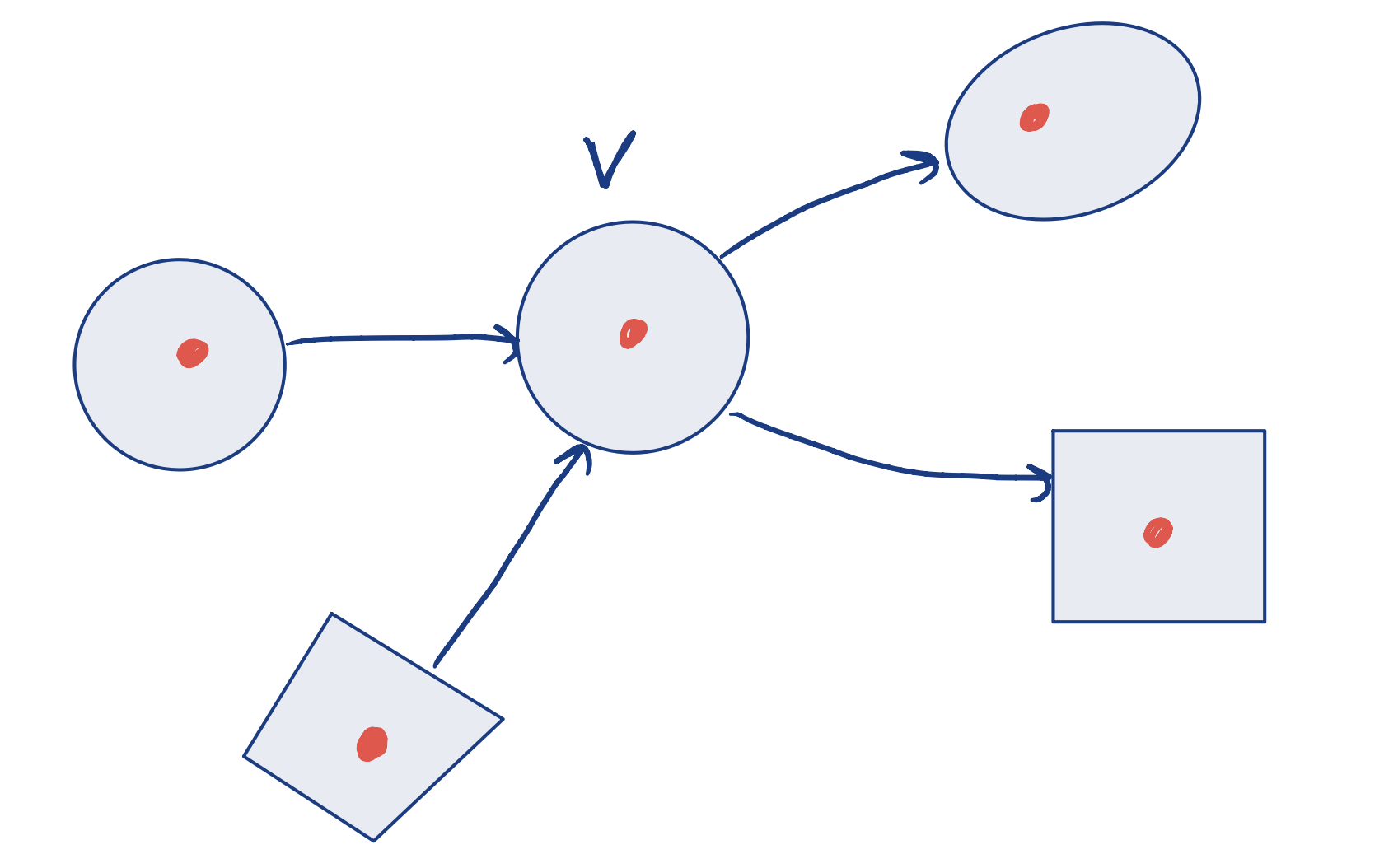

- Use the "global variable consensus" trick and decompose over edges

- Introduce auxiliary variables ("local variables") for each edge: for every \(e = (u, v)\), introduce the local variables \(x_{eu}\) and \(x_{ev}\)

(Figure: original \( \longrightarrow \) with local copies)

- Add a "consensus constraint" for each edge:

\[ x_{eu} = x_u, \quad x_{ev} = x_v, \quad \forall e = (u, v) \in \mathcal{E} \]

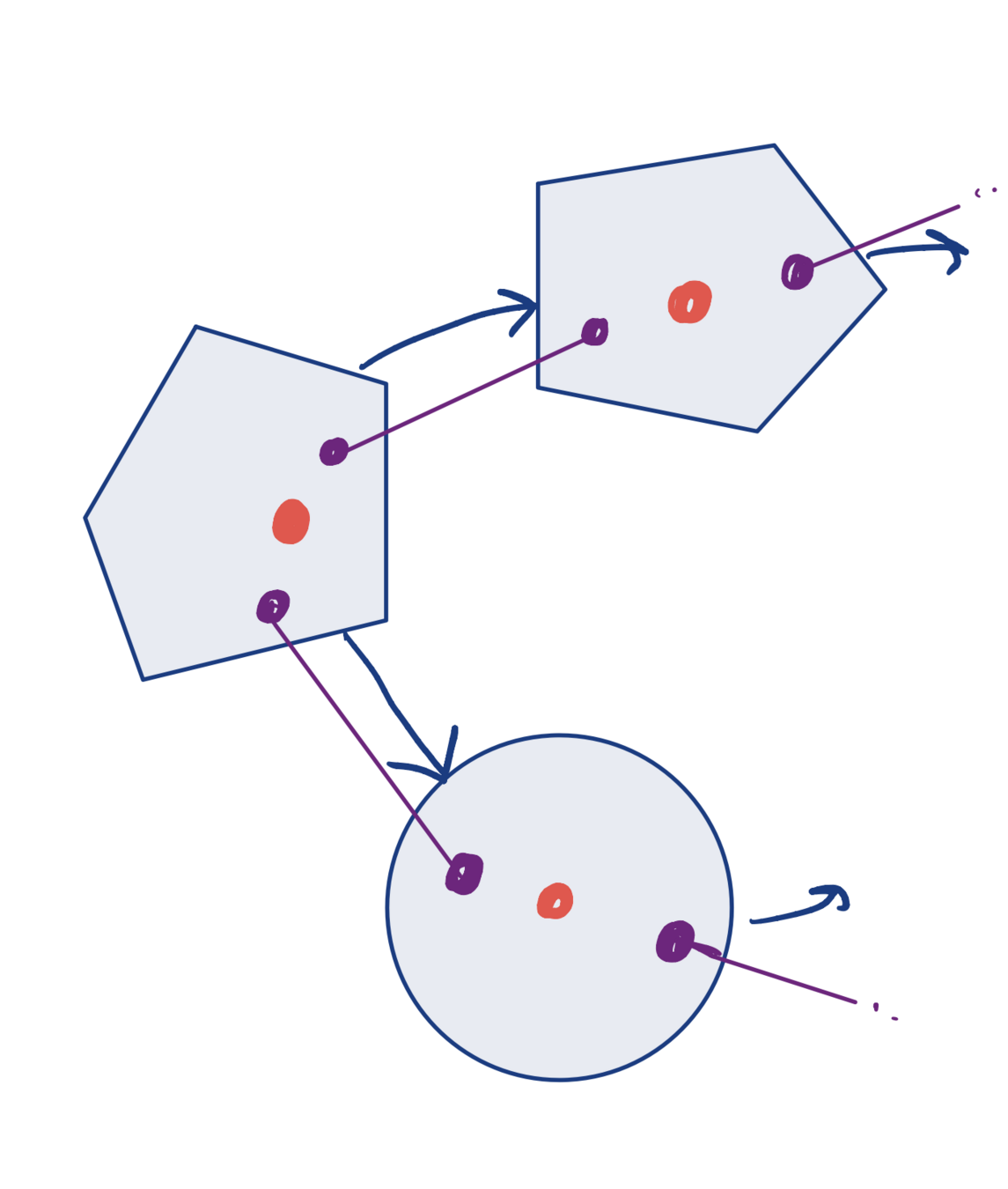
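A minimal data-structure sketch of the edge-wise local copies, assuming vertex variables are stored as NumPy vectors keyed by vertex name and directed edges are given as `(u, v)` pairs. The helpers `make_local_copies`, `copies_per_vertex`, and `consensus_residual` are hypothetical. Each \( x_v \) gets one copy per incident edge, and the consensus constraints hold exactly when the residual is zero.

```python
import numpy as np


def make_local_copies(x_vertex, edges):
    """Give every edge e = (u, v) its own copies (x_eu, x_ev) of the vertex variables."""
    return {(u, v): (x_vertex[u].copy(), x_vertex[v].copy()) for (u, v) in edges}


def copies_per_vertex(edges):
    """Number of local copies of each x_v, i.e. the number of edges incident to v."""
    count = {}
    for (u, v) in edges:
        count[u] = count.get(u, 0) + 1
        count[v] = count.get(v, 0) + 1
    return count


def consensus_residual(x_vertex, local):
    """Euclidean norm of all stacked consensus violations x_eu - x_u and x_ev - x_v."""
    sq = 0.0
    for (u, v), (x_eu, x_ev) in local.items():
        sq += np.sum((x_eu - x_vertex[u]) ** 2) + np.sum((x_ev - x_vertex[v]) ** 2)
    return float(np.sqrt(sq))
```

Because the copies are independent arrays, each edge can modify its own copies (for instance in a parallel local update) without touching the shared vertex variables.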

1. Solve the MICP directly

- For each edge \(e = (u, v)\), introduce the local variables \(x_{eu}\) and \(x_{ev}\), and rewrite the SPP GCS problem with the "consensus constraints" \(x_{eu} = x_u\) and \(x_{ev} = x_v\)

- The cost now decomposes independently over edges; the edges are coupled only through the consensus constraints

- Perform consensus over vertices

- Relax the consensus constraints into the Augmented Lagrangian

- Use the indicator function for all other constraints (keep them)


1. Solve the MICP directly

We now have three blocks of variables:

- Consensus variables: \( x_v, \, v \in \mathcal{V} \)

- Local variables: \((x_{eu}, x_{ev}), \, e = (u,v) \in \mathcal{E} \)

- Flow variables: \( y_e, \, e \in \mathcal{E} \)

The problem is bi-convex in these

1. Solve the MICP directly

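1. Local update: Solve a small shortest path problem between two vertices

- Can be solved in parallel

2. Consensus update: Closed-form mean:

\[ x_v^{(k+1)} = \frac{1}{|\mathcal{E}_v|} \sum_{e \in \mathcal{E}_v} \left( x_{ev}^{(k+1)} + \lambda_{ev}^{(k)} \right) \]

where \( \mathcal{E}_v \) is the set of edges incident to \( v \), \( x_{ev} \) is the local copy of \( x_v \) held by edge \( e \), and \( \lambda_{ev} \) is the scaled dual variable for the consensus constraint \( x_{ev} = x_v \)

3. Discrete update: Reduces to a discrete SPP (solve with Dijkstra)

4. Dual update:

\[ \lambda_{ev}^{(k+1)} = \lambda_{ev}^{(k)} + x_{ev}^{(k+1)} - x_v^{(k+1)} \]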
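The consensus, discrete, and dual updates listed above can be sketched as follows. This is a hypothetical illustration, not the author's code: local copies and scaled duals are keyed by `(edge, vertex)`, every vertex is assumed to have at least one incident edge, and the discrete update assumes the per-edge costs (e.g., the costs implied by the current local variables) are already computed and nonnegative, as Dijkstra's algorithm requires. The local update is omitted because it depends on the edge cost function.

```python
import heapq

import numpy as np


def consensus_and_dual_update(local, lam, vertices):
    """Consensus mean and scaled dual update for the vertex variables.

    local[(e, v)]: local copy x_ev of x_v held by edge e (a NumPy vector)
    lam[(e, v)]:   scaled dual variable for the consensus constraint x_ev = x_v
    Assumes every vertex has at least one incident edge.
    """
    x = {}
    for v in vertices:
        keys = [k for k in local if k[1] == v]  # one key per edge in E_v
        x[v] = np.mean([local[k] + lam[k] for k in keys], axis=0)
    new_lam = {k: lam[k] + local[k] - x[k[1]] for k in local}
    return x, new_lam


def discrete_update(edge_cost, source, target):
    """Binary flows y_e on a shortest source-target path (Dijkstra, nonnegative costs)."""
    adj = {}
    for (u, v), c in edge_cost.items():
        adj.setdefault(u, []).append((v, c))
    dist, pred, done = {source: 0.0}, {}, set()
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u in done:
            continue
        done.add(u)
        if u == target:
            break
        for v, c in adj.get(u, []):
            if d + c < dist.get(v, float("inf")):
                dist[v] = d + c
                pred[v] = u
                heapq.heappush(heap, (d + c, v))
    # Walk back from the target and set y_e = 1 on the path (assumes target is reachable)
    y = {e: 0 for e in edge_cost}
    v = target
    while v != source:
        y[(pred[v], v)] = 1
        v = pred[v]
    return y
```

Keeping the duals keyed per (edge, vertex) pair mirrors the fact that every local copy carries its own consensus constraint.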

- Simple problems

- Expect convergence issues on harder instances

- Still, the "global" decision of which path to take changes several times during the iterations!

2. Solve the convex relaxation

- Convex relaxation of the GCS problem:

- \( z_e \): spatial flow into edge \( e \)

- \( z_e' \): spatial flow out of edge \( e \)

- \( y_e \): (relaxed) flow on edge \( e \)

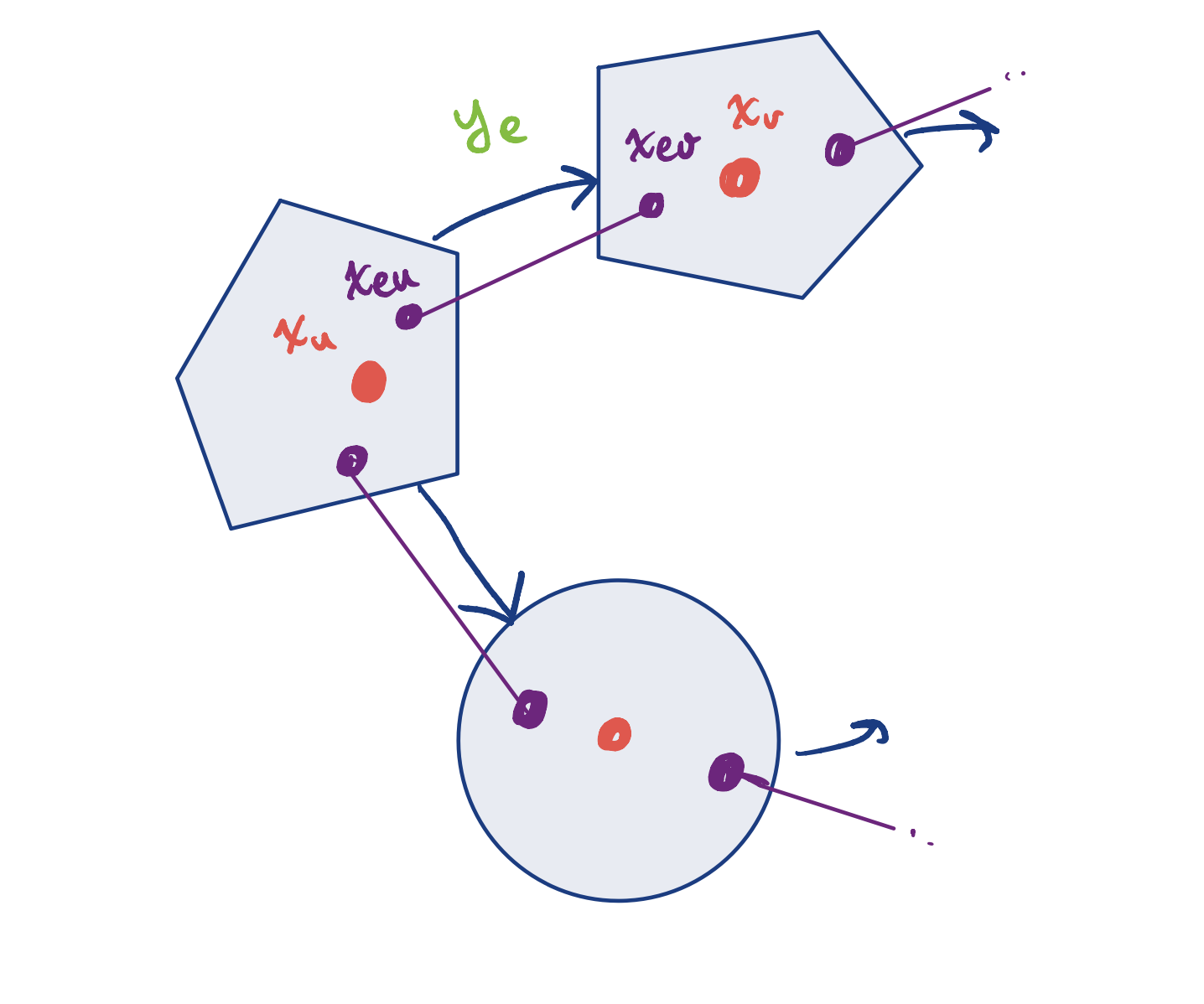

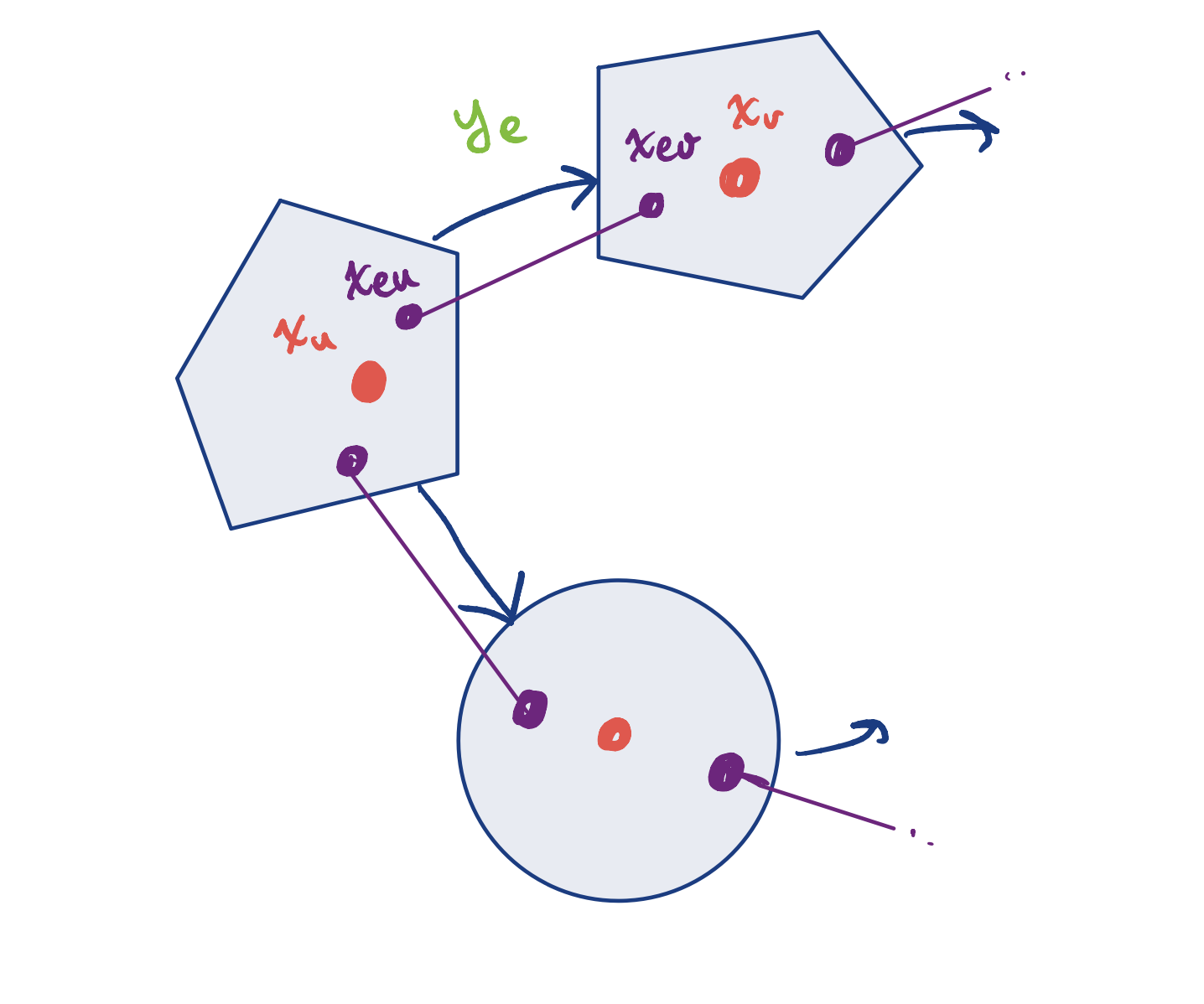

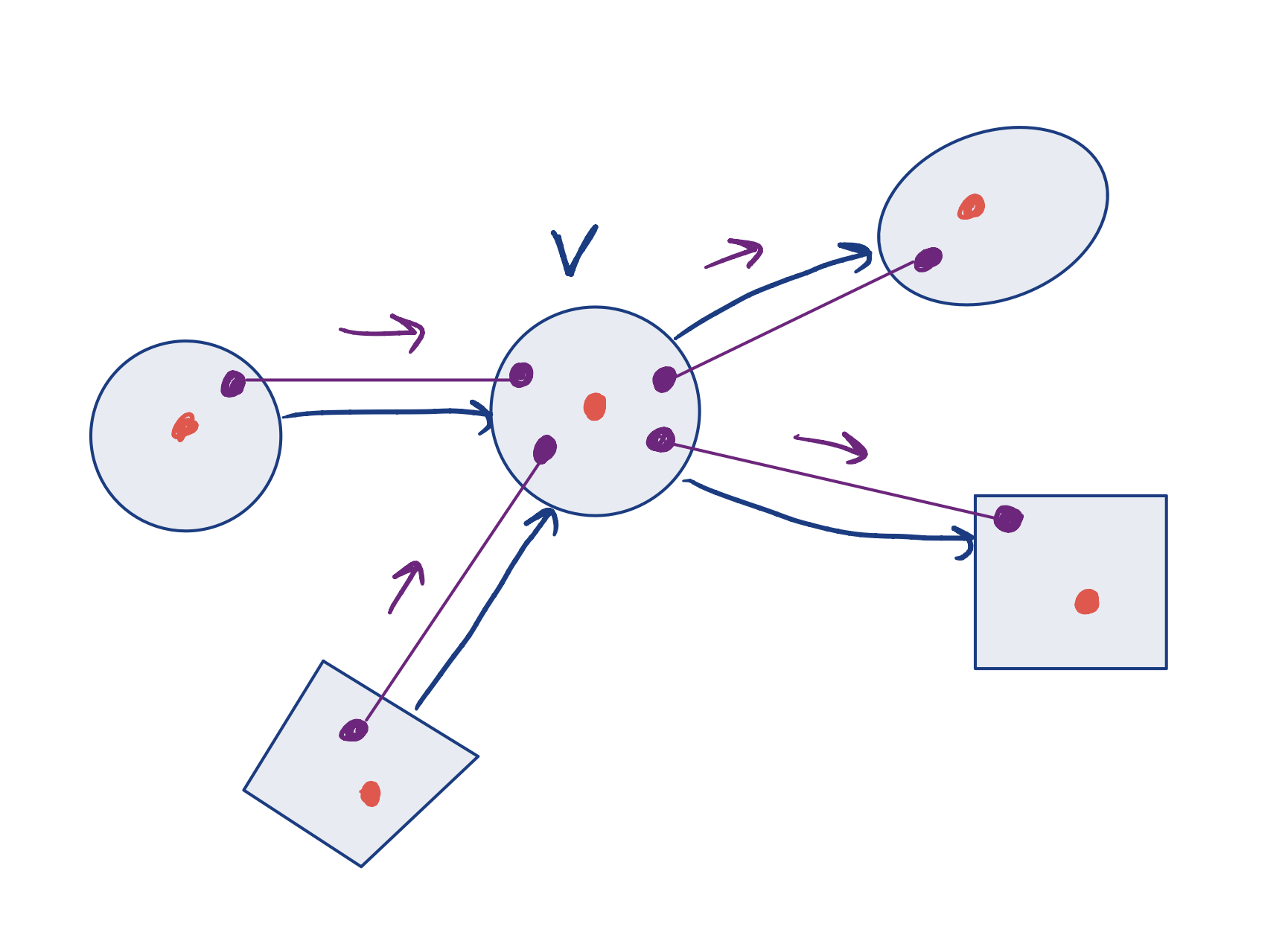

2. Solve the convex relaxation

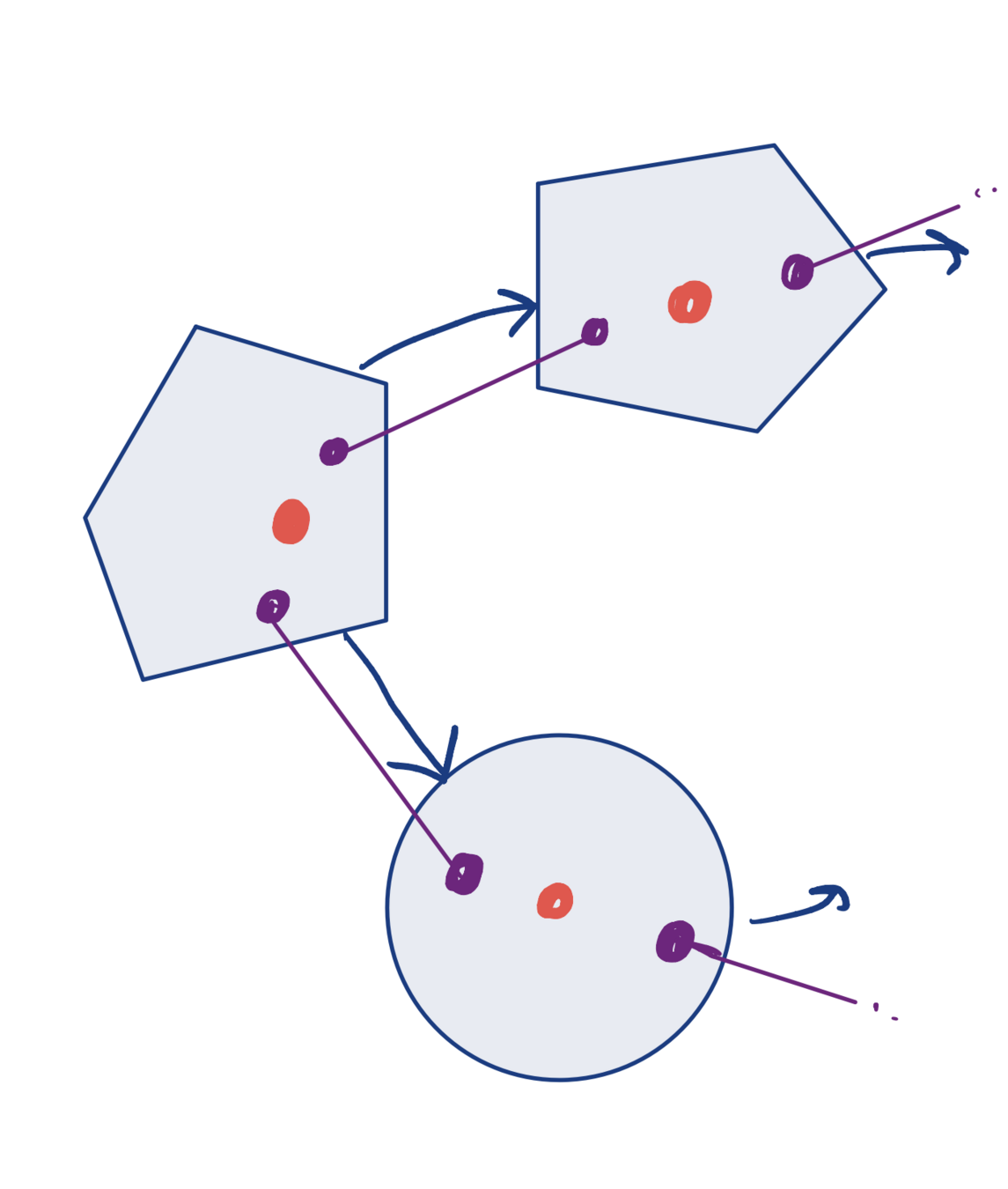

- To make the cost terms and all constraints independent, we now decompose over vertices

- For each vertex, introduce local copies of the variables on its incident edges

- Do consensus over edges

(Figure: original \( \longrightarrow \) with local copies)

2. Solve the convex relaxation

We now have two blocks of variables:

- Local variables (over vertices): \( z_{ve}, z_{ve}', y_{ve}, \, \forall v \in \mathcal{V}, \forall e \in \mathcal{E}_v\), where \( \mathcal{E}_v \) is the set of edges incident to \( v \)

- Consensus variables (over edges): \((z_{e}, z_{e}', y_e), \, \forall e \in \mathcal{E} \)

2. Solve the convex relaxation

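1. Local update (on vertices): For each vertex, solve a small SOCP involving its neighboring vertices

- Can be solved in parallel

2. Consensus update (on edges): For each edge \( e = (u, v) \), compute the consensus variables \( w_e := (z_e, z_e', y_e) \) as "the mean" over the local edge variables \( w_{ue} := (z_{ue}, z_{ue}', y_{ue}) \) and \( w_{ve} := (z_{ve}, z_{ve}', y_{ve}) \) held by its two endpoints:

\[ w_e^{(k+1)} = \frac{1}{2} \left( w_{ue}^{(k+1)} + \lambda_{ue}^{(k)} + w_{ve}^{(k+1)} + \lambda_{ve}^{(k)} \right) \]

3. Dual update: for each edge \( e = (u, v) \) and each endpoint \( a \in \{u, v\} \),

\[ \lambda_{ae}^{(k+1)} = \lambda_{ae}^{(k)} + w_{ae}^{(k+1)} - w_e^{(k+1)} \]

where \( \lambda_{ae} \) is the scaled dual variable for the consensus constraint \( w_{ae} = w_e \)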
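The edge-wise consensus step can be sketched as below. This is a hypothetical illustration under stated assumptions: each vertex's local copy of an edge's variables \( (z_e, z_e', y_e) \) is stacked into one NumPy vector, scaled dual variables are used, and the `(vertex, edge)` keying and function names are made up for the example. Because every edge has exactly two endpoints, "the mean" is an average of two dual-shifted copies; `incident_edges` builds the sets \( \mathcal{E}_v \) that each vertex's local SOCP would range over.

```python
import numpy as np


def incident_edges(vertices, edges):
    """Return E_v, the list of edges incident to each vertex (incoming or outgoing)."""
    E = {v: [] for v in vertices}
    for e in edges:
        u, v = e
        E[u].append(e)
        E[v].append(e)
    return E


def edge_consensus_update(local, lam, edges):
    """Consensus and scaled dual updates on edges.

    local[(a, e)]: vertex a's local copy of w_e = (z_e, z_e', y_e), stacked as one vector
    lam[(a, e)]:   scaled dual variable for the constraint local[(a, e)] = w_e
    Each edge e = (u, v) has exactly two copies, one held by u and one held by v.
    """
    w = {}
    for e in edges:
        u, v = e
        # "Mean" of the two endpoint copies, each shifted by its scaled dual
        w[e] = 0.5 * ((local[(u, e)] + lam[(u, e)]) + (local[(v, e)] + lam[(v, e)]))
    # Scaled dual update: lam <- lam + (local copy - consensus value)
    new_lam = {(a, e): lam[(a, e)] + local[(a, e)] - w[e] for (a, e) in local}
    return w, new_lam
```

Each edge only reads the copies held by its two endpoints, so this step uses only local information and parallelizes over edges.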

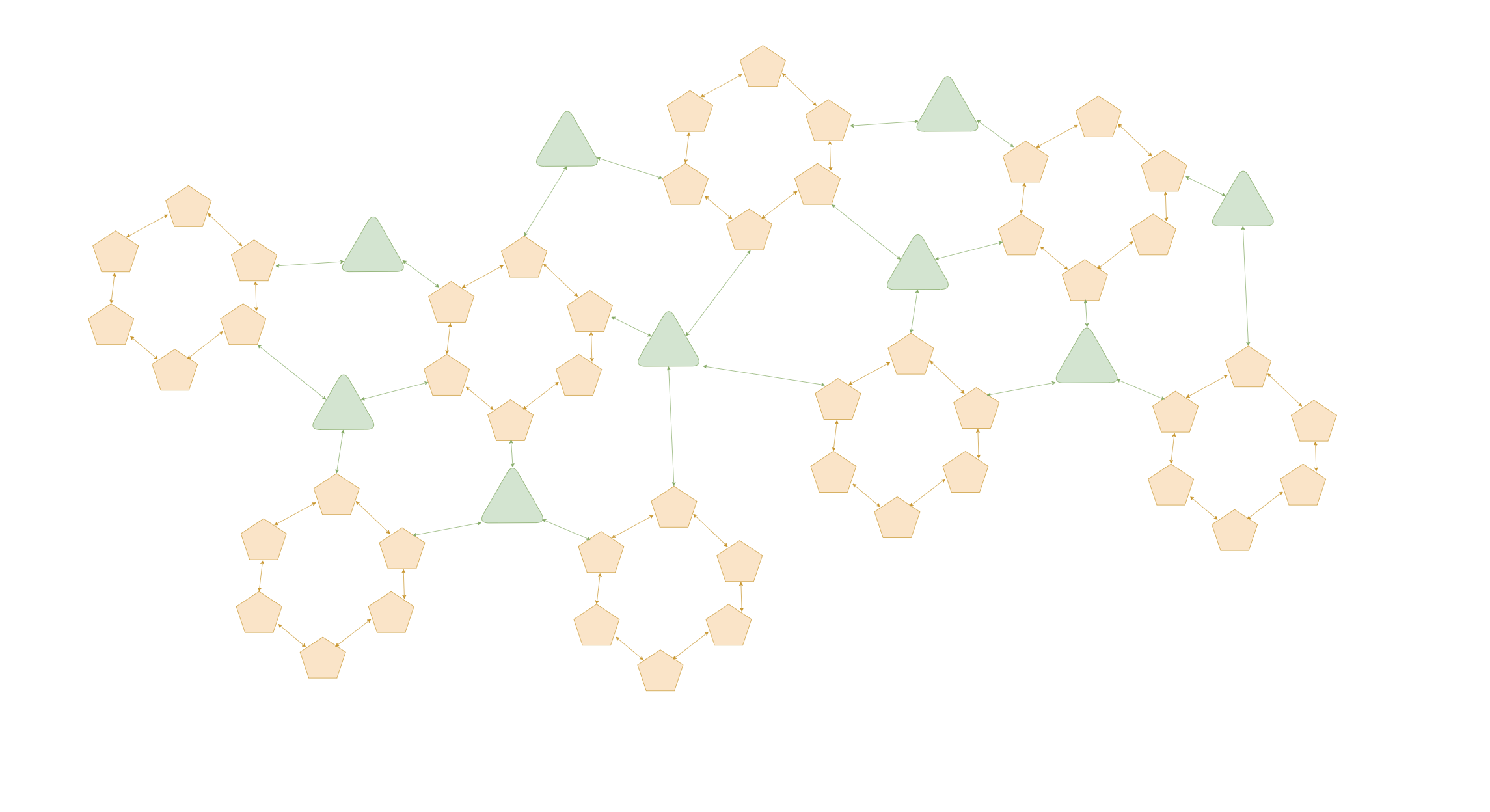

First result

- Not very fast, but converges

- Each iteration only relies on local information (neighboring vertices and edges)

Green = GCS solution

Red = ADMM solution

Conclusion

- Very preliminary work, but seems interesting

- Maybe this can be a way to remove "irrelevant" parts of the problem?

- For instance: Only solve subproblems where incoming flow is non-zero

- Need to clearly define which problems would benefit from being solved this way