Equivariant normalizing flows and their application to cosmology

Carolina Cuesta-Lazaro

April 2022 - IAIFI JC

Simulated Data

Data

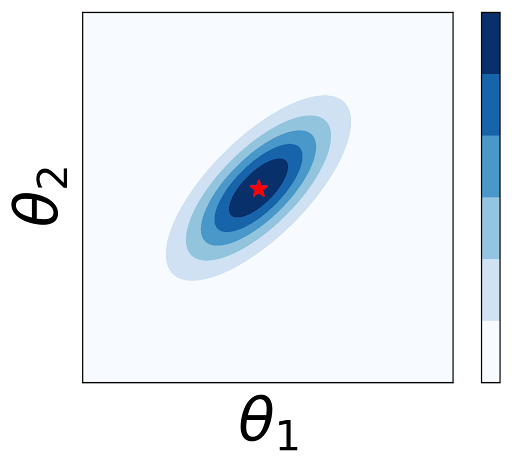

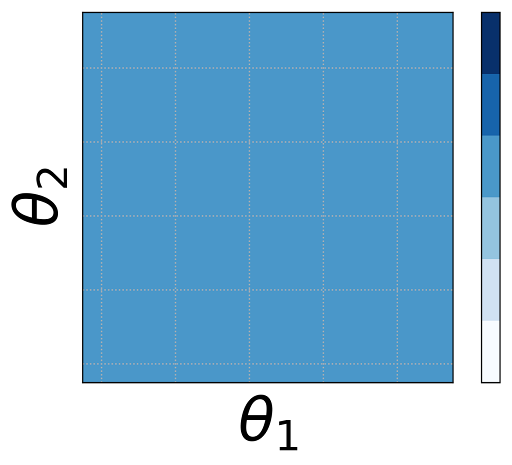

Prior

Posterior

Forwards

Inverse

Cosmological parameters

EARLY UNIVERSE

LATE UNIVERSE

Normalizing flows: Generative models and density estimators

VAE, GAN, ...

Gaussianization

Data space

Latent space

Maximize the data likelihood

NeuralNet

f must be invertible

J efficient to compute

1-D

n-D
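The requirements above follow from the change-of-variables formula, log p(x) = log p_z(f(x)) + log|det J_f(x)|, where in 1-D the Jacobian term reduces to log|df/dx|. The sketch below is only an illustration under simple assumptions: a 1-D affine map stands in for the neural network, the base density is a standard normal, and the names `affine_flow` and `flow_log_prob` are hypothetical.

```python
import numpy as np

def standard_normal_logpdf(z):
    """Log-density of the latent (base) distribution N(0, 1)."""
    return -0.5 * (z ** 2 + np.log(2.0 * np.pi))

def affine_flow(x, shift, log_scale):
    """Invertible map f(x) = (x - shift) / exp(log_scale) and its log|df/dx|."""
    z = (x - shift) * np.exp(-log_scale)
    log_abs_det = np.full_like(x, -log_scale)
    return z, log_abs_det

def flow_log_prob(x, shift, log_scale):
    """Change of variables: log p(x) = log p_z(f(x)) + log|df/dx|."""
    z, log_abs_det = affine_flow(x, shift, log_scale)
    return standard_normal_logpdf(z) + log_abs_det

# Toy data; for an affine flow the maximum-likelihood parameters are
# the sample mean and the log of the sample standard deviation.
rng = np.random.default_rng(0)
data = rng.normal(loc=3.0, scale=2.0, size=5000)
shift_mle, log_scale_mle = data.mean(), np.log(data.std())

nll_fitted = -flow_log_prob(data, shift_mle, log_scale_mle).mean()
nll_identity = -flow_log_prob(data, 0.0, 0.0).mean()

# Sanity check: the flow defines a normalized density.
grid = np.linspace(-40.0, 40.0, 40001)
dx = grid[1] - grid[0]
total_mass = np.sum(np.exp(flow_log_prob(grid, shift_mle, log_scale_mle))) * dx
```

Maximizing the data likelihood means minimizing `nll_fitted`. In n-D the scalar derivative is replaced by the Jacobian determinant, which is why architectures with cheap determinants are preferred.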

Equivariance

Invariance

Equivariant

Invariant

Equivariant

Invariant
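A small numerical check can make the two definitions concrete: a function f is equivariant if f(Rx) = R f(x), and invariant if f(Rx) = f(x). The example below is an assumed illustration (2-D rotations, centre of mass, pairwise distances), not something taken from the slides.

```python
import numpy as np

def rotation_2d(angle):
    """2-D rotation matrix for the given angle in radians."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s], [s, c]])

def centre_of_mass(points):
    """Equivariant: rotating the points rotates the output."""
    return points.mean(axis=0)

def pairwise_distances(points):
    """Invariant: rotating the points leaves the output unchanged."""
    diff = points[:, None, :] - points[None, :, :]
    return np.linalg.norm(diff, axis=-1)

rng = np.random.default_rng(1)
points = rng.normal(size=(5, 2))
R = rotation_2d(0.7)
rotated = points @ R.T  # apply R to every point

equivariant_ok = np.allclose(centre_of_mass(rotated), R @ centre_of_mass(points))
invariant_ok = np.allclose(pairwise_distances(rotated), pairwise_distances(points))
```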

Challenge: Expressive + Invertible + Equivariant

1. Continuous-time normalizing flows

ODE solutions are invertible!

z = odeint(self.phi, x, torch.tensor([0.0, 1.0]))[-1]  # odeint from torchdiffeq; self.phi takes (t, x)

Caveat: solving the ODE numerically can introduce error in the estimate of p(x)

Image Credit: https://arxiv.org/abs/1810.01367
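To illustrate why ODE solutions are invertible, and where the numerical error comes from, here is a minimal sketch that replaces `torchdiffeq` with a hand-written fixed-step RK4 solver in NumPy. The vector field `phi`, its matrix `A`, and the step counts are all hypothetical choices. The density uses the instantaneous change of variables, log p(x) = log p_z(z(1)) + ∫ div(phi) dt over t in [0, 1].

```python
import numpy as np

A = np.array([[0.0, 1.0], [-1.0, -0.5]])  # hypothetical linear part of the dynamics

def phi(t, x):
    """Toy vector field standing in for the learned dynamics."""
    return A @ x + np.tanh(x)

def divergence(t, x):
    """Trace of the Jacobian of phi, available in closed form for this toy field."""
    return np.trace(A) + np.sum(1.0 - np.tanh(x) ** 2)

def augmented(t, state):
    """Evolve x together with the accumulated divergence integral."""
    x = state[:-1]
    return np.append(phi(t, x), divergence(t, x))

def rk4(func, state, t0, t1, n_steps):
    """Fixed-step fourth-order Runge-Kutta; a negative step integrates backwards."""
    h = (t1 - t0) / n_steps
    t = t0
    for _ in range(n_steps):
        k1 = func(t, state)
        k2 = func(t + h / 2, state + h / 2 * k1)
        k3 = func(t + h / 2, state + h / 2 * k2)
        k4 = func(t + h, state + h * k3)
        state = state + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return state

def forward(x, n_steps):
    """Data -> latent, plus the integral of the divergence along the path."""
    out = rk4(augmented, np.append(x, 0.0), 0.0, 1.0, n_steps)
    return out[:-1], out[-1]

def inverse(z, n_steps):
    """Latent -> data: the same ODE integrated from t=1 back to t=0."""
    return rk4(phi, z, 1.0, 0.0, n_steps)

def log_prob(x, n_steps):
    z, div_integral = forward(x, n_steps)
    log_pz = -0.5 * (z @ z + z.size * np.log(2.0 * np.pi))
    return log_pz + div_integral

x0 = np.array([1.0, -0.5])
err_fine = np.linalg.norm(inverse(forward(x0, 200)[0], 200) - x0)
err_coarse = np.linalg.norm(inverse(forward(x0, 2)[0], 2) - x0)
log_px = log_prob(x0, 200)
```

The round-trip error shrinks as the step size decreases, which is the numerical counterpart of the caveat above: the inverse is exact only up to solver tolerance.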

Equivariant? GNNs

1. Invertible but expressive

2. Equivariant to E(n)

E(n) equivariant normalizing flows
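One way to obtain E(n) equivariance is to build the particle update only from relative positions, weighted by functions of invariant distances, in the spirit of E(n) equivariant GNN layers. The sketch below is an assumption-laden illustration: the tiny random MLP (`W1`, `b1`, `W2`) and the function names are hypothetical, and a single update like this is not guaranteed to be invertible on its own. A vector field of this form could serve as the dynamics of a continuous-time flow.

```python
import numpy as np

rng = np.random.default_rng(2)
# Hypothetical weights of a tiny MLP acting on squared distances.
W1 = rng.normal(size=(1, 16))
b1 = rng.normal(size=16)
W2 = 0.1 * rng.normal(size=(16, 1))

def edge_weight(sq_dist):
    """Scalar weight per particle pair, computed from an invariant input."""
    hidden = np.tanh(sq_dist[..., None] @ W1 + b1)  # shape (n, n, 16)
    return (hidden @ W2)[..., 0]                    # shape (n, n)

def equivariant_update(x):
    """x_i -> x_i + mean_j (x_i - x_j) * w(|x_i - x_j|^2)."""
    diff = x[:, None, :] - x[None, :, :]            # shape (n, n, d)
    sq_dist = np.sum(diff ** 2, axis=-1)
    weights = edge_weight(sq_dist)
    # The i == j terms vanish because diff is zero on the diagonal.
    return x + (diff * weights[..., None]).sum(axis=1) / (len(x) - 1)

# Check equivariance under a random orthogonal transform plus a translation.
x = rng.normal(size=(6, 3))
Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
t = rng.normal(size=3)

transformed_then_updated = equivariant_update(x @ Q.T + t)
updated_then_transformed = equivariant_update(x) @ Q.T + t
equivariance_ok = np.allclose(transformed_then_updated, updated_then_transformed)
```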

Cosmological simulations -> Millions of particles!

Solution: Density on mesh + Convolutions in Fourier space
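A minimal sketch of the mesh-based alternative: multiplying the Fourier modes of a gridded density by a function of |k| is a convolution with an isotropic kernel, which is translation equivariant on a periodic mesh. The Gaussian `transfer` function here is a hypothetical stand-in for a learned one, and on a square grid rotation equivariance holds exactly only for multiples of 90 degrees.

```python
import numpy as np

def isotropic_fourier_filter(field, transfer):
    """Convolve a periodic 2-D field with an isotropic kernel via the FFT."""
    n = field.shape[0]
    freqs = 2.0 * np.pi * np.fft.fftfreq(n)
    kx, ky = np.meshgrid(freqs, freqs, indexing="ij")
    k = np.sqrt(kx ** 2 + ky ** 2)
    return np.fft.ifft2(np.fft.fft2(field) * transfer(k)).real

def transfer(k):
    """Hypothetical transfer function depending only on |k| (Gaussian smoothing)."""
    return np.exp(-0.5 * (2.0 * k) ** 2)

rng = np.random.default_rng(3)
density = rng.normal(size=(32, 32))
shift = (5, -3)

filtered = isotropic_fourier_filter(density, transfer)
filtered_shifted = isotropic_fourier_filter(np.roll(density, shift, axis=(0, 1)), transfer)
filtered_rotated = isotropic_fourier_filter(np.rot90(density), transfer)

translation_ok = np.allclose(filtered_shifted, np.roll(filtered, shift, axis=(0, 1)))
rotation_ok = np.allclose(filtered_rotated, np.rot90(filtered))
```

The cost scales with the number of mesh cells (times a logarithm) rather than with the number of particle pairs, which is what makes this approach attractive for simulations with millions of particles.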

1-D functions learned from data

Cubic splines (8 spline points)

Monotonic rational quadratic splines (8 spline points)
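For reference, a monotonic rational quadratic spline with 8 knots can be evaluated as below, using the standard parameterization from neural spline flows. This is a sketch under assumptions: the raw parameter values, the interval [-3, 3], and the helper names are hypothetical, and in practice the raw parameters would be learned from data.

```python
import numpy as np

def build_knots(raw_widths, raw_heights, raw_derivs, low=-3.0, high=3.0):
    """Map unconstrained parameters to increasing knots and positive slopes."""
    widths = np.exp(raw_widths) / np.sum(np.exp(raw_widths))
    heights = np.exp(raw_heights) / np.sum(np.exp(raw_heights))
    knots_x = low + (high - low) * np.concatenate([[0.0], np.cumsum(widths)])
    knots_y = low + (high - low) * np.concatenate([[0.0], np.cumsum(heights)])
    derivs = np.log1p(np.exp(raw_derivs))  # softplus keeps slopes positive
    return knots_x, knots_y, derivs

def rq_spline(x, knots_x, knots_y, derivs):
    """Evaluate the spline and its derivative at x."""
    x = np.asarray(x, dtype=float)
    k = np.clip(np.searchsorted(knots_x, x, side="right") - 1, 0, len(knots_x) - 2)
    x0, x1 = knots_x[k], knots_x[k + 1]
    y0, y1 = knots_y[k], knots_y[k + 1]
    d0, d1 = derivs[k], derivs[k + 1]
    s = (y1 - y0) / (x1 - x0)        # average slope of the bin
    xi = (x - x0) / (x1 - x0)        # position within the bin, in [0, 1]
    denom = s + (d1 + d0 - 2.0 * s) * xi * (1.0 - xi)
    y = y0 + (y1 - y0) * (s * xi ** 2 + d0 * xi * (1.0 - xi)) / denom
    dydx = s ** 2 * (d1 * xi ** 2 + 2.0 * s * xi * (1.0 - xi) + d0 * (1.0 - xi) ** 2) / denom ** 2
    return y, dydx

# 8 spline points give 7 bins; the raw values here are arbitrary placeholders.
raw_widths = np.zeros(7)
raw_heights = np.array([0.5, -0.3, 0.2, 0.0, -0.4, 0.3, 0.1])
raw_derivs = np.linspace(-1.0, 1.0, 8)
knots_x, knots_y, derivs = build_knots(raw_widths, raw_heights, raw_derivs)

grid = np.linspace(knots_x[0], knots_x[-1], 20001)
y, dydx = rq_spline(grid, knots_x, knots_y, derivs)
y_at_knots, _ = rq_spline(knots_x, knots_x, knots_y, derivs)
```

Because the derivative is positive everywhere, the map is invertible and log|dy/dx| is available in closed form, which is what a flow needs from a pointwise transformation.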

Loss Function

Generative: Maximize likelihood

Discriminative: target the posterior
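The two objectives can be contrasted on a toy problem. The generative loss is -log p(x|θ) at the true parameters, while the discriminative loss is -log p(θ|x), which additionally subtracts the evidence obtained by integrating the likelihood over θ. In this sketch a Gaussian with variance θ stands in for the conditional flow, the prior is flat on a grid, and all names are hypothetical.

```python
import numpy as np

def log_likelihood(x, theta):
    """Hypothetical conditional density model: x_i ~ N(0, theta), independent."""
    return -0.5 * (np.sum(x ** 2) / theta + x.size * np.log(2.0 * np.pi * theta))

def generative_loss(x, theta_true):
    """Negative log-likelihood of the data at the true parameters."""
    return -log_likelihood(x, theta_true)

def log_posterior_on_grid(x, theta_grid):
    """log p(theta | x) for a flat prior, normalized over the grid."""
    log_like = np.array([log_likelihood(x, theta) for theta in theta_grid])
    dtheta = theta_grid[1] - theta_grid[0]
    peak = log_like.max()  # subtract the peak for numerical stability
    log_evidence = peak + np.log(np.sum(np.exp(log_like - peak)) * dtheta)
    return log_like - log_evidence

def discriminative_loss(x, theta_true, theta_grid):
    """Negative log-posterior evaluated at the true parameters."""
    log_post = log_posterior_on_grid(x, theta_grid)
    return -np.interp(theta_true, theta_grid, log_post)

rng = np.random.default_rng(4)
theta_true = 2.0
x = rng.normal(scale=np.sqrt(theta_true), size=2000)
theta_grid = np.linspace(0.5, 4.0, 701)

loss_gen = generative_loss(x, theta_true)
loss_disc = discriminative_loss(x, theta_true, theta_grid)
log_post = log_posterior_on_grid(x, theta_grid)
theta_map = theta_grid[np.argmax(log_post)]
```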

Gaussian Random Field:

The power spectrum is an optimal summary statistic

Analytical likelihood

Flow likelihood
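The analytical likelihood of a Gaussian random field can be written down directly in Fourier space, which shows why the power spectrum carries all the information: the data enter only through |δ_k|². The sketch below assumes a 2-D periodic field and a hypothetical smooth power-law spectrum with a free amplitude. It is not the setup used for the figure.

```python
import numpy as np

def k_grid(n):
    """|k| on an n x n periodic mesh."""
    freqs = 2.0 * np.pi * np.fft.fftfreq(n)
    kx, ky = np.meshgrid(freqs, freqs, indexing="ij")
    return np.sqrt(kx ** 2 + ky ** 2)

def power_spectrum(k, amplitude, k0=0.5, slope=2.0):
    """Hypothetical spectrum, strictly positive for all k including k = 0."""
    return amplitude * (1.0 + (k / k0) ** 2) ** (-slope / 2.0)

def sample_grf(n, amplitude, rng):
    """Colour white noise by sqrt(P(k)) in Fourier space."""
    white = rng.normal(size=(n, n))
    modes = np.fft.fft2(white) * np.sqrt(power_spectrum(k_grid(n), amplitude))
    return np.fft.ifft2(modes).real

def grf_log_likelihood(field, amplitude):
    """Exact Gaussian log-likelihood; depends on the field only via |delta_k|^2."""
    n_pix = field.size
    pk = power_spectrum(k_grid(field.shape[0]), amplitude)
    mode_power = np.abs(np.fft.fft2(field)) ** 2 / n_pix
    return -0.5 * np.sum(mode_power / pk + np.log(pk)) - 0.5 * n_pix * np.log(2.0 * np.pi)

rng = np.random.default_rng(5)
true_amplitude = 2.0
field = sample_grf(64, true_amplitude, rng)

amplitudes = np.linspace(1.0, 3.0, 41)
log_likes = np.array([grf_log_likelihood(field, a) for a in amplitudes])
best_amplitude = amplitudes[np.argmax(log_likes)]
```

A flow trained on such fields can be checked against this closed form, since a well-trained flow likelihood should produce a similar curve over the amplitude.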

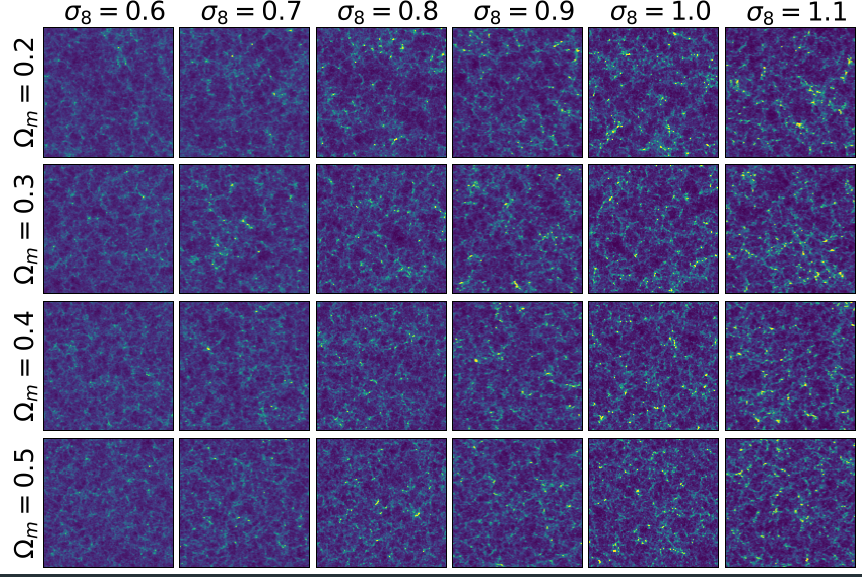

Non-Gaussian N-body simulations

1. Inference

2. Sampling

- Can we quantify the full information content? Can normalizing flows extract all the information there is about cosmology?

- Can the latent space be the initial conditions for the N-body sim?

- Are current approaches to embedding symmetries in models too constraining?

- How sensitive are the results to model misspecification?

- Does dimensionality reduction help with interpretability?