Generative Solutions for Cosmic Problems

Flatiron Institute

Institute for Advanced Study

Carol(ina) Cuesta-Lazaro

["Genie 2: A large-scale foundation model" Parker-Holder et al (2024)]

["Generative AI for designing and validating easily synthesizable and structurally novel antibiotics" Swanson et al]

Probabilistic ML has made high-dimensional inference tractable

1024x1024xTime

["Genie 3: A new frontier for world models" Parker-Holder et al (2025)]

Carolina Cuesta-Lazaro Flatiron/IAS - Astro Seminar

1-Dimensional

Machine Learning

Secondary anisotropies

Galaxy formation

Intrinsic alignments

DESI / SPHEREx / HETDEX

Euclid / LSST

SO / CMB-S4

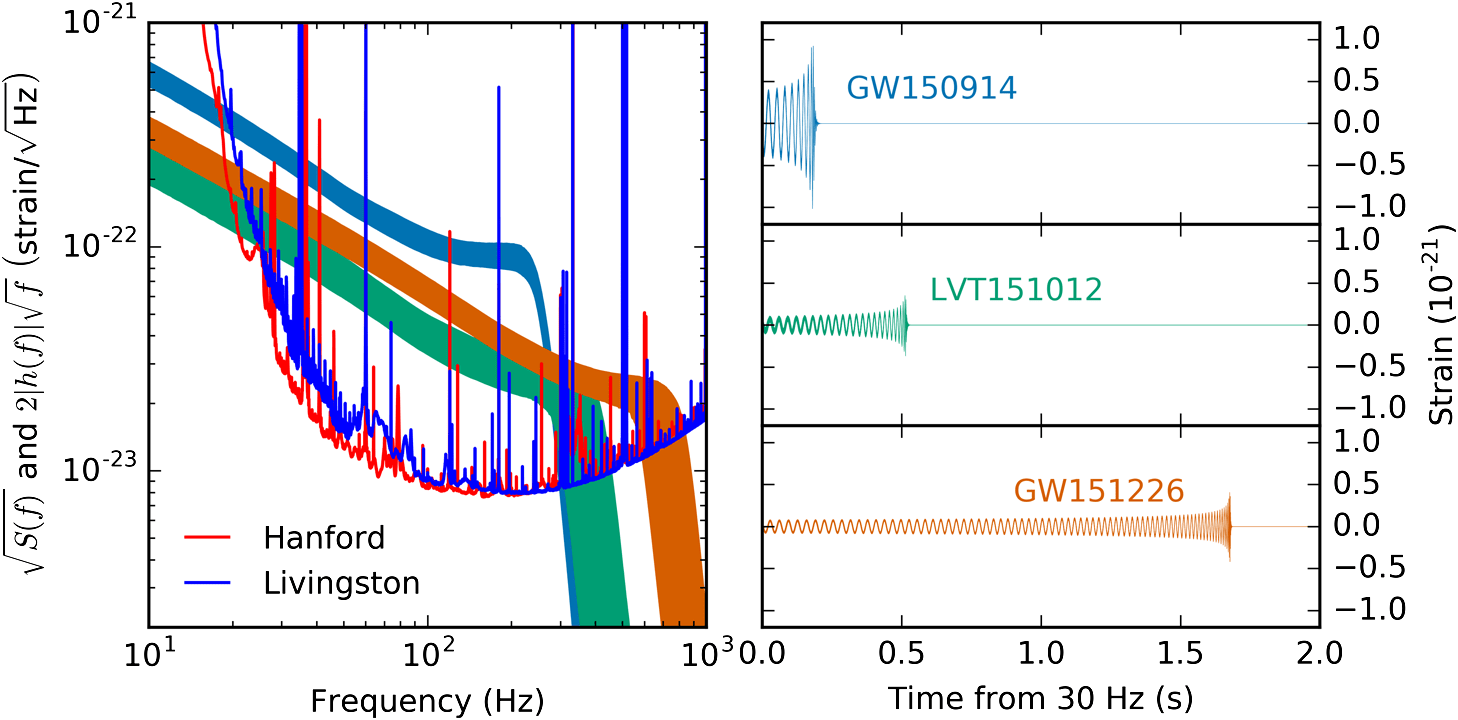

LIGO / Einstein Telescope

The era of Big Data Cosmology

xAstrophysics

HERA / CHIME

SAGA / MaNGA

Galaxy formation

Emitters Census

Reionization

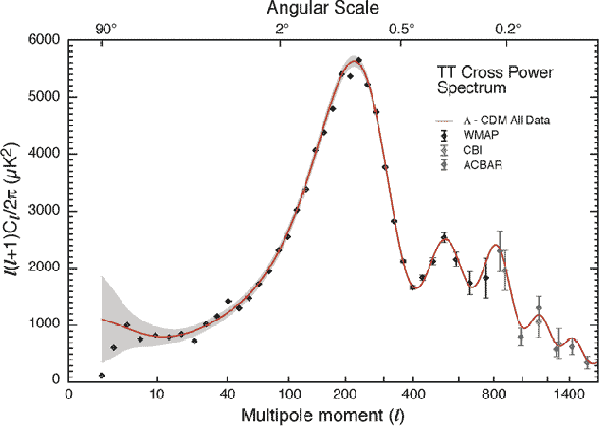

Cosmic Microwave Background

Galaxies / Dwarfs

21 cm

Galaxy Surveys

Gravitational Lensing

Gravitational Waves

AGN Feedback/Supernovae


Field-Level Inference and Emulators

Robust Simulation-based inference

Generating Fields

Generating Representations

Disentangling systematics from physics latent spaces


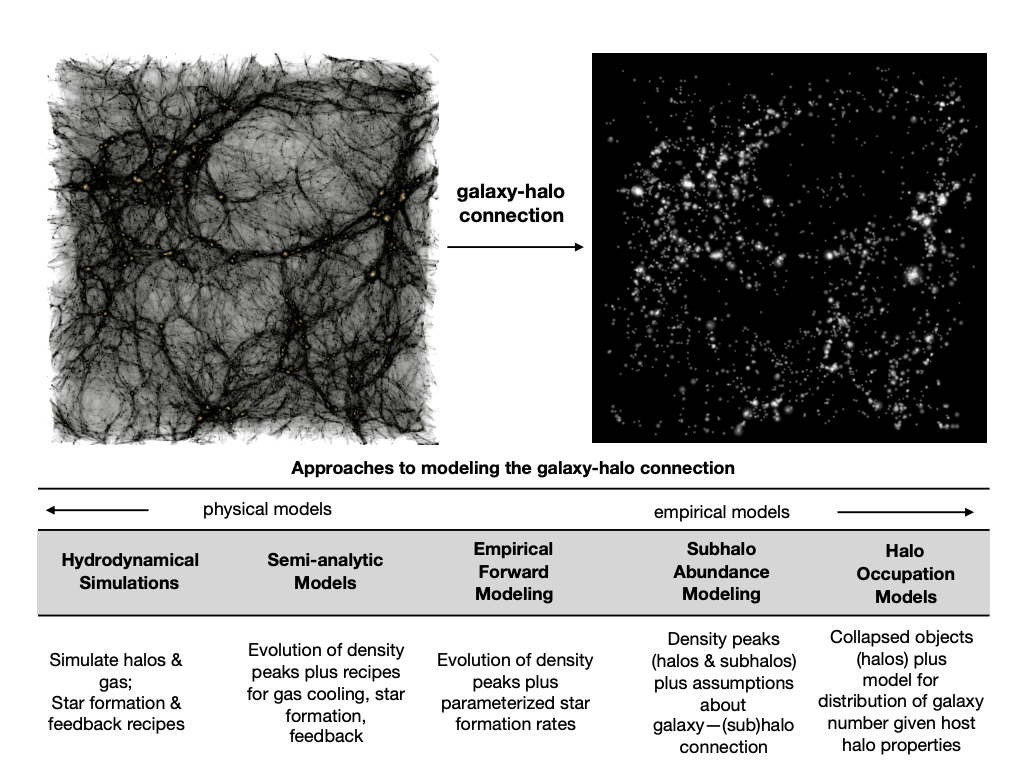

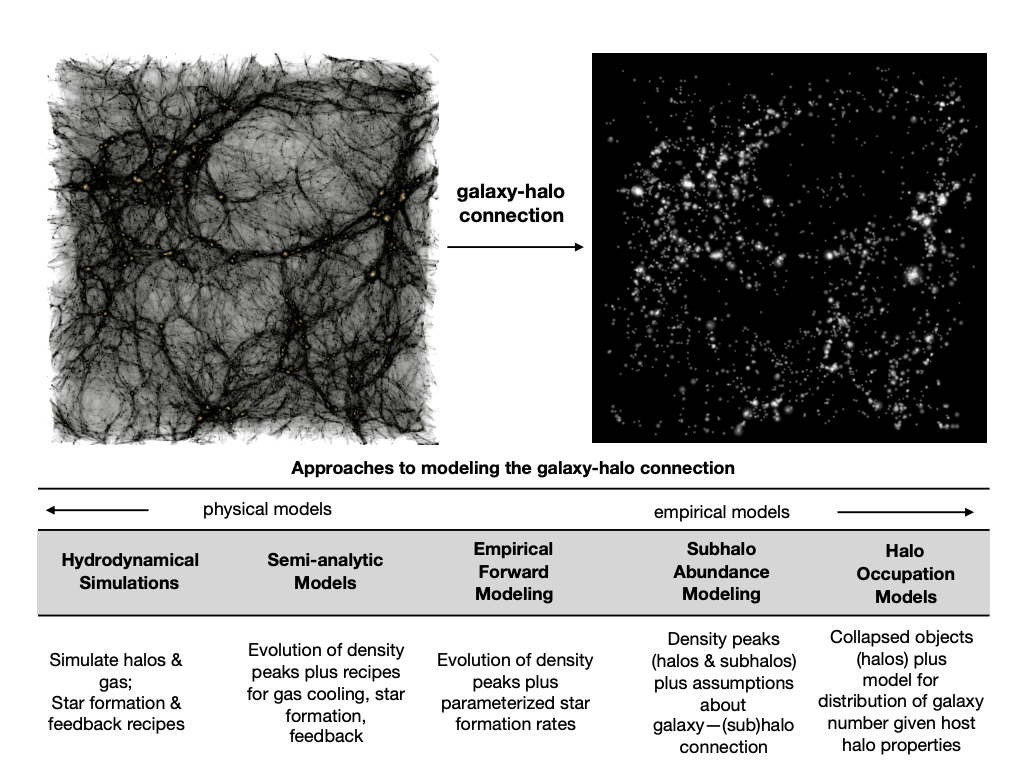

What is field-level inference?

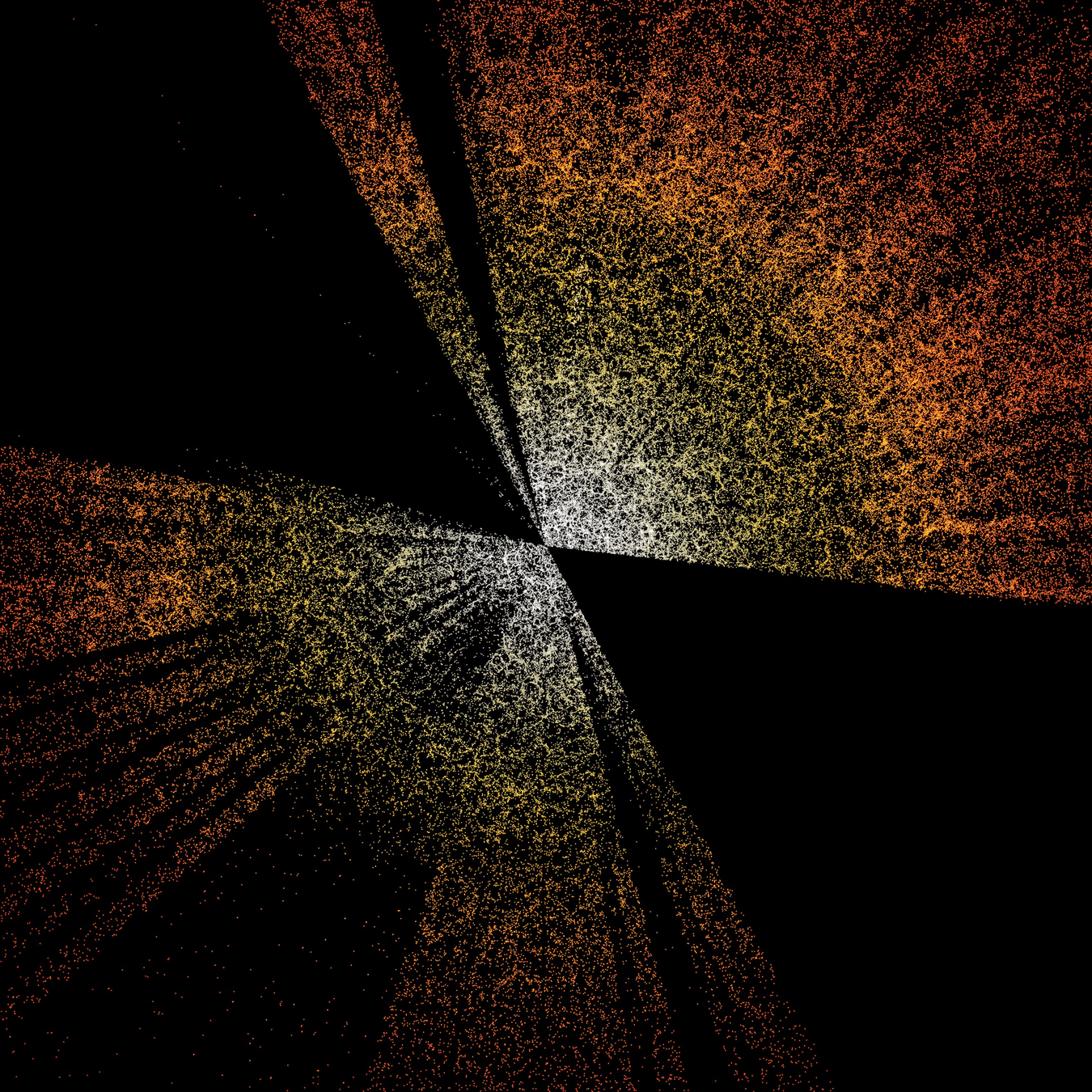

A digital twin of our Universe

Observed Galaxy Distribution

Simulated Galaxy Distribution

Field Level Inference

Forward Model

(= no Cosmic Variance)


Why field-level inference?

Optimal constraints

Counts-in-cell

Do we really need to infer 10^9 parameters to constrain ~10?
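Counts-in-cell is one of the compressed summary statistics that field-level inference is compared against: the whole field is reduced to the distribution of the number of objects per cell. A minimal 1D sketch, assuming a periodic box, equal-size cells and an unclustered (uniform random) toy catalogue; `counts_in_cells` is a hypothetical helper, not code from the talk.

```python
import random
import statistics


def counts_in_cells(positions, box_size, n_cells):
    """Number of points in each of n_cells equal cells of a periodic 1D box."""
    counts = [0] * n_cells
    for x in positions:
        # Wrap into the box and map to a cell index; the final % guards against
        # floating-point round-up to n_cells at the upper edge.
        idx = int((x % box_size) / box_size * n_cells) % n_cells
        counts[idx] += 1
    return counts


rng = random.Random(0)
box = 1000.0  # hypothetical box size (arbitrary units)
points = [rng.uniform(0.0, box) for _ in range(200_000)]  # unclustered toy "galaxies"
counts = counts_in_cells(points, box, n_cells=1000)

# 200,000 positions compressed to two numbers.
mean = statistics.fmean(counts)
variance = statistics.pvariance(counts)
```

For Poisson points the count variance is approximately equal to the mean, so clustering shows up as excess variance. The reduction from 200,000 positions to a handful of moments is the kind of compression that field-level inference avoids.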


Compression

Marginal Likelihood

Explicit Likelihood

Implicit Likelihood

Initial Conditions


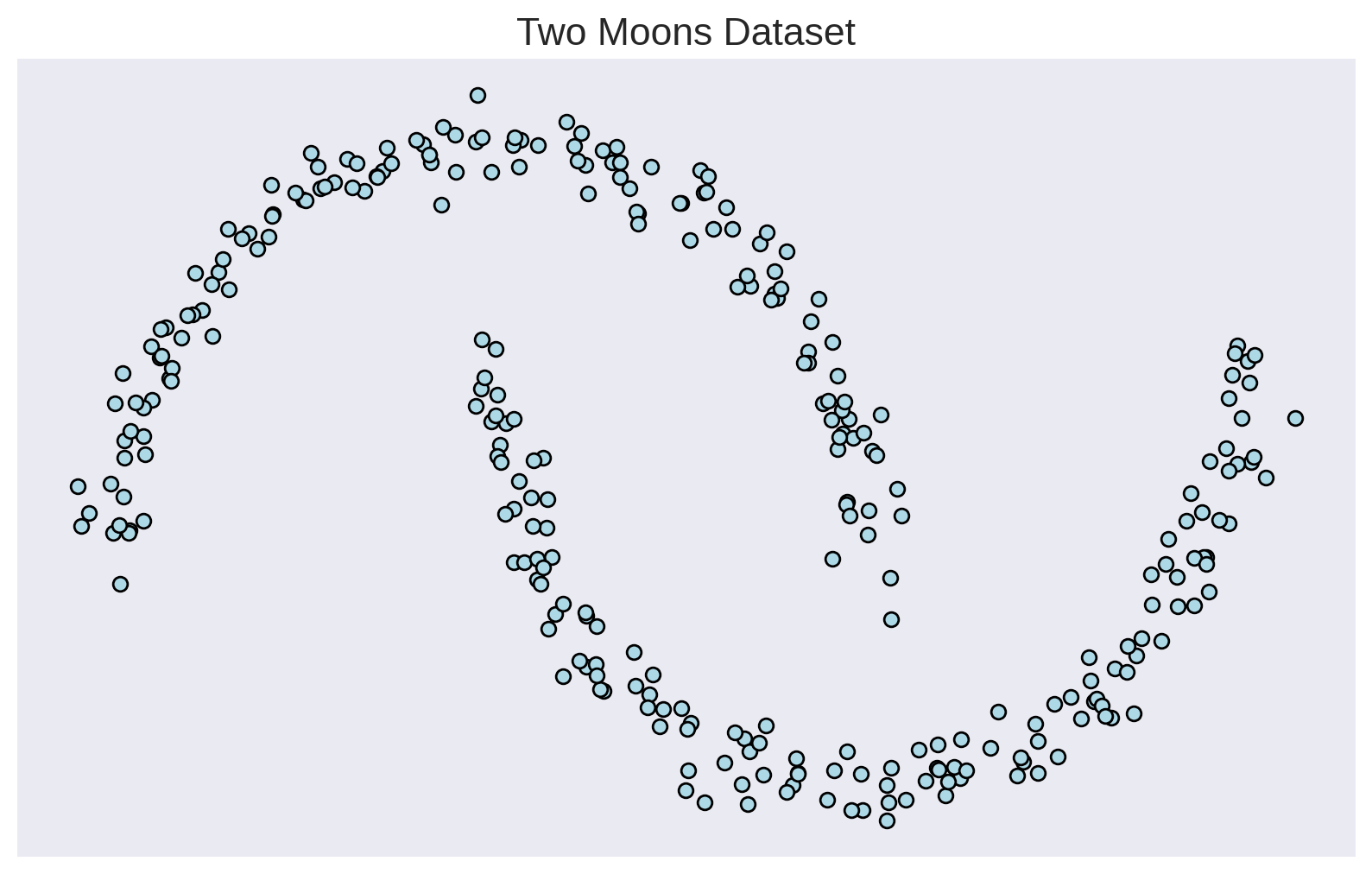

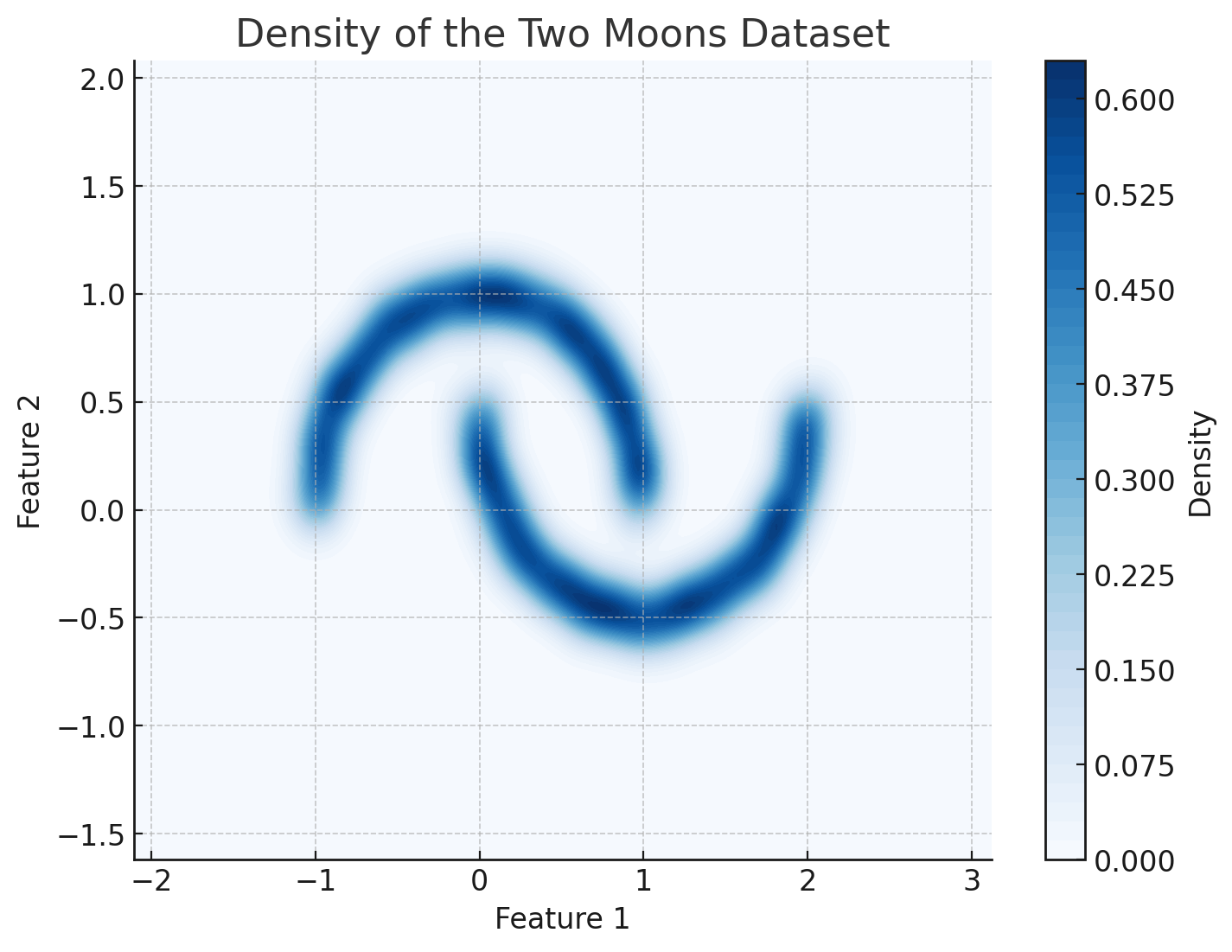

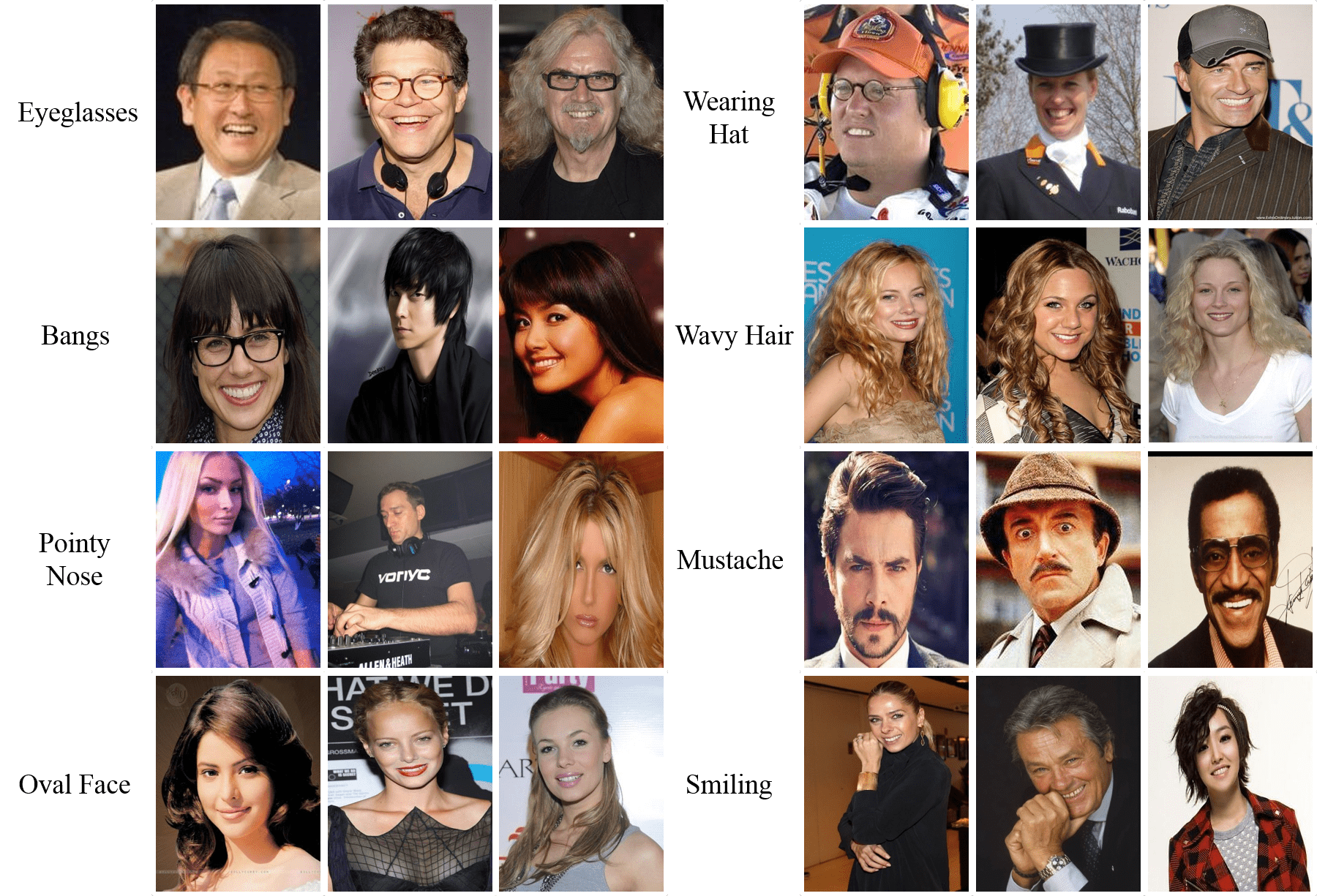

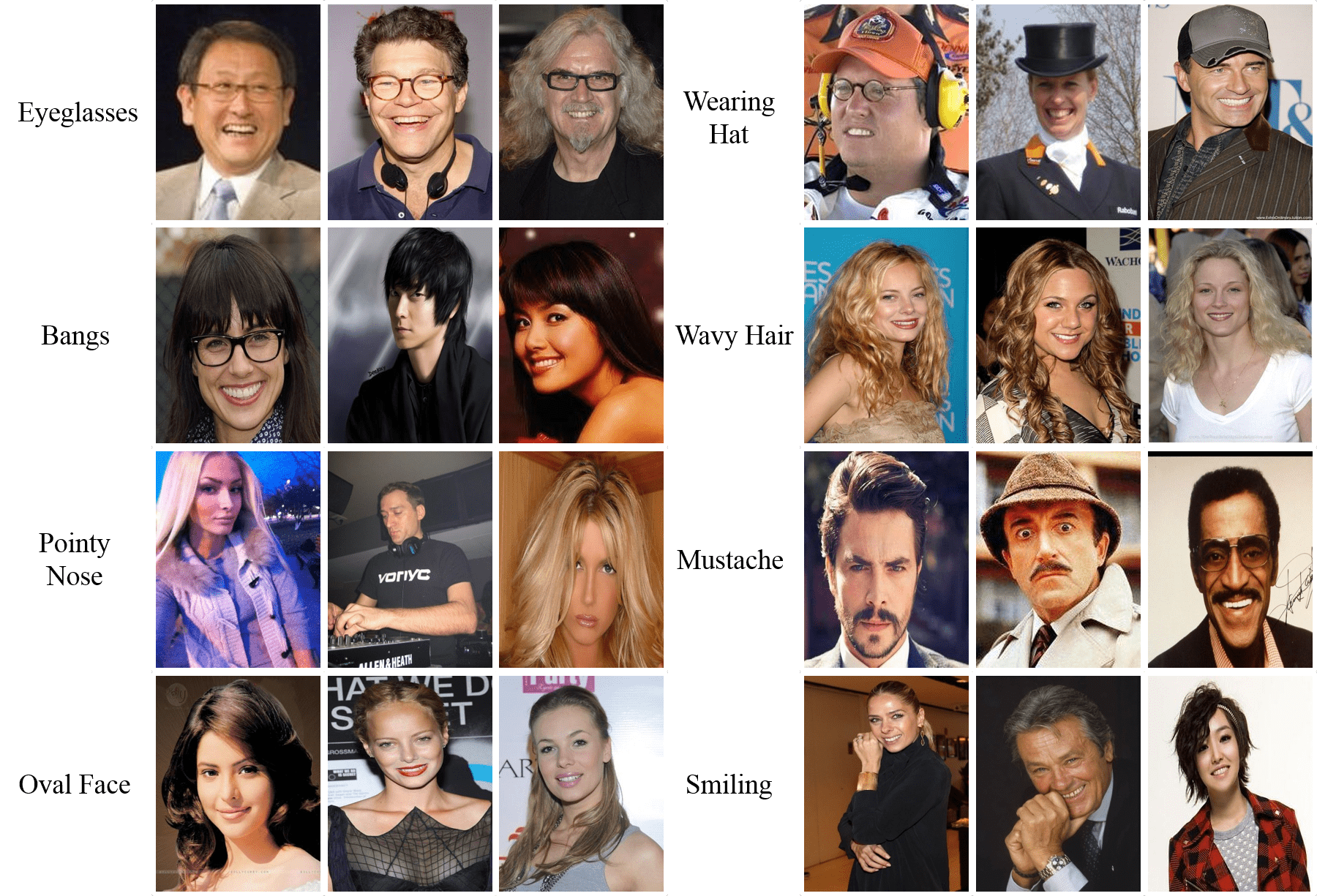

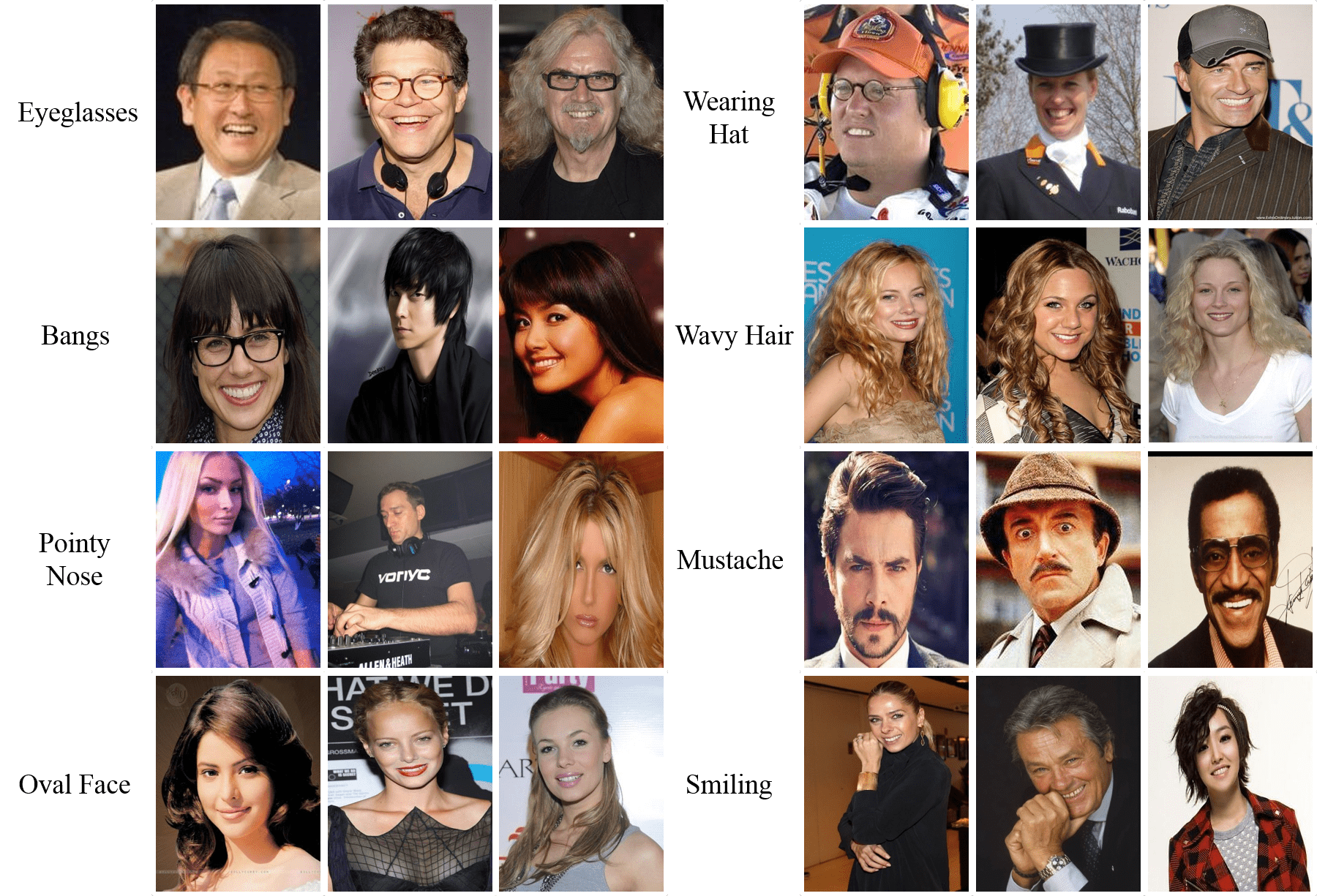

Generative Models 101

Maximize the likelihood of the training samples

Parametric Model

Training Samples


Trained Model

Evaluate probabilities

Low Probability

High Probability

Generate Novel Samples
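The recipe on this slide (fit by maximum likelihood, evaluate probabilities, generate novel samples) can be seen with the simplest parametric model, a 1D Gaussian. This is a toy sketch: the neural generative models in the talk replace the two Gaussian parameters with network weights, and the function names here are hypothetical.

```python
import math
import random


def fit_gaussian(samples):
    """Maximum-likelihood estimate of a 1D Gaussian's mean and standard deviation."""
    n = len(samples)
    mu = sum(samples) / n
    # The MLE of the variance divides by n (not n - 1).
    var = sum((x - mu) ** 2 for x in samples) / n
    return mu, math.sqrt(var)


def log_prob(x, mu, sigma):
    """Evaluate the trained model's log-density at x."""
    return -0.5 * math.log(2.0 * math.pi * sigma**2) - (x - mu) ** 2 / (2.0 * sigma**2)


def sample(mu, sigma, n, rng):
    """Generate novel samples from the trained model."""
    return [rng.gauss(mu, sigma) for _ in range(n)]


rng = random.Random(0)
training = [rng.gauss(2.0, 0.5) for _ in range(10_000)]  # toy training samples
mu, sigma = fit_gaussian(training)

high = log_prob(mu, mu, sigma)             # near the bulk of the data: high probability
low = log_prob(mu + 3 * sigma, mu, sigma)  # far in the tail: low probability
new_samples = sample(mu, sigma, 5, rng)
```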

Simulator

Generative Model

Fast emulators

Inference

Generative Model

Simulator

Generative Models: Simulate and Analyze


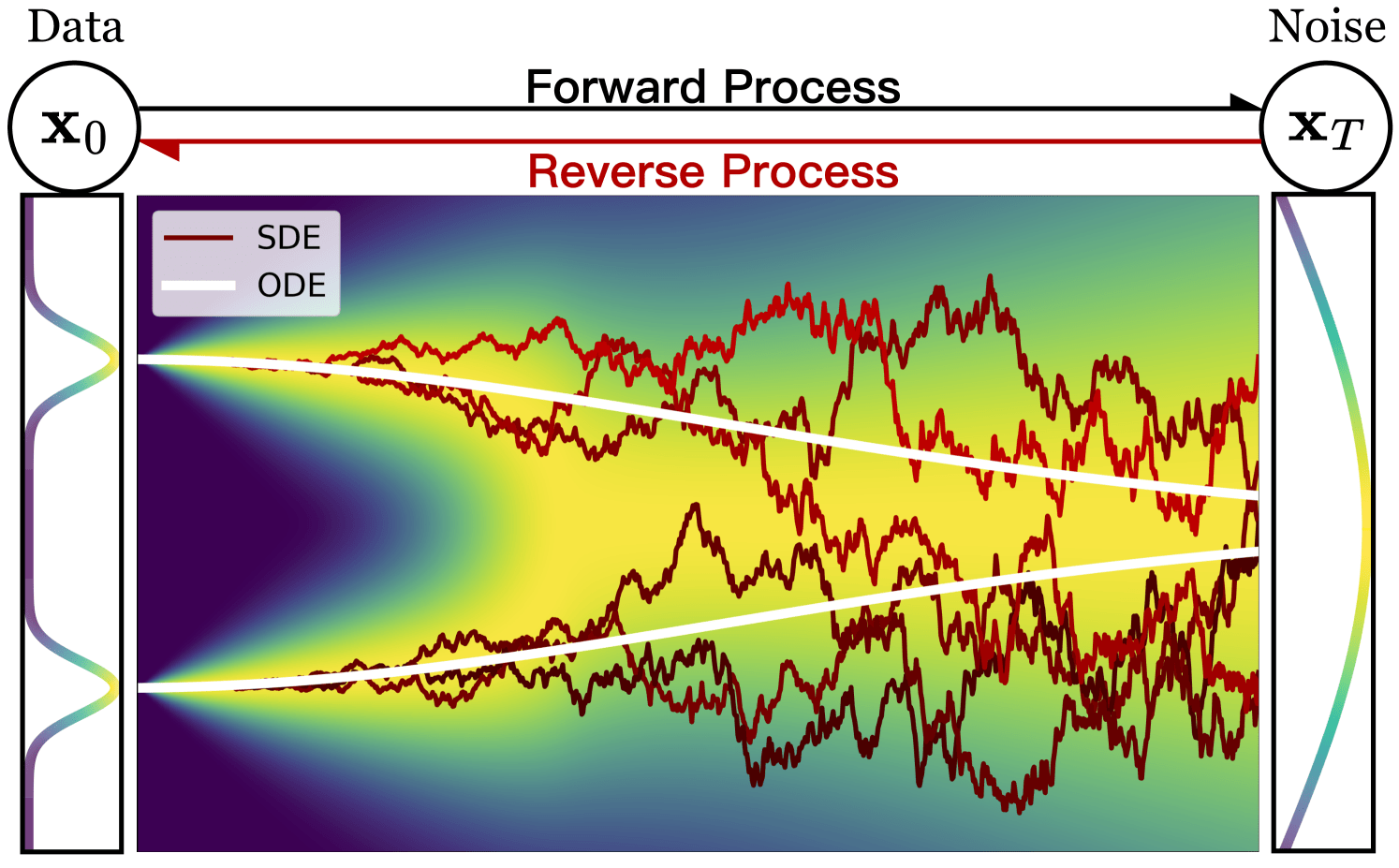

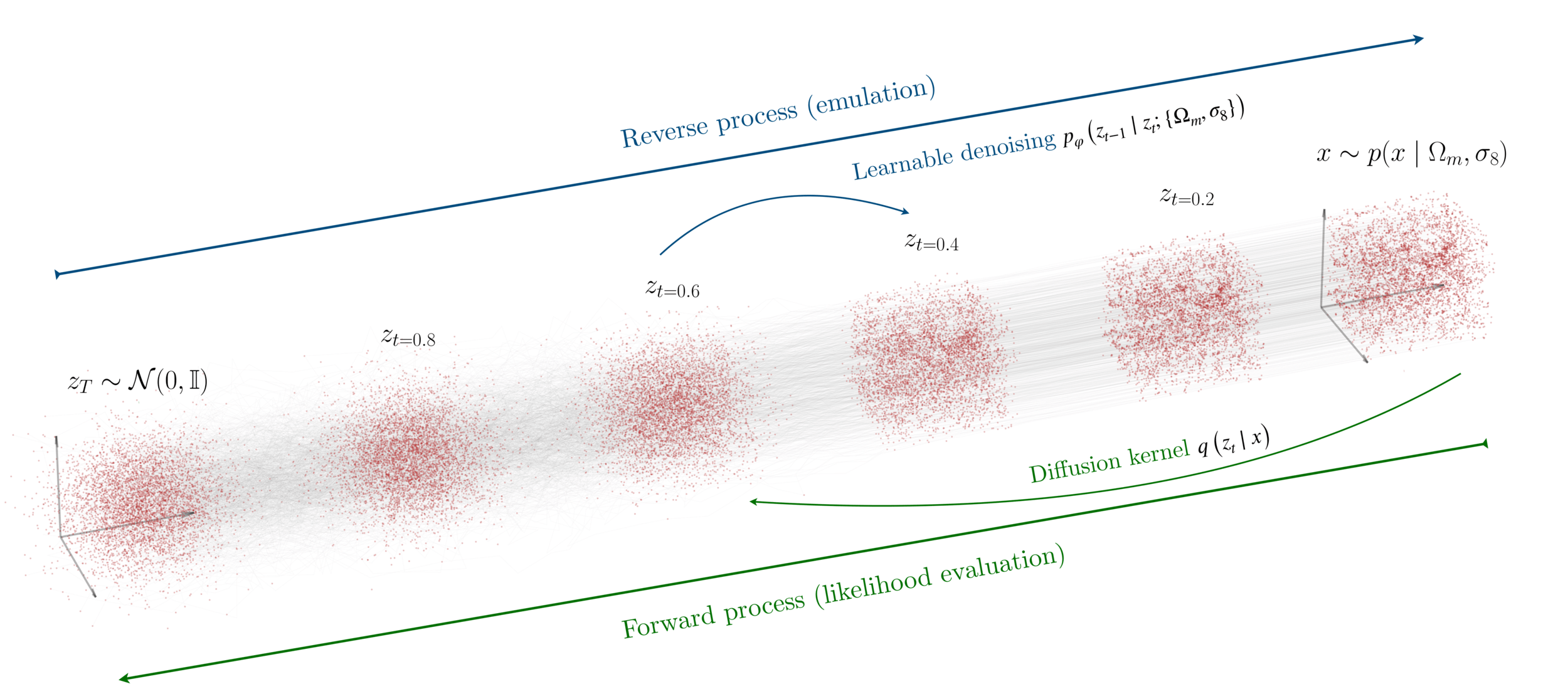

Bridging two distributions

Base

Data

"Creating noise from data is easy; creating data from noise is generative modeling."

Yang Song
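The "easy" direction of the quote fits in a few lines: gradually corrupting data into Gaussian noise. A minimal sketch, assuming a variance-preserving schedule alpha(t) = cos(pi t / 2), sigma(t) = sin(pi t / 2); the diffusion and interpolant models in the talk may use other schedules, and the neural network's job is to learn the reverse (noise to data) direction, which is not shown here.

```python
import math
import random
import statistics


def noise_data(x0, t, rng):
    """Corrupt data x0 to noise level t in [0, 1].

    Assumed schedule: alpha(t) = cos(pi t / 2), sigma(t) = sin(pi t / 2), so
    alpha^2 + sigma^2 = 1, t = 0 returns the data and t = 1 gives (numerically)
    pure standard-normal noise.
    """
    alpha = math.cos(0.5 * math.pi * t)
    sigma = math.sin(0.5 * math.pi * t)
    return [alpha * x + sigma * rng.gauss(0.0, 1.0) for x in x0]


rng = random.Random(1)
data = [rng.uniform(5.0, 6.0) for _ in range(20_000)]  # clearly non-Gaussian toy data
half_noised = noise_data(data, 0.5, rng)
pure_noise = noise_data(data, 1.0, rng)

noise_mean = statistics.fmean(pure_noise)   # close to 0: the data has been forgotten
noise_std = statistics.pstdev(pure_noise)   # close to 1
```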

Neural Network

6 seconds / sim vs 40 million CPU hours

Fast Emulation

Density Fields


Marginal Likelihoods:

arXiv:2405.05255

Point Clouds

arXiv:2311.17141


Marginal Posteriors:

1) Sampling the Neural Likelihood (NLE) with HMC

2) Directly learning an optimal compression: Neural Posterior Estimation (NPE)
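Option 1 above samples parameters with Hamiltonian Monte Carlo (HMC) driven by a likelihood. A minimal HMC sketch with a leapfrog integrator and a Metropolis correction, assuming an analytic Gaussian log-likelihood with a flat prior as a stand-in for the learned (NLE or diffusion) likelihood; in practice the gradient would come from automatic differentiation through the network. All function names are hypothetical.

```python
import math
import random
import statistics


def log_post(theta, data, sigma=1.0):
    """Stand-in log-posterior (up to a constant): Gaussian likelihood for the
    mean of the data with known noise sigma and a flat prior.
    In the talk's setting this term would come from a learned likelihood."""
    return -sum((d - theta) ** 2 for d in data) / (2.0 * sigma**2)


def grad_log_post(theta, data, sigma=1.0):
    """Analytic gradient of log_post with respect to theta."""
    return sum(d - theta for d in data) / sigma**2


def hmc(data, theta0, n_samples, step, n_leapfrog, rng):
    """Hamiltonian Monte Carlo with unit mass and a leapfrog integrator."""
    samples, theta = [], theta0
    for _ in range(n_samples):
        p = rng.gauss(0.0, 1.0)  # fresh momentum each iteration
        th_new = theta
        # Leapfrog: half momentum step, alternating full steps, final half step.
        p_new = p + 0.5 * step * grad_log_post(th_new, data)
        for i in range(n_leapfrog):
            th_new += step * p_new
            if i < n_leapfrog - 1:
                p_new += step * grad_log_post(th_new, data)
        p_new += 0.5 * step * grad_log_post(th_new, data)
        # Metropolis correction using the Hamiltonian H = -log_post + p^2 / 2.
        h_old = -log_post(theta, data) + 0.5 * p**2
        h_new = -log_post(th_new, data) + 0.5 * p_new**2
        if rng.random() < math.exp(min(0.0, h_old - h_new)):
            theta = th_new
        samples.append(theta)
    return samples


rng = random.Random(2)
data = [rng.gauss(3.0, 1.0) for _ in range(100)]
chain = hmc(data, theta0=0.0, n_samples=2000, step=0.05, n_leapfrog=10, rng=rng)
burned = chain[500:]  # discard burn-in
posterior_mean = statistics.fmean(burned)
posterior_std = statistics.pstdev(burned)
```

With 100 data points and unit noise, the exact posterior is Gaussian with mean equal to the sample mean and standard deviation 0.1, which the chain should reproduce.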

Learned Likelihood

CNN

Diffusion

Increasing Noise

["Diffusion-HMC: Parameter Inference with Diffusion Model driven Hamiltonian Monte Carlo" Mudur, Cuesta-Lazaro and Finkbeiner, NeurIPS 2023 Machine Learning and the Physical Sciences workshop, arXiv:2405.05255]

Nayantara Mudur

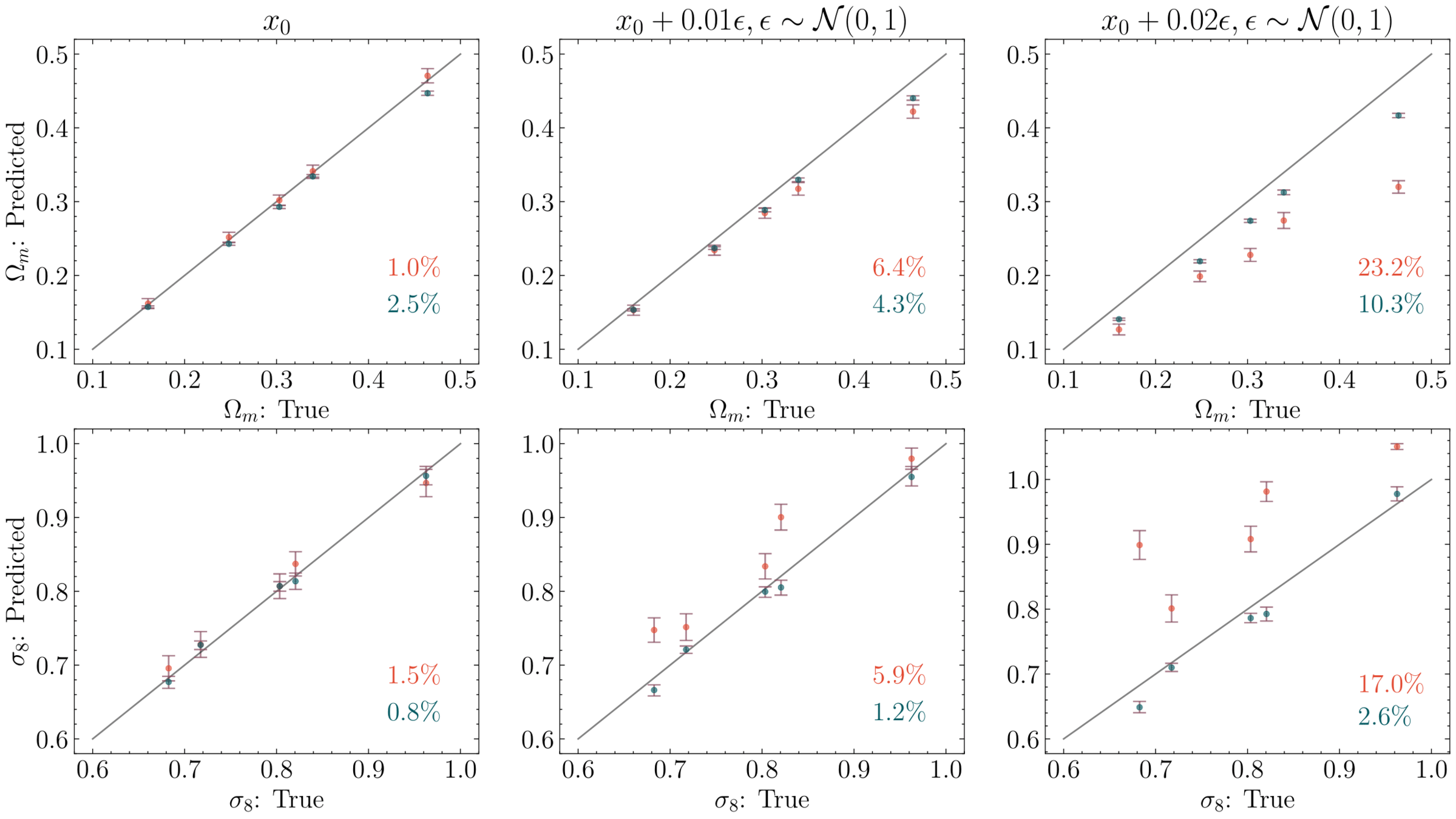

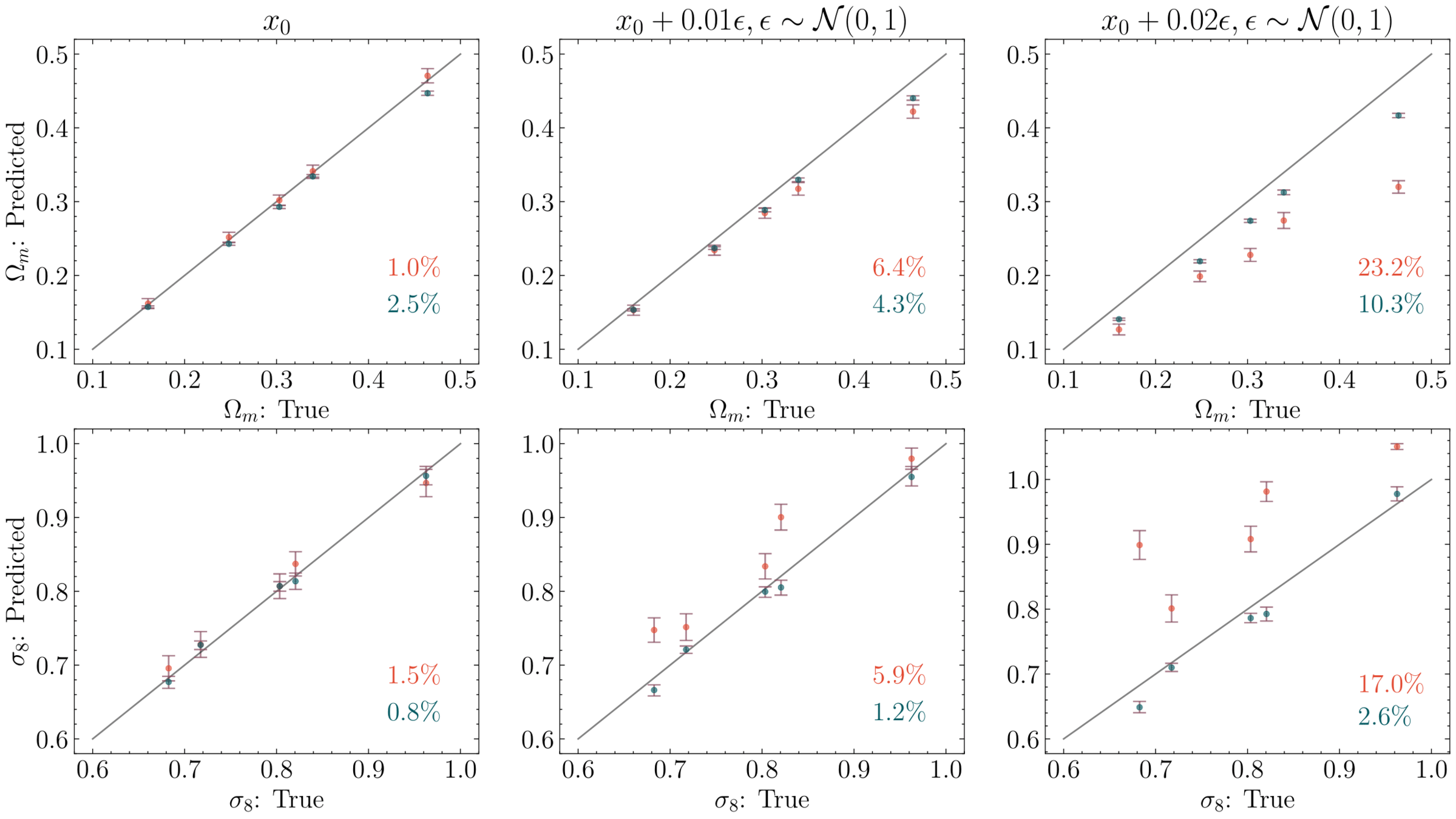

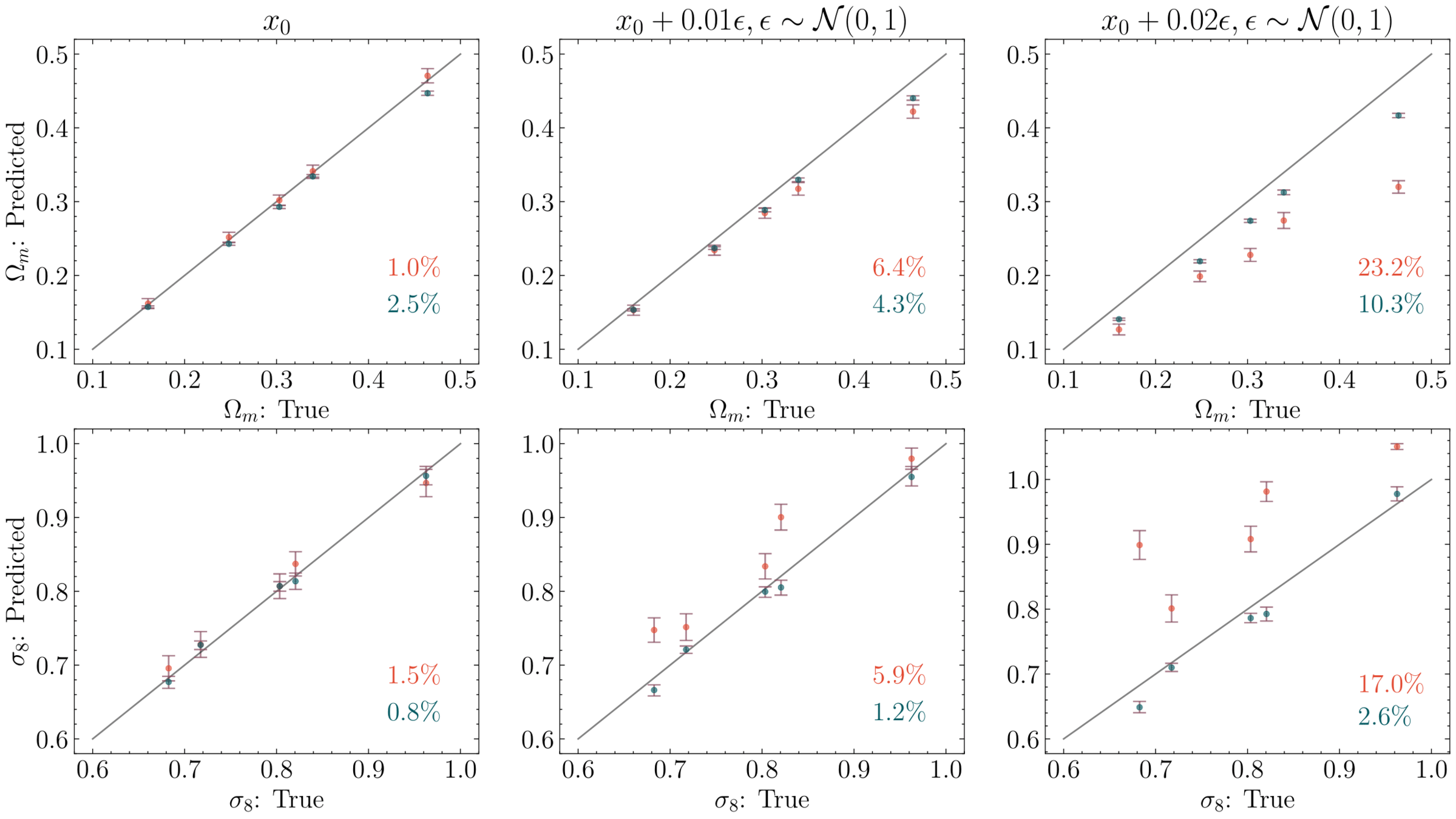

Posterior (NPE)

Likelihood (NLE)

Learning the marginal likelihood is more robust

Learned Likelihood


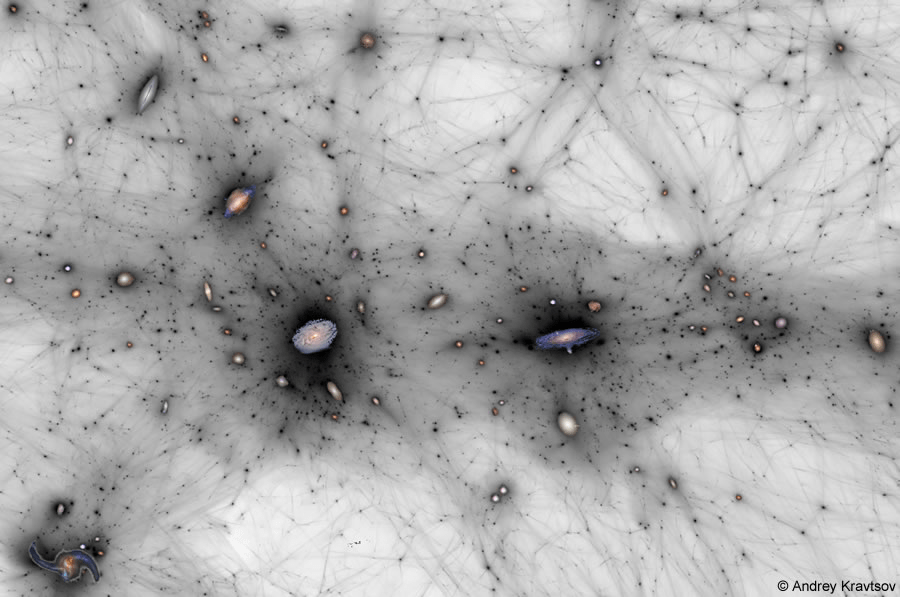

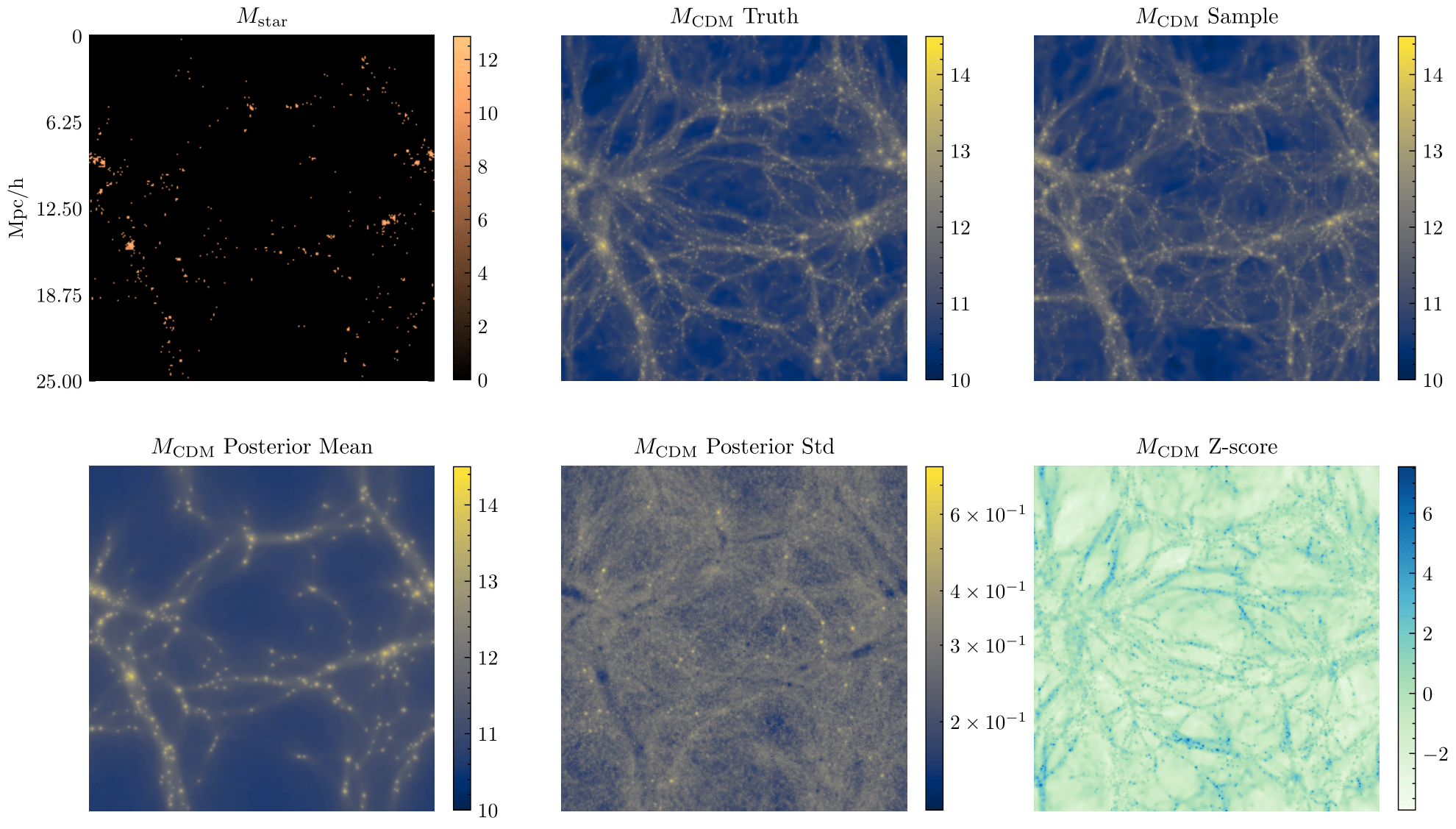

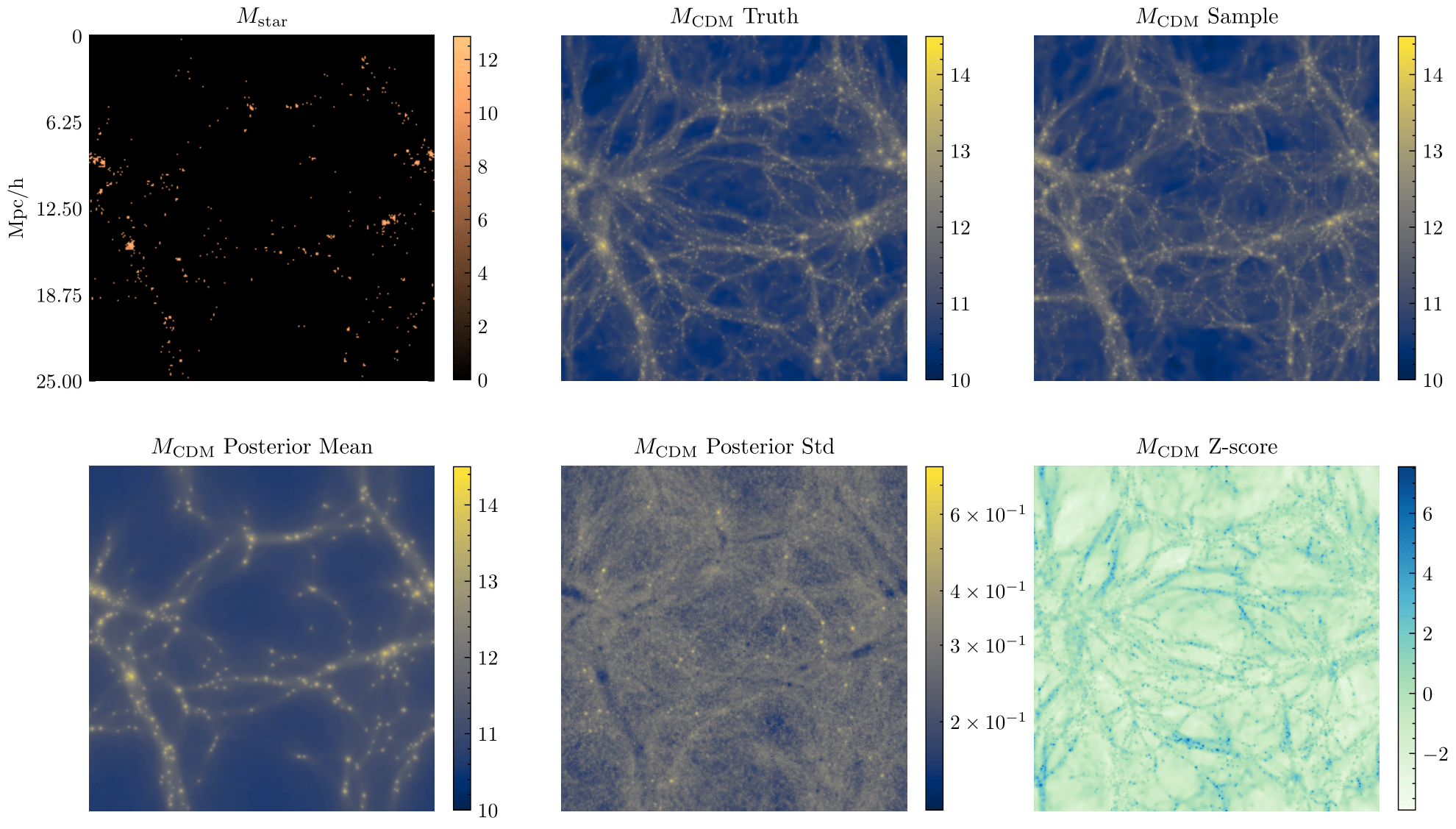

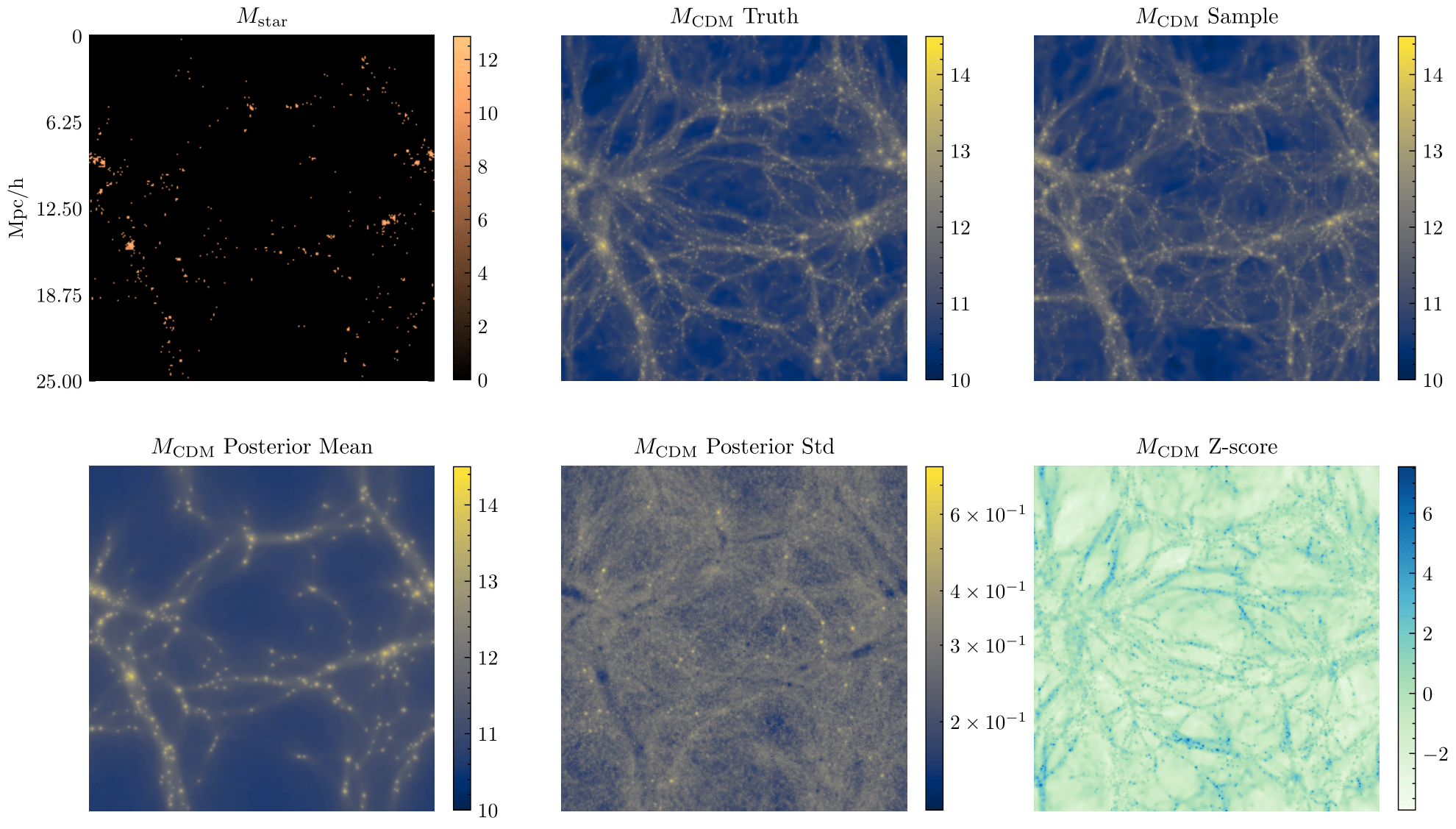

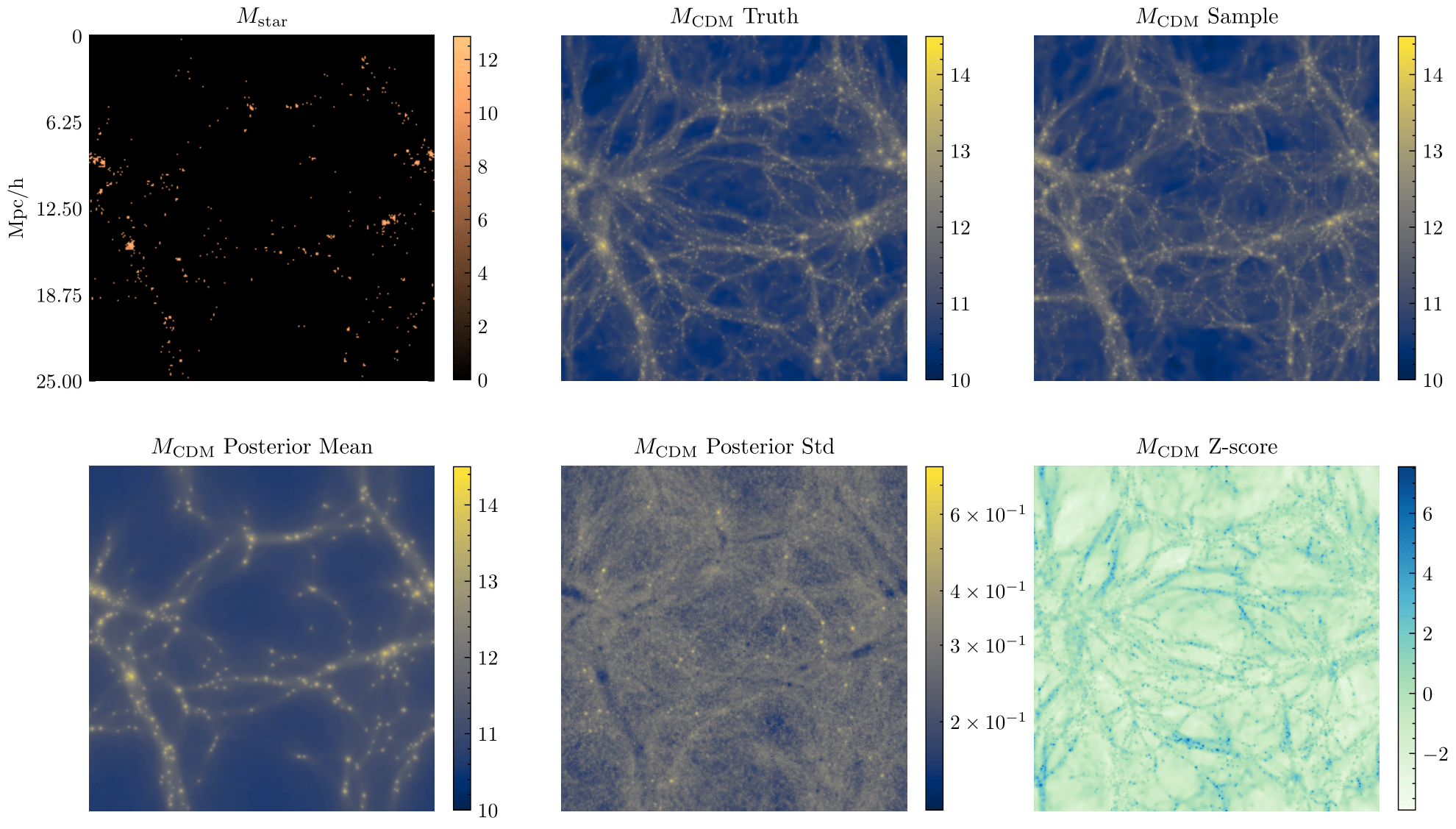

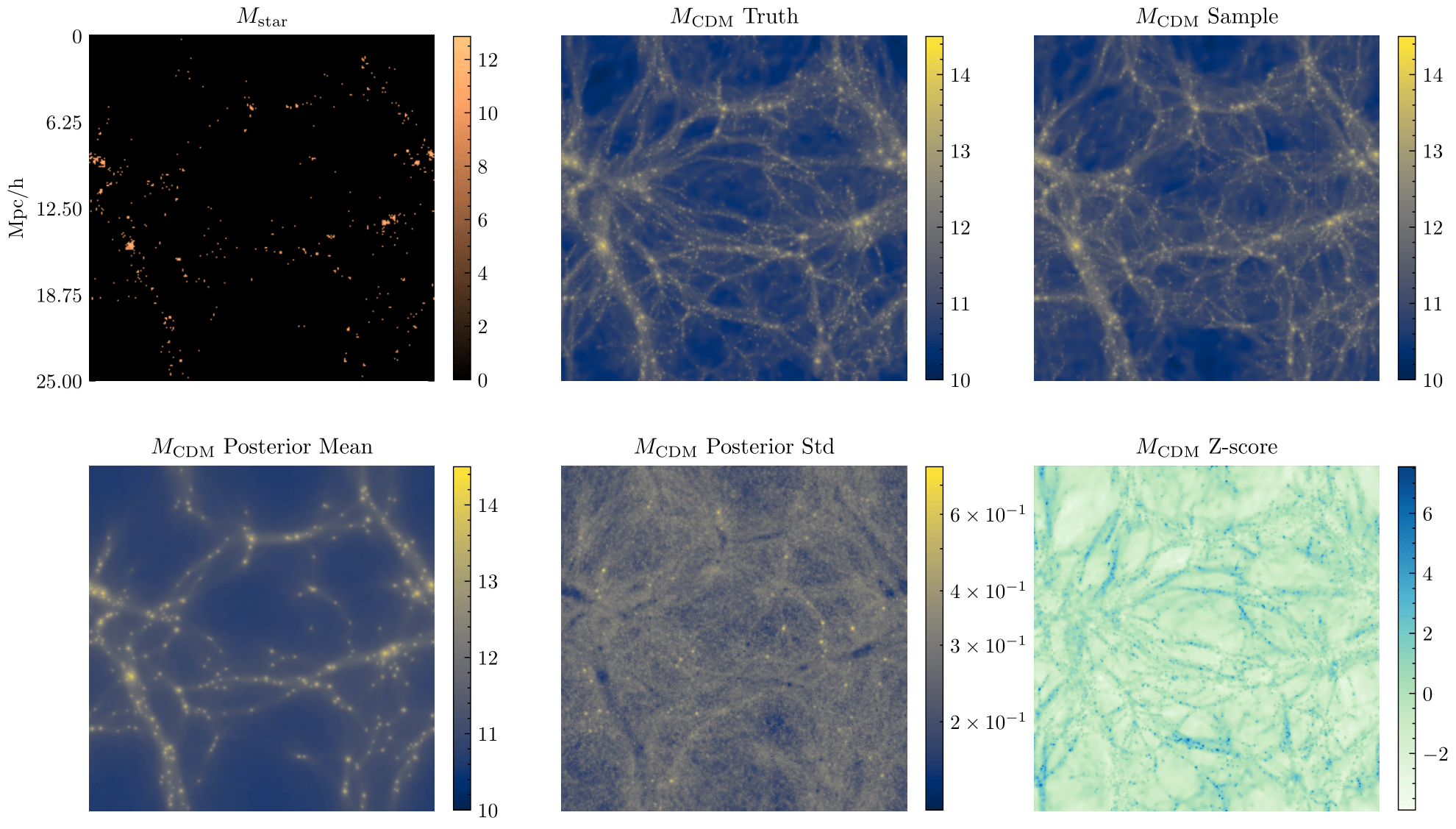

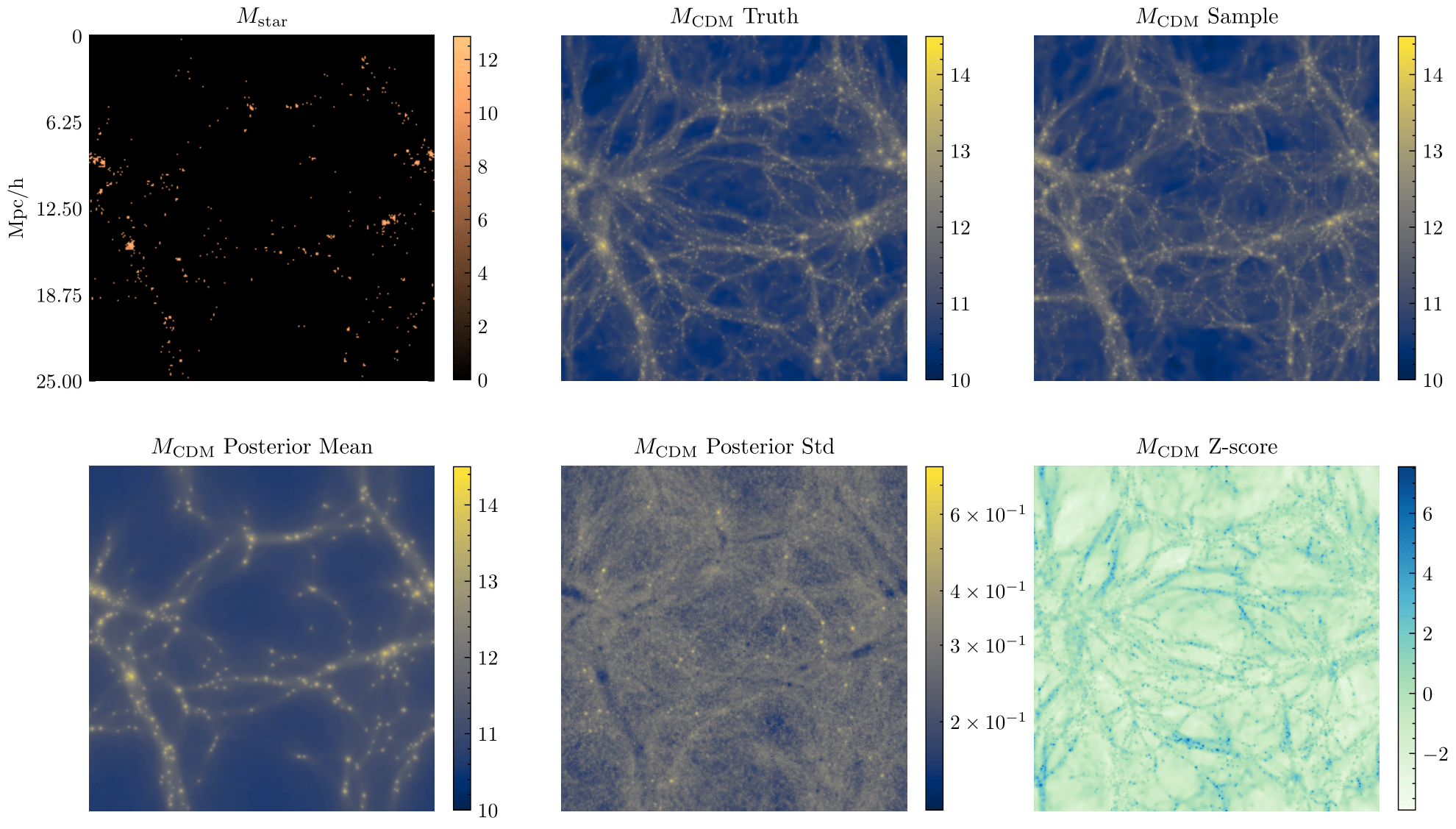

Reconstructing ALL latent variables:

Dark Matter distribution

Entire formation history

Peculiar velocities

Predictive Cross Validation:

Cross-Correlation with other probes without Cosmic Variance

[Image Credit: Yuuki Omori]

Constraining Inflation:

Inferring primordial non-Gaussianity

Why field-level inference?

Data-driven Subgrid models / Data-driven Systematics


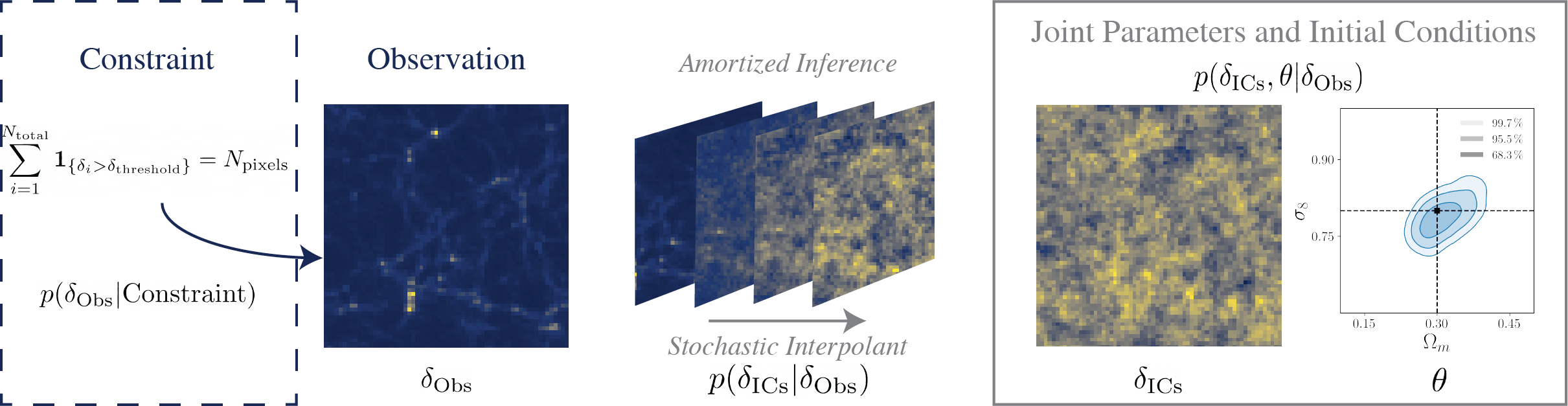

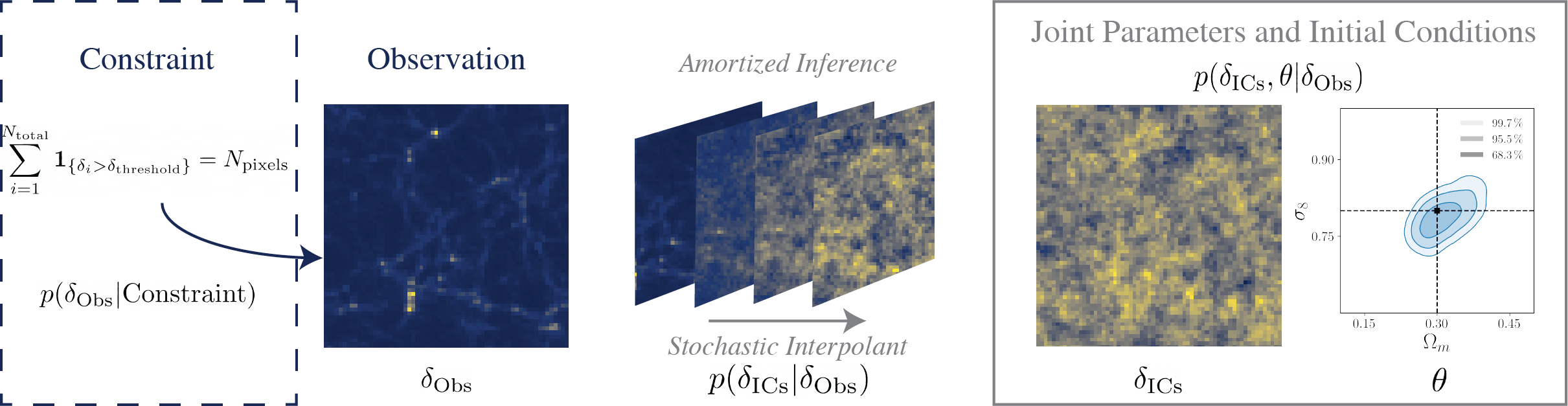

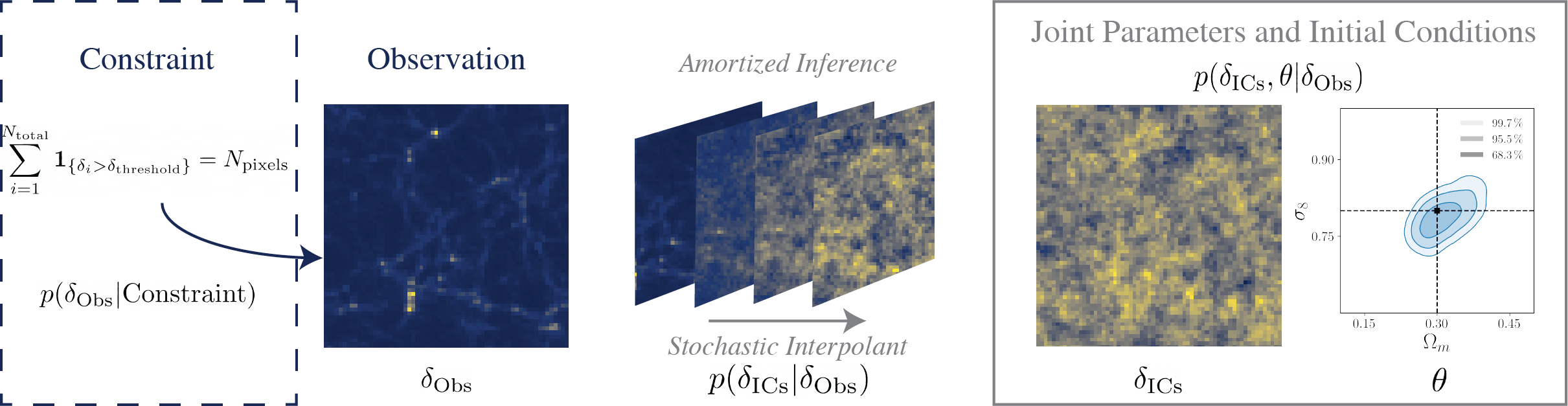

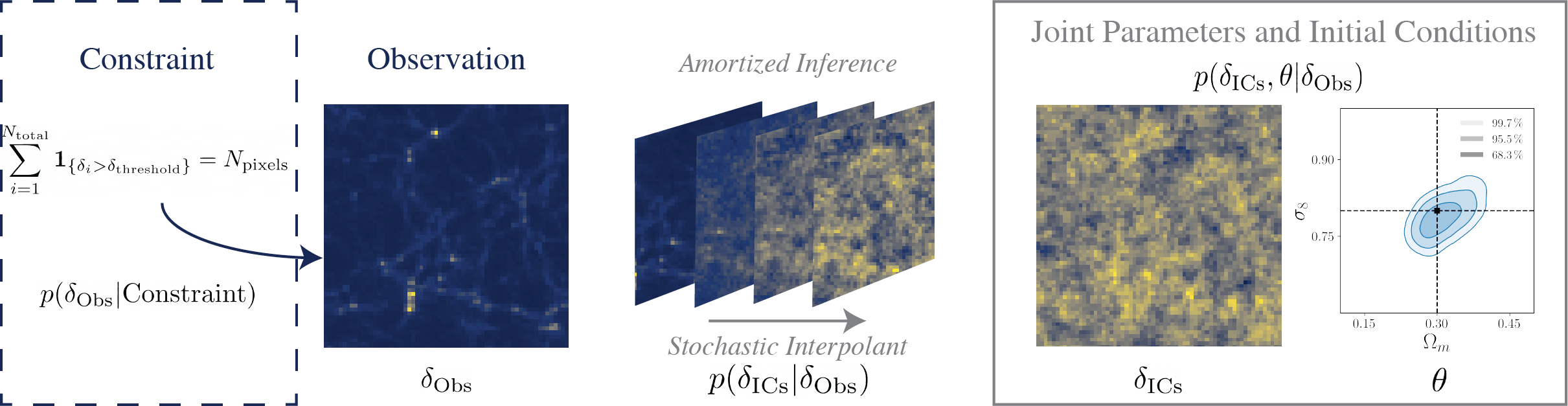

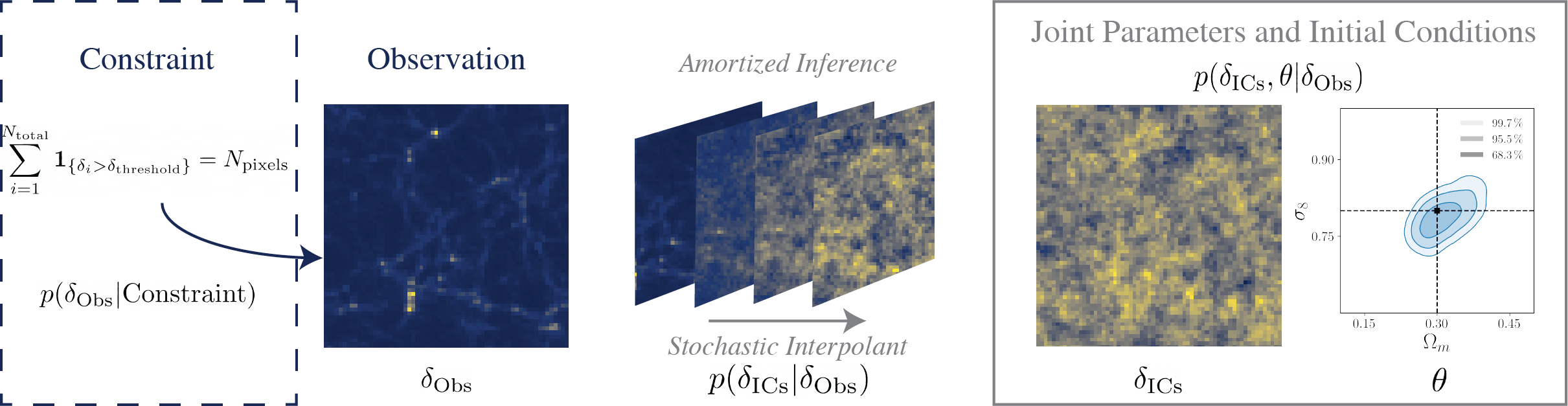

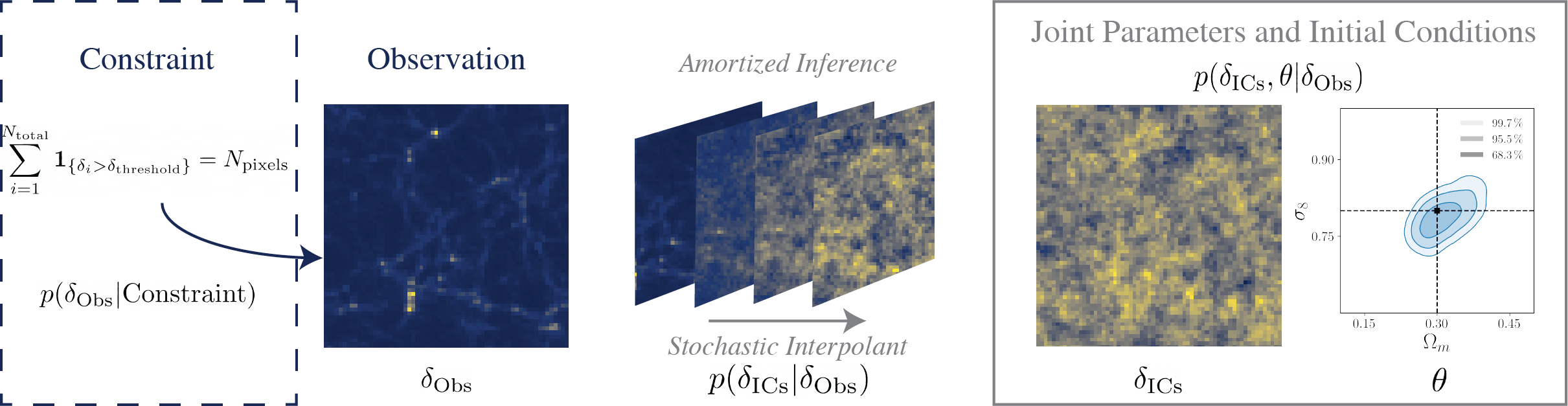

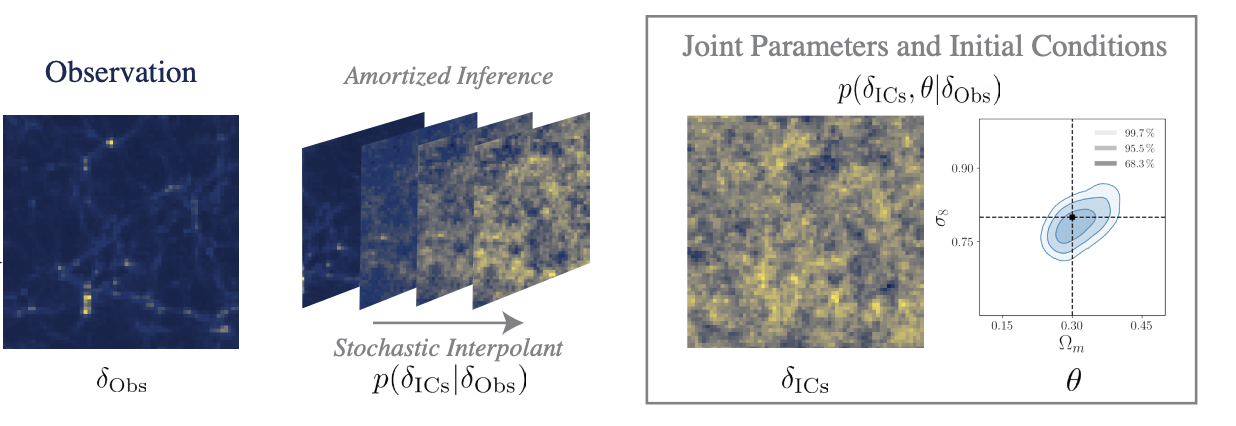

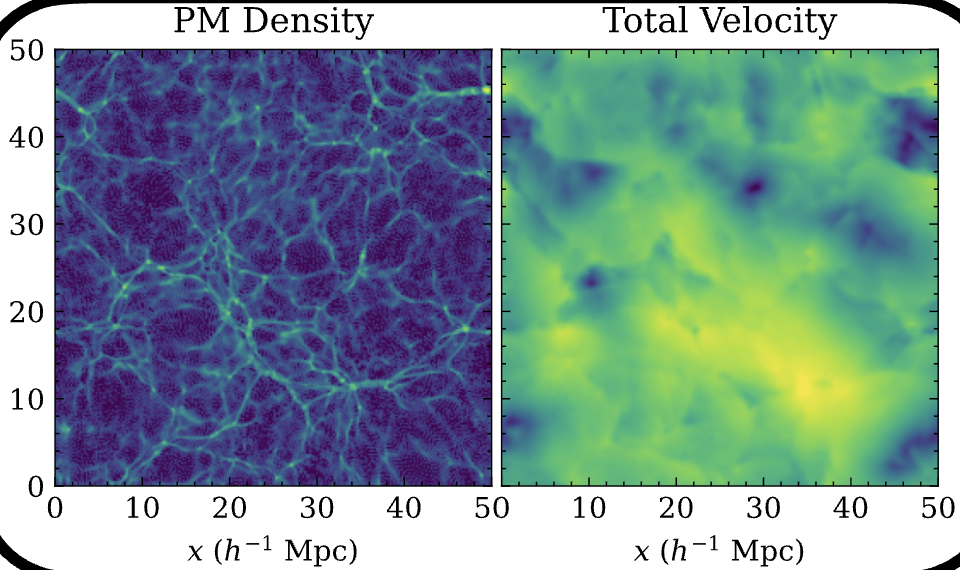

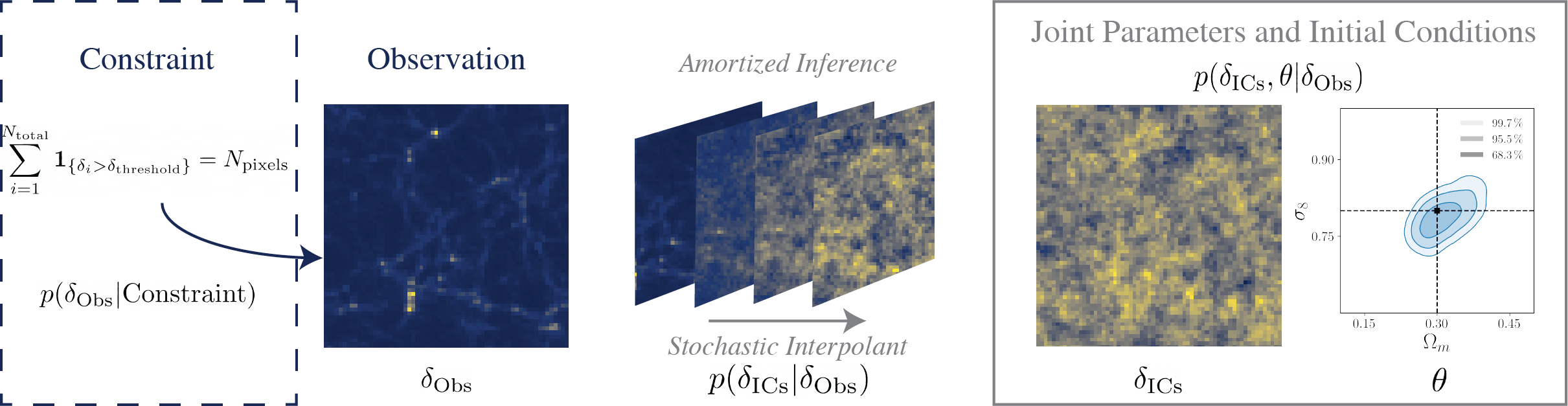

"Joint cosmological parameter inference and initial condition reconstruction with Stochastic Interpolants"

Cuesta-Lazaro, Bayer, Albergo et al

NeurIPS ML4PS 2024 Spotlight talk

Particle Mesh

Dark Matter Only

Gaussian Likelihood

Explicit Sampling vs SBI

1) Likelihood not necessarily Gaussian

2) Forward model need not be differentiable

3) Amortized
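Points 1 and 2 can be illustrated with rejection ABC, the simplest simulation-based inference scheme (swapped in here for illustration; it is not the neural method used in the talk). The simulator is only run, never differentiated, and no Gaussian likelihood is assumed. Unlike neural posterior estimation, rejection ABC is not amortized: it must be rerun for every new observation. The toy Bernoulli simulator, the Uniform(0, 1) prior and the tolerance are hypothetical choices.

```python
import random
import statistics


def simulator(theta, rng, n=50):
    """Hypothetical black-box forward model: n binary outcomes with rate theta.
    Its output is discrete, so it has no useful gradient."""
    return [1 if rng.random() < theta else 0 for _ in range(n)]


def summary(x):
    """Summary statistic: observed success fraction."""
    return sum(x) / len(x)


def rejection_abc(observed, n_draws, eps, rng):
    """Keep prior draws whose simulated summary lands within eps of the observed one."""
    s_obs = summary(observed)
    accepted = []
    for _ in range(n_draws):
        theta = rng.random()  # draw from a Uniform(0, 1) prior
        if abs(summary(simulator(theta, rng)) - s_obs) <= eps:
            accepted.append(theta)
    return accepted


rng = random.Random(3)
observed = simulator(0.3, rng)  # pretend observation with true theta = 0.3
posterior = rejection_abc(observed, n_draws=20_000, eps=0.03, rng=rng)
posterior_mean = statistics.fmean(posterior)
```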


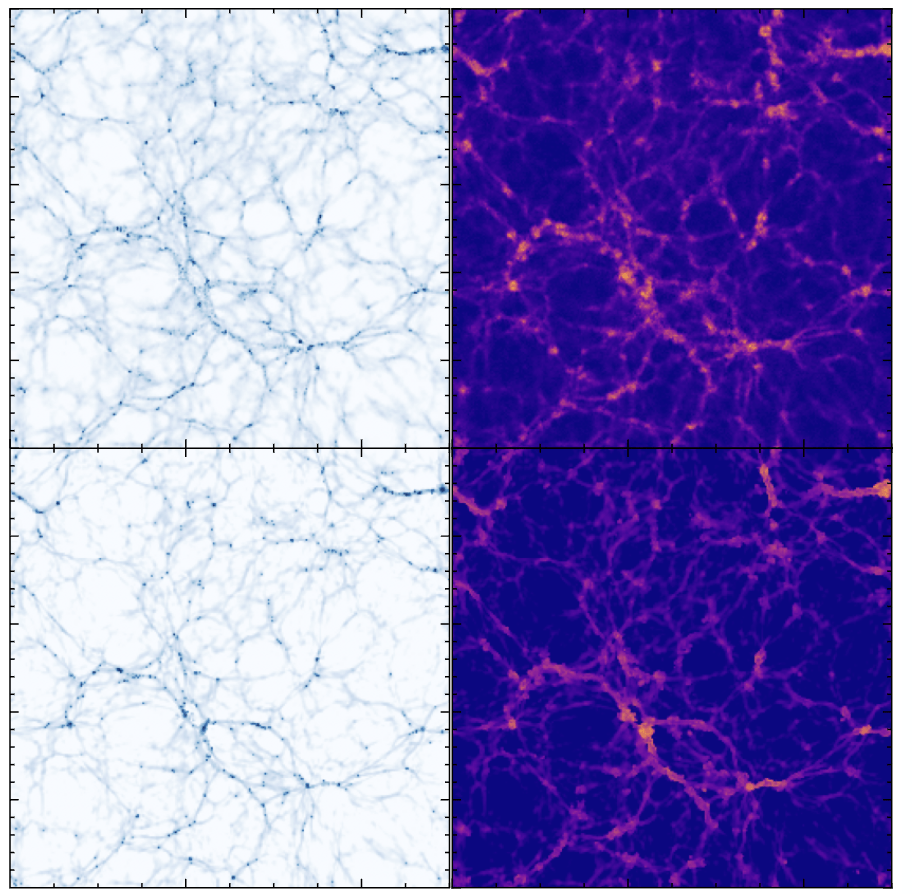

Generative Model: Marginalizing over ICs

Generative Model: Fixing ICs

HMC: Marginalizing over ICs

True

Reconstructed


Initial Conditions

Finals


[Figure: HMC vs SBI constraints from initial conditions (ICs) and from final fields (Finals), three panels]


SBI

HMC

Scaling up in volume


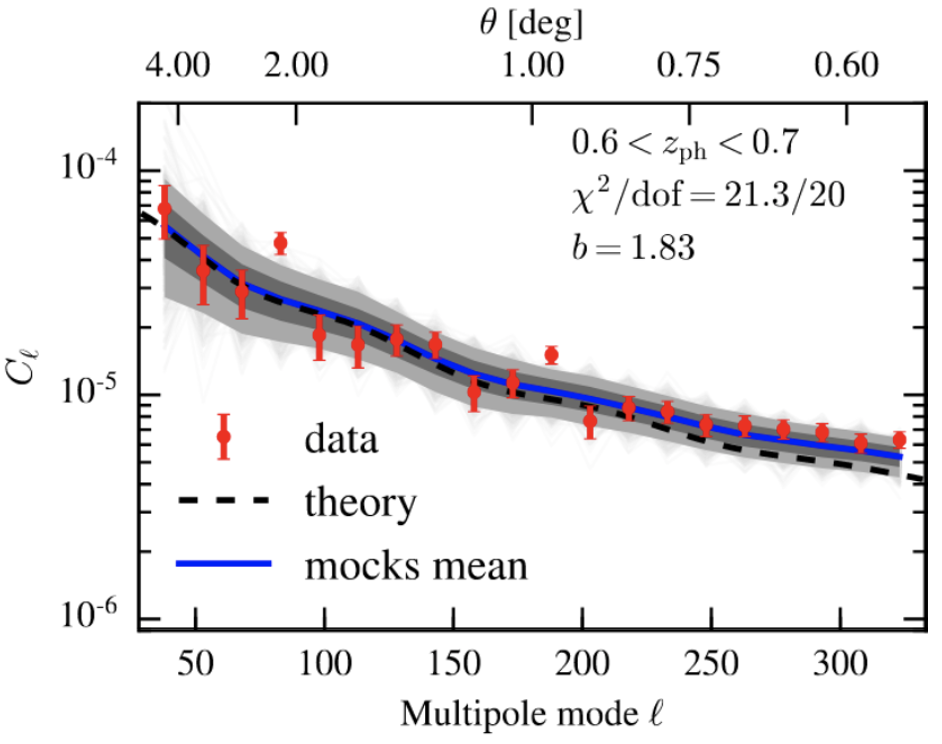

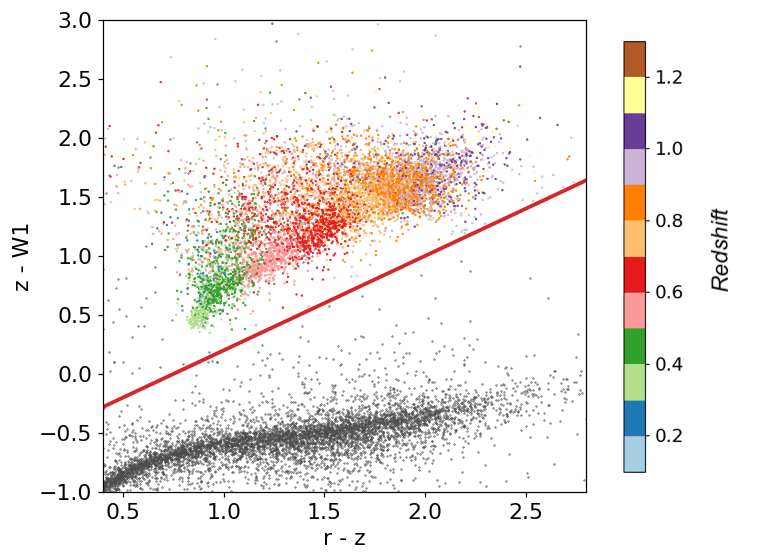

Implicit FLI for DESI

DESI Y1 LRG effective volumes are already larger than our simulations!

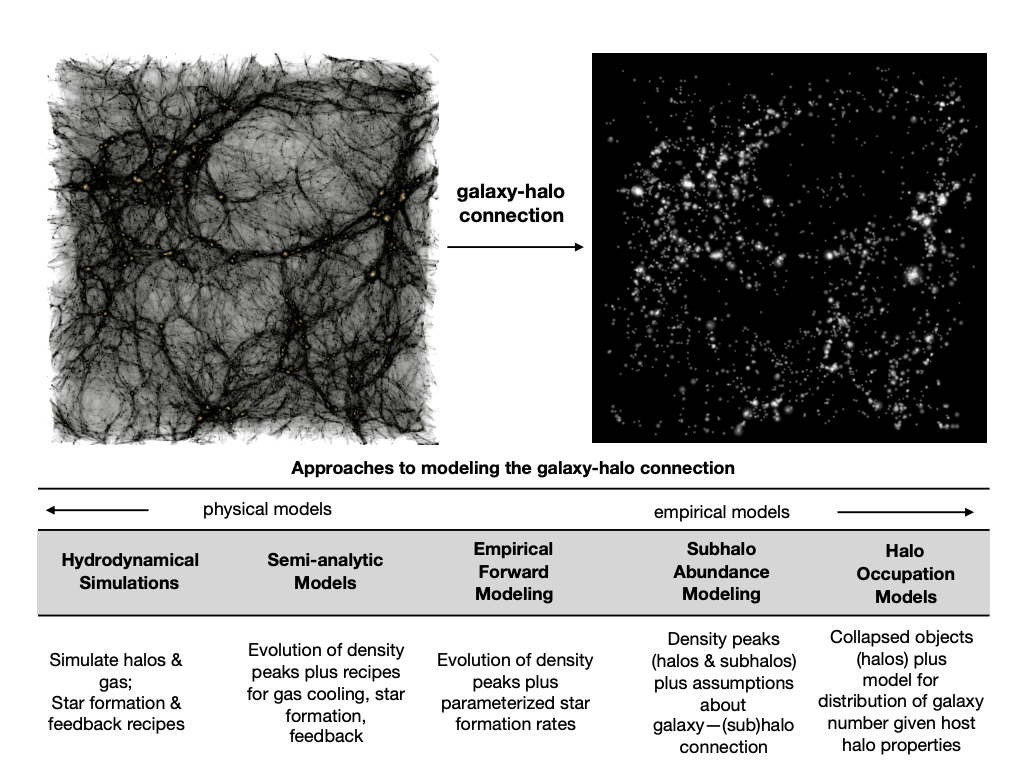

Small Scale Galaxy Bias

How galaxies are selected

Fibre collisions

Forward Modelling the Survey Systematics

EFT

Galaxy Formation

Self-Consistent Predictions across observables


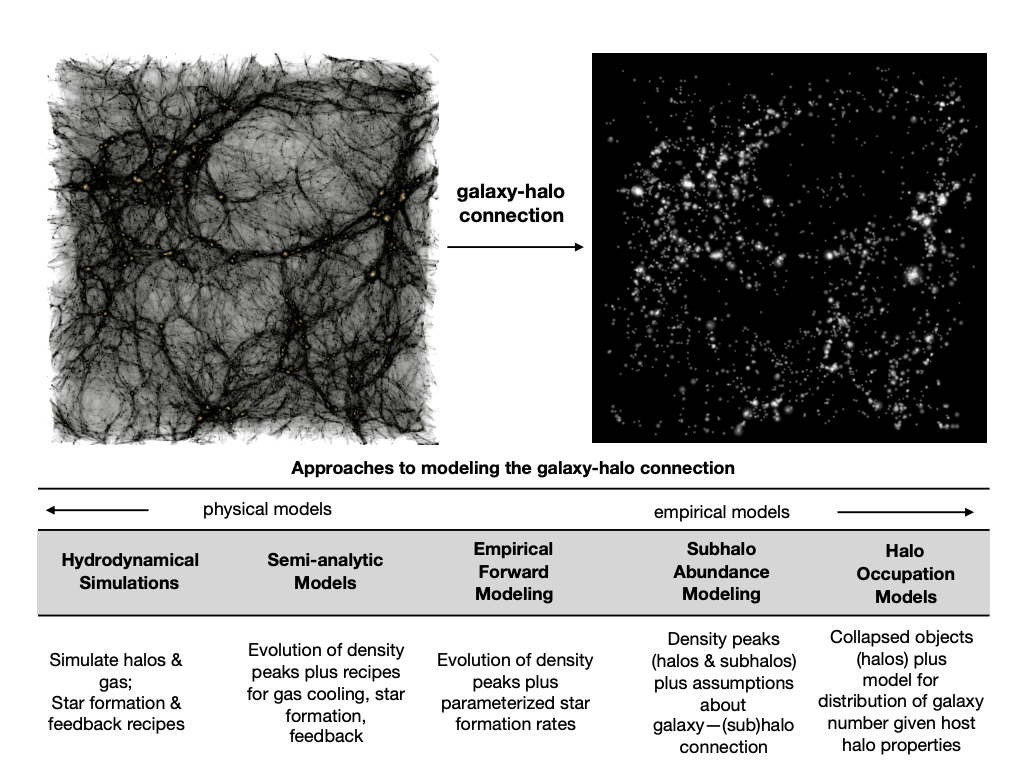

arXiv:1804.03097

X-Ray

Cluster gas mass fractions

Cluster gas density profiles

Sunyaev-Zeldovich

Galaxy Properties

Thermal: integrated electron pressure (hot electrons / massive objects)

Star formation + histories

Stellar mass / halo mass relation

FRBs

Integrated electron density

Kinetic: integrated electron density × peculiar velocity


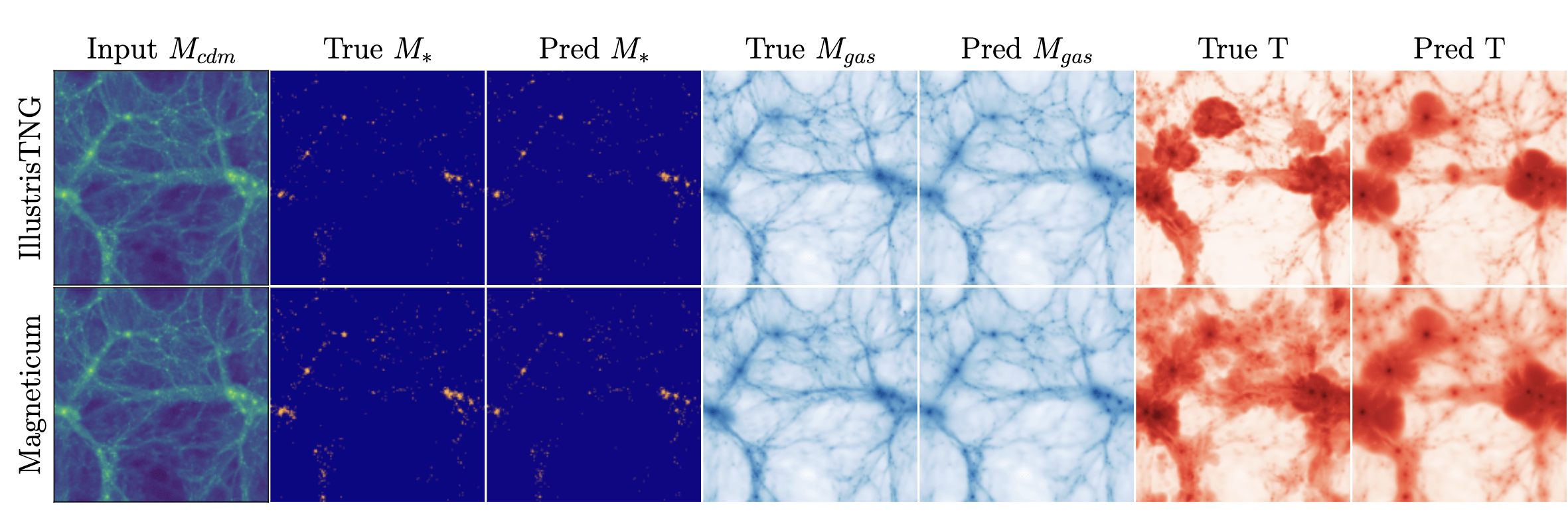

Multi-wavelength Observables

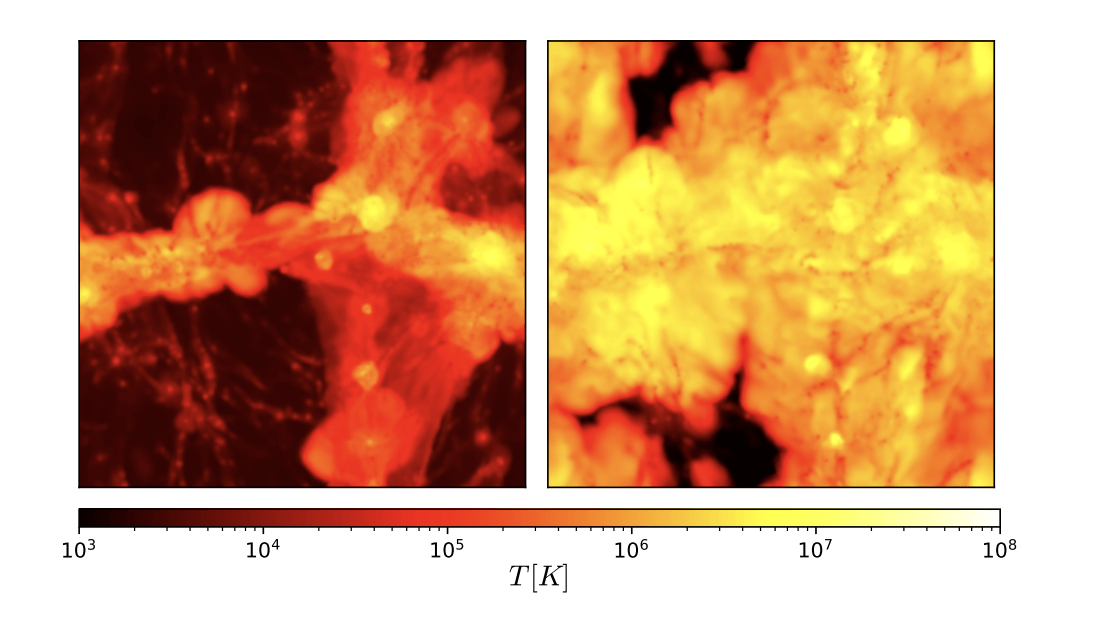

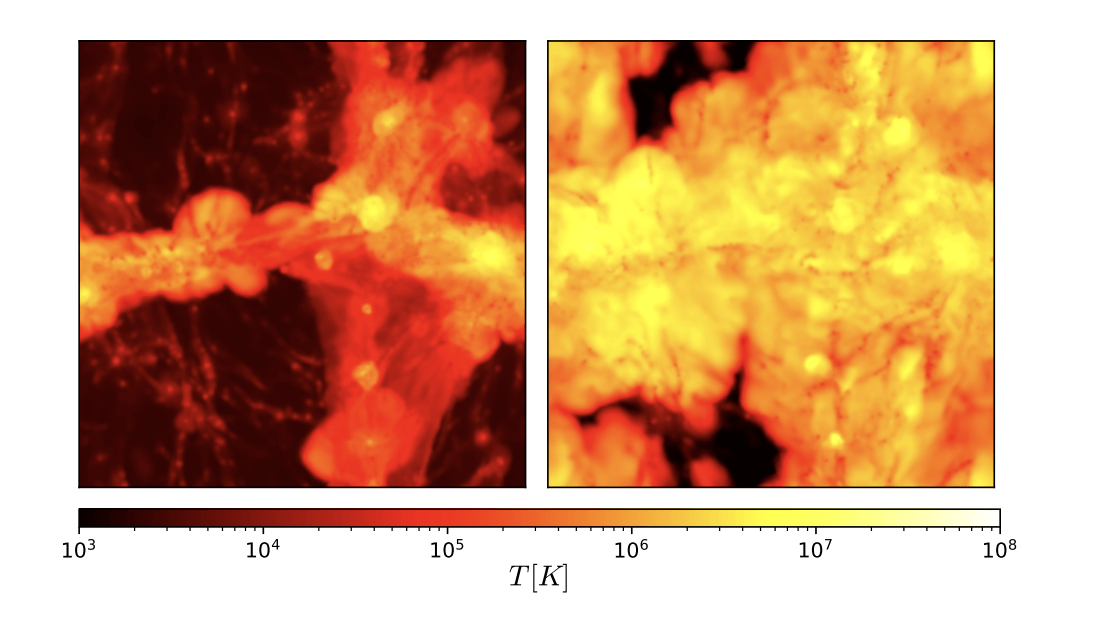

["BaryonBridge: Interpolants models for fast hydrodynamical simulations" Horowitz, Cuesta-Lazaro, Yehia ML4Astro workshop 2025]

Particle Mesh for Gravity

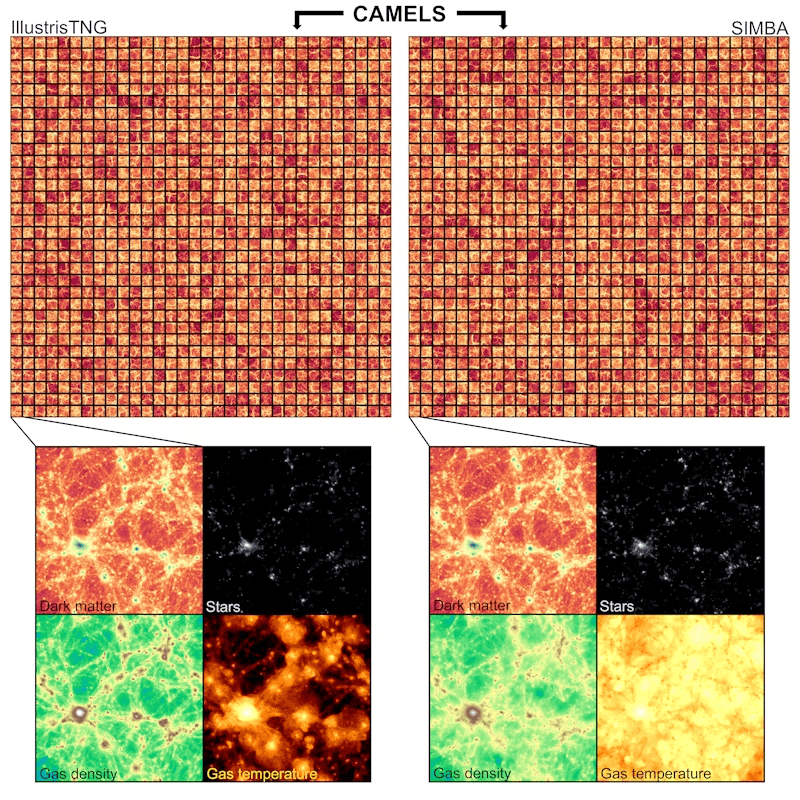

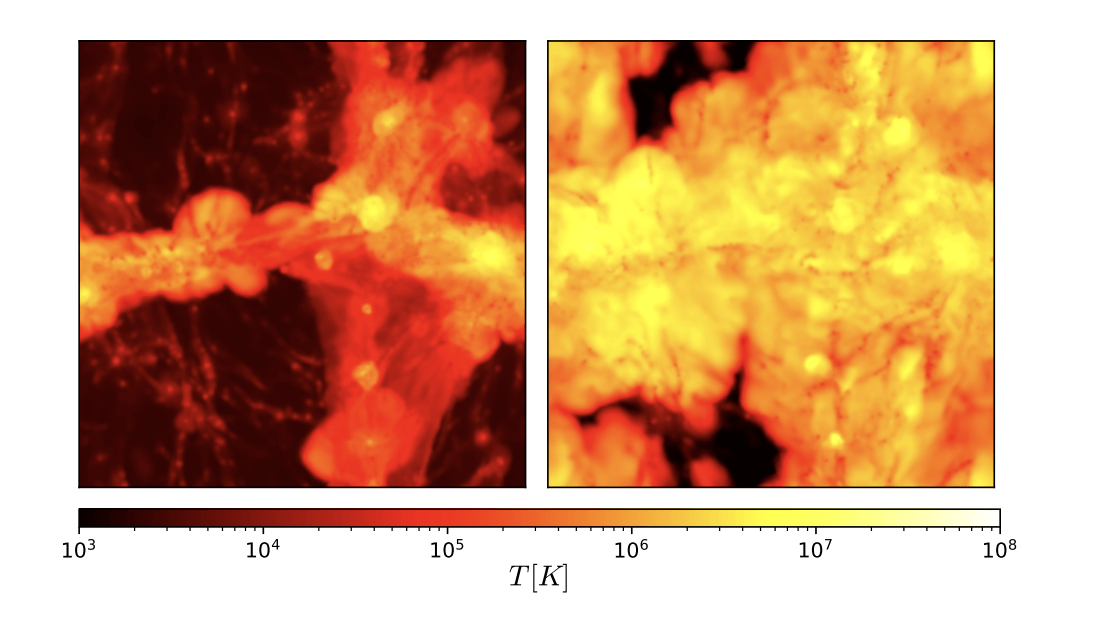

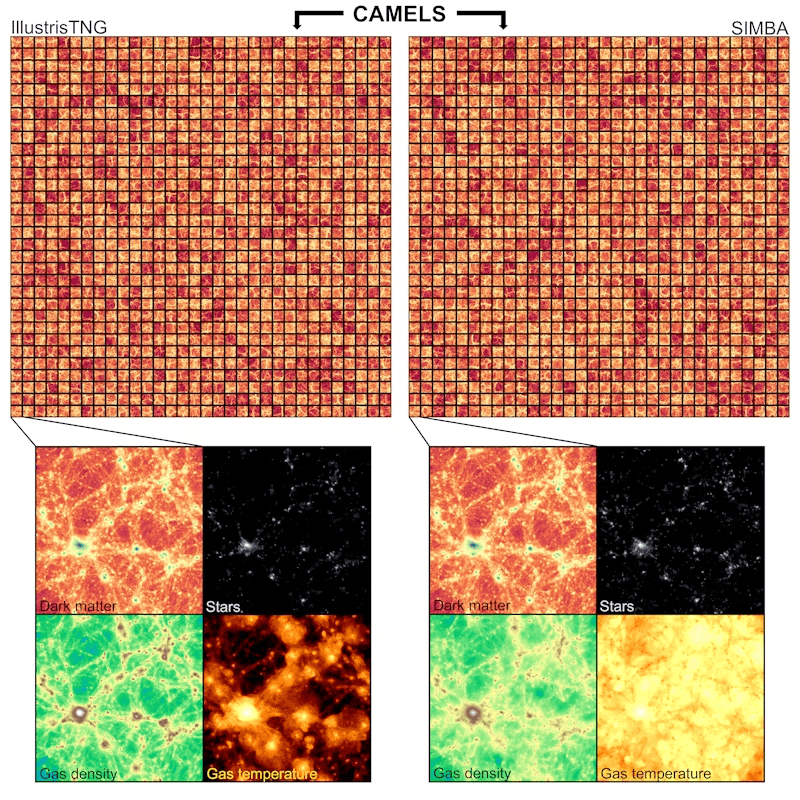

CAMELS Volumes

1000 boxes with varying cosmology and feedback models

Gas Properties

Current model optimised for the Lyman-alpha forest

7 GPU minutes for a 50 Mpc simulation

130 million CPU core hours for TNG50

Density

Temperature

Galaxy Distribution


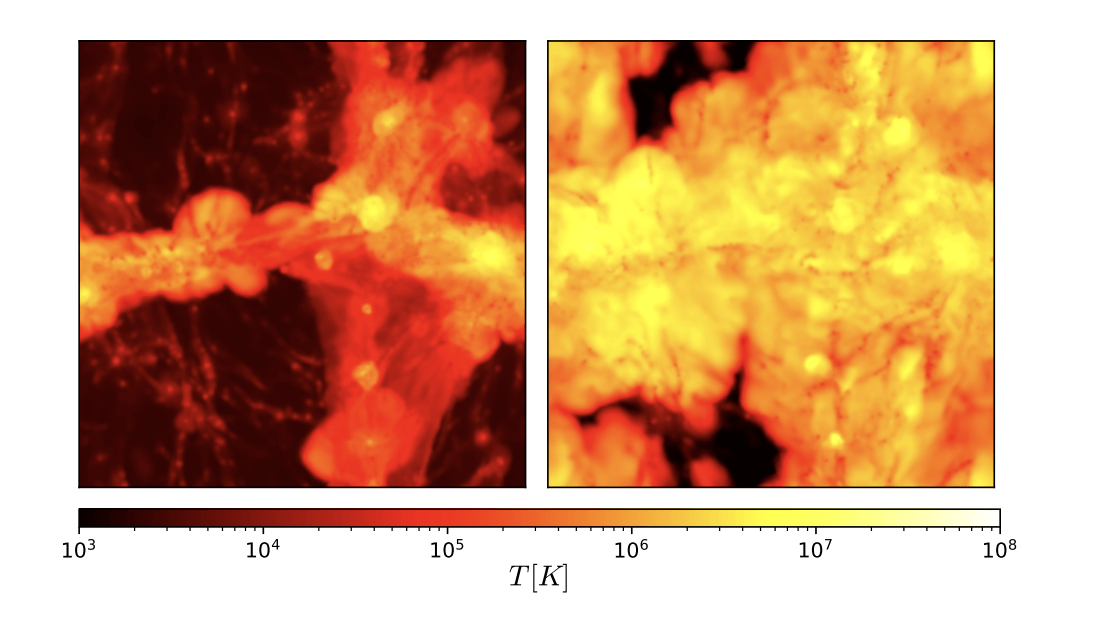

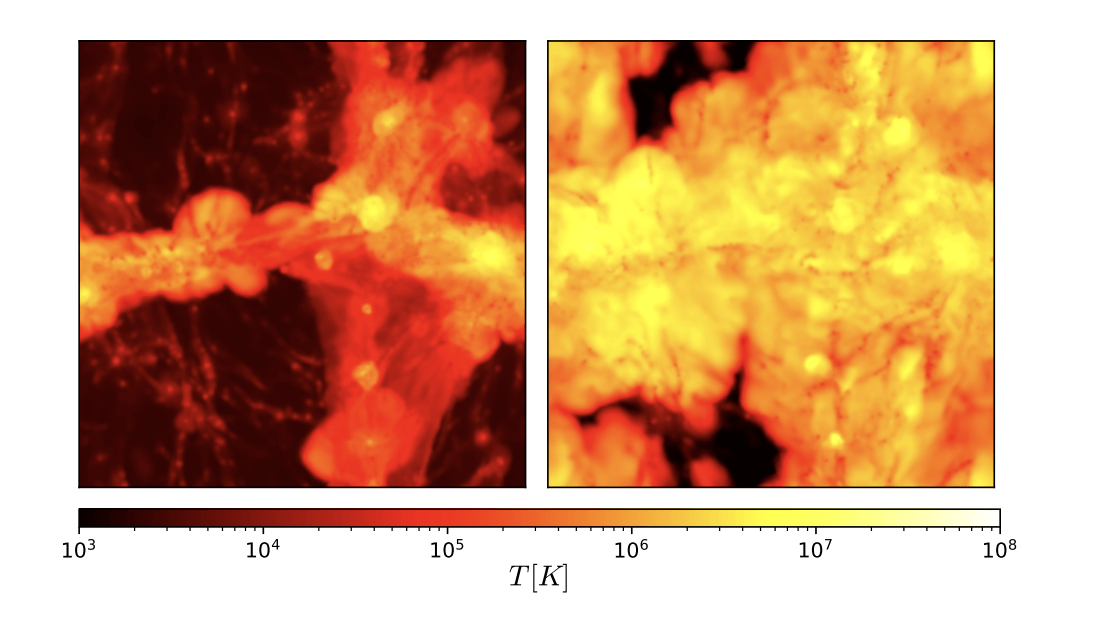

Hydro At Scale

[Video credit: Francisco Villaescusa-Navarro]

Gas density

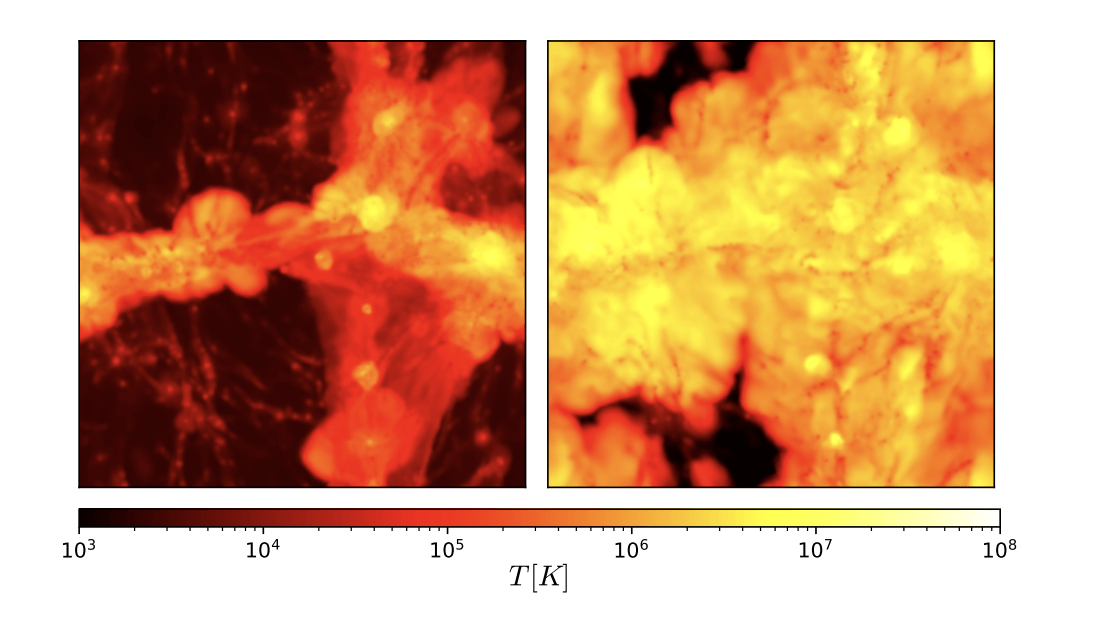

Gas temperature

Subgrid model 1

Subgrid model 2

Subgrid model 3

Subgrid model 4


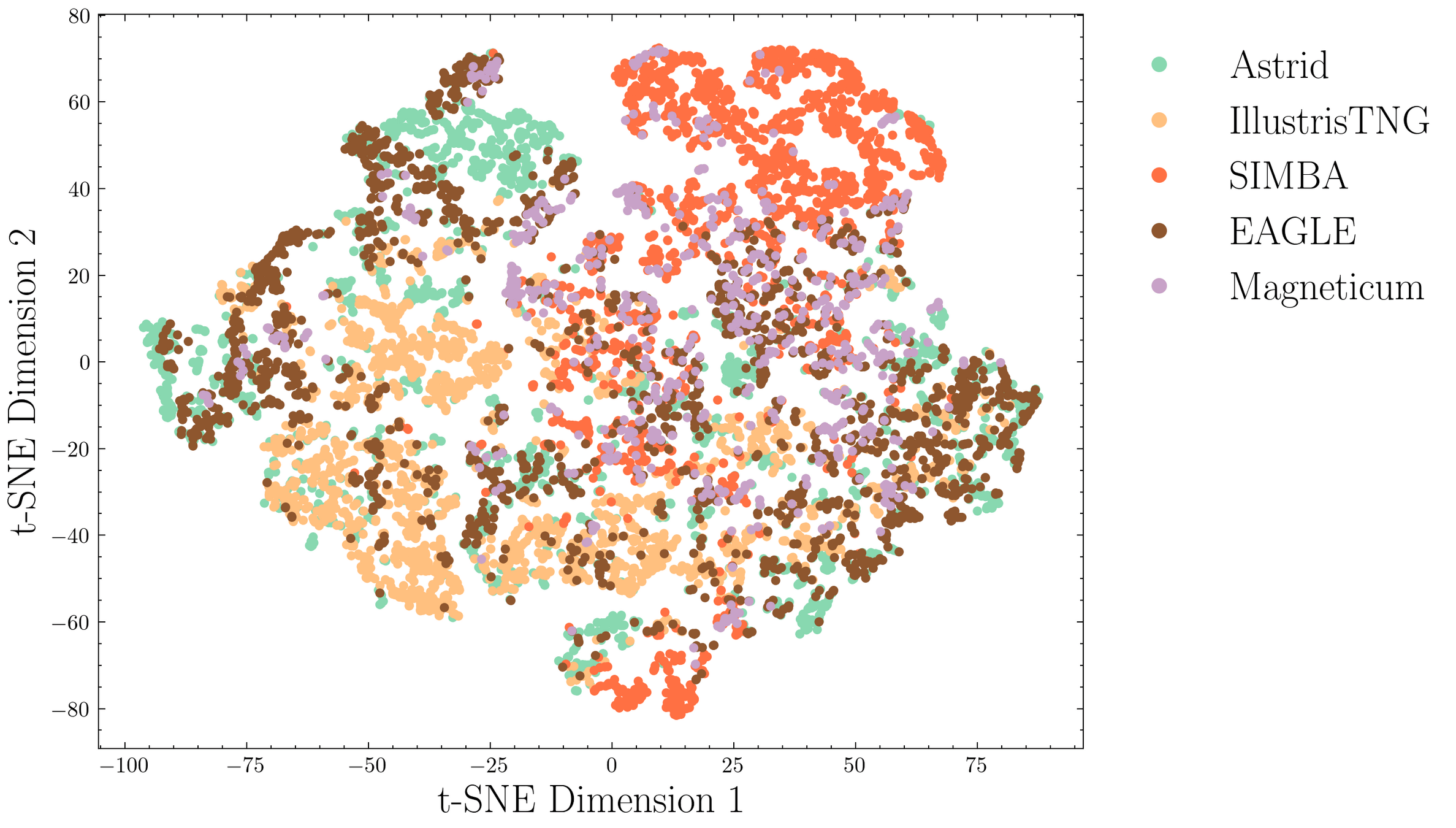

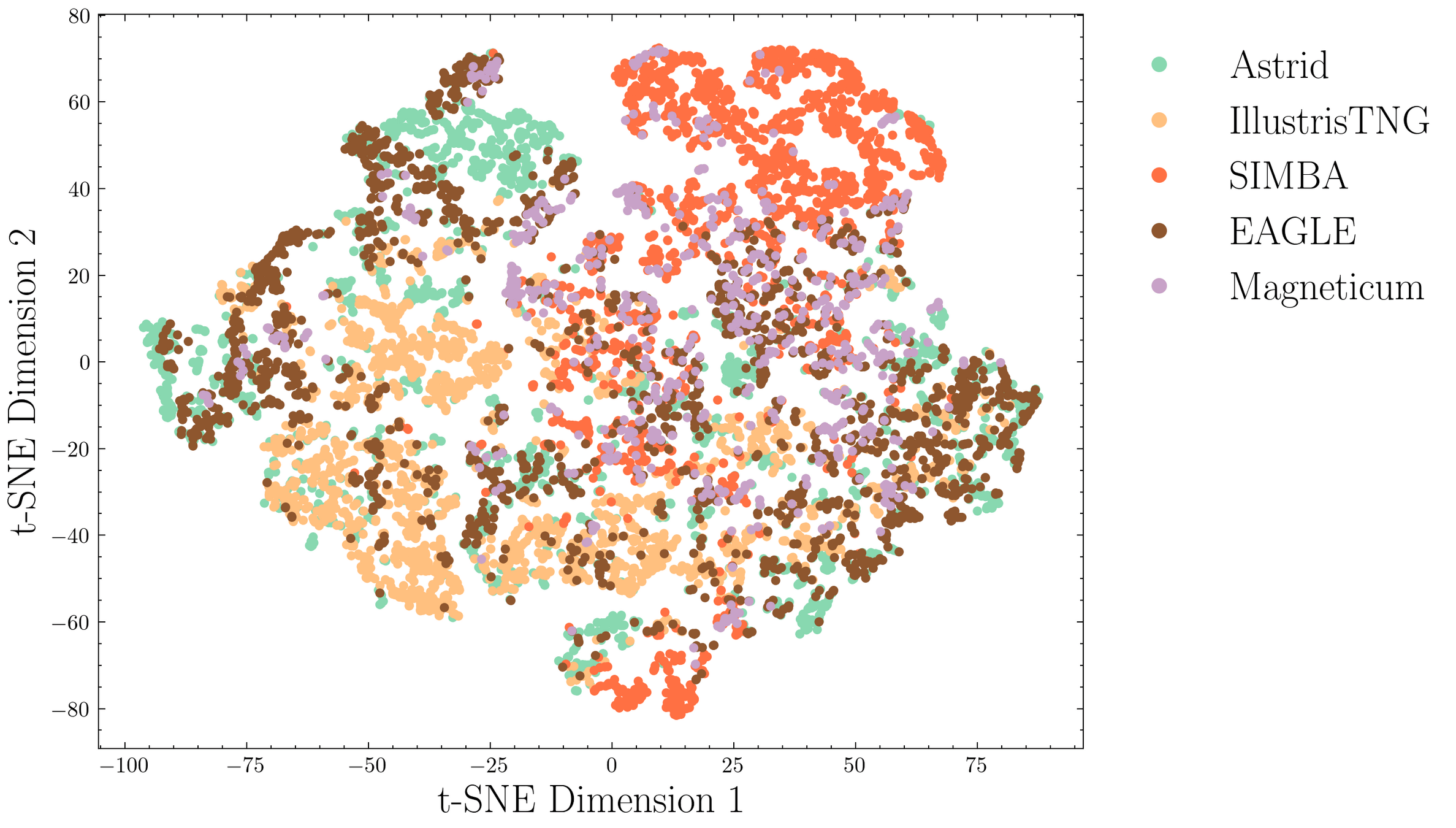

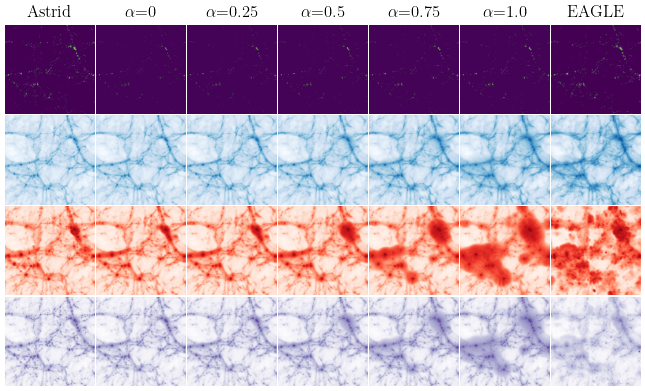

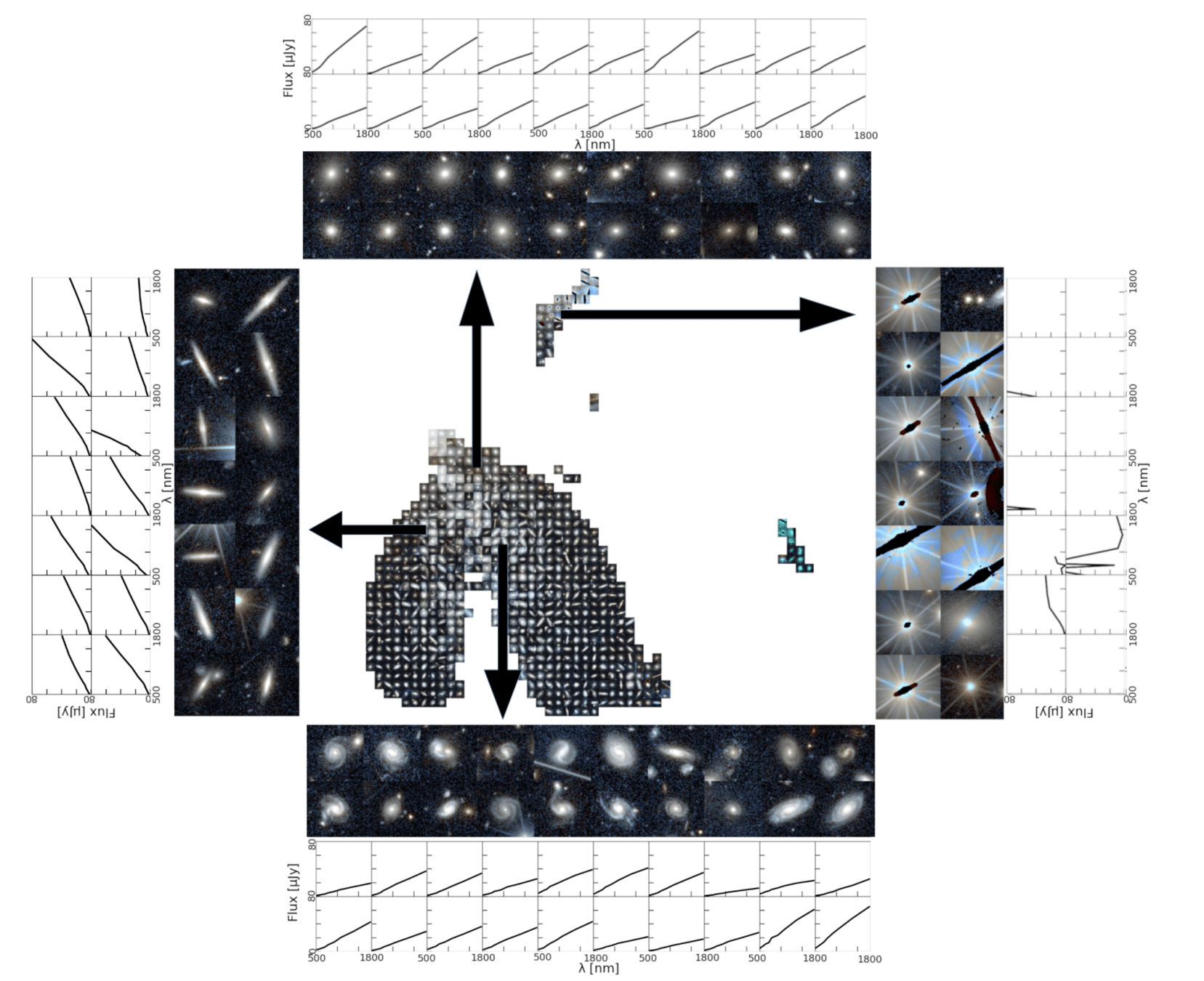

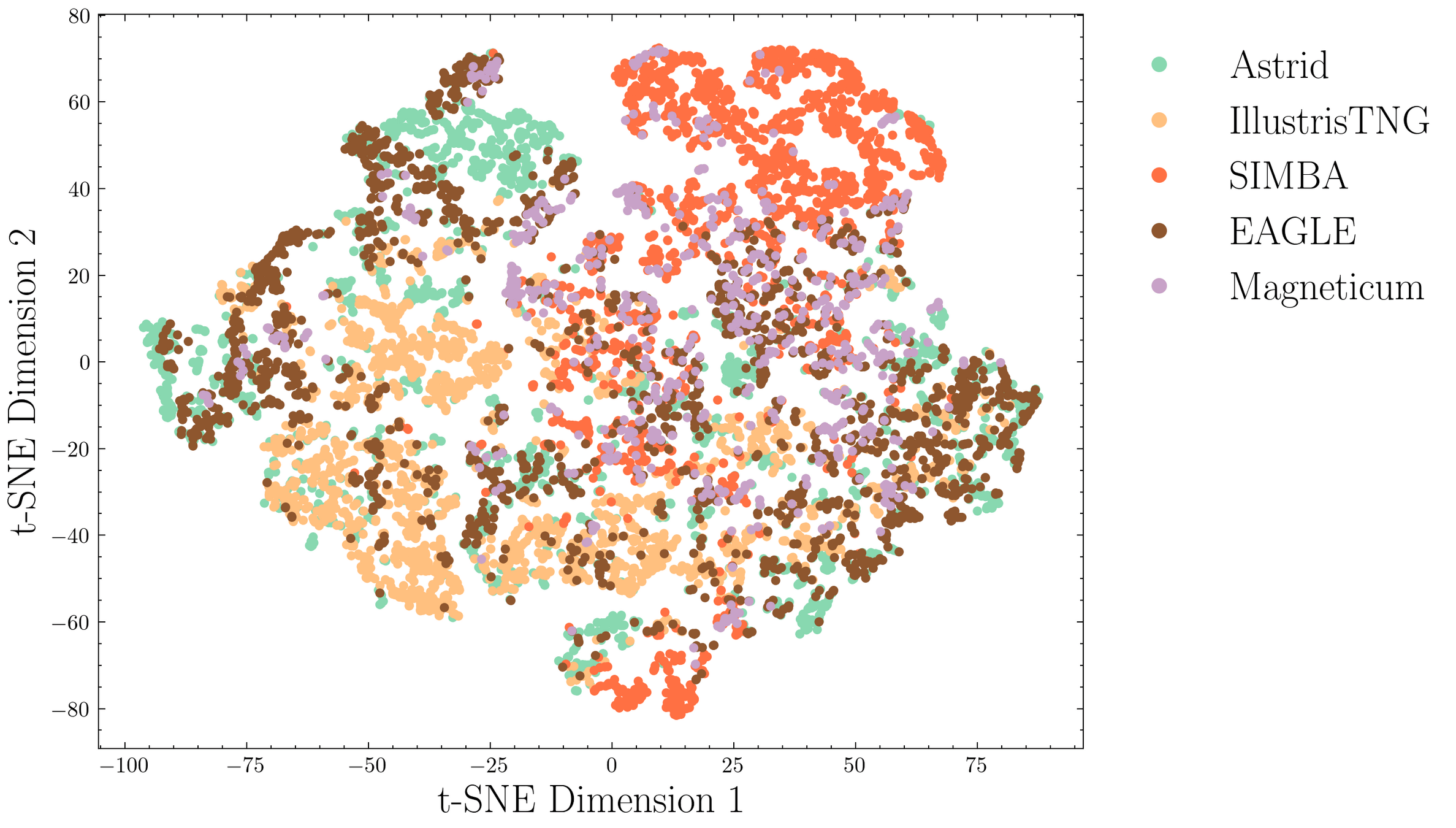

Can we learn a general and continuous representation of Baryonic feedback?

Gas

Galaxies


Dark Matter

Baryonic fields

Marginalize over a broader set of subgrid physics

Interpolate between simulators

Mingshau Liu

(Ming)

Constrain z via multi-wavelength observations


Trained on:

TNG, SIMBA, Astrid, EAGLE

Encoder

1) Encoder

Gas

Galaxies

Dark Matter

Baryonic fields

2) Probabilistic Decoder

Dark Matter

Baryonic fields


(Test suite)

Gas Density

Temperature

Astrid

EAGLE


Interpolating over Simulations

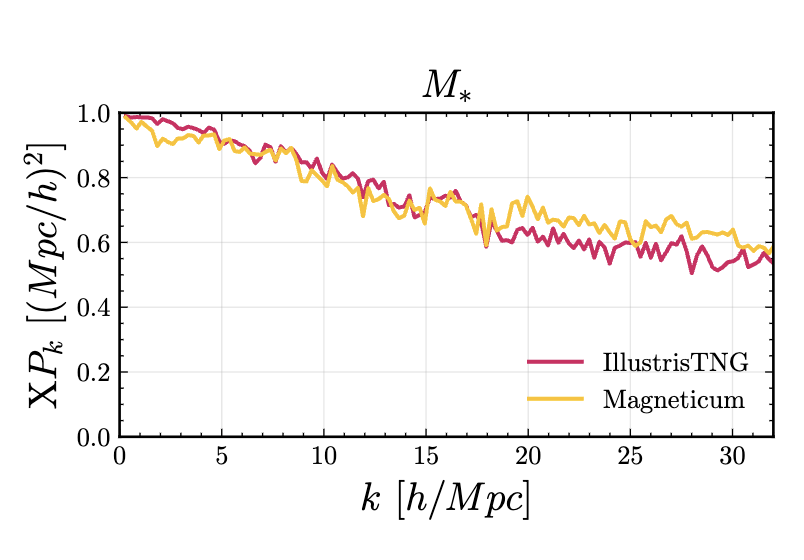

Generalizing to unseen simulations: Magneticum


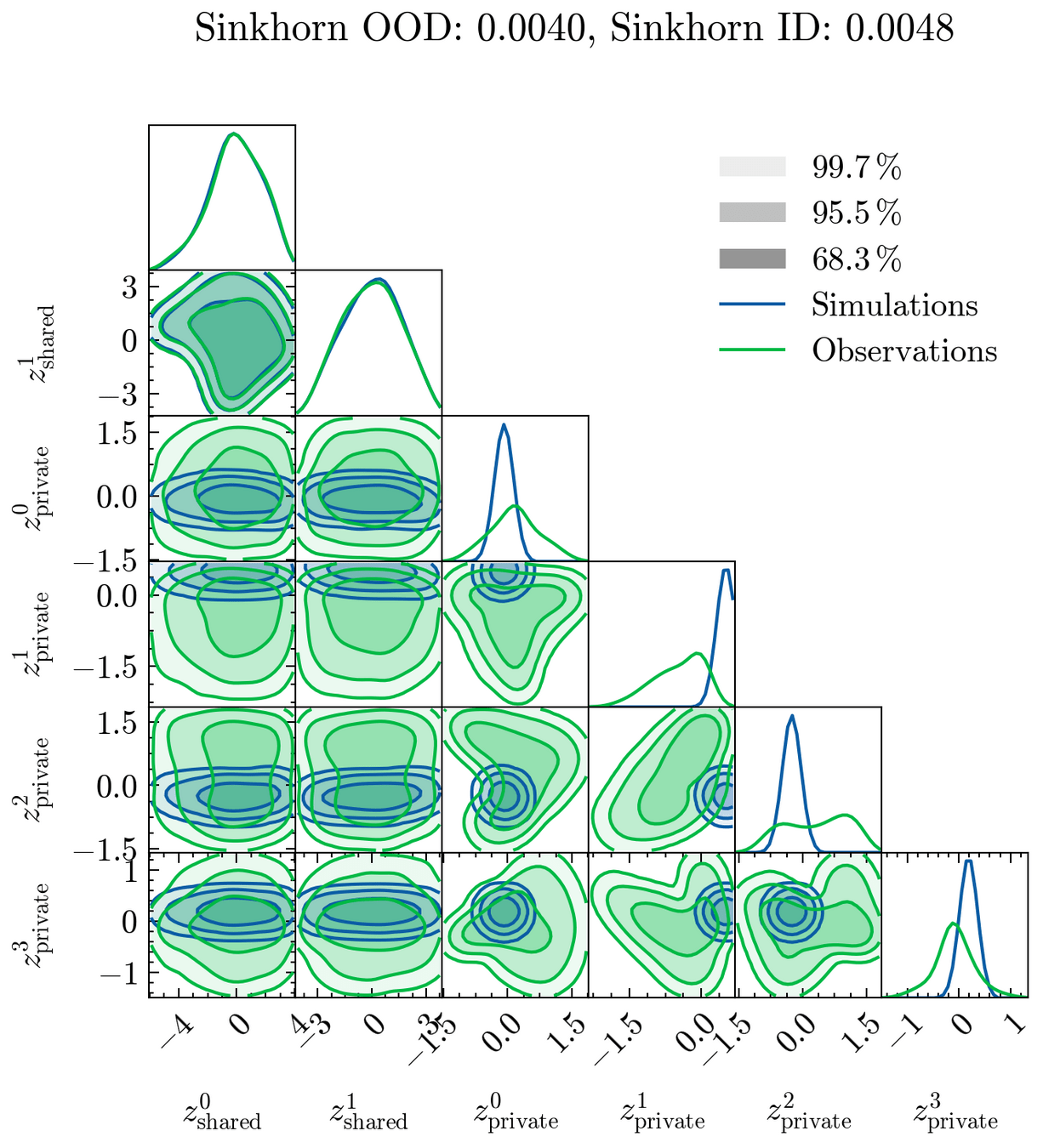

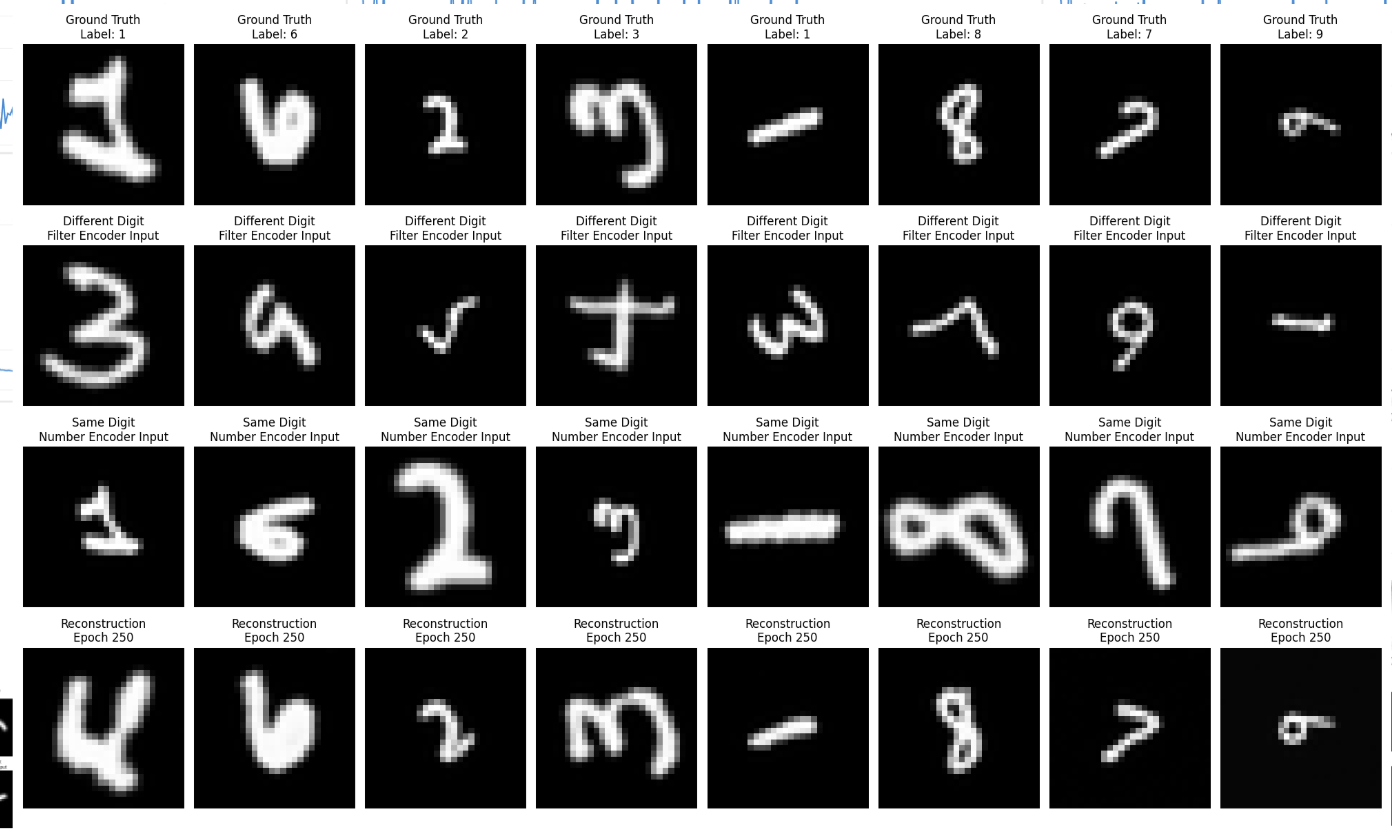

Simulation-based inference proliferates in astrophysics, but it is trained on simulations


Simulated Data

Observed Data

Alignment Loss

Reconstruction

Statistical Alignment

(Optimal Transport / Adversarial)
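The optimal-transport flavour of the alignment loss can be illustrated in 1D, where the Wasserstein-1 distance between two equal-size samples is obtained by matching sorted values. A sketch under that simplifying assumption: real latent spaces are multi-dimensional and would need, e.g., sliced or entropy-regularized OT, and the "sim" and "obs" latents below are synthetic.

```python
import random


def wasserstein_1d(a, b):
    """Exact 1-Wasserstein distance between two equal-size 1D empirical samples.

    In 1D the optimal transport plan simply pairs the sorted values.
    """
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


rng = random.Random(4)
sim_latents = [rng.gauss(0.0, 1.0) for _ in range(5000)]
obs_latents = [rng.gauss(0.5, 1.0) for _ in range(5000)]  # shifted "observed" domain
loss_before = wasserstein_1d(sim_latents, obs_latents)     # about 0.5, the shift

# An encoder that removed the domain shift would drive the alignment loss down.
aligned = [z - 0.5 for z in obs_latents]
loss_after = wasserstein_1d(sim_latents, aligned)
```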


Encoder (Obs)

Encoder (Sims)

Private Domain Information

Shared Information

Observed Reconstructed

Simulated Reconstructed

Shared Decoder

Shared Decoder

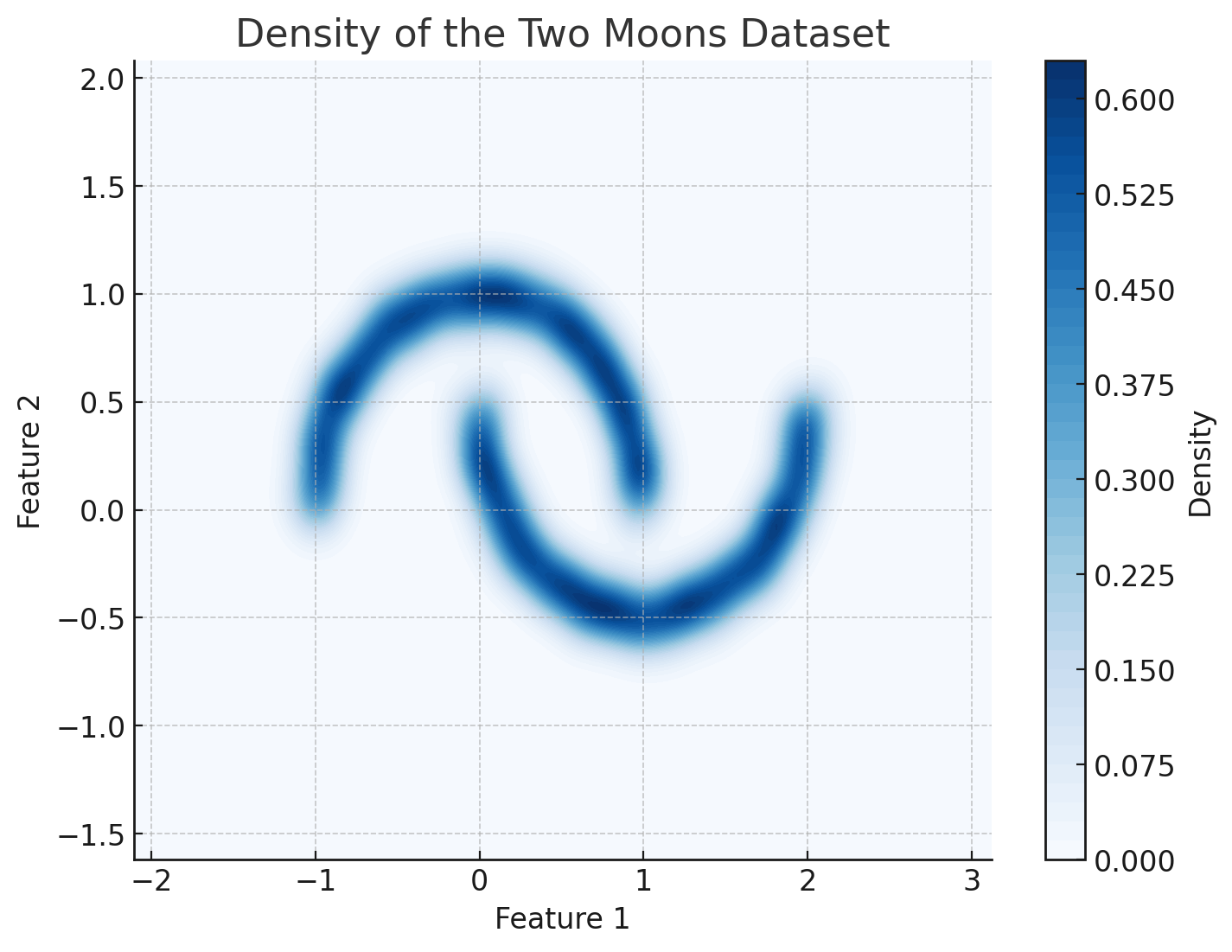

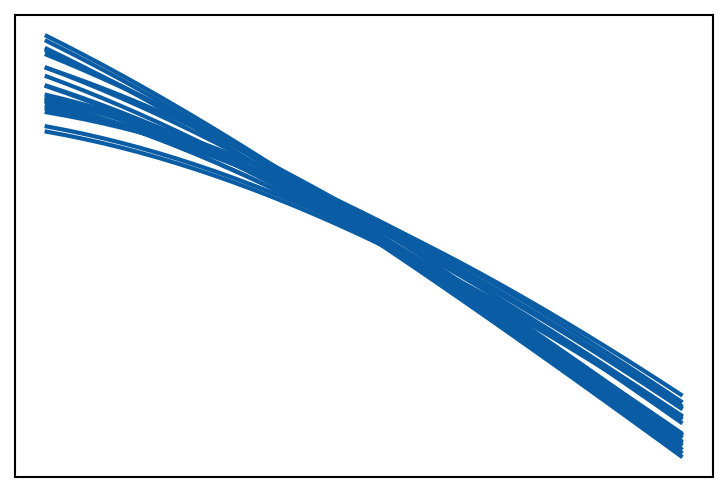

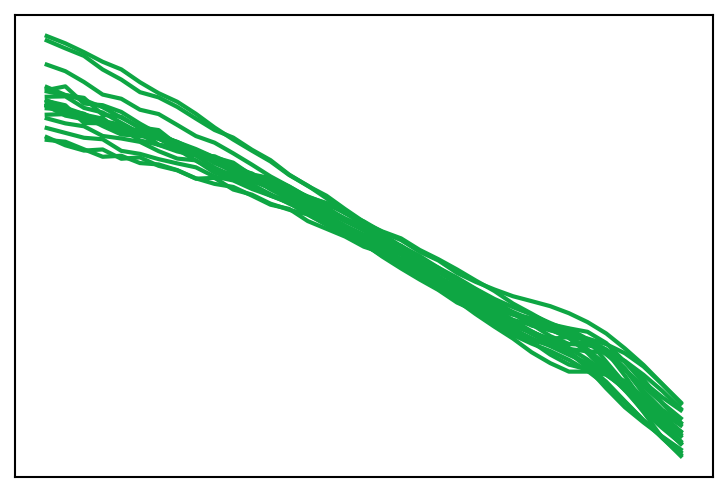

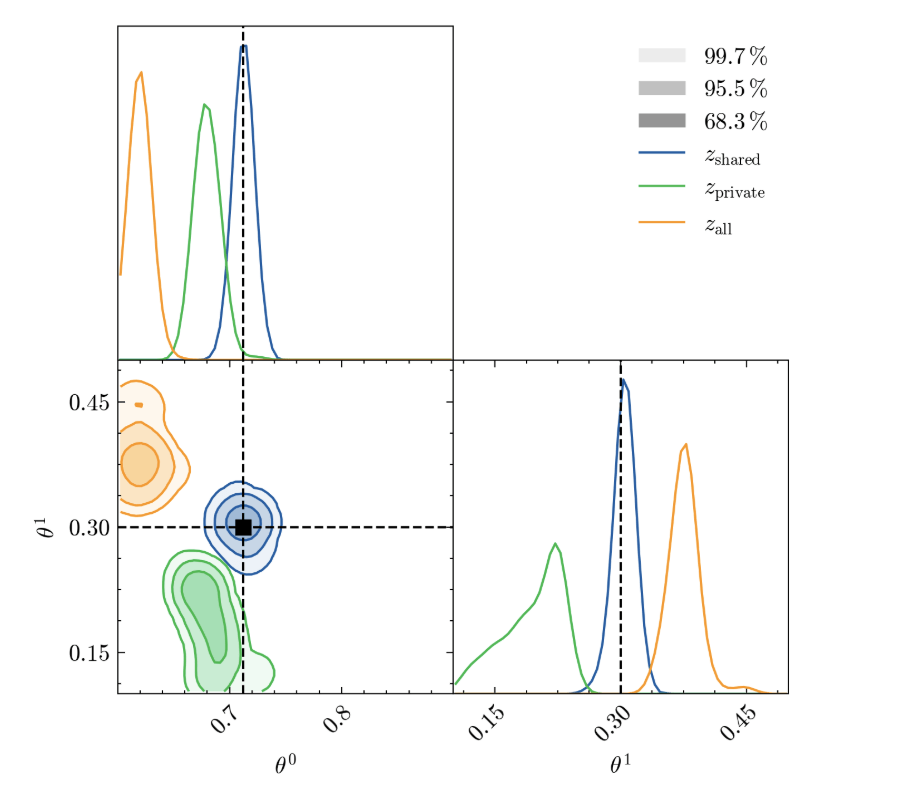

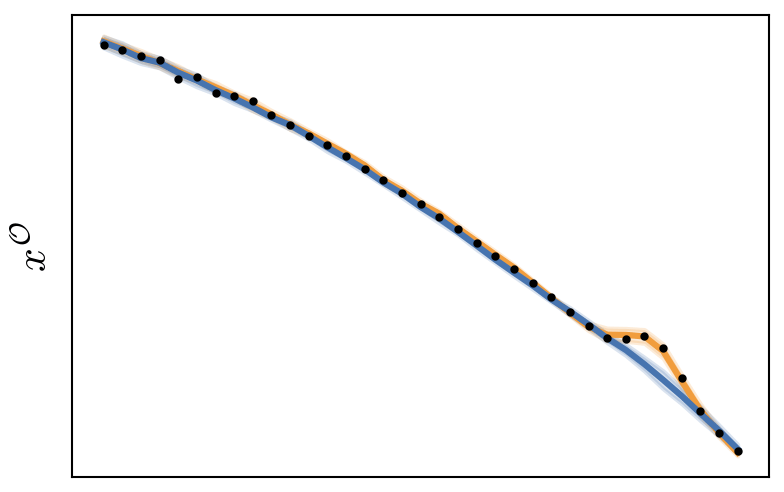

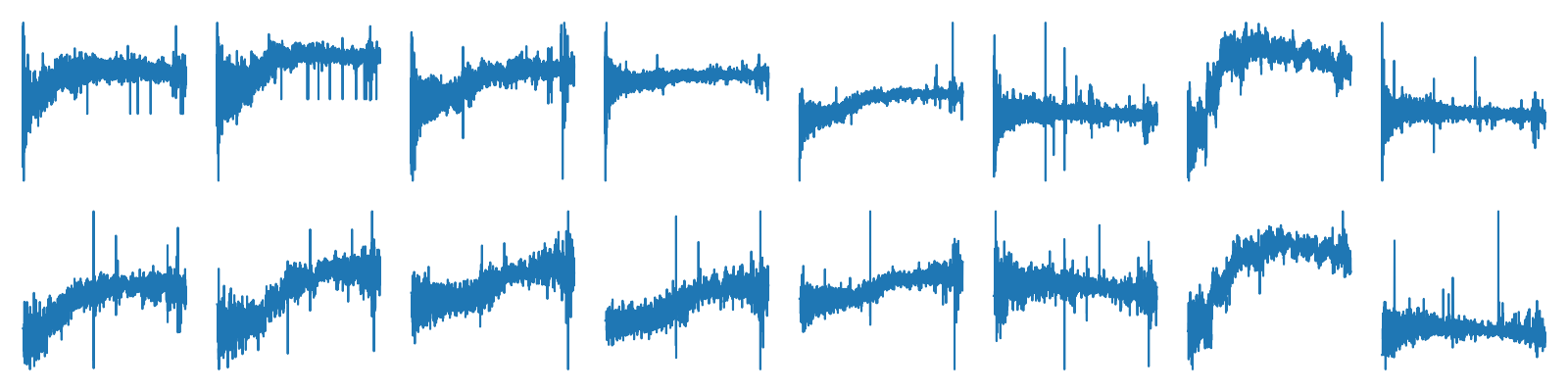

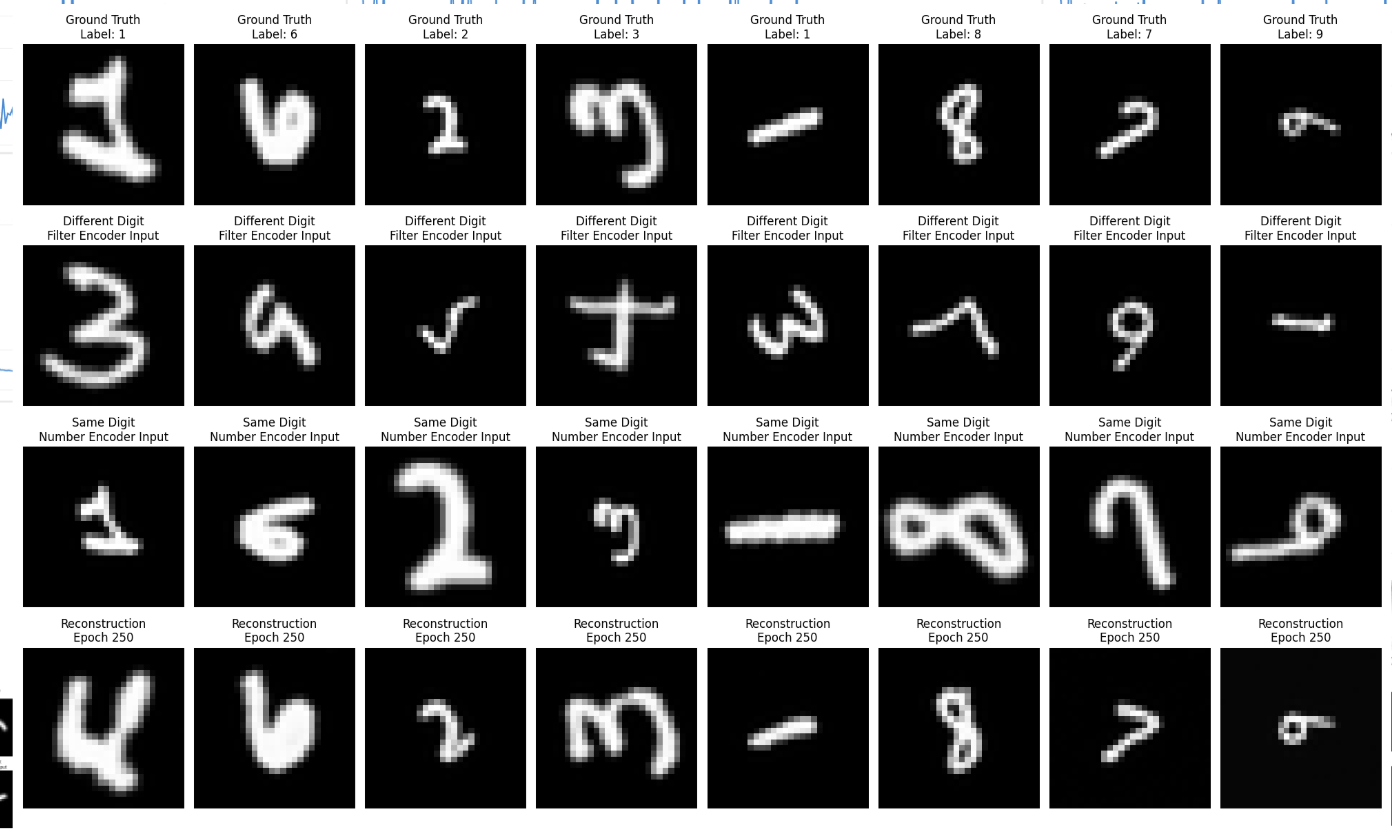

A Toy Model Example

Idealized Simulations

Observations

+ Scale Dependent Noise

+ Bump


Amplitude

Tilt

Tilt

Robust SBI from the shared information

Visualizing Information Split


Anomaly Detection in Astrophysics

arXiv:2503.15312


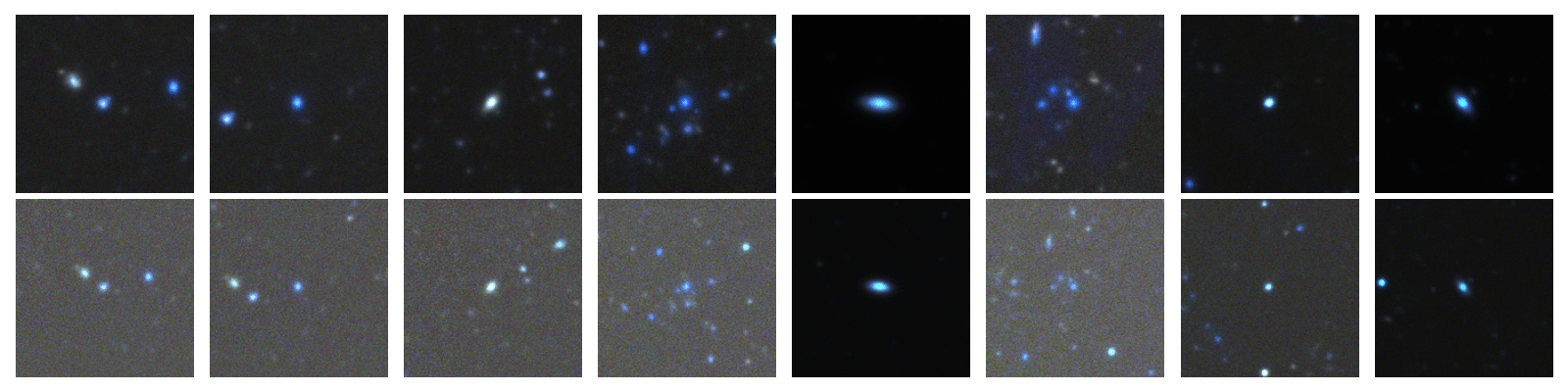

Can we separate Systematics from Physics?

Pablo Mercader

Daniel Muthukrishna

Jeroen Audenaert

Legacy Survey

HSC

DESI

SDSS

Same Object / Different Instrument

Different Object / Same Instrument


Object 1

Object 2

Object 1

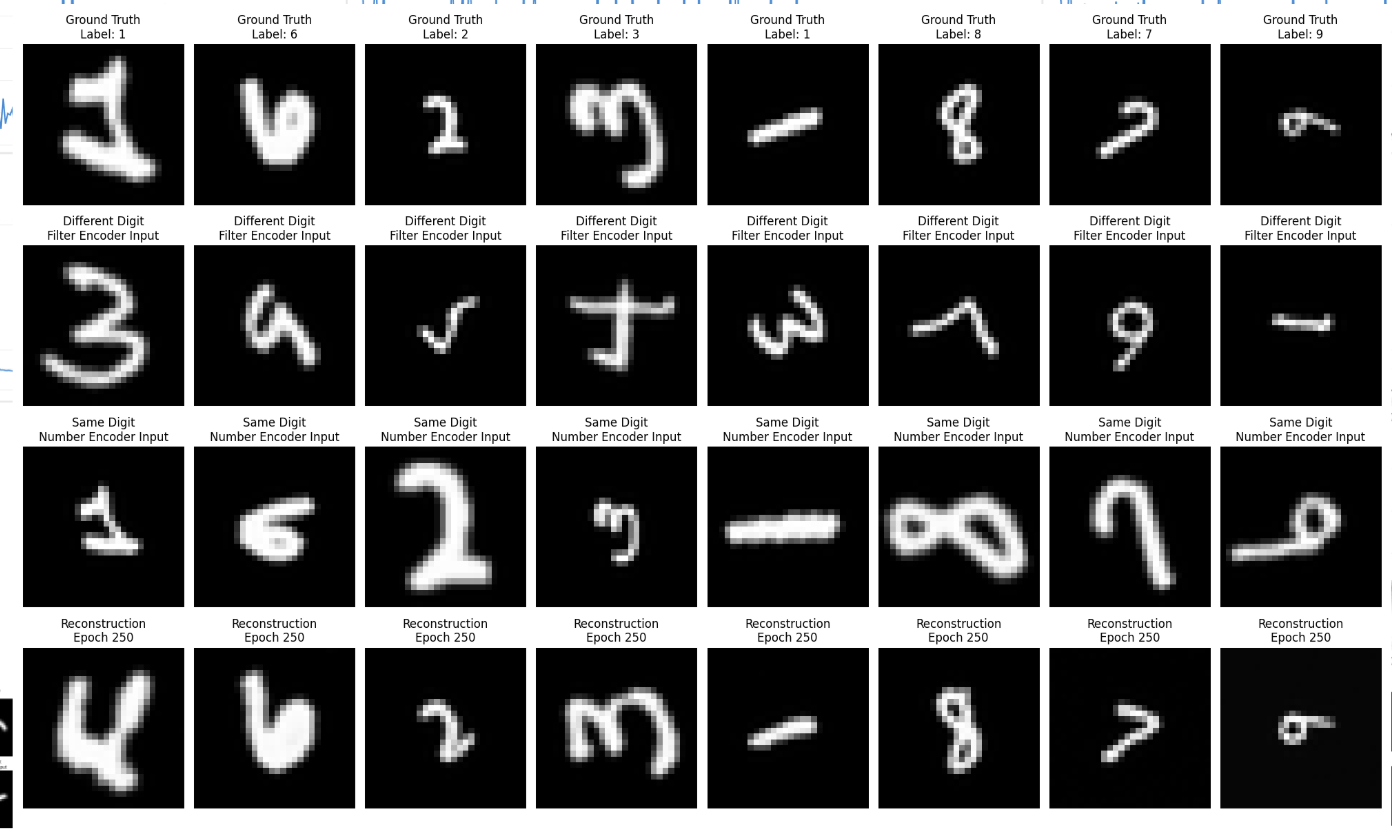

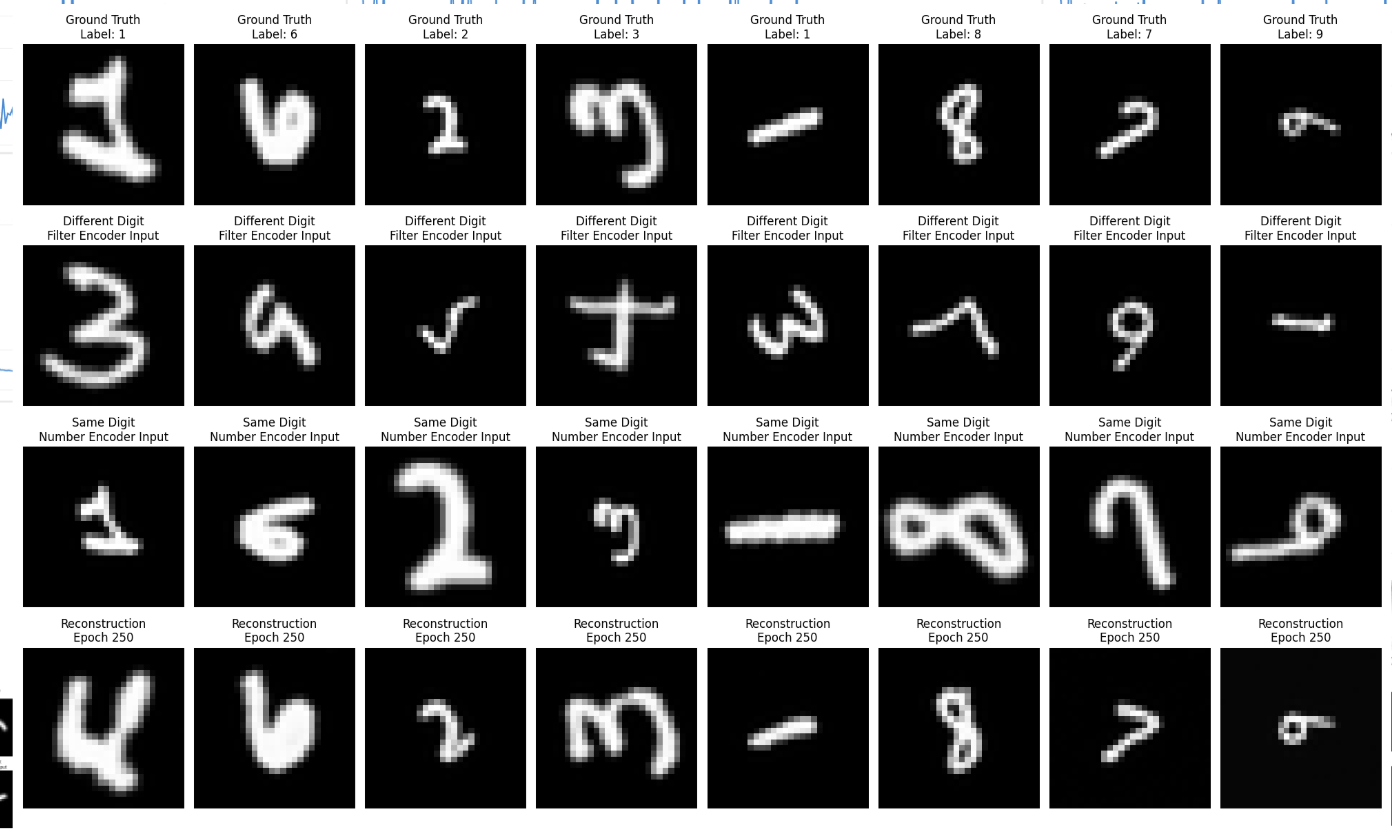

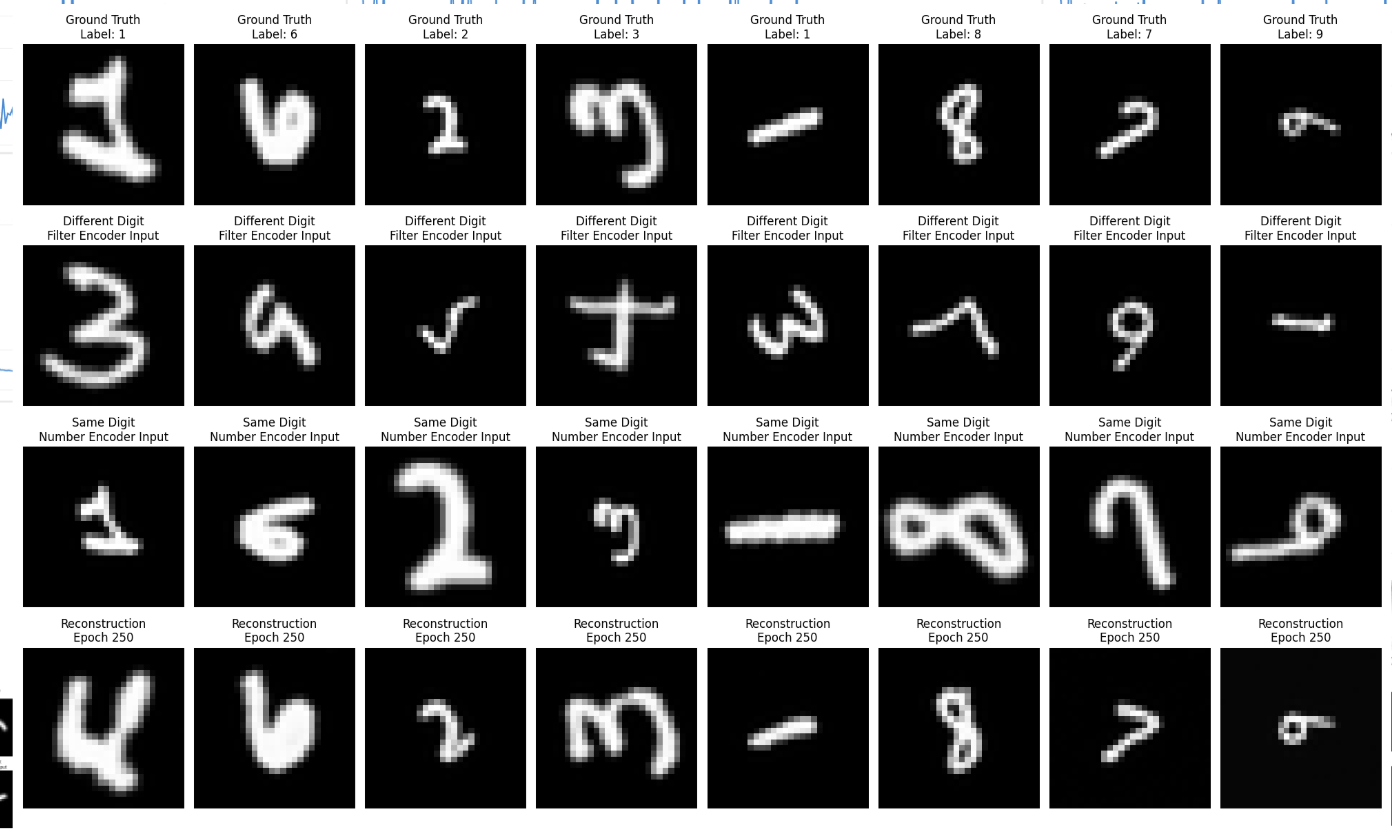

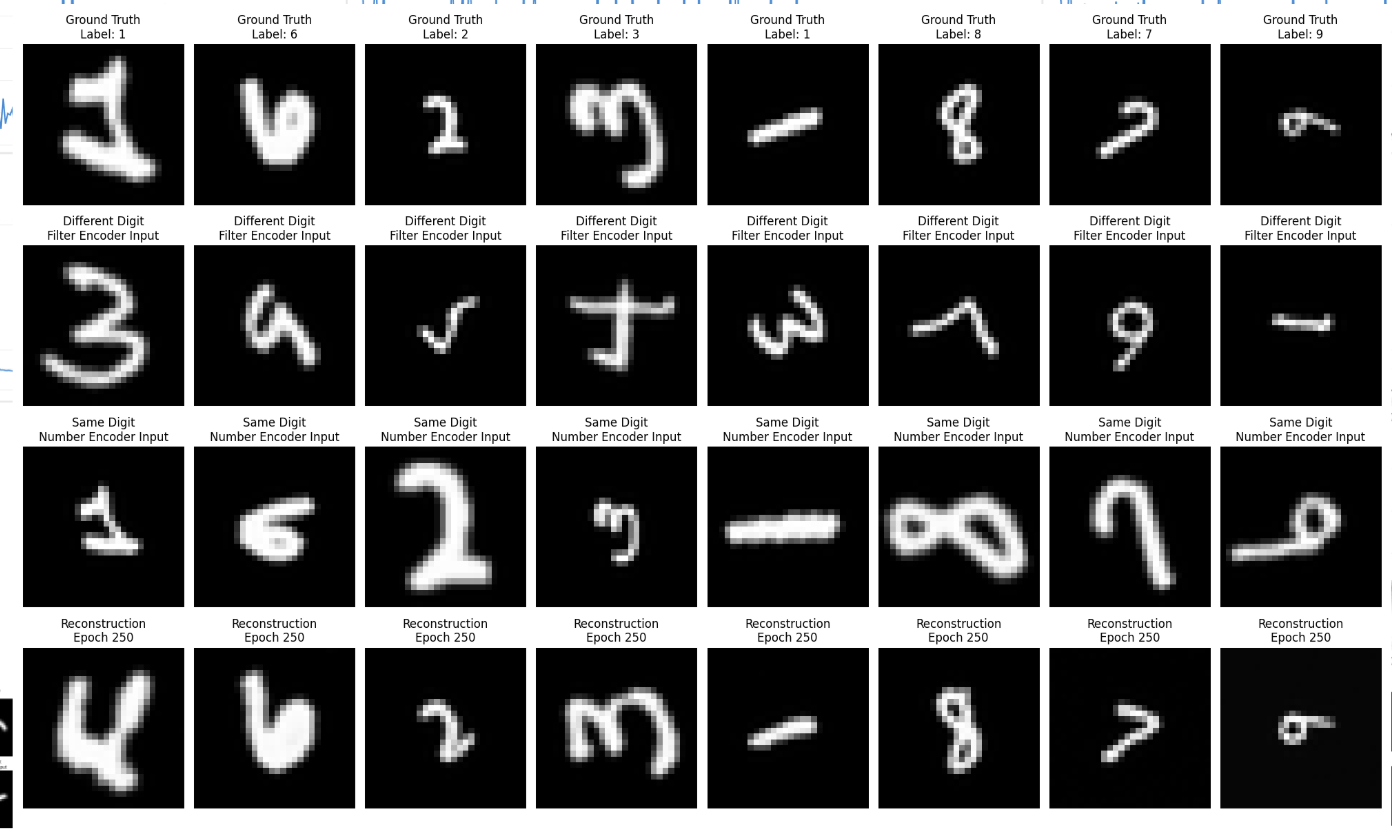

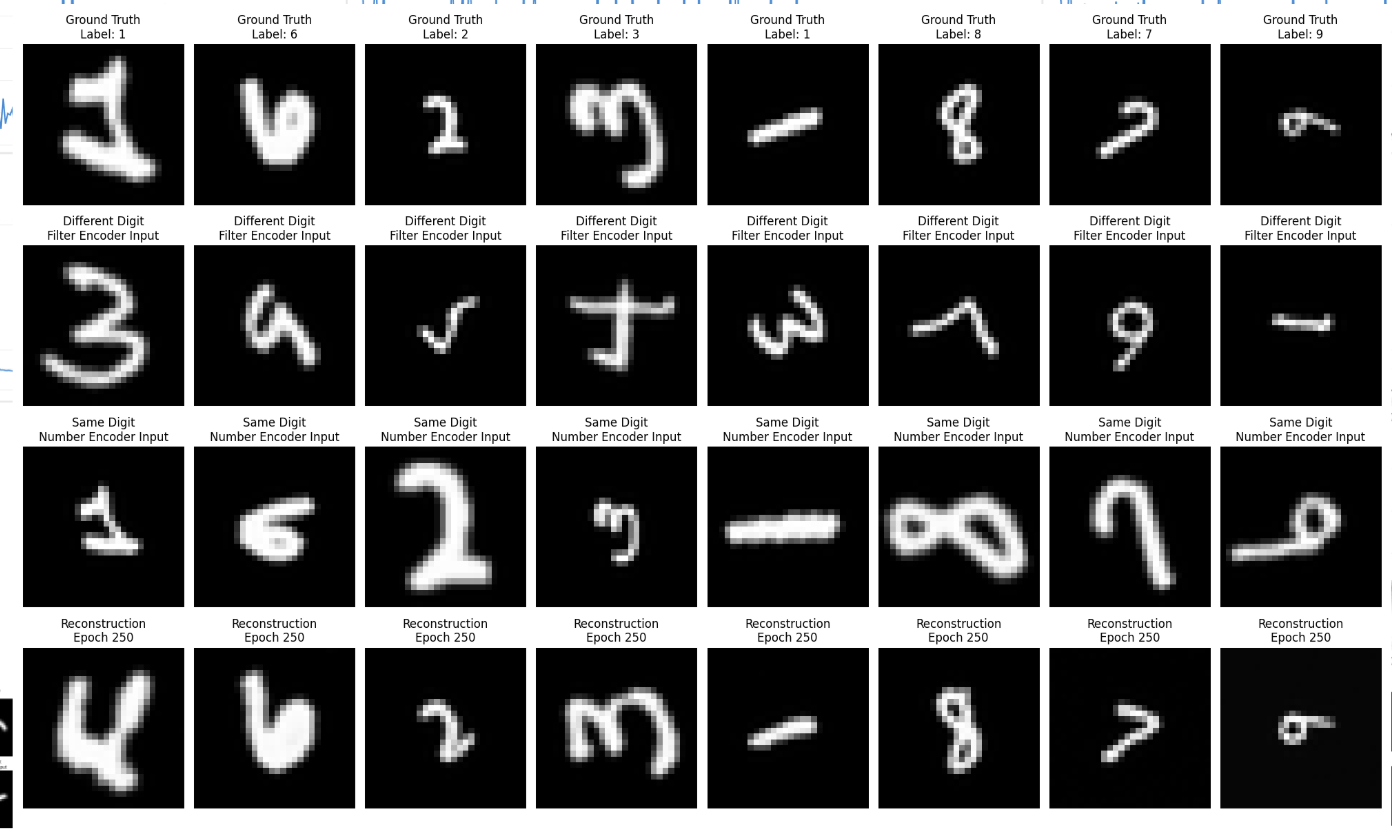

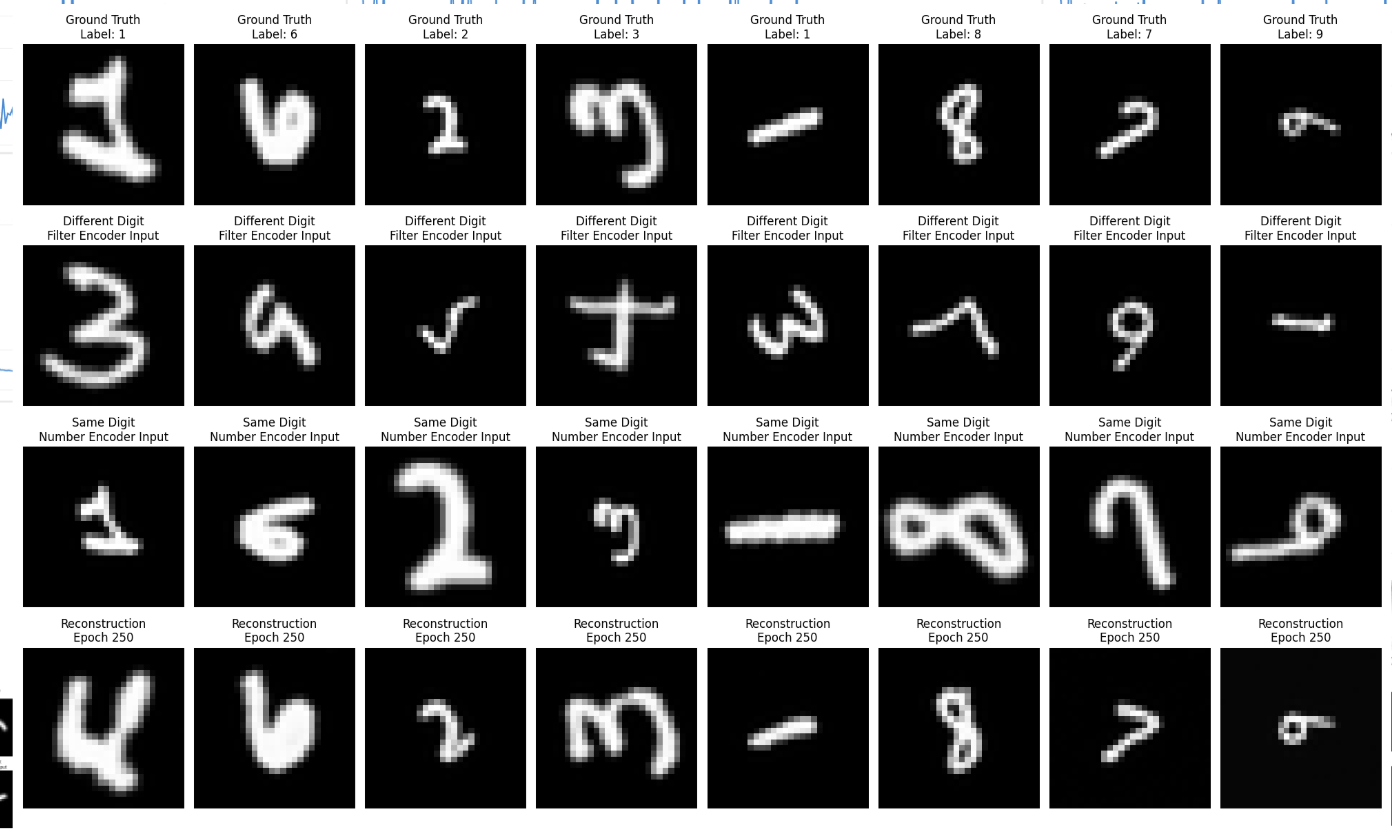

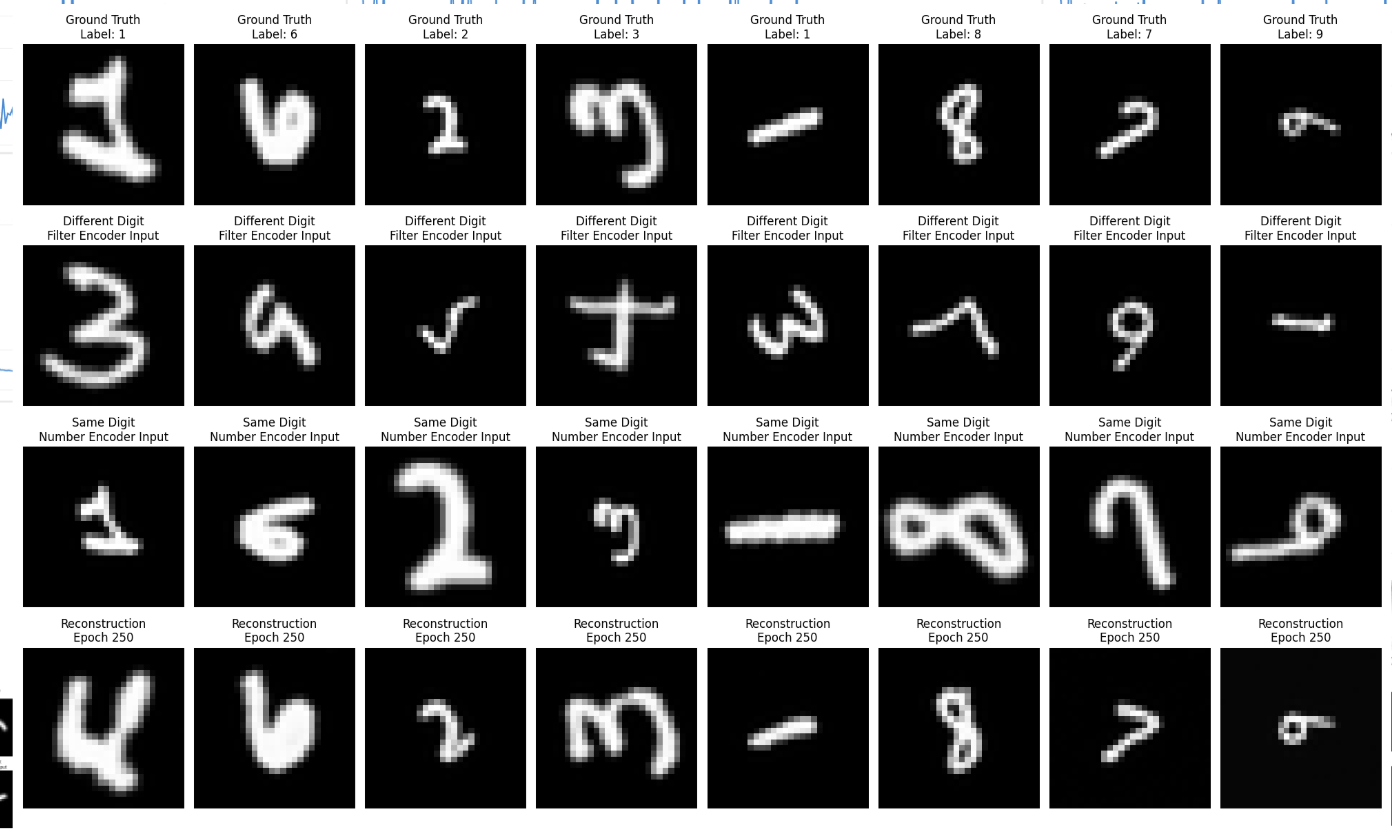

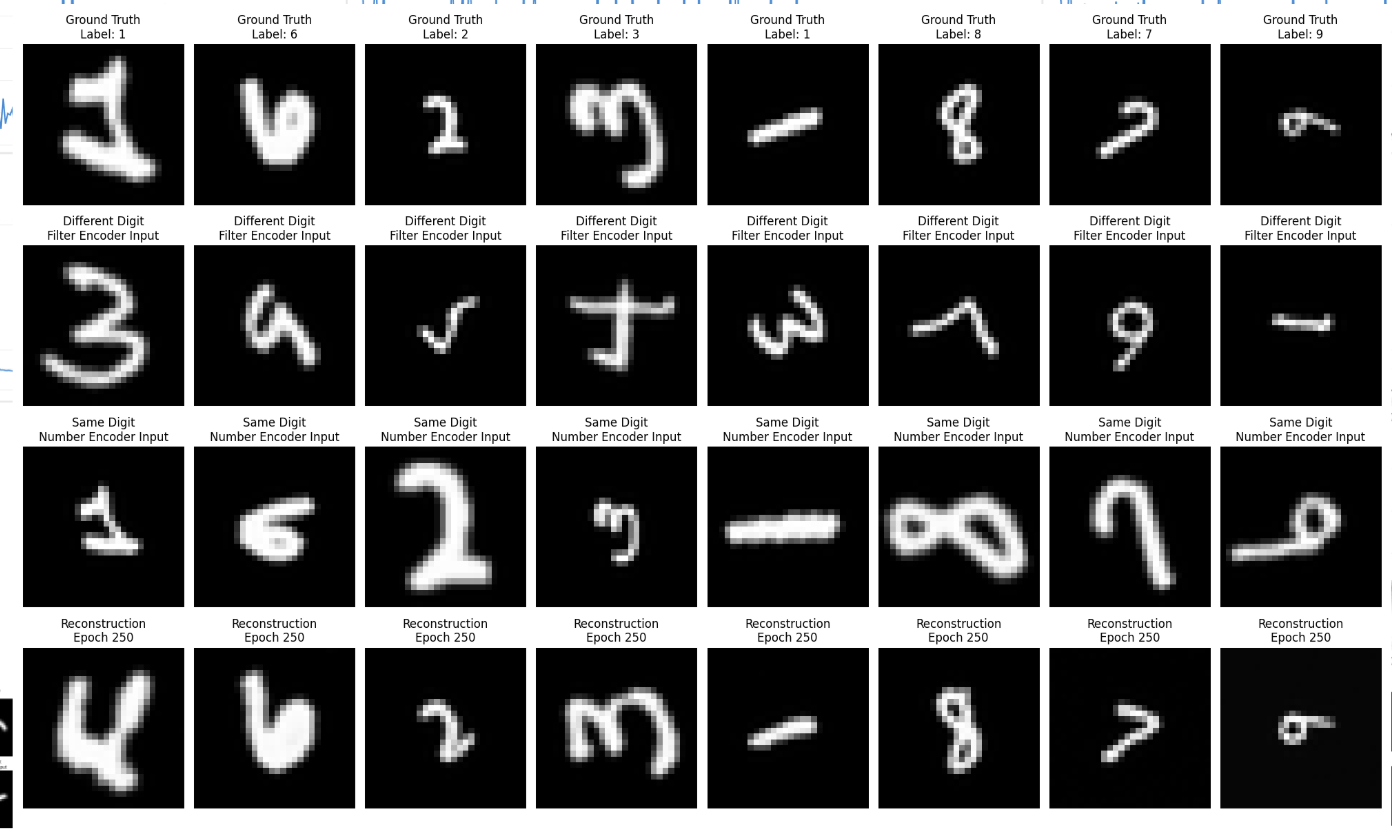

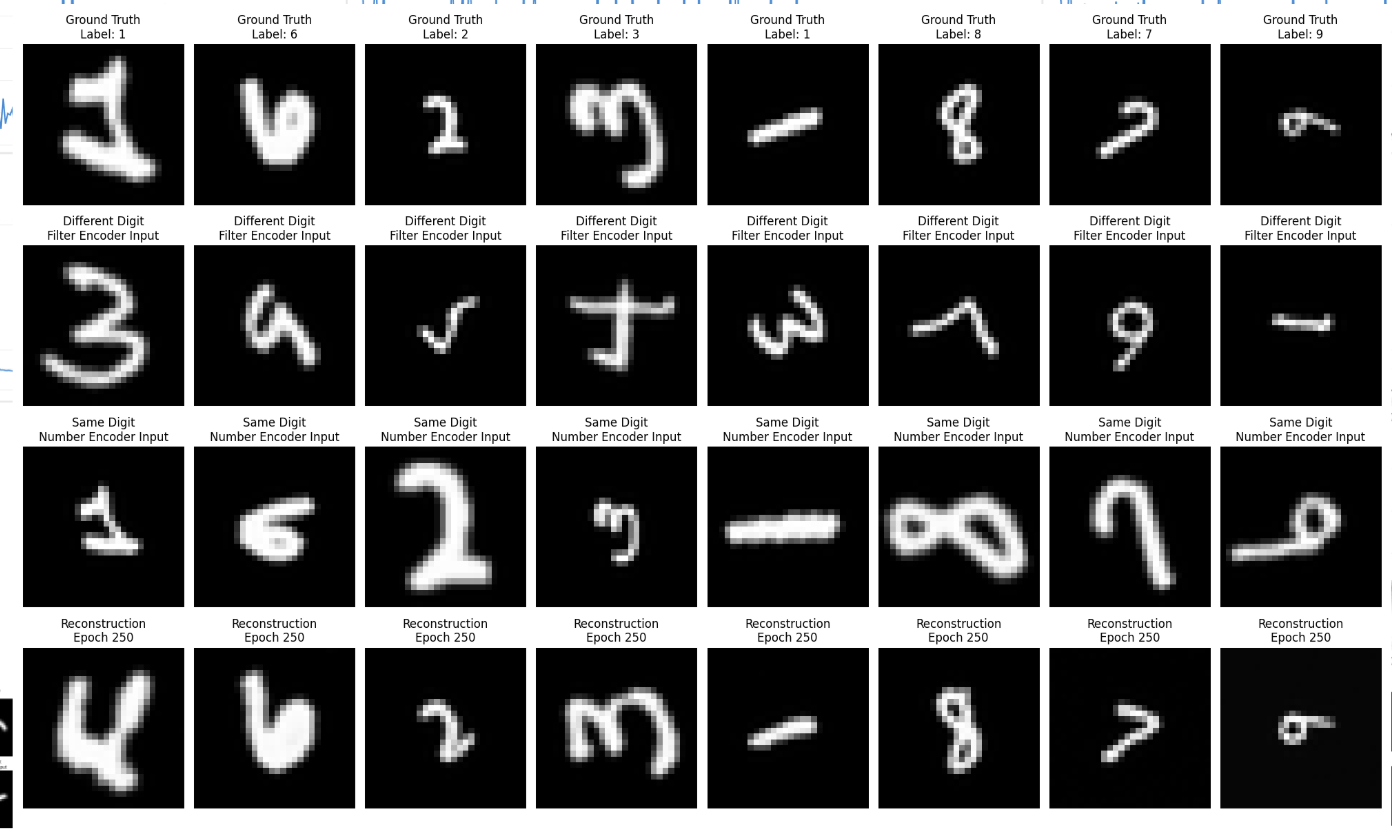

Back to the Playground!

Orientation + Scale

Number

Instrument 1

Instrument 1

Instrument 2

Instrument Encoder

Object Encoder

Instrument Pair

Object Pair

Instrument Pair

Object Pair


Ground Truth

Instrument Pair

Object Pair

Reconstruction

Conclusions

1. Cosmological field-level inference can be made scalable with generative models

Can EFT help us scale in volume?

2. We can scale hydrodynamical simulations in volume for the analysis of LSS surveys

Can we leverage multi-wavelength observations?

Is resolution too low?

3. Playing with the latent space will help us learn robustly

Private-Shared Information Split

Disentangling systematics

Can make simulators more controllable in general!

Observation

Question

Hypothesis

Testable Predictions

Gather data

Alter, Expand, Reject Hypothesis

Develop General Theories

[Figure adapted from ArchonMagnus]

Simulators as theory models

The Scientific Method in 2025

High-dimensional data

["An LLM-driven framework for cosmological model-building and exploration" Mudur, Cuesta-Lazaro, Toomey (in prep)]

Can LLMs turn these anomalies into new hypotheses?

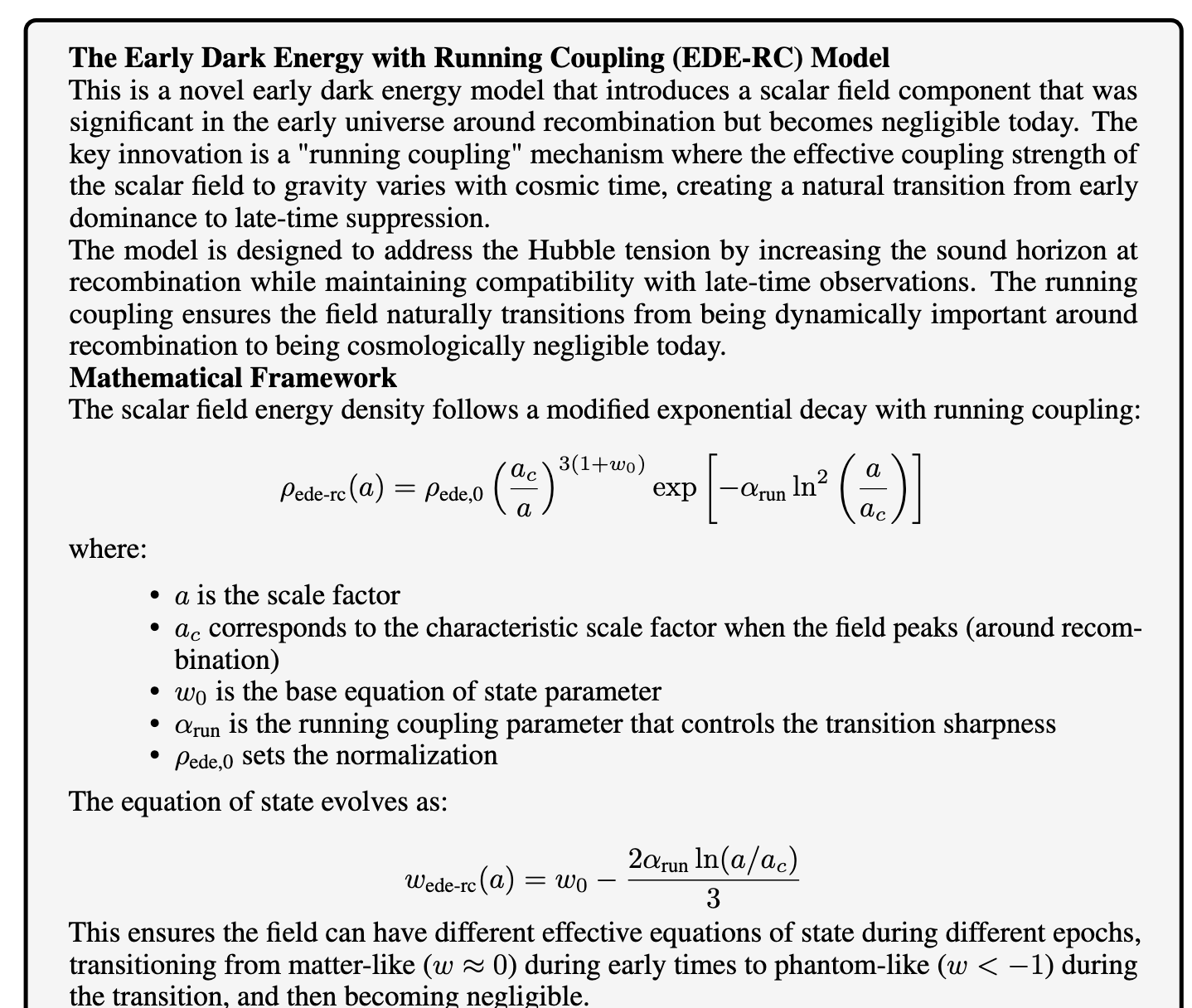

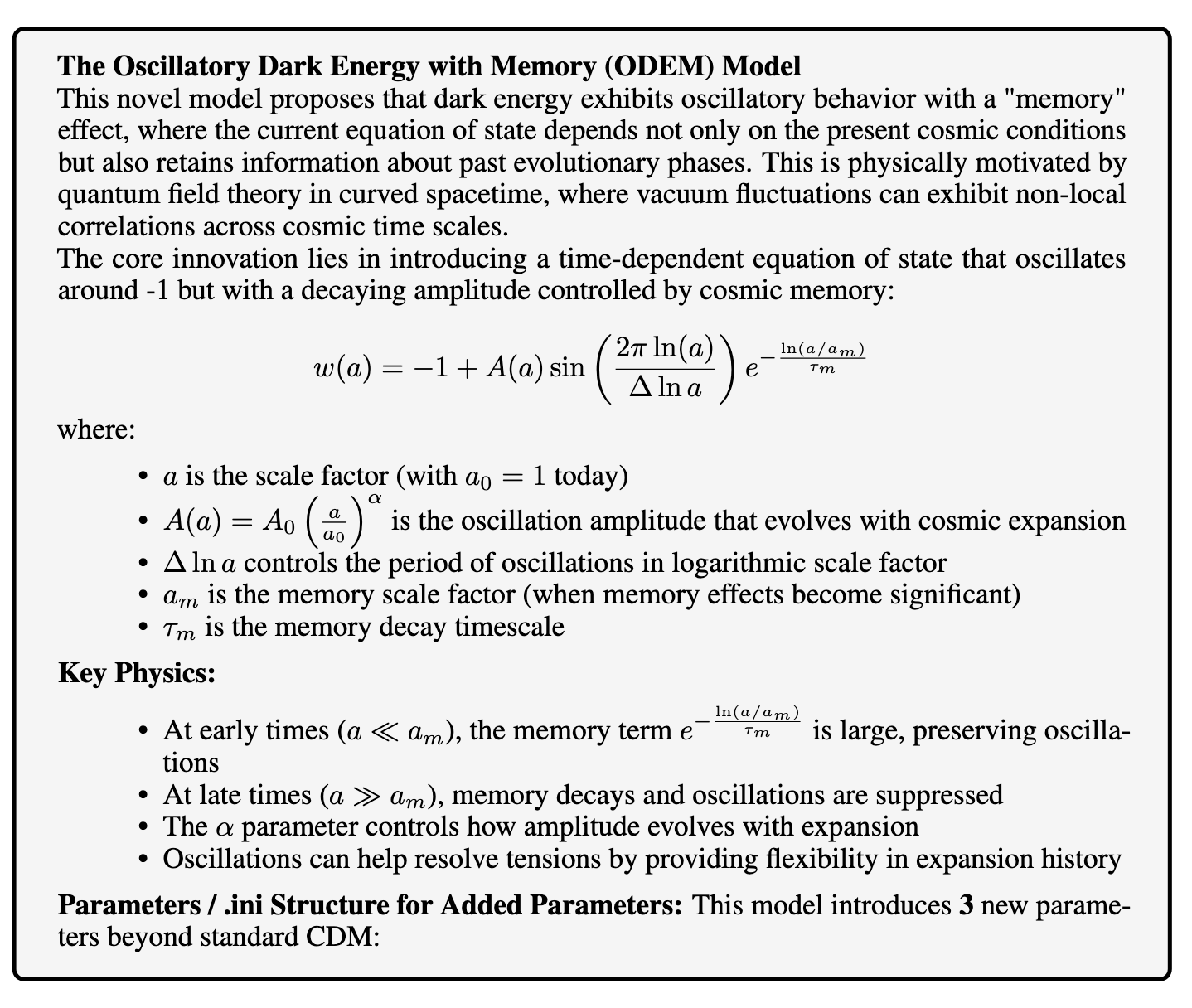

Propose a model for Dark Energy

Implement it in a Cosmology simulation code: CLASS

Test fit to DESI Observations

Iterate to improve fit

Quintessence, DE/DM interactions, ...

Must pass a set of general tests for "reasonable" models

Ideally, compare the evidence to ΛCDM.

For now, use the Bayesian Information Criterion (BIC)
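The BIC trades goodness of fit against model complexity: BIC = k ln(n) - 2 ln(L_max), with k free parameters and n data points, lower being better. For Gaussian likelihoods -2 ln(L_max) = chi2_min + const, so comparing a new dark energy model with ΛCDM reduces to a chi-squared difference plus a parameter penalty. A minimal sketch; the numbers in the example are hypothetical, not DESI results.

```python
import math


def bic(max_log_likelihood, n_params, n_data):
    """Bayesian Information Criterion: k ln(n) - 2 ln(L_max). Lower is better."""
    return n_params * math.log(n_data) - 2.0 * max_log_likelihood


def delta_bic(chi2_model, k_model, chi2_ref, k_ref, n_data):
    """BIC(model) - BIC(reference) for Gaussian likelihoods.

    Uses -2 ln(L_max) = chi2_min + const; the constant cancels when both models
    are fit to the same data. Negative values favour the new model over the
    reference (e.g. LCDM).
    """
    bic_model = chi2_model + k_model * math.log(n_data)
    bic_ref = chi2_ref + k_ref * math.log(n_data)
    return bic_model - bic_ref


# Hypothetical example: two extra parameters buy a chi2 improvement of 10 on 100 points.
example = delta_bic(chi2_model=90.0, k_model=8, chi2_ref=100.0, k_ref=6, n_data=100)
```

In this hypothetical case the two extra parameters cost 2 ln(100) ≈ 9.2, so a chi-squared improvement of 10 only just makes ΔBIC negative.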


Nayantara Mudur (Harvard)


Can LLMs implement new physics models?

Thawing Quintessence

Axion-like Early Dark Energy

Ultra-light scalar field that temporarily acts as dark energy in the early universe

Implementation Challenge:

Dynamic dark energy model: scalar field transitions from "frozen" (cosmological constant-like) to evolving as the universe expands.

Oscillatory behaviour

Can take advantage of existing scalar field implementations in CLASS

+ 43,000 lines of C code

+ 10,000 lines of numerical files

CLASS Challenge:


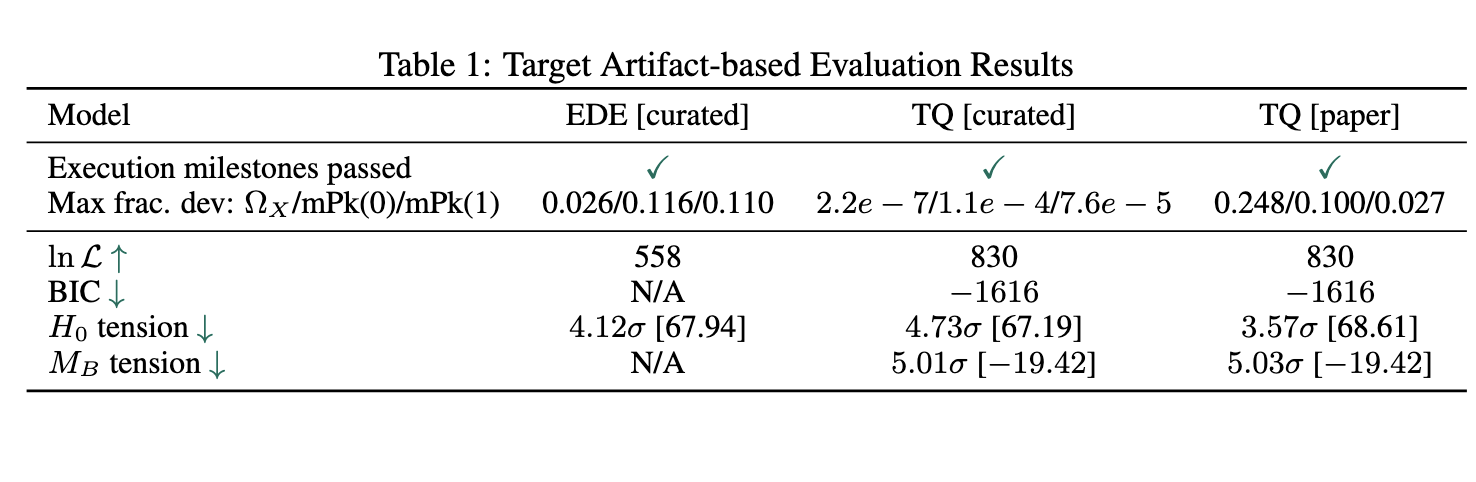

1) Code compiles + obtains reasonable observables

2) Implementation agrees with target repository

3) Goodness of fit for DESI + Supernovae

4) H0 tension metrics

Curated

1-page description of the model to be implemented, CLASS tips + very explicit units

Paper

Directly from a full paper

If it fails, get feedback from another LLM


Propose a Dark Energy Model

Shortcut: field that produces this?


Propose a Dark Energy Model

Asked for physical motivation. It tried :(

Not true, preferred scale


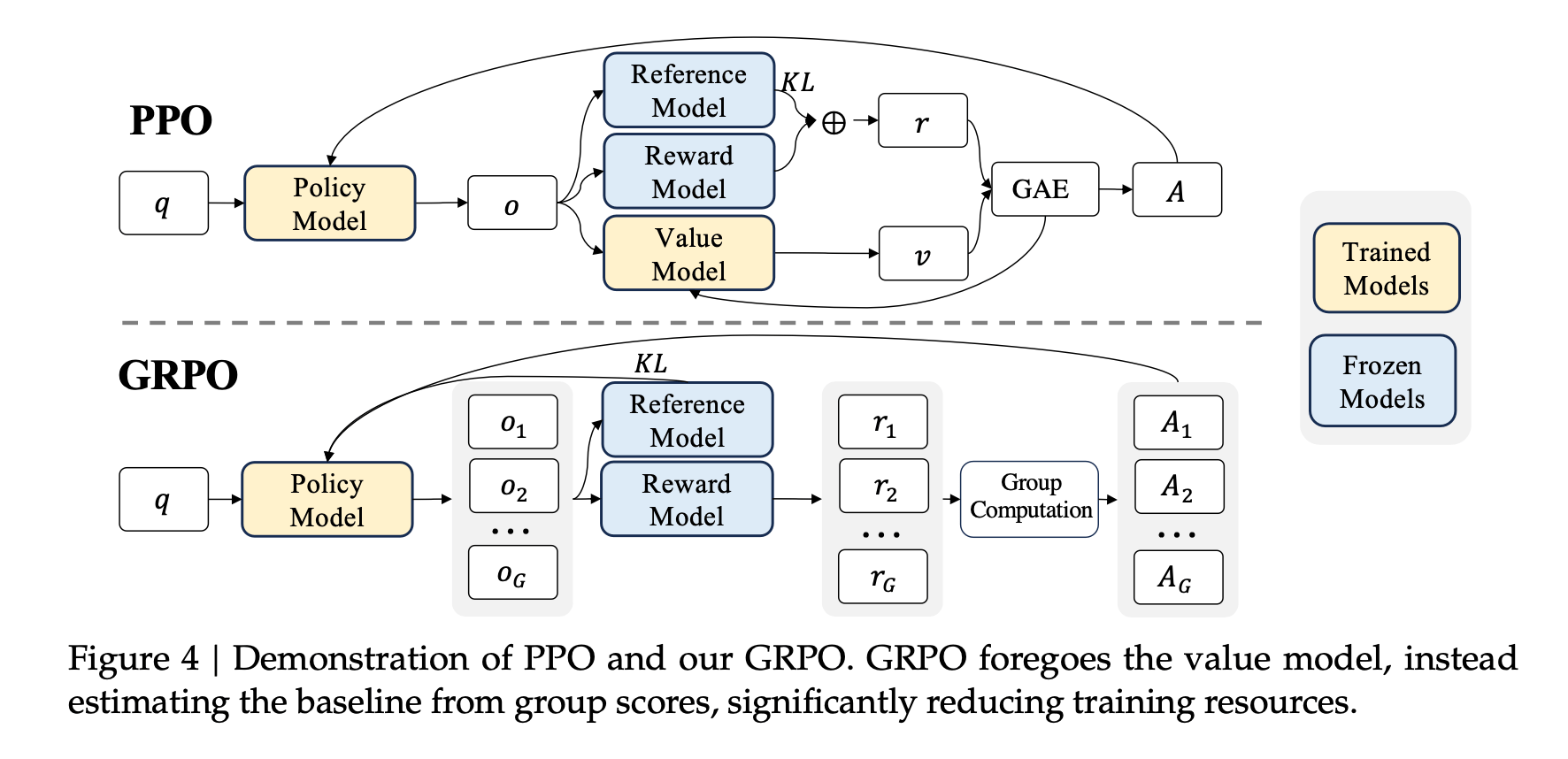

Reinforcement Learning

How to iterate

Update the base model weights to optimize scalar reward(s)

DeepSeek-R1

Base LLM

(being updated)

What rewards are more advantageous?

Base LLM

(frozen)

Develop basic skills: numerics, theoretical physics, UNIT CONVERSION

Community Effort!


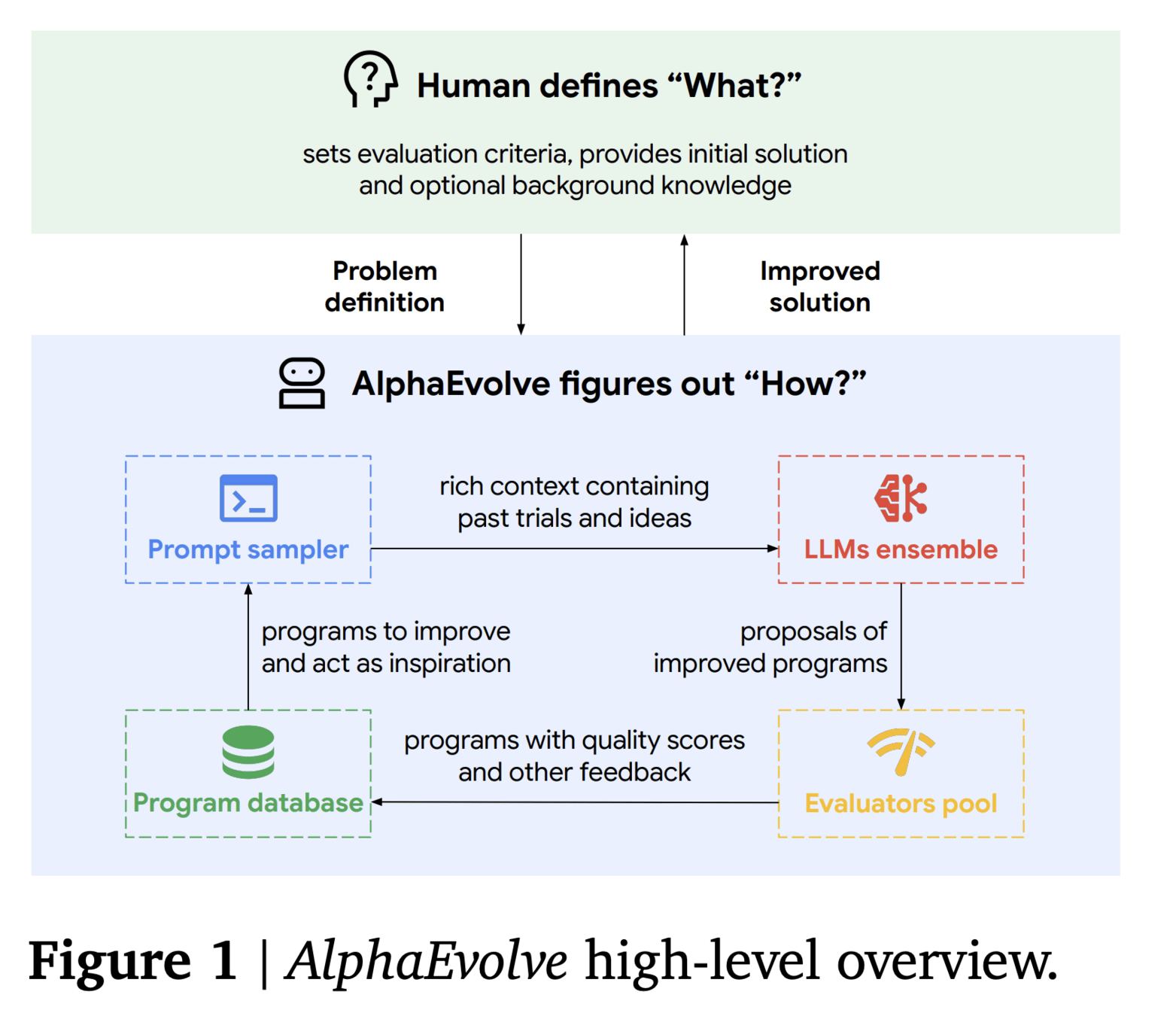

Evolutionary algorithms

Learning in natural language, reflect on traces and results

Examples: EvoPrompt, FunSearch, AlphaEvolve
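Stripped of the language model, the evolutionary loop is: propose variations, score them, keep the best. A toy (mu + lambda) sketch on a single number, assuming a hypothetical fitness function; in EvoPrompt, FunSearch or AlphaEvolve the `mutate` step is an LLM rewriting a prompt or program, and reflection on traces is not modelled here.

```python
import random


def fitness(candidate):
    """Hypothetical score to maximise; in an LLM loop this could be a fit to data."""
    return -(candidate - 3.0) ** 2


def mutate(candidate, rng):
    """Placeholder for the proposal step (an LLM editing a prompt or program)."""
    return candidate + rng.gauss(0.0, 0.5)


def evolve(population, n_generations, n_keep, rng):
    """Elitist (mu + lambda) evolution: parents and children compete for survival."""
    for _ in range(n_generations):
        children = [mutate(parent, rng) for parent in population]
        population = sorted(population + children, key=fitness, reverse=True)[:n_keep]
    return population


rng = random.Random(5)
initial = [rng.uniform(-10.0, 10.0) for _ in range(8)]
best = evolve(initial, n_generations=50, n_keep=8, rng=rng)[0]
```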

How to iterate


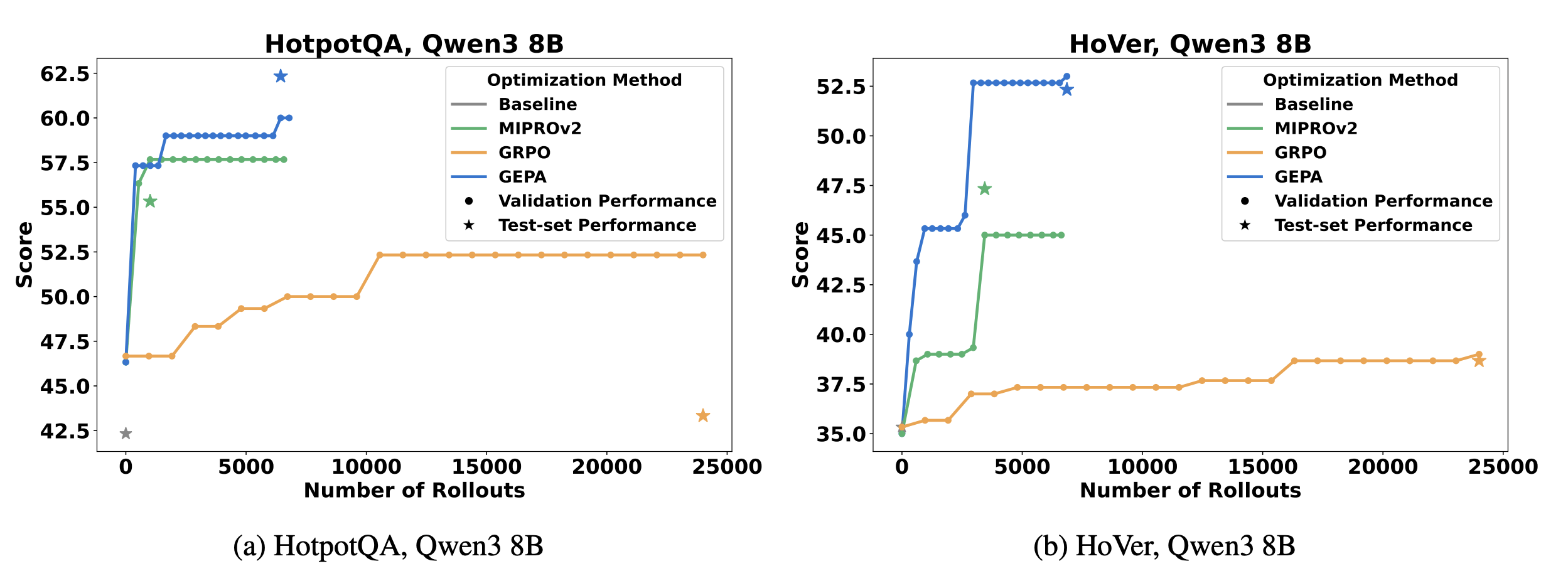

["GEPA: Reflective prompt evolution can outperform reinforcement learning" Agrawal et al]

GEPA: Evolutionary

GRPO: RL

+10% improvement over RL with 35× fewer rollouts
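GRPO avoids a learned value function by scoring each sampled response relative to the other responses drawn for the same prompt: the advantage is the reward standardized within the group. A minimal sketch of that advantage computation only (no policy update); the use of the population standard deviation and the small epsilon are assumptions, and implementations differ on such details.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-8):
    """GRPO-style advantages: standardise rewards within a group of samples
    drawn for the same prompt, so no critic / value network is needed."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical binary rewards for 4 attempts at one task
# (e.g. 1 if the generated code compiled and produced reasonable observables).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Successful attempts get positive advantages and failed ones negative; if every attempt gets the same reward, all advantages are zero and the group provides no learning signal.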

Scientific reasoning with LLMs still in its infancy!


Observation

Question

Hypothesis

Testable Predictions

Gather data

Alter, Expand, Reject Hypothesis

Develop General Theories

[Figure adapted from ArchonMagnus]