Simulation-based inference

= Likelihood-free inference, implicit inference

Why?

- Likelihoods are rarely gaussian, specially true for non-two point functions

- Large parameter spaces -> MCMC hugely expensive

Density estimation

How?

\theta

Simulated Data

Data

Prior

Posterior

x_\mathrm{obs}

x

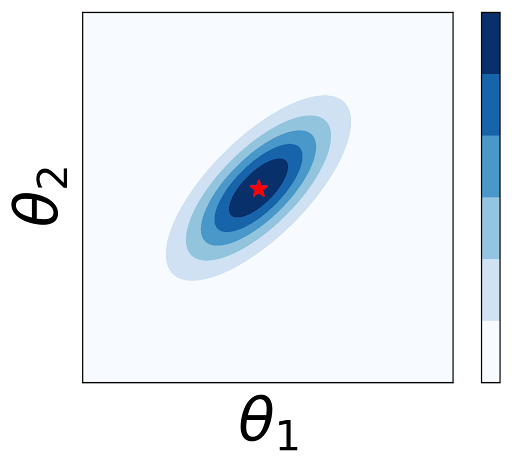

P(\theta|x_\mathrm{obs})

Forwards

Inverse

Inference = Optimisation

\mathcal{L}(\mathcal{D})

\mathcal{L}(\mathcal{D}|\theta)

\mathcal{P}(\theta|\mathcal{D})

MCMC or another density estimator

Can also estimate posterior directly!

Density estimator

x = \green{f_\phi}(z)

\mathcal{L}(\mathcal{D}) = - \frac{1}{\vert\mathcal{D}\vert}\sum_{\mathbf{x} \in \mathcal{D}} \log p(\mathbf{x})

Maximize the data likelihood

NeuralNet

Normalising flows

p(\mathbf{x}) = p_z(\mathbf{z}) \left\vert \det \dfrac{d \mathbf{z}}{d \mathbf{x}} \right\vert

= p_z(f^{-1}(\mathbf{x})) \left\vert \det \dfrac{d f^{-1}}{d \mathbf{x}} \right\vert

f must be invertible

J efficient to compute

1-D

n-D

p(\mathbf{x}) = p_z(f^{-1}(\mathbf{x})) \left\vert \det J(f^{-1}) \right\vert

x = f(z), \, z = f^{-1}(x)

p(\mathbf{x}) = p_z(\mathbf{z}) \left\vert \det \dfrac{d \mathbf{z}}{d \mathbf{x}} \right\vert

p(\mathbf{x}|\theta) = p_z(f^{-1}(\mathbf{x}|\theta)) \left\vert \det J(f^{-1}|\theta) \right\vert

Sequentially improve

Sample from

p(\mathcal{G}|\mathcal{C}, x_\mathrm{obs})

Refine accuracy on HOD parameters close to the data

Issue -> we estimate

p(\mathcal{G},\mathcal{C}| x_\mathrm{obs})

Targeting the posterior or the likelihood?

Pros posterior

- Fast sampling (no need MCMC)

Middle ground: Likelihood + Variational Inference

Pros likelihood

- combine multiple observations

- use without retraining if the prior is changed

- easier to adapt to sequential models

Implicit Likelihood vs Mean emulators

- No likelihood assumption -> Requires simulations with different seeds

- Assume Gaussian likelihood and estimate the covariance matrix from a different set of simulations

- Learn mean relation -> fixed seed simulations useful

- Learn likelihood or posterior directly

S(X|\theta)

P(\theta|S(X))

Hybrid for Abacus Summit

\{\mathcal{C}, \mathcal{G} \}

f_\phi

S

\mathcal{L}(S|\mathcal{C}, \mathcal{D})

Gaussian or estimated from fixed cosmology

Density estimator / Variational Inference

p(\mathcal{C}, \mathcal{D}|S)

f^\prime_\phi

Loss

Loss

Loss (MSE)