Why are chatbots so bad?

Demystifying Natural Language Processing Used in Chatbots

Grab the slides: slides.com/cheukting_ho/why-chatbots-are-so-bad

How does a chatbot understand you?

Intents

Chatbots categorize a user message (utterance) into intents to guess what action they should take to handle that message.

Entities

Information that a chatbot extracts from your message.

For example

message: "Hi I am Cheuk"

Intent: "Greeting"

Entities: {"Name": "Cheuk"}

message: "Book a flight to Hong Kong on 8th Dec 2020"

Intent: "Flight booking"

Entities: {"Destination": "Hong Kong", "Date": "2020-12-08"}

Popular NLP models used in NLU

Understanding Intents

Categorizing sentences

➡️ vectorizing words and sentences

➡️ finding similarities in vector spaces
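To make the "vectorize, then compare" idea concrete, here is a minimal sketch in plain Python. It assumes a tiny, hypothetical set of labelled example utterances, uses bag-of-words counts as the vectors and cosine similarity as the similarity measure, and assigns the intent of the nearest example. Real NLU libraries use learned embeddings and trained classifiers instead.

```python
import math
from collections import Counter

# Hypothetical labelled utterances (illustrative only, not real training data).
EXAMPLES = [
    ("hi there", "Greeting"),
    ("hello I am new here", "Greeting"),
    ("book a flight to paris", "Flight booking"),
    ("I want to fly to tokyo tomorrow", "Flight booking"),
]

def vectorize(sentence):
    """Turn a sentence into a sparse bag-of-words vector (word -> count)."""
    return Counter(sentence.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def classify(message):
    """Return (intent, score) of the most similar labelled example."""
    query = vectorize(message)
    best_intent, best_score = None, 0.0
    for text, intent in EXAMPLES:
        score = cosine(query, vectorize(text))
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent, best_score

print(classify("Hi I am Cheuk")[0])  # Greeting
print(classify("Book a flight to Hong Kong on 8th Dec 2020")[0])  # Flight booking
```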

Extracting Entities

Named entity recognition (NER):

- Hand-written rules

- Statistical models + supervised ML

- Unsupervised ML

- Semi-supervised ML
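The first approach, hand-written rules, can be sketched with regular expressions. The patterns below (a name after "I am", capitalized words after "to", a day-month-year date) are hypothetical rules written for the two example messages above, not a general-purpose NER system:

```python
import re

MONTHS = {"jan": 1, "feb": 2, "mar": 3, "apr": 4, "may": 5, "jun": 6,
          "jul": 7, "aug": 8, "sep": 9, "oct": 10, "nov": 11, "dec": 12}

# Hypothetical hand-written rules (illustrative assumptions only).
NAME_RE = re.compile(r"\bI am\s+([A-Z][a-z]+)")          # "I am Cheuk"
DEST_RE = re.compile(r"\bto\s+((?:[A-Z][a-z]+\s?)+)")    # "to Hong Kong"
DATE_RE = re.compile(r"\b(\d{1,2})(?:st|nd|rd|th)?\s+([A-Za-z]{3})[a-z]*\s+(\d{4})\b")

def extract_entities(message):
    """Apply each rule in turn and collect whatever matches."""
    entities = {}
    name = NAME_RE.search(message)
    if name:
        entities["Name"] = name.group(1)
    dest = DEST_RE.search(message)
    if dest:
        entities["Destination"] = dest.group(1).strip()
    date = DATE_RE.search(message)
    if date and date.group(2).lower() in MONTHS:
        day, year = int(date.group(1)), int(date.group(3))
        month = MONTHS[date.group(2).lower()]
        entities["Date"] = f"{year:04d}-{month:02d}-{day:02d}"
    return entities

print(extract_entities("Hi I am Cheuk"))
print(extract_entities("Book a flight to Hong Kong on 8th Dec 2020"))
```

Rules like these are precise on the messages they were written for, but break as soon as users phrase things differently, which is one reason statistical and ML approaches are used.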

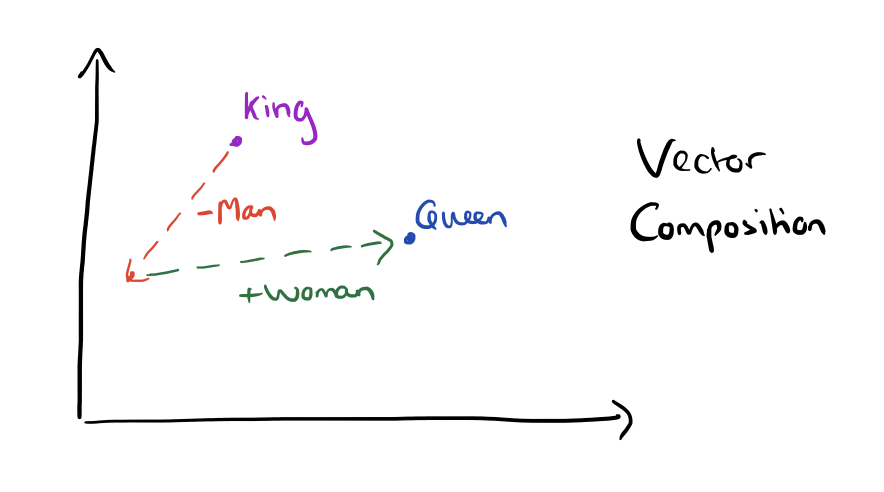

Vectorizing (Word Embedding)

- Words or phrases from the vocabulary are represented as vectors in a vector space.

- In this vector space, words with similar meanings are close to each other, and calculations like

“king” – “man” + “woman” ≈ “queen”

hold approximately.

- Models are used to learn the mapping into this vector space.

- The vectors capture the contexts in which a word appears.
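The analogy arithmetic can be illustrated with toy vectors. The 3-dimensional values below are made up for illustration (the dimensions loosely stand for royalty, maleness and femaleness); real embeddings are learned and have hundreds of dimensions. The answer is taken as the nearest word by cosine similarity, excluding the three query words, which is how such analogies are usually evaluated.

```python
import math

# Hypothetical hand-made "embeddings": [royalty, maleness, femaleness].
VECTORS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.3, 0.3],
}

def cosine(a, b):
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def analogy(a, b, c):
    """Solve a - b + c = ? by nearest neighbour, excluding the query words."""
    target = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(target, VECTORS[w]))

print(analogy("king", "man", "woman"))  # queen
```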

Bag-of-words (n-gram):

counts the number of occurrences of each word (or n-gram)

disregards grammar and, for single words, even word order
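A short sketch of bag-of-words and n-gram counting (whitespace tokenization is a simplifying assumption). The unigram bags of "dog bites man" and "man bites dog" are identical, while their bigrams differ:

```python
from collections import Counter

def ngrams(sentence, n):
    """Count the n-grams (tuples of n consecutive words) in a sentence."""
    words = sentence.lower().split()
    return Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))

a, b = "dog bites man", "man bites dog"
print(ngrams(a, 1) == ngrams(b, 1))  # True: same bag of words, order ignored
print(ngrams(a, 2) == ngrams(b, 2))  # False: bigrams keep some local order
```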

Word2Vec:

pre-trained two-layer (shallow) neural networks

large corpus and high-dimensional vectors
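Word2Vec's skip-gram variant learns its vectors by training the network to predict the words around each centre word. The sketch below only generates those (centre, context) training pairs with a sliding window; the window size is an arbitrary choice and the network training itself is omitted.

```python
def skipgram_pairs(tokens, window=2):
    """Generate (centre, context) pairs used to train a skip-gram model."""
    pairs = []
    for i, centre in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # every nearby word except the centre word itself
                pairs.append((centre, tokens[j]))
    return pairs

print(skipgram_pairs("book a flight to hong kong".split(), window=1))
```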

GloVe:

unsupervised learning algorithm

aggregated global word-word co-occurrence statistics
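GloVe starts from a word-word co-occurrence matrix X built over the whole corpus, then fits vectors so that the dot product of two word vectors (plus bias terms) approximates log X. Below is a minimal sketch of building X, following GloVe's choice of weighting a context word d positions away by 1/d; the corpus and window size are hypothetical.

```python
from collections import defaultdict

def cooccurrence(corpus, window=2):
    """Accumulate symmetric co-occurrence counts X[(word, context)].

    As in GloVe, a context word d positions away adds 1/d rather than 1.
    """
    counts = defaultdict(float)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    counts[(word, tokens[j])] += 1.0 / abs(i - j)
    return counts

X = cooccurrence(["ice is cold", "steam is hot", "ice is solid"])
print(X[("ice", "is")], X[("ice", "cold")])  # 2.0 0.5
```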

Supervised learning approaches

- Patterns over part-of-speech tags (e.g. NLTK and spaCy)

- spaCy can create a knowledge base for entity linking

- A large corpus of tagged data is used to train machine learning models

- Examples: Hidden Markov Models (HMMs), decision trees, support vector machines (SVMs), and conditional random fields (CRFs)
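Pattern-based extraction over part-of-speech tags can be sketched as grouping consecutive proper-noun (NNP/NNPS) tokens. The tags below are written by hand using Penn Treebank labels as an assumption; in practice a tagger such as the ones in NLTK or spaCy would supply them.

```python
def chunk_proper_nouns(tagged):
    """Group consecutive NNP/NNPS tokens into candidate named entities."""
    entities, current = [], []
    for word, tag in tagged:
        if tag in ("NNP", "NNPS"):
            current.append(word)
        elif current:  # a non-proper-noun ends the current chunk
            entities.append(" ".join(current))
            current = []
    if current:
        entities.append(" ".join(current))
    return entities

# Hand-written (hypothetical) tags for the flight-booking example.
tagged = [("Book", "VB"), ("a", "DT"), ("flight", "NN"), ("to", "TO"),
          ("Hong", "NNP"), ("Kong", "NNP"), ("on", "IN"), ("8th", "JJ"),
          ("Dec", "NNP"), ("2020", "CD")]
print(chunk_proper_nouns(tagged))  # ['Hong Kong', 'Dec']
```

The pattern also picks up "Dec", a month rather than a place: simple patterns are noisy, which is why models trained on large tagged corpora are used.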

Unsupervised learning approaches

- based on the context or on entities’ simultaneous occurrences (co-occurrence)

- generally less accurate than supervised approaches

Semi-supervised learning approaches

- a small starter set of supervised seeds/rules is used

- needing fewer labeled documents for training reduces the tagging time

- for example, the algorithm in this paper: https://www.aclweb.org/anthology/W09-2208.pdf

- ELMo and BERT
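The seed-and-grow idea behind semi-supervised approaches can be sketched as a simple bootstrapping loop: learn context patterns from the known entities, then use those patterns to find new entities. This is a generic illustration, not the algorithm from the linked paper; the corpus, the seed and the "two preceding words" pattern definition are all hypothetical choices.

```python
def bootstrap(corpus, seeds, iterations=2):
    """Grow a set of entities from a few seeds via context patterns.

    A pattern is the pair of words preceding a known entity (a deliberately
    simple, hypothetical choice).
    """
    sentences = [s.lower().split() for s in corpus]
    entities = {s.lower() for s in seeds}
    patterns = set()
    for _ in range(iterations):
        # 1. Learn patterns from the contexts of known entities.
        for tokens in sentences:
            for i in range(2, len(tokens)):
                if tokens[i] in entities:
                    patterns.add((tokens[i - 2], tokens[i - 1]))
        # 2. Use the patterns to tag new entities.
        for tokens in sentences:
            for i in range(2, len(tokens)):
                if (tokens[i - 2], tokens[i - 1]) in patterns:
                    entities.add(tokens[i])
    return entities, patterns

corpus = ["fly to paris tomorrow", "fly to tokyo today",
          "a hotel in tokyo", "a hotel in rome", "fly to berlin"]
entities, patterns = bootstrap(corpus, {"paris"})
print(sorted(entities))  # ['berlin', 'paris', 'rome', 'tokyo']
```

Each round can add wrong entities, and those then generate wrong patterns, so real systems score and filter patterns before trusting them.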

Machine Learning in NLP

Conditional random field

Statistical model: a discriminative, undirected probabilistic graphical model

Linear-chain CRF: overcomes the label bias problem and performs well on sequence data
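Once a linear-chain CRF is trained, the best label sequence for a sentence is found with the Viterbi algorithm, which combines per-token (emission) scores with label-to-label (transition) scores. In the sketch below the scores are hypothetical hand-set numbers standing in for learned feature weights, and the BIO label set is an assumption; training (which uses the forward-backward algorithm) is not shown.

```python
def viterbi(tokens, labels, emit, trans, start):
    """Find the highest-scoring label sequence for a linear-chain CRF.

    emit(token, label), trans[(prev, cur)] and start[label] are log-space scores.
    """
    # best[i][y]: best score of any label path ending in label y at position i
    best = [{y: start[y] + emit(tokens[0], y) for y in labels}]
    back = [{}]
    for i in range(1, len(tokens)):
        best.append({})
        back.append({})
        for y in labels:
            prev = max(labels, key=lambda p: best[i - 1][p] + trans[(p, y)])
            best[i][y] = best[i - 1][prev] + trans[(prev, y)] + emit(tokens[i], y)
            back[i][y] = prev
    # Trace the best path backwards from the best final label.
    y = max(labels, key=lambda lab: best[-1][lab])
    path = [y]
    for i in range(len(tokens) - 1, 0, -1):
        y = back[i][y]
        path.append(y)
    return path[::-1]

LABELS = ["O", "B-LOC", "I-LOC"]
start = {"O": 0.0, "B-LOC": 0.0, "I-LOC": -5.0}
trans = {(p, c): 0.0 for p in LABELS for c in LABELS}
trans[("O", "I-LOC")] = -5.0      # "inside" should not follow "outside"
trans[("B-LOC", "I-LOC")] = 1.0
trans[("I-LOC", "I-LOC")] = 0.5

def emit(token, label):
    """Hypothetical emission scores: capitalized words look like locations."""
    capitalized = token[:1].isupper()
    if label == "O":
        return 0.0 if capitalized else 2.0
    return 1.0 if capitalized else 0.0

print(viterbi(["fly", "to", "Hong", "Kong"], LABELS, emit, trans, start))
# ['O', 'O', 'B-LOC', 'I-LOC']
```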


Canonical correlation analysis (CCA)

investigate the relationship between two variable sets

examine the correlation of variables belonging to different sets


Recurrent Neural Networks (RNNs)

sequential data

feed the output (hidden state) of the previous step in as an input to the next step

(Bi-directional) GRU, LSTM

ELMo (built on bi-directional LSTMs) and BERT (built on Transformers)
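A minimal sketch of how an RNN carries information forward: a single-unit Elman RNN whose hidden state from the previous step is fed into the next. The weights are arbitrary illustrative values, not trained ones. Two sequences that end with the same input produce different final states because their histories differ.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Run a one-unit Elman RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)  # previous state feeds the next step
        states.append(h)
    return states

seq_a = rnn_forward([1.0, 0.0, 1.0])
seq_b = rnn_forward([0.0, 0.0, 1.0])
print(seq_a[-1] != seq_b[-1])  # True: same last input, different history
```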

Long Short-Term Memory (LSTM)

- A recurrent neural network: the state from the previous time step affects the next step

- Useful for sequential data

- Has “gates” to control which previous content to keep or forget
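The gates can be seen in a single step of a one-unit LSTM cell. The weights are hypothetical values picked by hand to show the effect of the forget gate: with a large positive forget bias the old cell state is kept, and with a large negative one it is almost erased.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, W):
    """One step of a single-unit LSTM cell.

    W maps each gate name to (w_x, w_h, b); the values are hypothetical.
    """
    def pre(gate):
        w_x, w_h, b = W[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre("input"))        # how much of the new candidate to write
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # candidate content
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c

W_keep = {"forget": (0.0, 0.0, 10.0), "input": (0.0, 0.0, -10.0),
          "output": (0.0, 0.0, 10.0), "candidate": (1.0, 0.0, 0.0)}
W_forget = dict(W_keep, forget=(0.0, 0.0, -10.0))

_, c_keep = lstm_step(0.0, 0.0, 1.0, W_keep)
_, c_forget = lstm_step(0.0, 0.0, 1.0, W_forget)
print(round(c_keep, 3), round(c_forget, 3))
```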

ELMo

- Context matters: the same word gets a different embedding in each sentence

- A bi-directional LSTM trained on a language-modeling task is used to create those embeddings

- The model can learn from vast amounts of text data without needing labels

BERT

- Adopts a “masked language model” training objective

- BERT can be fine-tuned for NER, or used for feature extraction
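BERT's masked language model objective hides some input tokens and trains the model to predict them. The sketch below follows the recipe described in the BERT paper (about 15% of tokens are chosen; of those, 80% become [MASK], 10% a random token, 10% stay unchanged), simplified so that each token is selected independently. The vocabulary is hypothetical, and this is not BERT's actual preprocessing code.

```python
import random

def mask_tokens(tokens, vocab, rate=0.15, seed=0):
    """Simplified BERT-style masking.

    Returns the corrupted tokens and {position: original token} targets.
    """
    rng = random.Random(seed)
    masked, targets = list(tokens), {}
    for i, tok in enumerate(tokens):
        if rng.random() < rate:
            targets[i] = tok  # the model must predict the original token here
            r = rng.random()
            if r < 0.8:
                masked[i] = "[MASK]"
            elif r < 0.9:
                masked[i] = rng.choice(vocab)  # random replacement
            # otherwise: leave the token unchanged
    return masked, targets

sentence = "book a flight to hong kong on 8th dec 2020".split()
print(mask_tokens(sentence, vocab=["hotel", "train", "paris"]))
```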

- Understanding the meaning of each word (Morphology & Lexicology Layer)

- Understanding the sentence structure (Syntax Layer)

- Understanding the meaning of the sentence (Semantics Layer)

- Understanding the meaning of the sentence in context (Pragmatics Layer)

To Conclude

Have you ever jumped into the middle of a conversation?

Conversation is difficult to understand

Context is hidden

Meanings have to be inferred

Users are unpredictable

Design choices to make things better

Design with intent in mind:

Does the user have a limited set of intent choices?

Guide and hint the users; provide fallback mechanisms

Collect user data and retrain
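A fallback mechanism can be as simple as a confidence threshold on the intent classifier's output. In this sketch the scores, threshold and suggested options are hypothetical; messages the bot could not handle are collected so they can be labelled and used for retraining.

```python
# Hypothetical options to hint at when the bot is unsure.
SUGGESTIONS = ("Book a flight", "Check my booking")

def respond(message, intent_scores, unhandled, threshold=0.5):
    """Handle the top intent if the model is confident enough, else fall back.

    intent_scores: hypothetical {intent: confidence} output of an NLU model.
    unhandled: list collecting messages to review and use for retraining.
    """
    if intent_scores:
        intent, score = max(intent_scores.items(), key=lambda kv: kv[1])
        if score >= threshold:
            return f"handle:{intent}"
    unhandled.append(message)  # keep for labelling and retraining later
    return "Sorry, I didn't get that. You can try: " + ", ".join(SUGGESTIONS)

log = []
print(respond("Book a flight to Hong Kong", {"Flight booking": 0.9, "Greeting": 0.1}, log))
print(respond("hmm what", {"Flight booking": 0.3, "Greeting": 0.2}, log))
print(log)  # ['hmm what']
```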

References

http://www.cs.columbia.edu/~smaskey/CS6998/

https://link.springer.com/article/10.1186/s40887-017-0012-y

https://towardsdatascience.com/named-entity-recognition-with-nltk-and-spacy-8c4a7d88e7da

https://biomedical-engineering-online.biomedcentral.com/articles/10.1186/s12938-018-0573-6

https://medium.com/@phylypo/nlp-text-segmentation-using-conditional-random-fields-e8ff1d2b6060

https://www.aclweb.org/anthology/K18-1020.pdf

http://jalammar.github.io/illustrated-bert/

https://people.cs.umass.edu/~mccallum/papers/crf-tutorial.pdf