Filesystem Layout + Consistency

Online Lecture Logistics

Instapoll

If possible, please have Instapoll open in another window/tab/app so that we don't lose too much time for the quizzes.

Slides Live

Questions

Just like last time, feel free to leave questions in the chat, use the "raise hand" button, or just unmute yourself and interrupt me.

The Story So Far

We've added persistent storage to our computers to make sure that power-offs don't completely destroy all our data.

Disks are great!

Then we added glue code to make sure we could interact with this storage in a reasonable manner. This resulted in a filesystem.

We evaluated the filesystem based on three goals:

- Speed

- Usability

- Resilience

Big Picture

(made of many little pictures)

We examined some of these little pictures:

- Syscall API

- File + Data Layout

- Directories + Organization

Disk Access Model

We start knowing the i# of the root directory (usually 2)

We have enough space in memory to store two blocks worth of data

Everything else has to be requested from disk.

The request must be in the form of a block#. E.g. we can request "read block 27", but we cannot request "read next block" or "read next file"

Instapoll

Under our disk access model, what steps would we take to read the third block of a file that uses direct allocation?

You may assume that we already know the i# of the file header.

- Read file inode

- Find address of third data block from inode

- Read third block
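
The three steps for reading the third block can be sketched as a toy simulation. This is only an illustration: the `Disk` class, the dictionary-shaped inode, and the simplification that the inode lives in a block we can request directly by number are all assumptions made for the example, not part of any real filesystem.

```python
class Disk:
    """Toy disk: the only operation is 'read block #n'."""

    def __init__(self, blocks):
        self.blocks = blocks   # block# -> contents
        self.reads = 0         # how many block requests we issued

    def read_block(self, block_no):
        self.reads += 1
        return self.blocks[block_no]


def read_nth_block(disk, inode_block_no, n):
    """Read the n-th (0-indexed) data block of a direct-allocated file."""
    inode = disk.read_block(inode_block_no)   # step 1: read file inode
    data_block_no = inode["direct"][n]        # step 2: look up the address (in memory)
    return disk.read_block(data_block_no)     # step 3: read the data block


# Hypothetical layout: the inode sits in block 10 and points at blocks 40, 41, 57.
disk = Disk({
    10: {"direct": [40, 41, 57]},
    40: b"first",
    41: b"second",
    57: b"third",
})
third = read_nth_block(disk, 10, 2)
print(third, disk.reads)
```

Only two requests go to the disk here; finding the address is a lookup in the inode we already hold in memory.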

User-usable Organization

Instead of forcing users to refer to files by the inumbers, we created directories, which were mappings from file names to inumbers.

| File Name | inode number |
|---|---|
| .bashrc | 27 |
| Documents | 30 |
| Pictures | 3392 |
| .ssh | 7 |

Users can refer to files using paths, which are a series of directory names.

The root is a special directory, and its name is "/".

Traversing paths from the root can require many, many disk lookups. We optimized this by maintaining a current working directory.

Example Filesystems

File Allocation Table (FAT)

- Linked Allocation (with links in header)

- Allocates first free block to file

- Simple, but missing lots of features

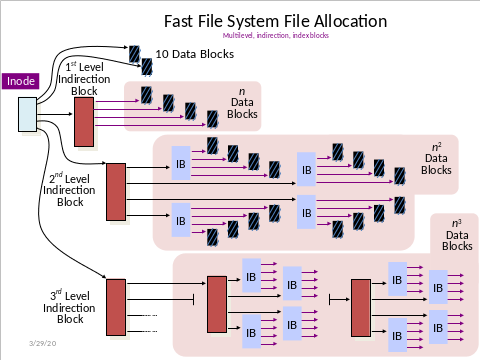

Berkeley Fast Filesystem (FFS)

- Uses multilevel indexing to allow fast access to small files (direct allocation) while still supporting large files using indirect blocks

- Descendants still the main filesystem for many BSD systems today, usually named "UFS2"

Today's Additions

- A look at NTFS, the default Windows filesystem

- How does the filesystem manage its own internal data? (free blocks, inodes, etc)

- How are the filesystem control structures organized on disk?

- How do we manage disk writes in the presence of sudden failure?

- fsck

- journaling

- copy-on-write

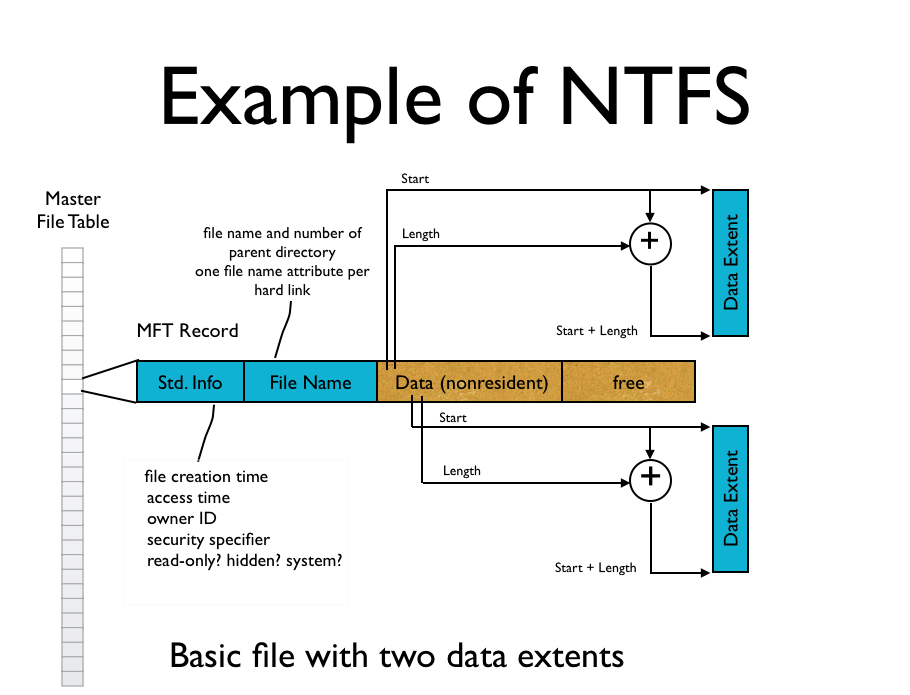

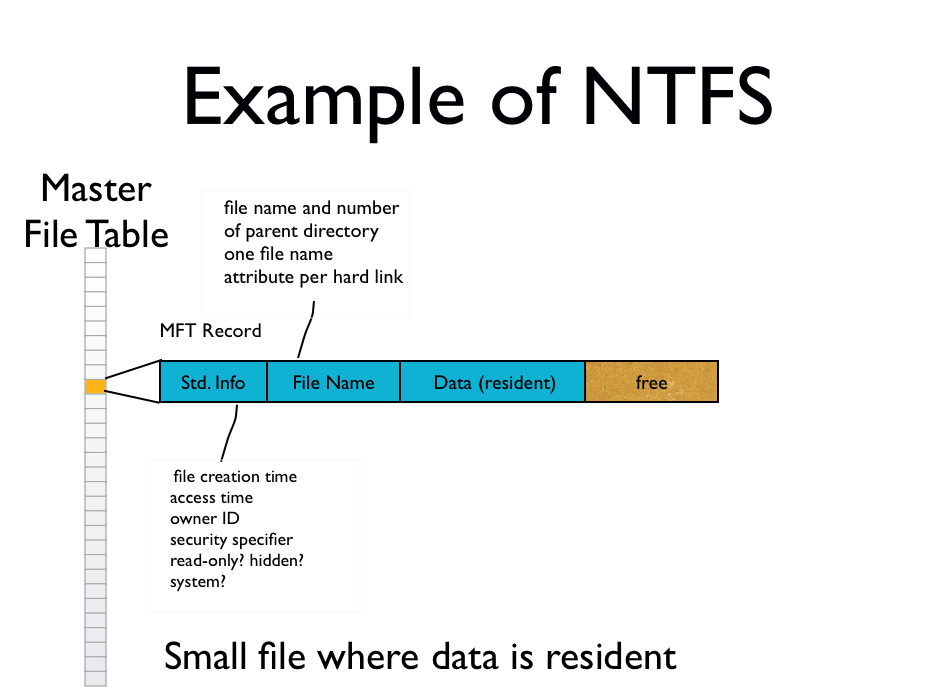

NTFS


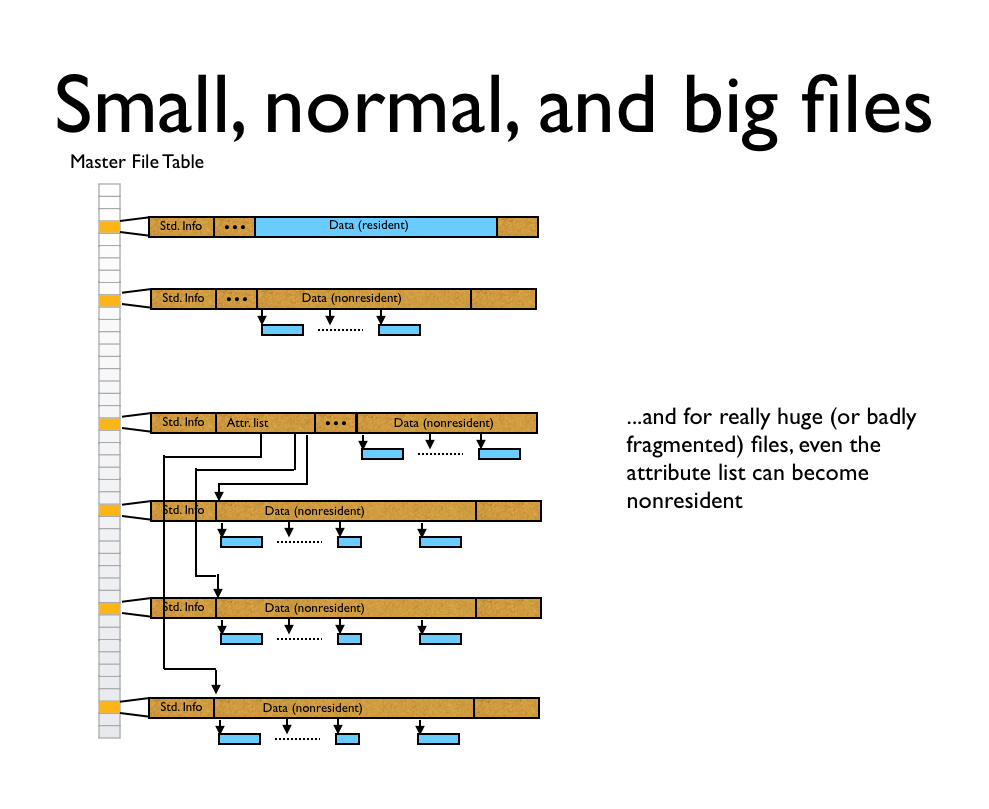

NTFS, the "New Technology File System," was released by Microsoft in July of 1993.

It remains the default filesystem for all Windows PCs--if you've ever used Windows, you've used an NTFS filesystem.

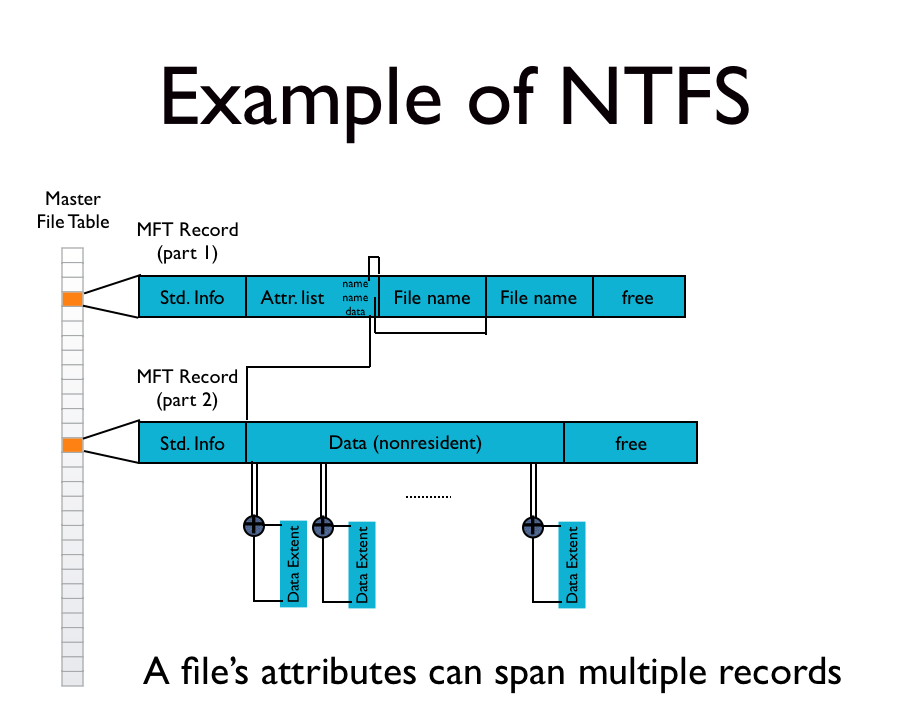

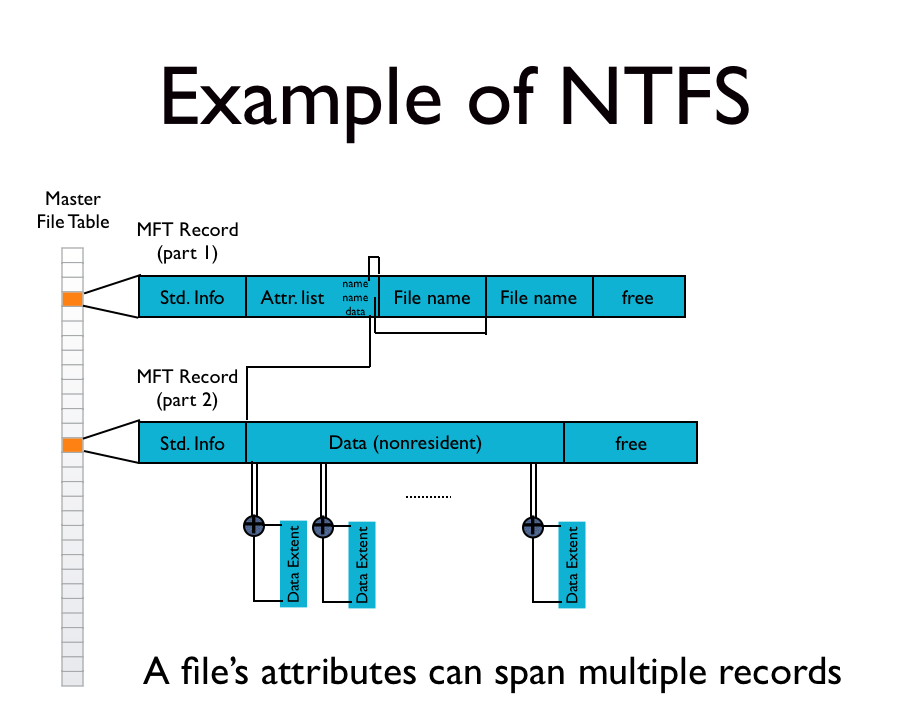

NTFS uses two new (to us!) ideas to track its files: extents and flexible trees.

Extents

Track a range of contiguous blocks instead of a single block.

Example: a direct-allocated file uses blocks 192,193,194,657,658,659

Using extents, we could store this as (192,3), (657, 3)
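
As a rough sketch of the idea (not how NTFS actually encodes its runs on disk), the conversion between a block list and `(start, length)` extents might look like this. The function names `to_extents` and `from_extents` are made up for the example.

```python
def to_extents(blocks):
    """Collapse a list of block numbers into (start, length) extents."""
    extents = []
    for b in blocks:
        # Does this block directly follow the last extent? Then just grow it.
        if extents and b == extents[-1][0] + extents[-1][1]:
            start, length = extents[-1]
            extents[-1] = (start, length + 1)
        else:
            extents.append((b, 1))
    return extents


def from_extents(extents):
    """Expand (start, length) extents back into individual block numbers."""
    return [start + i for start, length in extents for i in range(length)]


blocks = [192, 193, 194, 657, 658, 659]
extents = to_extents(blocks)
print(extents)  # [(192, 3), (657, 3)]
```

Six block pointers shrink to two pairs, and the savings grow with the length of each contiguous run.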

Flexible Trees

Files are represented by a variable-depth tree.

- A large file with few extents can be shallow.

A Master File Table (MFT) stores the trees' roots

- Similar to the inode table, entries are called records

- Each record stores a sequence of variable-sized attribute records

NTFS: Normal Files

NTFS: Tiny Files

NTFS: Lots o' Metadata

Not enough space for data!

Small file

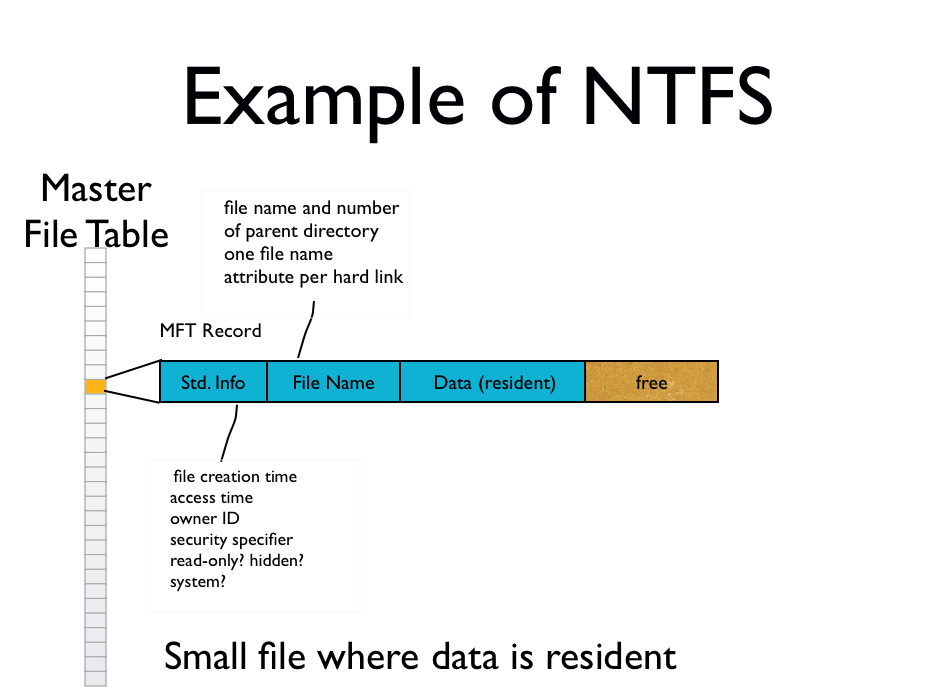

NTFS: Small, Medium, and Large

Medium file

Large file

For really, really large files, even the attribute list might become nonresident!

NTFS: Special Files

$MFT (file 0) stores the Master File Table

MFT can start small and grow dynamically--to avoid fragmentation, NTFS reserves part of the volume for MFT expansion.

$Secure (file 9) stores access controls for every file (note: not stored directly in the file record!)

File is indexed by a fixed-length key, stored in the Std. Info field of the file record.

NTFS stores most metadata in ordinary files with well-known numbers

- 5 (root directory); 6 (free space management); 8 (list of bad blocks)

NTFS: Summary

Uses a lot of structures similar to what we've seen before!

- A single array for file headers (called file records)

- Fixed, global file records for important files

- File records contain pointers to data blocks

And has a lot of cool stuff we haven't seen before!

- Flexible use of file records: can store file data directly in-record for small files. Avoids an additional disk access for small files (and most files are small!)

- Flexible use of file records: file records that get too large can spill out to other entries in the MFT. Avoids maximum limits on filesize due to file record size limits.

- Uses extents (contiguous ranges) to more-compactly store which data blocks belong to a file.

Filesystem Layout


Last lecture, we made somewhat oblique references to some on-disk filesystem information.

On create(), the OS will allocate disk space, check quotas, permissions, etc.


There's also some fundamental information that we just need to keep in the filesystem!

- What type of filesystem is this? (FFS? FAT32?)

- How many blocks are in this filesystem?

- How large is a block?

We also need to handle data like file headers, free space, etc.

All of this data is going to go into a superblock.

Each filesystem has at least one superblock, and we can have multiple filesystems on the same physical disk using partitions.

Layout On Disk

Partitions separate a disk into multiple filesystems.

Partitions can (effectively) be treated as independent filesystems occupying the same disk.

Not to scale

The Partition Table (stored as a part of the GPT Header) says where the various partitions are located.

Within the partition (filesystem), the superblock records information like where the inode arrays start, block sizes, and how to manage free disk space.

$ sudo e2fsck /dev/sdb1

Password:

e2fsck 1.42 (29-Nov-2011)

e2fsck: Superblock invalid, trying backup blocks...

e2fsck: Bad magic number in super-block while trying to open /dev/sdb1

Aside: Why multiple superblocks?

I hope you have backups...

There is a tradeoff between two filesystem goals when using multiple superblocks. Which goal are we sacrificing and which goal are we attaining?

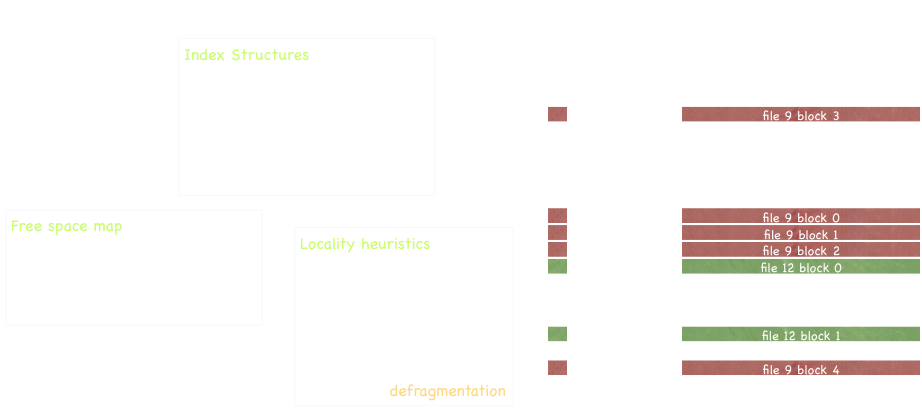

Let's Talk About Free Space

One of the things we need to track is which blocks on the disk are free/in use.

What techniques do we know of to do this?

Free list!

Where else have we seen this technique?

- malloc()

- memory relocation

- heap management

- contiguous allocation

It shows up everywhere!

But there's another allocation technique...

Free Space: Linked List Allocators


Why do we use a linked list to track what memory is in use?

- Random access of control data not a concern

- Inserting into middle of control data very frequent operation

- A variable number of variable-sized allocations means a variable-sized data structure is needed.

Why don't we use a tree to track what memory is in use?


- Two pointers per node instead of one, and non-leaf nodes do not usefully track free/used memory, means that trees use more memory than lists.

- The added benefits of trees (O(log n) search, faster sorting) don't really help us in the free list.

The list is the simplest structure that does what we need.

Free Space: Bread and Concrete

Let's say that we know ahead of time that we're going to be getting a lot of memory allocation requests between 4000 bytes and 4080 bytes.

Let's just lay out a bunch of regions in memory that are all 4096 bytes large! We'll call these fixed-size memory regions chunks.

When a request comes in, we can just grab the first free chunk and give it to whoever's making the request.

What data structure should we use for the control data?


How much data do we need to record that a given chunk has been used?

Can we store this data without needing additional space?

Use a bitmap (bit vector)!

i-th bit represents whether i-th chunk is used.

How much memory do we need for the bitmap?

Our chunk size was 4096 bytes. Suppose we want 32GB to allocate with chunks (2^35 bytes). This is a lot of memory already!

How much memory do we need to dedicate to the bitmap?

To track 32GB of data, we need 1MB for the bitmap.

This is a ratio of 32768:1.

To achieve this ratio with malloc(), the average allocation needs to be over 390,000 bytes.
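
The bitmap arithmetic is quick to check by hand or in a few lines of code. The constant names below are made up for the example; the only inputs are the 4096-byte chunk size and the 32GB pool from above.

```python
CHUNK_SIZE = 4096                 # bytes per chunk
POOL_SIZE = 32 * 2**30            # 32GB = 2^35 bytes

num_chunks = POOL_SIZE // CHUNK_SIZE    # one bit per chunk: 2^23 bits
bitmap_bytes = num_chunks // 8          # 8 bits per byte: 2^20 bytes = 1MB
ratio = POOL_SIZE // bitmap_bytes       # data tracked per byte of bitmap

print(num_chunks, bitmap_bytes, ratio)
```

The ratio is just the chunk size times 8 (4096 * 8 = 32768), so it does not depend on how large the pool is.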

A pool of chunks is termed a slab.

This allocation technique is known as a slab allocator.

It is much faster than linked list tracking (chasing linked lists is slow).

However, it cannot always allocate memory larger than one chunk (why?), and can suffer from internal fragmentation.

Instapoll

What scheme should Pintos use when allocating pages with the malloc() family?

What about the palloc() family [1]?

It turns out in this case (as in many cases), Pintos does exactly what it should!

[1] e.g. palloc_get_page(), palloc_free_page()

Slab Allocators and Disks

Slab allocation (bitmap) is a perfect fit for filesystems!

| Slab Allocator Property | Filesystem Goal |
|---|---|
| Very little extra space used to track free space | Minimize amount of space used for control structures |
| Can only allocate in chunks | Can only access filesystem in blocks |
| Cannot always allocate contiguous chunks | Does not require contiguous blocks (they're nice for extents, but not required) |
| Can rapidly scan many chunks with a single read operation | Minimize the number of block reads needed to find free space |
| Can track two different slabs easily -- just create two different bitmaps | Want to track free inodes and free data blocks separately |
| Suffers from internal fragmentation | Was already suffering from internal fragmentation, so it doesn't really matter |

File Create: Free Space Management

To create a file, we need to allocate one inode and one data block.

- Scan the inode bitmap until we find an unset bit--set that bit and use the corresponding index as the inumber.

- Scan the data bitmap until we find an unset bit--set that bit and point the inode to that block.

- Write remaining data to the inode/data blocks.
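
The file-creation steps above can be sketched with two in-memory bitmaps. This is a minimal sketch, not a real filesystem: the `Bitmap` class, `create_file`, and the dictionary standing in for the inode array are all hypothetical, and nothing here touches a disk.

```python
class Bitmap:
    """Bit i records whether entry i (an inode or a data block) is in use."""

    def __init__(self, n):
        self.n = n
        self.bits = bytearray((n + 7) // 8)

    def test(self, i):
        return bool(self.bits[i // 8] & (1 << (i % 8)))

    def set(self, i):
        self.bits[i // 8] |= 1 << (i % 8)

    def allocate(self):
        """Scan for the first unset bit, set it, and return its index."""
        for i in range(self.n):
            if not self.test(i):
                self.set(i)
                return i
        raise RuntimeError("no free entries")


def create_file(inode_bitmap, data_bitmap, inodes):
    inumber = inode_bitmap.allocate()          # unset bit -> new inumber
    block_no = data_bitmap.allocate()          # unset bit -> first data block
    inodes[inumber] = {"direct": [block_no]}   # point the inode at that block
    return inumber


inode_bitmap, data_bitmap, inodes = Bitmap(16), Bitmap(64), {}
inode_bitmap.set(0)   # pretend inodes 0 and 1 are already taken
inode_bitmap.set(1)
data_bitmap.set(0)    # pretend data block 0 is already taken
new_inumber = create_file(inode_bitmap, data_bitmap, inodes)
print(new_inumber, inodes[new_inumber])
```

A real implementation would scan a whole word or block of the bitmap at a time instead of testing bit by bit, which is where the "rapidly scan many chunks with a single read" property comes from.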

Filesystem Layout: Summary

The superblock contains information about the filesystem: the type, the block size, and where the other important areas start (e.g. inode array)

A series of bitmaps are used to track free blocks separately for inodes and file data, a so-called zoned slab allocator.

Inode arrays contain important file metadata.

There may be backup superblocks scattered around the disk.

For all these reasons and more*, some part of the disk is unavailable to store actual file data.

* many modern filesystems reserve about 10% of data blocks as "wiggle room" to optimize file locality.

Resilience and Consistency

Consistency

Our primary measure of resilience will be consistency.

Consistency: Does my data agree with...itself?

You might argue that this is a pretty low bar to meet.

You'd be right!

There's a lot of work out there for guaranteeing the "correctness" of a file system after a "failure", for many different values of correctness and failure.

But if we can't even guarantee the data is consistent, what hope do we have of stronger resilience guarantees?

Consistency

How can data structures disagree?

append(file, buf, 4096)

?

What changes do we need to make to the filesystem to service this syscall?

We need to make 3 writes:

- Add the new data block

- Update the inode

- Update the data bitmap

Consistency

append(file, buf, 4096)

Block size is 4KB

With luck, this all works out!

What if only a single write succeeds?

- Add the new data block

- Update the inode

- Update the data bitmap

Which Write Succeeds?

Bitmap

Inode

Data

Data Block Write Succeeds

What's the final state of the filesystem?

- Data has been written to the new data block.

- But the data block bitmap says the block isn't in use

- The inode doesn't point to the new block

- Since there's no way to access this new block, it's as if the write never happened--we just overwrote garbage with more garbage.

Inode Write Succeeds

What's the final state of the filesystem?

- There's a pointer in the inode, but following it just leads us to garbage.

- The data bitmap says that block 5 is free, but the inode says that block 5 is used. FILESYSTEM INCONSISTENCY. This must be reconciled.

Data Bitmap Write Succeeds

What's the final state of the filesystem?

- The data block is marked as allocated, but it just contains junk.

- Data bitmap claims block is in use, but no inode points to it. FILESYSTEM INCONSISTENCY. We need to find out which file uses the data block...but we can't!

So...write the data block first?

What if two writes succeed?

Which two writes succeed?

Inode and bitmap updates succeed

- Filesystem is consistent, but data has not been written.

- Reading new block returns garbage. Not consistency issue, but not good.

Inode and data block updates succeed

- Inode points to written data, but data bitmap does not record the block as used

- Filesystem inconsistency, must be repaired

Data bitmap and data block updates succeed

- Data bitmap marks block as used, but no inode points to it

- Filesystem inconsistency, must be repaired

But wait, it gets worse!

When appending to a file, there is no sequence of updates that can prevent inconsistencies in all cases.
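
We can check this claim by brute force. The sketch below assumes the simplified model from the previous slides: an append needs three writes, and the metadata is consistent exactly when the inode and the data bitmap agree about the new block. The names `WRITES`, `is_consistent`, and `inconsistent_crash_points` are made up for the example.

```python
from itertools import permutations

WRITES = ("data", "inode", "bitmap")


def is_consistent(done):
    """Metadata agrees with itself iff the inode pointer and the
    bitmap bit were either both written or both not written."""
    return ("inode" in done) == ("bitmap" in done)


def inconsistent_crash_points(order):
    """List the prefixes of `order` after which a crash leaves
    the inode and the data bitmap disagreeing."""
    bad = []
    for k in range(1, len(order)):      # crash after k of the 3 writes
        if not is_consistent(set(order[:k])):
            bad.append(order[:k])
    return bad


results = {order: inconsistent_crash_points(order)
           for order in permutations(WRITES)}
every_order_is_vulnerable = all(results.values())

for order, bad in results.items():
    print(order, "->", bad)
```

Writing the data block first is the best we can do (only one bad crash point instead of two), but every one of the six orderings has at least one.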

Instapoll

A user requests a read of the file "/home/user2/test.txt". What is the minimum number of hardware disk accesses that are needed to service this request?

Disk is slow...cache all the things!

Disk caches are essentially the reason we can interact with the filesystem in (what you think of as) a reasonable amount of time.

Is this gonna be a problem?

Disks: Writing

I am an OS programmer who needs to deal with writes. Should I tell the user their write succeeded even if it only made it to a cache in RAM?

If your write only gets to cache, you could lose data! You have to make sure the write gets to disk!

Who cares? The power almost never goes out. Just cache the write and hope everything works out.

Write-through caching

Write-back caching

Writing to Cache

Two general caching schemes when it comes to writes.

Write-through

Write now. Immediately write changes back to disk, while keeping a copy in cache for future reads.

Guarantees consistency, but is slow. We have to wait until the disk confirms that the data is written.

Write-back

Write later. Keeps the changed copy in memory (future requests to read the file use the changed in-memory copy), but defers the writing until some later point (e.g. file close, page evicted, too many write requests).

Much better performance, but modified data can be lost in a crash, causing inconsistencies.
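
A toy model makes the difference between the two schemes concrete. Everything here is an assumption for illustration: `CachedDisk` is a made-up class, the "disk" is a dictionary, and `crash()` simply throws away whatever lives in RAM.

```python
class CachedDisk:
    def __init__(self, write_through):
        self.disk = {}        # survives a crash
        self.cache = {}       # lives in RAM
        self.dirty = set()    # cached blocks not yet on disk
        self.write_through = write_through

    def write(self, block_no, data):
        self.cache[block_no] = data
        if self.write_through:
            self.disk[block_no] = data   # write now
        else:
            self.dirty.add(block_no)     # write later

    def read(self, block_no):
        if block_no not in self.cache:   # miss: go to disk, keep a copy
            self.cache[block_no] = self.disk[block_no]
        return self.cache[block_no]

    def flush(self):
        for block_no in self.dirty:
            self.disk[block_no] = self.cache[block_no]
        self.dirty.clear()

    def crash(self):
        """Power loss: everything in RAM disappears."""
        self.cache.clear()
        self.dirty.clear()


wt = CachedDisk(write_through=True)
wt.write(5, "new")
wt.crash()

wb = CachedDisk(write_through=False)
wb.write(5, "new")
wb.crash()

print(wt.disk.get(5), wb.disk.get(5))
```

The write-through disk still has the block after the crash; the write-back disk lost it because no flush happened first.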

The Situation

We've seen that a single append operation will not always result in a consistent filesystem. No ordering of write operations can prevent this.

This issue is made worse by the omnipresence of caching in the filesystem layers. Nearly every access is cached:

- Write-through caching does not make consistency issues worse, but it's slooooooooow.

- Write-back caching is faster, but increases the probability that data is lost.

So what do we do?

Old-timey UNIX Consistency

"fsck"

(no, it's not a naughty word!)


UNIX: User Data

UNIX uses write-back caching for user data

- Cache flush (writeback) is forced at fixed time intervals (30s)

- Within the time interval, data can be lost in a crash

- User can also issue a sync command (or call fsync() on a single file) to force the OS to flush cached writes to disk.

Does not guarantee that blocks are written to disk in any order--the filesystem can appear to reorder writes. User applications that need internal consistency have to rely on other tricks to achieve that.
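
From user space, forcing a write out of the cache looks roughly like this. `os.fsync` is the standard Python wrapper around the fsync syscall; the helper name `durable_write` and the temporary file path are made up for the example.

```python
import os
import tempfile


def durable_write(path, payload):
    """Write payload and do not return until the OS has been asked
    to push it all the way to the storage device."""
    with open(path, "wb") as f:
        f.write(payload)
        f.flush()               # user-space buffer -> OS cache
        os.fsync(f.fileno())    # OS cache -> disk


path = os.path.join(tempfile.mkdtemp(), "example.txt")
durable_write(path, b"important data")
```

Note the two separate steps: `flush()` only empties the buffer inside the Python process, while `os.fsync()` is what asks the OS to write back its own cached copy.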

UNIX: Metadata

To assist with consistency, UNIX uses write-through caching for metadata. If multiple metadata updates are needed, they are performed in a predefined, global order.

This should remind you of another synchronization technique that we've seen in this class. Where else have we seen predefined global orders before?

If a crash occurs:

- Run `fsck` (filesystem check) tool to scan partition for inconsistencies

- If incomplete operations are found, fix them up.

Example: File Creation

Suppose we're creating a file in a system using direct allocation. What do we need to write?

- Write data block

- Update inode

- Update inode bitmap

- Update data bitmap

- Update directory


On-Crash: fsck

- Write data block

- Update inode

- Update inode bitmap

- Update data bitmap

- Update directory

What if we crashed before step 1?

What if we crashed between step 1 and 2?

Filesystem Detective

I have snapshots of several filesystems which were...unexpectedly terminated.

We think they were in the middle of creating a file when the interruption occurred. Some of them may have finished file creation. Others might not have. Fortunately, all these filesystems signed a pact to always update things in the same order.

Your job: examine a snapshot and determine the following:

- Is it in an inconsistent state?

- If so, where was it in the file creation process when the power was interrupted?

- How much information can we recover?

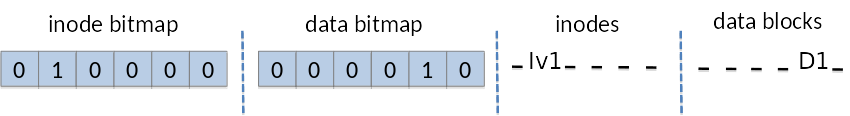

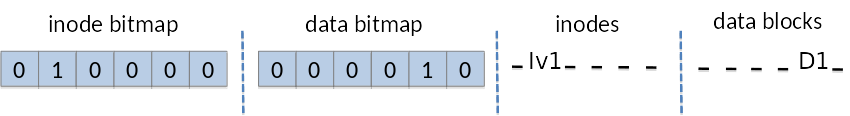

The system is a direct-allocated filesystem using zoned bitmap tracking and a single global directory.

Ø is a null pointer. Uncolored inodes have all their pointers set to Ø.

Update order:

- Data Block

- Inode

- Inode Bitmap

- Data Bitmap

- Directory

- Is it in an inconsistent state?

- If so, where was it in the file creation process when the power was interrupted?

- If applicable, how much information can we recover?

System 1

System 2

System 3

System 4

Blocks are zero-indexed (careful with orange/purple block!)

https://slides.com/chipbuster/18-fs-consistency/live

Work on System (Breakout Room # % 4) + 1

System 1


Consistent!

Question: did it feel easy?

System 2


Inconsistency: file 9 is not in the directory

...but its other data/metadata is intact! This must have failed after the data bitmap update and before the directory update.

We can recover everything about the file except for its name.

System 3


Inconsistency: file 3 is not in the directory

Inconsistency: file 3 points to blocks D4 and D5, but they are not marked as used in the bitmap.

The inode is marked as used in the inode bitmap, so this must have failed between the inode bitmap and data bitmap updates.

We can recover everything about the file except for its name.

System 4


Something is funny about file 1...

- It's not in the directory

- Its inode isn't allocated

- Its data block isn't allocated

What happened here?

Two possibilities:

- We failed after writing inode but before updating bitmaps.

- We deleted the file by unsetting its data + inode bitmaps!

Conclusion: failing before inode bitmap update is the same as failing in first two steps: looks like file creation never happened.
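
The detective reasoning for the four systems can be written down as a small decision procedure. This is only a sketch of the reasoning, not how a real fsck is structured: the function `diagnose` and its three boolean inputs are hypothetical, and it relies on the assumption that updates always happen in the agreed order (data block, inode, inode bitmap, data bitmap, directory).

```python
def diagnose(inode_bitmap_set, data_bitmap_set, in_directory):
    """Classify a half-created file from the structures that record it.
    Because updates happen in a fixed order, the last structure that
    knows about the file tells us where the crash happened."""
    if in_directory:
        return "consistent: creation finished"
    if data_bitmap_set:
        return "crashed before directory update: recover all but the name"
    if inode_bitmap_set:
        return "crashed before data bitmap update: recover all but the name"
    return "looks like creation never happened"


# The four snapshots from the exercise, in order.
for system, state in enumerate([
    (True, True, True),      # System 1
    (True, True, False),     # System 2
    (True, False, False),    # System 3
    (False, False, False),   # System 4
], start=1):
    print("System", system, "->", diagnose(*state))
```

The data block and inode writes do not appear as inputs because, without the bitmaps, we cannot distinguish a freshly written inode from a deleted file.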

Problems with fsck

- Not a very principled approach (what if there's some edge case we haven't thought about that breaks everything?)

- Tricky to get correct: even minor bugs can have catastrophic consequences

- Write-through caching on metadata leads to poor performance

- Recovery is slow. At bare minimum, need to scan:

  - inode bitmap

  - data block bitmap

  - every inode (!!)

  - every directory (!!!)

- Possibly even more work if something is actually inconsistent.

What if we need operations on multiple files to occur as an indivisible unit?

What are these things called?

What if we need operations on multiple files to occur atomically?

Solution: use transactions.

Transactions + Journaling

Part 2: Electric Boogaloo

Transactions (Review)

Transactions group actions together so that they are:

- atomic -- either they all happen or none of them happen

- serializable -- transactions appear to happen one after another

- durable -- once a transaction is done, it sticks around

To achieve these goals, we tentatively apply each action in the transaction.

- If we reach the end of the transaction, we commit, making the transaction permanent.

- If we have a failure in the middle, we rollback, undoing the actions that we have already applied.


Undoing writes on disk (rollback) is hard!

Exhibit A: All y'all that have really wished you could revert to a previous working version of Pintos at some point.

Instead, use transaction log!

TxBegin

Write Block32

Write Block33

Commit

TxFinalize

<modify blocks>

Step 1: Write each operation we intend to apply into the log, without actually applying the operation (write-ahead log)

Step 2: Write "Commit." The transaction is now made permanent.

Step 3: At some point in the future (perhaps as part of a cache flush or an fsync() call), actually make the changes.

...wait, what??


Once the transaction is committed, the new data is the "correct" data.

So....somehow we need to present the new data even though it hasn't been written to disk?

How does that work?

Recovery of partial transactions can be completed using similar reasoning to fsck.

| Log contents | What happened | Recovery |
|---|---|---|
| TxBegin, Write Block32, Write Block33, Commit, TxFinalize | Transaction was completed and all disk blocks were modified. | No need to do anything. |
| TxBegin, Write Block32, Write Block33, Commit | Transaction was committed, but disk blocks were not modified. | Replay transaction to ensure data is correct. |
| TxBegin, Write Block32, Write Block33 | Transaction was aborted--data changes were never made visible. | We can pretend this never happened. |

Journaling Filesystems

From the early 90s onwards, a standard technique in filesystem design.

All metadata changes that can result in inconsistencies are written to the transaction log (the journal) before eventually being written to the appropriate blocks on disk.

Eliminates need for whole-filesystem fsck. On failure, just check the journal! Each transaction recorded must fall into one of three categories:

- Finished: we don't need to do anything

- Uncommitted: this transaction never happened: don't need to do anything either

- Committed but incomplete: replay the incomplete operations to complete the transaction
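
The three categories translate almost directly into a recovery loop. The sketch below is a simplification under stated assumptions: the journal is a Python list of tuples using the record names from the slides, transactions do not interleave, and `recover` is a made-up function, not the recovery code of any real filesystem.

```python
def recover(journal, disk):
    """Scan the journal and replay committed-but-unfinished transactions.
    Returns the number of transactions that were replayed."""
    transactions = []
    for record in journal:
        kind = record[0]
        if kind == "TxBegin":
            transactions.append(
                {"writes": [], "committed": False, "finalized": False})
        elif kind == "Write":
            transactions[-1]["writes"].append(record[1:])   # (block#, data)
        elif kind == "Commit":
            transactions[-1]["committed"] = True
        elif kind == "TxFinalize":
            transactions[-1]["finalized"] = True

    replayed = 0
    for tx in transactions:
        if tx["committed"] and not tx["finalized"]:
            for block_no, data in tx["writes"]:   # redo the logged writes
                disk[block_no] = data
            replayed += 1
        # finished or uncommitted: nothing to do
    return replayed


disk = {32: "old32", 33: "old33"}
journal = [("TxBegin",), ("Write", 32, "new32"), ("Write", 33, "new33"),
           ("Commit",)]
replayed = recover(journal, disk)
print(replayed, disk)
```

Replaying is safe to repeat: if we crash again during recovery, running `recover` a second time writes the same values to the same blocks.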

Journaling Filesystems: An Example

We want to add a new block to file Iv1.

- Write 5 blocks to the log:

  - TxBegin

  - Iv2

  - B2

  - D2

  - Commit

- Write the blocks for Iv2, B2, D2 to their final locations on disk

- Write TxFinalize to the journal to clear the transaction


What if we crash and "Commit" isn't in the journal?

What if we crash and "TxFinalize" isn't in the journal?


Problem: Issuing 5 sequential writes ( TxBegin | Iv2 | B2 | D2 | Commit ) is kinda slow!

Solution: Issue all five writes at once!

Problem: Disk can schedule writes out-of-order! It might first write TxBegin, Iv2, B2, and Commit, and only then write D2. If we crash in between, the journal holds a committed transaction whose D2 entry was never written.

Solution: Force disk to write all updates by issuing a sync command between "D2" and "Commit". "Commit" must not be written until all other data has made it to disk.

Journaling Filesystems: Summary


Use the transaction technique we saw in the Advanced Synchronization lecture for all metadata updates (regular data still written with writeback caching).

This guarantees us reliability, and avoids the overhead of an fsck scan on the entire disk (only need to scan journal!)

But now we have to write all the metadata twice! Once for the journal and then a second time to get it in the right location.

Is there another solution?

COW Filesystems


COW systems, or "Copy-on-Write," are the hot new thing in production filesystems right now.

- BSD/Solaris ZFS: 2005

- Windows Server ReFS: 2012

- Linux Btrfs: 2013

- Apple APFS: 2017

Copy-on-write is a rule for how data is written. Instead of overwriting the old value, a copy is made and the write is applied to the copy. Why would you want to do this? Well...

Unfortunately, Kevin ran out of time to make pretty pictures while making these slides, so you're getting a storybook straight out of "Kevin's Budget Animation Studio"

Copy-On-Write Fundamentals

I have a thing!

Can I see the thing?

Sure! Hmm, it's pretty important...better send a copy.

Thanks! ....This looks boring.

It...didn't even use the thing! I did all that copy work for nothing...

Hey, I see you have a thing! Can I see?

Sure, I guess....

Hmmm.....this isn't quite what I needed. Thanks for helping me though!

Why am I doing all this work if I don't need to?

Hey, the others said you had a thing, can I see the thing?

You know what, just take the original.

Thanks! I wonder how it would look in red?

WHAT ARE YOU DOING!?!?!?

I NEEDED THAT!

What happened here?

The left smiley had something that a lot of smileys wanted copies of.

Most of the copies were read-only copies...but it couldn't make other smileys promise that, so it started off by just making copies: if the other smiley modified the copy, the original was still intact.

Making copies was a lot of work, and most smileys weren't modifying the thing...so why not just send over the original?

Murphy's Law happened, and now the thing is changed.

What should have happened here?

I have a thing!

Can I see the thing?

Sure, but you gotta promise to ask me if you want to change anything.

Okay!

Wow! Can I try painting a stripe?

Yeah, use that copy I made for you so you don't change the original!

Cool!

Thanks!

No problem!

Copy-on-Write

A rule for how writes are applied to data.

Only works if we can stop user from directly writing the data.

When users request a copy of the data, they are just shown the original data.

In practice, there's no "asking permission" or "making promises"--the OS just intercepts the write and makes it do the right thing, similar to a page fault.

If the user tries to change the data, a copy is triggered, hence "copy-on-write."

Allows us to make lots of "copies" very quickly, since there's actually no copy made, at a slight sacrifice to write speed.

Copy-on-Write

Only works if we can stop people from directly writing to the thing we care about.

When "changes" are made, make a copy of the original and change that.

The original data remains unchanged.

COW Trees

Here's a tree with data in its leaves (node labels in the figure: 3, 5, 2, 7, 4, 2, 1).

Let's change 1 to 6 with copy-on-write!

Yay! Have we changed the tree?

Wait...that's not allowed: we just wrote to the parent node! (by changing its child pointers)

Let's try this again.

Almost done...what else needs to change?

Finally, declare that the new root node is the "real root."

Note: at any point before this very last change, it would look like the tree hadn't updated at all!
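
The update protocol (copy the leaf, copy every ancestor up to the root, then switch roots) can be sketched with a small binary tree. This is an illustration only: real COW filesystems use B-trees stored in disk blocks, and the `Node` class, the "L"/"R" path string, and the dictionary standing in for the superblock are assumptions made for the example.

```python
class Node:
    def __init__(self, value=None, left=None, right=None):
        self.value, self.left, self.right = value, left, right


def cow_update(node, path, new_value):
    """Return the root of a new tree in which the leaf at `path`
    holds new_value. Nodes on the path are copied; every other
    node is shared with the old tree and never written."""
    if not path:
        return Node(new_value)                       # fresh copy of the leaf
    if path[0] == "L":
        return Node(node.value,
                    cow_update(node.left, path[1:], new_value),
                    node.right)
    return Node(node.value,
                node.left,
                cow_update(node.right, path[1:], new_value))


old_root = Node(left=Node(left=Node(3), right=Node(5)),
                right=Node(left=Node(2), right=Node(1)))
superblock = {"root": old_root}

new_root = cow_update(old_root, "RR", 6)   # change the leaf holding 1 to 6
superblock["root"] = new_root              # the one and only in-place change

print(old_root.right.right.value, new_root.right.right.value)
```

Until the last assignment, anyone reading through `superblock["root"]` sees the old tree untouched, and afterwards `old_root` still describes the previous version.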

BTRFS: COW B-Trees

A BTRFS filesystem is just a bunch of trees, where both the metadata and data are contained in blocks at the leaves, and the superblock contains the pointer to the root.

To update a file, we follow the update protocol we saw earlier, changing the tree and building a new root.

If the update fails...no data was actually changed, so we cannot possibly have inconsistencies!

If the update succeeds, we have a consistent view of the new data because the root changes in one operation!

Does the old version of the tree need to be deleted?

No! Can retain it to see what data looked like on disk previously: COW enables easy snapshots of data without having to make explicit copies!

Summary

NTFS

The New Technology Filesystem uses two new ideas to manage its data:

- Extents are contiguous ranges of disk blocks

- A Flexible Tree allows for great variance in how data is stored and tracked

Filesystem Layout

The filesystem superblock points to the inode array, data arrays, and free space management on the filesystem.

The free space tracking usually uses zoned slab allocation, because its properties mesh very well with those of the filesystem.

Filesystem Consistency

The fact that disks can fail at any time leads us to potential data loss issues. The simplest of these is consistency, i.e. the metadata on a disk should agree with itself.

The in-memory disk cache makes this worse: we need this cache for reasonable performance, but it also makes consistency harder!

Three general solutions for this problem:

fsck

Updates are made in an agreed-upon order with write-through caching.

On failure, scan the entire stinking partition to see if any inconsistencies exist.

Slow to write (because of write-through caching), slow to recover, and easy to break!

Journaling

Use transactions to record all metadata changes. Transactions are recorded in the journal.

On failure, scan the journal and fix up any partially completed transactions.

Fast recovery, but still requires that all metadata be written to disk twice (once for journal, once for realsies)

COW

Never change any data in-place, so that inconsistencies cannot arise.

To change files, make a copy of their data blocks and update the COW tree structure.

To switch to the new copy, simply change the root node in the superblock. Atomic!

Can retain old root nodes as snapshots.

Announcements!

- Reminder: Exam 2 is Wednesday, 7pm-9pm on Zoom/Gradescope

- No lecture Wednesday

- Possible exam review session Wednesday (don't count on it, we haven't found volunteers for it yet)

- No discussion sections this week

- Project 3 groups are due today, 4/6

- Project 3 is due 4/21, and the data structures are due Friday