Filesystem Fundamentals

The Story So Far

(Almost) everything we've discussed so far occurs in main memory (RAM):

- The PCB and TCB are stored in main memory.

- Virtual address spaces are mapped onto physical address spaces.

- The heap/stack/SDS are part of the process's virtual address space

RAM is nice! It's relatively speedy, and you can store a lot of stuff in there.

....but it's got a major drawback.

RAM is not persistent! If the power gets cut off, all data in main memory is lost.

Imagine running out of power on your laptop and having not only to reload Chrome, but also to reinstall the entire operating system.

The Story So Far

To solve this problem, we introduce stable storage (disks). These devices retain data even after their power has been shut off (i.e. they are persistent).

Now we can turn off the computer without losing important data!

But now we have to deal with communication between the CPU and disk, which is very different from communication with main memory!

So where are we now?

Now we've added persistent storage which is large, very slow, and order-dependent.

We need to come up with some scheme to organize and interact with this storage.

This is the filesystem.

Today's Questions

- What makes a good filesystem?

- How should applications interact with the filesystem?

- How do we organize a file on-disk?

- How can we present files in a way that makes sense to users?

Goals in Filesystem Design

What makes a filesystem good?

Speed

Remember: disk access is slow!

Unfortunately, we cannot always avoid paying the disk access cost (if we could, we wouldn't need the disk!)

But we can use lots of common systems design tricks to make sure this doesn't affect us too badly!

- Minimize required accesses

- Cache everything

- Interleave I/O with computation

Reliability

Stable storage: we can be interrupted at any point in time, and we have to be able to recover afterwards!

Our filesystem must provide tools to recover from a crash!

Otherwise we could be irrecoverably stuck in an invalid state.

Example: transferring $100 from account B to account A.

- Read account A from disk

- Read account B from disk

- Add $100 to account A

- Subtract $100 from account B

- Write new account B to disk

- Write new account A to disk

If our system crashes after writing account B but before writing account A, we've subtracted $100 from account B, but haven't added it to account A. We've destroyed $100.

We mention this here for completeness, but we won't discuss this idea in detail until next lecture.
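The lost $100 can be reproduced with a tiny simulation. This is only a sketch: the two-element array standing in for the disk and the names `transfer_b_to_a` and `total_on_disk` are made up for illustration, and the "crash" is just an early return.

```c
#include <stdbool.h>

/* Hypothetical two-account "disk": slot 0 is account A, slot 1 is account B. */
enum { ACCOUNT_A = 0, ACCOUNT_B = 1 };

/*
 * Move `amount` from B to A using the order of operations from the slide.
 * If `crash_before_write_a` is true, we simulate losing power after the
 * new B has been written back but before the new A has.
 */
void transfer_b_to_a(long disk[2], long amount, bool crash_before_write_a)
{
    long a = disk[ACCOUNT_A];   /* read account A from disk */
    long b = disk[ACCOUNT_B];   /* read account B from disk */

    a += amount;                /* these updates only exist in memory */
    b -= amount;

    disk[ACCOUNT_B] = b;        /* write new account B to disk */
    if (crash_before_write_a)
        return;                 /* power lost: the new A never reaches disk */
    disk[ACCOUNT_A] = a;        /* write new account A to disk */
}

/* Total money that the on-disk state says exists. */
long total_on_disk(const long disk[2])
{
    return disk[ACCOUNT_A] + disk[ACCOUNT_B];
}
```

Starting from two accounts holding $500 each, a normal transfer keeps the on-disk total at $1000, while the crashing transfer leaves A at $500 and B at $400.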

Usability

The filesystem consists of raw block numbers. User programs are responsible for keeping track of which blocks they use, and for making sure they don't overwrite other program's blocks.

If the filesystem isn't easy to use, users will try to implement their own filesystem (usually poorly)

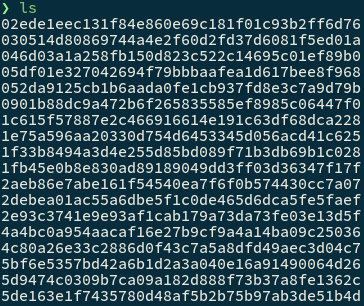

For example, the following filesystem is easy to implement, and is as fast and as consistent as the user chooses to make it:

Nobody is going to use this. They'll build their own.

Usability

A file is named by a hash of its contents. All files are in the root directory. Filenames cannot be changed.

Who in their right mind is going to use this??

Filesystem: Is it good?

When analyzing a potential filesystem design, we'll use these three criteria to determine how much we like it:

Speed: How fast is this design? How many disk accesses do we need in order to perform common operations?

Reliability: Can this design become corrupted if the computer fails (e.g. through sudden power loss), or if small pieces of data are damaged? Can it be recovered? How fast is the recovery procedure?

Usability: Will this design cause application programmers to tear their hair out? Does it require some deep knowledge of the system or is that abstracted away?

Today, we mostly focus on speed and usability. We'll talk about reliability next time!

An Aside: Bits, Bytes, Sectors, and Blocks

Last Time: Sectors

Sector: the smallest unit that the drive can read, usually 512 bytes.

This Time: Blocks

Block: the smallest unit in which software (usually) accesses the disk. A block is an integer number of contiguous sectors.

Imperfect Analogy

| | Memory | Disk |
|---|---|---|
| Smallest unit of data | bit | bit |
| Smallest unit addressable by hardware | byte | sector |
| Smallest unit usually used by software | machine word | block |

[Figure: consecutive sectors (Sector 0, Sector 1, Sector 2, ...) grouped into Block 1 and Block 2]
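Because a block is a whole number of contiguous sectors, converting between the two is plain arithmetic. A minimal sketch follows, assuming 512-byte sectors, 8 sectors per block, and 0-based numbering for both; these constants and the function names are illustrative choices, not values from the lecture.

```c
#include <stdint.h>

/* Assumed sizes for illustration: 512-byte sectors, 8 sectors per block. */
#define SECTOR_SIZE        512u
#define SECTORS_PER_BLOCK  8u
#define BLOCK_SIZE         (SECTOR_SIZE * SECTORS_PER_BLOCK)   /* 4096 bytes */

/* First sector that belongs to the given block. */
uint64_t block_first_sector(uint64_t block)
{
    return block * SECTORS_PER_BLOCK;
}

/* Last sector that belongs to the given block. */
uint64_t block_last_sector(uint64_t block)
{
    return block_first_sector(block) + SECTORS_PER_BLOCK - 1;
}

/* Block that contains the given sector. */
uint64_t sector_to_block(uint64_t sector)
{
    return sector / SECTORS_PER_BLOCK;
}
```

With these assumptions, block 3 covers sectors 24 through 31, and a software request for one block turns into a request for eight adjacent sectors.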

File Design And Layout

Files Are Stored as Data and Metadata

Metadata: the file header contains information that the operating system cares about: where the file is on the disk, and attributes of the file.

Examples: file owner, file size, file permissions, creation time, last modified time, location of data blocks.

Metadata for all files is stored at a fixed location (something known by the OS) so that it can be accessed easily.

Data is the stuff the user actually cares about. It consists of sectors of data placed on disk.

[Figure: META, DATA, DATA] Logical layout of a file (not necessarily how it's placed on disk!)

For now, we'll focus on how the file data is laid out on disk.

Assume we already know where the metadata is.

What sorts of files do we need to care about? Are most files large or small? Is most storage space used by large or small files? Do we need to worry about unusual files?

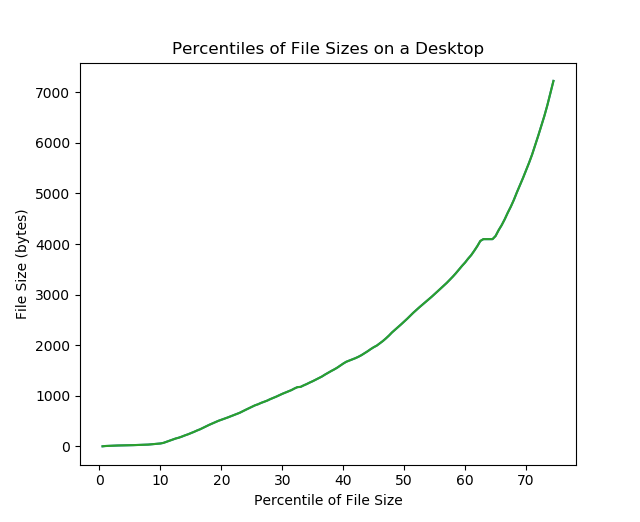

To answer these questions illustratively, I listed all the files on my desktop and ordered them by size, then plotted. The plot on the next slide shows percentile of file size. E.g. to find out the median file size, find the 50 point on the x-axis.

These systems are mine, but the trends we see tend to be true across a wide variety of systems.

Evaluating File Layouts

Speed and Usability

Most files on a computer are small!

- Figures to the right are from my desktop

- 25% of files are smaller than 800 bytes

- 50% of files are smaller than 2.5 kilobytes

So we should have good support for lots of small files!

Most disk space is used by large files.

The user probably cares about accessing large files (they might be saved videos, or databases), so large file access shouldn't be too slow!

Evaluating File Layouts

Knowing the common patterns among files, we can now lay out several properties that would be nice to have in a filesystem.

- Fast access to small files

- Reasonably efficient access to large files

- Limit fragmentation (wasted space!)

  - We care about both internal and external fragmentation

- Allow files to grow past their initial size

- Allow random and sequential access (at decent speeds!)

We have to allocate some of these data blocks to hold a file.

How do we choose?

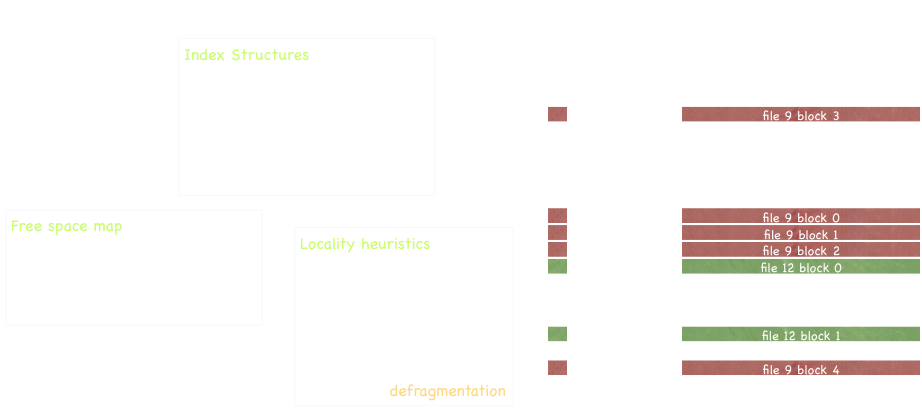

Contiguous Allocation

- Files are allocated as a contiguous (adjacent) set of blocks.

- The only location information needed in the file header is the first block and the size.

- How do we keep track of free blocks and allocate them to files?

Contiguous Allocation: Access

How many disk reads do we need to access a particular block?

We start knowing the block # of the appropriate file header

We have enough space in memory to store two blocks worth of data

Everything else has to be requested from disk.

The request must be in the form of a block#. E.g. we can request "read block 27", but we cannot request "read next block" or "read next file"

Aside: why only two memory spaces?

This is all just a game. In an actual system, you'll have millions of spots in memory to store billions of pages. This makes it very difficult to answer questions like "is this algorithm good for ____" because there are so many pieces to keep track of.

By simplifying down to two spots in memory, we make it easy to immediately see the impact that different file layouts have on access speed. This is not terribly realistic, but you can repeat these exercises with more memory slots to see what things would look like in a large system.

Note: Animation slides have been deleted in this version of the slides--see in-class version for a step-by-step breakdown.

Contiguous Allocation: Access

How many disk reads to access the first data block?

(We always start with the block# of the file header)

- Read header block

- Find address of first data block

- Read first data block

That's two disk reads: one for the header and one for the data block.

Contiguous Allocation: Access

What if I want to do random access?

Let's say I want to read only the third block.

- Read header block

- Find address of first data block

- Add two to this address

- Read third data block

The random access trick only works because allocation is contiguous: we KNOW that if the first block is at N, the third block is at N+2.

In later schemes, we'll see that this doesn't work nearly as well. In fact, contiguous allocation has the best performance for both sequential and random access. It's the other properties of this design that are problematic.
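The N+2 arithmetic generalizes to any block index. Here is a minimal sketch, assuming a hypothetical header that holds only the first block number and the block count; the struct and function names are invented for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical header for contiguous allocation: a start block and a length. */
struct contiguous_header {
    uint32_t first_block;   /* block number of the file's first data block */
    uint32_t block_count;   /* number of data blocks in the file */
};

/*
 * Compute the disk block that holds the file's index-th data block
 * (0-based, so the "third block" is index 2). Returns false if the index
 * is past the end of the file. Once the header is in memory this needs no
 * further disk access: it is pure arithmetic.
 */
bool contiguous_lookup(const struct contiguous_header *h,
                       uint32_t index, uint32_t *block_out)
{
    if (index >= h->block_count)
        return false;
    *block_out = h->first_block + index;
    return true;
}
```

For a five-block file starting at block 40, the third block (index 2) is block 42, so random access costs the same two disk reads as reading the first block.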

Contiguous Allocation: Assessment

This method is very simple (this is good!)

How fast is sequential access? Great.

How fast is random access? Great.

What if we want to grow a file? If another file is in the way, we have to move and reallocate.

How bad is fragmentation? Lots of external.

How can we get around these problems?

Linked Allocation

- The file is stored as a linked list of blocks.

- In the file header, keep a pointer to the first and last block allocated.

- In each block, keep a pointer to the next block.

The observant reader might wonder why we keep a pointer to the last block. After all, we don't have reverse-pointers (the list is singly-linked), so it can't help us traverse the linked list. It seems that the only thing that it helps us do is get the last block of the file.

In fact, this is exactly what we want! When growing the file, we need to get the last block of the file so that we can link another block to the end of it--the last-block pointer lets us grab that block quickly instead of having to scan the entire list.

Linked Allocation: Access

How many disk reads to access the first block?

(We always start with the block# of the file header)

- Read header block

- Find address of first block

- Read first block

What if I want to read the second block from here? (sequential access)

Linked Allocation: Access

How many disk reads to access the second block if we have already read the first block?

- Read header block (already done)

- Find address of first block (already done)

- Read first block (already done)

- Find address of second block using data in first block

- Read second block

Only one additional disk read is needed.

Linked Allocation: Access

Random Access

How many disk reads to access the third block without having read anything?

(We always start with the block# of the file header)

- Read header block

- Find address of first block (oh no)

- Read first block

- Find address of second block in first block (oh no oh no)

- Read second block

- Find address of third block in second block (auuugh)

- Read third block

That's four disk reads just to reach the third block.
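The pointer chasing can be sketched as a loop that counts disk reads. Everything here is an assumption made for illustration: the in-memory array standing in for the disk, the `NO_BLOCK` end marker, the 512-byte block layout, and the names `linked_block`, `linked_header`, and `linked_lookup`.

```c
#include <stdint.h>

#define NO_BLOCK UINT32_MAX       /* assumed end-of-file marker */

/* Hypothetical on-disk block: a next pointer followed by file data. */
struct linked_block {
    uint32_t next;                /* block number of the next block, or NO_BLOCK */
    char     payload[508];
};

/* Hypothetical file header for linked allocation. */
struct linked_header {
    uint32_t first;               /* first block of the file */
    uint32_t last;                /* last block of the file (used when growing) */
};

/*
 * Return the block number of the file's index-th block (0-based) and store
 * in *reads the number of disk reads needed to fetch it: one for the header,
 * one for every block we must read to learn its next pointer, and one for
 * the target block itself. Returns NO_BLOCK if the file is too short.
 */
uint32_t linked_lookup(const struct linked_block *disk,
                       const struct linked_header *h,
                       uint32_t index, unsigned *reads)
{
    *reads = 1;                                   /* read the header block */
    uint32_t cur = h->first;
    for (uint32_t i = 0; i < index && cur != NO_BLOCK; i++) {
        cur = disk[cur].next;                     /* must read block to find next */
        (*reads)++;
    }
    if (cur != NO_BLOCK)
        (*reads)++;                               /* read the target block */
    return cur;
}
```

For a file stored in blocks 7, 2, 5 (in that order), reaching index 2 reports four reads, matching the walk above, while index 0 needs only two.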

Linked Allocation: Assessment

How fast is sequential access? Is it always good? Generally good.

How bad is fragmentation? Only internal, maximum of 1 block.

What if we want to grow the file? No problems

How fast is random access? Functionally sequential access to the Nth block.

What happens if a disk block becomes corrupted? We can no longer access subsequent blocks.

Example: FAT File System

File Allocation Table (FAT)

Originated at Microsoft in the late 70s and became the standard filesystem of MS-DOS

Descendants include FATX and exFAT

A very simple filesystem which is used in lots of places, like SD cards, flash drives, and the Xbox console series.

With the appropriate resources, you could probably implement a program to read a FAT filesystem in a few weeks.

I really do mean "you" and not "some hypothetical programmer." Implementing a FAT filesystem is a surprisingly common project in undergraduate courses across the US.

FAT File System

Advantages

- Simple!

- Universal: every OS supports it (probably due to that first point)

Disadvantages

- Poor random access

  - Requires sequential traversal of linked blocks

- Limited access control

  - No file owner or group ID

  - Any user can read/write any file

- No support for hard links

- Volume and file size are limited

  - Example: FAT32 stores sector counts and file sizes in 32-bit fields

  - With 512-byte sectors, a 32-bit sector count means the filesystem cannot be larger than 2TB

  - A 32-bit file size means individual files cannot be larger than 4GB

- No support for transactional updates (more on this next time)

Direct Allocation

File header points to each data block directly (that's it!)

Direct Allocation: Assessment

How fast is sequential access? How about random access?

How bad is fragmentation?

What if we want to grow the file?

Does this support small files? How about large files?

What if other file metadata takes up most of the space in the header?

What do we do about large files?
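One way to think about these questions is to write the header down. This is a sketch under assumed numbers (4 KiB blocks, room for 12 block pointers in the header); the struct and function names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define BLOCK_SIZE      4096u   /* assumed block size */
#define DIRECT_POINTERS 12u     /* assumed number of pointers that fit in the header */

/* Hypothetical file header for direct allocation. */
struct direct_header {
    uint32_t size_bytes;                /* current file size */
    uint32_t blocks[DIRECT_POINTERS];   /* block number of each data block */
};

/* Largest file this header can describe. */
uint32_t direct_max_file_size(void)
{
    return DIRECT_POINTERS * BLOCK_SIZE;
}

/*
 * Map a byte offset in the file to the disk block that holds it.
 * With the header in memory, any block is one array lookup away, so
 * sequential and random access cost the same. Returns false if the offset
 * is past the end of the file.
 */
bool direct_lookup(const struct direct_header *h,
                   uint32_t offset, uint32_t *block_out)
{
    if (offset >= h->size_bytes || offset >= direct_max_file_size())
        return false;
    *block_out = h->blocks[offset / BLOCK_SIZE];
    return true;
}
```

Under these assumptions the largest possible file is 12 × 4096 = 49152 bytes, and every other piece of metadata stored in the header leaves room for fewer pointers, which is exactly the large-file problem.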

One possible solution for large files is to allow file headers to be variable-sized.

This is a bad idea! With fixed-size file headers, it's possible to use indexing arithmetic to get the block of the current header, e.g. header 27 is located at FILE_HEADERS_START + sizeof(header) * 27.

If you decide to switch to variable-sized headers, you have to scan the file headers from the beginning every time you want to access a header, e.g. accessing header 27 will take at least 27 disk accesses.

Disk access is expensive. Requiring 1 million disk accesses to even find out where the millionth file is located is going to be slow.
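The indexing arithmetic for fixed-size headers looks roughly like this. The block size, header size, and starting block below are assumptions chosen so that the numbers are easy to follow, and `locate_header` is a hypothetical helper.

```c
#include <stdint.h>

#define BLOCK_SIZE          4096u  /* assumed block size */
#define HEADER_SIZE         128u   /* assumed fixed header size (divides BLOCK_SIZE) */
#define FILE_HEADERS_START  8u     /* assumed block where the header array begins */

/* Where a header lives on disk. */
struct header_location {
    uint64_t block;    /* disk block containing the header */
    uint32_t offset;   /* byte offset of the header within that block */
};

/*
 * Locate header number `header_index` with arithmetic alone:
 * no scanning and no disk access is needed to find out where it is.
 */
struct header_location locate_header(uint32_t header_index)
{
    uint64_t byte_offset = (uint64_t)header_index * HEADER_SIZE;
    struct header_location loc;
    loc.block  = FILE_HEADERS_START + byte_offset / BLOCK_SIZE;
    loc.offset = (uint32_t)(byte_offset % BLOCK_SIZE);
    return loc;
}
```

With these values, header 27 sits at byte 3456 of block 8, so fetching it costs exactly one disk read no matter how many headers come before it.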

User-level Organization

Or: All About Directories

The Story So Far

We know how to get the data associated with a file if we know where its metadata (file header) is. We also know how to identify file headers (by their index in the file header array).

Great! Are we done? Imagine the following instructions:

To edit your shell configuration, open file 229601, unless you have Microsoft Word installed, in which case you need to edit file 92135113

(╯°□°)╯︵ ┻━┻

We don't have the ability to name files right now. Which of our three main goals is this a failure in?

We still don't have human-readable names or organization!

This is a big usability issue.

The Simpleton's Guide to File Organization

Use one name space for the entire disk.

- Use a special area of the disk to hold the directory info.

- Directory info consists of <filename, inode #> pairs.

- If one user uses a name, nobody else can.

| File Name | inode number |
|---|---|
| .user1_bashrc | 27 |
| .user2_bashrc | 30 |
| firefox | 3392 |
| .bob_bashrc | 7 |

Is this a good scheme?

Early computers, which were single-user and had very little stable storage, used variants on this scheme. They were quickly replaced.

The Simpleton's Guide to File Organization: Part 2

- Simple improvement: make a separate "special area" for each user.

- Now files only have to be uniquely named per-user.

(Yeah, it's not that great of an improvement)

user1's Directory

| File Name | inode number |
|---|---|
| .bashrc | 30 |
| Documents | 173 |

user2's Directory

| File Name | inode number |
|---|---|
| .bashrc | 391 |
| failed_projects | 8930 |
| zsh | 3392 |

Introducing: Directories

Directories are files that contain mappings from file names to inode numbers.

- The "special reserved area" we saw previously was an example of a directory, albeit a very primitive one.

- The inode number is called the inumber.

- Only the OS can modify directories

  - Ensures that mappings cannot be broken by a malicious user (referee role)

  - User-level code can read directories

- Directories create a name space for files (file names within the same directory have to be unique, but files in different directories can share a name)

Introducing: Directories

Note: the inumber (the index of the inode in the inode array, written "i#") in a directory entry may refer to another directory!

The OS keeps a special bit in the inode to determine if the file is a directory or a normal file.

There is a special root directory (usually inumber 0, 1, or 2).

Example directory with 16B entries (2B inumber, 14B filename):

| i# | Filename |
|---|---|
| 3226 | .bashrc |
| 251 | Documents |
| 7193 | pintos |
| 2086 | todo.txt |
| 1793 | Pictures |
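A 16-byte entry with a 2-byte inumber and a 14-byte name maps directly onto a C struct. The sketch below is illustrative only: the names `dir_entry` and `dir_lookup` are invented, and treating inumber 0 as "unused slot" is an assumption.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define NAME_FIELD_LEN 14

/* One 16-byte directory entry: 2-byte inumber plus 14-byte filename. */
struct dir_entry {
    uint16_t inumber;               /* assumption: 0 marks an unused slot */
    char     name[NAME_FIELD_LEN];  /* NUL-padded; no terminator if exactly 14 chars */
};

_Static_assert(sizeof(struct dir_entry) == 16, "entry should be 16 bytes");

/*
 * Scan a directory's entries for `name`.
 * Returns the matching inumber, or 0 if the name is not present.
 */
uint16_t dir_lookup(const struct dir_entry *entries, size_t count,
                    const char *name)
{
    if (strlen(name) > NAME_FIELD_LEN)
        return 0;                   /* cannot be stored in a 14-byte field */
    for (size_t i = 0; i < count; i++) {
        if (entries[i].inumber != 0 &&
            strncmp(entries[i].name, name, NAME_FIELD_LEN) == 0)
            return entries[i].inumber;
    }
    return 0;
}
```

Looking up "pintos" in the example directory returns 7193. Note that a 2-byte inumber caps the filesystem at 65536 inodes, which is one reason real entry formats grew larger.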

So...how do you find the data of a file?

To find the data blocks of a file, we need to know where its inode (file header) is.

To find an inode (file header), we need to know its inumber.

To find a file's inumber, read the directory that contains the file.

The directory is just a file, so we need to find its data blocks.

There's an infinite loop in here...or is there?

There is actually not an infinite loop here.

The data blocks of the file at the beginning and the data blocks of the file at the end are *not* actually from the same file! One is the file we're trying to read, and one is the directory containing that file.

However, at some point, we're still going to need to have a way to find a file without needing to read its directory, else we will never be able to look up data blocks.

We can break the loop by agreeing on a fixed inumber for a special directory.

It should be possible to reach every other file in the filesystem from this directory.

This is the root directory. On UNIX, it is called "/"

On most UNIX systems, the root directory is inumber 2

int config_fd = open("/home/user1/.bashrc", O_RDONLY);

How does the filesystem service this syscall?

In previous examples, we started knowing the inumber of the file to read. This time, we don't have that.

But we do have....what?

- Read inode 2 (the root inode)

- Use the inode to locate the data in the root directory

- Read the data in the root directory

- See that our next path target is "home", which has i# = 11

- Read inode 11 (the inode for "/home")

- Use the inode to locate the data in the "/home" directory

- Read the data in the "/home" directory

- See that our next path target is "user1", which has i# = 6

- Read inode 6 (the inode for "/home/user1")

- Use the inode to locate the data for "/home/user1"

- Read the data for "/home/user1"

- See that our final path target is ".bashrc", which has i# = 23. We know what inode the file is at now!

Contents of Block #537 (the data block of inode 6):

| i# | Filename |
|---|---|
| 273 | Documents |
| 94 | .ssh |
| 2201 | .bash_profile |
| 23 | .bashrc |
| 61 | .vimrc |

That's a lot of reads!

How many disk reads was that?

Each "Read" step in the walk above is a disk access: three inode reads (inodes 2, 11, and 6) and three reads of directory data.

6 disk reads just to open the file

We didn't even try to read anything out of the file. That was just an open() call!
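The walk can be written as a loop over path components. The following is a simplified simulation rather than real kernel code: the "disk" is an in-memory inode array, each directory's data is assumed to fit in one block, and all struct and function names are hypothetical. The only fixed knowledge is the root inumber.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define ROOT_INUMBER 2u   /* agreed-upon inumber of "/" */
#define MAX_ENTRIES  8

struct dir_entry { uint32_t inumber; const char *name; };
struct dir_block { size_t count; struct dir_entry entries[MAX_ENTRIES]; };

/* Simulated inode: dir_data is NULL for anything that is not a directory. */
struct inode { const struct dir_block *dir_data; };

/* Simulated disk that counts every read issued against it. */
struct disk { const struct inode *inodes; size_t inode_count; unsigned reads; };

static const struct inode *read_inode(struct disk *d, uint32_t inumber)
{
    if (inumber >= d->inode_count)
        return NULL;
    d->reads++;                          /* one disk read for the inode */
    return &d->inodes[inumber];
}

static const struct dir_block *read_dir_data(struct disk *d, const struct inode *ino)
{
    d->reads++;                          /* one disk read for the directory data */
    return ino->dir_data;
}

/* Resolve an absolute path to an inumber. Returns 0 if resolution fails. */
uint32_t resolve_path(struct disk *d, const char *path)
{
    if (path[0] != '/')
        return 0;
    uint32_t cur = ROOT_INUMBER;
    const char *p = path + strspn(path, "/");
    while (*p != '\0') {
        size_t len = strcspn(p, "/");    /* length of the next path component */
        const struct inode *ino = read_inode(d, cur);
        if (ino == NULL || ino->dir_data == NULL)
            return 0;                    /* missing inode or not a directory */
        const struct dir_block *dir = read_dir_data(d, ino);
        uint32_t next = 0;
        for (size_t i = 0; i < dir->count; i++) {
            const char *name = dir->entries[i].name;
            if (strlen(name) == len && memcmp(name, p, len) == 0) {
                next = dir->entries[i].inumber;
                break;
            }
        }
        if (next == 0)
            return 0;                    /* component not found */
        cur = next;
        p += len;
        p += strspn(p, "/");             /* skip the separator(s) */
    }
    return cur;
}
```

Populating inodes 2, 11, and 6 with the directory contents from the walk and resolving "/home/user1/.bashrc" yields inumber 23 with the read counter at 6: two reads per path component.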

Simple optimization: cwd

Maintain the notion of a per-process current working directory.

Users can specify files relative to the CWD

We can't avoid this disk access...

OS caches the data blocks of CWD in the disk cache (or in the PCB of the process) to avoid having to do repeated lookups.

What We Have

We now know how to do the following:

- Given a file header, find the data blocks of the file

- Given a file header, extend the file (if necessary)

- Find a file header from a human-readable path

But we don't want the user to have to know how to do all this!

OS job: illusionist. Hide this complexity behind an interface.

More Usability

The UNIX Filesystem API

Armed with what we know about files and directories, let's take a look at the classic UNIX Filesystem API.

You already have an idea of what some of these functions do. Let's look at how some of them are implemented.

But before we dive into it, we need to look at one last piece of the API and how it behaves...

Filesystem API Structures

Think about the following scenario:

- Process A opens file F

- Process A reads 5 bytes from F

- Process B opens file F

- Process A reads 5 bytes from F

- Process B reads 5 bytes from F

Process A should get bytes 5-9 of the file

Process B should get bytes 0-4 of the file

Suggests that we need two different types of "files": one to track a file-in-use, and one to track a file-on-disk.
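The scenario can be replayed on a POSIX system. In this sketch two file descriptors in one process stand in for processes A and B, since each open() call gets its own file position; the helper name `replay_scenario`, the temporary file path, and the file contents "0123456789" are all made up for the demonstration.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <stdlib.h>
#include <unistd.h>

/*
 * Replays the scenario with two descriptors standing in for A and B.
 * On success returns 0 and fills a_second with what A's second read
 * returned and b_first with what B's first read returned (both
 * NUL-terminated). Returns -1 on any error.
 */
int replay_scenario(char a_second[6], char b_first[6])
{
    char path[] = "/tmp/offset_demo_XXXXXX";
    char scratch[5];
    int result = -1;

    /* Create file F containing ten known bytes. */
    int setup_fd = mkstemp(path);
    if (setup_fd < 0)
        return -1;
    if (write(setup_fd, "0123456789", 10) != 10) {
        close(setup_fd);
        unlink(path);
        return -1;
    }
    close(setup_fd);

    int fd_a = open(path, O_RDONLY);                   /* A opens F */
    int fd_b = -1;
    if (fd_a >= 0 && read(fd_a, scratch, 5) == 5) {    /* A reads 5 bytes */
        fd_b = open(path, O_RDONLY);                   /* B opens F */
        if (fd_b >= 0 &&
            read(fd_a, a_second, 5) == 5 &&            /* A reads 5 bytes */
            read(fd_b, b_first, 5) == 5) {             /* B reads 5 bytes */
            a_second[5] = '\0';
            b_first[5] = '\0';
            result = 0;
        }
    }

    if (fd_a >= 0) close(fd_a);
    if (fd_b >= 0) close(fd_b);
    unlink(path);
    return result;
}
```

A's second read continues from its own position (bytes 5-9) while B's first read starts at byte 0, even though both descriptors refer to the same file on disk.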

Filesystem API Structures

Example request: "Open /var/logs/installer.log"

| Where it lives | What lives there |
|---|---|
| User Memory | File Descriptor |
| Per-Process Memory | Open File Tracker (struct file) |
| Global System Memory | On-Disk File Tracker (struct inode) |
| On Disk | On-Disk Data |

The user gets the file descriptor and interacts with everything else via system calls (since they can't directly edit system memory). The OS is responsible for updating the structures behind the syscall boundary.

open()

Creates in-memory data structures used to manage open files. Returns a file descriptor to the caller.

open(const char* name, enum mode);

On open(), the OS needs to:

- Check if the file is already opened by another process. If it is not:

  - Find the file

  - Copy information into the system-wide open file table

- Check protection of file against requested mode. If not allowed, abort syscall.

- Increment the open count (number of processes that have this file open).

- Create an entry in the process's file table pointing to the entry in the system-wide file table.

- Initialize the current file pointer to the start of the file.

- Return the index into the process's file table.

  - This index is used for subsequent read, write, seek, etc. operations.

struct file {
    struct file_header* metadata; // points to the file's header (inode)
    file_offset pos;              // current position within the file
    int file_mode;                // e.g. "r" or "rw"
};

close()

Close the file.

close(int fd);

On close(), the OS needs to:

- Remove the entry for the file in the process's file table

- Decrement the open count in the system-wide file table

- If the open count is zero, remove the entry from the system-wide file table
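The bookkeeping for open() and close() can be sketched with two small tables. This is a toy model, not how any particular kernel lays things out: path lookup and permission checks are omitted, files are identified by inumber, and the table sizes and names (`sketch_open`, `sketch_close`, and the structs) are invented.

```c
#include <stddef.h>
#include <stdint.h>

#define MAX_SYSTEM_FILES  16
#define MAX_PROCESS_FILES 8

/* System-wide table entry: one per file that anyone has open. */
struct system_file {
    uint32_t inumber;      /* which on-disk file this entry describes */
    int      open_count;   /* how many opens refer to it; 0 means free slot */
};

/* Per-process table entry: one per successful open(). */
struct process_file {
    struct system_file *file;   /* NULL means this descriptor is unused */
    uint64_t            pos;    /* current file pointer for this open */
};

struct system_table  { struct system_file  entries[MAX_SYSTEM_FILES]; };
struct process_table { struct process_file entries[MAX_PROCESS_FILES]; };

/* open(): returns a file descriptor (index into the process table) or -1. */
int sketch_open(struct system_table *sys, struct process_table *proc,
                uint32_t inumber)
{
    struct system_file *shared = NULL, *free_slot = NULL;
    for (size_t i = 0; i < MAX_SYSTEM_FILES; i++) {
        struct system_file *e = &sys->entries[i];
        if (e->open_count > 0 && e->inumber == inumber) { shared = e; break; }
        if (e->open_count == 0 && free_slot == NULL) free_slot = e;
    }
    if (shared == NULL) {               /* not open anywhere yet */
        if (free_slot == NULL)
            return -1;                  /* system-wide table is full */
        shared = free_slot;
        shared->inumber = inumber;      /* copy file info into the table */
    }
    for (int fd = 0; fd < MAX_PROCESS_FILES; fd++) {
        if (proc->entries[fd].file == NULL) {
            proc->entries[fd].file = shared;
            proc->entries[fd].pos = 0;  /* start at the beginning of the file */
            shared->open_count++;
            return fd;
        }
    }
    return -1;                          /* process table is full */
}

/* close(): returns 0 on success, -1 if fd is not an open descriptor. */
int sketch_close(struct process_table *proc, int fd)
{
    if (fd < 0 || fd >= MAX_PROCESS_FILES || proc->entries[fd].file == NULL)
        return -1;
    proc->entries[fd].file->open_count--;   /* reaching 0 frees the system slot */
    proc->entries[fd].file = NULL;          /* remove the per-process entry */
    return 0;
}
```

Two processes opening the same inumber end up sharing one system-wide entry with an open count of 2, but each keeps its own `pos`, which is what makes the earlier A/B scenario work.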

read()

Read designated bytes from the file

read(int fd, void* buffer, size_t num_bytes);

On read(), the OS needs to:

- Determine which blocks correspond to the requested reads, starting at the current position and ending at current position + num_bytes (note: where is current position stored?)

- Dispatch disk reads to the appropriate sectors

- Place the read results into the buffer pointed at by buffer
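The first step, working out which blocks a read touches, is integer division on the current position. A minimal sketch, assuming 4 KiB blocks and a hypothetical helper named `blocks_for_read`:

```c
#include <stdint.h>

#define BLOCK_SIZE 4096u   /* assumed block size */

/* Inclusive range of file-relative block indices. */
struct block_range {
    uint64_t first;
    uint64_t last;
};

/*
 * Which blocks of the file does a read of `num_bytes` starting at byte
 * position `pos` touch? Requires num_bytes > 0. The result is in terms of
 * "the file's Nth block"; the file header then maps each index to a disk block.
 */
struct block_range blocks_for_read(uint64_t pos, uint64_t num_bytes)
{
    struct block_range r;
    r.first = pos / BLOCK_SIZE;
    r.last  = (pos + num_bytes - 1) / BLOCK_SIZE;   /* last byte actually read */
    return r;
}
```

A 200-byte read at position 4000 straddles a block boundary and therefore needs blocks 0 and 1, while a 4096-byte read at position 4096 fits entirely in block 1.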

create()

Creates a new file with some metadata and a name.

create(const char* filename);

On create(), the OS will:

- Allocate disk space (check quotas, permissions, etc).

- Create metadata for the file in the file header, such as name, location, and file attributes

- Add an entry to the directory containing the file

link()

Creates a hard link: a user-friendly name for some underlying file.

link(const char* old_name, const char* new_name);

This new name points to the same underlying file!

On link(), the OS will:

- Add the entry to the directory with a new name

- Increment counter in file metadata that tracks how many links the file has

unlink()

Removes an existing hard link.

unlink(const char* name);

The OS decrements the number of links in the file metadata. If the link count is zero after unlink, the OS can delete the file and all its resources.

To delete a file, the OS needs to:

- Find directory containing the file

- Remove the file entry from the directory

- Clear file header

- Free disk blocks used by file and file headers
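The interaction between link() and unlink() comes down to a counter in the file's metadata. A toy sketch follows, with the directory updates left out and with invented names (`inode_sketch`, `sketch_link`, `sketch_unlink`):

```c
#include <stdbool.h>
#include <stdint.h>

/* Just the part of the file metadata that link() and unlink() touch. */
struct inode_sketch {
    uint32_t link_count;   /* number of directory entries naming this file */
    bool     allocated;    /* false once the header and data blocks are freed */
};

/* link(): a new directory entry now names this inode. */
void sketch_link(struct inode_sketch *ino)
{
    ino->link_count++;
}

/*
 * unlink(): one directory entry naming this inode has been removed.
 * Returns true if that was the last name, in which case the file's
 * resources are released.
 */
bool sketch_unlink(struct inode_sketch *ino)
{
    if (ino->link_count == 0)
        return false;                 /* nothing left to unlink */
    ino->link_count--;
    if (ino->link_count == 0) {
        ino->allocated = false;       /* clear header, free data blocks */
        return true;
    }
    return false;
}
```

A file created with one name and then linked once survives the first unlink and is only deleted by the second, which is why removing a name and deleting a file are different operations.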

Other Common Syscalls

- write() is like read(), but copies the buffer to the appropriate disk sectors

- seek() updates the current file pointer

- fsync() does not return (blocks) until all data is written to persistent storage. This will be important for consistency.

Does write() require us to access the disk?

How about seek()?

A. Yes, Yes

B. Yes, No

C. No, Yes

D. No, No

Summary

Without persistent storage, computers are very annoying to use.

Persistent storage requires a different approach to organizing and storing data, due to differences in its behavior (speed, resilience, request ordering). This leads naturally to the idea of a file system.

When designing filesystems, we care about three properties:

- Speed

- Reliability

- Usability

We should use these three properties to guide our design choices.

Use of the filesystem involves the filesystem API, in-memory bookkeeping structures, and the structure of data on disk. All three need to be considered when designing a filesystem.