GPT3

How do you get Creativity from Determinism?

Tokens

Transformers

Creativity

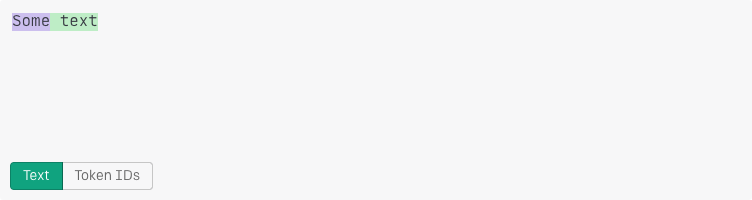

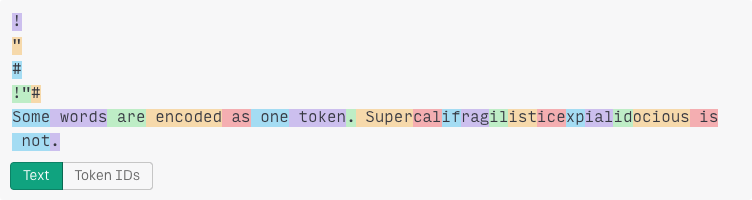

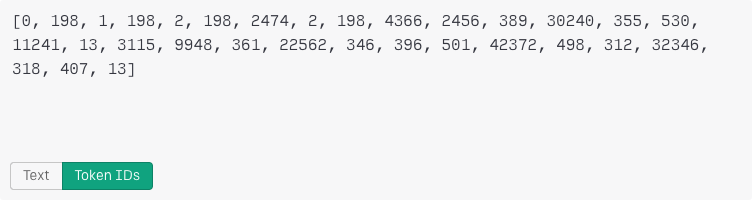

TOKENS

"Some"

4366

token

"Some words are encoded as one token."

[4366, 2456, 389, 30240, 355, 530, 11241, 13]

tokenise

TRANSFORMERS

tokenise("Once upon a")

token(" time")model

tokenise("Once upon a" + " time")token(",")model

Response length

Run for n cycles

Stop Sequences

Run until the last n tokens match one of the stop sequences

DETERMINISTIC

creativity

tokenise("Once upon a")

token("time")model

tokenise("Once upon a")(token(" time"), logprob("96.45%"))

model

tokenise("Once upon a")

[(token(" time"), logprob("96.45%")),

(token(" Time"), logprob("0.67%")),

(token(" midnight"), logprob("0.31%")),

...]

model

StartS with a thorough understanding of expectations

tokenise("Once upon a" + " midnight")token("dreary")model

TEMPERATURE

The likelihood that a lower probability token will have its relative probability increased.

Top P

Only consider the top n most likely tokens, having a cumulative probability of p.

SELECTS THE LESS WELL-TRODDEN PATH

Best of

Generate m sequences of n tokens, then select the sequence of tokens with the highest probability.

Generates a few ideas and picks the best

CONCLUSIONS

The models, despite their vast complexity, are deterministic

Creativity starts with a comprehensive understanding of expectations

It then chooses to take a less well trodden path

Repeating this process requires more effort but can improve the result, by ranking the ideas and selecting the best

Thanks

Read more

https://blog.scottlogic.com/cprice/

watch the COMPUTERPHILE EPISODE on AI LANGUAGE MODELS & TRANSFORMERS

https://www.youtube.com/watch?v=rURRYI66E54