OpenAI

If you (can) example it,

they will come

(& eat your lunch)

T&Cs

- The demos you are about to see have not yet (nor will they ever...) been approved for launch by OpenAI.

- This session must not be recorded.

- Everyone watching this stream works for the same organisation.

The

OpenAI API

is a service provided by

OPENAI

which is powered by A MODEL CALLED

GPT-3

which was fine-tuned to produce

CODEX

which is the model powering

Copilot

which is a service provided by

GitHub

which is owned by

Microsoft

who are significant investors in

OpenAI

AND HAVE GROWING

INTENTIONS TO ACQUIRE THEM

- Background to using OpenAI

- Obligatory demos

- Building a terrible, but revealing, version of Copilot

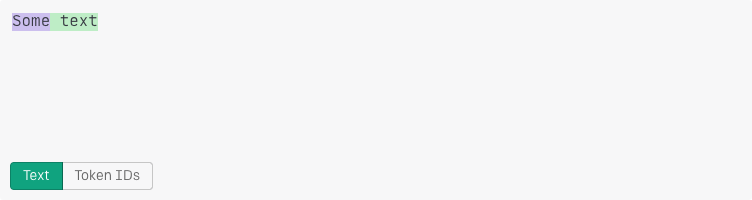

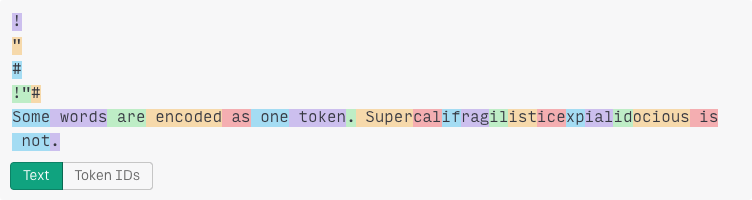

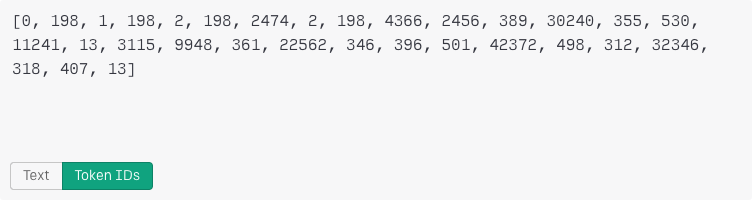

TOKENS

Demo

TRANSFORMERS

tokenise("Once upon a")

tokenise(" time")

tokenise("Once upon a" + " time")

tokenise(",")
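The sequence above can be read as a loop: the model sees the text so far, emits one more token, and the result is fed back in. Below is a minimal sketch of that loop. The lookup table `NEXT_PIECE` and the helpers `predict_next` and `generate` are hypothetical stand-ins invented for illustration; a real model predicts token ids, not strings.

```python
# Toy stand-in for a language model: maps the text so far to the next piece.
# This table is invented for illustration only.
NEXT_PIECE = {
    "Once upon a": " time",
    "Once upon a time": ",",
}


def predict_next(text):
    """Return the hypothetical next piece for `text`, or None if unknown."""
    return NEXT_PIECE.get(text)


def generate(prompt, cycles):
    """Append one predicted piece per cycle, feeding the result back in."""
    text = prompt
    for _ in range(cycles):
        piece = predict_next(text)
        if piece is None:
            break  # the toy table has nothing more to say
        text += piece
    return text


print(generate("Once upon a", 2))
```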

Response length

Run for n cycles

Stop Sequences

Run until the last n tokens match one of the stop sequences
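The two stopping rules can be sketched as one loop. This is an illustration under assumptions, not the service's implementation: `generate_until` and `scripted_model` are hypothetical names, tokens are plain strings, and the matched stop sequence is stripped from the result (an assumption about the desired behaviour).

```python
def generate_until(next_token, prompt_tokens, max_tokens, stop_sequences):
    """Generate until max_tokens is reached or the tail matches a stop sequence.

    `stop_sequences` is a list of non-empty token lists.
    """
    generated = []
    for _ in range(max_tokens):
        generated.append(next_token(prompt_tokens + generated))
        for stop in stop_sequences:
            # Compare the last len(stop) tokens against the stop sequence.
            if generated[-len(stop):] == stop:
                return generated[:-len(stop)]  # assumption: drop the stop tokens
    return generated


def scripted_model(script):
    """Hypothetical model that replays a fixed list of tokens."""
    tokens = iter(script)
    return lambda context: next(tokens)


model = scripted_model([" 42", "\n", "\n", "Q:"])
print(generate_until(model, ["A:"], 10, [["\n", "\n"]]))
```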

DEMO

Controlling creativity

tokenise("Once upon a")

tokenise(" time")

tokenise("Once upon a")

(tokenise(" time"), logprob("96.45%"))

tokenise("Once upon a")

[(tokenise(" time"), logprob("96.45%")),

(tokenise(" Time"), logprob("0.67%")),

(tokenise(" midnight"), logprob("0.31%")), ...]
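The slides show each candidate's likelihood as a percentage. A log probability is the natural logarithm of that probability, so converting between the two is one line each way. The helper names `to_logprob` and `to_percent` below are hypothetical, and the figures are the ones from the slide.

```python
import math


def to_logprob(percent):
    """Convert a percentage such as 96.45 into a natural-log probability."""
    return math.log(percent / 100.0)


def to_percent(logprob):
    """Convert a natural-log probability back into a percentage."""
    return 100.0 * math.exp(logprob)


# Candidate tokens and percentages taken from the slide above.
candidates = {" time": 96.45, " Time": 0.67, " midnight": 0.31}
for token, percent in candidates.items():
    logprob = to_logprob(percent)
    print(f"{token!r}: logprob={logprob:.4f}, percent={to_percent(logprob):.2f}")
```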

FREQUENCY PENALTY

The extent to which each repeated occurrence of a token lowers the future probability of that token.

PRESENCE PENALTY

The extent to which any previous occurrence of a token lowers the future probability of that token.
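A minimal sketch of how the two penalties could be applied to a token's score before sampling. It assumes the adjustment `logit - count * frequency_penalty - (count > 0) * presence_penalty`; the function name `apply_penalties` and the dictionary representation of the logits are hypothetical.

```python
from collections import Counter


def apply_penalties(logits, generated, frequency_penalty, presence_penalty):
    """Lower the score of tokens that have already been generated.

    `logits` maps token -> score; `generated` is the list of tokens so far.
    """
    counts = Counter(generated)
    adjusted = {}
    for token, logit in logits.items():
        count = counts[token]
        seen = 1.0 if count > 0 else 0.0
        # The frequency penalty scales with every repeat;
        # the presence penalty applies once if the token has appeared at all.
        adjusted[token] = logit - count * frequency_penalty - seen * presence_penalty
    return adjusted


logits = {"a": 1.0, "b": 1.0, "c": 1.0}
print(apply_penalties(logits, ["a", "a", "b"], 0.5, 0.2))
```

In this example "a" (seen twice) is penalised more than "b" (seen once), and "c" is left untouched.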

Demo

TEMPERATURE

How strongly lower-probability tokens have their relative probability increased. Higher values flatten the distribution.

Top P

Only consider the smallest set of most likely tokens whose cumulative probability reaches p.

0 = The best guess

0.5 = A good guess

1 = Hold my beer...

N.B. It only makes sense to use one of these; the other should be set to 1.
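Both controls can be sketched on a toy distribution. This is a textbook-style illustration rather than the service's code: `softmax_with_temperature` and `top_p_filter` are hypothetical names, and treating a temperature of 0 as "always pick the best token" is an assumption made to avoid dividing by zero.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn logits into probabilities; higher temperature flattens them."""
    if temperature == 0:
        # Assumption: 0 means greedy, all probability on the best token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [logit / temperature for logit in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability
    reaches p, then renormalise. Returns {token_index: probability}."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


logits = [2.0, 1.0, 0.0]
for t in (0, 0.5, 1.0):
    print(t, softmax_with_temperature(logits, t))
print(top_p_filter([0.7, 0.2, 0.1], 0.8))
```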

Demo

Best of

Generate m sequences of n tokens, then select the sequence of tokens with the highest probability.

Expensive...
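A sketch of the selection step, assuming each candidate comes with per-token log probabilities. "Highest probability" is interpreted here as the highest mean log probability per token, so that sequences of different lengths can be compared; that interpretation and the name `best_of` are assumptions.

```python
def best_of(candidates):
    """Pick the candidate with the highest mean log probability per token.

    `candidates` is a list of (text, token_logprobs) pairs.
    """
    return max(candidates, key=lambda c: sum(c[1]) / len(c[1]))


# Made-up candidates for illustration only.
candidates = [
    (" time", [-0.1, -0.2]),
    (" midnight", [-0.05, -0.05, -0.05]),
    (" Time", [-1.0]),
]
print(best_of(candidates)[0])
```

Every candidate has to be generated in full before one is chosen, which is where the cost comes from.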

Inject start text

Before submission, concatenate this string onto the prompt.

E.g. "\nA:"

Inject restart text

After receiving a response, concatenate this string onto the completion.

E.g. "\n\nQ:"
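The two injections amount to string concatenation around the model call. In the sketch below, `build_request` and `extend_transcript` are hypothetical helper names and the completion text is made up.

```python
START_TEXT = "\nA:"
RESTART_TEXT = "\n\nQ:"


def build_request(prompt, start_text=START_TEXT):
    """Before submission: append the start text to the prompt."""
    return prompt + start_text


def extend_transcript(request, completion, restart_text=RESTART_TEXT):
    """After the response: append the completion, then the restart text."""
    return request + completion + restart_text


request = build_request("Q: What is 2 + 2?")
completion = " 4"  # made-up model output for illustration
print(repr(extend_transcript(request, completion)))
```

The resulting transcript ends with "Q:", ready for the next question to be typed.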

No Demo

(It's a bit dull)

ONE SHOT

FEW SHOT

FINE TUNE

One Shot

- E.g. "Once upon a"

- Unambiguous high probability intent

Few Shot

- E.g. "A: Apple, B: Banana, C:" versus "A: Apple, B: Broadcom, C:"

- Select from highly probable intents

Fine Tune

- E.g. Copilot*

- Requires extensive, accurate and non-biased datasets of examples
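The few-shot example above can be assembled programmatically: the worked examples steer which of the probable intents the model picks for the final, unanswered label. `few_shot_prompt` is a hypothetical helper; the example pairs are the ones from the slide.

```python
def few_shot_prompt(examples, query_label):
    """Join (label, value) examples and end with an unanswered label."""
    shots = ", ".join(f"{label}: {value}" for label, value in examples)
    return f"{shots}, {query_label}:"


# Same labels, different examples: fruit versus companies.
print(few_shot_prompt([("A", "Apple"), ("B", "Banana")], "C"))
print(few_shot_prompt([("A", "Apple"), ("B", "Broadcom")], "C"))
```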

CheapPilot

The Challenge

~ 25 seconds

~ 50 seconds

~ 15 seconds

~ 15 seconds

Demo

Conclusions

- Applying AI to business problems is becoming more accessible

- AI is going to change the way we work as developers

- It might not be revolutionary but things will change

- When should you start to look into it? Tomorrow. It would be foolish not to.