GRAPL

WHY DO WE NEED A NEW APPROACH TO A SIEM?

Log data is extremely "raw" & very disconnected.

An event may reference something opaque, like a "pid" or "uid", leaving it to the consumer to resolve what the entity behind it is.

Most SIEMs are built on time-series or streaming databases, which do a horrible job of performing JOIN operations. JOIN is the most important operation!

The query languages are often bespoke and optimize for short queries, but attacker behaviors are becoming more complex than they can handle.

Security engineers are being told to solve operations and data science problems when they should be focusing on security.

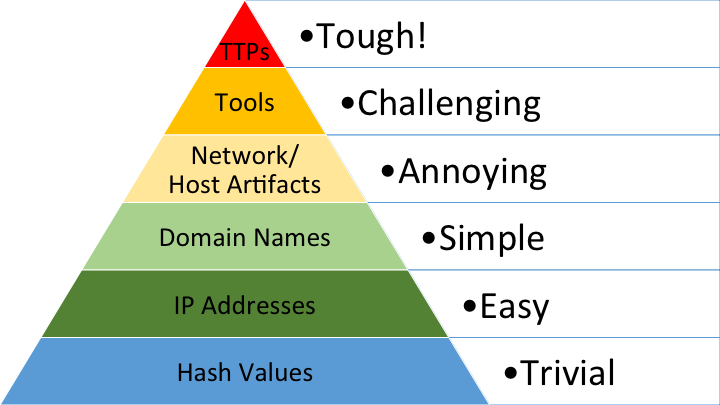

PYRAMID OF PAIN

WE WANT TO BE HERE!

AT BEST, WE'RE HERE

MOST ARE HERE

This is not how we climb the pyramid of pain:

    image_name="procdump"
    args = "*-ma*"
    args = "*lsass.exe*"

    [
        {
            'ppid': 100,
            'pid': 250,
            'action': 'started',
            'time': '2019-02-24 18:21:25'
        },
        {
            'ppid': 250,
            'pid': 350,
            'action': 'started',
            'time': '2019-02-24 18:22:31'
        }
    ]

    [
        {
            'pid': 350,
            'action': 'connected_to',
            'domain': 'evil.com',
            'time': '2019-02-24 18:24:27'
        },
        {
            'pid': 350,
            'action': 'created_file',
            'path': 'downloads/evil.exe',
            'time': '2019-02-24 18:28:31'
        }
    ]

[Figure: the same events drawn as a graph, labeled with timestamps: created_at '2019-02-24 18:21:25', created_at '2019-02-24 18:22:31', and seen_at values from '2019-02-24 18:21:25' to '2019-02-24 18:28:31'.]
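The two event lists above can be folded into a graph whose nodes carry `created_at` and `seen_at` properties. The following is a minimal sketch of that idea, not Grapl's implementation: the function name `build_graph`, the tuple node keys, and the choice to key processes by bare pid (which ignores pid reuse) are all assumptions made for illustration.

```python
from collections import defaultdict

process_events = [
    {'ppid': 100, 'pid': 250, 'action': 'started', 'time': '2019-02-24 18:21:25'},
    {'ppid': 250, 'pid': 350, 'action': 'started', 'time': '2019-02-24 18:22:31'},
]
activity_events = [
    {'pid': 350, 'action': 'connected_to', 'domain': 'evil.com',
     'time': '2019-02-24 18:24:27'},
    {'pid': 350, 'action': 'created_file', 'path': 'downloads/evil.exe',
     'time': '2019-02-24 18:28:31'},
]


def build_graph(process_events, activity_events):
    """Return (nodes, edges) built from flat events.

    nodes maps a node key to its properties; edges is a list of
    (source key, edge name, target key) tuples.
    """
    nodes = defaultdict(dict)
    edges = []

    def seen(key, time):
        # Keep the latest time at which this node was observed.
        # 'YYYY-MM-DD HH:MM:SS' strings sort chronologically.
        nodes[key]['seen_at'] = max(nodes[key].get('seen_at', time), time)

    for event in process_events:
        parent = ('process', event['ppid'])
        child = ('process', event['pid'])
        nodes[child]['created_at'] = event['time']
        seen(parent, event['time'])
        edges.append((parent, 'executed', child))

    for event in activity_events:
        process = ('process', event['pid'])
        seen(process, event['time'])
        if event['action'] == 'connected_to':
            target = ('domain', event['domain'])
        elif event['action'] == 'created_file':
            target = ('file', event['path'])
        else:
            continue  # Unknown action: nothing to link.
        seen(target, event['time'])
        edges.append((process, event['action'], target))

    return dict(nodes), edges


nodes, edges = build_graph(process_events, activity_events)
```

After this runs, process 350 is a single node with both a creation time and a last-seen time, so a consumer no longer has to join four log lines to learn what pid 350 did.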

What is Grapl?

- Technically a SIEM
- Platform focused: "build a solution on Grapl"
- Exposes data as a graph, not logs
- Query language in Python, not another made-up query language
- Performs data cleaning upfront

Graph Platform

Platform Focus

Grapl is built on cloud services.

The idea is that if Grapl doesn't have a feature, someone can build one.

AWS gives us tons of primitives to do that.

Every service in Grapl is event-based, which is inherently extendable.

Grapl provides a Plugin interface, which lets you extend the system in a well defined way.

Graph Based

Graphs are an extremely general data structure; given the right instrumentation, we can model anything as a graph.

Attacker behaviors generally span a number of different entities and events.

Joining data together is critical to understanding the attacker.

"Defenders think in lists. Attackers think in graphs. As long as this is true, attackers win."

    {
        "host_id": "cobrien-mac",
        "parent_pid": 3,
        "pid": 4,
        "image_name": "word.exe",
        "create_time": 600
    }

    {
        "host_id": "cobrien-mac",
        "parent_pid": 4,
        "pid": 5,
        "image_name": "payload.exe",
        "create_time": 650
    }

[Figure: the graphs built from these events, with nodes explorer.exe, word.exe, payload.exe, ssh.exe, /secret/file, 11.22.34.55, and mal.doc.]

PARSING -> SUBGRAPH GENERATION -> IDENTIFICATION -> MERGING -> ANALYSIS -> ENGAGEMENTS
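One way to picture the identification step is as a lookup that turns an opaque `(host_id, pid, timestamp)` triple into a stable node key, so that a reused pid does not merge two unrelated processes. The sketch below assumes that this is part of what identification does; the class `ProcessIdentifier` and its methods are hypothetical names, not Grapl's API, and the pid reuse at time 900 is an invented event.

```python
from bisect import bisect_right
from collections import defaultdict


class ProcessIdentifier:
    """Maps (host_id, pid, timestamp) to a stable node key."""

    def __init__(self):
        # (host_id, pid) -> sorted list of create_time values seen so far
        self._sessions = defaultdict(list)

    def record_creation(self, host_id, pid, create_time):
        """Register a process creation and return its node key."""
        times = self._sessions[(host_id, pid)]
        index = bisect_right(times, create_time)
        # Insert in sorted position unless this creation is already known.
        if index == 0 or times[index - 1] != create_time:
            times.insert(index, create_time)
        return (host_id, pid, create_time)

    def identify(self, host_id, pid, timestamp):
        """Return the key of the process that owned pid at timestamp, or None."""
        times = self._sessions.get((host_id, pid), [])
        # The owner is the most recent creation at or before the timestamp.
        index = bisect_right(times, timestamp)
        if index == 0:
            return None
        return (host_id, pid, times[index - 1])


identifier = ProcessIdentifier()
word = identifier.record_creation("cobrien-mac", 4, 600)
payload = identifier.record_creation("cobrien-mac", 5, 650)
# Hypothetical later event: the OS reuses pid 4 for an unrelated process.
reused = identifier.record_creation("cobrien-mac", 4, 900)

# payload.exe reports parent_pid 4 at time 650; that resolves to word.exe.
parent_of_payload = identifier.identify("cobrien-mac", 4, 650)
```

Keying nodes by creation time as well as pid is a design choice in this sketch: it keeps the lookup cheap (a binary search per event) while making the node key unique across pid reuse on the same host.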

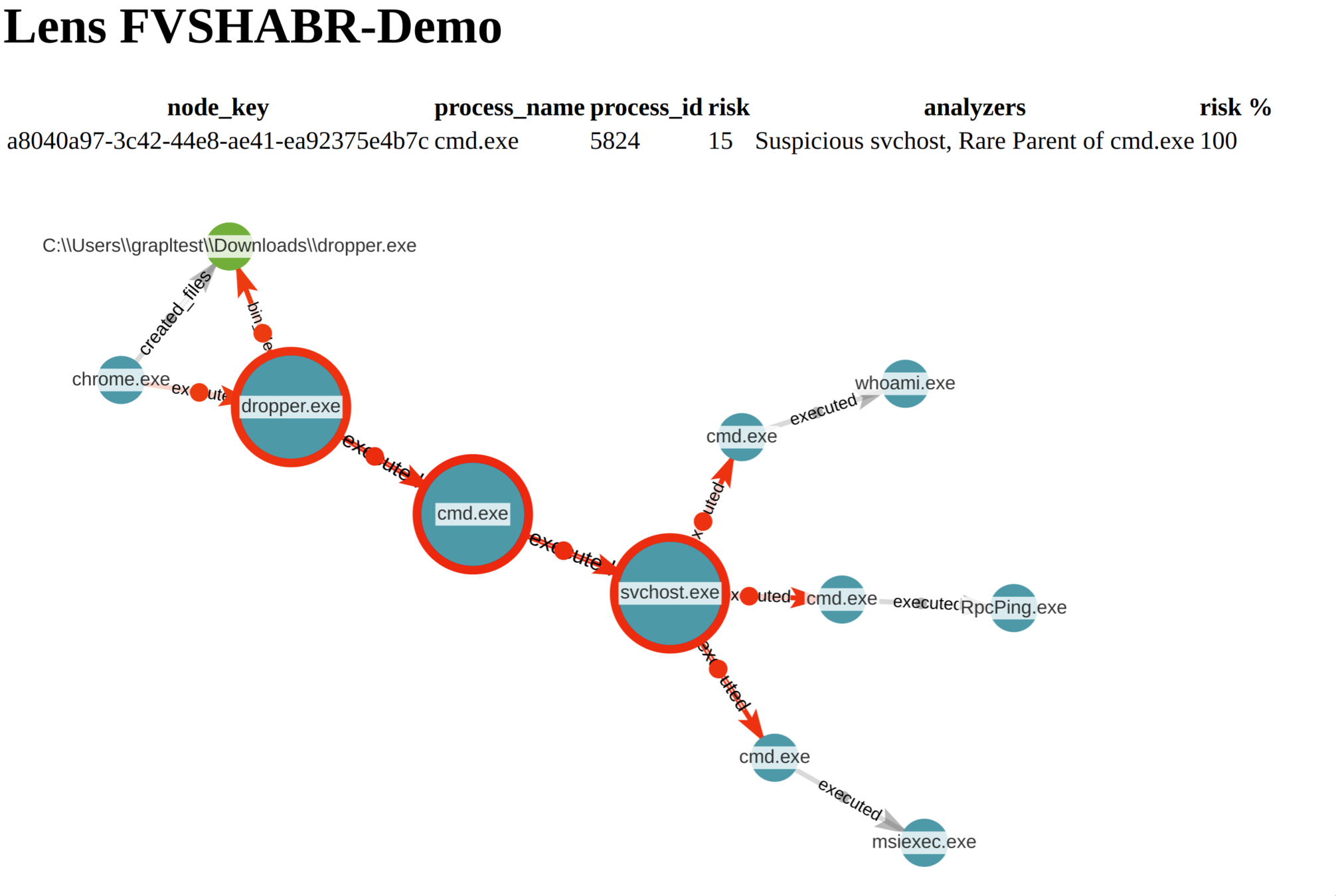

Dropper:

- Dropper executes

- Dropper reaches out to network

- Dropper creates file

- Dropper executes file

A process that downloads a payload & executes it.

    // Search all processes over 1 hour for dropper-like behavior
    search process_executions
    | join [
        | search process_executions process_id = $parent_process_id$
    ]
    | join [
        | search network_connections process_id = $parent_process_id$
    ]
    | join [
        | search file_creations creator=$parent_process_id$
            AND $file_path$ = image_name
    ]
    // TODO: Handle pid collisions

"By default, subsearches return a maximum of 10,000 results and have a maximum runtime of 60 seconds. In large production environments, it is possible that subsearches [..] will timeout"

"Performing full SQL-style joins in a distributed system like Elasticsearch is prohibitively expensive." [..] "If you care about query performance, do not use this query."

    class DropperAnalyzer(Analyzer):
        def get_queries(self) -> OneOrMany[ProcessQuery]:
            # Match any process executed from a binary file whose parent
            # has external connections and has created files.
            return (
                ProcessQuery().with_bin_file()
                .with_parent(
                    ProcessQuery()
                    .with_external_connections()
                    .with_created_files()
                )
            )

        def on_response(self, payload: ProcessView, output: Sender):
            dropper = payload.get_parent()

            # Flag the child only if its binary is a file the parent created.
            if payload.bin_file.get_file_path() in dropper.get_created_files():
                output.send(
                    ExecutionHit(
                        analyzer_name="Dropper",
                        node_view=payload,
                        risk_score=75,
                    )
                )
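The pattern this analyzer describes can also be sketched over a toy in-memory graph, which makes the logic testable without a Grapl deployment. The `Process` dataclass and `is_dropped_payload` function below are hypothetical stand-ins, not part of Grapl's analyzer library, and `notepad.exe` is an invented benign example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Process:
    image_path: str  # File the process was executed from.
    parent: Optional["Process"] = None
    external_connections: List[str] = field(default_factory=list)
    created_files: List[str] = field(default_factory=list)


def is_dropped_payload(child: Process) -> bool:
    """True if child's parent reached the network and created child's binary."""
    parent = child.parent
    if parent is None:
        return False
    return (
        bool(parent.external_connections)
        and child.image_path in parent.created_files
    )


dropper = Process(
    image_path="word.exe",
    external_connections=["11.22.34.55"],
    created_files=["downloads/evil.exe"],
)
payload = Process(image_path="downloads/evil.exe", parent=dropper)
# Hypothetical benign child: same parent, but not run from a created file.
benign = Process(image_path="notepad.exe", parent=dropper)

hits = [p.image_path for p in (dropper, payload, benign) if is_dropped_payload(p)]
```

Because the relationships are already edges on the node, the check is two attribute lookups rather than three subsearch joins.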

Mitre ATT&CK | T1204 - User Execution

- Process with external network access creates file, executes child from it
- Browser Executing Child Process
- Process Executed From Unpacked Binary
- Rare Parent Child Process
- Rare Parent LOLBAS Process
- Commonly Targeted Application with Non-Whitelisted Child Process

[Diagrams: each signature is drawn as a small graph. Node labels: <any process>, <any file>, <external ip>, <any browser>, <winrar/7zip/zip>, <binary>, <lolbas path>, <word/reader/etc>, <non whitelisted process>. Edge labels: executed, executed as, created file, connected to.]

svc_parent = svchost.get_parent()

Grapl + AWS

[Diagram: two "ec2 instance" nodes linked to a "role" node by "assumed role with IP" edges.]

If we see a call with a sourceIPAddress that doesn’t match the previously observed IP, then we have detected a credential being used on an instance other than the one to which it was assigned, and we can assume that credential has been compromised.

NETFLIX CLOUD SECURITY: Detecting Credential Compromise in AWS

    class AwsCredentialReuse(Analyzer):
        def get_queries(self) -> OneOrMany[Queryable]:
            # Match any role together with the EC2 role assumptions on it
            # and the IP address recorded for each assumption.
            return (
                AwsRoleQuery()
                .with_assuming_ec2(
                    Ec2RoleAssumptionQuery()
                    .with_ip_address()
                )
            )

        def on_response(self, role: AwsRoleView, output: Any):
            # Collect every distinct IP address the role was assumed from.
            unique_ips = set()
            for role_assumption in role.assuming_ec2:
                for ip in role_assumption.ip_address:
                    unique_ips.add(ip)

            # More than one IP suggests the credential left its instance.
            if len(unique_ips) > 1:
                output.send(
                    ExecutionHit(
                        analyzer_name="AwsCredentialReuse",
                        node_view=role,
                        risk_score=100,
                        lenses=[role.arn, role.account]
                    )
                )
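The same check can be sketched without Grapl over CloudTrail-style records, grouping calls by the caller's session ARN and flagging any session seen from more than one `sourceIPAddress`. This is an illustrative sketch only: the function name `find_reused_credentials` is hypothetical, grouping by `userIdentity.arn` is an assumption about how sessions are keyed, and the sample records are made up.

```python
from collections import defaultdict


def find_reused_credentials(records):
    """Return {session ARN: sorted source IPs} for sessions seen from >1 IP."""
    ips_by_session = defaultdict(set)
    for record in records:
        session_arn = record["userIdentity"]["arn"]
        ips_by_session[session_arn].add(record["sourceIPAddress"])
    return {
        arn: sorted(ips)
        for arn, ips in ips_by_session.items()
        if len(ips) > 1
    }


# Hypothetical records; the ARNs and addresses are invented for illustration.
SESSION_A = "arn:aws:sts::123456789012:assumed-role/app-role/i-0aaa"
SESSION_B = "arn:aws:sts::123456789012:assumed-role/app-role/i-0bbb"
records = [
    {"userIdentity": {"arn": SESSION_A}, "sourceIPAddress": "192.0.2.10"},
    {"userIdentity": {"arn": SESSION_A}, "sourceIPAddress": "192.0.2.10"},
    {"userIdentity": {"arn": SESSION_B}, "sourceIPAddress": "192.0.2.20"},
    # Same session as the first record, but from a different address.
    {"userIdentity": {"arn": SESSION_A}, "sourceIPAddress": "198.51.100.7"},
]

suspicious = find_reused_credentials(records)
```

A real deployment would likely need to filter calls that AWS services make on the role's behalf, since those can appear with a service name rather than an IP address in `sourceIPAddress`.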