MXNet to ONNX to ML.NET

Cosmin Catalin Sanda

4 April 2019

Topics

- About me

- Machine Learning in .NET

- The ONNX interchange format

- ML workflow in AWS

About me

Cosmin Catalin Sanda

Data Scientist and Engineer at AudienceProject

Blogging at https://cosminsanda.com

Machine learning in .NET before 2018

...then something happened

In May 2018, Microsoft open-sourced ML.NET:

- Heavily supported by Microsoft

- A great general-purpose framework

- No native deep learning capabilities

- Support for scoring ONNX models

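What is ONNX?

An open-source interchange format for ML models

- Train a model with whichever language/library you prefer, then run inference with a different one

- Performance: exported models can be served by inference-optimized runtimes

- Some limitations apply: not every operator or layer type is supported by every exporter and runtime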

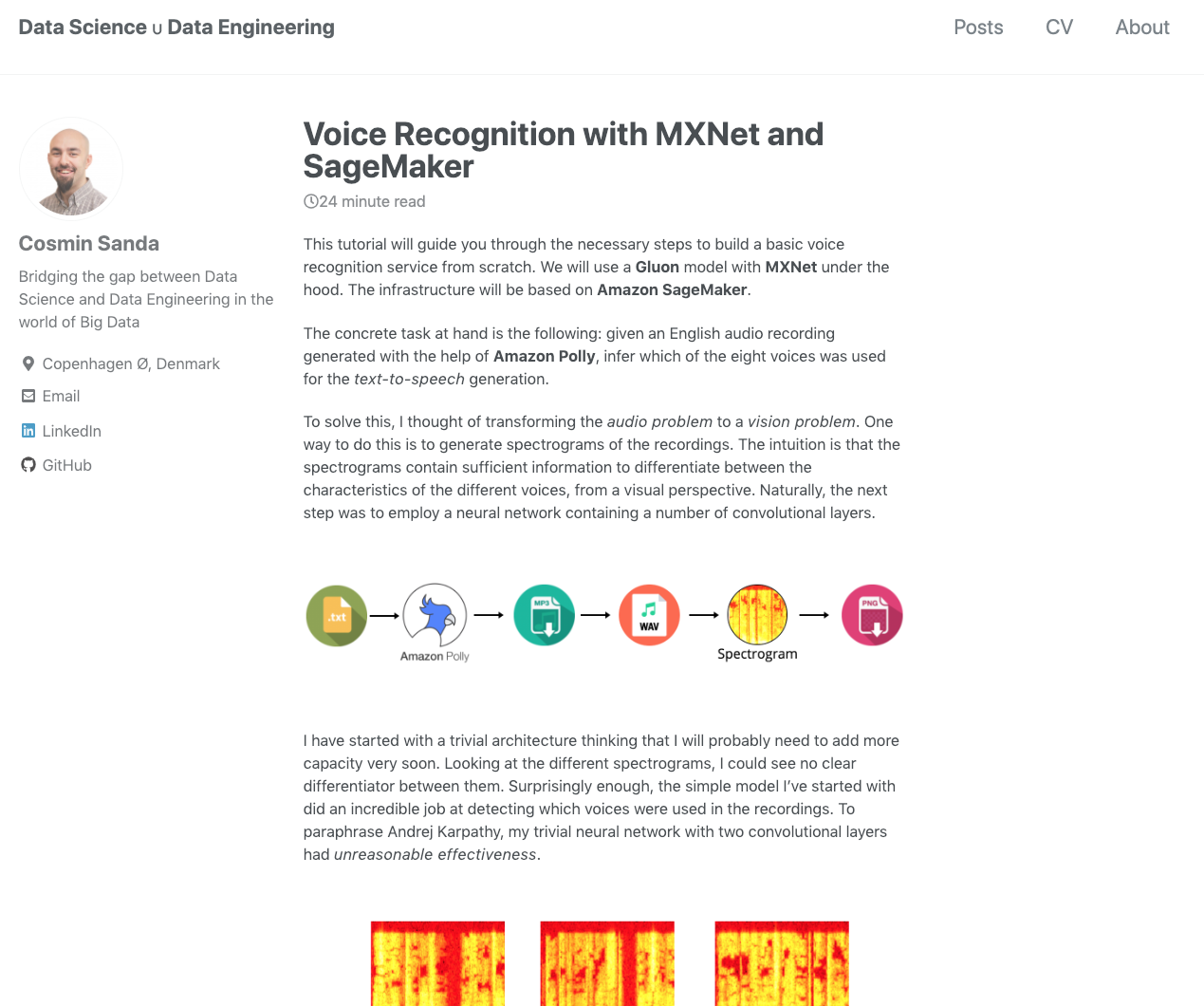

Typical Workflow

- Model a regression problem using MXNet

- Export awesome model to ONNX

- Use ONNX model for real-time inference in a .NET application

Unfortunately, there's a "but"

ONNX scoring in ML.NET currently works only on Windows x64
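Because of this restriction, a build or start-up script may want to fail fast on unsupported hosts. A minimal sketch using Python's standard `platform` module; the helper name `onnx_scoring_supported` is hypothetical, and the accepted machine strings are an assumption ("AMD64" is what `platform.machine()` typically reports on 64-bit Windows).

```python
import platform


def onnx_scoring_supported(system=None, machine=None):
    """Return True if the host looks like Windows x64 (hypothetical helper).

    Defaults to the current host; both arguments can be overridden for testing.
    """
    system = system if system is not None else platform.system()
    machine = machine if machine is not None else platform.machine()
    # 64-bit Windows usually reports "AMD64"; "x86_64" is accepted as an alias.
    return system == "Windows" and machine.upper() in {"AMD64", "X86_64"}


if __name__ == "__main__":
    print("ONNX scoring supported on this host:", onnx_scoring_supported())
```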

ML pipeline components

- Amazon SageMaker for building the model

- Amazon S3 for storing the model

- Amazon EC2 (a Windows instance) for building the application image with Windows containers

- Amazon Elastic Container Registry (ECR) for storing the Docker image

- Amazon Elastic Container Service (ECS) for running the Docker image

- Remote Desktop Connection client for working on the Windows instance

- Web browser for the AWS console

Amazon SageMaker

MXNet model & data

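```python
from mxnet.gluon.nn import HybridSequential, Dense, Dropout

# Small feed-forward regression network; HybridSequential allows export to a symbol graph
net = HybridSequential()
with net.name_scope():
    net.add(Dense(9))
    net.add(Dropout(.25))
    net.add(Dense(16))
    net.add(Dropout(.25))
    net.add(Dense(1))  # single output: the predicted fare
```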
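To get a feel for the model's size: a Dense layer with `n_in` inputs and `n_out` units holds `n_in * n_out` weights plus `n_out` biases, and Dropout has no parameters. A quick sketch, assuming the 4 input features used in the ONNX export (`input_shape=[(1, 4)]`); the helper names are illustrative.

```python
def dense_param_count(n_in, n_out):
    # weight matrix (n_out x n_in) plus one bias per unit
    return n_in * n_out + n_out


def count_params(n_features, units):
    """Total trainable parameters of a stack of Dense layers (Dropout adds none)."""
    total = 0
    n_in = n_features
    for n_out in units:
        total += dense_param_count(n_in, n_out)
        n_in = n_out
    return total


# Dense(9) -> Dense(16) -> Dense(1) on 4 input features
print(count_params(4, [9, 16, 1]))  # 222
```

Gluon defers shape inference until the first forward pass, so these weights only exist once the network has seen data.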

Data: Kaggle's New York City Taxi Fare Prediction competition

MXNet to ONNX

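```python
import numpy as np
from mxnet.contrib import onnx as onnx_mxnet

def save(net, model_dir):
    # Writes model-symbol.json and model-0004.params to the working directory.
    # The network must be hybridized and run forward at least once before export.
    net.export("model", epoch=4)
    # Convert the symbol/params pair to ONNX; the input is one row of 4 float32 features.
    onnx_mxnet.export_model(sym="model-symbol.json",
                            params="model-0004.params",
                            input_shape=[(1, 4)],
                            input_type=np.float32,
                            onnx_file_path="{}/model.onnx".format(model_dir),
                            verbose=True)
```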
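`HybridBlock.export(path, epoch)` writes `path-symbol.json` and `path-NNNN.params`, with the epoch zero-padded to four digits, so the two file names passed to `export_model` must agree with the prefix and epoch used in `export`. A small hypothetical helper that derives them instead of hard-coding both:

```python
def exported_file_names(prefix, epoch):
    """File names written by HybridBlock.export(prefix, epoch=epoch)."""
    symbol_file = "{}-symbol.json".format(prefix)
    params_file = "{}-{:04d}.params".format(prefix, epoch)
    return symbol_file, params_file


sym, params = exported_file_names("model", 4)
print(sym, params)  # model-symbol.json model-0004.params
```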

SageMaker packs everything written to `model_dir` into a `model.tar.gz` archive and uploads it to S3
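Before building the image, the archive has to be downloaded (e.g. with `aws s3 cp`) and `model.onnx` pulled out of it, since the Dockerfile copies `models/model.onnx`. A sketch using Python's `tarfile`; the function name `extract_onnx` is hypothetical, and it assumes `model.onnx` sits at the archive root because it was written straight into `model_dir`.

```python
import os
import tarfile


def extract_onnx(archive_path, dest_dir, member="model.onnx"):
    """Extract one file from SageMaker's model.tar.gz into dest_dir (hypothetical helper)."""
    os.makedirs(dest_dir, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        for info in tar.getmembers():
            # Normalise "./model.onnx" style names so either form matches.
            if info.isfile() and os.path.normpath(info.name) == member:
                target = os.path.join(dest_dir, member)
                with tar.extractfile(info) as src, open(target, "wb") as dst:
                    dst.write(src.read())
                return target
    raise FileNotFoundError("{} not found in {}".format(member, archive_path))
```

Copying only the one member, rather than calling `extractall`, avoids writing unexpected paths from the archive to disk.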

Building the .NET application

Two options for developing:

- Use your own Windows x64 installation

- Provision a Windows EC2 instance

Provisioned tools

- Docker

- AWS CLI
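On a freshly provisioned instance, a quick sanity check that both tools are on the `PATH` can save a confusing failure later. A minimal sketch; the helper name `missing_tools` is hypothetical, and the lookup function is injectable only to make it testable.

```python
import shutil


def missing_tools(tools=("docker", "aws"), which=shutil.which):
    """Return the required command-line tools that are not found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    print("missing:", missing or "none")
```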

Making predictions in ML.NET using ONNX

```csharp
// env (an MLContext), onnxFilePath and data (an IDataView) come from the surrounding class
MLContext _env = env;
string _onnxFilePath = onnxFilePath;

// Concatenate the four input columns into a single "Features" vector
var pipeline = new ColumnConcatenatingEstimator(_env, "Features", "RateCode", "PassengerCount", "TripTime", "TripDistance")
    .Append(new ColumnSelectingEstimator(_env, "Features"))
    // Score the features with the ONNX model exported from MXNet
    .Append(new OnnxScoringEstimator(_env, _onnxFilePath, "Features", "Estimate"))
    .Append(new ColumnSelectingEstimator(_env, "Estimate"))
    // The ONNX output is a vector; unwrap its single element into a scalar
    .Append(new CustomMappingEstimator<RawPrediction, FlatPrediction>(_env, contractName: "OnnxPredictionExtractor",
        mapAction: (input, output) =>
        {
            output.Estimate = input.Estimate[0];
        }));

var transformer = pipeline.Fit(data);
```
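To make the column flow explicit, here are the same steps in plain Python: concatenate the four inputs into `Features`, score them, and unwrap the one-element output vector into a scalar, which is what the `CustomMappingEstimator` does above. The `fake_score` function is a hypothetical stand-in for the ONNX model, for illustration only.

```python
from typing import Callable, Dict, List

FEATURE_COLUMNS = ["RateCode", "PassengerCount", "TripTime", "TripDistance"]


def predict(row: Dict[str, float], score: Callable[[List[float]], List[float]]) -> float:
    # Concatenation step: build the "Features" vector in a fixed column order
    features = [float(row[name]) for name in FEATURE_COLUMNS]
    # Scoring step: the model returns a vector, here of length 1
    estimate = score(features)
    # Custom mapping step: flatten the vector into a single fare estimate
    return estimate[0]


def fake_score(features: List[float]) -> List[float]:
    # Hypothetical linear stand-in for the ONNX model
    return [2.5 + 0.5 * features[3] + 0.01 * features[2]]


trip = {"RateCode": 1, "PassengerCount": 1, "TripTime": 600, "TripDistance": 3.0}
print(predict(trip, fake_score))
```

The column order matters: the ONNX model was exported for a `(1, 4)` input, so the features must be concatenated in the same order used during training.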

Dockerizing

```dockerfile
# Build stage: restore and publish the inference app for Windows x64
FROM microsoft/dotnet:sdk AS build-env
COPY inference/*.csproj /src/
COPY inference/*.cs /src/
WORKDIR /src
RUN dotnet restore --verbosity normal
RUN dotnet publish -c Release -o /app/ -r win10-x64

# Runtime stage: copy the published app, the ONNX model and the files from lib/
FROM microsoft/dotnet:aspnetcore-runtime
COPY --from=build-env /app/ /app/
COPY models/model.onnx /models/
COPY lib/* /app/
WORKDIR /app
CMD ["dotnet", "inference.dll", "--onnx", "/models/model.onnx"]
```

Running inference on AWS

- Create a Docker repository in Amazon ECR

- Push the local image to ECR

- Create a Windows container cluster in Amazon ECS

- Configure a task definition and run the application
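The first two steps boil down to a handful of CLI calls. A sketch that only builds the commands as argument lists (they could be run with `subprocess.run(cmd, check=True)`); the function names, account ID and repository name are hypothetical. It assumes `docker login` to the registry has already been done, e.g. by piping `aws ecr get-login-password` into `docker login --username AWS --password-stdin`, which is not modeled here.

```python
from typing import List


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    # ECR registries live at <account>.dkr.ecr.<region>.amazonaws.com
    return "{}.dkr.ecr.{}.amazonaws.com/{}:{}".format(account_id, region, repository, tag)


def push_commands(local_image: str, account_id: str, region: str,
                  repository: str, tag: str = "latest") -> List[List[str]]:
    """Commands to create the repository, tag the local image and push it."""
    uri = ecr_image_uri(account_id, region, repository, tag)
    return [
        ["aws", "ecr", "create-repository", "--repository-name", repository, "--region", region],
        ["docker", "tag", local_image, uri],
        ["docker", "push", uri],
    ]


for cmd in push_commands("inference:latest", "123456789012", "eu-west-1", "inference"):
    print(" ".join(cmd))
```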

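Key takeaways

- ONNX decouples training from inference: train with a deep learning library such as MXNet, then serve the model from another stack

- Docker works for Windows-native apps through Windows containers

- ML.NET is a great framework for cross-platform development on .NET Core, although its ONNX scoring is currently limited to Windows x64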