Differentiable Rendering

Daniel Yukimura

Scene parameters → rendering → 2D image

Scene parameters include:

- camera pose

- geometry

- materials

- lighting

- ...

Differentiable rendering sends feedback from the 2D image back to the scene parameters, which makes it possible to learn them.

Applications:

- Inverse Graphics

- Optimization

- 3D Reconstruction

- Fast rendering

- ...

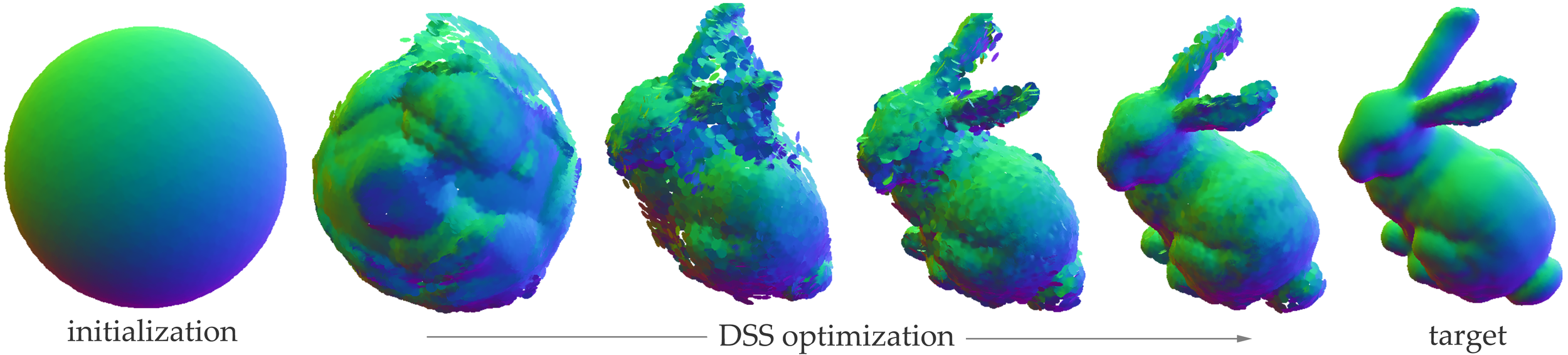

Wang Yifan, Felice Serena, Shihao Wu, Cengiz Öztireli, Olga Sorkine-Hornung - ACM SIGGRAPH ASIA 2019.

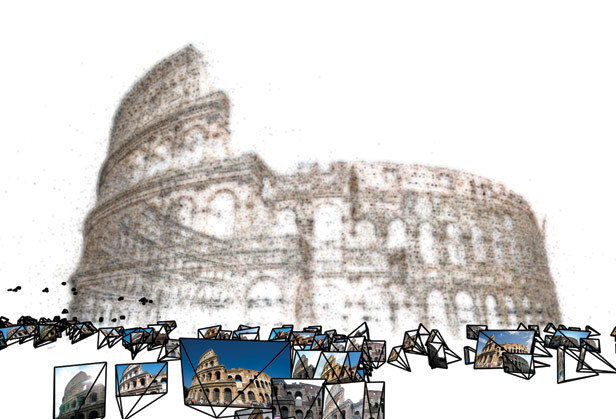

Sameer Agarwal, Noah Snavely, Ian Simon, Steven M. Seitz and Richard Szeliski - International Conference on Computer Vision, 2009, Kyoto, Japan.

Differentiable Monte Carlo Ray Tracing through Edge Sampling

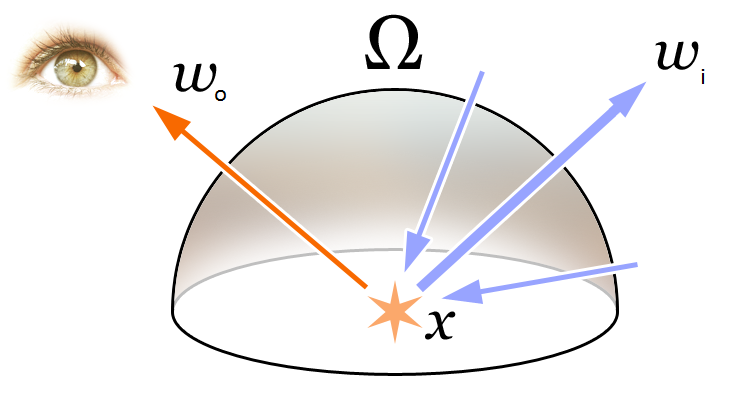

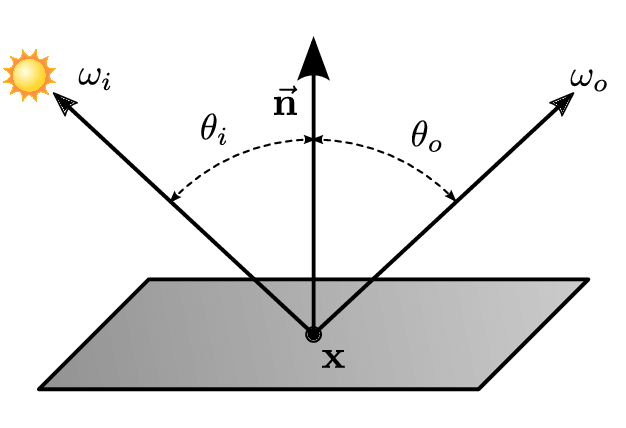

Global Illumination (Recap)

Rendering equation:
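The slide's equation did not survive extraction. For reference, the standard form of the rendering equation is given below; the symbol names are chosen for this sketch:

```latex
L_o(x, \omega_o) = L_e(x, \omega_o) + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (n \cdot \omega_i)\, d\omega_i
```

Here $L_o$ is the outgoing radiance at surface point $x$ in direction $\omega_o$, $L_e$ the emitted radiance, $f_r$ the BRDF, $L_i$ the incoming radiance from direction $\omega_i$, $n$ the surface normal, and $\Omega$ the hemisphere around $n$.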

Monte Carlo Integration (Recap)

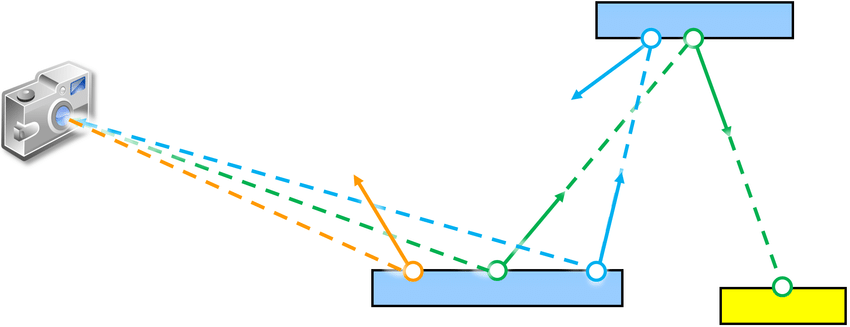
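The Monte Carlo estimator approximates an integral by averaging $f(X_i)/p(X_i)$ over samples $X_i \sim p$. The sketch below uses a made-up integrand ($3x^2$ on $[0, 1]$, exact integral 1) and compares uniform sampling with importance sampling from $p(x) = 2x$:

```python
import math
import random


def mc_estimate(f, sample, pdf, n, rng):
    """Monte Carlo estimator: (1/n) * sum of f(X_i) / p(X_i), with X_i ~ p."""
    total = 0.0
    for _ in range(n):
        x = sample(rng)
        total += f(x) / pdf(x)
    return total / n


def f(x):
    # Example integrand on [0, 1]; its exact integral is 1.
    return 3.0 * x * x


rng = random.Random(0)

# Uniform sampling: p(x) = 1 on [0, 1].
uniform = mc_estimate(f, lambda r: r.random(), lambda x: 1.0, 10_000, rng)

# Importance sampling with p(x) = 2x, drawn by inverting its CDF x^2.
# Using 1 - u keeps x in (0, 1], so the pdf is never zero.
importance = mc_estimate(
    f, lambda r: math.sqrt(1.0 - r.random()), lambda x: 2.0 * x, 10_000, rng
)
print(f"uniform: {uniform:.4f}, importance sampled: {importance:.4f}")
```

Both estimates are unbiased; the second has lower variance because $p(x)$ is closer to proportional to $f(x)$.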

Path Tracing (Recap)
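Path tracing applies the Monte Carlo estimator recursively to the rendering equation. A common one-sample-per-bounce form, with notation assumed for this sketch and $\omega_i$ drawn from a sampling density $p$, is:

```latex
L_o(x, \omega_o) \approx L_e(x, \omega_o) + \frac{f_r(x, \omega_i, \omega_o)\, L_o(x', -\omega_i)\, (n \cdot \omega_i)}{p(\omega_i)}, \qquad \omega_i \sim p
```

Here $x'$ is the first surface point hit by the ray leaving $x$ in direction $\omega_i$, so $L_i(x, \omega_i) = L_o(x', -\omega_i)$. The radiance at $x'$ is estimated the same way, and the recursion is cut off, for example at a maximum depth or by Russian roulette.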

Goal: differentiate the rendered image with respect to all scene parameters:

- camera pose

- geometry

- materials

- lighting

- ...

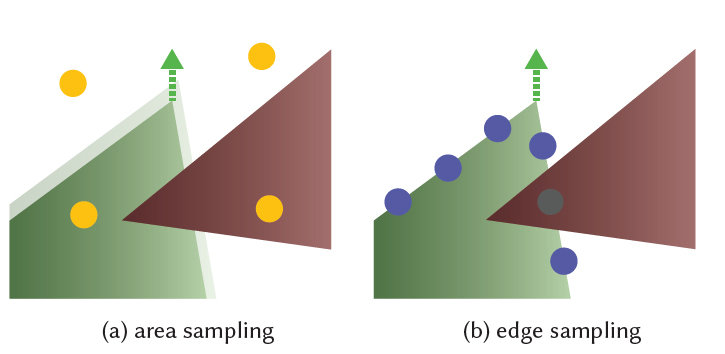

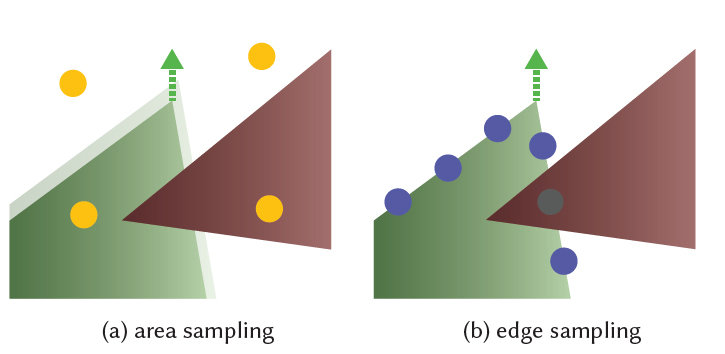

Strategy: Break into smooth and discontinuous regions.
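A one-dimensional toy case shows why the discontinuous part needs separate treatment. It is an illustration, not the paper's full derivation. Suppose a pixel spanning $[0, 1]$ shows shading $f$ where $x < p$ and black elsewhere, so the edge sits at position $p$ and $\theta$ is the Heaviside step:

```latex
I(p) = \int_0^1 \theta(p - x)\, f(x)\, dx
\quad\Longrightarrow\quad
\frac{dI}{dp} = \int_0^1 \delta(p - x)\, f(x)\, dx = f(p), \qquad 0 < p < 1.
```

Differentiating each Monte Carlo sample $\theta(p - x_i)\, f(x_i)$ with respect to $p$ gives zero almost surely, because the step's derivative vanishes everywhere except at $x = p$. The smooth part of a derivative can be estimated with ordinary samples. The Dirac delta term has to be integrated by placing samples on the edge itself. In this toy case $f$ does not depend on $p$, so the whole derivative comes from the edge.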

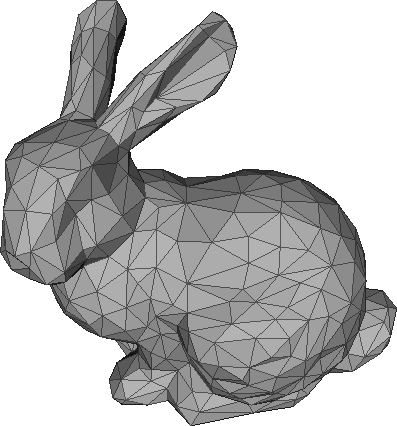

Assumptions:

- triangular meshes (with no interpenetration)

- no point light sources

- no perfectly specular surfaces

Primary visibility (2D screen-space domain)

- All discontinuities happen at triangle edges.

Monte Carlo estimation:

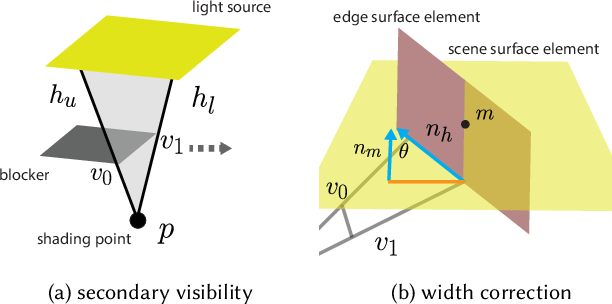
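The screen-space estimator can be illustrated on a single pixel. This is a toy under stated assumptions: the pixel is the unit square, and one vertical edge at $x = p$ separates a triangle with hypothetical shading `f_in` from a background with hypothetical shading `f_out`. We want $dI/dp$ for the pixel integral $I$. Points are sampled uniformly on the edge and weighted by the difference of the shading on its two sides. The closed-form pixel integral is used only to check the result.

```python
import random


def f_in(x, y):
    # Hypothetical shading of the triangle covering the region x < p.
    return 1.0 + y


def f_out(x, y):
    # Hypothetical shading of the background (region x > p).
    return 0.5 * x


def pixel_integral(p):
    # Closed form of the pixel integral over [0, 1]^2 for this toy scene.
    return 1.5 * p + 0.25 * (1.0 - p * p)


def edge_sampling_grad(p, n, rng):
    """Estimate dI/dp from uniform points on the edge x = p, 0 <= y <= 1.

    The edge function is alpha(x, y) = p - x, so d(alpha)/dp = 1 and
    |grad_xy alpha| = 1. The segment has length 1, so a uniform point on it
    has pdf 1, and each sample's weight reduces to f_in - f_out.
    """
    total = 0.0
    for _ in range(n):
        y = rng.random()
        total += f_in(p, y) - f_out(p, y)
    return total / n


p = 0.4
rng = random.Random(1)
estimate = edge_sampling_grad(p, 20_000, rng)
h = 1e-5
reference = (pixel_integral(p + h) - pixel_integral(p - h)) / (2 * h)
print(f"edge sampling: {estimate:.4f}, finite difference: {reference:.4f}")
```

Moving the edge right by $dp$ converts a strip of background into triangle, so the change is $(f_\text{in} - f_\text{out})\,dp$ integrated along the edge. Only samples on the edge can see this.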

Secondary visibility (global illumination, 3D)

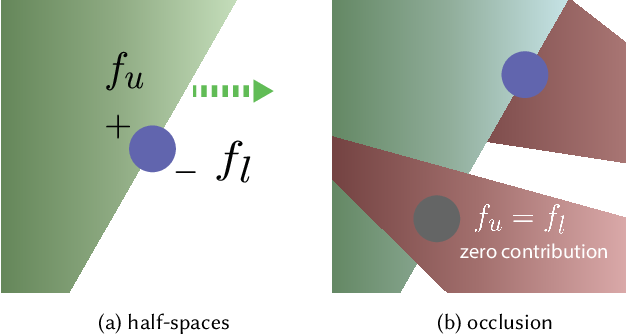

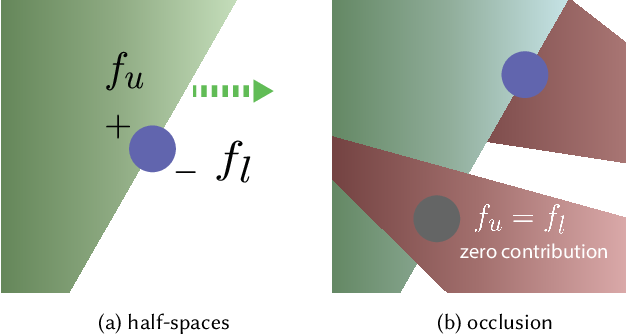

edge portion:

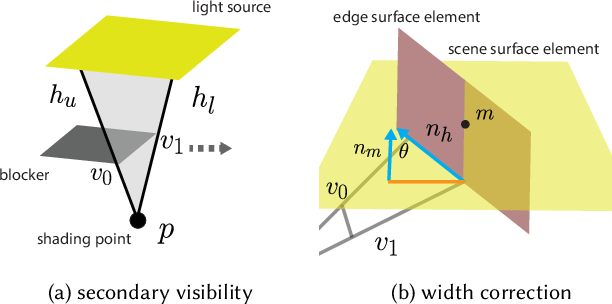

Importance Sampling the Edges

- There are many triangles, and therefore many edges, to sample.

- We have to sample edges, and then sample points on those edges.

- Most edges are not silhouettes.

- Not all points on an edge have a non-zero contribution.
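One way to skip edges that cannot matter for primary visibility is sketched below. It keeps only edges that can be silhouettes from the camera: boundary edges, and edges whose two adjacent faces face opposite ways. It then picks one of these edges with probability proportional to its length and draws a uniform point on it. Length-proportional selection is an assumption for illustration, not the paper's importance function. The returned pdf is what the edge estimator divides by.

```python
import math
import random
from collections import defaultdict


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def silhouette_edges(verts, faces, cam):
    """Edges (i, j), i < j, that are boundary edges or lie between a
    front-facing and a back-facing face as seen from the point cam."""
    edge_faces = defaultdict(list)
    for f in faces:
        for k in range(3):
            i, j = f[k], f[(k + 1) % 3]
            edge_faces[(min(i, j), max(i, j))].append(f)

    def front_facing(f):
        a, b, c = (verts[i] for i in f)
        n = cross(sub(b, a), sub(c, a))  # outward normal for CCW winding
        return dot(n, sub(cam, a)) > 0

    return [e for e, fs in edge_faces.items()
            if len(fs) == 1 or front_facing(fs[0]) != front_facing(fs[1])]


def sample_edge_point(verts, edges, rng):
    """Pick an edge with probability proportional to its length, then a
    uniform point on it. Returns (point, pdf per unit edge length)."""
    lengths = [math.dist(verts[i], verts[j]) for i, j in edges]
    total = sum(lengths)
    u = rng.random() * total
    for (i, j), length in zip(edges, lengths):
        if u < length:
            break
        u -= length
    t = rng.random()
    a, b = verts[i], verts[j]
    point = tuple(x + t * (y - x) for x, y in zip(a, b))
    return point, 1.0 / total  # (length / total) * (1 / length)


# A tetrahedron with outward-facing winding, viewed from (2, 2, 2).
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
edges = silhouette_edges(verts, faces, cam=(2.0, 2.0, 2.0))
point, pdf = sample_edge_point(verts, edges, random.Random(0))
print(sorted(edges), point, pdf)
```

From this viewpoint only the slanted face is visible, so its three edges form the outline. The three edges at the origin lie between two back-facing faces and are skipped.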

Hierarchical edge sampling

Two hierarchies:

- Triangle edges associated with only one face, and edges of meshes without smooth shading.

- All the remaining edges.

Bounding volume hierarchies:

- 3D bounding volumes (first group): 3D positions of the edge endpoints.

- 6D bounding volumes (remaining edges): positions of the edge endpoints and the normals of the faces adjacent to the edge.
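Drawing an edge from a hierarchy can be sketched as a binary tree in which each node stores the total weight of its subtree. Sampling starts at the root and picks each child in proportion to its weight, so a leaf's probability is its weight over the total. In the paper, node importance is derived from the bounding volumes listed above for the current query. The fixed per-edge weights and the `Node`/`build`/`sample` names here are hypothetical simplifications.

```python
import random


class Node:
    def __init__(self, weight, left=None, right=None, edge=None):
        self.weight = weight  # total (positive) weight of the subtree
        self.left = left
        self.right = right
        self.edge = edge      # edge id at a leaf, None for internal nodes


def build(edge_ids, weights):
    """Build a balanced binary tree; an internal weight is the sum of its children."""
    if len(edge_ids) == 1:
        return Node(weights[0], edge=edge_ids[0])
    mid = len(edge_ids) // 2
    left = build(edge_ids[:mid], weights[:mid])
    right = build(edge_ids[mid:], weights[mid:])
    return Node(left.weight + right.weight, left, right)


def sample(root, rng):
    """Descend from the root choosing children by weight. Returns (edge, prob)."""
    node, prob = root, 1.0
    while node.edge is None:
        p_left = node.left.weight / node.weight
        if rng.random() < p_left:
            node, prob = node.left, prob * p_left
        else:
            node, prob = node.right, prob * (1.0 - p_left)
    return node.edge, prob


weights = [1.0, 2.0, 3.0, 4.0]
root = build(list(range(len(weights))), weights)
rng = random.Random(0)
draws = [sample(root, rng) for _ in range(10_000)]
```

The probability returned with each edge is needed to weight that edge's contribution in the Monte Carlo estimate.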

Importance sampling a single edge

- Sample based on the BRDF

- Precompute a table of fitted linearly transformed cosines for all BRDFs.
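A linearly transformed cosine (LTC) is a clamped cosine distribution warped by a 3×3 matrix $M$. Its density is $D(\omega) = D_o(\omega_o)\,|M^{-1}| / \|M^{-1}\omega\|^3$ with $\omega_o = M^{-1}\omega / \|M^{-1}\omega\|$. The paper uses fitted LTCs to place samples along an edge. The sketch below only shows the LTC distribution itself (density and sampling), with a made-up matrix rather than a fitted table entry.

```python
import numpy as np


def ltc_pdf(w, M):
    """LTC density at unit directions w (rows of an (n, 3) array)."""
    Minv = np.linalg.inv(M)
    v = w @ Minv.T                       # M^-1 w for each row
    norm = np.linalg.norm(v, axis=-1)
    d_o = np.maximum(v[..., 2] / norm, 0.0) / np.pi   # clamped cosine D_o(w_o)
    return d_o * abs(np.linalg.det(Minv)) / norm**3   # change-of-variables Jacobian


def ltc_sample(M, n, rng):
    """Sample the clamped cosine (Malley's method), apply M, renormalize."""
    u1, u2 = rng.random(n), rng.random(n)
    r, phi = np.sqrt(u1), 2.0 * np.pi * u2
    wo = np.stack([r * np.cos(phi), r * np.sin(phi), np.sqrt(1.0 - u1)], axis=-1)
    w = wo @ M.T
    return w / np.linalg.norm(w, axis=-1, keepdims=True)


# Hypothetical matrix; a real renderer would look M up from the fitted table.
M = np.array([[1.0, 0.0, 0.3], [0.0, 0.8, 0.0], [0.0, 0.0, 1.0]])
dirs = ltc_sample(M, 4, np.random.default_rng(0))
print(ltc_pdf(dirs, M))
```

The appeal of LTCs is that integrals of $D$ over polygons and lines can be evaluated in closed form by transforming the geometry with $M^{-1}$.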

Results

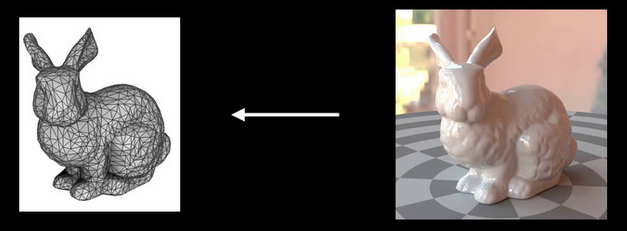

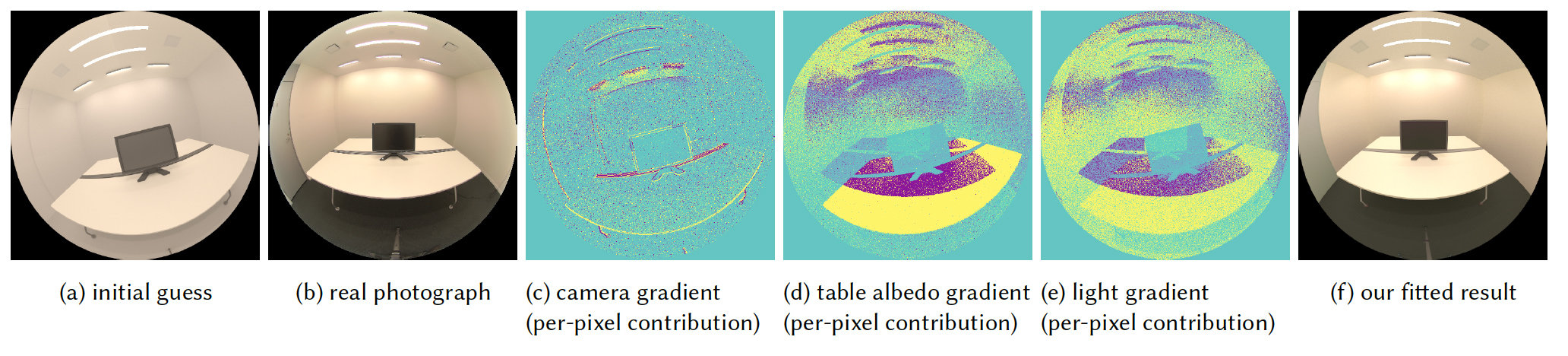

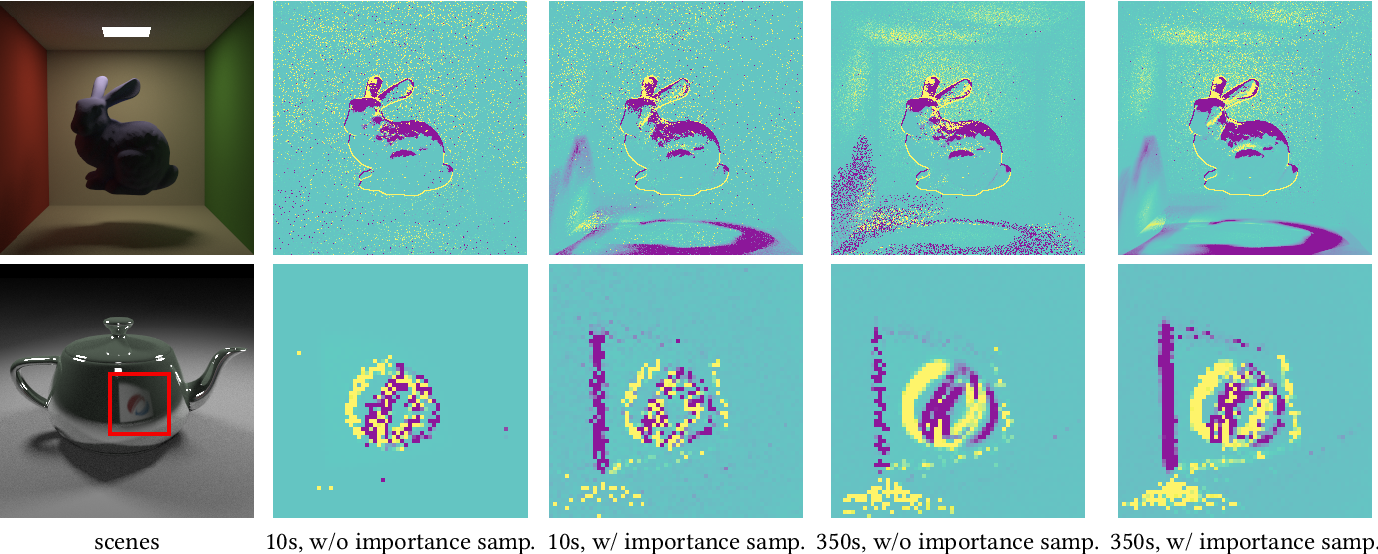

Inverse rendering:

Adversarial examples:

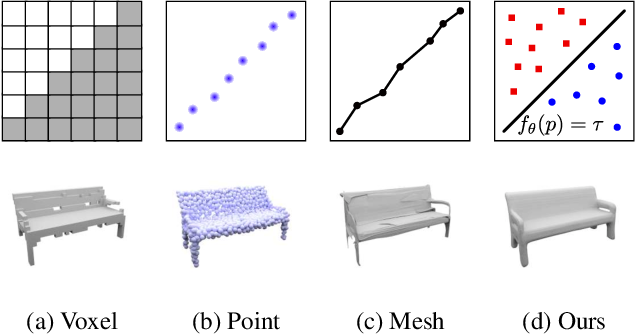
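Inverse rendering runs gradient descent through the renderer. A toy one-parameter version follows. It uses one pixel, a vertical edge at $x = p$, and hypothetical shading on each side. It renders with area sampling, differentiates with edge sampling, and recovers the edge position that reproduces an "observed" pixel value:

```python
import random


def f_in(x, y):
    return 1.0 + y   # hypothetical shading of the region x < p


def f_out(x, y):
    return 0.5 * x   # hypothetical shading of the region x > p


def render(p, n, rng):
    """Area-sampled pixel value: Monte Carlo over the unit pixel."""
    total = 0.0
    for _ in range(n):
        x, y = rng.random(), rng.random()
        total += f_in(x, y) if x < p else f_out(x, y)
    return total / n


def render_grad(p, n, rng):
    """Edge-sampled dI/dp from uniform points on the edge x = p."""
    total = 0.0
    for _ in range(n):
        y = rng.random()
        total += f_in(p, y) - f_out(p, y)
    return total / n


rng = random.Random(0)
p_true = 0.7
target = 1.5 * p_true + 0.25 * (1.0 - p_true**2)  # "observed" pixel (closed form)

p, lr = 0.2, 0.2
for _ in range(60):
    residual = render(p, 10_000, rng) - target
    grad = 2.0 * residual * render_grad(p, 1_000, rng)  # d/dp of the squared error
    p = min(max(p - lr * grad, 0.01), 0.99)             # keep the edge inside the pixel
print(f"recovered p = {p:.3f} (true {p_true})")
```

Without the edge term, the per-sample derivative of `render` with respect to `p` would be zero and the loop would never move.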

Implicit Representation

- Do not represent 3D shape explicitly

- Instead, represent it implicitly as the decision boundary of a classifier

Occupancy networks

- Represent the implicit surface as a neural network.

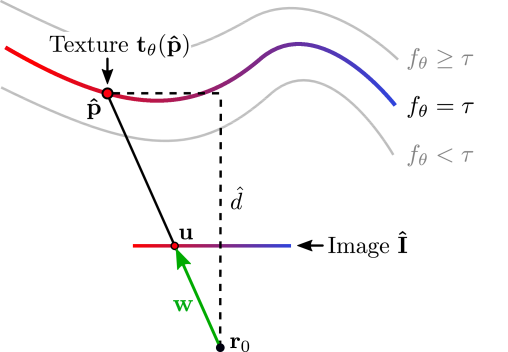

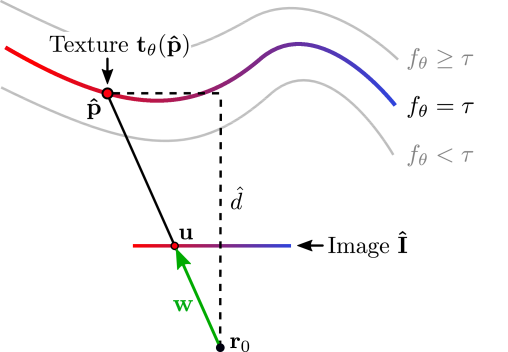
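A minimal sketch of the idea: a small fully connected network maps a 3D point to an occupancy probability, and the surface is the decision boundary where it crosses a threshold $\tau$. The weights are random and untrained, the layer sizes are hypothetical, and the conditioning on an input observation that the original network also takes is omitted.

```python
import numpy as np


def init_mlp(sizes, rng):
    """Random, untrained weights and biases for a fully connected network."""
    return [
        (rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n)), rng.normal(0.0, 0.5, size=n))
        for m, n in zip(sizes[:-1], sizes[1:])
    ]


def occupancy(params, points):
    """f_theta: R^3 -> (0, 1) using ReLU hidden layers and a sigmoid output."""
    h = points
    for W, b in params[:-1]:
        h = np.maximum(h @ W + b, 0.0)
    W, b = params[-1]
    logits = (h @ W + b)[..., 0]
    return 1.0 / (1.0 + np.exp(-logits))


rng = np.random.default_rng(0)
params = init_mlp([3, 64, 64, 1], rng)
tau = 0.5  # decision threshold

# Classify grid points; the surface lies between points with different labels.
n = 16
axis = np.linspace(-1.0, 1.0, n)
grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1).reshape(-1, 3)
occ = occupancy(params, grid).reshape(n, n, n)
inside = occ > tau
boundary_pairs = np.count_nonzero(inside[1:] != inside[:-1])  # label changes along x
print(f"{inside.sum()} of {inside.size} points inside, {boundary_pairs} crossings along x")
```

Because the network can be queried at any point, the resolution of the extracted surface is set by the evaluation grid, not by the representation.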

Differentiable Volumetric Rendering

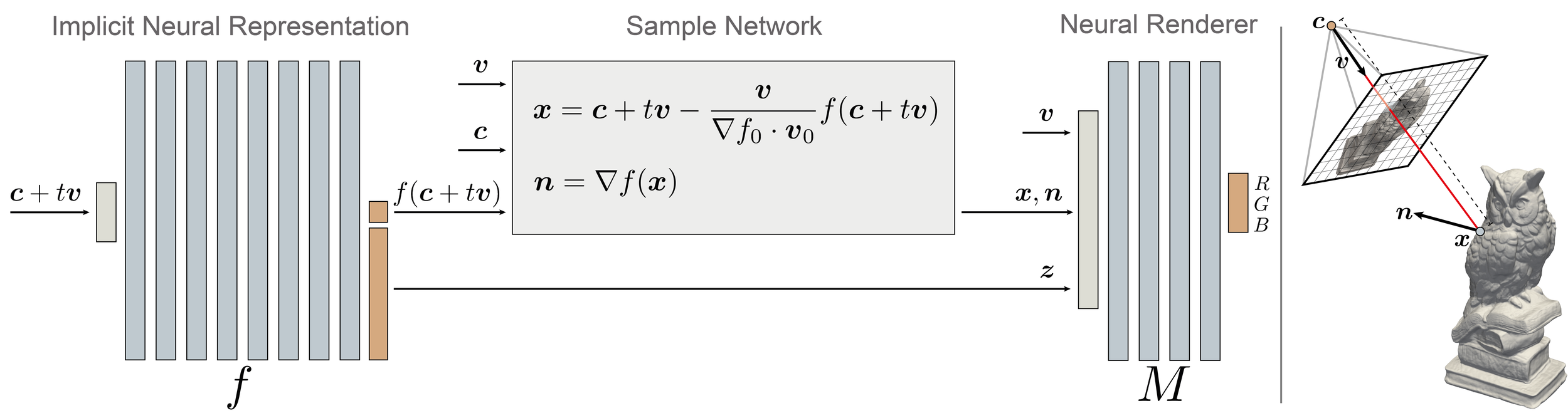

Architecture

- Volumetric Rendering is differentiable here!

- Depth gradients

Forward Pass - Rendering:

Texture mapping:

Loss:
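A sketch of this forward pass under stated assumptions. An analytic occupancy field of a sphere stands in for the occupancy network $f_\theta$, with its radius playing the role of $\theta$. A made-up function stands in for the texture network $t_\theta$, and the observed color is arbitrary. The surface point is bracketed by ray marching to the first crossing of $\tau = 0.5$ and refined with bracketed secant steps (regula falsi). Its color is then compared with the observation using an L1 loss, chosen here for simplicity.

```python
import math


def occupancy(p, r, k=10.0):
    """Stand-in for f_theta: smooth occupancy of a sphere of radius r."""
    return 1.0 / (1.0 + math.exp(-k * (r - math.sqrt(sum(c * c for c in p)))))


def point_on_ray(r0, w, d):
    return tuple(o + d * c for o, c in zip(r0, w))


def find_surface(r0, w, r, tau=0.5, near=0.0, far=6.0, n=64, iters=8):
    """Ray marching to bracket the first outside->inside crossing of tau,
    then bracketed secant steps (regula falsi) to refine the depth."""
    def g(d):
        return occupancy(point_on_ray(r0, w, d), r) - tau

    ds = [near + (far - near) * i / (n - 1) for i in range(n)]
    for a, b in zip(ds[:-1], ds[1:]):
        ga, gb = g(a), g(b)
        if ga < 0.0 <= gb:
            for _ in range(iters):
                d = a - ga * (b - a) / (gb - ga)
                gd = g(d)
                if gd < 0.0:
                    a, ga = d, gd
                else:
                    b, gb = d, gd
            return a - ga * (b - a) / (gb - ga)
    return None  # the ray misses the surface


def texture(p):
    """Hypothetical stand-in for t_theta: 3D point -> RGB color."""
    return tuple(0.5 + 0.5 * math.tanh(c) for c in p)


def l1_loss(pred, observed):
    return sum(abs(a - b) for a, b in zip(pred, observed))


r0, w = (0.0, 0.0, -3.0), (0.0, 0.0, 1.0)
d_hat = find_surface(r0, w, r=0.8)
p_hat = point_on_ray(r0, w, d_hat)
color = texture(p_hat)
loss = l1_loss(color, (0.5, 0.5, 0.2))
print(d_hat, color, loss)
```

For this stand-in the exact first hit is at depth $3 - r = 2.2$, which makes the search easy to check.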

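Differentiable Rendering: in the chain rule for the loss gradient, the texture-network terms are KNOWN, while the derivative of the surface point with respect to the network parameters (??) is not.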

Depth Gradients:

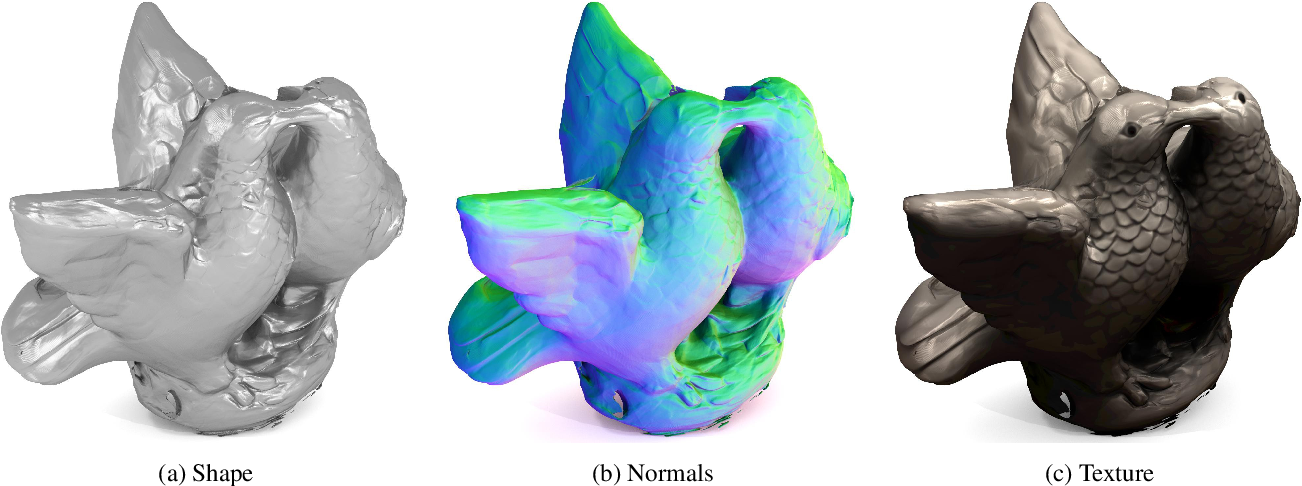
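The depth gradient follows from implicit differentiation of $f_\theta(\hat{p}) = \tau$ along the ray $\hat{p} = r_0 + \hat{d}\,w$, which gives $\partial\hat{p}/\partial\theta = -w\,(\nabla_p f_\theta(\hat{p}) \cdot w)^{-1}\,\partial f_\theta(\hat{p})/\partial\theta$. The sketch checks this against a closed-form answer. A sphere occupancy field stands in for the network, its radius $r$ is the single parameter, and the ray starts at $(0.3, 0, -3)$ and points along $+z$.

```python
import math

K = 10.0  # sharpness of the hypothetical occupancy field


def occupancy(p, r):
    """Stand-in for f_theta with a single parameter theta = r."""
    return 1.0 / (1.0 + math.exp(-K * (r - math.sqrt(sum(c * c for c in p)))))


def occupancy_grads(p, r):
    """Analytic df/dp (3-vector) and df/dr, using sigmoid' = s (1 - s)."""
    norm = math.sqrt(sum(c * c for c in p))
    s = occupancy(p, r)
    ds = K * s * (1.0 - s)  # derivative of sigmoid(K * (r - |p|)) w.r.t. r
    return tuple(-ds * c / norm for c in p), ds


def surface_point_grad(p_hat, w, r):
    """d p_hat / d theta = -w * (grad_p f . w)^-1 * d f / d theta."""
    grad_p, df_dr = occupancy_grads(p_hat, r)
    denom = sum(g * c for g, c in zip(grad_p, w))
    return tuple(-c * df_dr / denom for c in w)


r, w, x0 = 0.8, (0.0, 0.0, 1.0), 0.3
p_hat = (x0, 0.0, -math.sqrt(r * r - x0 * x0))  # exact first hit of this ray
grad = surface_point_grad(p_hat, w, r)
expected_z = -r / math.sqrt(r * r - x0 * x0)    # d/dr of -sqrt(r^2 - x0^2)
print(grad, expected_z)
```

Only quantities at the surface point are needed, so the ray-marching loop itself never has to be differentiated.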

Results

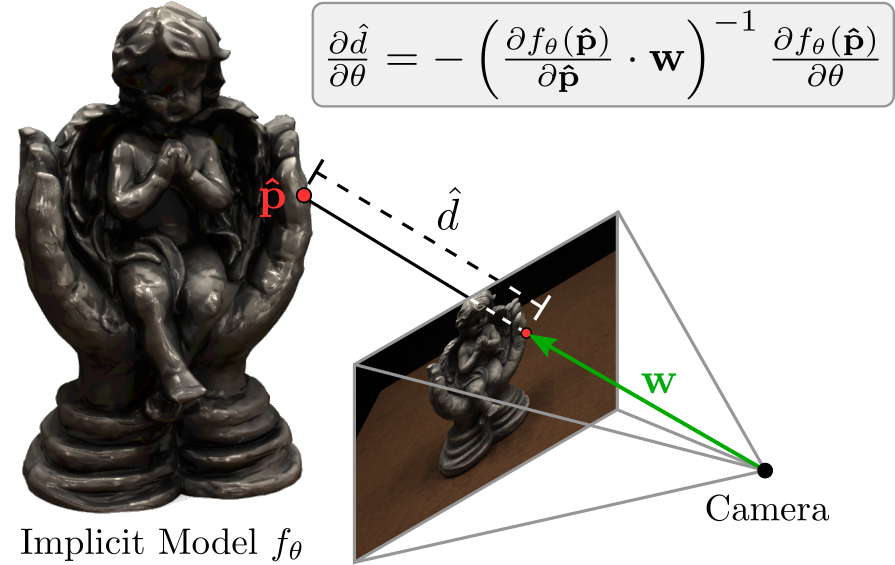

Implicit Differentiable Rendering

Multiview 3D Surface Reconstruction

Input: a collection of masked 2D images with rough or noisy camera information.

Targets:

- Geometry

- Appearance (BRDF, lighting conditions)

- Cameras

Method:

Geometry:

Signed distance function (SDF) + implicit geometric regularization (IGR)

- geometry parameters
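IGR regularizes the network with an eikonal term, the mean of $(\|\nabla f(x)\| - 1)^2$ over sample points, which pushes $f$ towards a signed distance function. A minimal sketch that uses central finite differences instead of automatic differentiation compares a true SDF with a function that has the same zero level set but is not a distance:

```python
import random


def eikonal_loss(f, points, h=1e-4):
    """Mean of (|grad f(x)| - 1)^2, with central finite-difference gradients."""
    total = 0.0
    for x in points:
        grad = []
        for i in range(3):
            xp = list(x)
            xm = list(x)
            xp[i] += h
            xm[i] -= h
            grad.append((f(xp) - f(xm)) / (2.0 * h))
        total += (sum(g * g for g in grad) ** 0.5 - 1.0) ** 2
    return total / len(points)


def sdf_sphere(x):
    # True signed distance to the unit sphere: |grad| = 1 away from the origin.
    return sum(c * c for c in x) ** 0.5 - 1.0


def not_sdf(x):
    # Same zero level set, but |grad| = 2|x|, which is not 1 in general.
    return sum(c * c for c in x) - 1.0


rng = random.Random(0)
points = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(1000)]
print(eikonal_loss(sdf_sphere, points), eikonal_loss(not_sdf, points))
```

Both functions describe the same surface. Only the eikonal term distinguishes them, which is why it is added as a regularizer.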

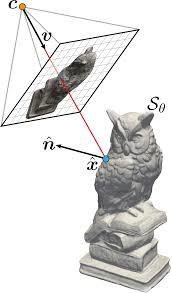

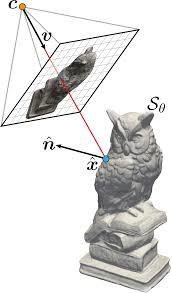

IDR - Forward pass

Ray cast (first intersection):
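A standard way to find the first intersection of a ray with an SDF is sphere tracing. Because the SDF value at a point bounds the distance to the nearest surface, stepping by that value can never skip past the first hit. The sketch uses an analytic sphere SDF as hypothetical geometry:

```python
import math


def sdf(p, radius=1.0):
    """Hypothetical geometry: signed distance to a sphere at the origin."""
    return math.sqrt(sum(c * c for c in p)) - radius


def sphere_trace(c, v, f=sdf, t_max=10.0, eps=1e-6, max_steps=200):
    """First t > 0 with f(c + t v) ~ 0, stepping by the SDF value each time."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(ci + t * vi for ci, vi in zip(c, v))
        d = f(p)
        if d < eps:
            return t
        t += d
        if t > t_max:
            break
    return None  # no intersection found


t_center = sphere_trace((0.0, 0.0, -3.0), (0.0, 0.0, 1.0))
t_offset = sphere_trace((0.6, 0.0, -3.0), (0.0, 0.0, 1.0))
t_miss = sphere_trace((2.0, 0.0, -3.0), (0.0, 0.0, 1.0))
print(t_center, t_offset, t_miss)
```

Convergence slows for rays that graze the surface, which is one reason practical implementations add a step limit and a fallback.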

- appearance parameters

Output (light field), computed from:

- the surface normal

- a global geometry feature vector

Differentiable intersections

Lemma:
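With the cameras held fixed (a simplification; the lemma also covers camera parameters), the idea can be written as follows. Let $x_0 = c + t_0 v$ be the intersection found by ray casting at the current parameters $\theta_0$, so $f(x_0; \theta_0) = 0$, where $c$ is the camera center and $v$ the viewing direction. Then

```latex
\hat{x}(\theta) = x_0 - \frac{f(x_0; \theta)}{\nabla_x f(x_0; \theta_0) \cdot v}\, v
```

equals $x_0$ at $\theta = \theta_0$. Its first derivative there, $-v\,\partial_\theta f(x_0; \theta_0) / (\nabla_x f(x_0; \theta_0) \cdot v)$, matches that of the exact intersection. The intersection can therefore be expressed inside the computation graph without differentiating through the ray-casting loop.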

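Light field approximation:

$$L(\hat{x}, \omega_o) = L_e(\hat{x}, \omega_o) + \int_{\Omega} f_r(\hat{x}, \omega_i, \omega_o)\, L_i(\hat{x}, \omega_i)\, (\hat{n} \cdot \omega_i)\, d\omega_i$$

where $f_r$ is the BRDF, $\omega_o$ the outgoing direction, $\omega_i$ the incoming direction, $L_e$ the emitted radiance, and $L_i$ the incoming radiance.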

Results: