Decision-Making Beyond Prediction

Dhrumil Mehta

Associate Prof @ Columbia U. Graduate School of Journalism

Visiting Prof @ Harvard Kennedy School of Government

dhrumil.mehta@columbia.edu

@datadhrumil

@dmil

Guest Lecture @ Cornell Tech

"Off The Record" Please

Highlights:

Currently

- Associate Prof. @ Columbia Graduate School of Journalism

- Visiting Prof. @ Harvard Kennedy School

Previously

-

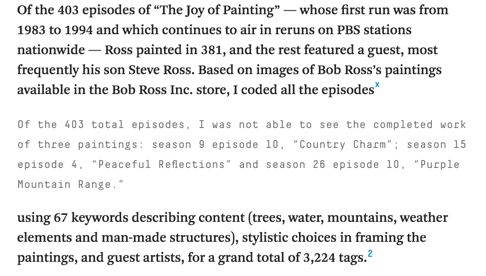

Database Journalist, Politics @ FiveThirtyEight

- Software Development Engineer @ Amazon

- Northwestern:

  - BA in Philosophy + Minor in Cognitive Science

  - MS in Computer Science

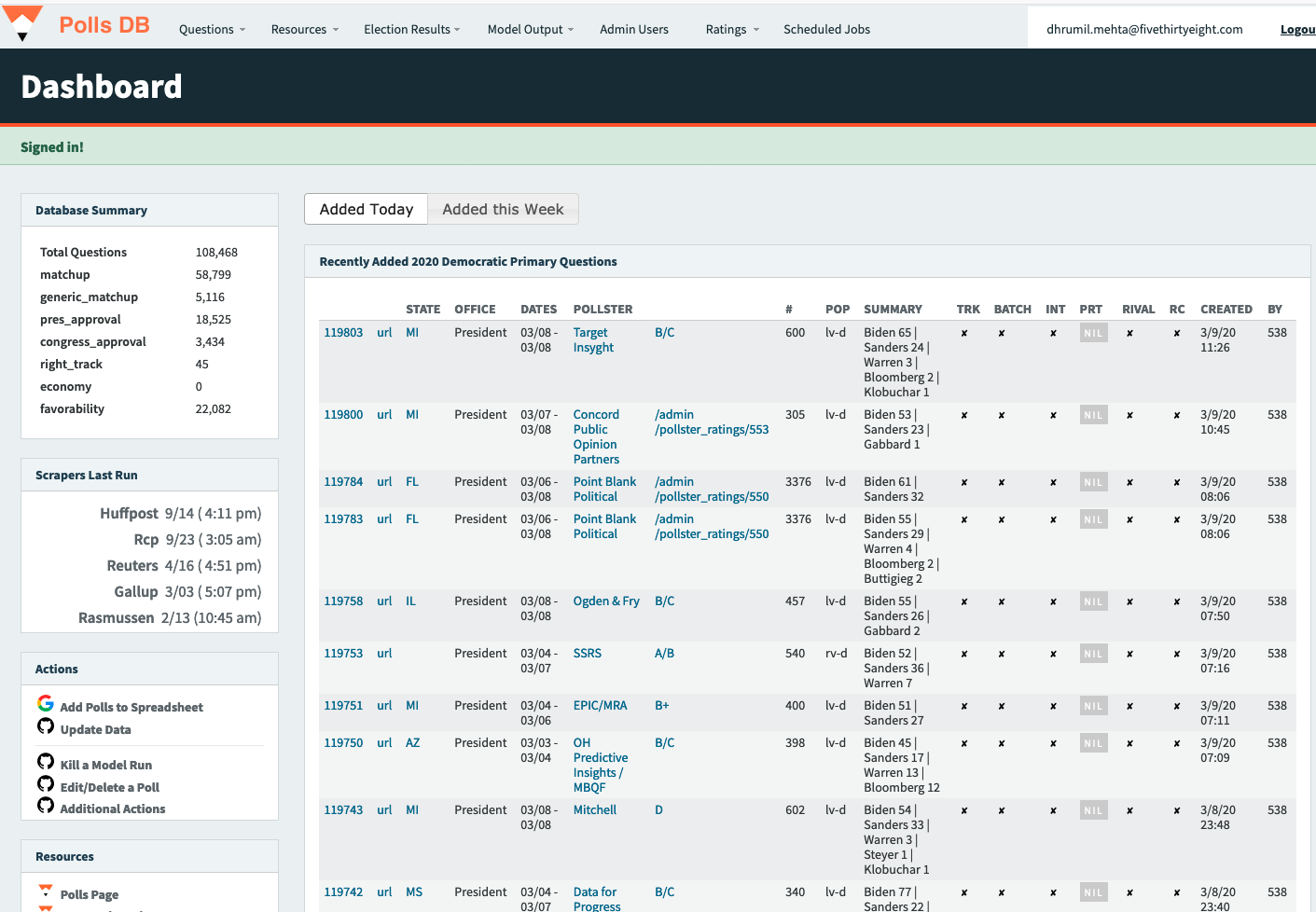

Database Journalist, Politics

Themes

- Identifying editorial decisions when doing data analysis

- Communicating those decisions transparently and efficiently to readers

- Communicating uncertainty to readers

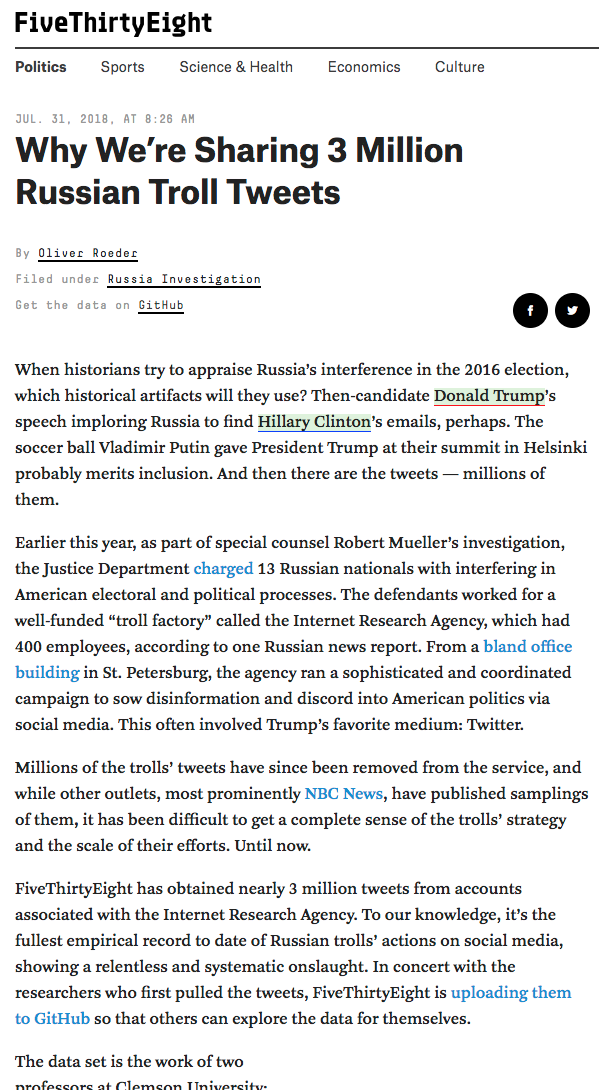

Data Collection

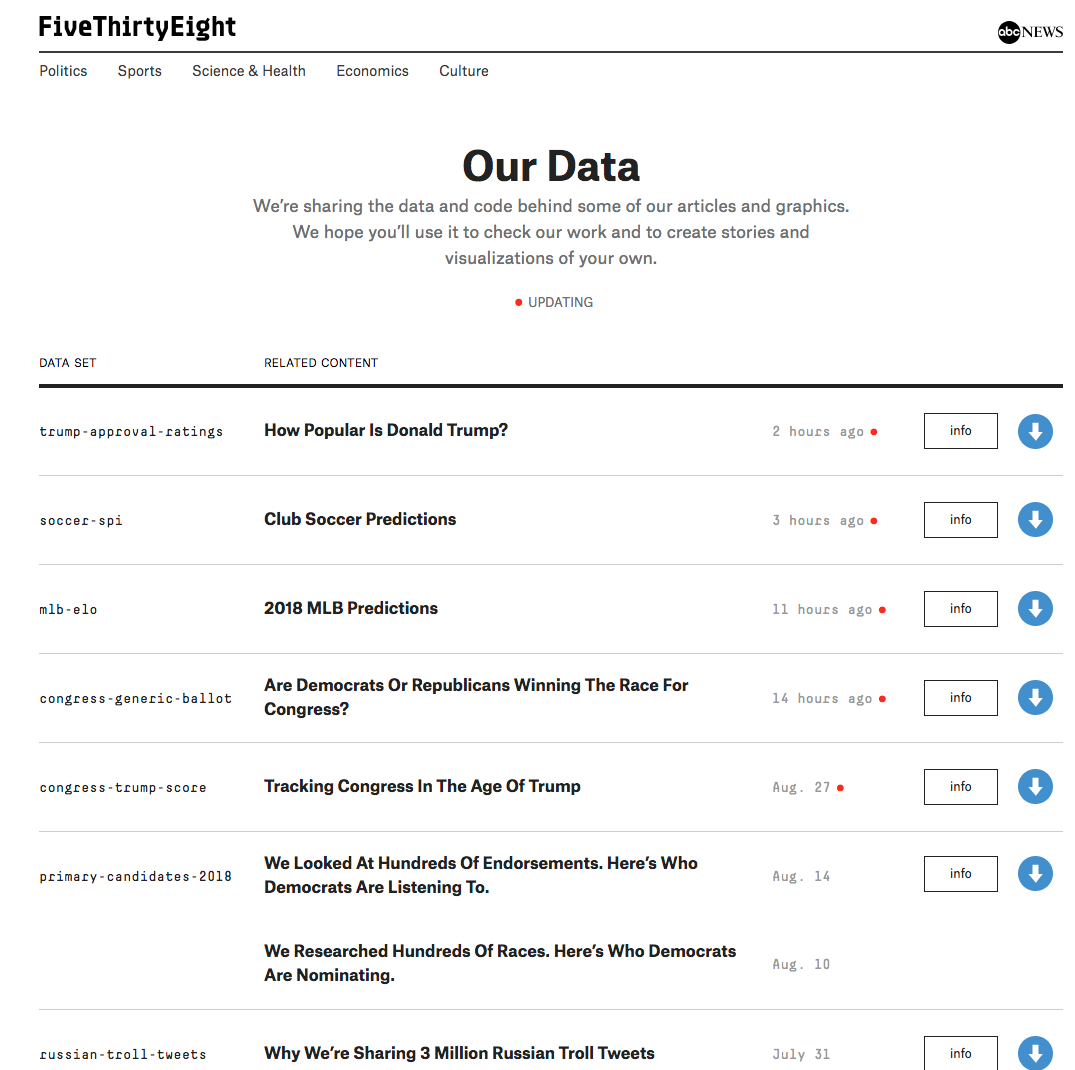

Datasets in the classroom

Datasets at work

Build our own dataset

- With Code / Scrapers

- By Hand

- By Survey Tool

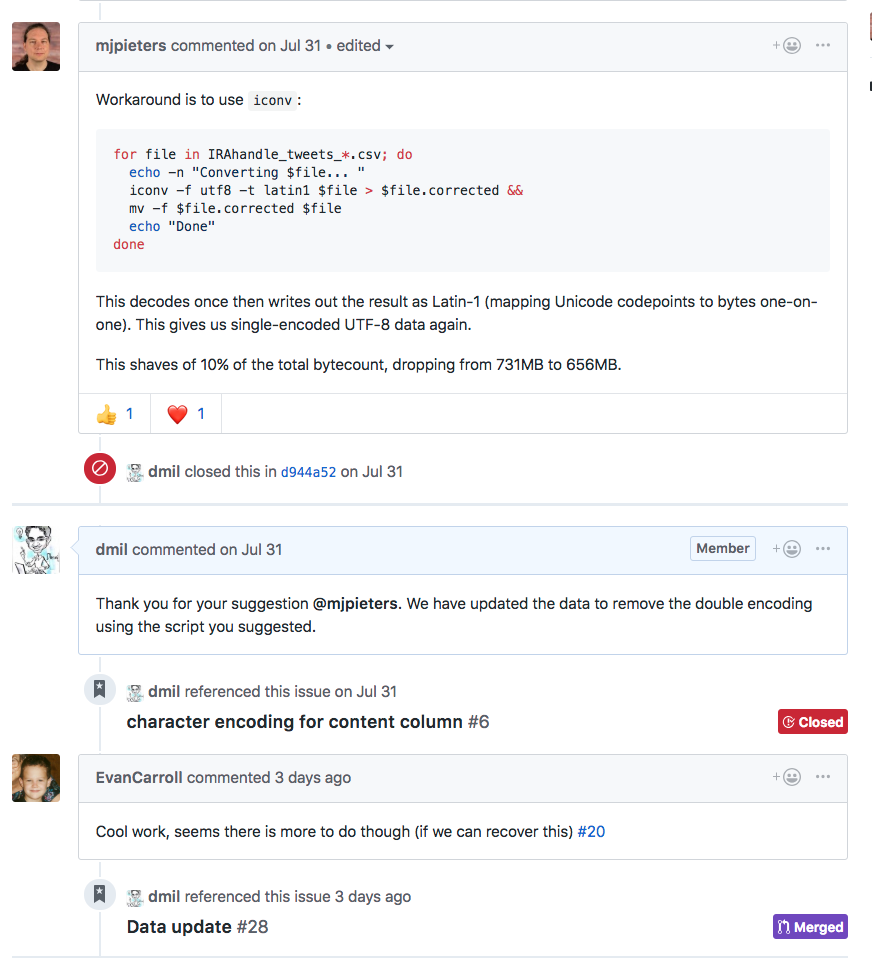

Know your dataset

- Find the point at which the data was collected

- Can you see the actual forms or artifacts from the point of data collection?

- Read any documentation available about the data.

- People to consider contacting:

  - Who collected the data?

  - Who is the data collected from/about?

  - Who are other users of this data?

  - Who is impacted by this data?

- What are the limitations of this data collection?

- Be able to trace every statistical treatment applied between the raw form of the data and its current form, and be able to answer your editors' questions about the nature of the data.

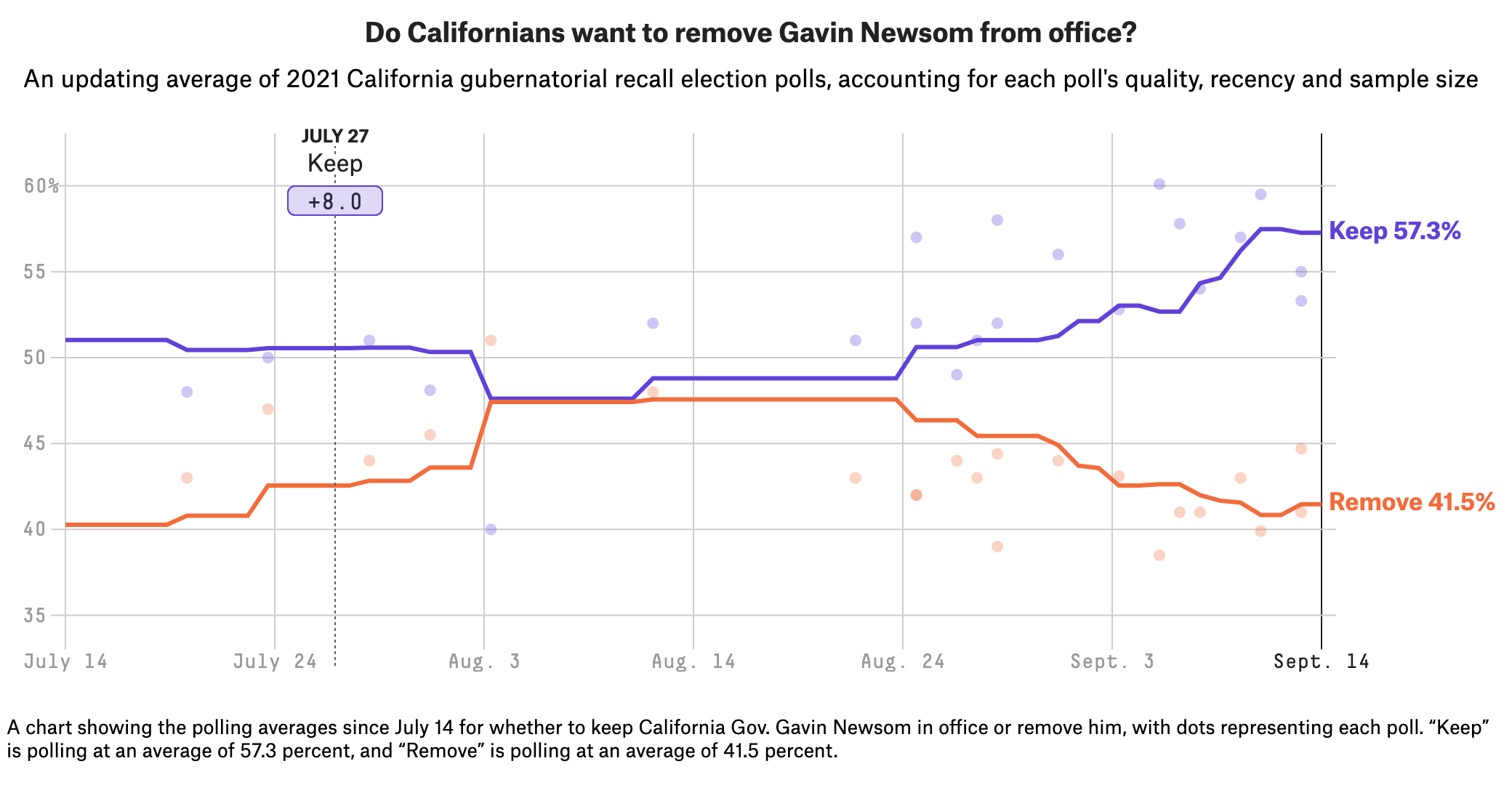

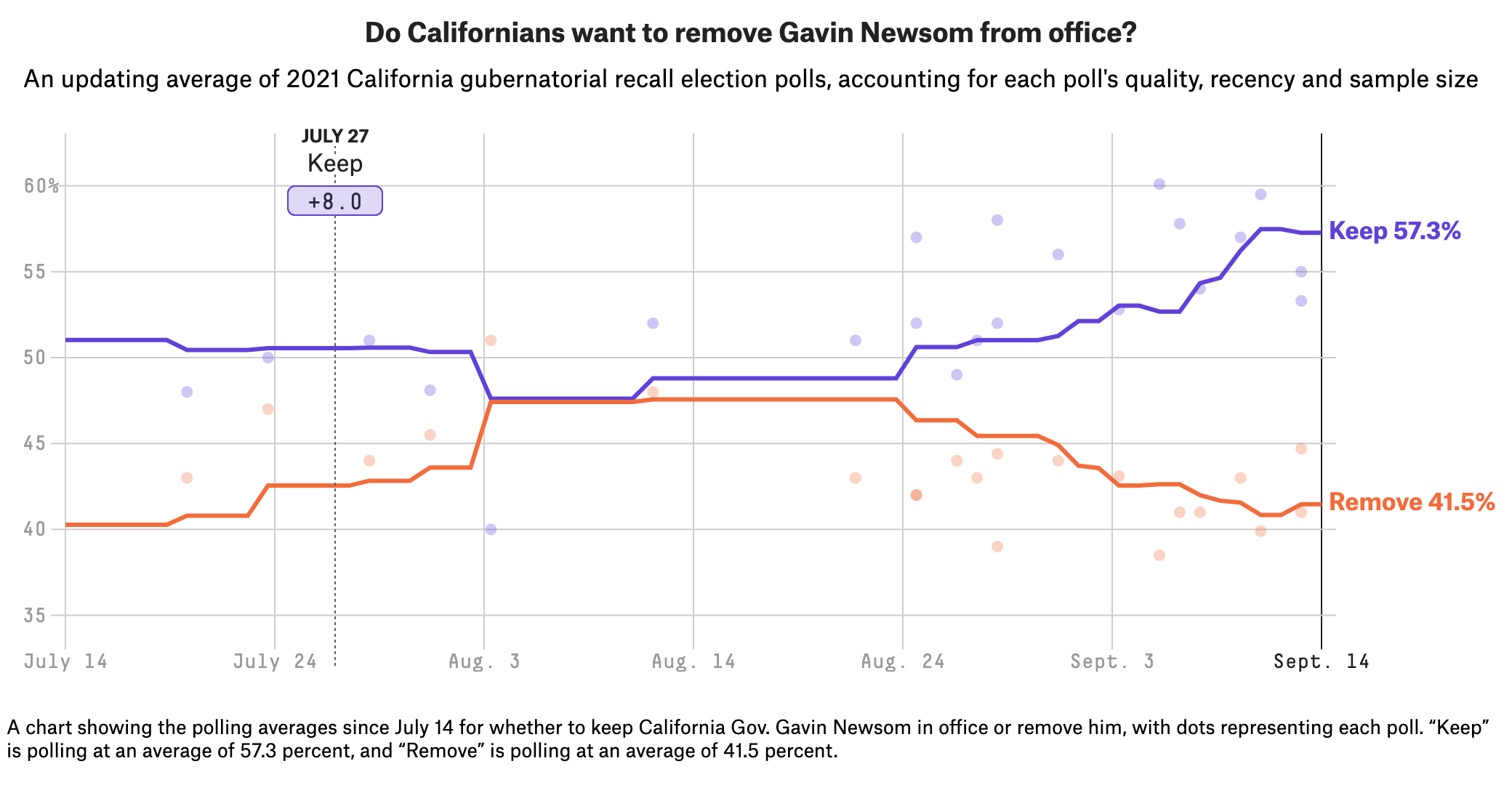

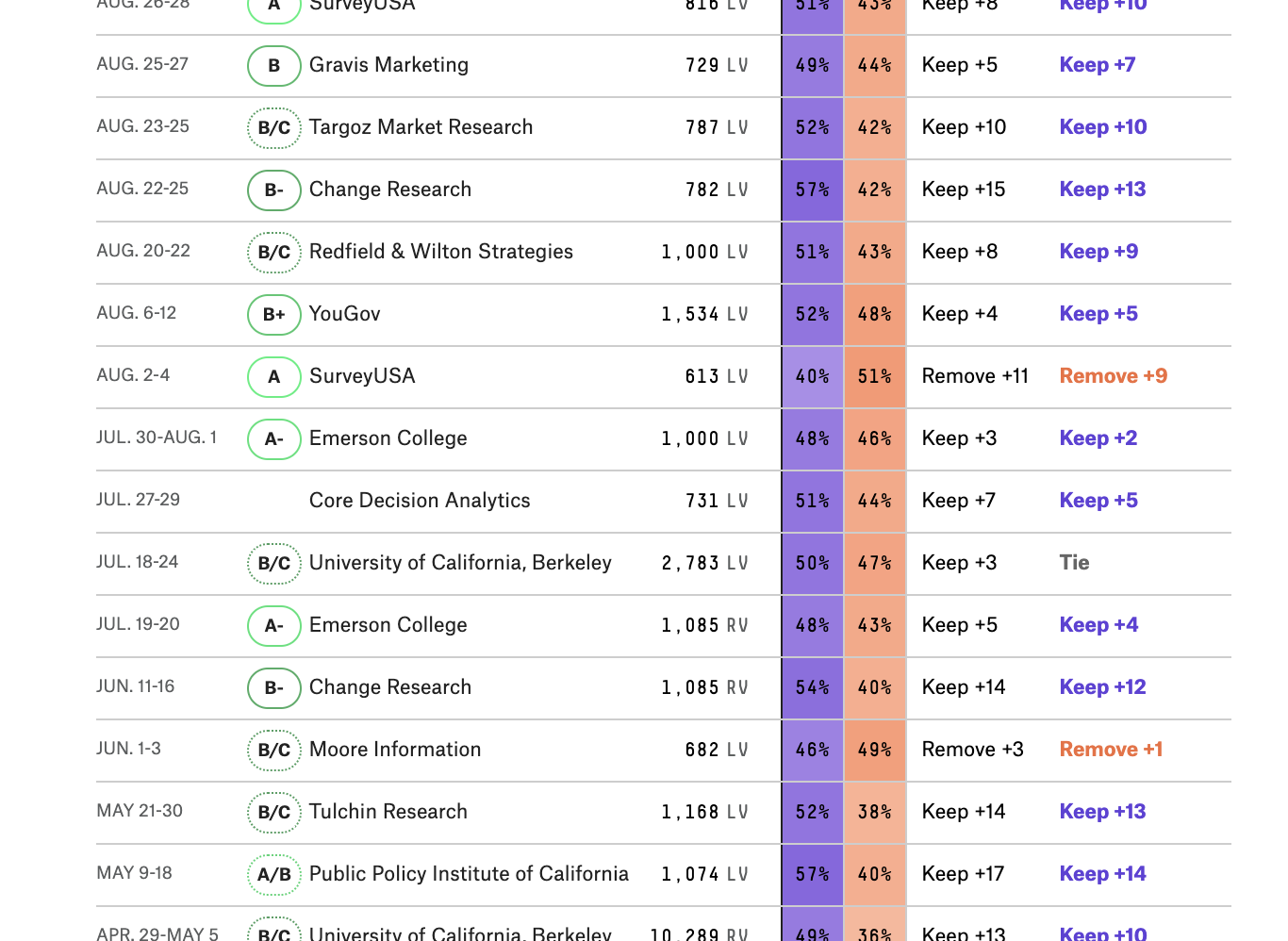

Collecting Polling Data

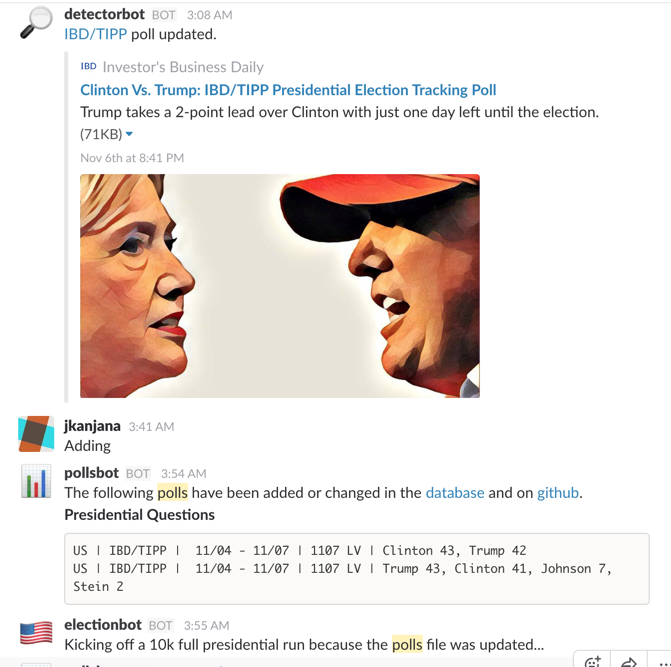

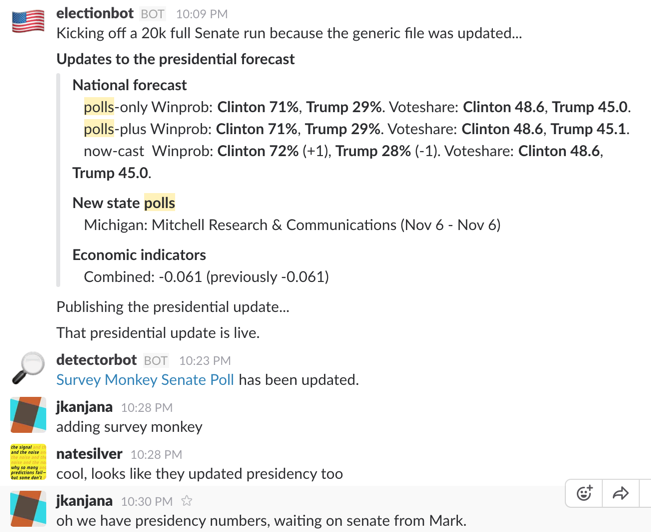

Bots

Internal Workflows

An Individual Poll

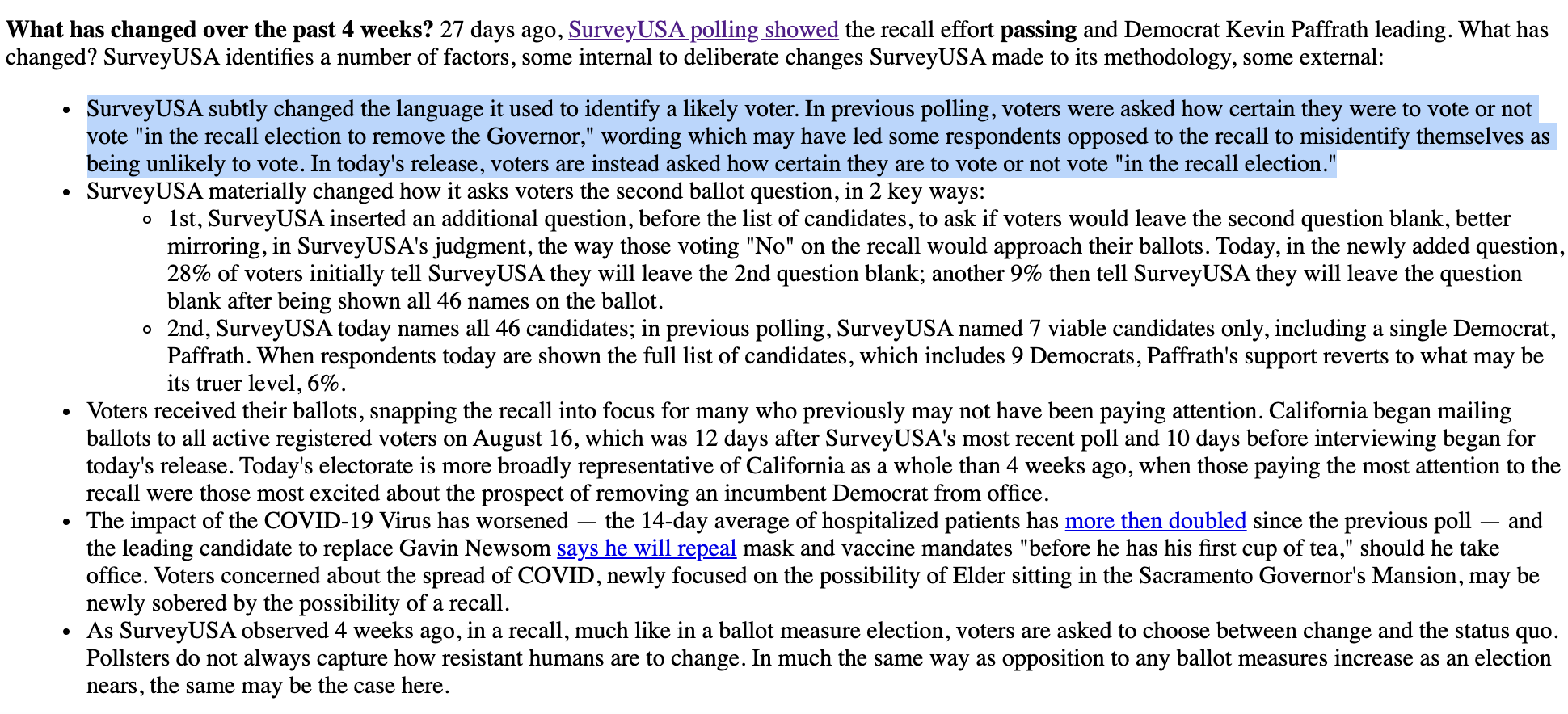

Editorial Decision-Making

Do you keep the poll in the polling average, or do you remove it?

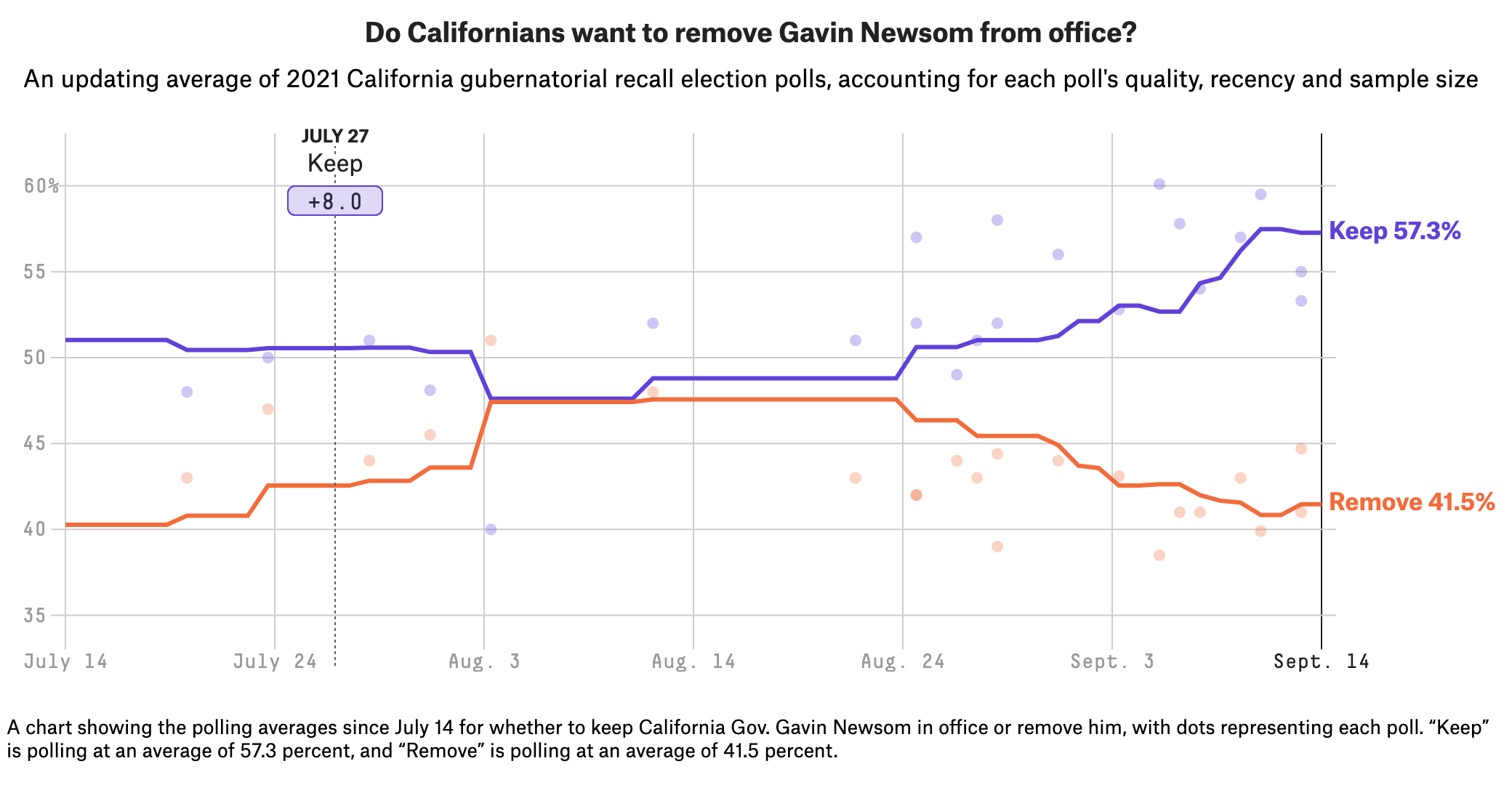

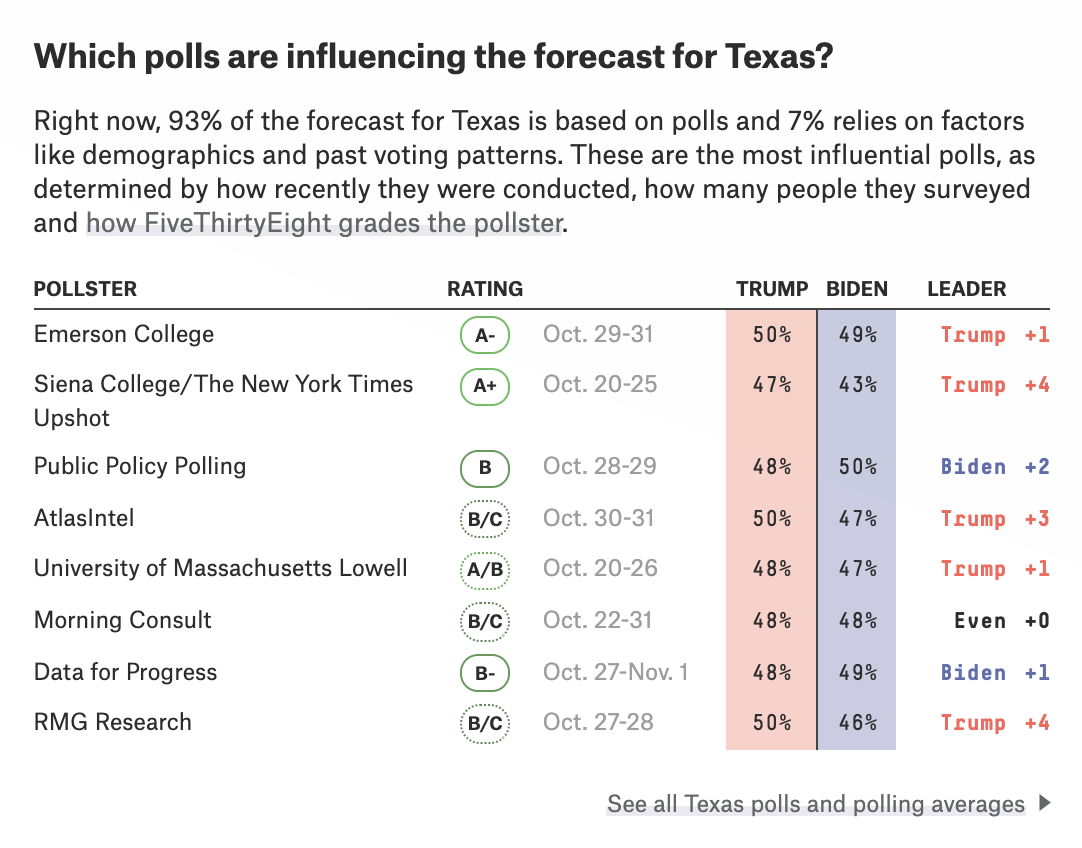

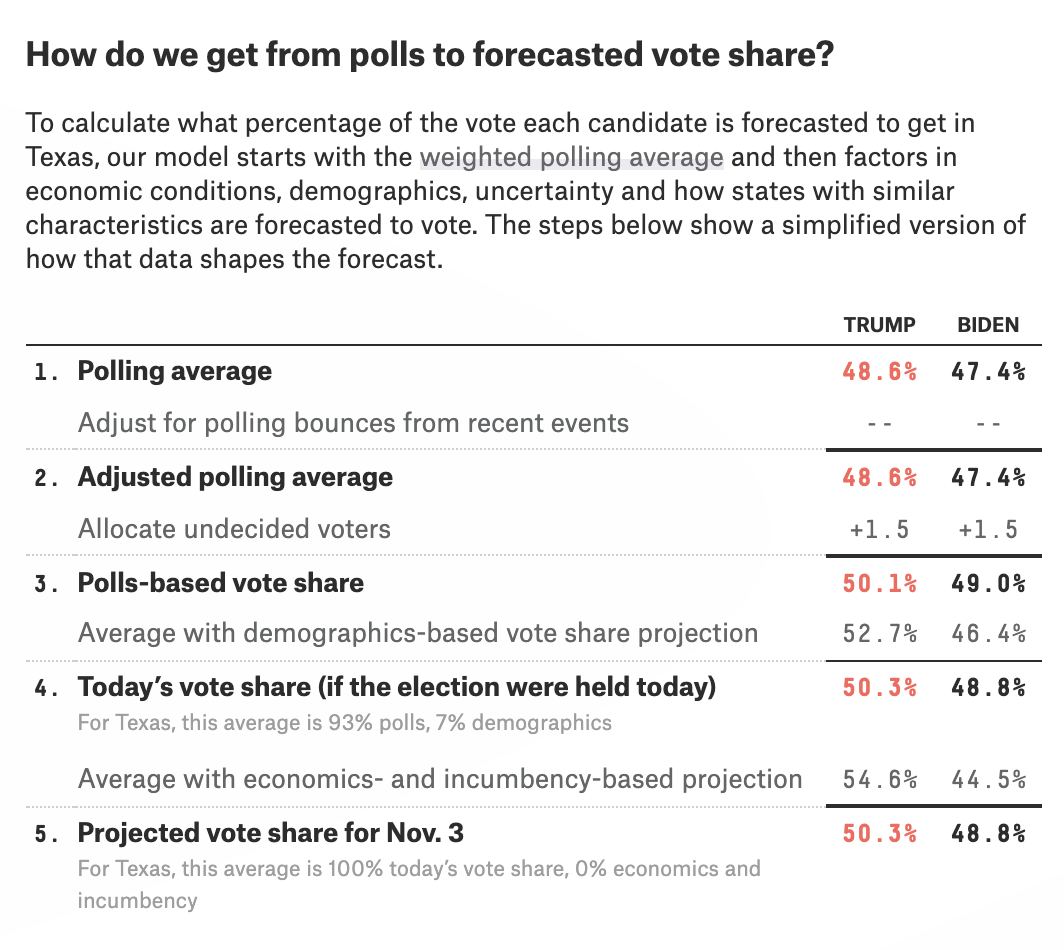

Aggregating Polls

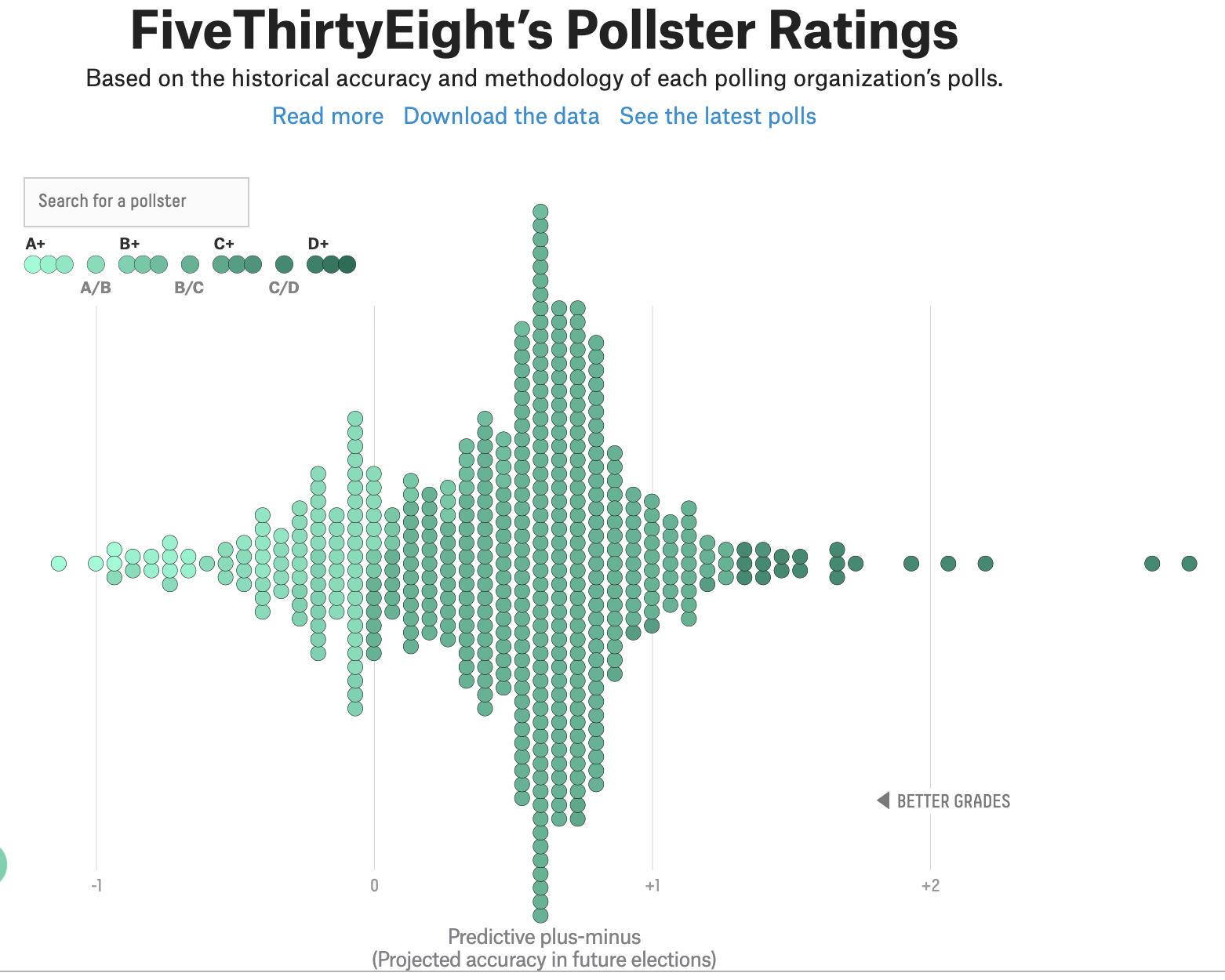

Weighting polls by the historical accuracy of their pollster
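As a minimal sketch of what weighting by track record can look like, the snippet below computes a weighted average of poll margins. The `Poll` class, the pollster names, and the weight values are hypothetical and chosen only for illustration; the slides do not specify how weights are derived from accuracy.

```python
from dataclasses import dataclass


@dataclass
class Poll:
    pollster: str
    margin: float  # candidate A minus candidate B, in percentage points
    weight: float  # hypothetical weight reflecting the pollster's track record


def weighted_average_margin(polls):
    """Return the weight-averaged margin across a list of polls."""
    total_weight = sum(p.weight for p in polls)
    if total_weight <= 0:
        raise ValueError("at least one poll must have a positive weight")
    return sum(p.margin * p.weight for p in polls) / total_weight


# Illustrative data only: a more trusted pollster pulls the average toward its number.
polls = [
    Poll("Pollster A", margin=4.0, weight=1.5),
    Poll("Pollster B", margin=-1.0, weight=0.5),
    Poll("Pollster C", margin=2.0, weight=1.0),
]
average = weighted_average_margin(polls)
print(f"Weighted average margin: {average:+.1f}")
```

An unweighted average of the same three polls would be about +1.7, so the weights move the estimate toward the pollster with the strongest assumed track record.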

But... how do we define historical accuracy?

- Polls from the last 21 days prior to any election* since 1998

- What should we take into account?

\* Senate, House, Governor, Presidential, and Presidential Primary

- Step 1: Collect and classify polls

- Step 2: Calculate simple average error

-

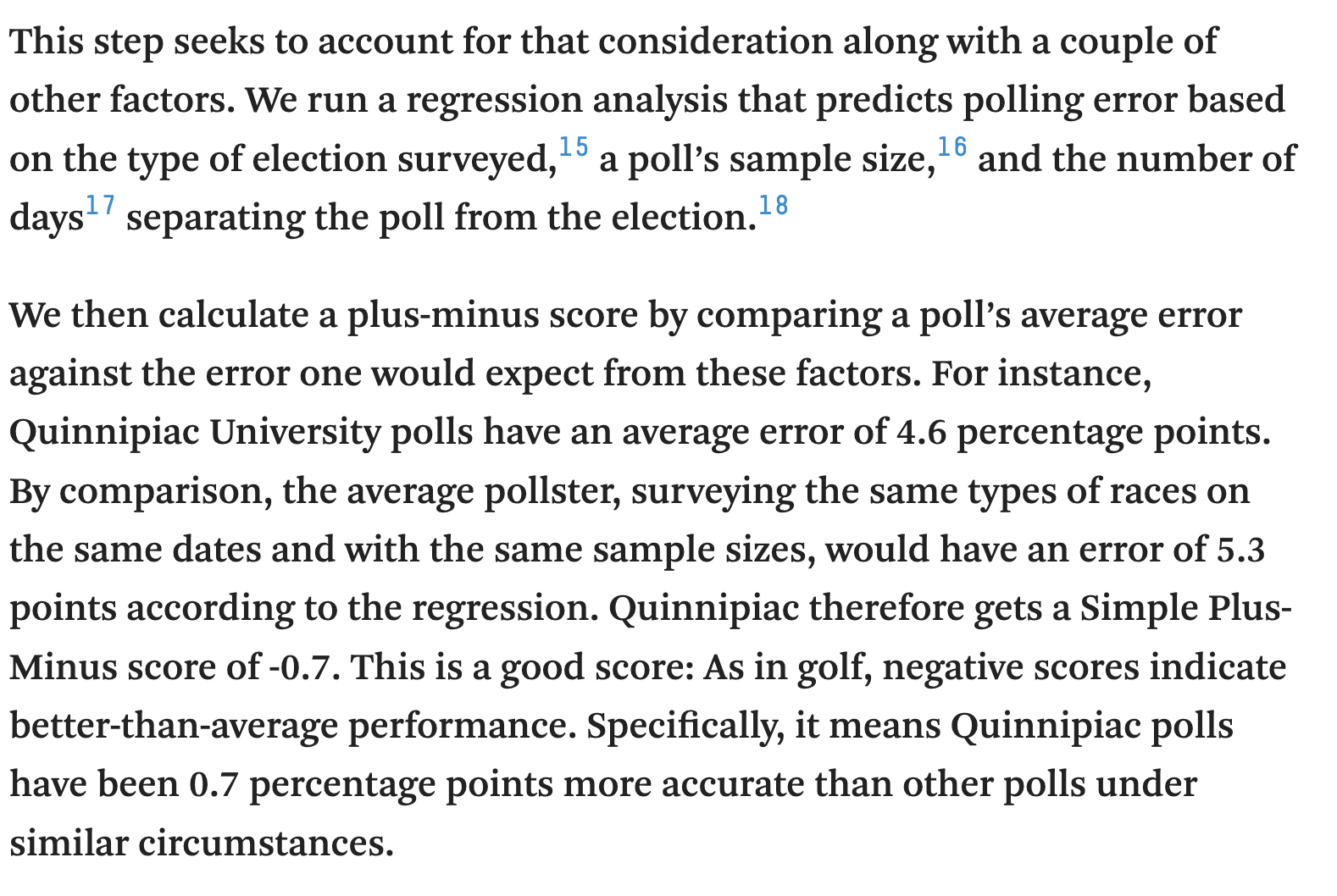

Step 3: Calculate Simple Plus-Minus

-

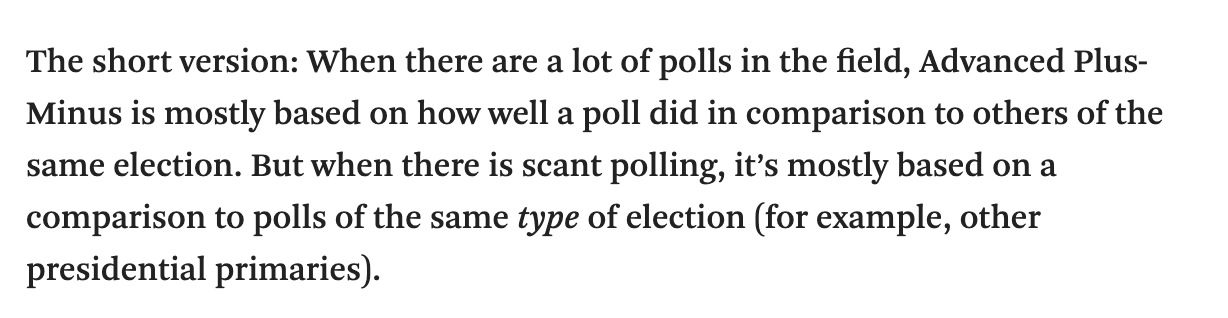

Step 4: Calculate Advanced Plus-Minus

-

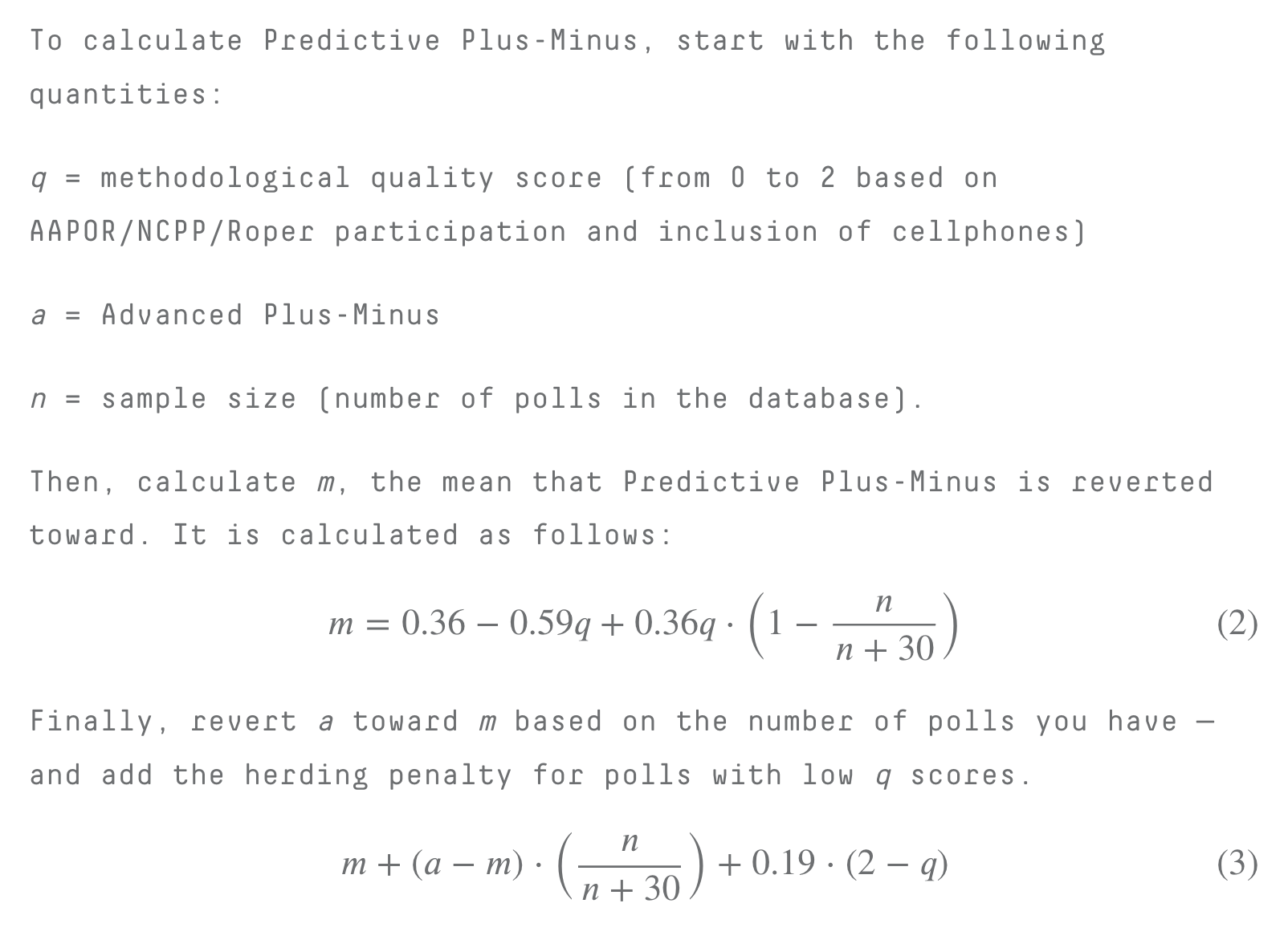

Step 5: Calculate Predictive Plus-Minus

Accounts for other markers of quality, such as methodological standards (NCPP/AAPOR/Roper membership) and whether or not the pollster calls cell phones
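The first of these steps can be sketched in a few lines. This is a simplified illustration under stated assumptions, not FiveThirtyEight's actual formula: error is taken to be the absolute gap between the polled margin and the actual margin, and the "plus-minus" here compares each poll only against other pollsters' polls of the same race type. The records, pollster names, and function names are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (pollster, race_type, polled_margin, actual_margin)
records = [
    ("Pollster A", "Senate", 5.0, 3.0),
    ("Pollster A", "Governor", -2.0, 1.0),
    ("Pollster B", "Senate", 8.0, 3.0),
    ("Pollster B", "Governor", 2.0, 1.0),
]


def simple_average_error(records):
    """Average absolute miss on the margin, per pollster."""
    errors = defaultdict(list)
    for pollster, _race, polled, actual in records:
        errors[pollster].append(abs(polled - actual))
    return {pollster: mean(errs) for pollster, errs in errors.items()}


def simple_plus_minus(records):
    """Each pollster's error relative to other pollsters in the same race type.

    Negative values mean the pollster missed by less than its peers.
    """
    by_race = defaultdict(list)
    for pollster, race, polled, actual in records:
        by_race[race].append((pollster, abs(polled - actual)))

    diffs = defaultdict(list)
    for pollster, race, polled, actual in records:
        peer_errors = [err for name, err in by_race[race] if name != pollster]
        if not peer_errors:
            continue  # no other pollster covered this race type
        diffs[pollster].append(abs(polled - actual) - mean(peer_errors))
    return {pollster: mean(d) for pollster, d in diffs.items()}


avg_error = simple_average_error(records)
plus_minus = simple_plus_minus(records)
print(avg_error)
print(plus_minus)
```

The more advanced versions named in the steps would layer further adjustments on top of a comparison like this one; those adjustments are not modeled here.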

But if the end goal is to know as much as we can about the state of an election, polls don't tell us everything...

Forecasting Elections

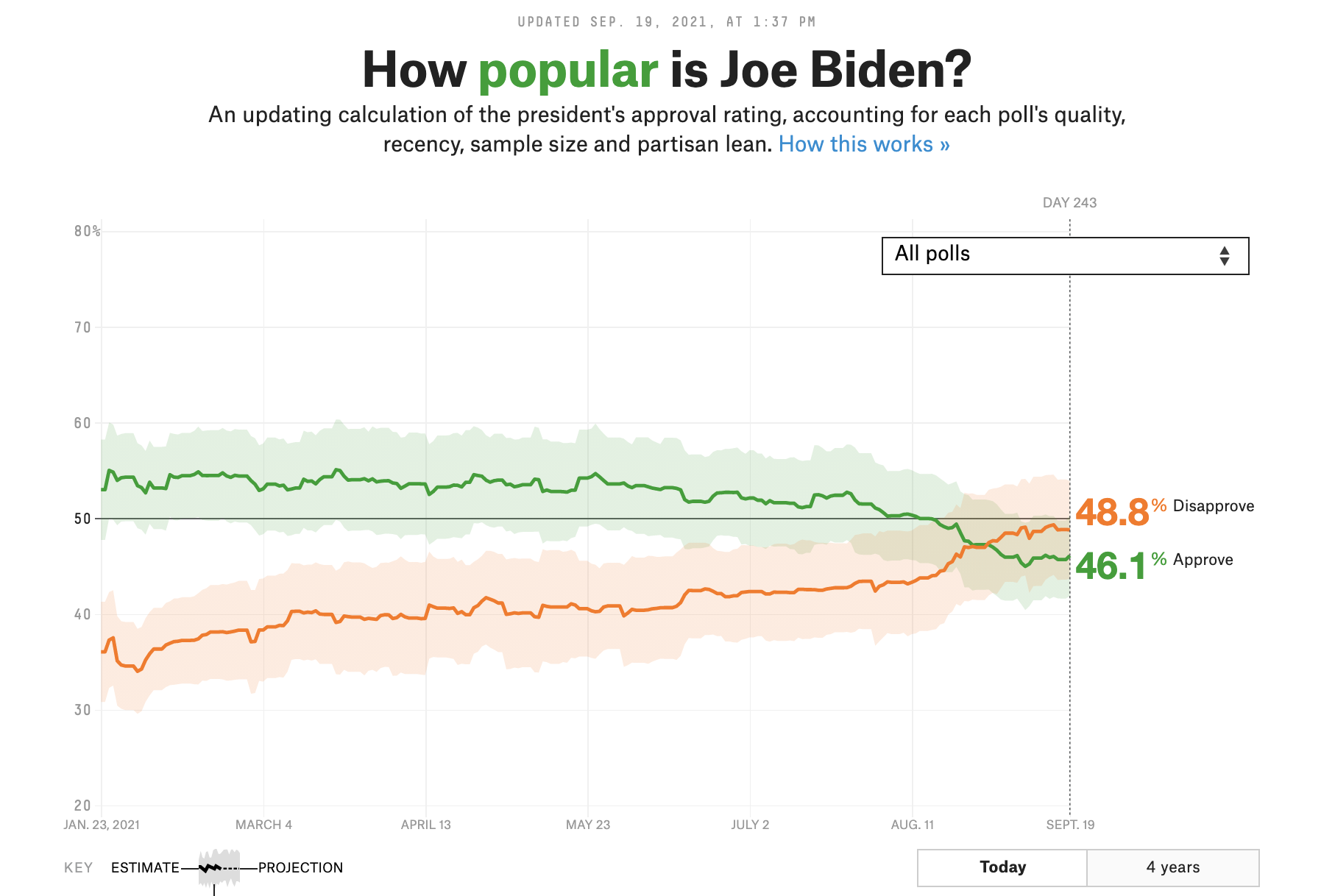

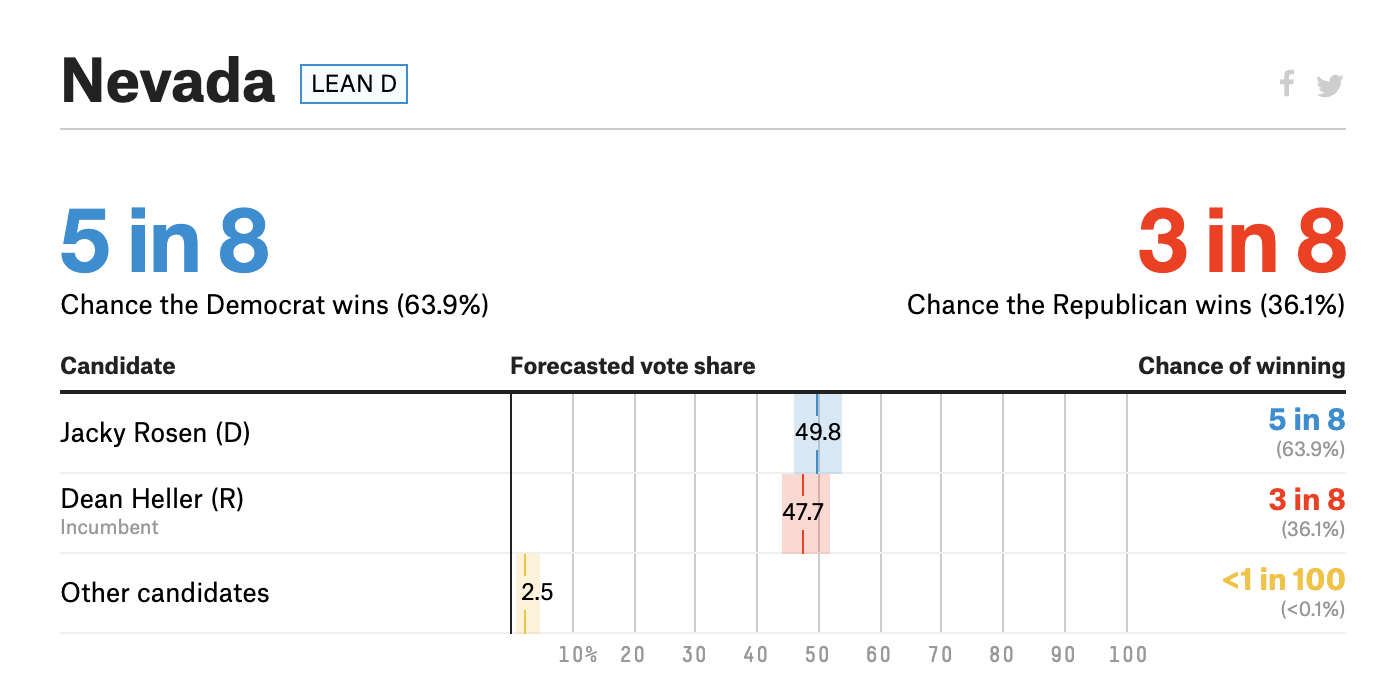

Communicating Probabilistic Data

Communicating Uncertainty

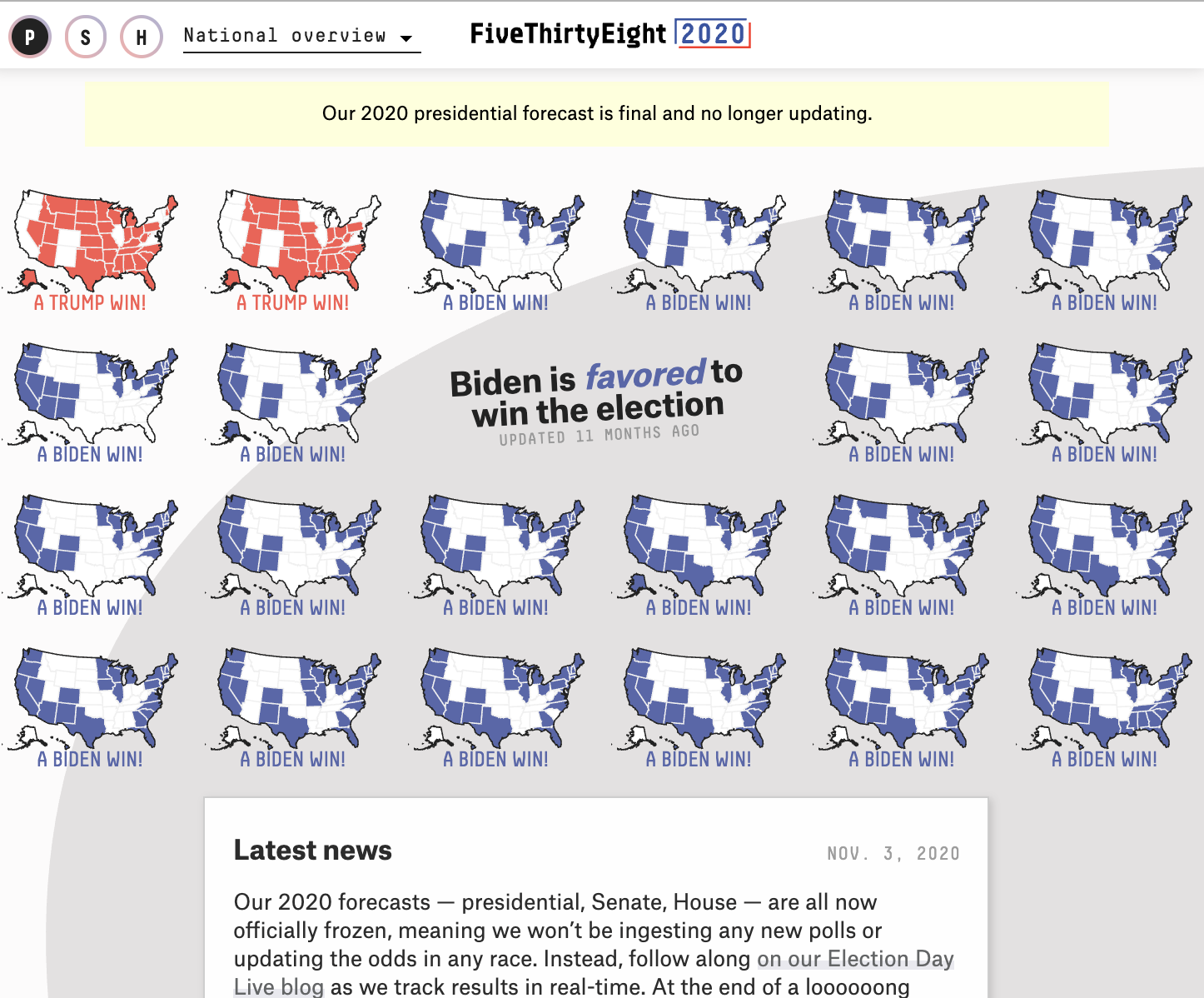

Whether we show the chances in percentages or odds, this is the portion of an election forecast that is most anticipated — and has the most potential to be misunderstood. In 2016, we aimed for simplicity, both visually and conceptually. In 2018, we leaned into the complexity of the forecast. For 2020, we wanted to land somewhere in between.
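To make the percentages-versus-odds choice concrete, here is a small sketch that renders the same probability both ways. The function names and the `max_denominator` cutoff are assumptions for illustration; the rounding rule a newsroom actually uses may differ.

```python
from fractions import Fraction


def as_percentage(probability):
    """Render a probability as a whole-number percentage, e.g. '70%'."""
    if not 0 <= probability <= 1:
        raise ValueError("probability must be between 0 and 1")
    return f"{round(probability * 100)}%"


def as_odds(probability, max_denominator=10):
    """Render a probability as 'N in M', using the closest small fraction."""
    if not 0 <= probability <= 1:
        raise ValueError("probability must be between 0 and 1")
    frac = Fraction(probability).limit_denominator(max_denominator)
    return f"{frac.numerator} in {frac.denominator}"


for p in (0.7, 0.25):
    print(as_percentage(p), "or", as_odds(p))
```

Capping the denominator trades precision for readability: "7 in 10" is easier to picture than "71%", but it also hides small differences between forecasts.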

Communicating Uncertainty

https://fivethirtyeight.com/features/how-fivethirtyeights-2020-forecasts-did-and-what-well-be-thinking-about-for-2022/

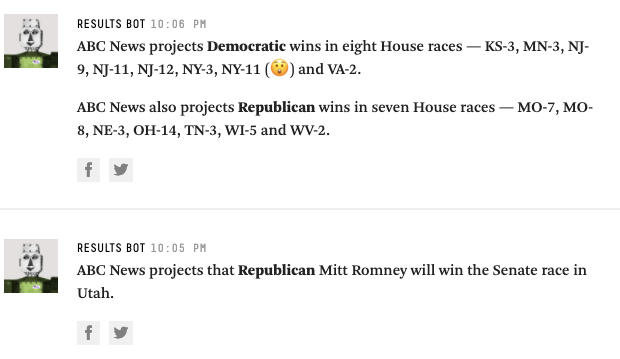

Bots

Lets readers see results that FiveThirtyEight deems unexpected

Expectations are calibrated before results ever start coming in.
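One way to picture a bot like this is a check of incoming results against ranges that were fixed in advance. The sketch below is an assumption about the general shape of such a check, not a description of FiveThirtyEight's bot: the race names, the ranges, and the `unexpected_results` function are all hypothetical.

```python
# Hypothetical expectations, fixed before any results come in:
# race -> (low, high) range for the final margin, in percentage points.
EXPECTED_RANGES = {
    "Race 1": (-3.0, 9.0),
    "Race 2": (2.0, 14.0),
}


def unexpected_results(results, expected_ranges):
    """Return the races whose reported margin falls outside its preset range."""
    flagged = []
    for race, margin in results.items():
        if race not in expected_ranges:
            continue  # no expectation was set for this race, so nothing to compare
        low, high = expected_ranges[race]
        if not low <= margin <= high:
            flagged.append(race)
    return flagged


# Illustrative results only.
results = {"Race 1": 4.5, "Race 2": -1.0}
flagged = unexpected_results(results, EXPECTED_RANGES)
print(flagged)
```

Because the ranges are set ahead of time, what counts as "unexpected" cannot drift as results arrive, which is the editorial point of calibrating beforehand.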