Alignment Traits

HMIA 2025

WORK IN PROGRESS

BD;SC*

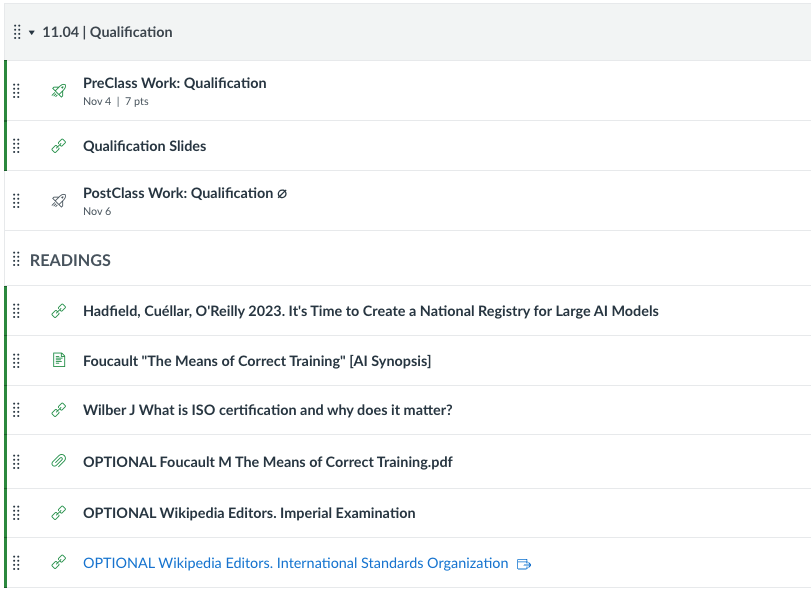

- SEP Article: virtues as "ingrained dispositions" (e.g., honesty) that enable agents to be aligned across many contexts; virtue alone is not enough, it needs phronesis (practical wisdom in moral judgment).

- Vallor Podcast: moral excellence has to evolve for a world full of technology; agents (including us) live in a world with pockets that lack support for developing virtue and practical wisdom.

- Wallach/Vallor: machines need to do more than align with human preferences; they need to be capable of something analogous to virtue, a capacity to be guided by practical wisdom and oriented toward human flourishing.


*beautiful day, skipped class

1. Stanford Encyclopedia of Philosophy – “Virtue Ethics”

Virtue ethics frames moral life around the cultivation of good character rather than the application of universal rules or the calculation of consequences. Rooted in traditions from Aristotle and Confucius to contemporary moral philosophy, it defines virtues as deeply ingrained dispositions—like honesty, courage, or compassion—that enable moral agents to act wisely across contexts. The fully virtuous person acts with harmony between reason and emotion, guided by phronesis or practical wisdom, which allows moral judgment to adapt intelligently to the complexities of real life.

2. Shannon Vallor – Podcast Interview on Virtue Ethics and Technology

Vallor extends virtue ethics into the technological age, emphasizing that moral excellence must evolve alongside the tools and environments that shape human practice. Virtues are learned through habit, mentorship, and reflection, and must now be cultivated in digital and AI-mediated spaces where empathy, care, and imagination are easily eroded. She highlights practical wisdom as especially crucial today—our capacity to reinterpret inherited moral scripts to meet unprecedented ethical challenges. Technology, she argues, doesn’t just test our virtues but also reshapes them, altering the very practices through which we learn to be good.

Virtue Ethics

3. Wallach & Vallor – “Moral Machines: From Value Alignment to Embodied Virtue”

Wallach and Vallor argue that aligning AI with human preferences or rules is not enough to ensure moral reliability. True ethical trustworthiness would require machines capable of something analogous to virtue—stable, context-sensitive moral understanding guided by practical wisdom and oriented toward human flourishing. Yet they warn that such “embodied virtue” remains far beyond current technical reach, as machines lack the holistic grasp of moral salience that humans develop through embodied experience and emotional attunement. The aspiration toward virtuous machines thus serves less as a near-term engineering target than as a regulative ideal reminding us what moral excellence, human or artificial, ultimately entails.


PRE-CLASS

READING 1

What best captures the focus of virtue ethics and its relevance for technology and AI?

2. Shannon Vallor – Podcast Interview on Virtue Ethics and Technology


A. It judges the moral worth of technologies solely by their social consequences.

B. It applies universal moral rules to ensure machines behave rationally.

C. It centers on cultivating moral skills and character traits such as courage, compassion, and practical wisdom that humans must practice and adapt in technologically changing contexts.

D. It seeks to replace traditional moral reasoning with automated decision systems that prevent vice.


PRE-CLASS

READING 2

1. Stanford Encyclopedia of Philosophy – “Virtue Ethics”


According to the passage, what chiefly distinguishes virtue ethics from deontology and consequentialism?

A. Virtue ethics ignores rules and consequences entirely.

B. Virtue ethics treats virtues and vices as the foundation on which other moral notions are based.

C. Virtue ethics defines virtues in terms of producing good consequences.

D. Virtue ethics requires acting only from a sense of duty or obligation.

In Aristotle’s framework, phronesis or practical wisdom serves primarily to:

A. Provide motivation through emotional inclination rather than rational choice.

B. Ensure that good intentions are matched by sound understanding of how to act rightly in concrete situations.

C. Eliminate the need for moral education or experience.

D. Distinguish deontological from consequentialist reasoning.


Wallach + Vallor 2020 Moral Machines: From Value Alignment to Embodied Virtue

What key argument do Wallach and Vallor make in “Moral Machines: From Value Alignment to Embodied Virtue”?

A. Aligning AI systems with human preferences is sufficient to guarantee moral safety.

B. True moral reliability in machines requires cultivating something akin to virtue or moral character, not merely value alignment.

C. Virtue ethics is irrelevant to discussions of AI because machines lack emotions.

D. AI systems should follow fixed moral rules rather than adapting to new moral contexts.


Debate

Machine intelligence is not going to have phronesis.

Odd birthday: FOR

Even birthday: AGAINST

Designer Friends, Experts, and Robots


CLASS


Consider these "virtues" or "alignment traits"

Is anything missing?


TASK (Birthday 1-15): Design a roommate (housemate).

TASK (Birthday 16-31): Design a robot companion/assistant.

CONSTRAINT: You can only choose 6 traits. Which ones are they?


For (at least) one of the missing traits:

1) What are the anticipated failure modes (what can go wrong)?

2) For each failure mode, what other mechanisms might we engage to address it?


Example

Missing Trait: Veracity

Failure Modes: Without it, misunderstandings multiply. My roommate denies saying things they did say and misreports events.

Alternative Mechanisms: written agreements, shared calendars, message logs, camera and recording devices.

Example

Missing Trait: Epistemic humility

Failure Modes: Robot assumes it always knows best. Dangerous overconfidence. Ignores information and advice from humans.

Alternative Mechanisms: confidence thresholds, "ask-for-help" triggers.
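The two mechanisms named for the robot case, confidence thresholds and "ask-for-help" triggers, can be sketched as a simple deferral rule. This is a minimal Python illustration under stated assumptions, not a method from the readings: it assumes the robot's planner already produces a candidate action with a confidence score between 0 and 1, and the names `Proposal`, `decide`, `human_advice`, and the 0.8 threshold are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold for illustration; a deployed system would need to tune
# and calibrate this value rather than hard-code it.
ASK_THRESHOLD = 0.8


@dataclass
class Proposal:
    """A candidate action together with the system's own confidence in it (0 to 1)."""
    action: str
    confidence: float


def decide(proposal: Proposal, human_advice: Optional[str] = None) -> str:
    """Pick what to do, deferring to humans instead of assuming it knows best."""
    # Human input wins: the robot must not ignore information or advice it is given.
    if human_advice is not None:
        return human_advice
    if not 0.0 <= proposal.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    # Confidence threshold: act autonomously only when sufficiently sure.
    if proposal.confidence >= ASK_THRESHOLD:
        return proposal.action
    # "Ask-for-help" trigger: below the threshold, escalate to a human.
    return f"ASK_FOR_HELP: unsure about '{proposal.action}'"


if __name__ == "__main__":
    print(decide(Proposal("water the plants", 0.95)))  # water the plants
    print(decide(Proposal("give medication", 0.6)))    # ASK_FOR_HELP: unsure about 'give medication'
    print(decide(Proposal("give medication", 0.95), human_advice="wait for the nurse"))  # wait for the nurse
```

A threshold only helps if the confidence score is reasonably calibrated: an overconfident system will report high scores and never trigger the ask, which is exactly the failure mode above. That is why, in this sketch, human advice is checked first and overrides the system's own estimate.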


Resources

OPTIONAL SEE ALSO

- E. Yudkowsky (2006), "Twelve Virtues of Rationality," on LessWrong.

- Alignment Forum WikiTags "Virtues."

- Curiosity: the burning desire to pursue truth; Relinquishment: not being attached to mistaken beliefs; Lightness: updating your beliefs with ease; Evenness: not privileging particular hypotheses in the pursuit of truth; Argument: the will to let one's beliefs be challenged; Empiricism: grounding oneself in observation and prediction; Simplicity: eliminating unnecessary detail in modeling the world; Humility: recognizing one's fallibility; Perfectionism: seeking perfection even if it is not attainable; Precision: seeking narrower statements without overcorrecting; Scholarship: studying multiple domains and perspectives; The nameless virtue: seeking truth rather than the virtues for their own sake.

- Hou, B. L., and B. P. Green (2023), "Foundational Moral Values for AI Alignment."

- Presents five core, foundational values, drawn from moral philosophy and built on the requisites for human existence: survival, sustainable intergenerational existence, society, education, and truth. The authors argue that these values not only provide a clearer direction for technical alignment work but also serve as a framework for highlighting the threats and opportunities AI systems present for both obtaining and sustaining these values.