The Four Alignment Problems

HMIA 2025

"Readings"

Video: x [3m21s]

Activity: TBD

PRE-CLASS

CLASS

CHAI Internship

https://humancompatible.ai/jobs#chai-internship

Outline

- GitHub questions - work in progress

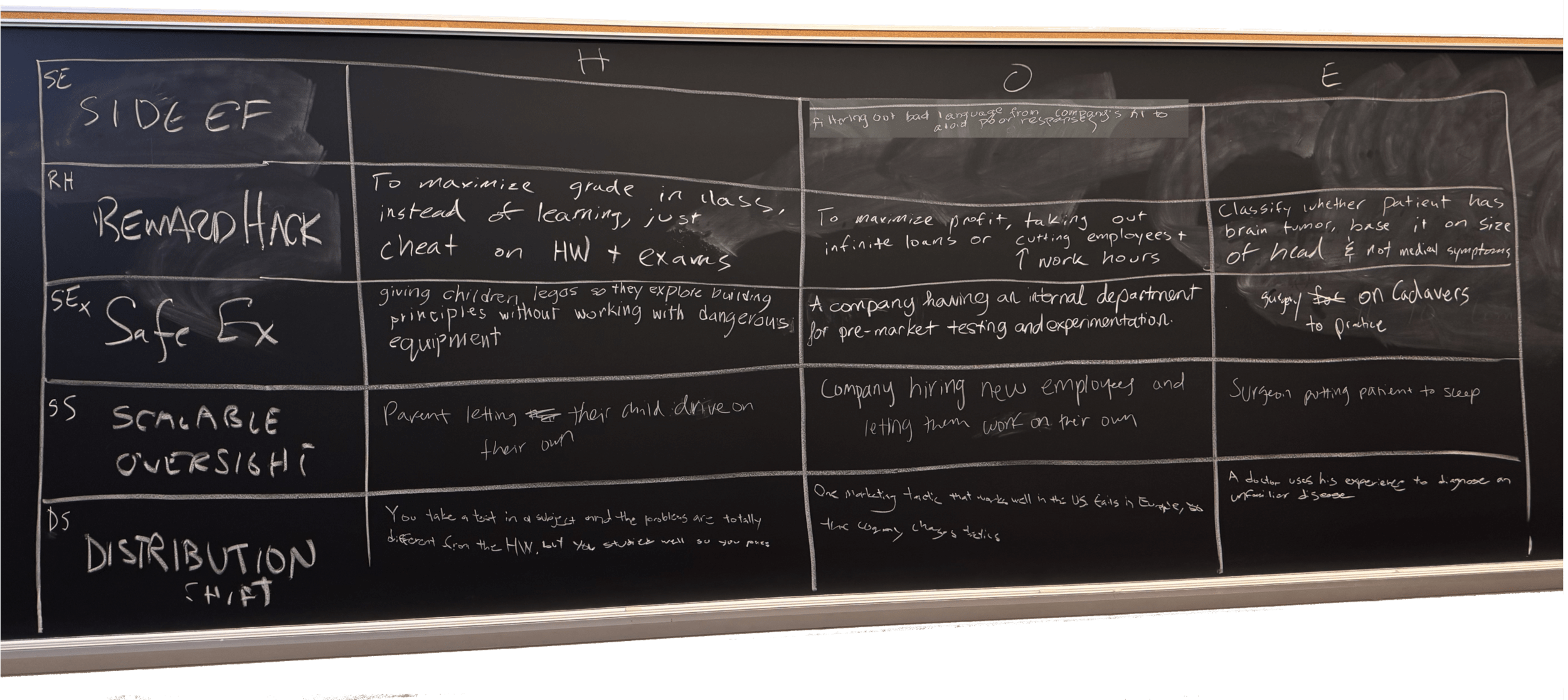

- Review board from last time

- Baseball Game

- Alignment definitions beg the question of human alignment

- The readings

- Our premise

- Concrete Problems of Human Intelligence Alignment

- Concrete Problems of Expert Intelligence Alignment

- Concrete Problems of Organizational Intelligence Alignment

- Next time: Analogical Thinking and Solutions

from last time

Right. This is distribution shift because the environment you are trying to navigate (the test) draws questions from a distribution that is different from the distribution that the homework problems were sampled from.

The student is optimizing the objective function they were given: get as good a grade as you can. But grades CAN be a bad proxy for learning (the true objective) if the system can be gamed.

This is a tricky one - not clear who the agent is. Seems like you are saying that a company allows pursuit of short term profit to cause it to do things that harm long term sustainability and that undermine it as a "good" company.

This DOES sound like an example of a bad "proxy" measure, but it is not quite clear what the reward is. Perhaps it's just taking an easy (and ineffective) course of action?

All three are great examples. We want kids to learn, we want the marketing department to experiment, we want medical students to learn anatomy. In all three examples we have ways to do that which make mistakes and failure less costly.

Requires a little context. A parent letting a child drive unsupervised is a scalable oversight problem to the degree that "you simply can't be with them all the time" and "there is no test you can give that's going to make you feel comfortable about that."

Requires a little context. We allow the unsupervised new employee to work because it is too expensive to fully supervise them. That is, we can watch them a bit, but that process does not scale.

This sounds like an example where surveillance by the patient does NOT scale as oversight. Presumably, we are relying on something else if we allow the surgeon to put us to sleep while she works on us.

A marketing tactic that was tested in the US fails in Europe. Perfect - the distribution of consumer reactions in Europe is different from the distribution of consumer reactions in the US that we "trained" on. An example of NON-robustness until we come up with new tactics in response to the new environment.

The doctor who can build on knowledge of diseases to diagnose one she has never seen is demonstrating robustness to distribution shift.

Sounds like we are saying this is an example of avoiding side effects. We have language on our website that does what we need it to do (communicate our message) but it might also offend someone so we find a different phrasing.

What is AI alignment?

Working definitions so far:

AI systems do what we want them to do

or

AI systems behave in a way that is consistent with human values

or

AI systems behave in ways that are humanly beneficial.

Commonality: humans provide the point of view for all these definitions - the alignment in each case is WITH THE HUMANS.

BUT... this begs the question: are the humans aligned?

To Beg the Question: to assume the truth of the proposition to be proved, without arguing for it

HD and A&C entertain us with the breakdown of shared reality.

Catch-22 and The Wire remind us that organizations, while powerful, can be massively pathological in their normal modes of operation.

The parenting and "failure" pieces remind us of the balancing act necessary to socialize young humans, grow as individuals, and work in organizations.

Goethe and Disney remind us that magic can very easily get out of control.

You worry about the machines, I worry about the humans.

Our premises:

Humanity is roughly 8 billion agents with human-level intelligence living side by side.

Organizations are supra-individual-level intelligences that harness the power and attenuate the weaknesses of multiple human intelligences.

Experts (magicians, translators, scientists, priests, doctors, engineers) are superintelligences that societies rely on to answer questions that are beyond the scope of ordinary people.

Throughout human history, we have invented widely varying mechanisms for managing the intelligence alignment problems that these situations generate.

We can think (and talk) smarter about machine alignment if we have learned lessons from how humans have grappled with the challenge of aligning other forms of intelligence.

Ryan: Human and Machine Intelligence Alignment Fall 2025

Dan Ryan on Social Theory and Alignment [6m]

This is not our first rodeo

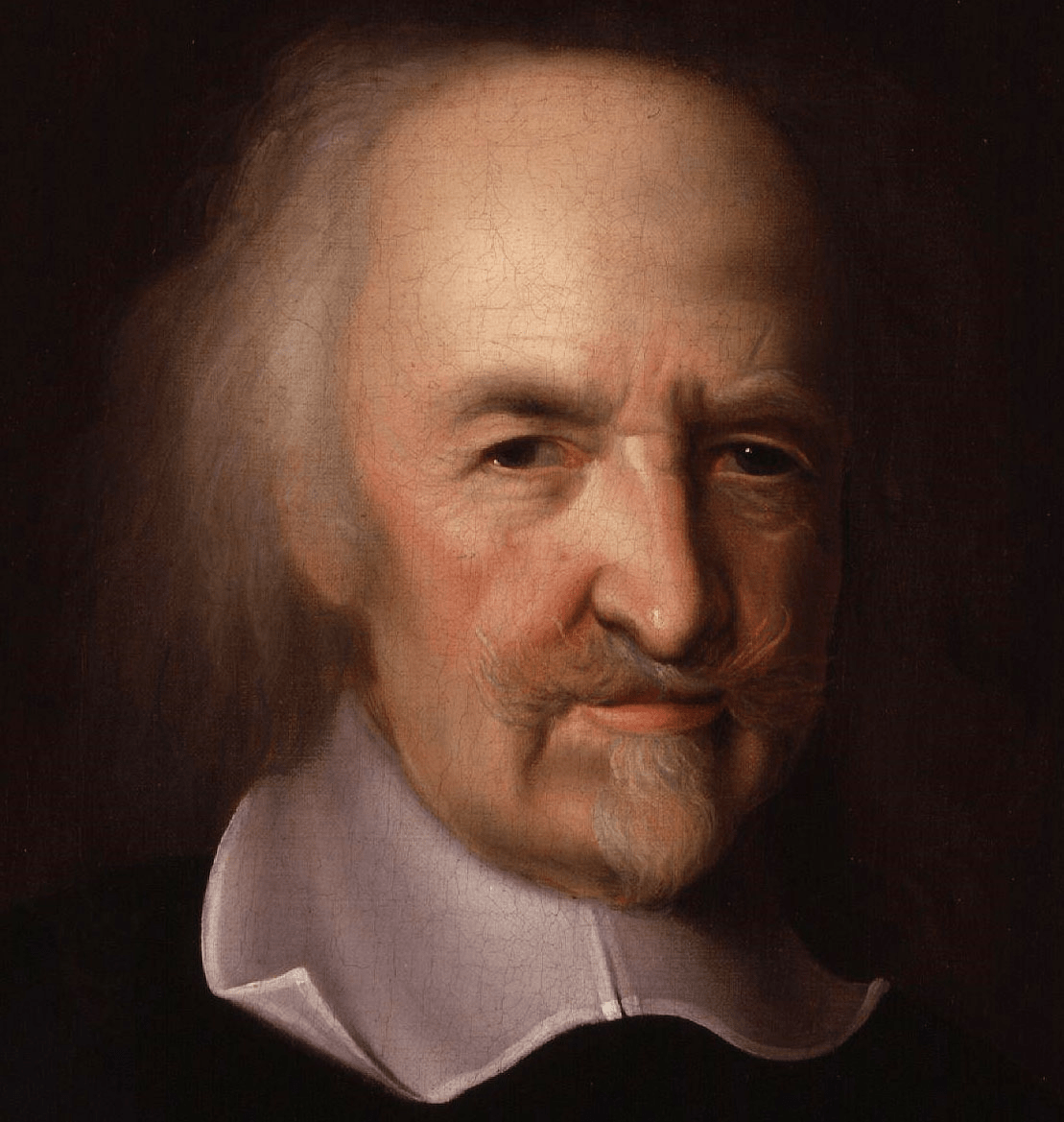

Thomas Hobbes (1588 – 1679) English philosopher

1651 Leviathan

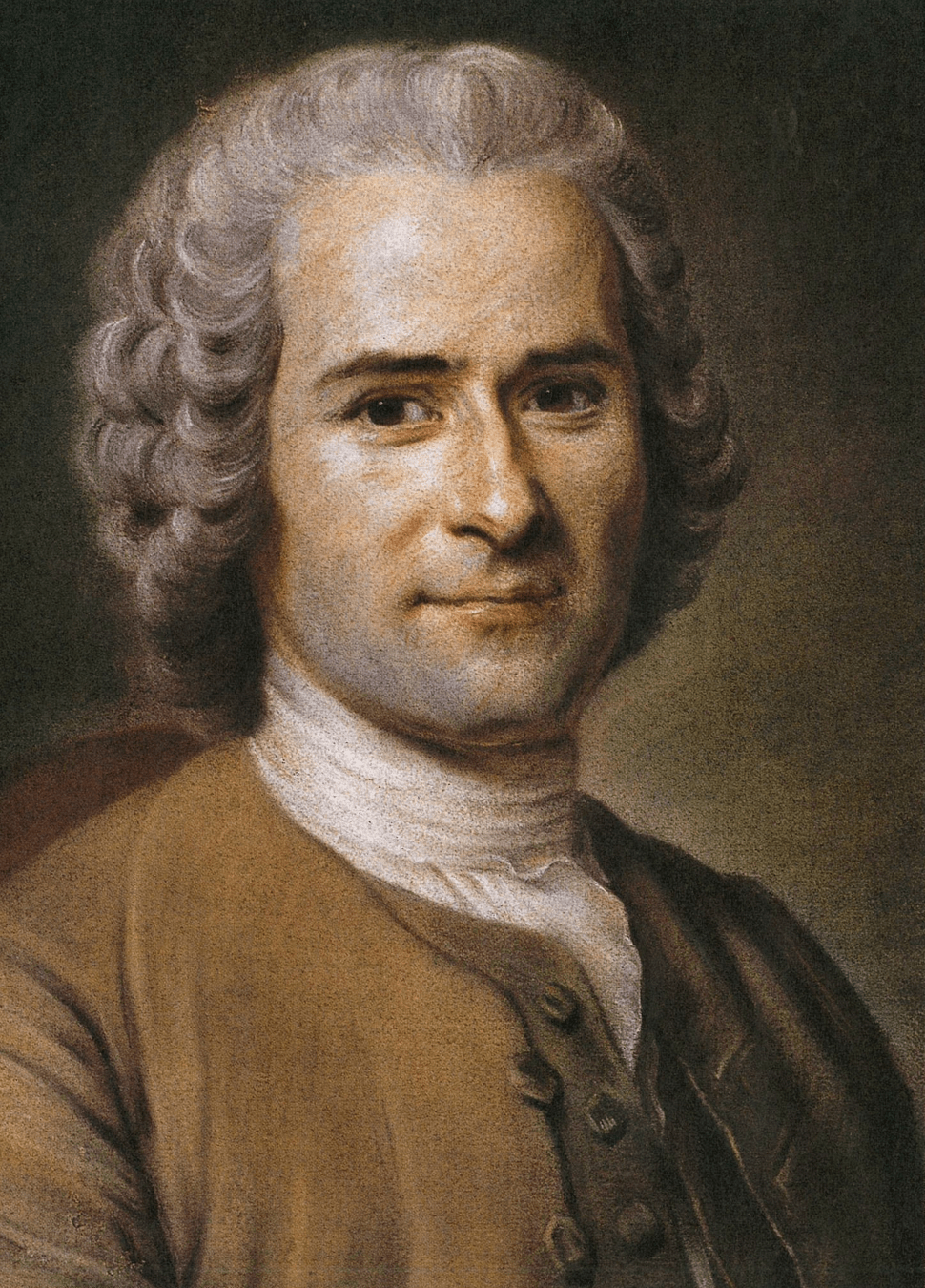

Jean-Jacques Rousseau (1712 – 1778) French(sic) philosopher

1762 The Social Contract

Two Myths of the Origins of Human Intelligence Alignment

[Without political order], there is no place for industry; because the fruit thereof is uncertain: and consequently no culture of the earth; no navigation, nor use of the commodities that may be imported by sea; no commodious building; no instruments of moving, and removing, such things as require much force; no knowledge of the face of the earth; no account of time; no arts; no letters; no society; and which is worst of all, continual fear, and danger of violent death; and the life of man, solitary, poor, nasty, brutish, and short.

"Each of us puts his person and all his power in common under the supreme direction of the general will, and, in our corporate capacity, we receive each member as an indivisible part of the whole."

Neither Myth Addresses the "PreContractual" Prerequisites for Alignment

- Recognition of other agents - "theory of mind"

- Communication and shared symbols/meaning

- Trust, prediction of others' behavior

- Norms, enforcement

- Shared Intentionality

STOP+THINK: How do humans grapple with these human intelligence alignment challenges?

Recognition of other agents - "theory of mind" (treat others as agents not objects)

Communication and shared symbols/meaning

Trust, prediction of others' behavior

Norms, enforcement

Shared Intentionality

Socialization: we are taught the "common sense" of living in a social world

Play: humans spend about 20 years in a sandbox

Religion, etc.: sacred texts are almost all about alignment practices

Confucian Analects (2.4)

The Master said: "At fifteen, I set my heart on learning; at thirty, I took my stand; at forty, I was free from doubt; at fifty, I understood Heaven’s will; at sixty, my ear was attuned; and at seventy, I could follow my heart’s desire without overstepping the bounds."

Goal Misgeneralization: Confucian Analects: moral alignment takes time and staged learning; don’t assume early success equals lifelong understanding.

The Ten Commandments (Exodus 20:1–17)

"Honor your father and mother. Do not murder. Do not commit adultery. Do not steal. Do not bear false witness. Do not covet your neighbor’s house or spouse."

Avoid Side Effects: Ten Commandments: a simple, conservative list of “do nots” designed to avoid harm, like a safeguard against AI causing side effects.

The Sermon on the Mount (Matthew 5:21–22, 27–28)

"You have heard that it was said, ‘You shall not murder,’ but I tell you, anyone who is angry with a brother or sister will be subject to judgment… You have heard that it was said, ‘You shall not commit adultery,’ but I tell you that anyone who looks at a woman lustfully has already committed adultery with her in his heart."

Reward Hacking: Sermon on the Mount: warns against following rules outwardly while harboring bad intentions; it's the spirit, not just the letter, that matters.

Bhagavad Gita (2:47)

"You have the right to perform your duties, but not to the fruits of your actions. Never consider yourself the cause of the results, and never be attached to inaction."

Specification Gaming: Bhagavad Gita: warns against overattachment to outcomes; sometimes goals need to be pursued with humility and context, not rigidly. Also corrigibility: be open to direction/feedback.

The Diamond Sutra (Chapter 32)

"So you should view this fleeting world:

A star at dawn, a bubble in a stream,

A flash of lightning in a summer cloud,

A flickering lamp, a phantom, and a dream."

Deceptive Alignment: Diamond Sutra: teaches suspicion toward appearances and fixed identities. Don’t trust the mask; see through illusion.

Hillel in the Talmud (Shabbat 31a)

A prospective convert asked Hillel to summarize the Torah while standing on one foot. Hillel replied: "What is hateful to you, do not do to your neighbor. That is the whole Torah; the rest is commentary. Now go and learn."

Reward Ambiguity: Hillel’s Rule: distills complex guidance into a simple principle ("what is hateful to you..."), like a legible value function.

The Instructions of Ptahhotep (Egypt, ca. 2400 BCE)

"If you are a man who leads, listen calmly to the speech of one who pleads, and do not stop him from purging his heart. A petitioner likes attention to his words better than the fulfilling of what he asks."

Model Humility, Multiagent, CIRL: Instructions of Ptahhotep: an early example of professional alignment. Ptahhotep trains Egyptian elites to exercise power with restraint, compassion, and listening. Alignment here is ethical humility in leadership.

The Qur’an (Surah Al-Ma’un, 107:1–3)

"Have you seen the one who denies the Judgment? That is the one who repulses the orphan and does not encourage the feeding of the poor."

Alignment is empirical: Qur’an: belief, the text argues, is not proven by words or rituals but by how one treats the most vulnerable. True alignment with divine judgment manifests in daily social behavior.

Experts

- Epistemic asymmetry and mystification: Others cannot understand, allowing experts to mystify and bamboozle.

- Unauthorized practice: In the wrong "hands," the same expert knowledge can be used to harm, deceive or destabilize.

- Fraud: Non-experts may imitate the performance of expertise while lacking true competence.

- Sacred/Profane boundaries: Some knowledge is seen as too dangerous or valuable for general circulation.

- Harm and Malicious Use: Expert knowledge can be used with ill intent, either for personal gain, social manipulation, or direct harm.

The Problem: Epistemic asymmetry exists when one party possesses knowledge that others cannot verify or understand but may need. This creates the opportunity for mystification—where the expert obscures rather than clarifies or teaches—and opens the door to manipulation or undue authority.

Example (Ancient): In Mesopotamia, court diviners interpreted omens from sheep livers to advise kings. The readings were recorded in standardized omen handbooks, yet only state-trained experts could interpret them. Their counsel might sway decisions on war or governance, yet their expertise was largely opaque and insulated from lay evaluation, criticism, or verification.

Example (Modern): Financial derivatives engineers or AI researchers may design systems no policymaker or public body fully understands. Non-experts must rely on the designers' assurances of safety or robustness with no means to scrutinize or confirm such claims. Scientific peer review or external audits can balance expertise asymmetry (though they also just push the question down the road). When experts follow legitimated methods, the epistemological status of their statements is bolstered.

The Problem: The same expert knowledge that can heal, protect, or forecast can also harm, deceive, or destabilize. Societies develop licensing regimes or sacred boundaries to control who may wield this knowledge and how, on the belief that meta-rules about who can practice can control how expertise is used.

Example (Ancient): In the Roman Republic, the Lex Cornelia de Sicariis et Veneficis criminalized unlicensed use of love spells, potions, or curses. Magic wasn't banned outright, but was perceived to be a threat to social order if practiced outside approved temples.

Example (Modern): Only licensed physicians may prescribe pharmaceuticals. Unauthorized individuals (e.g., online “biohackers” or fake cancer healers or drug abusers) are criminalized or banned because of the potential for harm despite using similar underlying tools or knowledge.

Here, alignment is use for the intended purpose and we trust that role specification can help to ensure this.

The Problem: Non-experts may imitate the performance of expertise (through jargon, ritual, or charisma) while lacking true competence. Bad outcomes can undermine public trust. Competition from imitators can undermine experts' earning potential.

Example (Ancient): Biblical Israel imposed death on false prophets whose predictions did not come to pass. A prediction’s success was the test of its validity—an early form of empirical falsifiability.

Example (Modern): “Wellness influencers” who peddle pseudoscience about vaccines or nutrition present as experts, but operate without training or peer accountability, often reaching larger audiences than genuine experts.

Here alignment means expertise is detectable and verifiable. Expert knowledge may be inaccessible, but there are generally accepted techniques for assessing expert competence.

The Problem: In many societies some knowledge is seen as too dangerous, special, or valuable for general circulation. General knowledge about what goes on behind the scenes might undermine system legitimacy. Secular power that contributes to social stability might depend on sacred secrets. Confining special knowledge in physical and social space can contribute to order.

Example (Ancient): The Egyptian heka was divine magic practiced by priestly castes. Laypersons could practice harmless folk magic, but temple rituals and “dangerous” books were tightly guarded. In one famous case, conspirators were executed for accessing “destructive magic” from the royal library.

Example (Modern): Modern analogs include export-controlled cryptographic algorithms, classified national security research, or restricted knowledge of synthetic biology protocols. The control mechanisms reflect similar logic: dangerous knowledge should not be democratized.

Here alignment means that knowledge which cannot or should not be openly shared is nonetheless safely and beneficently held by those entrusted with it, serving the community without destabilizing it. The community gets the benefit of there being secret or sacred knowledge, but that benefit depends on alignment and control of the knowers.

The Problem: Expert knowledge can be used with ill intent, either for personal gain, social manipulation, or direct harm. Institutions must anticipate and defend against such use without eliminating the benefits of expertise.

Example (Ancient): The Assyrian state distinguished between sanctioned priests who used incantations to ward off evil, and rogue sorcerers who cast secret curses. The same techniques were treated as legitimate or criminal depending on the actor’s alignment with state goals.

Example (Modern): AI researchers or social media algorithm designers may create tools that optimize engagement, even while knowingly producing addiction or disinformation. The knowledge is real and the tools powerful—but alignment with social welfare may be lacking.

Alignment here means either an irrational commitment to use the knowledge in the public interest or a means of ensuring accountability through peers or codes of conduct or public tribunals.

STOP+THINK: What Alignment Problems Does Engineering Ethics Address?

Public Safety and Welfare: Engineers must hold paramount the safety, health, and welfare of the public in all their endeavors.

- Alignment problem: Negative side effects / “don’t optimize the wrong metric.”

- Rationale: Safety is elevated above other optimizations precisely because expert outputs are often inscrutable to laypeople. Without this clause, the expert could be rewarded for technical cleverness or efficiency while imposing hidden costs on human health or the environment.

- Mechanism: Side-effect avoidance; safety overrides serve as explicit guardrails against misaligned optimization.

Competence: Perform services only in areas where engineers have proven expertise and competence.

- Alignment problem: Qualification + robustness to distribution shift.

- Rationale: Competence is a gatekeeping mechanism: only those with the right background may act. But it also functions dynamically: the obligation to abstain when a task moves outside one’s domain is exactly an alignment response to distributional shift: not presuming competence in a new domain.

- Mechanism: Upstream filtering (qualification) and abstention under distributional shift (robustness).

Truthfulness and Objectivity: Issue public statements in a truthful and objective manner.

- Alignment problem: Epistemic corruption (intrusion of interest into knowledge).

- Rationale: The expert is a vehicle for the epistemic corpus (engineering knowledge, scientific standards). When their persuasion reflects their own interest (career, client loyalty, expediency), knowledge is corrupted. Truthfulness/objectivity isolates this “vehicle problem”: the expert’s speech must track the abstract body of knowledge, not private interest.

- Mechanism: Epistemic community alignment: practices that tether persuasion to truth (peer review, transparency, replicability).

Professional Development: Continue to learn and develop professionally throughout their careers.

- Alignment problem: Drift and distribution shift.

- Rationale: Knowledge and technology evolve; an expert who remains static drifts out of alignment. Continuous development ensures robustness under changing conditions.

Integrity and Honesty: Be honest, trustworthy, and avoid any form of corruption, bribery, or deceptive practices.

- Alignment problem: Secrecy / withholding / deception in interaction.

- Rationale: This isn’t about the truth-value of technical claims, but about communicative reliability in the professional role: reporting results, disclosing errors, not hiding information. It’s about forthrightness in the interaction channel.

- Mechanism: Transparency & disclosure obligations.

Faithful Agency: Act as faithful agents or trustees for employers and clients.

- Alignment problem: Principal–agent misalignment.

- Rationale: When engaged by a client or employer, the professional undertakes their legitimate interests. Misalignment arises when the agent pursues private goals or substitutes their own judgment for what the client has a right to decide.

- Mechanism: Incentive alignment to the principal’s interests, bounded by public safety and epistemic integrity.

Concrete Problems of Organizational Intelligence

- Delegation and Oversight: Efficiency requires delegation. Delegation requires relinquishing control/oversight.

- Shared Understanding: Not just words, also reflective knowledge of the organization (who does what around here?).

- Information Problems: Members are distributed in space and time, information sits in silos, and people do not know what one another knows. Opacity.

- Principals and agents: How to ensure an agent's own interests are subordinated to organizational interests.

- Goal alignment: How to coordinate the goal behavior of different parts of an organization.

- Malicious Use: How does society prevent misuse of an organization as a tool?

- Mission Drift: Organizations can forget why they were created.

- Capture / Corruption: Conflicts of interest can arise both for individuals in the organization and for the organization in society.

- Adaptivity / Robustness: Organizations as conservative and innovation-averse.

- Internal Free-Riding: In joint production, contributions are hard to monitor and measure. It's rational not to contribute.

STOP+THINK: What rules or practices address these challenges?

- Delegation & Oversight → Supervisory hierarchies, audits

- Shared Understanding → Org charts, standard operating procedures

- Common Knowledge → All-hands meetings, intranets, memos

- Principal–Agent → Performance contracts, incentive pay

- Goal Alignment → Strategic plans, balanced scorecards, OKRs

- Malicious Use → External regulation, compliance reviews

- Mission Drift → Periodic mission/values reviews, board oversight

- Capture / Corruption → Conflict-of-interest rules, independent watchdogs

- Transparency / Info Asymmetry → Whistleblower channels, disclosure requirements

- Adaptivity / Robustness → Pilot projects, scenario planning

- Internal Free-Riding → Team-based incentives, peer evaluation

Re-read Amodei et al. with focus on the solutions.

Recommended: use ChatGPT to generate analogies for different solutions in Organizational, Expert, and "Human" Realms.

F1: Fibonacci sequence

F2 up is easy, but how about down?

F3 fib(8)? fib(7)+fib(6) etc.

F4 tree and repetition. Look at how many steps for F(5)!

F5 could save time with scratch pad

F6 how to communicate this?

F7 Heads down with our own ideas

F8 Pitch our idea to a catcher (classmate)

F9 Catcher gives iteration-forward feedback

F10 Back to the drawing board, make use of feedback
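The Fibonacci steps F3 to F5 above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `fib_naive` and `fib_memo`, the call counter, and the choice of fib(0) = 0 and fib(1) = 1 are not from the class materials, and the "scratch pad" of F5 is modeled here as a plain dictionary.

```python
def fib_naive(n, counter):
    """Top-down recursion (F3): fib(n) = fib(n-1) + fib(n-2).

    `counter` is a one-element list used to count calls (hypothetical helper).
    """
    counter[0] += 1
    if n < 2:  # assumed base cases: fib(0) = 0, fib(1) = 1
        return n
    return fib_naive(n - 1, counter) + fib_naive(n - 2, counter)


def fib_memo(n, counter, scratch=None):
    """Same recursion, but with a scratch pad (F5) so each value is computed once."""
    if scratch is None:
        scratch = {}
    if n in scratch:  # already written on the scratch pad: reuse it
        return scratch[n]
    counter[0] += 1  # count only the values we actually had to compute
    if n < 2:
        result = n
    else:
        result = fib_memo(n - 1, counter, scratch) + fib_memo(n - 2, counter, scratch)
    scratch[n] = result
    return result


naive_calls = [0]
memo_calls = [0]
print(fib_naive(5, naive_calls), naive_calls[0])  # 5, reached with 15 calls (the repetition in F4)
print(fib_memo(5, memo_calls), memo_calls[0])     # 5, reached with 6 computed values
```

The naive version re-derives the same subproblems many times, which is the tree-and-repetition picture in F4; the scratch-pad version trades a little memory for far fewer steps.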

Next Time: Thinking Analogically
