Managing Kubernetes Webhook Failures

About me

- AWS Sr. Cloud Support Engineer (Container and DevOps)

- All five AWS certifications (2017)

- Working with Kubernetes since 2017 (EKS)

- Author of *Mastering Elastic Kubernetes Service on AWS*

- Certifications: CKA, CKS, CKAD (CNCF); Solutions Architect Professional, DevOps Engineer Professional (AWS)

✍️ Created EasonTechTalk.com

🏃 Marathon runner

🤿 PADI AOW diver

https://easoncao.com/about/

Outline

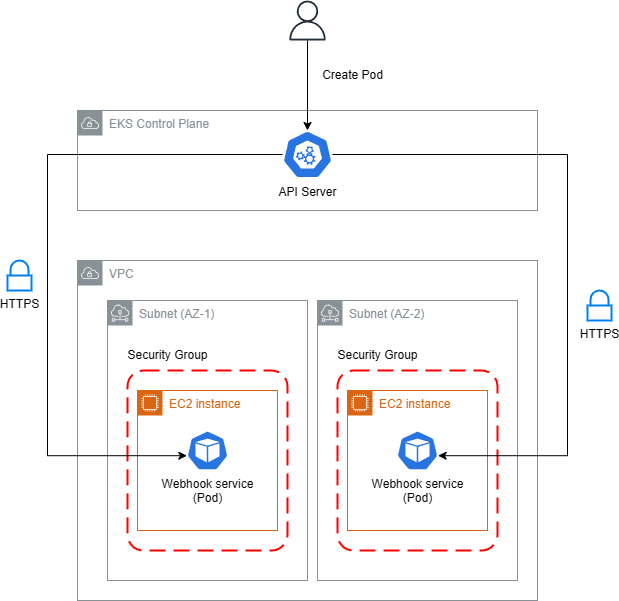

- Overview of Kubernetes admission webhooks

- Understanding Admission Webhooks

- Common Failure Patterns

- Detection and Monitoring

- Best Practices and Solutions

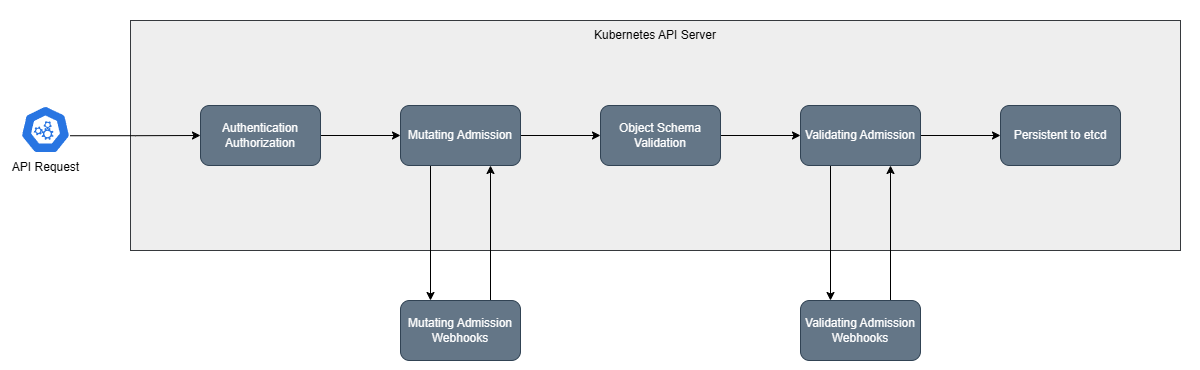

What are Admission Webhooks?

Common use cases

- Image security scanning

- Namespace management

- Sidecar injection

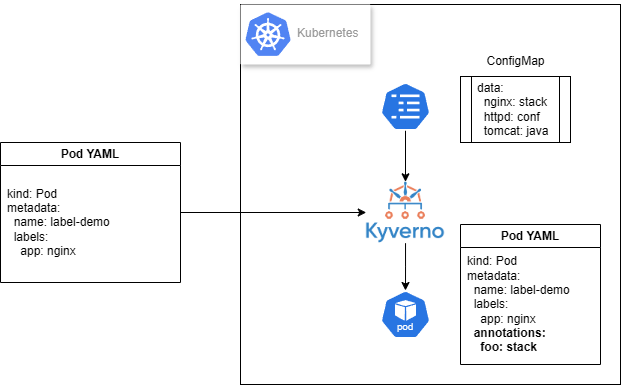

Understanding Admission Webhooks

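Validating Webhook

- Checks whether a resource request complies with specific rules. It can accept or reject the request, but cannot modify it.

Mutating Webhook

- Besides validating requests, it can modify them, for example by setting default values or injecting additional configuration.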
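Both kinds are registered as configuration objects that point the API server at an HTTPS endpoint. The following is a minimal sketch of a validating webhook registration; every name in it (`example-validator`, `validate.pods.example.com`, `example-webhook-service` in `webhook-system`, the `/validate` path) is hypothetical. A mutating webhook uses `kind: MutatingWebhookConfiguration` with essentially the same fields, and during admission the API server runs mutating webhooks before validating ones.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  name: example-validator                 # hypothetical name
webhooks:
  - name: validate.pods.example.com       # hypothetical; must be a fully qualified name
    clientConfig:
      service:
        name: example-webhook-service     # hypothetical Service in front of the webhook Pods
        namespace: webhook-system
        path: /validate
        port: 443
      # caBundle: <base64-encoded CA that signed the webhook's serving certificate>
    rules:
      - apiGroups: [""]                   # "" is the core API group
        apiVersions: ["v1"]
        operations: ["CREATE"]
        resources: ["pods"]
    admissionReviewVersions: ["v1"]
    sideEffects: None                     # required in admissionregistration.k8s.io/v1
    timeoutSeconds: 10                    # v1 default; allowed range is 1 to 30
    failurePolicy: Fail                   # reject the request if the webhook cannot be called
```

With `failurePolicy: Fail`, every Pod creation now depends on this endpoint being reachable, which is the root of the failure patterns that follow.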

```
$ kubectl describe MutatingWebhookConfiguration/aws-load-balancer-webhook
Name:         aws-load-balancer-webhook
API Version:  admissionregistration.k8s.io/v1
Kind:         MutatingWebhookConfiguration
Webhooks:
  Admission Review Versions:
    v1beta1
  Client Config:
    Service:
      Name:        aws-load-balancer-webhook-service
      Namespace:   kube-system
      Path:        /mutate-v1-pod
      Port:        443
  Failure Policy:  Fail
  Name:            mpod.elbv2.k8s.aws
  Namespace Selector:
    Match Expressions:
      Key:       elbv2.k8s.aws/pod-readiness-gate-inject
      Operator:  In
      Values:
        enabled
  Object Selector:
    Match Expressions:
      Key:       app.kubernetes.io/name
      Operator:  NotIn
      Values:
        aws-load-balancer-controller
  Rules:
    API Versions:
      v1
    Operations:
      CREATE
    Resources:
      pods
...
```

Common Failure Patterns

Network connectivity issues (Timeout)

```
error when patching "istio-gateway.yaml": Internal error occurred: failed calling webhook "validate.kyverno.svc-fail": failed to call webhook: Post "https://kyverno-svc.default.svc:443/validate/fail?timeout=10s": context deadline exceeded
```
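`context deadline exceeded` means the API server got no response within the webhook's timeout (10s here, visible in the `?timeout=10s` query). One common cause is a NetworkPolicy or firewall rule that drops traffic from the control plane to the webhook Pods. The sketch below assumes a default-deny ingress policy already exists in the webhook's namespace and opens only the webhook's container port; the namespace, Pod label, and port 9443 are hypothetical. On Amazon EKS, the security groups between the control plane and the nodes may also need to allow that port.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-apiserver-to-webhook   # hypothetical name
  namespace: webhook-system          # hypothetical namespace of the webhook Pods
spec:
  podSelector:
    matchLabels:
      app: example-webhook           # hypothetical label on the webhook Pods
  policyTypes:
    - Ingress
  ingress:
    # No "from" clause: any source may connect on this port. The API server
    # usually does not run as a Pod, so a podSelector would not match it.
    - ports:
        - protocol: TCP
          port: 9443                 # assumed container port (the Service's targetPort)
```

A NetworkPolicy `port` refers to the Pod's port, not the Service port (443 in the error message).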

Certificate expiration and TLS problems

```
Events:
  Type     Reason             Age                From     Message
  ----     ------             ----               ----     -------
  Warning  FailedDeployModel  53m (x9 over 63m)  ingress  (combined from similar events): Failed deploy model due to Internal error occurred: failed calling webhook "mtargetgroupbinding.elbv2.k8s.aws": Post "https://aws-load-balancer-webhook-service.kube-system.svc:443/mutate-elbv2-k8s-aws-v1beta1-targetgroupbinding?timeout=30s": x509: certificate has expired or is not yet valid: current time 2022-03-03T07:37:16Z is after 2022-02-26T11:24:26Z
```

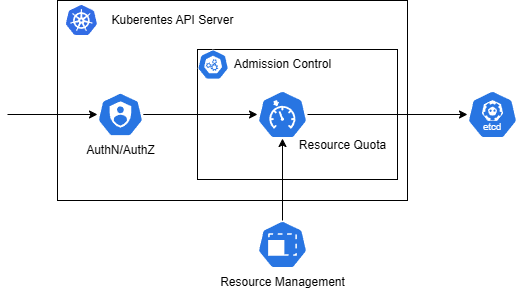
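One way to avoid expired serving certificates is to let cert-manager issue and renew them, and inject the matching CA bundle into the webhook configuration. This sketch assumes cert-manager is installed; the Issuer, Certificate, Secret, and Service names are hypothetical, and the `duration`/`renewBefore` values are illustrative rather than recommended defaults.

```yaml
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: webhook-selfsigned              # hypothetical self-signed issuer
  namespace: webhook-system
spec:
  selfSigned: {}
---
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: example-webhook-serving-cert    # hypothetical name
  namespace: webhook-system
spec:
  secretName: example-webhook-tls       # Secret mounted by the webhook Pods (assumed)
  # The API server verifies the certificate against <service>.<namespace>.svc
  dnsNames:
    - example-webhook-service.webhook-system.svc
    - example-webhook-service.webhook-system.svc.cluster.local
  issuerRef:
    name: webhook-selfsigned
    kind: Issuer
  duration: 2160h                       # 90 days
  renewBefore: 360h                     # renew 15 days before expiry
---
# Fragment: add this annotation to the existing webhook configuration.
# cert-manager's CA injector then keeps clientConfig.caBundle in sync.
apiVersion: admissionregistration.k8s.io/v1
kind: MutatingWebhookConfiguration
metadata:
  name: example-webhook                 # hypothetical name
  annotations:
    cert-manager.io/inject-ca-from: webhook-system/example-webhook-serving-cert
```

Renewal only helps if the webhook server picks up the rotated Secret (for example, by reloading the mounted certificate files); otherwise a restart is still needed.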

Performance degradation (Control Plane)

```
E0119 11:37:53.532226 1 shared_informer.go:243] unable to sync caches for garbage collector
E0119 11:37:53.532261 1 garbagecollector.go:228] timed out waiting for dependency graph builder sync during GC sync (attempt 73)
I0119 11:37:54.680276 1 request.go:645] Throttling request took 1.047002085s, request: GET:https://10.150.233.43:6443/apis/configuration.konghq.com/v1beta1?timeout=32s
I0119 11:37:54.831942 1 shared_informer.go:240] Waiting for caches to sync for garbage collector
I0119 11:38:04.722878 1 request.go:645] Throttling request took 1.860914441s, request: GET:https://10.150.233.43:6443/apis/acme.cert-manager.io/v1alpha2?timeout=32s
E0119 11:38:04.861576 1 shared_informer.go:243] unable to sync caches for resource quota
E0119 11:38:04.861687 1 resource_quota_controller.go:447] timed out waiting for quota monitor sync
```

| kube-controller-manager flag | Default |
|---|---|
| `--concurrent-resource-quota-syncs` | 5 |
| `--resource-quota-sync-period` | 5m0s |

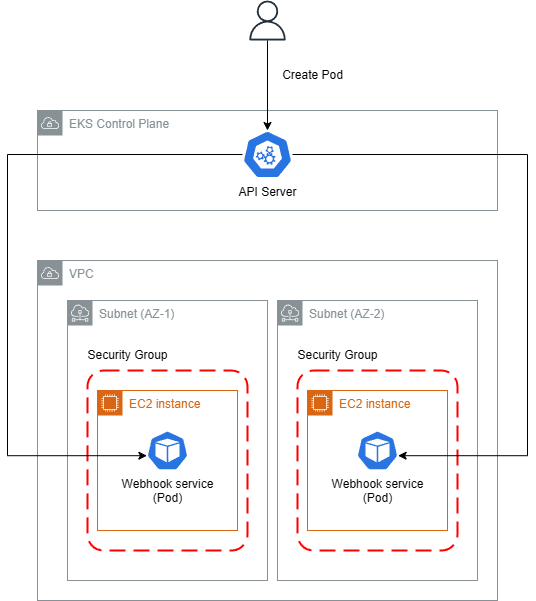

Resource constraints and scaling issues (Data plane)

- CPU

- Memory

- I/O performance (e.g., disk)

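```yaml
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
  namespace: pod-resources-example
spec:
  # Pod-level resources (spec.resources) require the PodLevelResources feature gate
  resources:
    requests:
      memory: "100Mi"
    limits:
      memory: "200Mi"
  containers:
    - name: memory-demo-ctr
      image: polinux/stress   # ships the stress binary; the nginx image does not
      command: ["stress"]
      args: ["--vm", "1", "--vm-bytes", "150M", "--vm-hang", "1"]
```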

Case studies

Case 1: System resource exhaustion

```
$ kubectl get events -n curl
...
23m   Normal    SuccessfulCreate   replicaset/curl-9454cc476   Created pod: curl-9454cc476-khp45
22m   Warning   FailedCreate       replicaset/curl-9454cc476   Error creating: Internal error occurred: failed calling webhook "namespace.sidecar-injector.istio.io": failed to call webhook: Post "https://istiod.istio-system.svc:443/inject?timeout=10s": dial tcp 10.96.44.51:443: connect: connection refused
```

```
$ kubectl get pods -n kube-system
NAME               READY   STATUS    RESTARTS   AGE
example-pod-1234   0/1     Evicted   0          5m
example-pod-5678   0/1     Evicted   0          10m
example-pod-9012   0/1     Evicted   0          7m
example-pod-3456   0/1     Evicted   1          15m
example-pod-7890   0/1     Evicted   0          3m
```

Resource exhaustion (MemoryPressure/OOM, DiskPressure, or PIDPressure)

-> Node-pressure eviction

-> Webhook Pod terminated

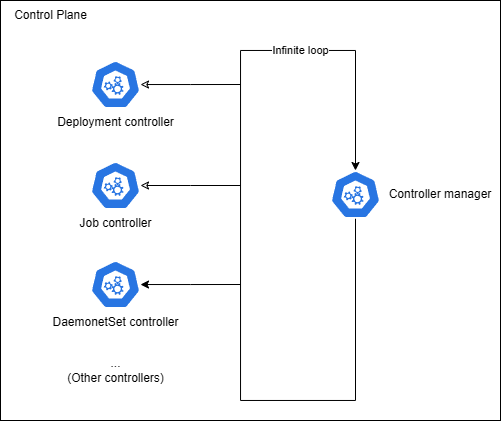
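When the kubelet reclaims resources, it evicts Pods whose usage exceeds their requests first and then considers Pod priority, so a webhook server with accurate requests and a high priority is among the last candidates. The sketch below assumes a custom PriorityClass; the class name, Deployment, image, and resource values are all hypothetical.

```yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: webhook-critical            # hypothetical name
value: 1000000                      # higher value: scheduled earlier, evicted later
globalDefault: false
description: "Admission webhook servers that gate API requests."
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-webhook             # hypothetical webhook server
  namespace: webhook-system
spec:
  replicas: 2                       # more than one replica so a single eviction is survivable
  selector:
    matchLabels:
      app: example-webhook
  template:
    metadata:
      labels:
        app: example-webhook
    spec:
      priorityClassName: webhook-critical
      containers:
        - name: webhook
          image: registry.example.com/example-webhook:1.0.0   # hypothetical image
          ports:
            - containerPort: 9443
          resources:
            # requests == limits for CPU and memory gives the Pod the Guaranteed QoS class
            requests:
              cpu: 100m
              memory: 128Mi
            limits:
              cpu: 100m
              memory: 128Mi
```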

Case 2: Job controller blocked cluster status

```
I0623 12:15:42.123456 1 job_controller.go:256] Syncing Job default/example-job
E0623 12:15:42.124789 1 job_controller.go:276] Error syncing Job "default/example-job": Internal error occurred: failed calling webhook "validate.jobs.example.com": Post "https://webhook-service.default.svc:443/validate?timeout=10s": dial tcp 10.96.0.42:443: connect: connection refused
W0623 12:15:42.125000 1 controller.go:285] Retrying webhook request after failure
E0623 12:15:52.130123 1 job_controller.go:276] Error syncing Job "default/example-job": Internal error occurred: failed calling webhook "validate.jobs.example.com": Post "https://webhook-service.default.svc:443/validate?timeout=10s": dial tcp 10.96.0.42:443: connect: connection refused
W0623 12:15:52.130456 1 controller.go:285] Retrying webhook request after failure
...
```
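A controller that keeps retrying a failing webhook call can stall reconciliation. One mitigation is to narrow what the webhook intercepts: limit the operations, and use `matchConditions` (CEL, stable since Kubernetes v1.30) to skip requests from system controllers. The webhook name, Service, and path below come from the log; the configuration object name, the CEL condition, and the assumption that controllers authenticate as `kube-system` service accounts are illustrative. Skipping those requests also means they bypass this validation, so weigh that trade-off first.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  name: validate-jobs-example               # hypothetical object name
webhooks:
  - name: validate.jobs.example.com
    clientConfig:
      service:
        name: webhook-service
        namespace: default
        path: /validate
        port: 443
    rules:
      - apiGroups: ["batch"]
        apiVersions: ["v1"]
        operations: ["CREATE"]              # validate new Jobs only
        resources: ["jobs"]                 # does not match the "jobs/status" subresource
    matchConditions:
      # Skip requests sent by service accounts in kube-system (e.g., controllers)
      - name: exclude-kube-system-service-accounts
        expression: '!request.userInfo.username.startsWith("system:serviceaccount:kube-system:")'
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions: ["v1"]
    timeoutSeconds: 10
```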

Case 3: Calico + Kyverno: Cluster down

```
Events:
  Type     Reason        Age                 From                  Message
  ----     ------        ----                ----                  -------
  Warning  FailedCreate  18s (x14 over 60s)  daemonset-controller  Error creating: Internal error occurred: failed calling webhook "validate.kyverno.svc-fail": failed to call webhook: Post "https://kyverno-svc.kyverno.svc:443/validate/fail?timeout=10s": no endpoints available for service "kyverno-svc"
```

```json
{
  "kind": "Event",
  "apiVersion": "audit.k8s.io/v1",
  "level": "RequestResponse",
  "stage": "ResponseComplete",
  "requestURI": "/api/v1/namespaces/calico-system/services/calico-typha",
  "verb": "update",
  "responseStatus": {
    "metadata": {},
    "status": "Failure",
    "message": "Internal error occurred: failed calling webhook \"validate.kyverno.svc-fail\": failed to call webhook: Post \"https://kyverno-svc.kyverno.svc:443/validate/fail?timeout=10s\": no endpoints available for service \"kyverno-svc\"",
    "reason": "InternalError",
    "details": {
      "causes": [
        {
          "message": "failed calling webhook \"validate.kyverno.svc-fail\": failed to call webhook: Post \"https://kyverno-svc.kyverno.svc:443/validate/fail?timeout=10s\": no endpoints available for service \"kyverno-svc\""
        }
      ]
    },
    "code": 500
  }
}
```

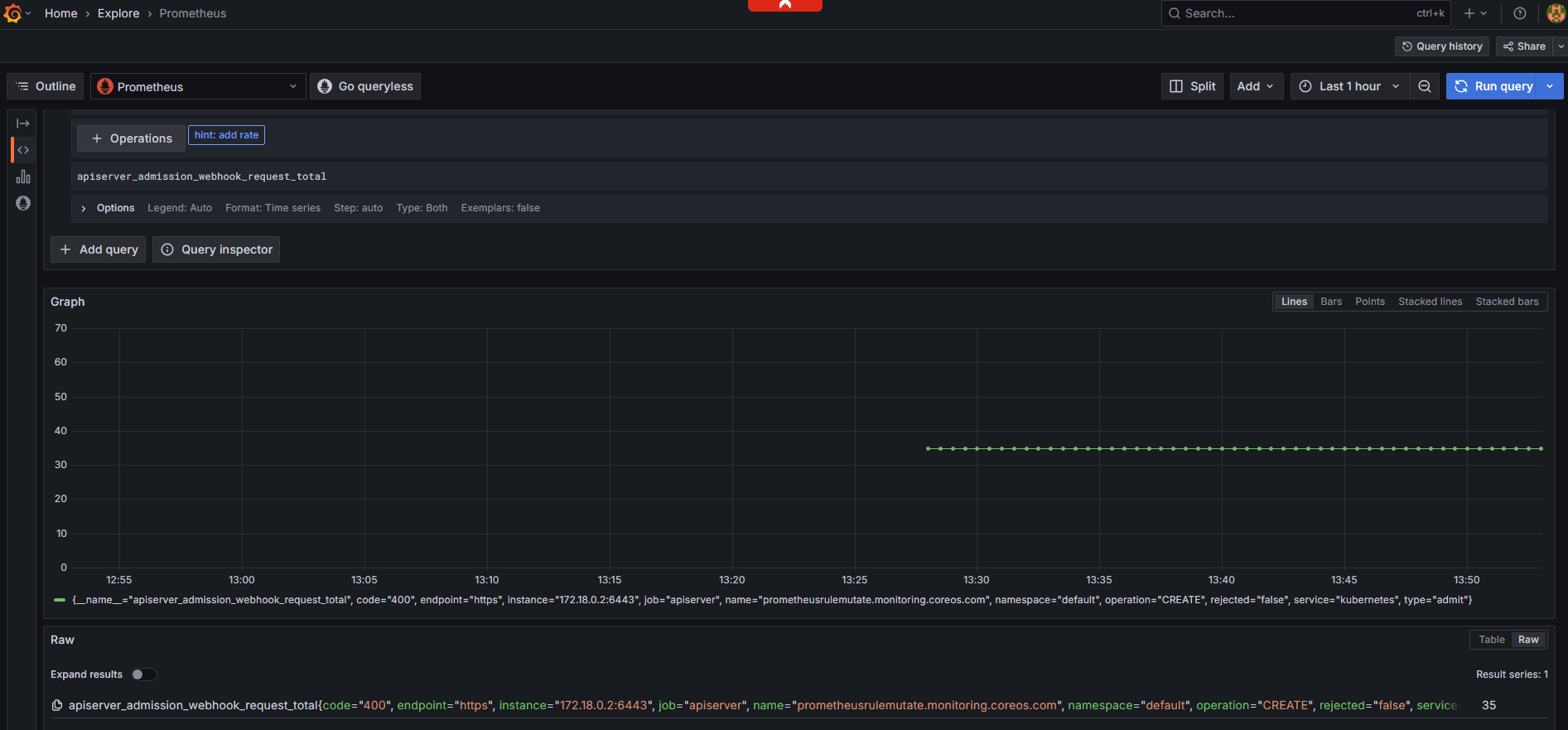
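One plausible reading of Case 3 is a circular dependency: the Kyverno webhook fails closed on updates in `calico-system`, so Calico cannot be repaired, and without working Pod networking the Kyverno Service has no ready endpoints. A general mitigation is to exclude the namespaces that the webhook itself depends on. The sketch uses the standard `namespaceSelector` field and the `kubernetes.io/metadata.name` label that Kubernetes sets on every Namespace; the webhook and Service names are hypothetical. Kyverno manages its own webhook configurations, so with Kyverno you would apply the equivalent exclusion through Kyverno's own settings rather than by editing this object.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  name: example-policy-webhook              # hypothetical name
webhooks:
  - name: validate.policy.example.com       # hypothetical name
    clientConfig:
      service:
        name: example-webhook-service       # hypothetical Service
        namespace: webhook-system
        path: /validate
        port: 443
    namespaceSelector:
      matchExpressions:
        # Never intercept requests in the namespaces the webhook depends on
        - key: kubernetes.io/metadata.name
          operator: NotIn
          values: ["kube-system", "calico-system", "webhook-system"]
    rules:
      - apiGroups: ["*"]
        apiVersions: ["*"]
        operations: ["CREATE", "UPDATE"]
        resources: ["*"]
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions: ["v1"]
```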

Detection & Monitoring

Key Metrics to Monitor (API Server)

- apiserver_admission_webhook_rejection_count

- apiserver_admission_webhook_request_total

- apiserver_admission_webhook_fail_open_count

```
$ kubectl get --raw /metrics | grep "apiserver_admission_webhook"
apiserver_admission_webhook_request_total{code="400",name="mpod.elbv2.k8s.aws",operation="CREATE",rejected="true",type="admit"} 17

$ kubectl get --raw /metrics | grep "apiserver_admission_webhook_rejection"
apiserver_admission_webhook_rejection_count{error_type="calling_webhook_error",name="mpod.elbv2.k8s.aws",operation="CREATE",rejection_code="400",type="admit"} 17
```

Monitoring Tools

- kube-prometheus-stack
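With kube-prometheus-stack, the API server metrics above can drive alerts through a `PrometheusRule`. This is a sketch: the `release: kube-prometheus-stack` label assumes the default rule selector of a Helm release with that name, and the alert names, `for` durations, and the 5-second latency threshold are illustrative choices.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: admission-webhook-alerts
  namespace: monitoring                    # assumed namespace of the stack
  labels:
    release: kube-prometheus-stack         # must match the Prometheus ruleSelector (assumed)
spec:
  groups:
    - name: admission-webhooks
      rules:
        # The API server could not call the webhook (timeouts, TLS, no endpoints, ...)
        - alert: AdmissionWebhookCallErrors
          expr: |
            sum by (name) (rate(apiserver_admission_webhook_rejection_count{error_type="calling_webhook_error"}[5m])) > 0
          for: 5m
          labels:
            severity: critical
          annotations:
            summary: "API server cannot call admission webhook {{ $labels.name }}"
        # Requests are being admitted without the webhook (failurePolicy: Ignore)
        - alert: AdmissionWebhookFailingOpen
          expr: |
            sum by (name) (rate(apiserver_admission_webhook_fail_open_count[5m])) > 0
          for: 5m
          labels:
            severity: warning
          annotations:
            summary: "Admission webhook {{ $labels.name }} is failing open"
        # p99 webhook latency is approaching timeoutSeconds
        - alert: AdmissionWebhookSlow
          expr: |
            histogram_quantile(0.99, sum by (name, le) (rate(apiserver_admission_webhook_admission_duration_seconds_bucket[5m]))) > 5
          for: 10m
          labels:
            severity: warning
          annotations:
            summary: "Admission webhook {{ $labels.name }} p99 latency is above 5s"
```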

Monitoring

Application level

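For critical applications and services, check whether they expose Prometheus (or equivalent) metrics that reflect their availability, and define dedicated metrics and alert thresholds for them. Also monitor related resource usage, such as CPU and memory utilization, as well as network latency.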
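If the webhook server exposes its own `/metrics` endpoint, kube-prometheus-stack can scrape it with a `ServiceMonitor`. The Service label, the named port `metrics`, and the `release` label used for selection are assumptions for illustration.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: example-webhook                  # hypothetical name
  namespace: webhook-system
  labels:
    release: kube-prometheus-stack       # must match the Prometheus serviceMonitorSelector (assumed)
spec:
  selector:
    matchLabels:
      app: example-webhook               # hypothetical label on the webhook's Service
  namespaceSelector:
    matchNames: ["webhook-system"]
  endpoints:
    - port: metrics                      # named Service port serving metrics (assumed)
      path: /metrics
      interval: 30s
```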

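Kubernetes API Server logs

Example CloudWatch Logs Insights query over Amazon EKS control plane logs (API server logging enabled):

```
fields @timestamp, @message, @logStream
| filter @logStream like /kube-apiserver/
| filter @message like 'failed to call webhook'
```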

Failure Recovery

Quick Fixes

- Fix the webhook service (review its Pod events and logs)

- Adjust timeoutSeconds (default: 10 seconds; allowed range: 1 to 30)

- Set failurePolicy: Ignore to fail open

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: MutatingWebhookConfiguration
metadata:
  name: aws-load-balancer-webhook
webhooks:
  - clientConfig:
      service:
        name: aws-load-balancer-webhook-service
        namespace: kube-system
        path: /mutate-v1-pod
    failurePolicy: Fail # <--- Change "Fail" to "Ignore" to admit requests while the webhook is unavailable
    name: mpod.elbv2.k8s.aws
    # ...
```

Best Practices

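Resource configuration

- Set appropriate resource requests and limits for the webhook service

- Use a Horizontal Pod Autoscaler (HPA) to handle load changes

- Use a PodDisruptionBudget to keep the service available

- Rotate TLS certificates regularly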
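A sketch of the HPA and PodDisruptionBudget for a hypothetical `example-webhook` Deployment; the names, replica bounds, and 70% CPU target are illustrative. CPU-based scaling only works if the webhook container sets CPU requests and a metrics pipeline such as metrics-server is installed.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: example-webhook
  namespace: webhook-system
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: example-webhook          # hypothetical webhook Deployment
  minReplicas: 2                   # never drop to a single replica
  maxReplicas: 6
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the CPU request
---
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: example-webhook
  namespace: webhook-system
spec:
  minAvailable: 1                  # keep at least one webhook Pod during drains
  selector:
    matchLabels:
      app: example-webhook
```

A PodDisruptionBudget only limits voluntary disruptions such as node drains. The kubelet does not honor it during node-pressure eviction (Case 1), so requests, limits, and Pod priority still matter.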

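Reliability and performance

- If you run a custom webhook service, give it a dedicated Namespace and scope the webhook so that a failure does not affect every resource in the cluster

- ValidatingAdmissionPolicy (Kubernetes >= v1.30): CEL rules evaluated inside the API server, with no webhook call

- MutatingAdmissionPolicy (KEP-3962): the mutating counterpart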

Validating Admission Policy

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingAdmissionPolicy
metadata:
  name: "demo-policy.example.com"
spec:
  failurePolicy: Fail
  matchConstraints:
    resourceRules:
      - apiGroups: ["apps"]
        apiVersions: ["v1"]
        operations: ["CREATE", "UPDATE"]
        resources: ["deployments"]
  validations:
    - expression: "object.spec.replicas <= 5"
---
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingAdmissionPolicyBinding
metadata:
  name: "demo-binding-test.example.com"
spec:
  policyName: "demo-policy.example.com"
  validationActions: [Deny]
  matchResources:
    namespaceSelector:
      matchLabels:
        environment: test
```

Kubernetes v1.30 [stable]
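Before enforcing a policy with `Deny`, the same policy can be bound with non-blocking actions to see what it would reject. This sketch reuses `demo-policy.example.com`; the binding name and the `environment: staging` label are hypothetical. `Warn` returns a warning to the client and `Audit` records the failure in audit events; neither blocks the request, and `Deny` and `Warn` cannot be combined in one binding.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingAdmissionPolicyBinding
metadata:
  name: "demo-binding-staging.example.com"   # hypothetical binding name
spec:
  policyName: "demo-policy.example.com"
  # Report violations without rejecting requests
  validationActions: [Warn, Audit]
  matchResources:
    namespaceSelector:
      matchLabels:
        environment: staging                 # hypothetical label value
```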

Thank you