Investigating ChatGPT Response Variety

To Introduce Natural Language Processing

DH 2024 Reinvention & Responsibility, Washington, DC

Panel: Pedagogy and Generative AI

8 August 2024, 10:30am - 12pm, Hazel Hall 225

Link to these slides: bit.ly/nlp-gpt

Teaching context (1)

- Digital Media, Arts, and Technology ("DIGIT") undergraduate major at Penn State Behrend

-

core requirements, sophomore/junior level in the major include:

- Digit 110: Text Encoding (every fall)

- Digit 210: Large-Scale Text Analysis (every spring)

- Other coursework in the major: digital project design, digital audio, animation, 3D modeling, game design and programming, data visualization

-

Students consider themselves "digital creatives," but also cultivate analysis—usually in the cause of building something.

- Students all learn command line, strong file management skills, care a lot about web design/development to share their projects in my classes.

- My semester project assignments always culminate in static website development on git/GitHub

Teaching context (2)

- Don't expect most students to be highly proficient with programming or language (they're often very impatient readers)

- Do expect them to be curious and want to learn by doing things, “hands-on” tinkering.

- DIGIT 210: Text Analysis class: traditionally where they learn Pythonic application of NLP via NLTK or spaCy

Assignment series, at the start of the semester for DIGIT 210: Text Analysis

This assignment combines a review of their GitHub workflow from previous semesters with the challenge to craft prompts for ChatGPT and save the results as text files in their repositories.

The students save their prompts and outputs from ChatGPT from this assignment, so that they can work with the material later during their orientation to natural language processing with Python.

ChatGPT and Git Review Exercise 1

- Visit ChatGPT: https://chat.openai.com/auth/login and set up a (free) login for yourself (you can use a Google account).

- Experiment with prompts that generate something about a very specific topic with a distinct structure: a news story, a poem, a song, a recipe, an obituary? The structure is up to you, but your challenge with this exercise is to test your prompt repeatedly to review its output and try to craft a prompt that triggers a lot of variation when you repeat it, when you run the same prompt at least 3 times.

- Save the prompt and its responses in a plain text file (saved with file extension .txt).

- Decide where you'll be working on your personal GitHub assignments this semester: (could be a repo you used for a previous class or a new one). Organize a directory in your GitHub repo for ChatGPT experiments like this. Save, add, commit, and push your text file to the new directory.

- To submit the assignment (on Canvas): post a link to your git commit of your ChatGPT experimental prompt and response file.

- In the Text Entry window comment on what what you noticed about Chat GPT's different responses to your prompt.

ChatGPT and Git Review Exercise 2

For this assignment, come up with a prompt that generates more text than last round. Also try to generate text in a different form or genre than you generated with our first experiment. We'll be working with these files as we start exploring natural language processing with Python--so you're building up a resource of experimental prompt responses to help us study the kinds of variation ChatGPT can generate.

Design a prompt that generates one or more of the following on three tries:

-

Questionable, inaccurate, or interesting variety of information about a named entity (person, place, event, etc). (This might not work on a famous mainstream name--but try simple names that might be a bit ambiguous, or historic, or only partially known.)

- Or, see what kinds of associations AI generates around very specific names that are made-up.

- Try a combination with a prompt involving a well-known real name with a made-up entity.

- A topical wikipedia-style information resource with a bibliography / works cited page where you test the results: (do they lead to actual resources?)

- Surprise me—but continue playing the game of trying to make Chat GPT produce three responses on the same topic that have some interesting variety in the "word salad" responses.

In the Canvas text box for this homework, provide some reflection/commentary on your prompt experiment for this round: What surprises or interests you about this response, or what should we know about your prompt experiments this time?

Student prompts and responses

- Most found they could generate more variety by asking ChatGPT to write a fictional story about something with just a few details

- "Write a story about a girl in a white dress" (which strangely resulted in stories in which the girl always lives in a small village near the sea.)

- "Write a new Futurama episode": generated suprisingly long, full scripts, but no salty language for Bender

-

Request to write about "Prince Charles" (as known to the AI based on its pre-2021 training) entering the SCP-3008 universe

- had to deal repeatedly with ChatGPT objecting that it would not produce sensational false news.

- The student each time said that "fiction is okay" and returned a collection of four very entertaining tales that we opted to use as a class for our first modeling of a Python assignment.

In the next two weeks, students worked their way through Pycharm's excellent "Introduction to Python course" while also reading about word embeddings and ethical issues in AI. They annotated these readings together in a private class group with Hypothes.is.

In January 2023, I was surprised that most of my students had not been following all the excitement and dismay about ChatGPT that I had been eagerly following in December, though several students were aware of Stable Diffusion and other applications for generating digital art.

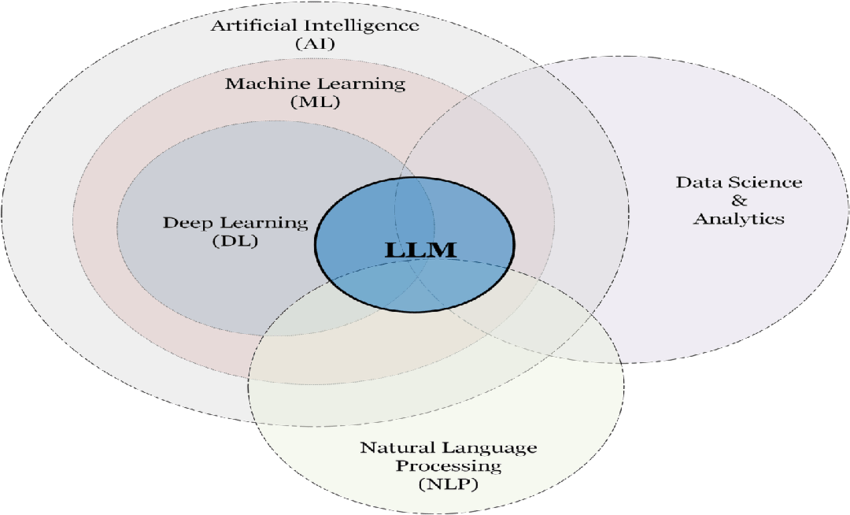

I took time on the first days of semester to discuss how this would likely come up for them in other classes as a source of concern for their assignments, and how we would be exploring it in our class. These discussions introduced some readings about ethical issues in the training of large language models, and led us to discussions of the data on which ChatGPT, Google, and Facebook trained their models.

- Activate your Hypothes.is browser plugin, log in to Hypothes.is and select our Sp2024-DIGIT210 private class group. [....here I linked some guidance for setting up Hypothes.is for those who needed it.]

- Provide at least 7 annotations on the following two readings with questions, comments, ideas, relevant multimedia. You may respond to each other. Each of your annotations should raise an idea or thoughtful question or concern (do more than simply say, "yes, that's interesting".)

Learn about Word Embeddings: Reading Set 1

Annotate Readings on Data Annotation and Labor Issues in AI

- Open the readings, activate your Hypothes.is browser plugin, log in to Hypothes.is and select our Sp2024-DIGIT210 private class group.

-

Provide at least 5 annotations on the following two readings with questions, comments, ideas, relevant multimedia. You may respond to each other. Each of your annotations should raise an idea or thoughtful question or concern (do more than simply say, "yes, that's interesting".)

- Data Annotation in 2023: Why it matters & Top 8 Best Practices by Karatas

- The Exploited Labor Behind Artificial Intelligence by Williams, Miceli, and Gebru

Dated? But useful just read...

Tutorial: Exploring Gender Bias in Word Embedding

- Read, annotate with Hypothes.is, and run executable cells in the Google Colab Notebook cellblocks in Tutorial: Exploring Gender Bias in Word Embedding. Add 3 -5 annotations here (more if you wish).

- Try the hands-on (Your turn!) sections but don't worry about anything that requires writing new Python code just yet unless you want to experiment.

-

This worked in spring 2023, but not spring 2024

- shared notebooks get moldy fast

Write some Python code to do the following:

- Open and read your text file.

- Check to make sure your Pycharm configuration is working (Pycharm will help with this as you press the green arrow to run your code)

- Convert the document to a string

- Review operations you can do on strings from the Introduction to Python tutorial you just completed and practice a little with printing interesting things.

- At the top of the file, import the spacy module as shown in my sample code (let's make sure Pycharm imports this)

- For the first time, add this line to import space's core English linguistics stats package:

nlp = spacy.cli.download("en_core_web_sm")

After the first run, you won't need this line anymore

-

Add

nlp = spacy.load('en_core_web_sm') - Now, read the first 3 sections of the spaCy.io guide: https://spacy.io/usage/spacy-101#whats-spacy

- Process your project text with the

nlp()function, and use spaCy's tokenization algorithm to explore one of the linguistics annotations features documented there. You can also try adapting and building on the code we shared from class for this. -

Our goal is to get you up and running with writing some natural language processing code in Python and see some results printed to your console. Use the

print()commands we've been using to view whether your for loops and variables are working. Write comments in your code if you get stuck. - Save your code, add, commit, and push it to your personal GitHub repo. Post a link to that folder in your GitHub repo to submit the assignment on Canvas.

- FULL ASSIGNMENT HERE (intructions on setting Pycharm in GitHub directories, etc.)

Python NLP Exercise 2: A Word of Interest, and Its Relatives

-

To introduce this exercise, we worked with the student's ChatGPT prompt with Prince Charles entering the SCP3008 universe.

-

I purposefully did not finish developing the dictionary results and asked students to look up how to complete the sorting of values in a Python dictionary.

- At this early stage, they are adapting a “recipe” for their own work, with a tiny coding challenge, and an emphasis on studying outputs and seeing what happens as they make changes to my starter script.

Python NLP Exercise 2: A Word of Interest, and Its Relatives

For this exercise, you may continue working in the Python file you wrote for Python NLP 1 if it worked for you. Or you may choose to work in a new directory.

This time, you will work with a directory of text files so you can learn how to open these and work with them in a for loop. Our objective is to apply spaCy's nlp() function to work with its normalized word vector information.

- Just to explore: We will use this to read word vector information in your documents (and explore what you can see with spaCy's stored information.

- Our objective is for you to choose a word token of interest from your documents and look at what other word tokens are *most similar* to it as calculated via spaCy's vector math similarity calculations.

- We're looking at a collection of files so we can see whether these files contain a different variety of similar words to the word you chose. (And you can play around with studying other words for their similar range. You can also opt to make the code show you the most dissimilar tokens).

Follow and adapt my sample code in the textAnalysis-Hub here to work with your own collection of files.

Read the script and my comments carefully to follow along and adapt what I'm doing to your set of files. Notes:

-

You will see that I've opted to create a dictionary of information and print it out with a structure like this:

{word1 : vectorSimilarityScore; word2 : vectorSimilarityScore, } - But the output isn't sorted. I'd love to see your output to be sorted from highest to lowest similarity.

-

To sort the output, study and adapt a tutorial on how to sort dictionaries based on values:

https://www.freecodecamp.org/news/sort-dictionary-by-value-in-python - Read this very carefully: Understand what sorting does to change the structure of the output (it won't be a dictionary any more, but you can convert it back into one.) Try this.

Push your directory of text file(s) and python code to your personal repo and post a link to it on Canvas.

These outputs are sorted based on highest to lowest cosine similarity values of their spaCy's word embedding. We set a value of .3 or higher as a simple screening measure:

ChatGPT output 1:

This is a dictionary of words most similar to the word panic in this file.

{confusion: 0.5402386164509124, dangerous: 0.3867293723662065, shocking: 0.3746970219959627, when: 0.3639973848847503, cause: 0.3524045041675451, even: 0.34693562533865335, harm: 0.33926869345182253, thing: 0.334617802674614, anomalous: 0.33311204685701973, seriously: 0.3290226136508412, that: 0.3199346037146467, what: 0.3123327627287958, it: 0.30034611967158426}

ChatGPT output 2:

This is a dictionary of words most similar to the word panic in this file.

{panic: 1.0, chaos: 0.6202660691803873, fear: 0.6138941609247863, deadly: 0.43952932322993377, widespread: 0.39420462861870775, shocking: 0.3746970219959627, causing: 0.35029004564759286, even: 0.34693562533865335, that: 0.3199346037146467, they: 0.30881649272929873, caused: 0.3036122578603176, it: 0.30034611967158426}

Chat GPT output 3:

{confusion: 0.5402386164509124, dangers: 0.3939297105912422, dangerous: 0.3867293723662065, shocking: 0.3746970219959627, something: 0.3599935769414534, unpredictable: 0.3458318113571637, anomalous: 0.33311204685701973, concerns: 0.32749574848035723, that: 0.3199346037146467, they: 0.30881649272929873, apparent: 0.30219898650476046, it: 0.30034611967158426}

Chat GPT output 4:

{dangers: 0.3939297105912422, shocking: 0.3746970219959627, anomalous: 0.33311204685701973, struggling: 0.32224357512011353, that: 0.3199346037146467, repeatedly: 0.30081027485016304, it: 0.30034611967158426}

Sample Output from the "Prince Charles in the SCP3008 Universe" Student Collection

So...what were the Prince Charles in the SCP Universe stories from ChatGPT?

You can read them in the published version of this assignment series.

Check out the AI Literacy section! Lots of great pedagogy applications. :-)

Next steps...?

These are simple exercises, intended to introduce students to NLP while they're learning Python in the first weeks of a course.

-

Students can see how they can use NLP tools to learn about the relatedness of outputs. They can begin to think about things (like relatedness) that they might want to explore and measure according to a model.

-

A next step could be moving to a larger spaCy model, and then to a different LLM and try comparing outputs.

- Recommendation: Measuring Document Similarity with LLMs (Sentence-T5 Model), by Greg Yauney, Melanie Walsh, and The AI for Humanists team

- Sentence-T5 works on short texts (up to 525 tokens): Helpful for exploring and curating short input data which can demystify text analysis methods for students.

- Great section on measuring cosine similarity