Introduction to Probabilistic Machine Learning with PyMC3

Daniel Emaasit

Data Scientist

Haystax Technology

Bayesian Data Science DC Meetup

April 26, 2018

Data Science & Cybersecurity Meetup

Materials

Download slides & code: bit.ly/intro-pml

Application (1/3)

- Optimizing expensive functions in autonomous vehicles (using Bayesian optimization).

Application (2/3)

- Probabilistic approach to ranking & matching gamers.

Application (3/3)

- Supplying internet to remote areas (using Gaussian processes).

Media Attention

Academics flocking to Industry

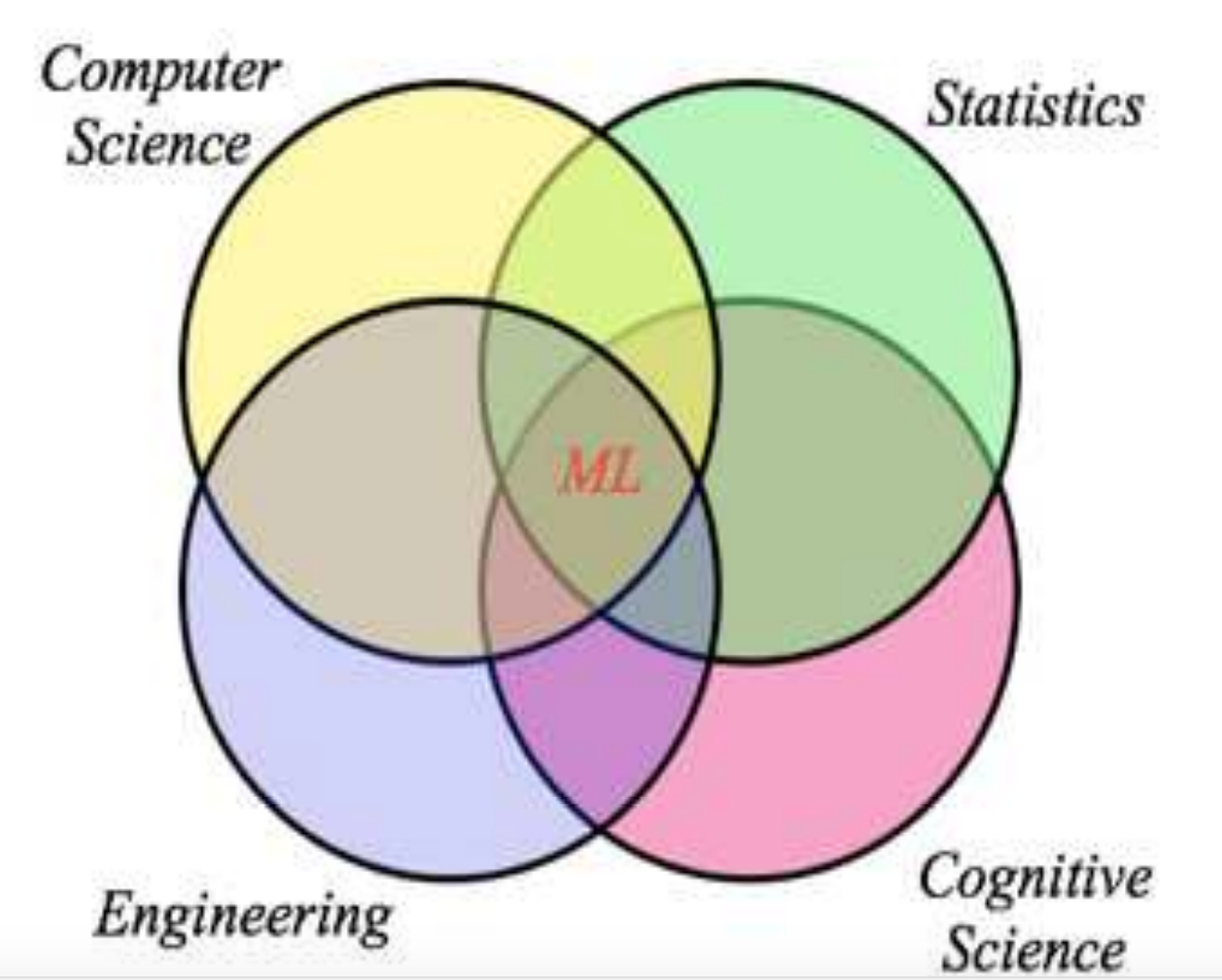

Intro to Probabilistic Machine Learning

ML: A Probabilistic Perspective (1/3)

- Probabilistic ML:

  - An interdisciplinary field that develops both the mathematical foundations and practical applications of systems that learn models of data (Ghahramani, 2018).

ML: A Probabilistic Perspective (2/3)

- A model:

  - A model describes data that one could observe from a system (Ghahramani, 2014).

  - Use the mathematics of probability theory to express all forms of uncertainty.

Generative Process

Inference
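The sketch below runs a generative process once. It assumes a hypothetical linear-regression model that does not appear in the slides: parameters are drawn from the prior, then data are drawn from the likelihood given those parameters. Inference runs in the opposite direction, from observed data back to the parameters. The `simulate` function, the standard-normal priors, and `noise_sd` are all illustrative assumptions.

```python
import random

def simulate(xs, seed=0, noise_sd=1.0):
    """Run a (hypothetical) generative process once: draw parameters from
    the prior, then draw observations from the likelihood given them."""
    rng = random.Random(seed)
    # Prior p(theta): independent standard normals on intercept and slope (assumed)
    intercept = rng.gauss(0.0, 1.0)
    slope = rng.gauss(0.0, 1.0)
    # Likelihood p(y | theta): Gaussian noise around the regression line
    ys = [rng.gauss(intercept + slope * x, noise_sd) for x in xs]
    return (intercept, slope), ys

theta, ys = simulate([0.0, 1.0, 2.0, 3.0])
print(theta, ys)
```

Running `simulate` repeatedly with different seeds shows the spread of datasets the model considers plausible before seeing any data.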

ML: A Probabilistic Perspective (3/3)

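\[
p(\mathbf{\theta} \mid \mathbf{y}) = \frac{p(\mathbf{y} \mid \mathbf{\theta})\, p(\mathbf{\theta})}{p(\mathbf{y})}
\]

where:

- \(\mathbf{\theta}\) = parameters, e.g. coefficients

- \(p(\mathbf{\theta})\) = prior over the parameters

- \(p(\mathbf{y} \mid \mathbf{\theta})\) = likelihood of the data given the parameters (and covariates)

- \(p(\mathbf{y}) = \int p(\mathbf{y} \mid \mathbf{\theta})\, p(\mathbf{\theta})\, d\mathbf{\theta}\) = marginal likelihood (evidence), which normalizes the posterior

- \(p(\mathbf{\theta} \mid \mathbf{y})\) = posterior over the parameters, given the observed data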
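Each term of Bayes' rule can be computed explicitly on a grid for a one-parameter model. This minimal sketch assumes a hypothetical coin-flip example (not from the slides): \(\theta\) is the probability of heads, the prior is uniform, and the likelihood is binomial for \(k\) heads in \(n\) flips.

```python
from math import comb

def grid_posterior(k, n, num_points=101):
    """Posterior over a coin's heads probability theta via Bayes' rule on a grid."""
    grid = [i / (num_points - 1) for i in range(num_points)]
    # p(theta): uniform prior over the grid points
    prior = [1.0 / num_points] * num_points
    # p(y | theta): binomial likelihood of k heads in n flips
    likelihood = [comb(n, k) * t**k * (1 - t) ** (n - k) for t in grid]
    # p(y): marginal likelihood, summing likelihood x prior over all theta
    evidence = sum(l * p for l, p in zip(likelihood, prior))
    # p(theta | y) = p(y | theta) p(theta) / p(y)
    posterior = [l * p / evidence for l, p in zip(likelihood, prior)]
    return grid, posterior

grid, post = grid_posterior(k=7, n=10)
mode = grid[max(range(len(post)), key=post.__getitem__)]
print(round(sum(post), 6), mode)  # → 1.0 0.7
```

Dividing by the evidence is what makes the posterior sum to one. Grids like this only scale to a handful of parameters, which is why MCMC and variational inference are needed in practice.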

Probabilistic ML vs. Traditional ML

| | Algorithmic ML | Probabilistic ML |
|---|---|---|
| Examples | K-Means, Random Forest | GMM, Gaussian Process |
| Specification | Model + Algorithm combined | Model & Inference separate |
| Unknowns | Parameters | Random variables |
| Inference | Optimization (MLE) | Bayes (MCMC, VI) |
| Regularization | Penalty terms | Priors |
| Solution | Best-fitting parameters (point estimate) | Full posterior distribution |
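The "Inference", "Regularization", and "Solution" rows can be compared on a toy problem. This sketch assumes a hypothetical no-intercept linear model \(y = \beta x + \varepsilon\) with known noise standard deviation and a Gaussian prior \(\beta \sim \mathcal{N}(0, \tau^2)\). Under these assumptions, a ridge penalty \(\lambda \beta^2\) with \(\lambda = \sigma^2 / \tau^2\) gives exactly the posterior mean. The probabilistic approach also returns an uncertainty (`post_sd`) rather than a single number.

```python
def fit_slope(xs, ys, noise_sd=1.0, prior_sd=1.0):
    """Compare three ways of learning beta in y = beta * x + noise (toy model)."""
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    # Algorithmic ML: maximum-likelihood (least-squares) point estimate
    mle = sxy / sxx
    # Penalty term lam * beta^2, with lam chosen to match a N(0, prior_sd^2) prior
    lam = noise_sd**2 / prior_sd**2
    ridge = sxy / (sxx + lam)
    # Probabilistic ML: conjugate Gaussian posterior over beta (noise_sd known)
    post_precision = sxx / noise_sd**2 + 1.0 / prior_sd**2
    post_mean = (sxy / noise_sd**2) / post_precision
    post_sd = post_precision ** -0.5
    return mle, ridge, post_mean, post_sd

mle, ridge, post_mean, post_sd = fit_slope([1.0, 2.0, 3.0], [2.1, 3.9, 6.2])
print(mle, ridge, post_mean, post_sd)
```

Here `ridge` and `post_mean` coincide, and both are shrunk toward zero relative to `mle`. The correspondence between penalty and prior is exact only in this Gaussian setting.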

Limitations of deep learning

- Deep learning systems give amazing performance on many benchmark tasks, but they are generally:

  - very data hungry (often millions of examples)

  - very compute-intensive to train and deploy (cloud GPU resources)

  - poor at representing uncertainty

  - easily fooled by adversarial examples

  - finicky to optimize: the choice of architecture, learning procedure, etc., requires expert knowledge and experimentation

  - uninterpretable black boxes, lacking in transparency and difficult to trust

Probabilistic Programming

Probabilistic Programming (1/2)

- Probabilistic programming (PP) languages:

  - Software packages that take a model and then automatically generate inference routines (even source code!), e.g., Pyro, Stan, Infer.NET, PyMC3, and TensorFlow Probability.

Probabilistic Programming (2/2)

- Steps in probabilistic ML:

  - Build the model (the joint probability distribution of all the relevant variables)

  - Incorporate the observed data

  - Perform inference (to learn the distributions of the latent variables)
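The three steps above can be sketched without any library. This example assumes a hypothetical model for the unknown mean `mu` of Gaussian data (noise sd 1, prior N(0, 10²)). The model is written as a log joint density, the data are fixed to condition it, and a generic random-walk Metropolis sampler performs inference. The sampler stands in for the far more capable samplers a PP language provides; PyMC3, for example, defaults to NUTS for continuous models. Because `metropolis` only sees a log density, it works unchanged for any other scalar model. This is the "Model & Inference separate" row of the comparison table.

```python
import math
import random

# Step 1: build the model as a log joint density log p(y, mu) (hypothetical model)
def log_joint(mu, ys, prior_sd=10.0, noise_sd=1.0):
    log_prior = -0.5 * (mu / prior_sd) ** 2
    log_lik = sum(-0.5 * ((y - mu) / noise_sd) ** 2 for y in ys)
    return log_prior + log_lik  # additive constants dropped; they cancel in MCMC

def metropolis(log_density, init, num_samples=20000, step=0.5, burn_in=2000, seed=0):
    """Generic random-walk Metropolis sampler for any scalar log density."""
    rng = random.Random(seed)
    current, current_lp = init, log_density(init)
    samples = []
    for i in range(burn_in + num_samples):
        proposal = current + rng.gauss(0.0, step)
        proposal_lp = log_density(proposal)
        # Accept with probability min(1, p(proposal) / p(current))
        if rng.random() < math.exp(min(0.0, proposal_lp - current_lp)):
            current, current_lp = proposal, proposal_lp
        if i >= burn_in:
            samples.append(current)
    return samples

# Step 2: incorporate the observed data by fixing ys, leaving a function of mu
ys = [4.8, 5.1, 5.3, 4.9, 5.2]

def log_post(mu):
    return log_joint(mu, ys)

# Step 3: perform inference by sampling the latent variable mu
samples = metropolis(log_post, init=0.0)
print(sum(samples) / len(samples))
```

For this conjugate model, the exact posterior mean is `sum(ys) / 5.01` (about 5.05), so the printed sample mean can be checked against it. In PyMC3 the same steps correspond to declaring random variables inside a `with pm.Model():` block, passing data through the `observed=` argument of the likelihood, and calling `pm.sample()`.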

Demo

Resources to get started

- Winn, J., Bishop, C. M., & Diethe, T. (2015). Model-Based Machine Learning. Microsoft Research Cambridge.

- McElreath, R. (2012). Statistical Rethinking: A Bayesian Course with Examples in R and Stan (code examples also available in PyMC3 and brms).

- Davidson-Pilon, C. Probabilistic Programming and Bayesian Methods for Hackers: a fantastic book with many applied code examples.

Thank You!

Appendix

References

- Ghahramani, Z. (2015). Probabilistic machine learning and artificial intelligence. Nature, 521(7553), 452.

- Bishop, C. M. (2013). Model-based machine learning. Phil. Trans. R. Soc. A, 371(1984), 20120222.

- Murphy, K. P. (2012). Machine learning: a probabilistic perspective. MIT Press.

- Barber, D. (2012). Bayesian reasoning and machine learning. Cambridge University Press.