Reproducibility crisis

Or: the many facets of a scientific crisis that forced us to rethink the way we do science

smoia | @SteMoia | s.moia.research@gmail.com

Lausanne, 11.09.23

(Formerly) EPFL, Lausanne, Switzerland, and UniGE, Geneva, Switzerland; physiopy (https://github.com/physiopy)

Disclaimers

1. I am an "open" scientist. I have a bias toward the core tenets of Open Science as better practices.

2. My background is in psychology; I am a methodologist and neuroscientist specialised in neuroimaging. While most examples come from my field, the concepts are cross-disciplinary.

3. It's easy to take the moral high ground: don't. I won't judge if you did something I'll talk about. I'm here to raise awareness of an issue. Speak about it freely; we're here to learn from it.

0. Rules & Materials

You're asking questions,

I'm doing that too!

This is a new chapter

Take home #0

This is a take home message

1. Terminology

Replicable, Robust, Reproducible, Generalisable

The Turing Way Community, & Scriberia, 2022 (Zenodo). Illustrations from The Turing Way (CC-BY 4.0)

Guaranteeing reproducibility is important for "reusable, transparent" research.

2. Reproducibility crisis

Where does it come from?

2005: "metascience" gets its name

2010s:

- Failed attempts to reproduce core concepts of social psychology and biomedical research

- Studies on p-hacking and questionable research practices

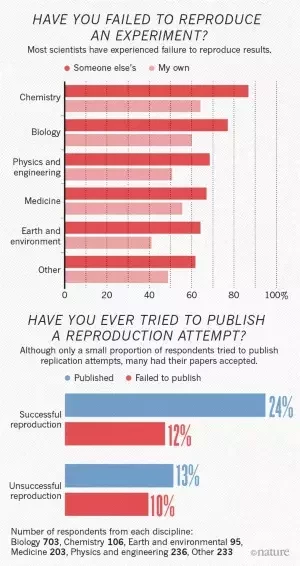

2016: Survey by Nature¹: 70% of researchers failed to reproduce others' results, 50%+ failed to reproduce their own

1. Baker 2016 (Nature)

Causes of "ir-reproducibility"

- Unavailable data / code / materials

- Lack of sufficient information / procedures

- Human mistakes / bugs

- "Novelty over reproducibility" culture

- Different analysis environments (OS / libraries / versions)

- Fraud

Really reproducible?

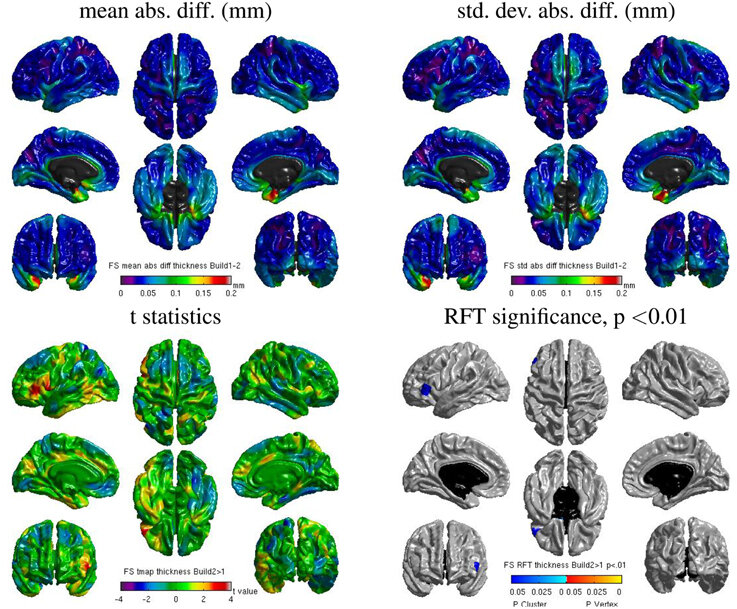

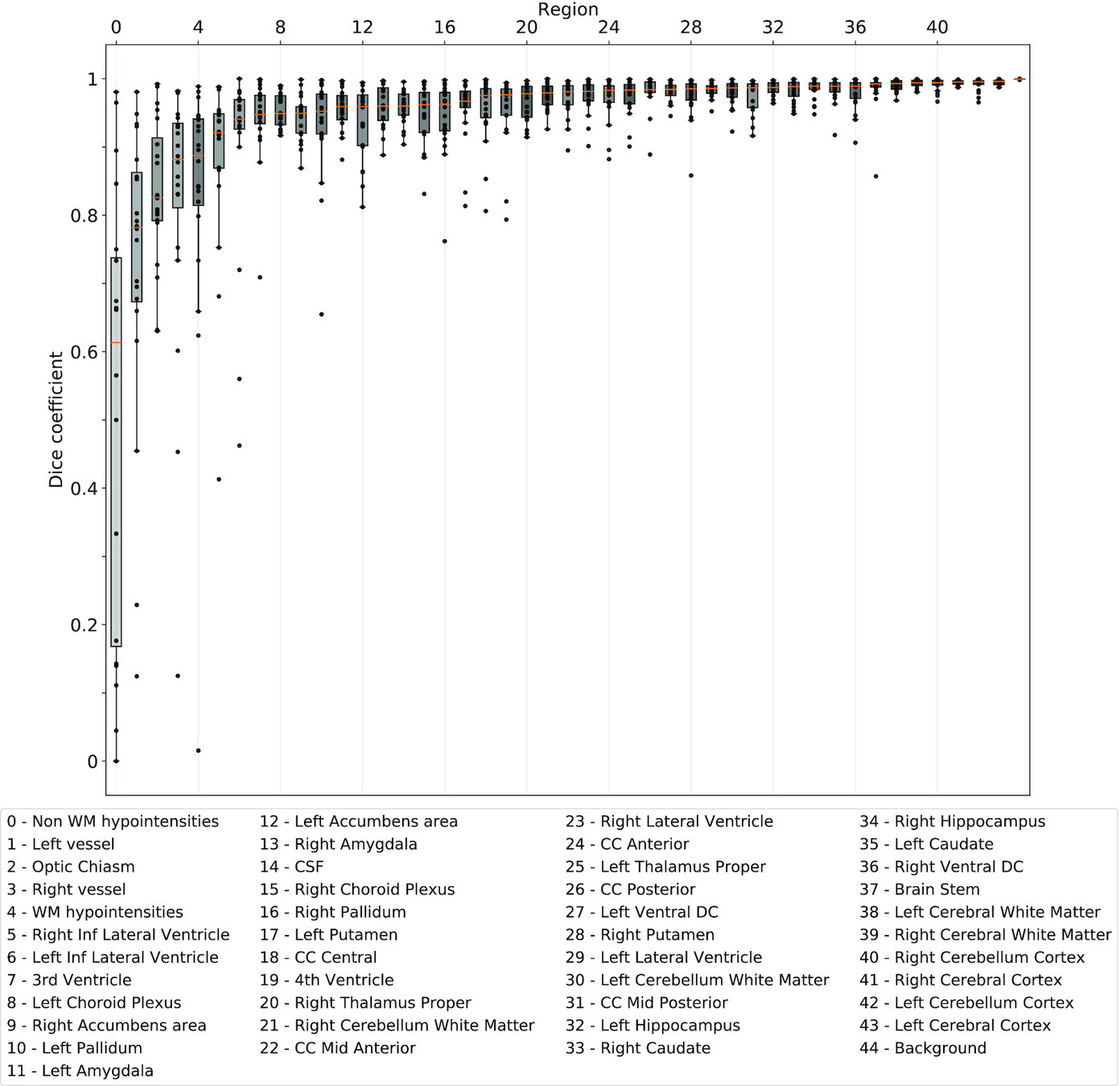

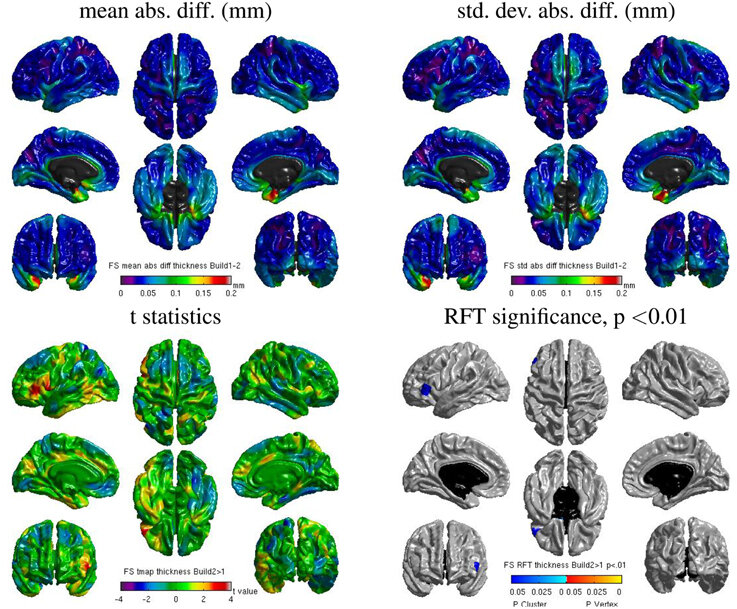

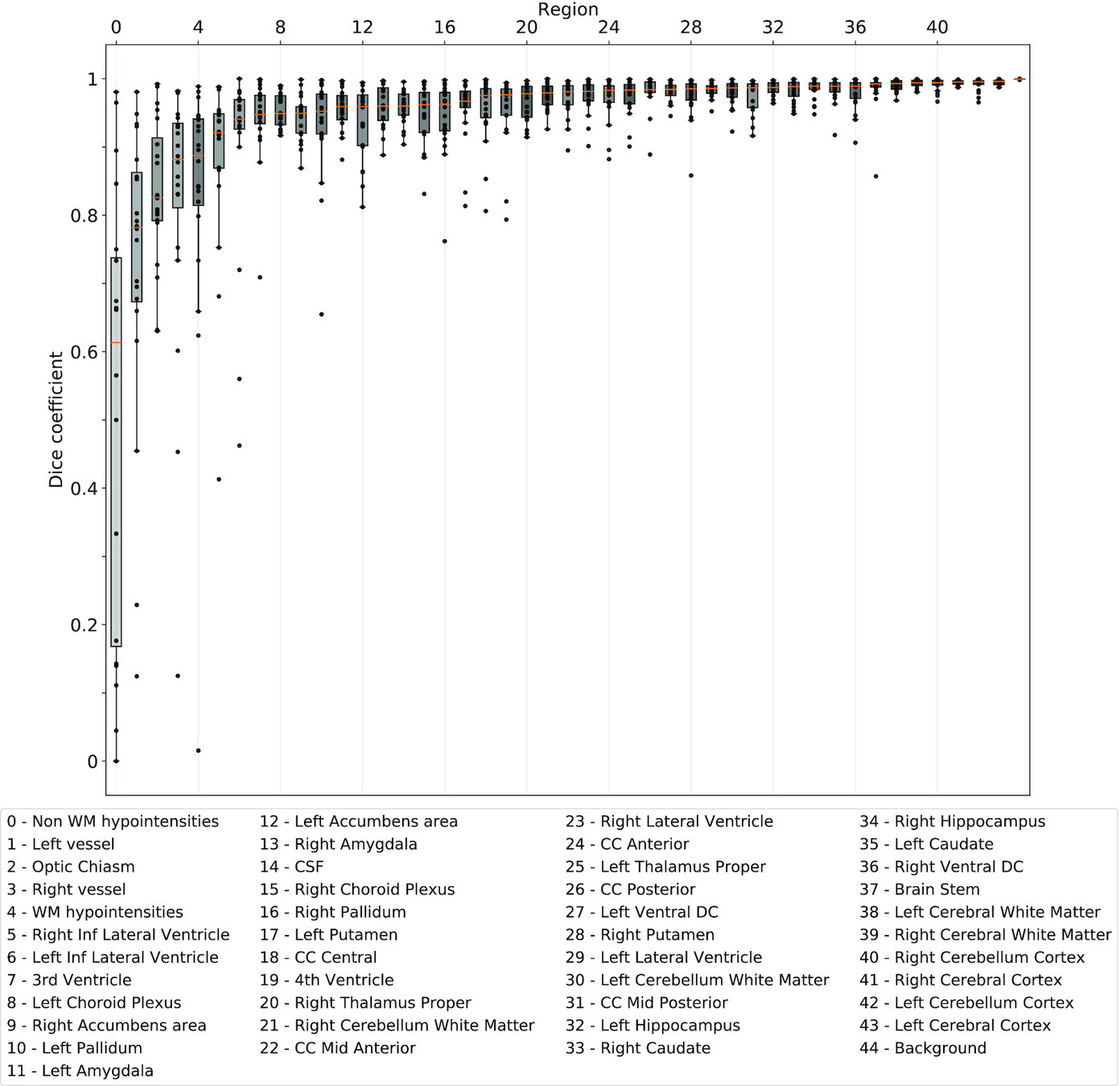

Same hardware, two Freesurfer builds (different glibc version)

Difference in estimated cortical thickness.¹

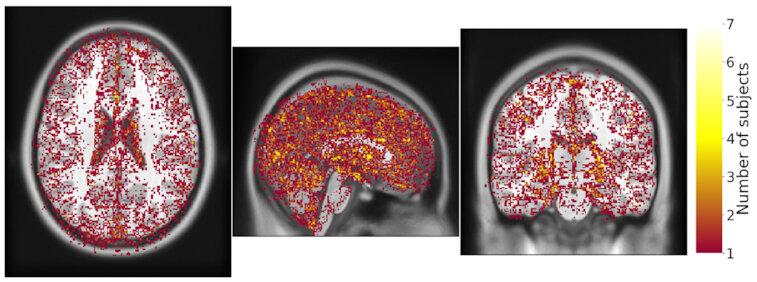

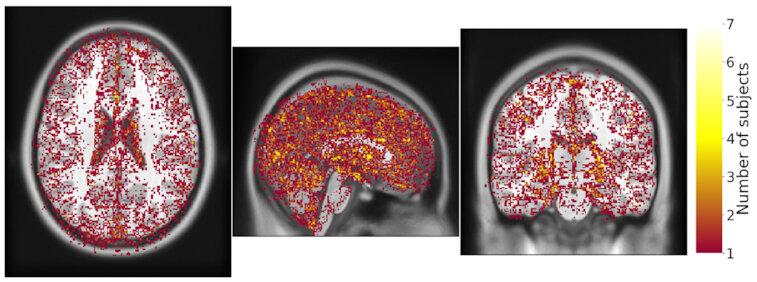

Same hardware, same FSL version, two glibc versions

Difference in estimated tissue segmentation.²

Same hardware, two Freesurfer builds (two glibc versions)

Difference in estimated parcellation.²

1. Glatard et al., 2015 (Front. Neuroinform.) 2. Ali et al., 2021 (Gigascience) 3. Muller (YouTube, Veritasium)

Replicability/Robustness/Generalisation crisis

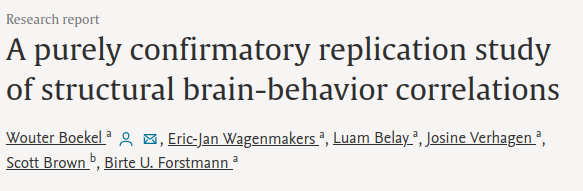

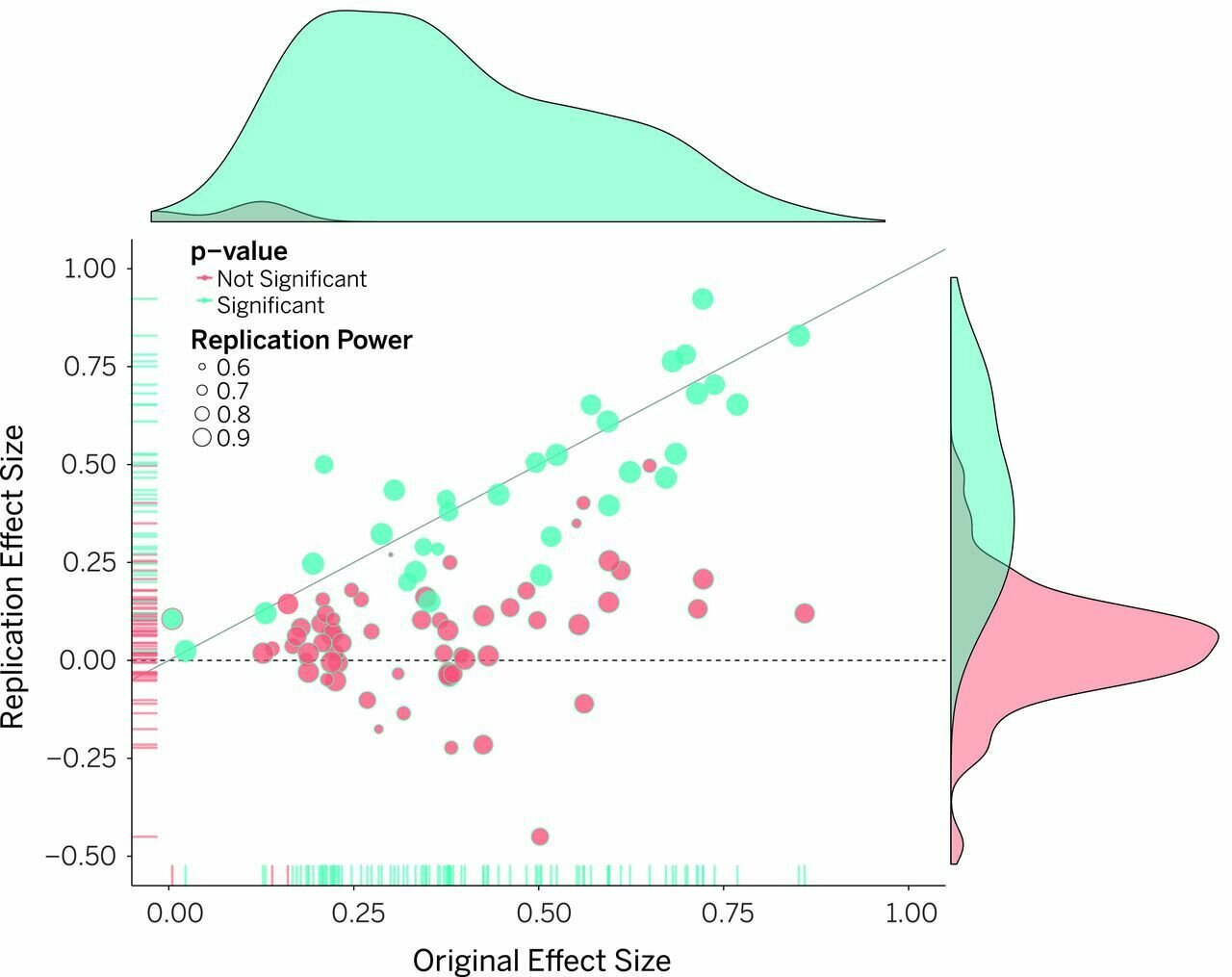

3. Robustness and replicability crisis

Really Robust?

Causes of "un-robustness"

- Different (valid) algorithmic implementations

- "Researcher degrees of freedom"

- Lack of "real underlying truth"

- Bias towards "positive" methods

Causes of "ir-replicability"

- Unclear statistical heterogeneity

- Lack of power analyses

- Insufficient data collection

- Data dredging / selection

- Bias towards "positive" results

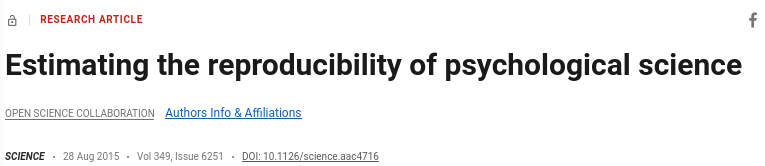

4. Generalisation crisis

The mass-extinction-level issue

Aarts et al. 2015 (Science)

What does failure to generalise tell us about hypotheses and scientific facts?

5. Procedural crisis

Procedural crisis

- Persons first, communities after

- Objective = not-human

- "Exploratory" = lack of procedures

- Hypotheses driven by results (HARKing)

6. Professional and systemic crisis

Professional and systemic crisis

- Lack of resources = Competition

- "Publish or perish" culture of academia

- "Null results" rejection → Bias toward "positive results"

- P-hacking, data dredging, data fishing, HARKing, ...
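Why testing many outcomes and reporting only the "significant" one inflates false positives can be shown with a small simulation. This is a minimal sketch, not taken from the talk: the function names, the group size of 20, the number of simulated studies, and the use of a z-test with known unit variance are all assumptions chosen to keep the example short.

```python
import random
from statistics import NormalDist


def p_value_two_groups(rng, n):
    """Two-sided z-test p-value for two groups drawn from the SAME N(0, 1)."""
    a = [rng.gauss(0, 1) for _ in range(n)]
    b = [rng.gauss(0, 1) for _ in range(n)]
    # The variance is known to be 1, so the difference of means has SD sqrt(2 / n).
    z = (sum(a) / n - sum(b) / n) / (2 / n) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(n_outcomes, n_studies=2000, n=20, alpha=0.05, seed=0):
    """Share of null studies that find at least one 'significant' outcome."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        p_values = [p_value_two_groups(rng, n) for _ in range(n_outcomes)]
        if min(p_values) < alpha:  # report only the best-looking outcome
            hits += 1
    return hits / n_studies


for k in (1, 5, 10):
    # Simulated rate next to the analytic value 1 - (1 - alpha)^k.
    print(k, false_positive_rate(k), round(1 - 0.95 ** k, 3))
```

There is no real effect in any simulated study, so every "significant" result here is a false positive. With independent outcomes the expected rate is 1 - 0.95^k, which the simulated values should approximate.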

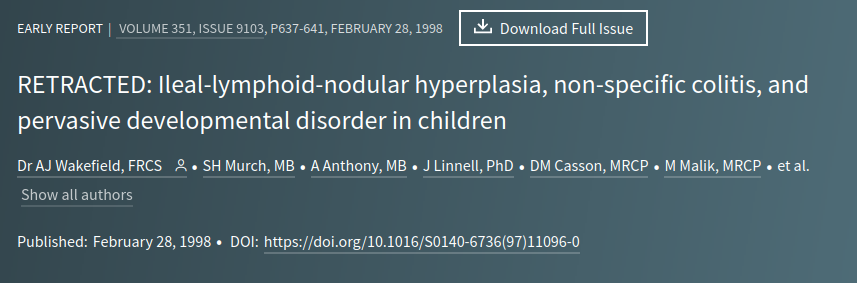

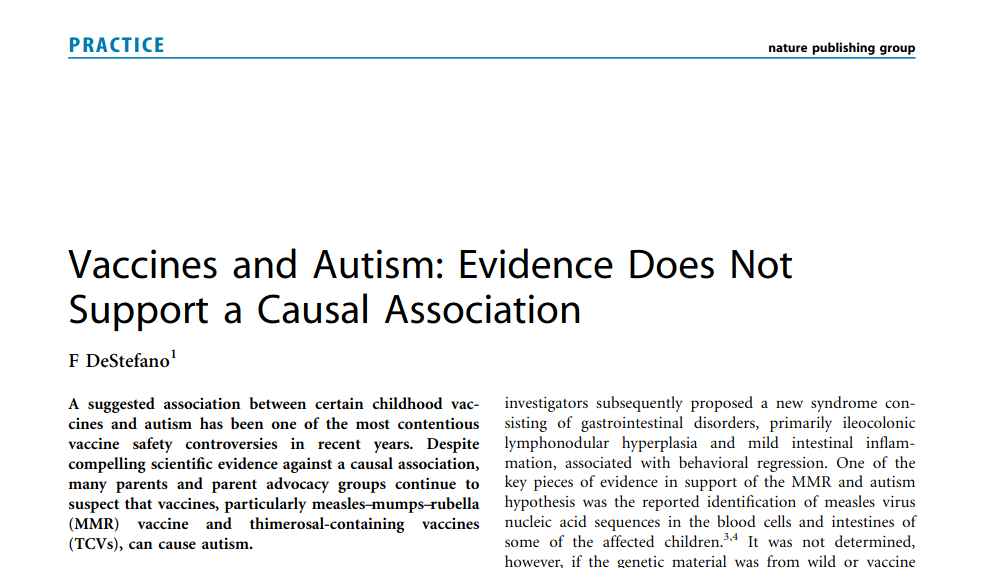

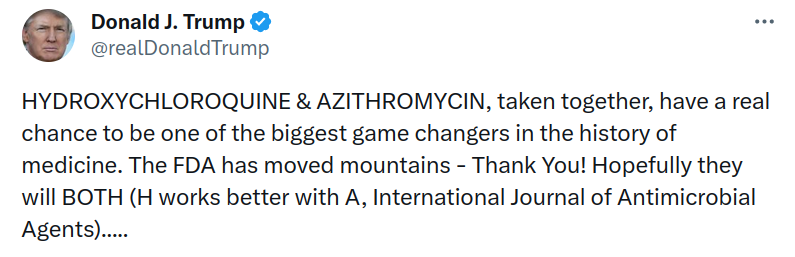

7. What are the risks?

The risks of non-replicable science

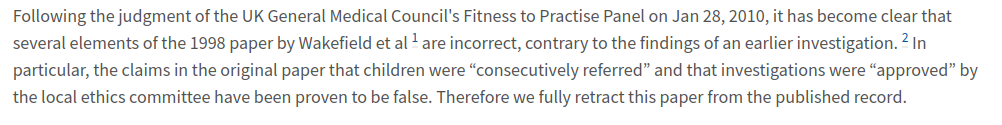

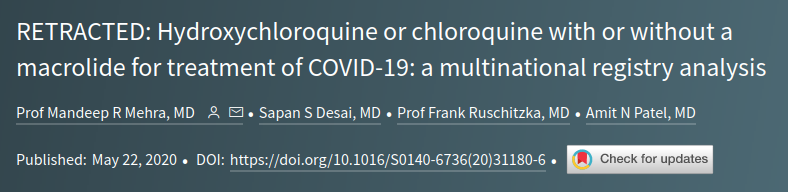

- Erratum

- Retraction

- Misinformation

- Public trust

- Impact

Erratum

Retraction

We are retracting this article due to concerns with Figure 5. In Figure 5A, there is a concern that the first and second lanes of the HIF-2α panel show the same data, [...], despite all being labeled as unique data. [...] We believe that the overall conclusions of the paper remain valid, but we are retracting the work due to these underlying concerns about the figure. Confirmatory experimentation has now been performed and the results can be found in a preprint article posted on bioRxiv [...]

8. What are the solutions?

The solutions against non-replicable science

Take home #1: reproducibility

Be clear in reporting your methods, share your protocols, use metadata!

Share your data, code, and environment

Plan your study towards sharing

Insert an element of replication or generalisation in your studies
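Sharing the environment can start with something as small as a machine-readable snapshot saved next to the results. The sketch below is one possible way to do it using only the Python standard library; the function name `environment_snapshot` and the package names passed to it are illustrative assumptions, not part of the talk.

```python
import json
import platform
import sys
from importlib import metadata


def environment_snapshot(packages=()):
    """Collect OS, interpreter, C library, and package versions as a plain dict."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    # libc_ver() may return empty strings on systems where it cannot tell.
    libc_name, libc_version = platform.libc_ver()
    return {
        "os": platform.platform(),
        "machine": platform.machine(),
        "python": sys.version.split()[0],
        "libc": f"{libc_name} {libc_version}".strip(),
        "packages": versions,
    }


# Hypothetical package list: replace with what the analysis actually imports.
snapshot = environment_snapshot(["numpy", "scipy"])
print(json.dumps(snapshot, indent=2))
```

A snapshot like this does not make an analysis reproducible by itself, but it records the OS, library, and version information that the slides list among the causes of irreproducibility. Containers or lock files go further.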

Take home #2: robustness

Don't reinvent the wheel: find what is available to use, contribute to open software

Join / follow scientific communities

Disclaim your choices, go "multiverse"
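Going "multiverse" means running every defensible combination of analysis choices and reporting all of the results, not only one. A toy sketch follows. The data are made up for illustration, and the two choices (outlier rule, summary statistic) are assumptions standing in for the real forks of an actual pipeline.

```python
from itertools import product
from statistics import mean, median, stdev

# Hypothetical measurements (invented for this example), with one extreme value.
data = [4.8, 5.1, 5.0, 4.9, 5.2, 5.0, 9.5]


def drop_outliers(values, n_sd):
    """Keep values within n_sd standard deviations of the mean (None keeps all)."""
    if n_sd is None:
        return list(values)
    centre, spread = mean(values), stdev(values)
    return [v for v in values if abs(v - centre) <= n_sd * spread]


# Two analysis forks, each with several equally defensible options.
outlier_rules = {"keep all": None, "2 SD": 2.0, "3 SD": 3.0}
estimators = {"mean": mean, "median": median}

multiverse = {}
for (rule_name, n_sd), (est_name, est) in product(
    outlier_rules.items(), estimators.items()
):
    multiverse[(rule_name, est_name)] = est(drop_outliers(data, n_sd))

for choice, value in multiverse.items():
    print(choice, round(value, 3))
```

Reporting the whole table shows how much the conclusion depends on choices that are often left undisclosed. Here the mean shifts with the outlier rule while the median does not.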

Take home #3: replication

Plan a power analysis

If you can't match a power analysis, piggyback on other datasets

If you can't run it, read the literature, do meta-analyses
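As a rough idea of what planning a power analysis involves, the sketch below estimates the sample size per group for comparing two group means. It assumes a two-sided test, equal group sizes, and the normal approximation n = 2 * ((z_(1-alpha/2) + z_power) / d)^2, where d is the standardised effect size (Cohen's d). The function name is illustrative, and dedicated tools that use the t distribution give slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided, two-group comparison."""
    z = NormalDist().inv_cdf  # quantile function of the standard normal
    n = 2 * ((z(1 - alpha / 2) + z(power)) / effect_size) ** 2
    return ceil(n)


for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: about {n_per_group(d)} participants per group")
```

The required sample size grows with the inverse square of the effect size. Halving the expected effect roughly quadruples the number of participants, which is why small effects are so often studied with underpowered samples.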

Take home #4: procedure

Plan alternative/concurrent hypotheses, plan a "risk factor analysis"

Pre-register or, even better, plan and submit a registered report

Remember we are humans: disclaim your biases

Take home #5: system

Publish all results, either alone (arXiv!) or as a part of a bigger picture

Put the science first, put yourself second

Bring back to your lab what you learn this week

The solutions against non-replicable science

Last take home message:

What you do in your scientific work has an impact on society.

It's not about you.

Remember that.

Thanks to...

...the organisers, for having me here

...the MIP:Lab @ EPFL

...the Physiopy contributors

...you for the (sustained) attention!

That's all folks!

smoia | @SteMoia | s.moia.research@gmail.com