Federica B. Bianco

University of Delaware

Physics and Astronomy

Biden School of Public Policy and Administration

Data Science Institute

NYU Center for Urban Science and Progress

Rubin Observatory LSST Science Collaborations Coordinator

Rubin LSST Transients and Variable Stars Science Collaborations Chair

Big Data in astronomy:

an overview

SOMACHINE 2020


this slide deck:

https://slides.com/federicabianco/bdastro

@fedhere

- historical perspective: BD context

- BD from astronomical surveys

  - optical

  - radio

  - gravitational waves

- space-based astronomy BD problems

- crowdsourcing approach

- time domain astronomy BD problems

- platforms

  - computational platforms in astro

  - computational platforms in other fields

  - VO

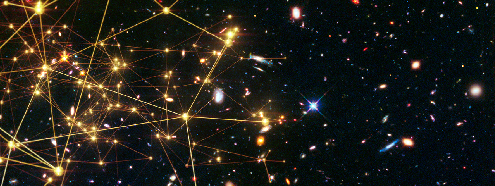

Historical perspective

1/6

Historical perspective

"Data larger than can be analyzed with typical tools"

"Data that does not fit in memory"

"Data that stresses the infrastructure"

John R. Mashey, Chief Scientist, SGI, mid-1990s

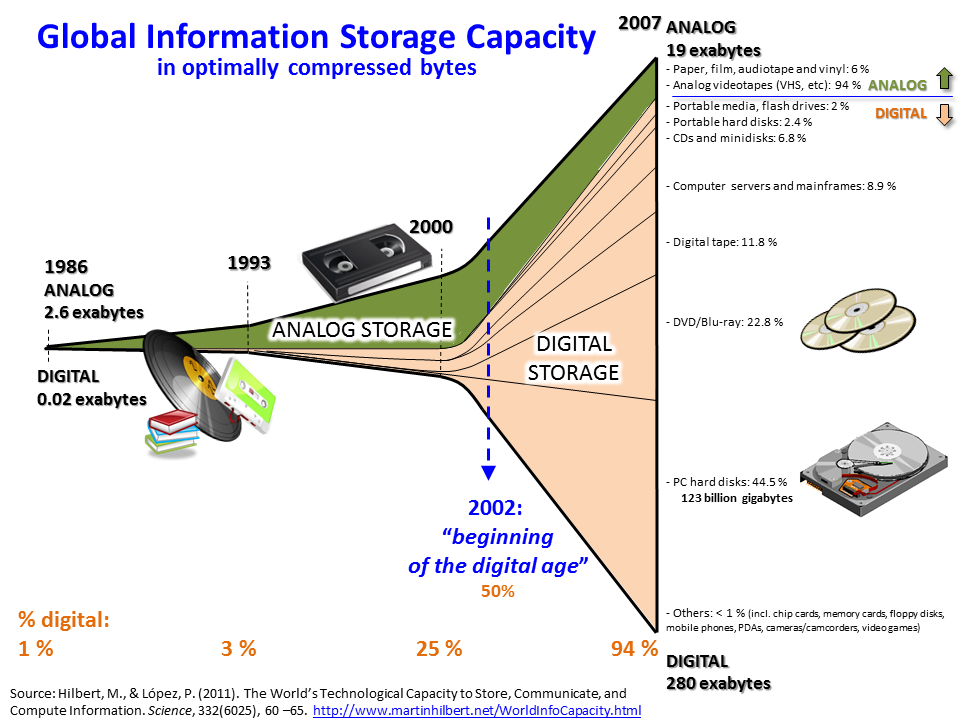

Historical perspective

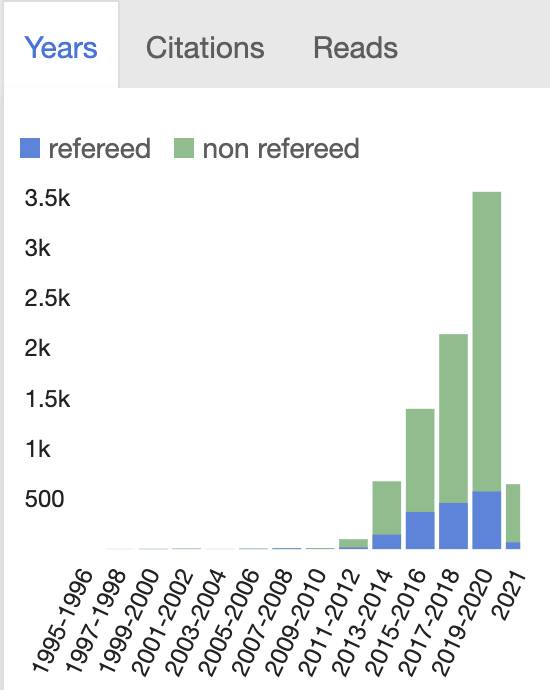

Big Data in astronomy papers

(source: ADS)


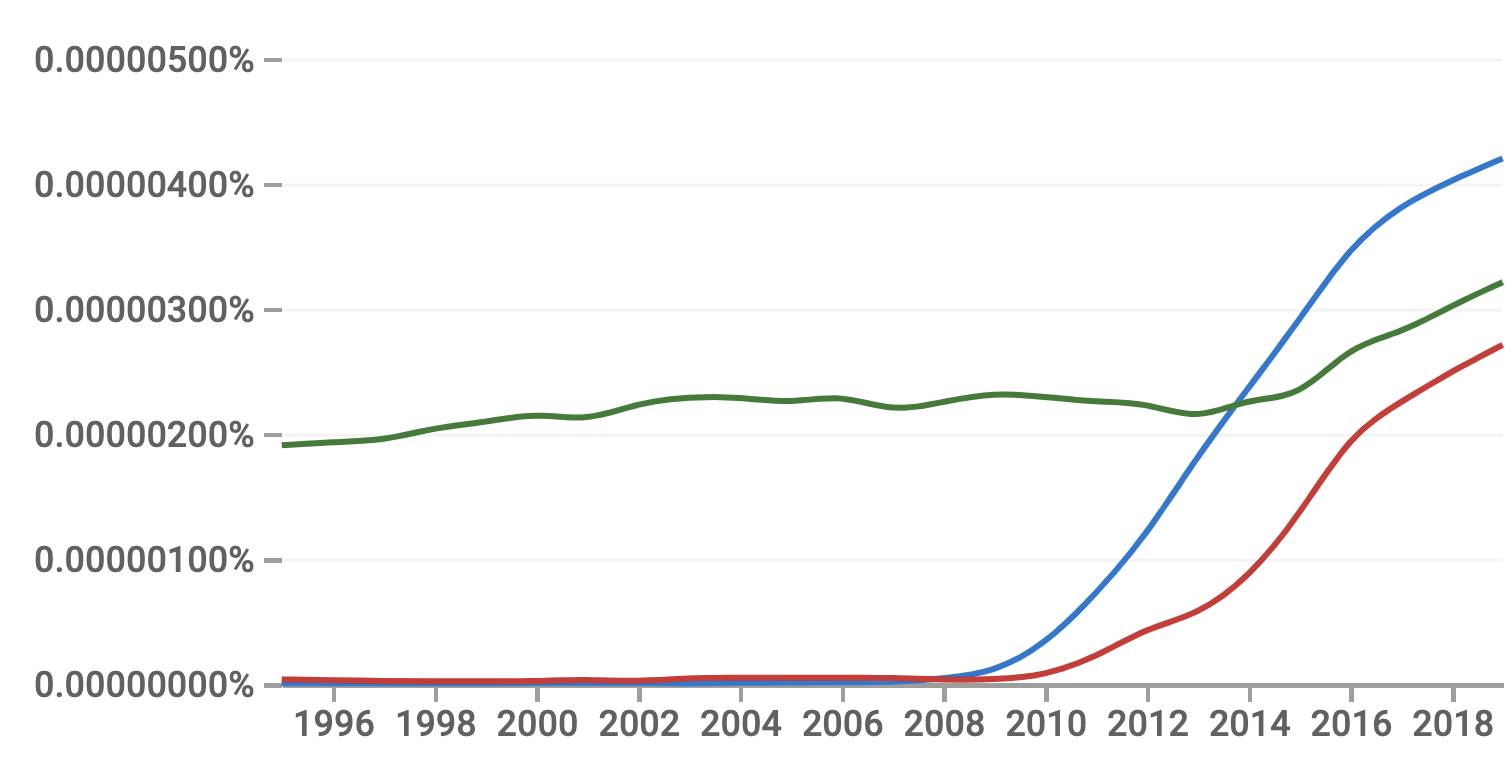

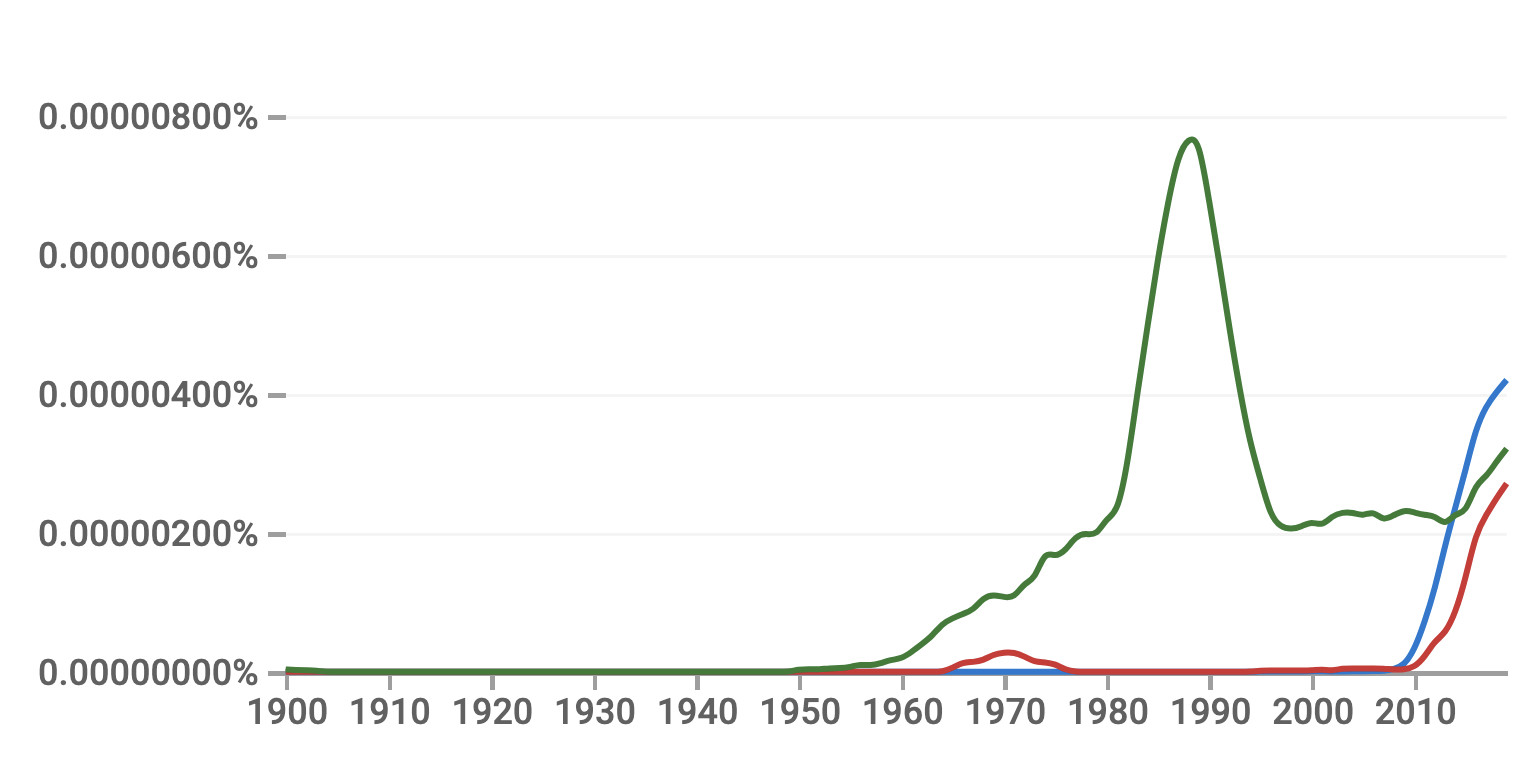

Historical perspective

[Figure: occurrence of the terms "Big Data", "Data Science" (scaled x30), and "Artificial Intelligence" (scaled x10) in the Google Books corpus, 1996-2016, https://books.google.com/ngrams]

Historical perspective

Gartner report 2001


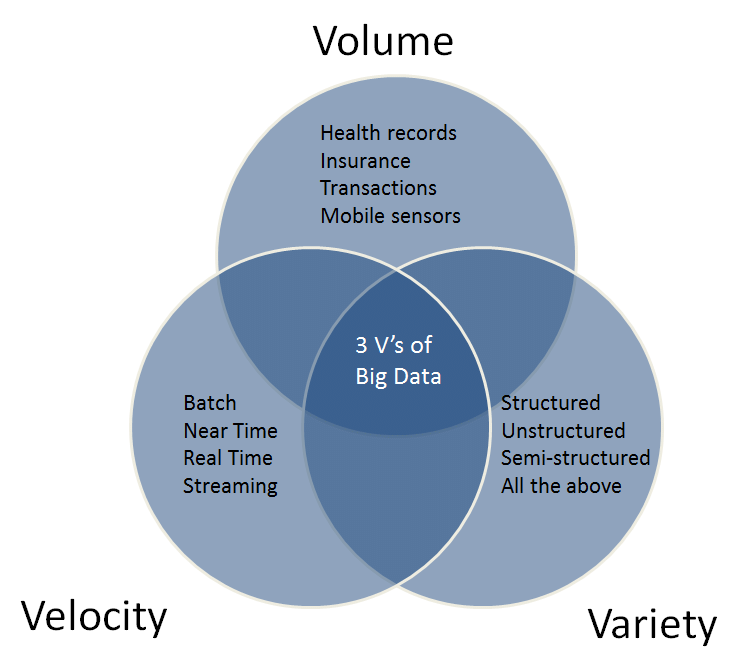

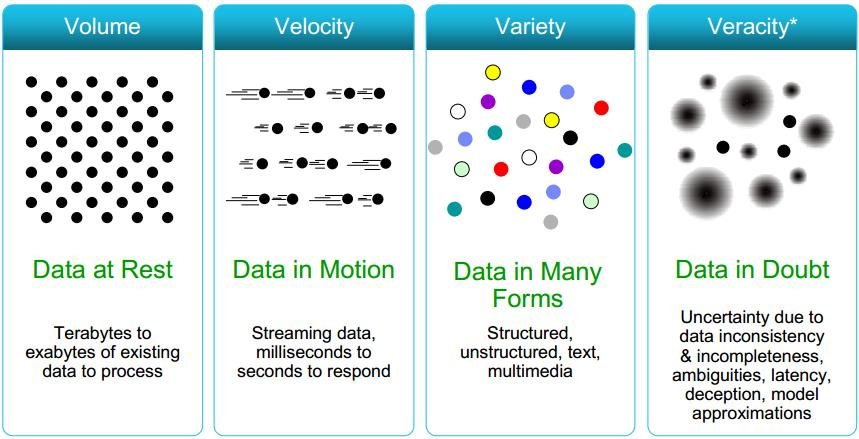

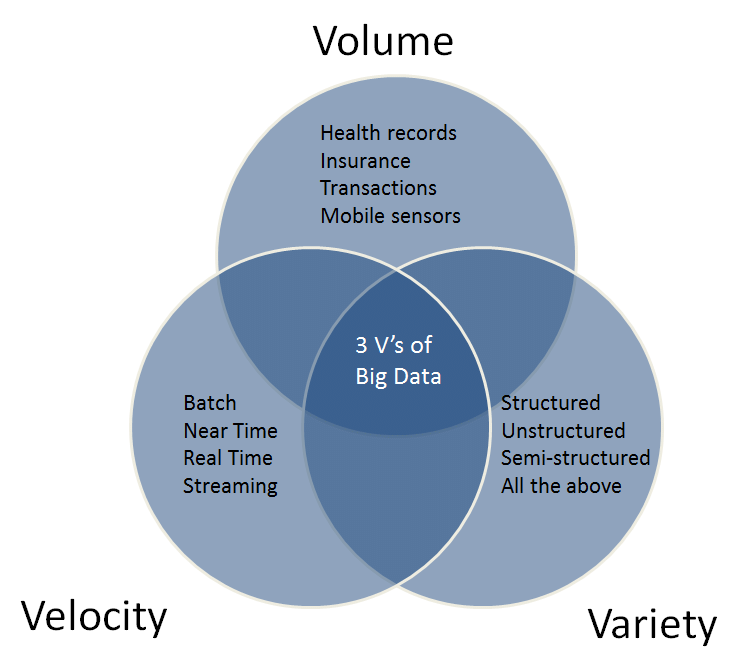

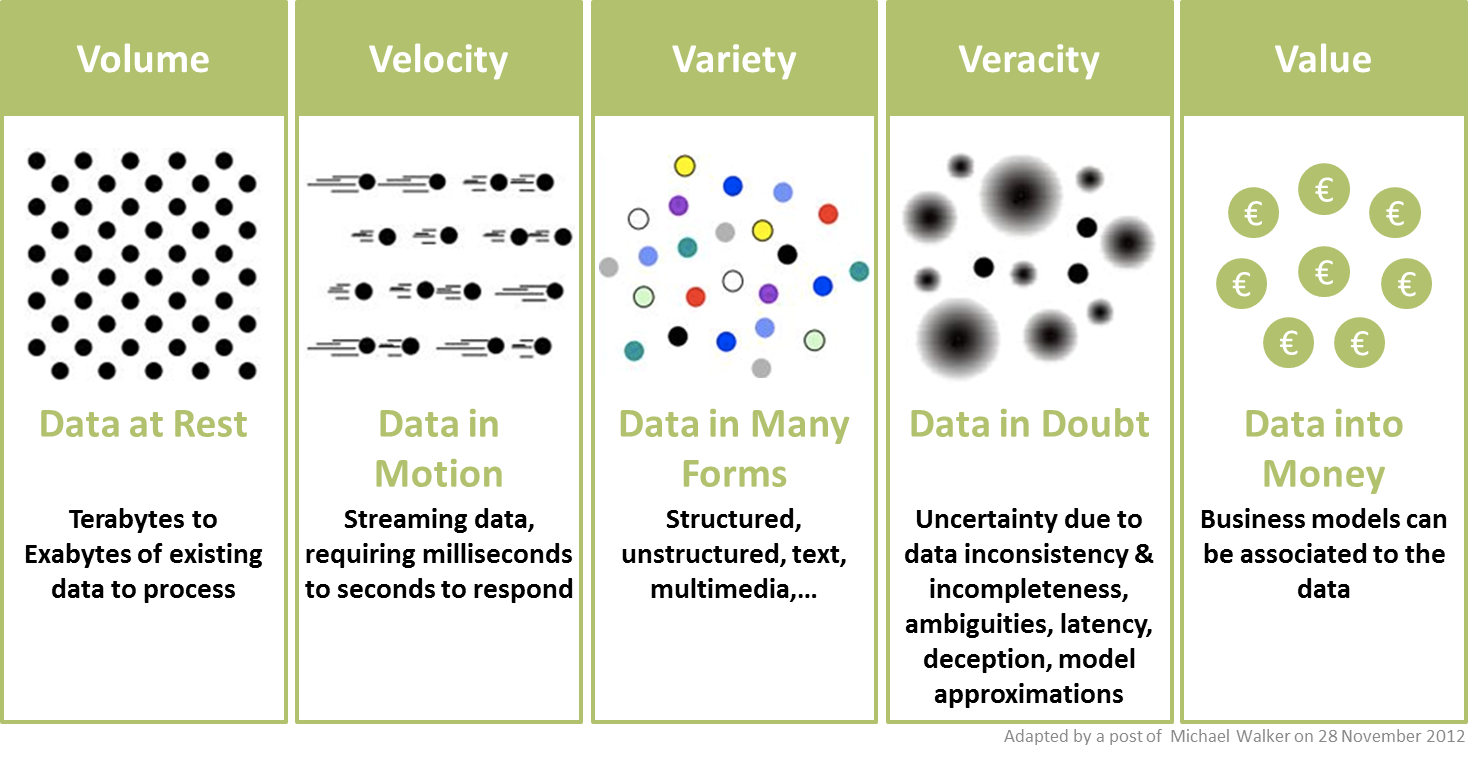

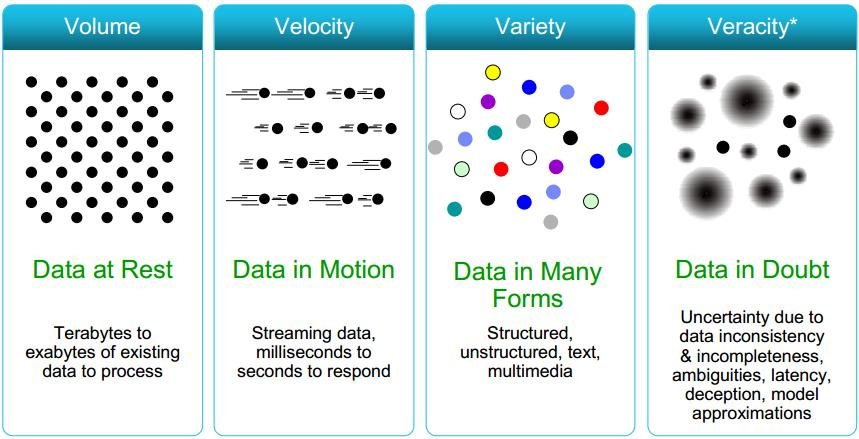

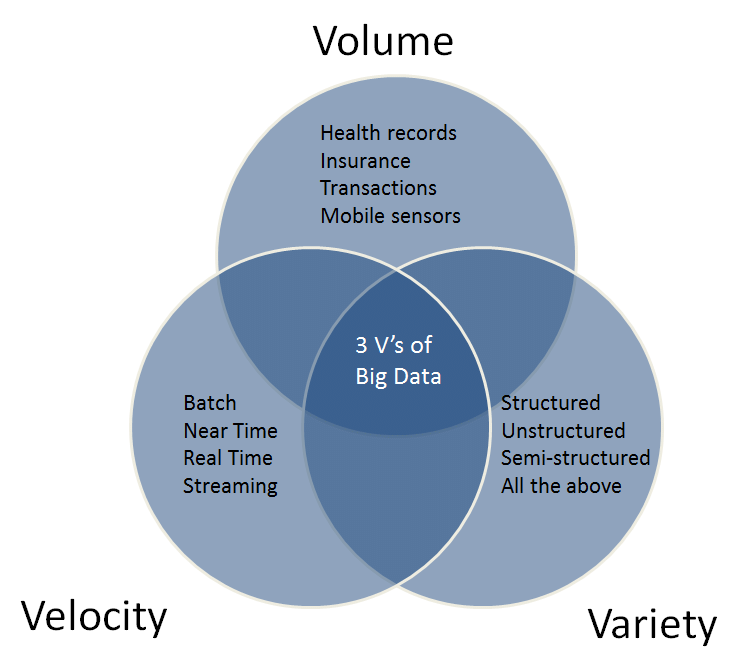

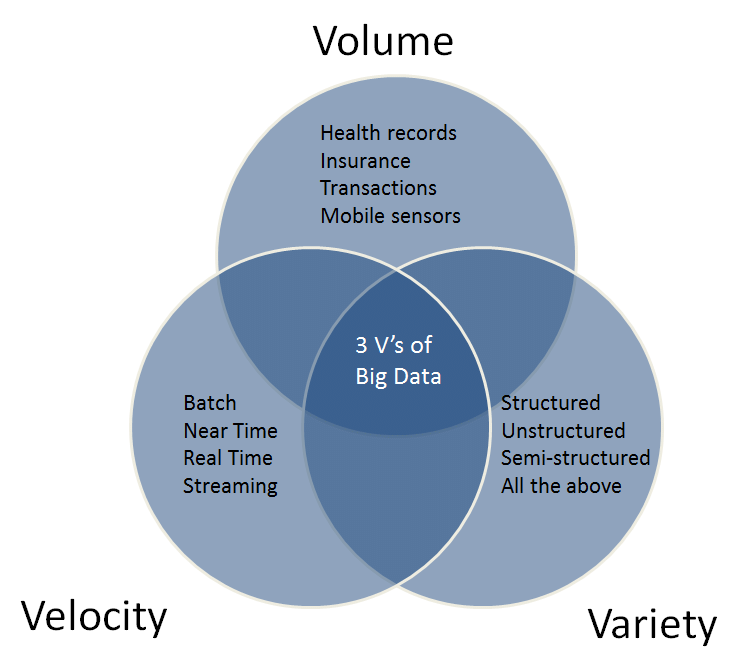

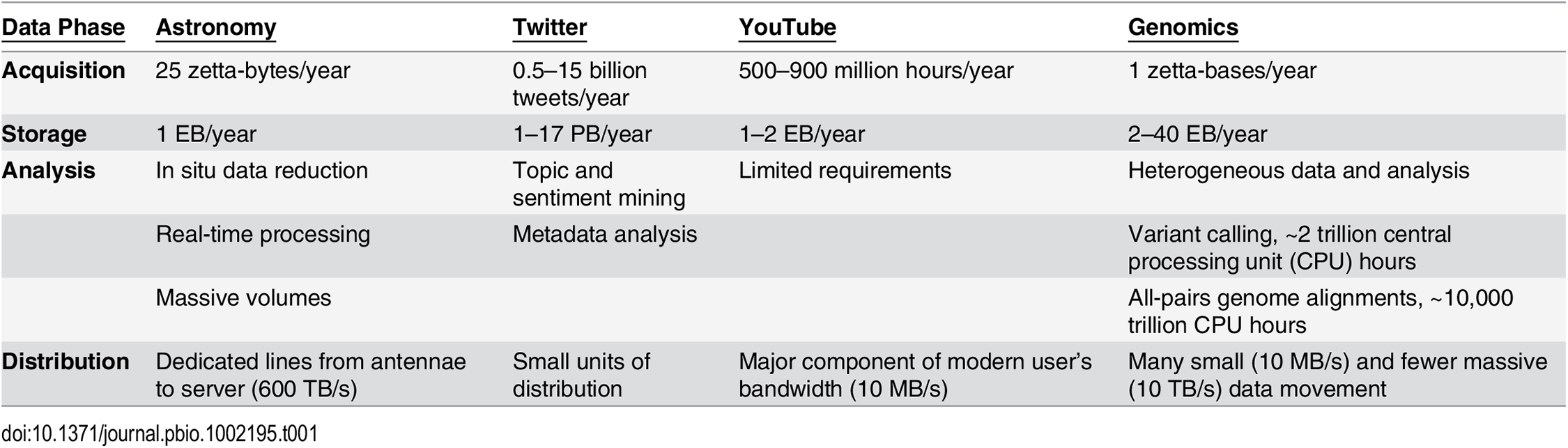

4-V of Big Data

V1: Volume

Number of bytes

Number of pixels

For catalogs: number of rows x number of columns in the data table

V2: Variety

Diverse science return from the same dataset.

Multiwavelength

Multimessenger

Images and spectra

V3: Velocity

Real-time analysis, edge computing, data transfer

V4: Veracity

This V refers to both data quality and availability (added in 2012).

Gartner report 2001

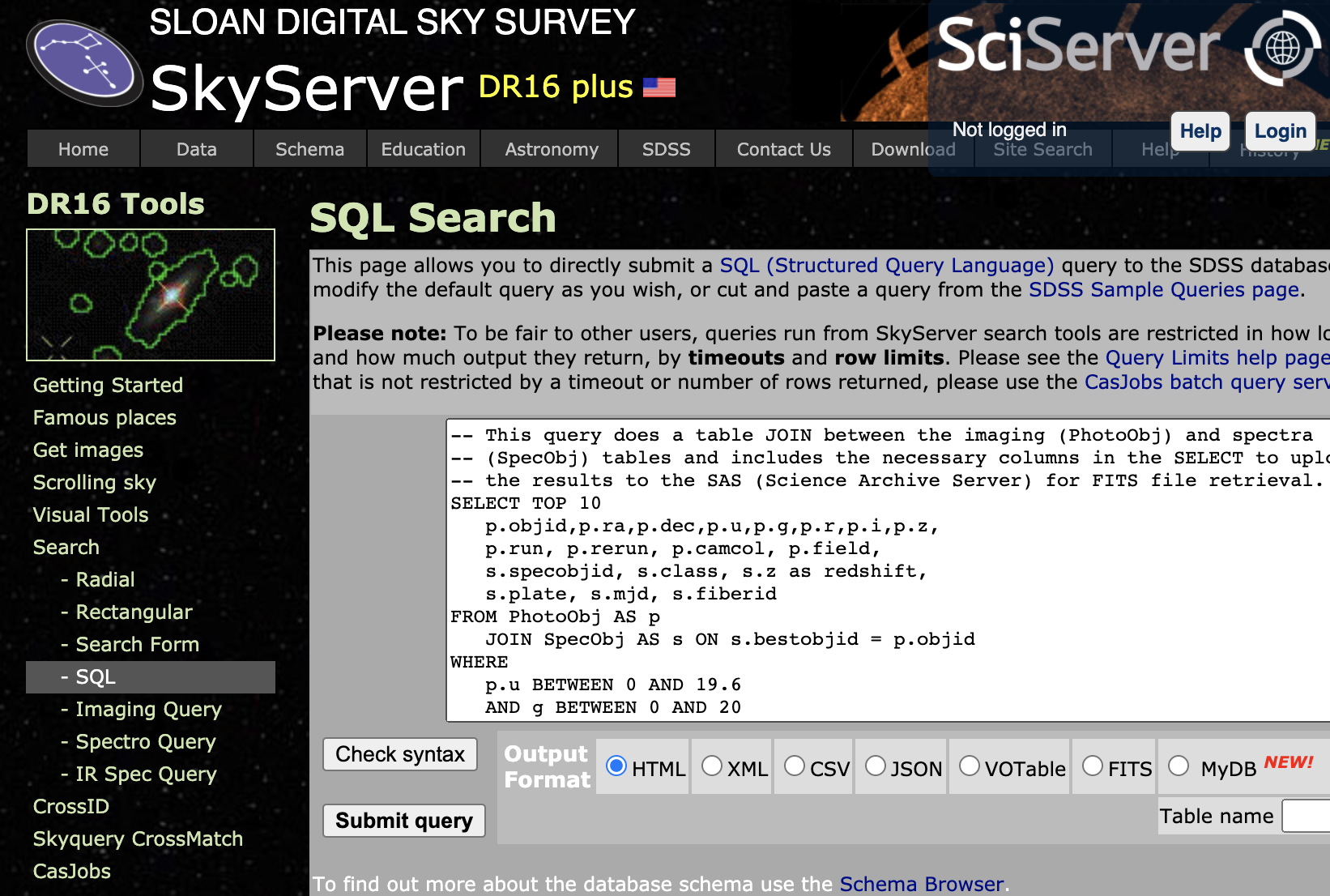

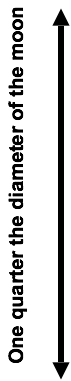

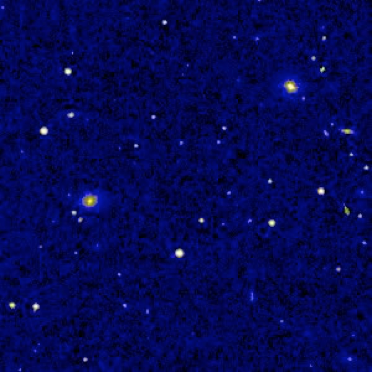

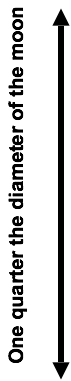

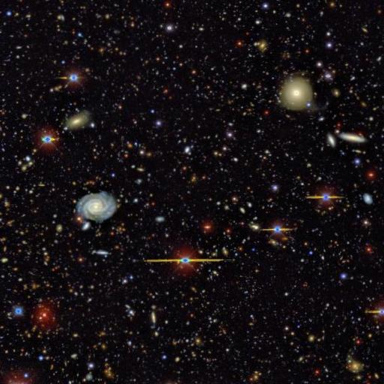

Exquisite image quality,

all over the sky,

over and over again.

SDSS image circa 2000

HSC image circa 2018

When you look at the sky at this resolution and this depth, everything is blended and everything is changing.

Gartner report 2001

Historical perspective

complexity

Astronomical data mainly include images, spectra, time-series data, and simulation data.

Most of the data are saved in catalogues or databases. The data from different telescopes or projects have their own formats, which makes it difficult to integrate data from various sources in the analysis phase. In general, each data item has a thousand or more features, which creates a high-dimensionality problem. Moreover, the data come in many types: structured, semi-structured, unstructured, and mixed.

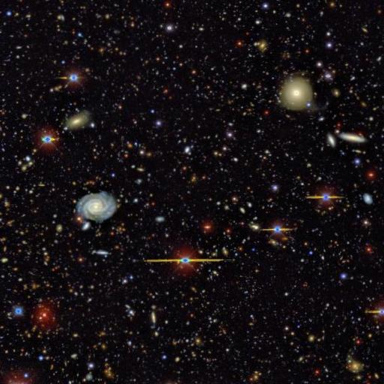

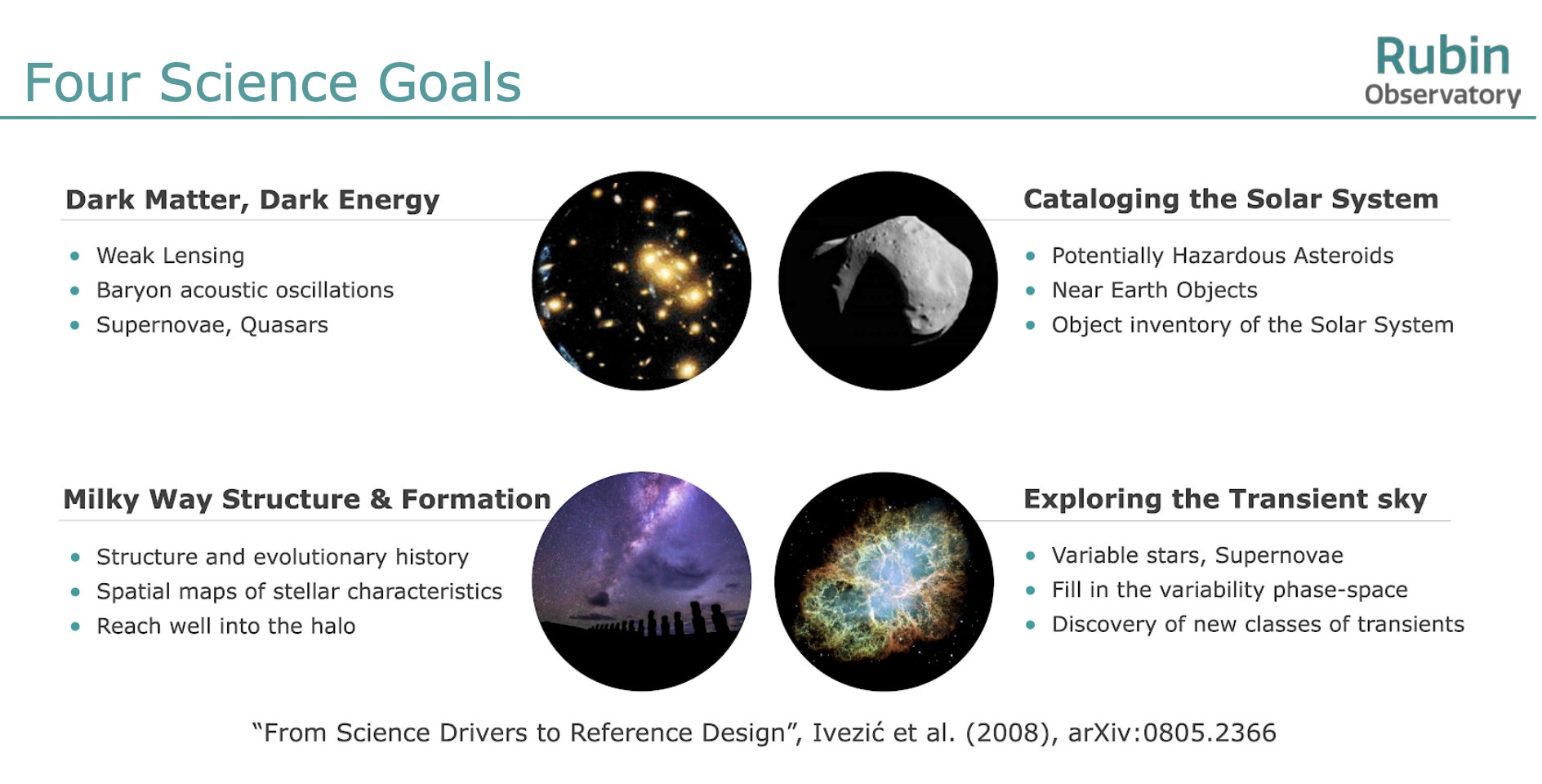

Rubin Observatory LSST

next talk by M Rawls!


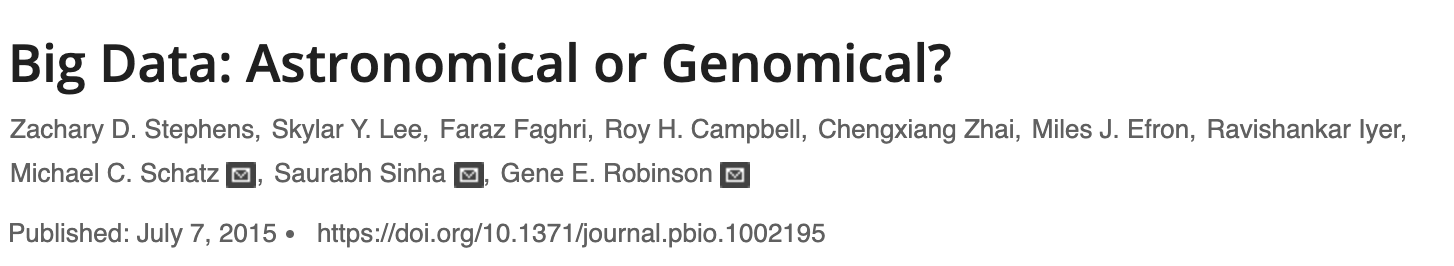

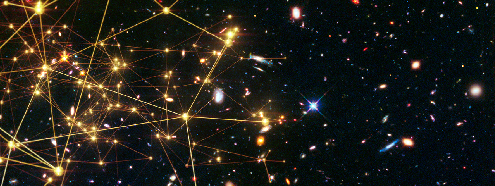

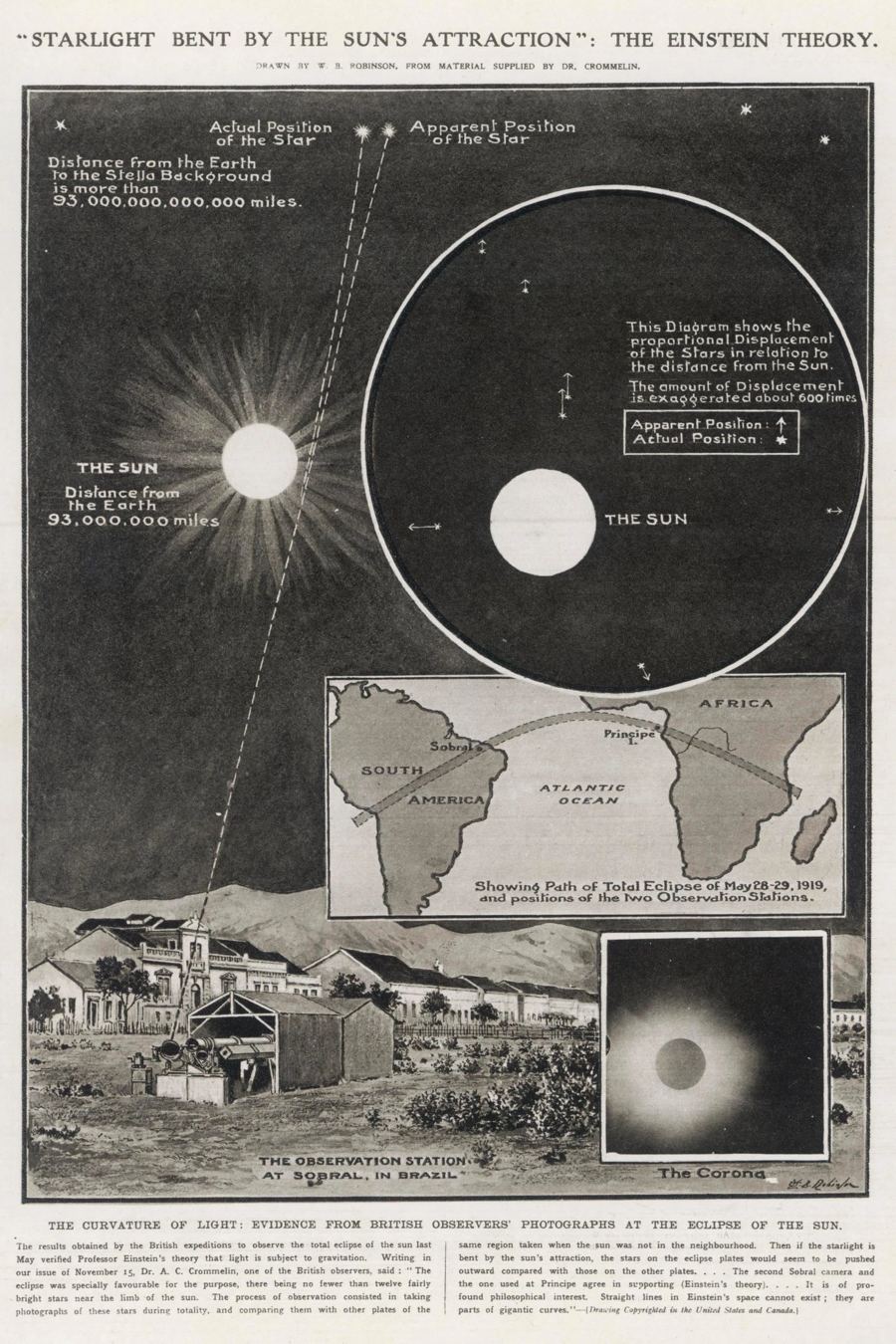

Galileo Galilei 1610

what drives scientific discovery in

astronomy

@fedhere

Galileo Galilei 1610

Following: Djorgovski

https://events.asiaa.sinica.edu.tw/school/20170904/talk/djorgovski1.pdf

Experiment driven

what drives

astronomy

@fedhere

Enistein 1916

what drives

astronomy

Theory driven | Falsifiability

Experiment driven

@fedhere

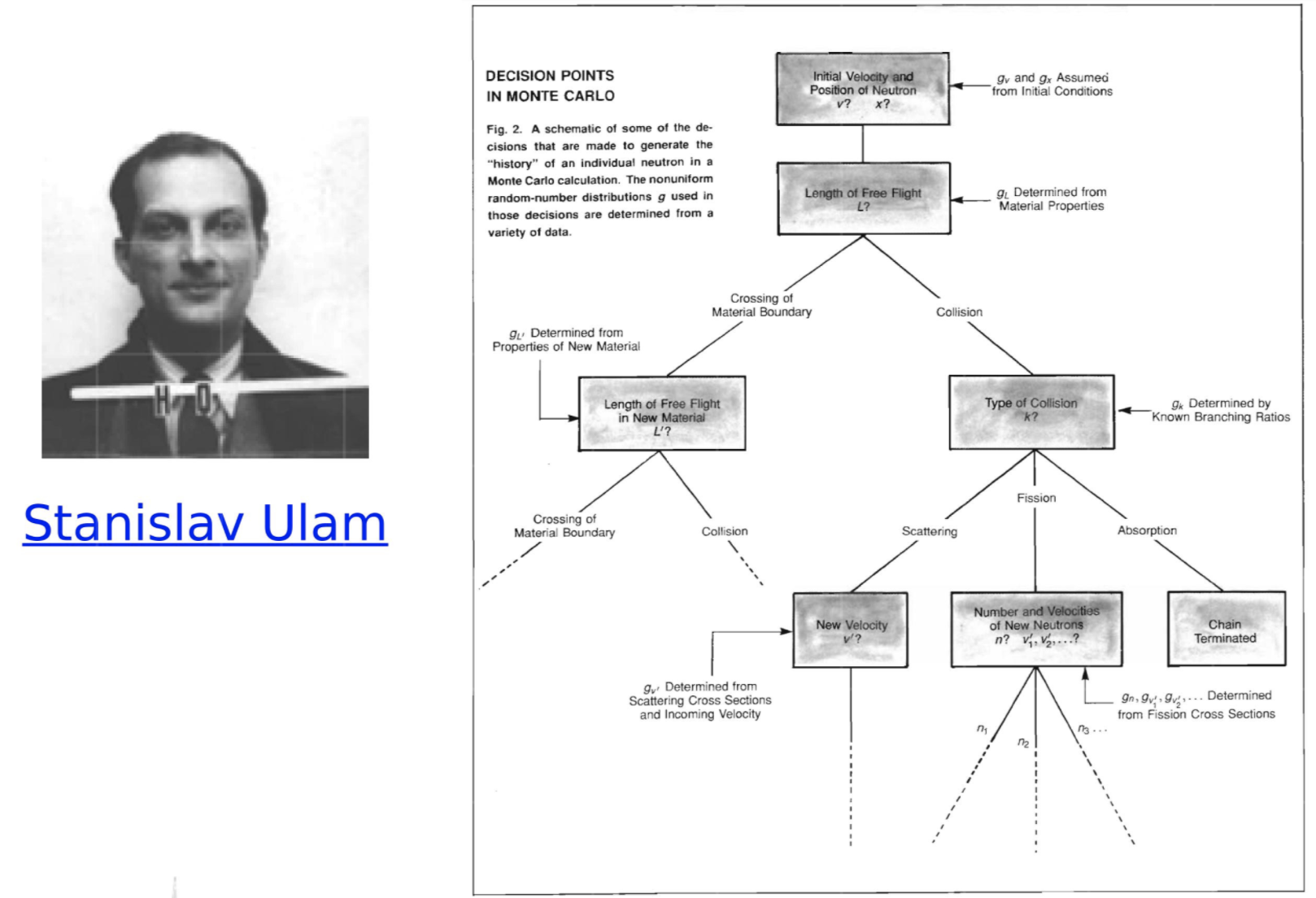

Ulam 1947

Theory driven | Falsifiability

Experiment driven

Simulations | Probabilistic inference | Computation

http://www-star.st-and.ac.uk/~kw25/teaching/mcrt/MC_history_3.pdf

what drives

astronomy

@fedhere

what drives

astronomy

Theory driven | Falsifiability

Experiment driven

Simulations | Probabilistic inference | Computation

Ulam 1947

@fedhere

what drives

astronomy

the 2000s

Theory driven | Falsifiability

Experiment driven

Simulations | Probabilistic inference | Computation

Data | Survey astronomy | Computation | pattern discovery

@fedhere

what drives

astronomy

Theory driven | Falsifiability

Experiment driven

Simulations | Probabilistic inference | Computation

Data | Survey astronomy | Computation | pattern discovery

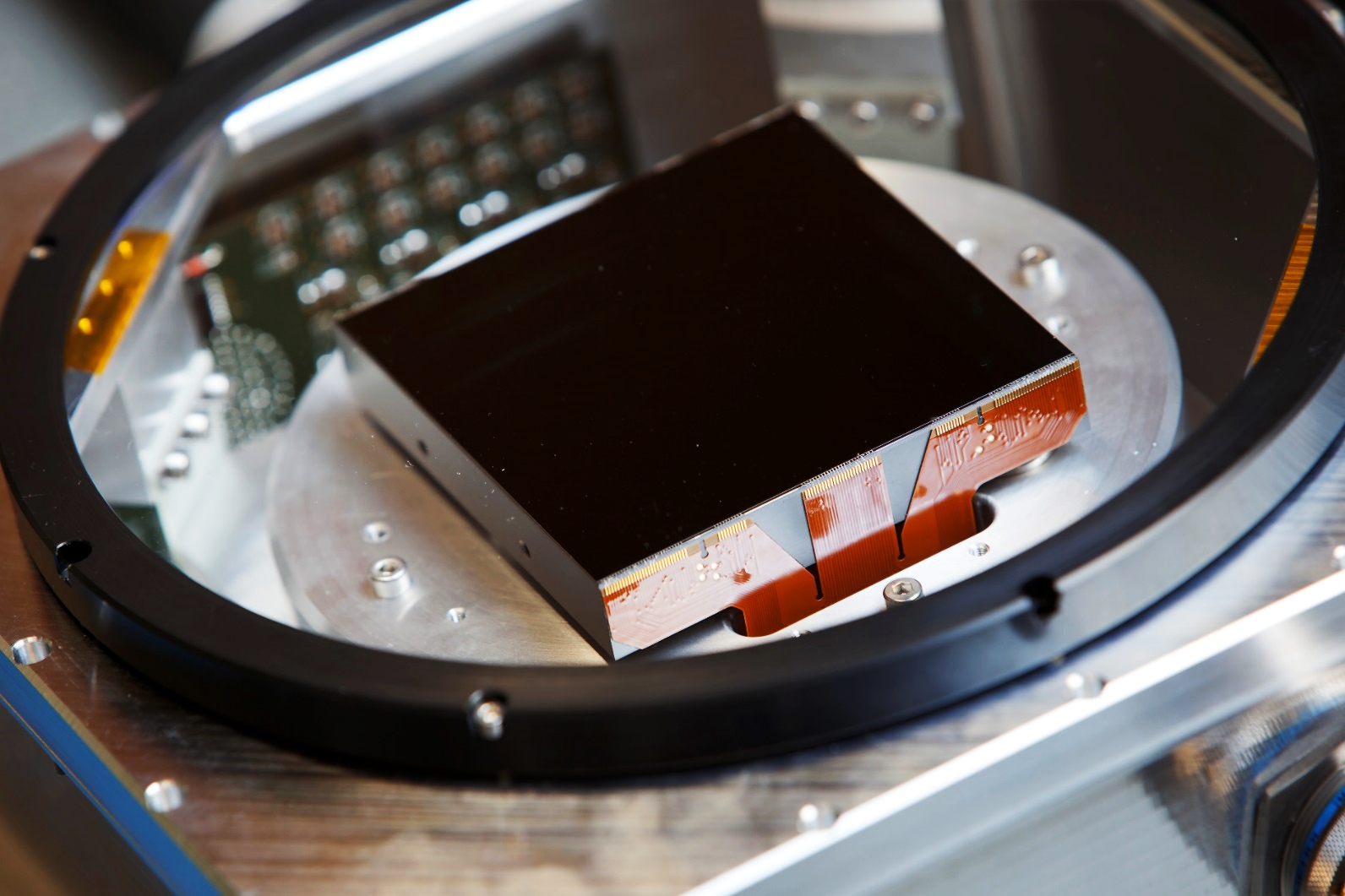

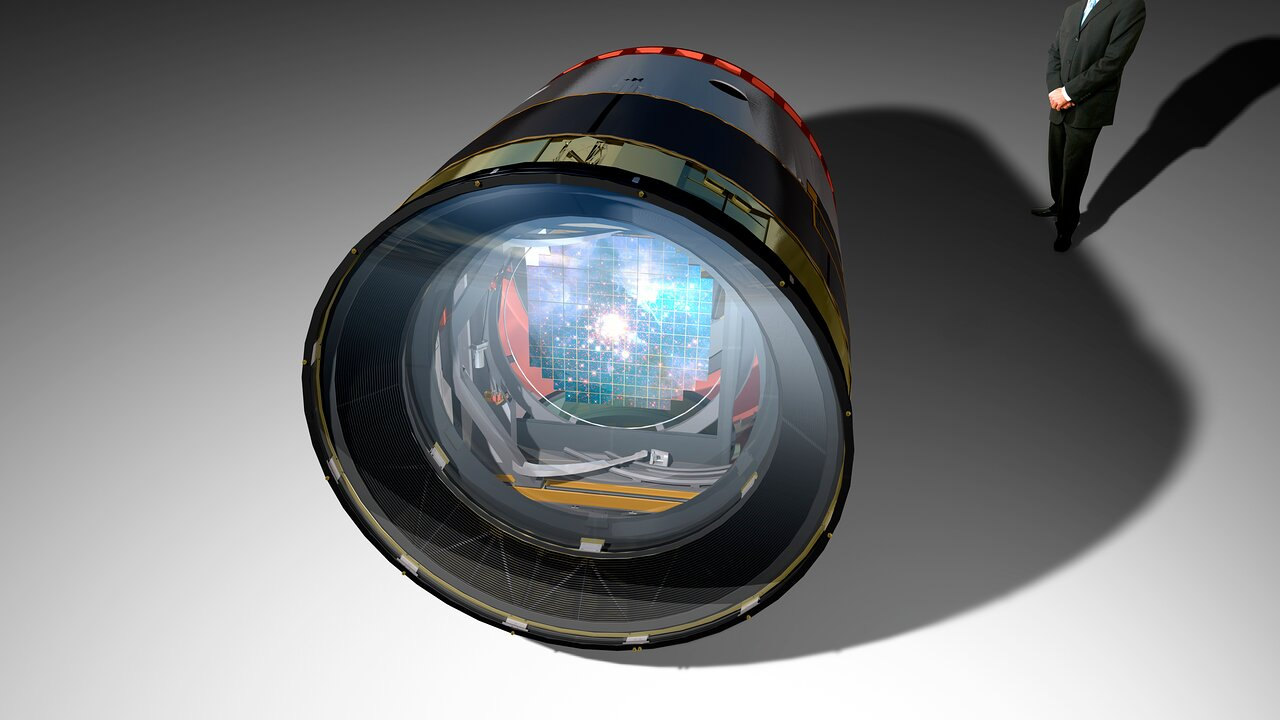

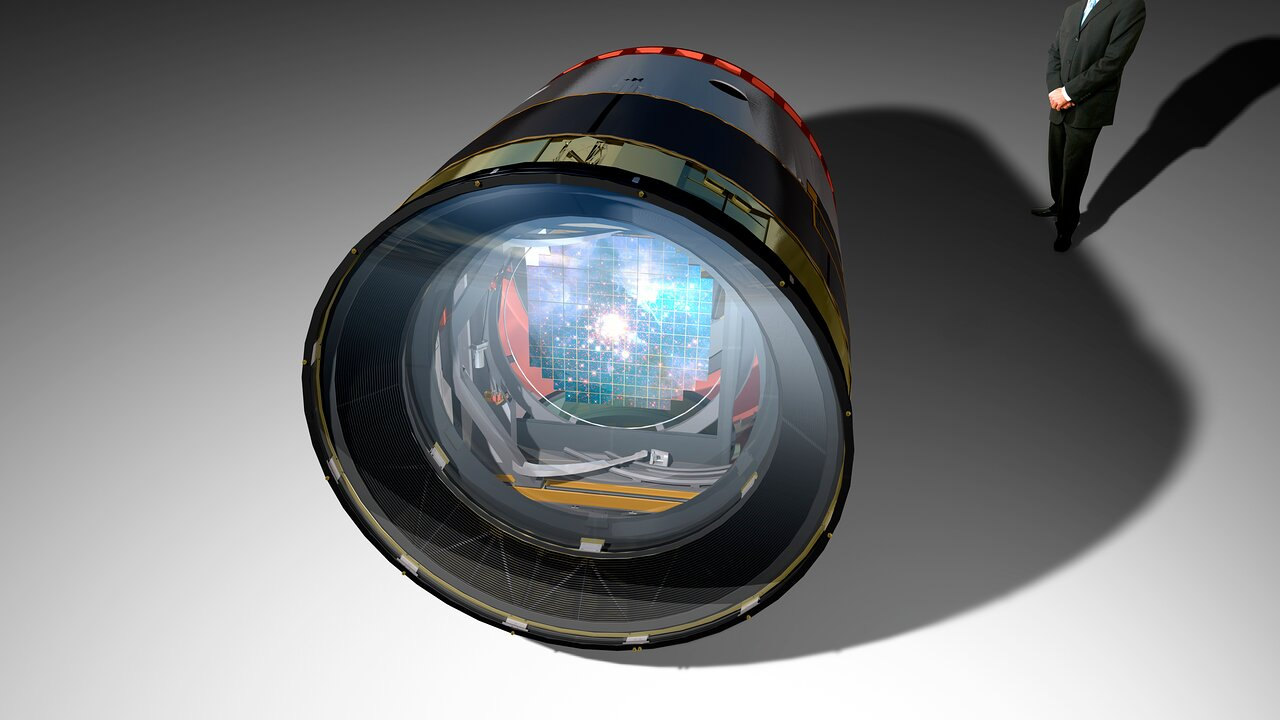

3.2 Gpix Rubin camera

the 2000s

@fedhere

what drives

astronomy

Theory driven | Falsifiability

Experiment driven

Simulations | Probabilistic inference | Computation

Data | Survey astronomy | Computation | pattern discovery

3.2 Gpix Rubin camera

lazy learning

learning by example

(supervised learning)

pattern discovery

(unsupervised learning)

@fedhere
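The two data-driven modes above (learning by example vs. pattern discovery) can be contrasted in a few lines. This is only a toy sketch on synthetic 2D data: the blob positions, `k=5`, and the helper names `knn_predict` and `kmeans` are illustrative assumptions, not anything from the talk. k-nearest neighbours is a classic "lazy", learn-by-example supervised method; k-means groups the same points without ever seeing the labels.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic 2D feature space: two blobs standing in for two hypothetical source classes
X = np.vstack([
    rng.normal(loc=(0.0, 0.0), scale=0.3, size=(50, 2)),
    rng.normal(loc=(3.0, 3.0), scale=0.3, size=(50, 2)),
])
y = np.array([0] * 50 + [1] * 50)  # labels, used only by the supervised method


def knn_predict(X_train, y_train, X_new, k=5):
    """Supervised 'lazy' learning: nothing is fit in advance; each new point
    takes the majority label of its k nearest labeled examples."""
    # Euclidean distances, shape (n_new, n_train)
    d = np.linalg.norm(X_new[:, None, :] - X_train[None, :, :], axis=-1)
    nearest = np.argsort(d, axis=1)[:, :k]
    return np.array([np.bincount(y_train[row]).argmax() for row in nearest])


def kmeans(X, n_clusters=2, n_iter=20, seed=0):
    """Unsupervised pattern discovery: group points without looking at labels."""
    r = np.random.default_rng(seed)
    # Farthest-point initialisation: each new center is the point farthest from the current ones
    centers = [X[r.integers(len(X))]]
    for _ in range(1, n_clusters):
        d = np.min([np.linalg.norm(X - c, axis=1) for c in centers], axis=0)
        centers.append(X[d.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        # Assign each point to its closest center
        d = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=-1)
        labels = d.argmin(axis=1)
        # Move each center to the mean of its members (keep it if the cluster is empty)
        centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(n_clusters)
        ])
    return labels, centers


X_new = np.array([[0.1, -0.2], [2.9, 3.1]])
print(knn_predict(X, y, X_new))  # -> [0 1]
labels, centers = kmeans(X)
```

Note that k-means recovers the two groups but not their names: which cluster is called 0 or 1 is arbitrary, which is exactly the gap between discovering a pattern and classifying by example.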

BD from astronomical surveys

2/6

astronomical data production


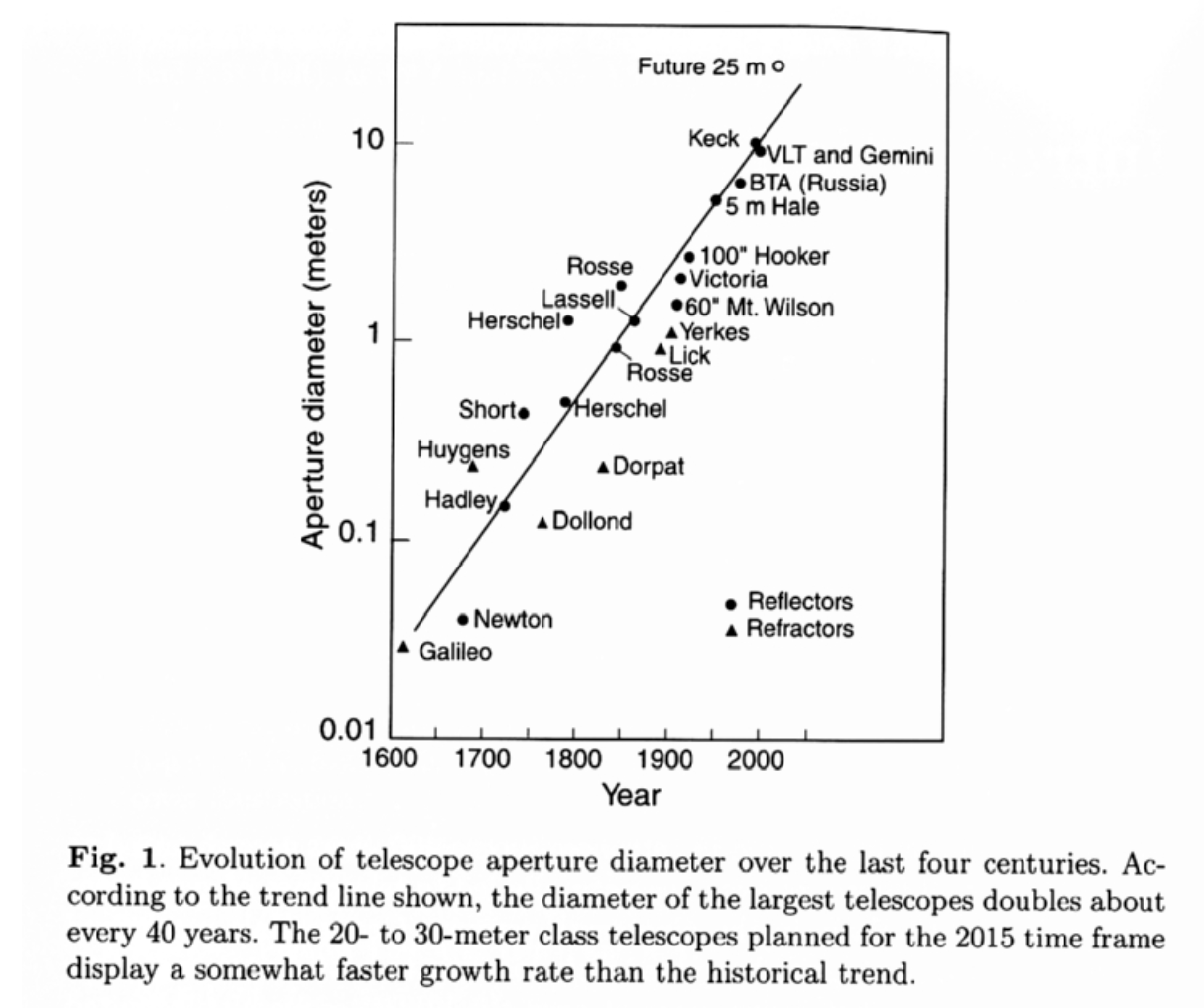

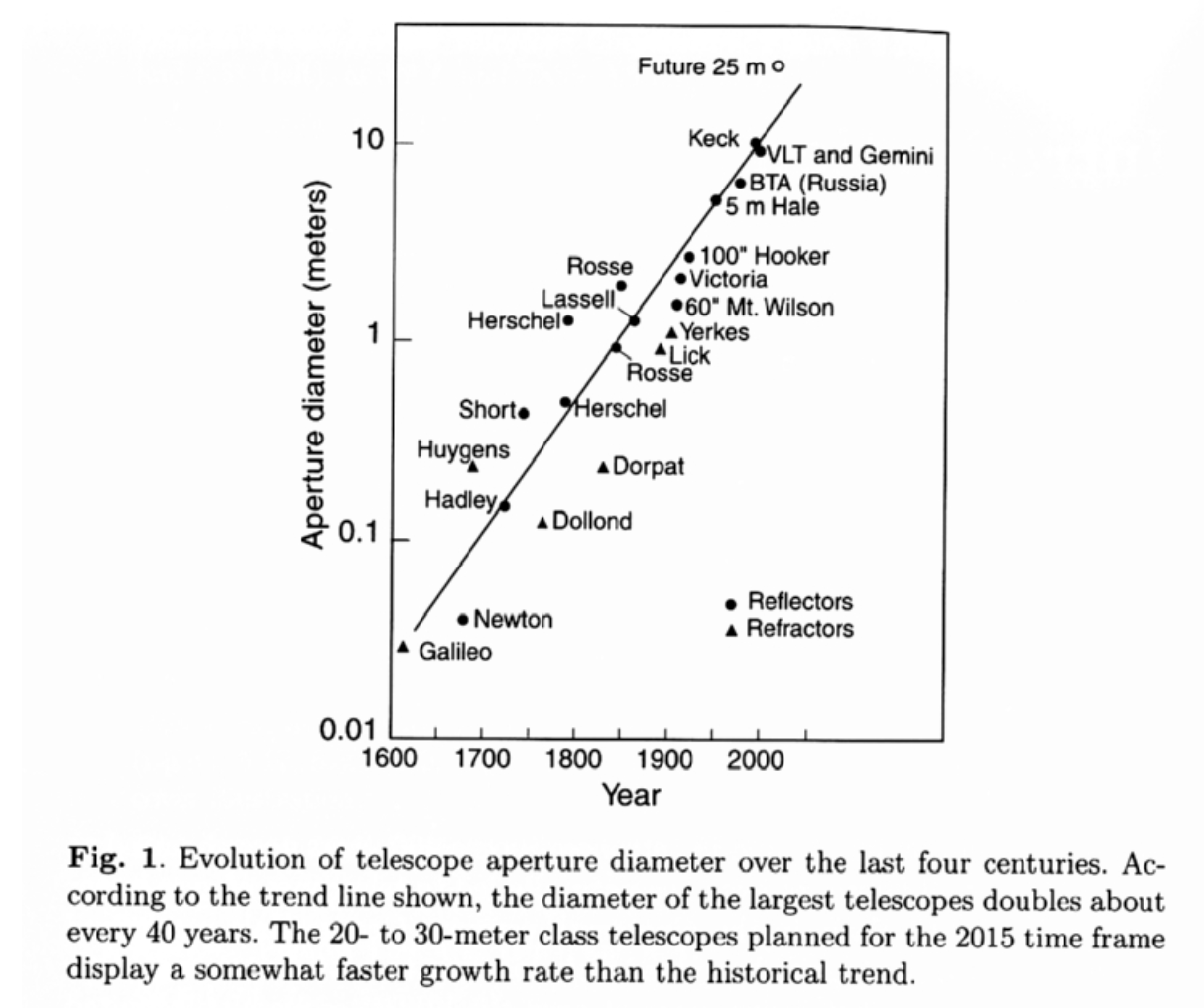

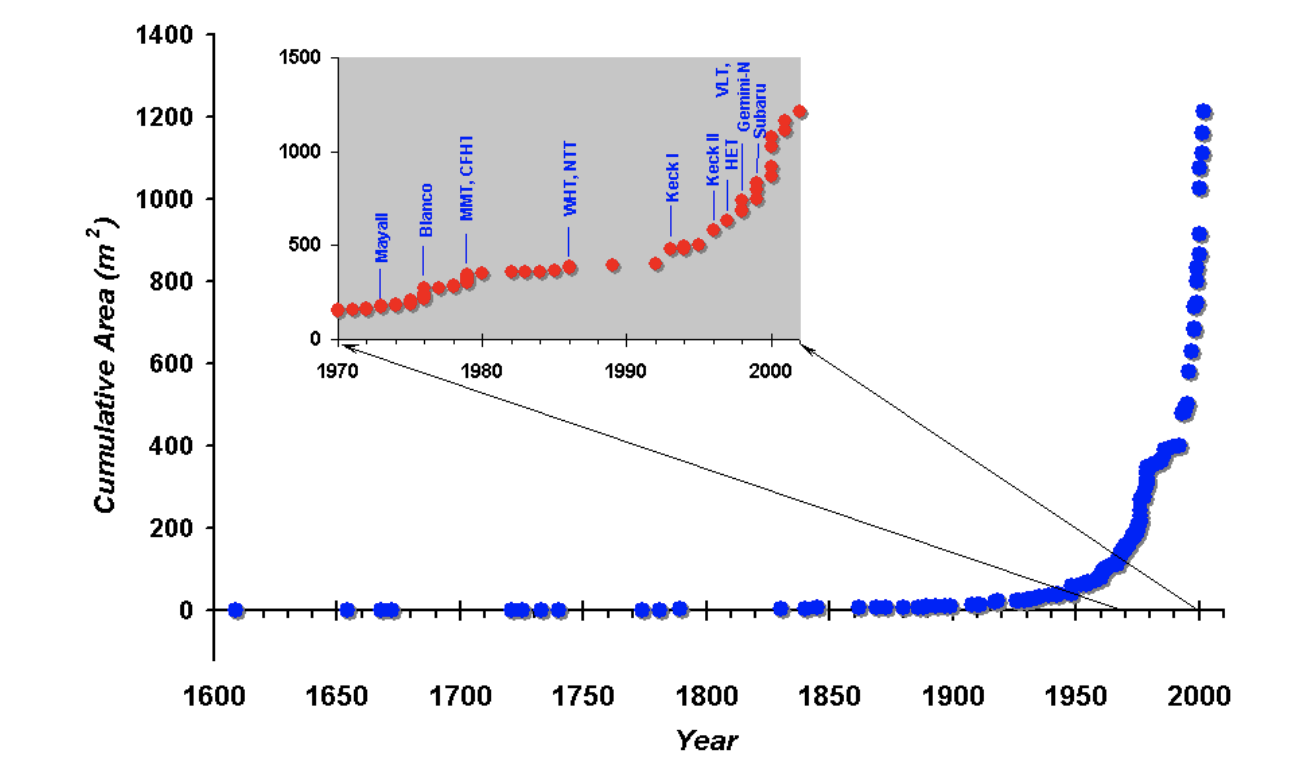

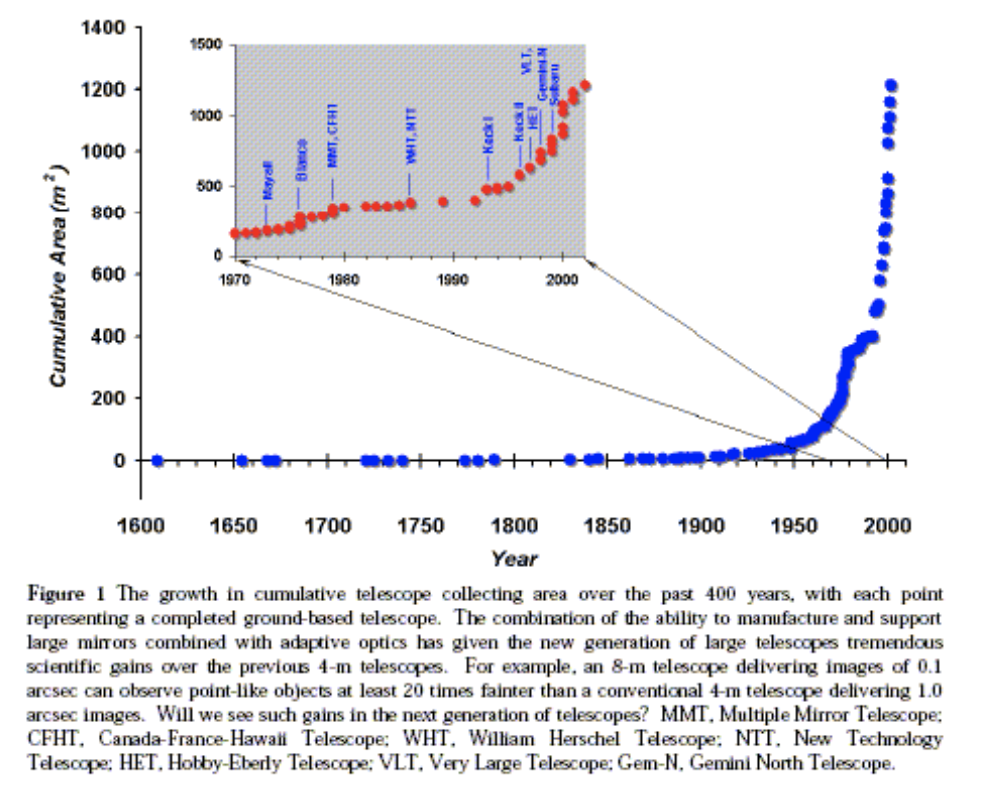

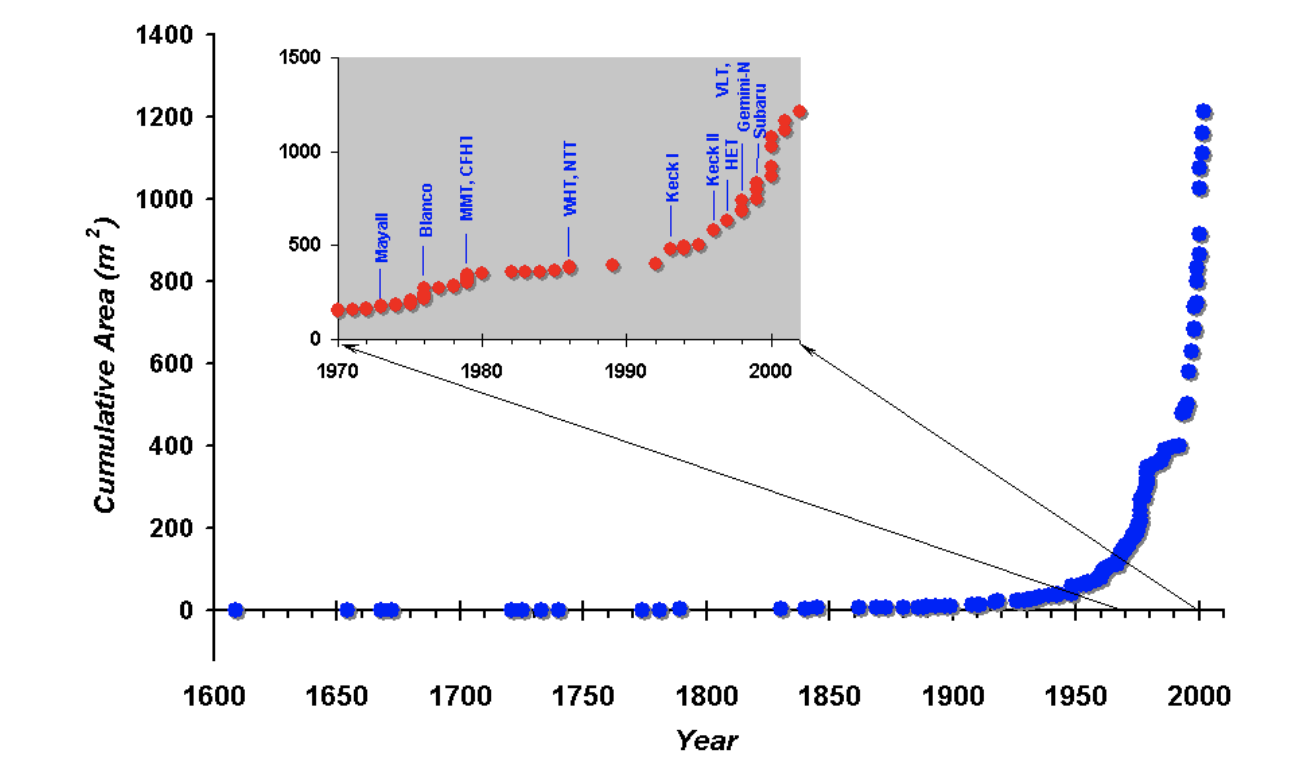

astronomical data production

Bely, The Design and Construction of Large Telescopes

astronomical data production

Mountain & Gillett, "The Revolution in Telescope Aperture"

http://hat.astro.princeton.edu/

astronomical data production

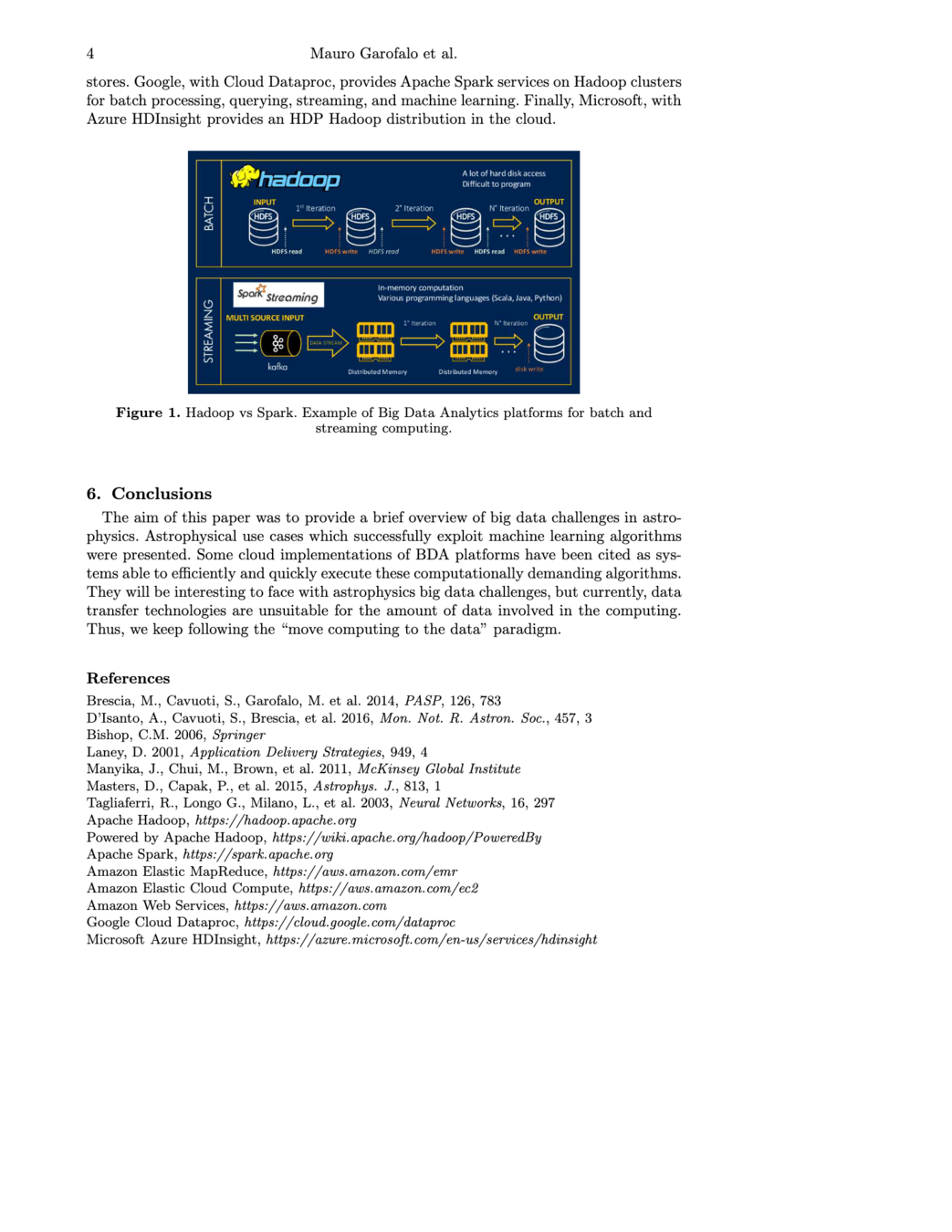

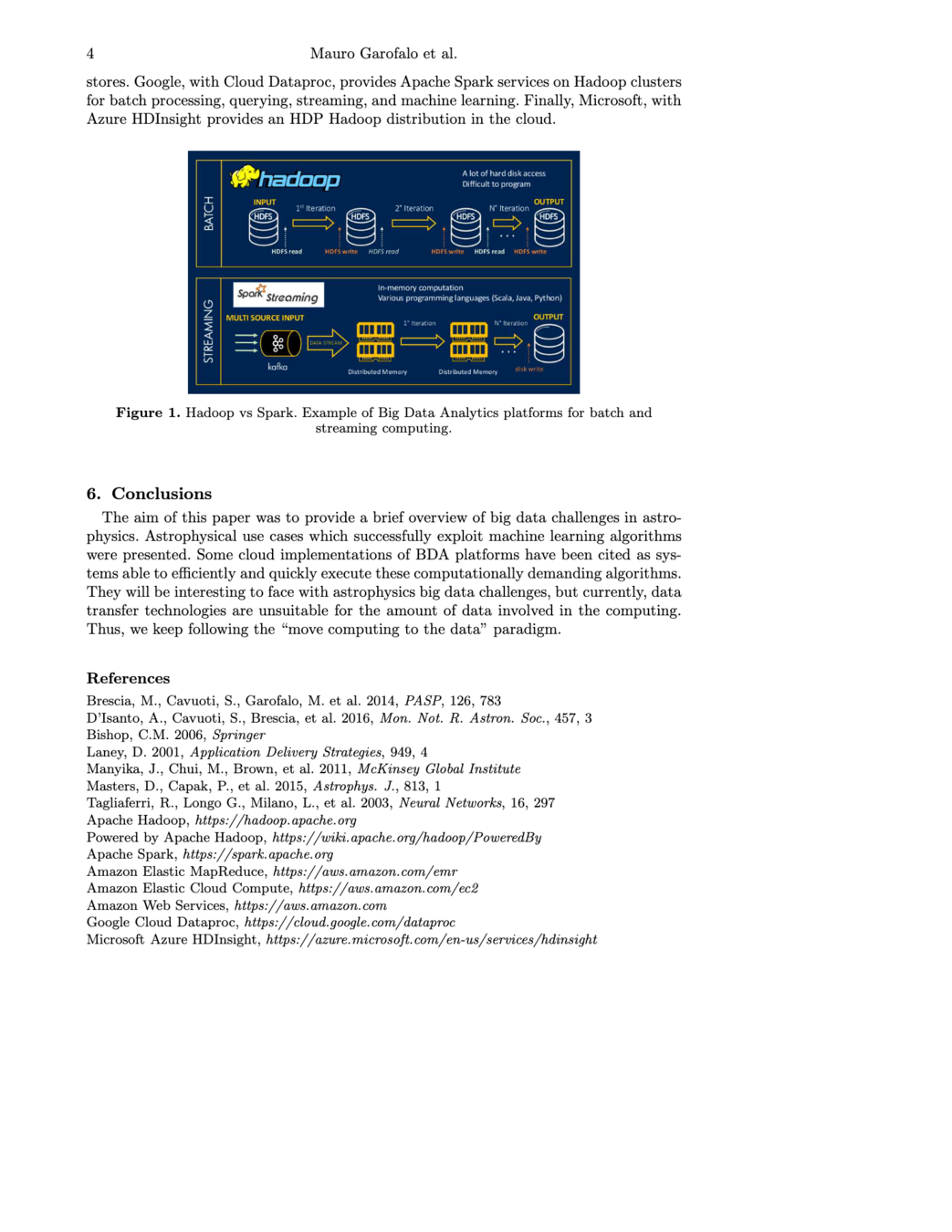

Both data volumes and data rates grow exponentially, with a doubling time of ~1.5 years.

It is also estimated that everyone has access to 50% of the existing data!
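The arithmetic behind a constant doubling time is worth spelling out. A minimal sketch, assuming purely exponential growth with the ~1.5-year doubling time quoted above (the function names are illustrative): the volume grows roughly 100x per decade, and, as a pure consequence of exponential growth, about half of all data ever collected was produced within the most recent doubling time.

```python
import math

DOUBLING_TIME_YR = 1.5  # doubling time quoted on the slide


def growth_factor(years, t_double=DOUBLING_TIME_YR):
    """Factor by which the data volume grows over `years` at a constant doubling time."""
    return 2.0 ** (years / t_double)


def fraction_from_last(window_yr, t_double=DOUBLING_TIME_YR):
    """Fraction of all data ever accumulated that was produced in the most recent
    `window_yr` years, assuming the production rate has always grown as exp(k t).
    The cumulative total up to now is proportional to exp(k t_now), so the recent
    window contains 1 - exp(-k * window_yr) of it."""
    k = math.log(2.0) / t_double
    return 1.0 - math.exp(-k * window_yr)


print(f"growth per decade: {growth_factor(10):.0f}x")
print(f"share produced in the last {DOUBLING_TIME_YR} yr: {fraction_from_last(DOUBLING_TIME_YR):.2f}")
```

This prints a growth of about 102x per decade and a share of 0.50.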

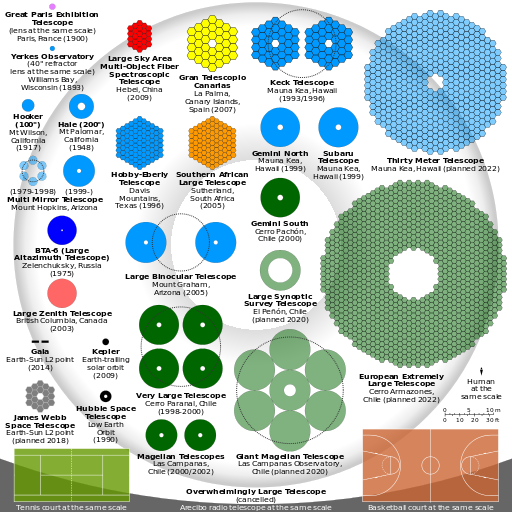

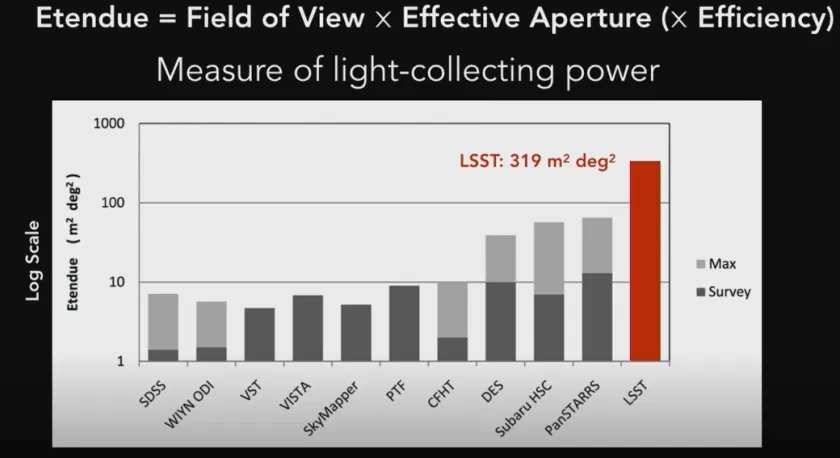

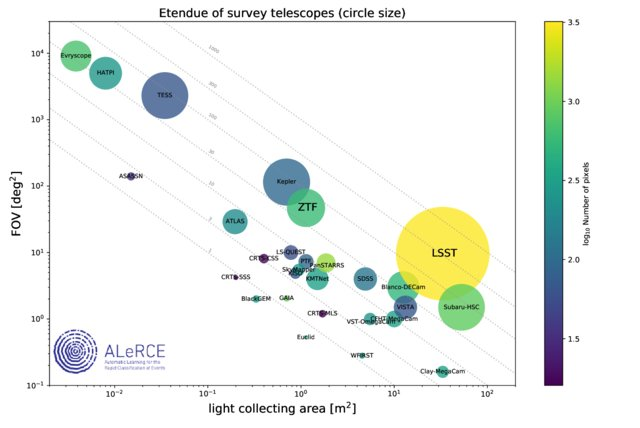

Area vs Volume

astronomical data production

Etendue: area x FoV

data volume: (area x FoV) x resolution x sensitivity

[Figure axes: log number of pixels; etendue (area x FoV)]
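Etendue (collecting area x field of view) is a single number that captures how fast a telescope can survey the sky. A minimal sketch using the apertures and fields of view quoted on the optical survey slides in this talk; the assumption (mine, not the slides') is that each aperture is a filled circle of the quoted (effective) diameter, ignoring central obstruction.

```python
import math

# (effective aperture in m, field of view in sq deg) as quoted on the survey slides.
# Assumption: filled circular aperture, central obstruction ignored.
SURVEYS = {
    "SDSS": (2.5, 6.0),
    "PS1": (1.8, 7.0),
    "DES": (4.0, 3.0),
    "ZTF": (1.2, 47.0),
    "Rubin LSST": (6.5, 9.0),
}


def etendue(diameter_m, fov_sq_deg):
    """Etendue = collecting area x field of view, in m^2 deg^2."""
    area_m2 = math.pi * (diameter_m / 2.0) ** 2
    return area_m2 * fov_sq_deg


# Print surveys from smallest to largest etendue
for name, (d, fov) in sorted(SURVEYS.items(), key=lambda kv: etendue(*kv[1])):
    print(f"{name:11s} {etendue(d, fov):6.1f} m^2 deg^2")
```

With these numbers Rubin's etendue comes out at roughly 300 m^2 deg^2, about 5-6 times ZTF's and about 10 times SDSS's, before resolution and sensitivity multiply the data volume further.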

4-V of Big Data in astronomy

V1: Volume

Number of bytes

Number of pixels

For catalogs: number of rows x number of columns in the data table

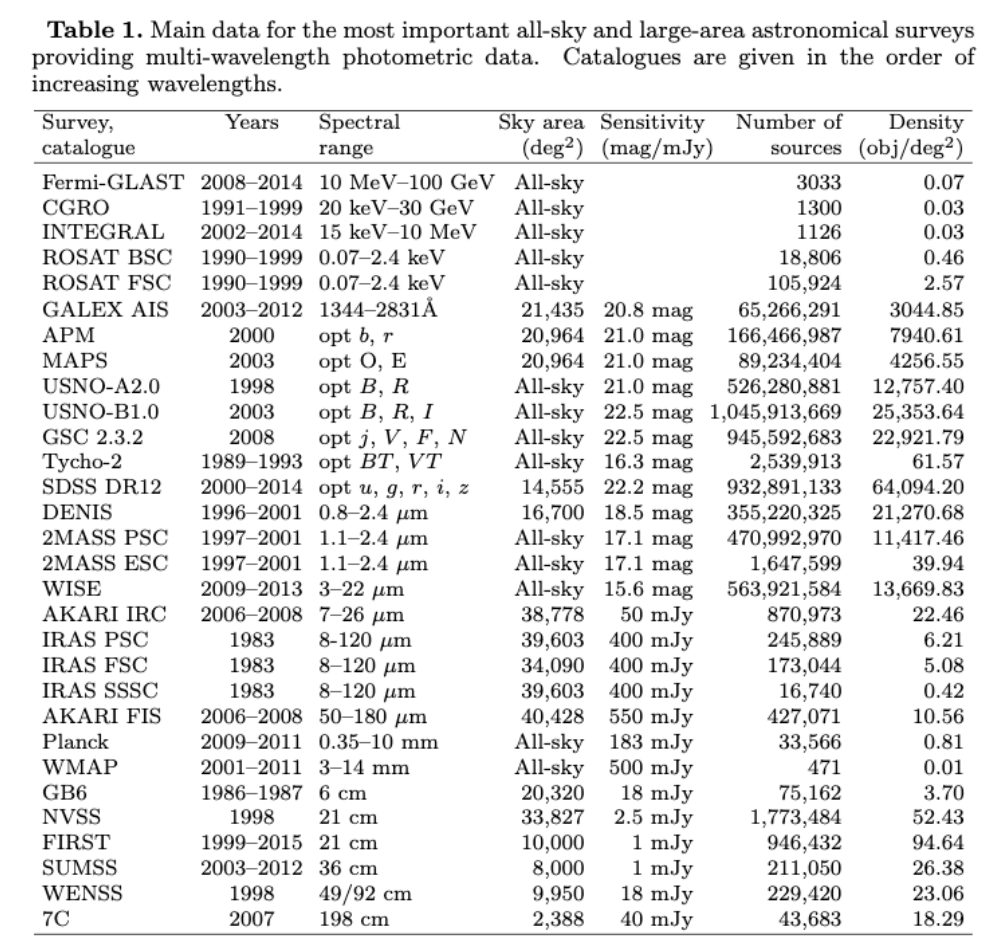

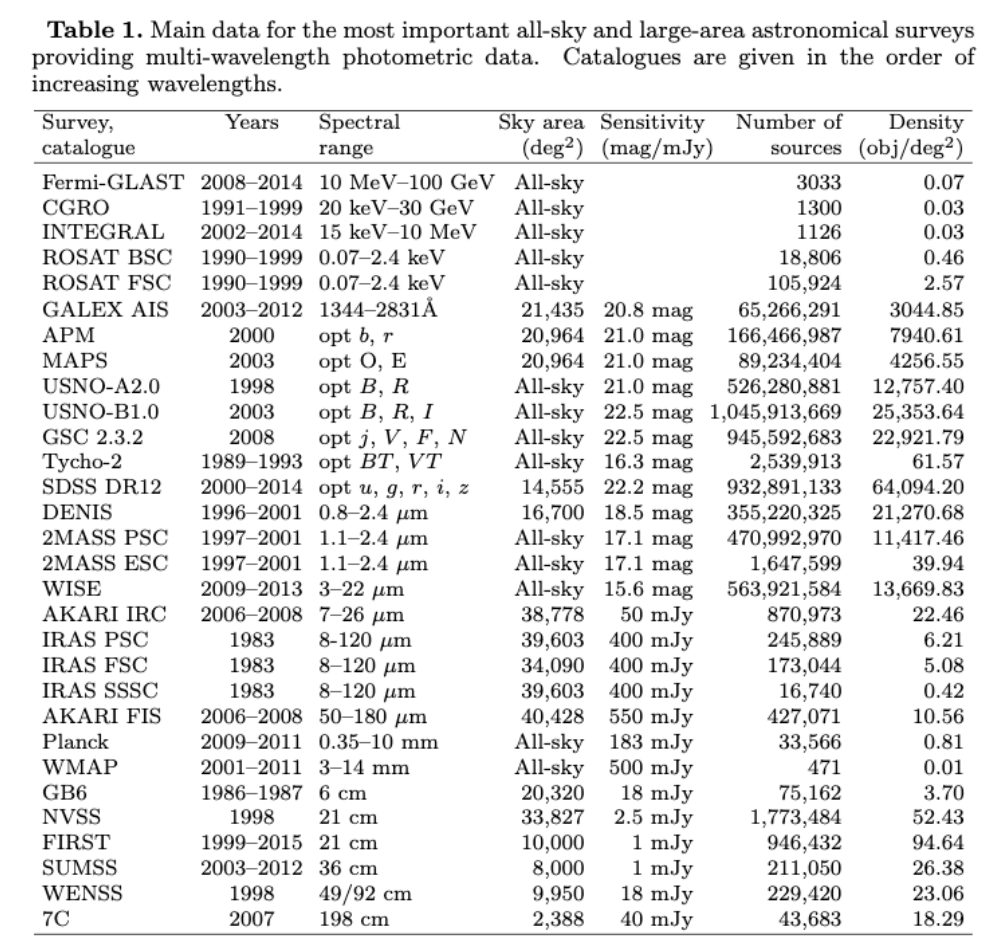

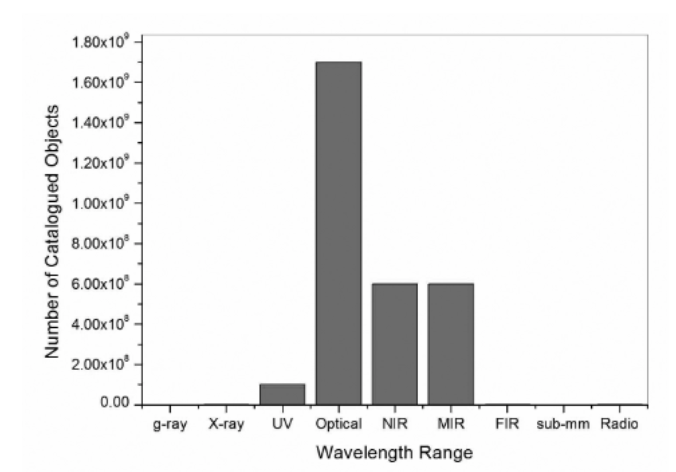

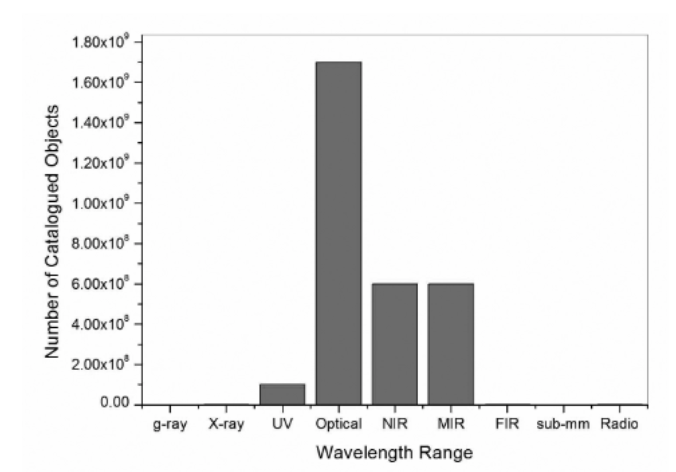

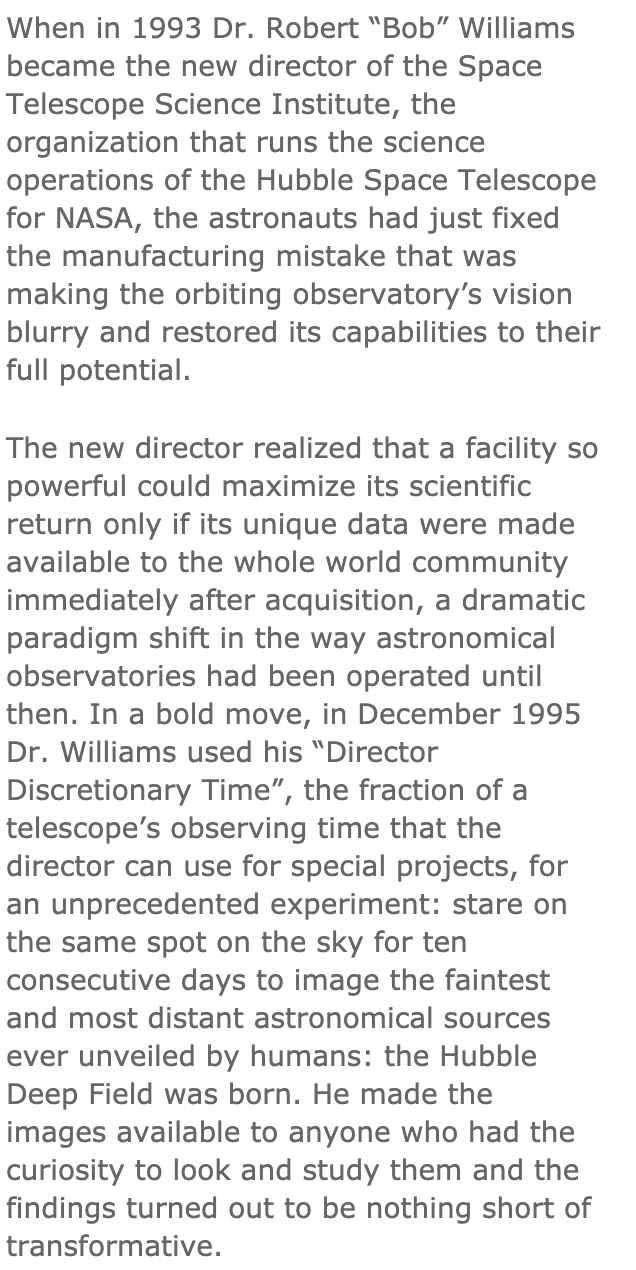

astronomical data volume

number of sources

4-V of Big Data in astronomy

V1: Volume

Number of bytes

Number of pixels

For catalogs: number of rows x number of columns in the data table

V2: Variety

Diverse science return from the same dataset.

Multiwavelength

Multimessenger

Images and spectra

how do the data get big?

- telescope size: fainter, more distant

- FoV: more sky area at once

- camera size: more data units

- resolution: more objects/details

- filters: variety (complexity)

ground-based

optical

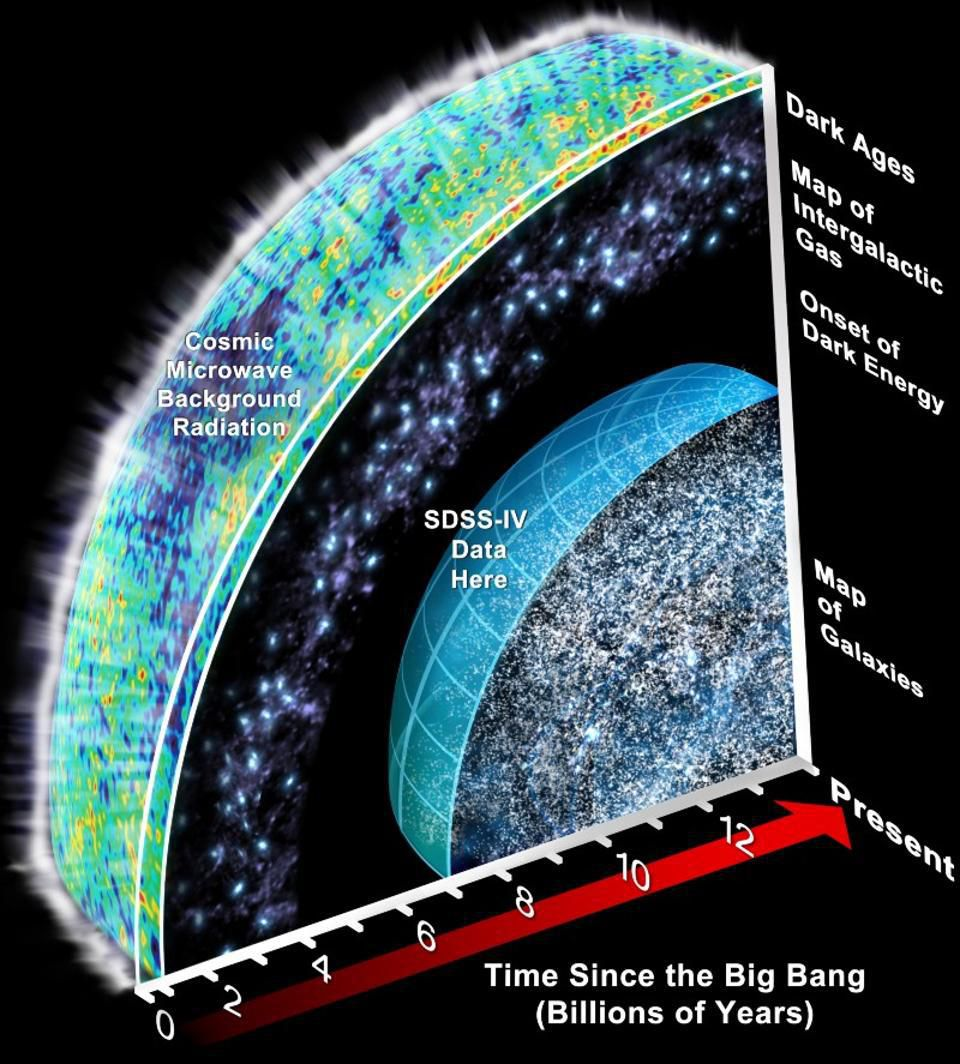

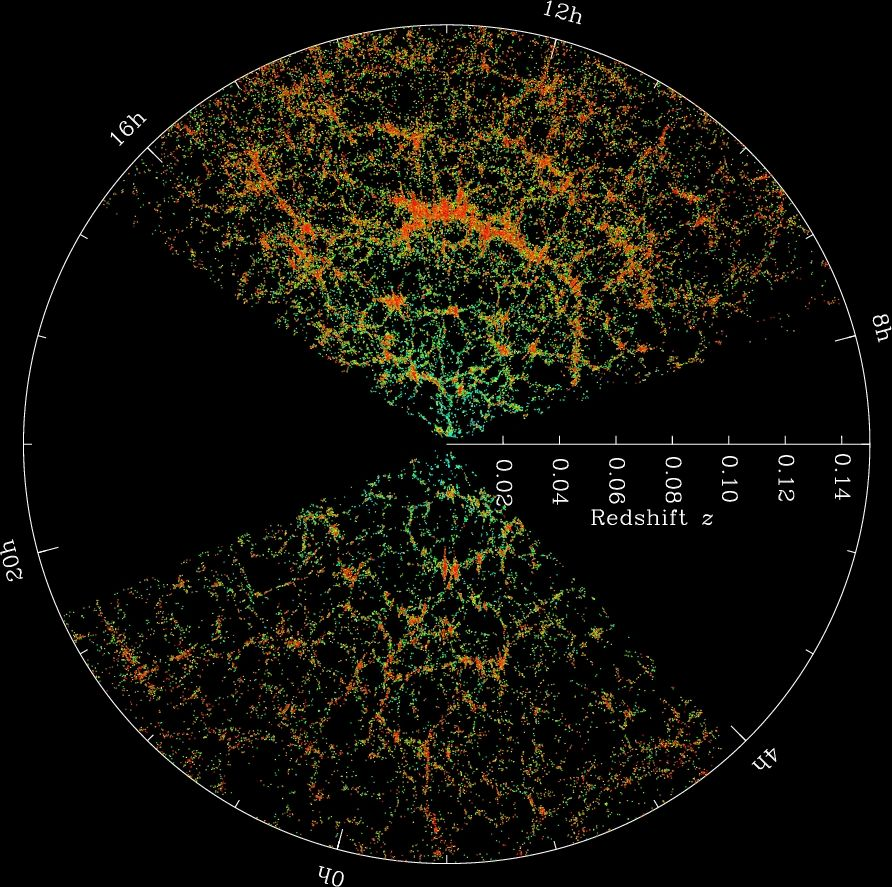

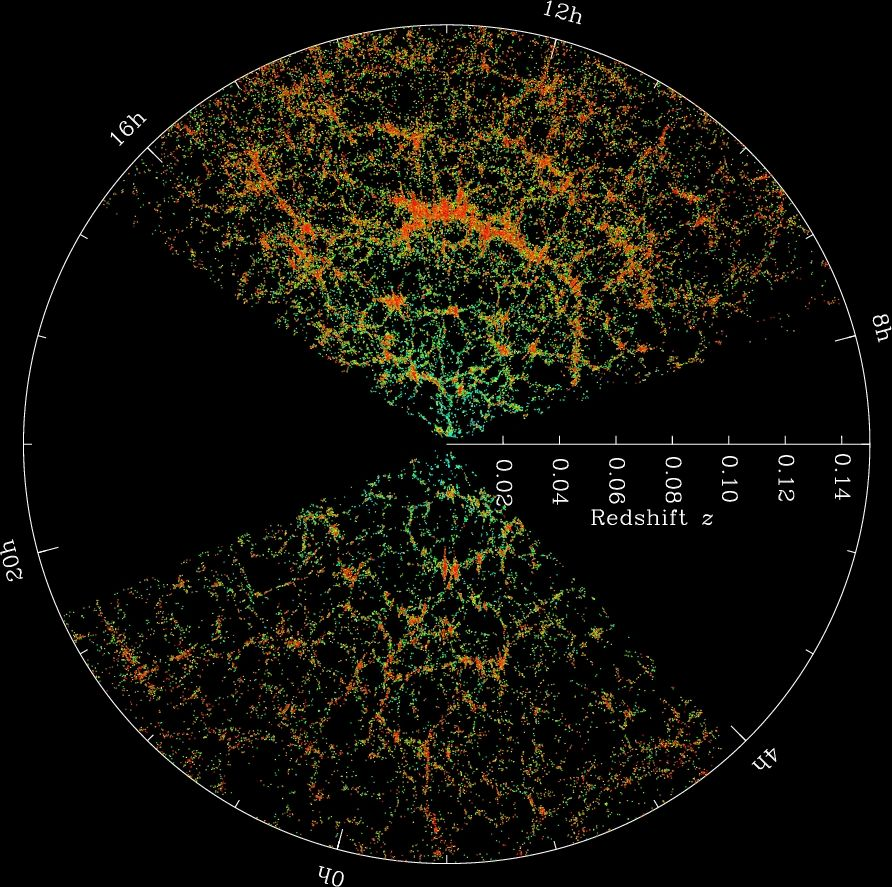

"The Sloan Digital Sky Survey has created the most detailed three-dimensional maps of the Universe ever made, with deep multi-color images of one third of the sky, and spectra for more than three million astronomical objects. Learn and explore all phases and surveys—past, present, and future—of the SDSS."

2.5m

6 sq degree

4Mpix

1''/pix

5 bands

optical: data releases

| SDSS data release | images | catalog | 1D+2D spectra |
|---|---|---|---|
| 2003 DR1 | 2.3 TB | 0.5 TB | |
| 2003 DR2 | 5 TB | 0.7 TB | |
| 2004 DR3 | 6 TB | 1.2 TB | |
| ... | | | |
| 2009 DR7 | 15.7 TB | 18 TB | 3.5 TB |
| ... | | | |

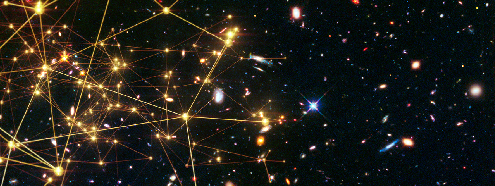

The SDSS map of the Universe. Each dot is a galaxy; the color bar shows the local density.

2019 DR16

273 TB

photometric parameters for 53 million unique objects.

optical: data releases

2019 DR2 Pan-STARRS

1.6 PB

The amount of imaging data is equivalent to two billion selfies, or 30,000 times the total text content of Wikipedia. The catalog data is 15 times the volume of the Library of Congress.

optical PS1

1.8m

7 sq degree

1.4Gpix

0.26''/pix

6 bands


optical: data releases

| quantity | number | volume |
|---|---|---|
| Number of raw on-sky camera exposures ingested | 40,000 | 23 TB |
| Volume (with ancillary files) | | 195 TB |
| Number of lightcurves | 319 | 110 GB |

optical: DES

4m

3 sq degree

96 Mpix

0.2''/pix

570 Mpix camera with a 3 sq deg field of view, installed at the prime focus of the Blanco 4 m telescope

0.5Gpix

5 bands

optical: ZTF

1.2m

0.5Gpix

47 sq degree

1''/pix

2 bands

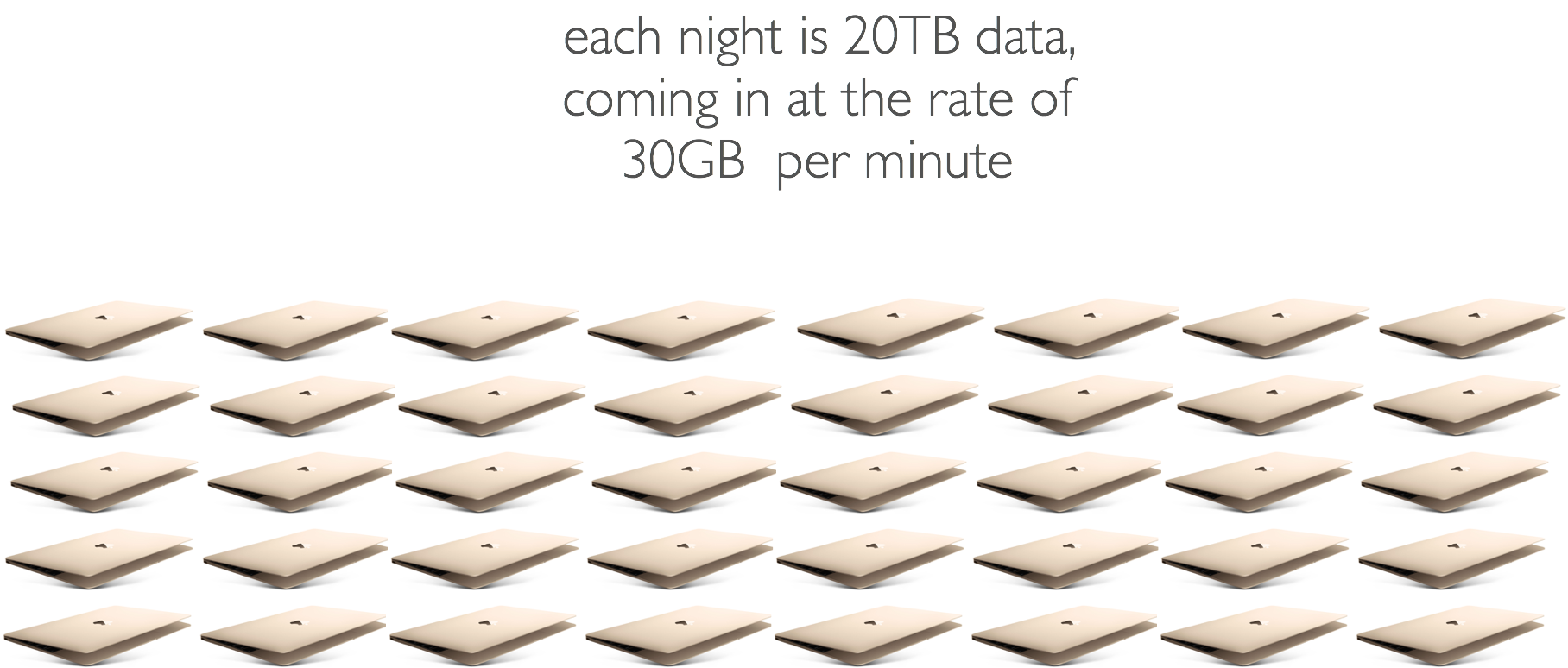

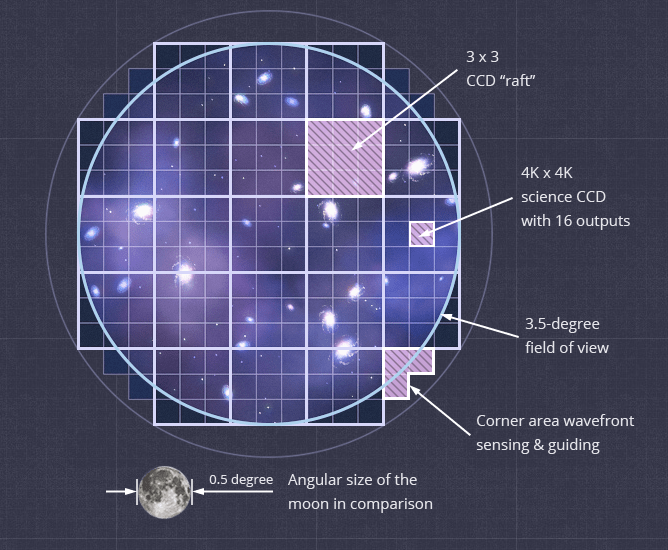

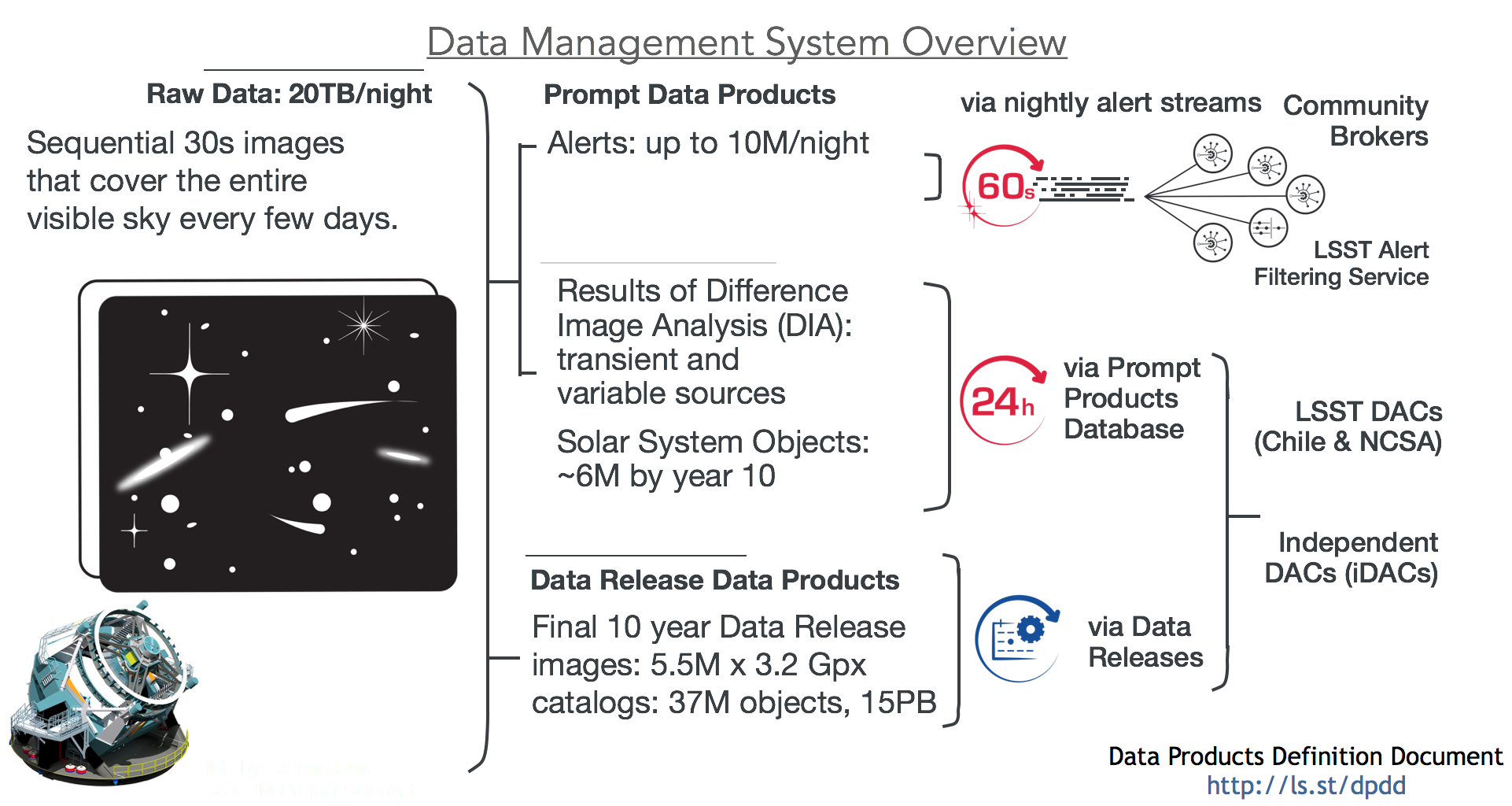

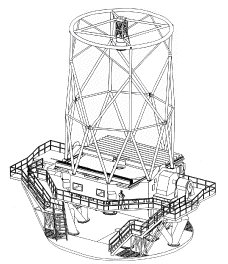

optical: Rubin LSST

3.2Gpix

8m (6.5 effective)

9 sq degree

0.2''/pix

6 bands

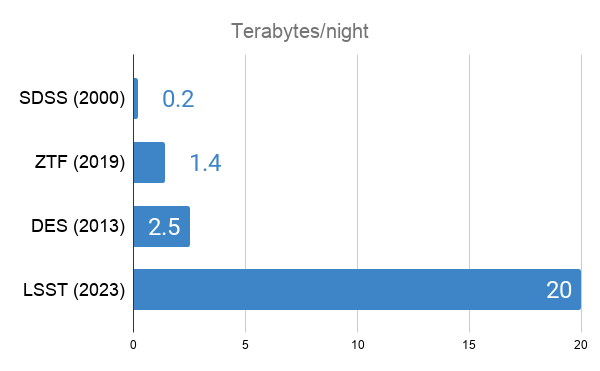

optical

Nightly data rates

optical: Rubin LSST

@fedhere

DSS:

digitized photographic plates

@fedhere

SDSS

@fedhere

DLS

20 sq deg ultra-deep multi-band sky survey.

@fedhere

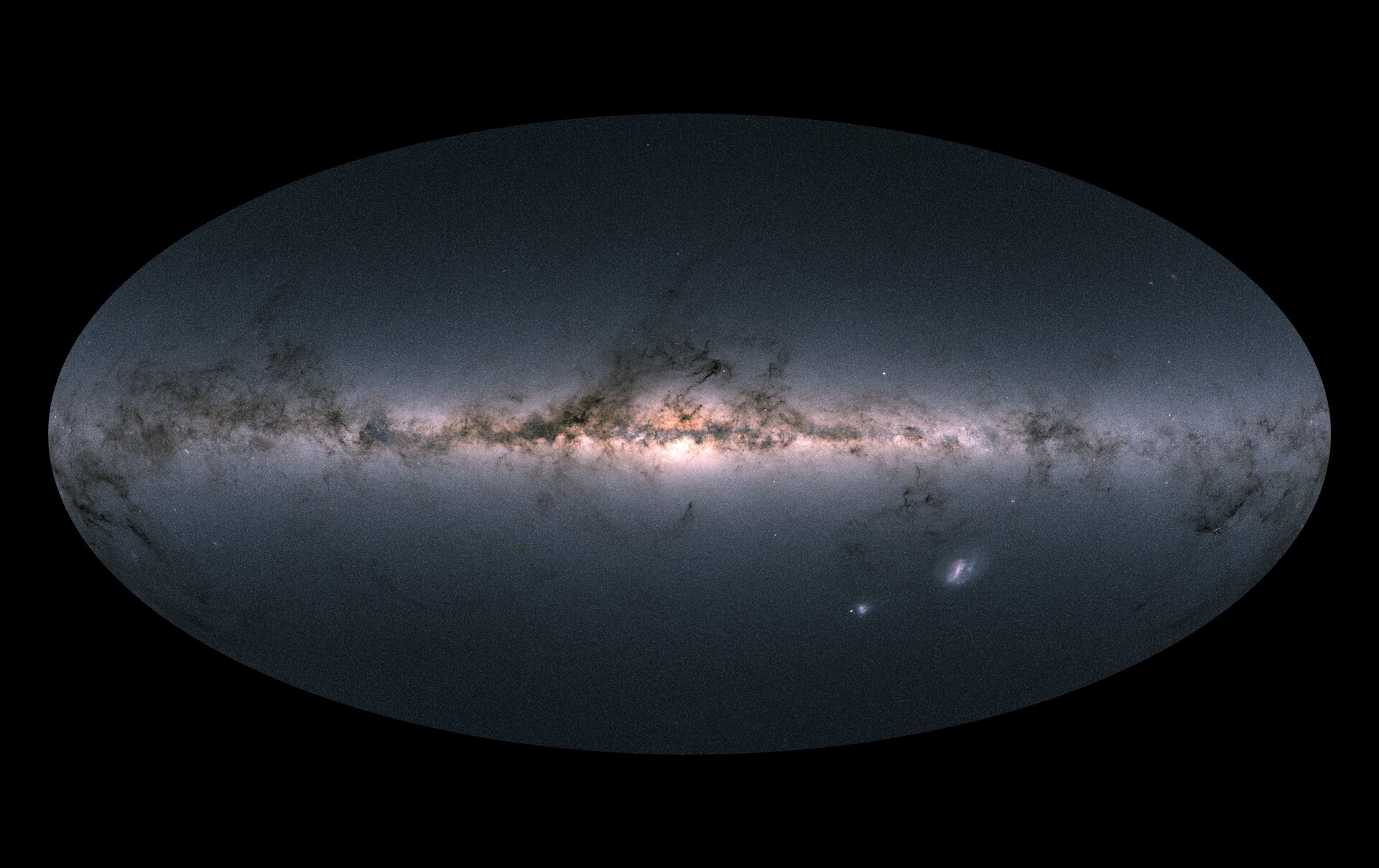

Rubin

LSST

(simulated)

@fedhere

optical: other surveys

MACHO (Microlensing)

SNLS (Supernovae)

OGLE (Microlensing)

DLS (weak lensing)

Palomar Distant Solar System Survey

...

Event Horizon Telescope, 2019

https://iopscience.iop.org/journal/2041-8205/page/Focus_on_EHT

5 PB of data

Extreme imaging via physical model inversion: seeing around corners and imaging black holes (Dr. Katie Bouman)

[...] reconstruct images and video from a sparse telescope array distributed around the globe. Additionally, it presents a number of evaluation techniques developed to rigorously evaluate imaging methods in order to establish confidence in reconstructions done with real scientific data.

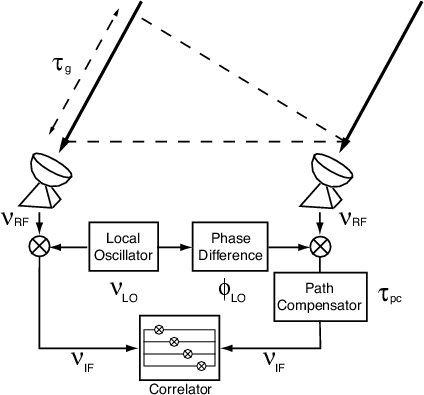

radio

interferometry:

Create a virtual telescope by combining multiple antennae.

Cross-correlating the signals from each pair of antennas produces visibilities, samples of the Fourier transform of the sky brightness, from which coherent, interpretable radio images of the sky are reconstructed.

The directed use of the Earth’s rotation for filling the Fourier plane is known as Earth rotation aperture synthesis and was the subject of the 1974 Nobel Prize in Physics.

Radio interferometers do not image the sky directly. Instead, they measure the amount of power on different angular scales.
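Why interferometric imaging is a computational problem can be seen in a toy NumPy sketch. Everything here is an illustrative assumption: a 128x128 sky with three made-up point sources, and a random ~10% sampling of the Fourier plane standing in for a real antenna layout plus Earth rotation. Inverting only the sampled visibilities gives the "dirty image", the true sky convolved with the point-spread function set by the uv-coverage.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 128  # image size in pixels (toy value)

# Toy "true sky": three point sources (positions and fluxes are made up)
sky = np.zeros((N, N))
for i, j, flux in [(40, 50, 1.0), (80, 90, 0.6), (64, 30, 0.3)]:
    sky[i, j] = flux

# The interferometer measures visibilities: samples of the 2D Fourier transform
# of the sky brightness, one (u, v) point per antenna pair per integration.
visibilities = np.fft.fft2(sky)

# Assumed sparse uv-coverage: ~10% of Fourier cells picked at random, then mirrored,
# because a real-valued sky gives V(-u, -v) = conj(V(u, v)).
uv_mask = rng.random((N, N)) < 0.10
flip = (-np.arange(N)) % N
uv_mask |= uv_mask[flip][:, flip]
sampled = np.where(uv_mask, visibilities, 0.0)

# Inverting only the sampled visibilities gives the "dirty image": the true sky
# convolved with the "dirty beam" (the PSF implied by the uv-coverage).
dirty_image = np.fft.ifft2(sampled).real
dirty_beam = np.fft.ifft2(uv_mask.astype(float)).real

peak = np.unravel_index(dirty_image.argmax(), dirty_image.shape)
print("brightest dirty-image pixel:", peak)
```

Recovering the true sky from the dirty image is a deconvolution problem (e.g. CLEAN-style algorithms), and it is this repeated FFT-plus-deconvolution cycle that becomes expensive at the image sizes discussed for the SKA.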

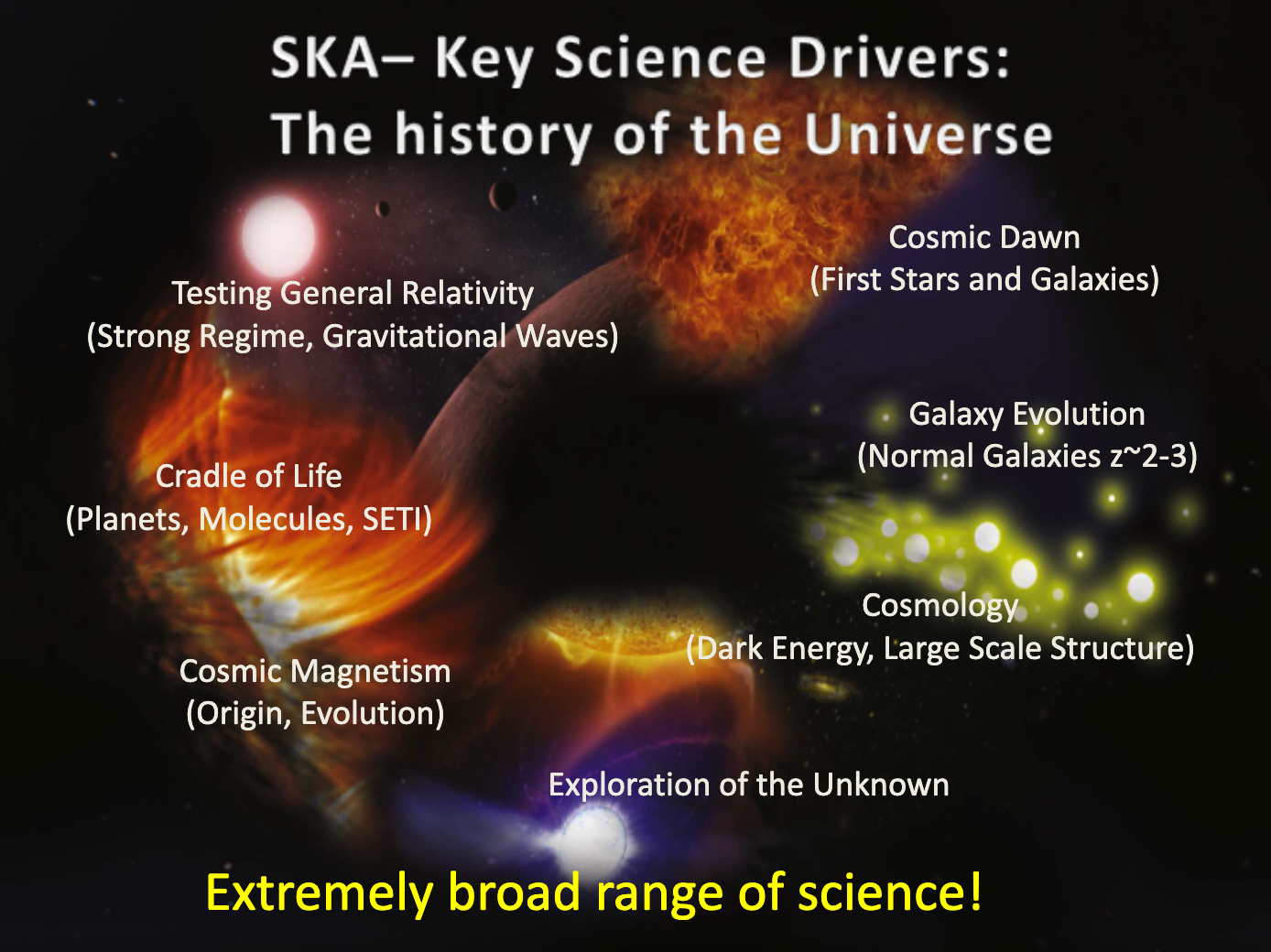

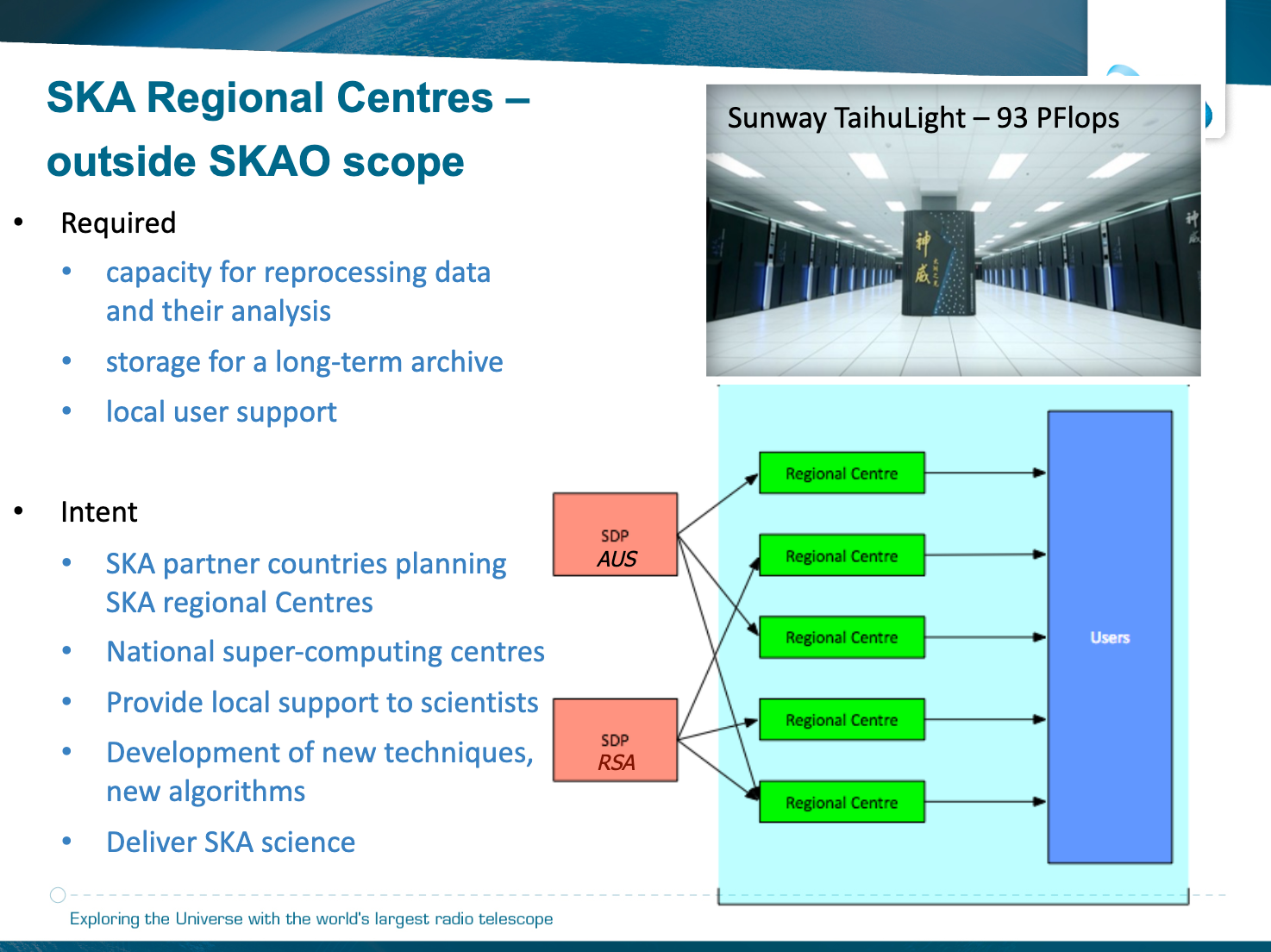

... if you thought LSST was Big Data...SKA

Square Kilometer Array

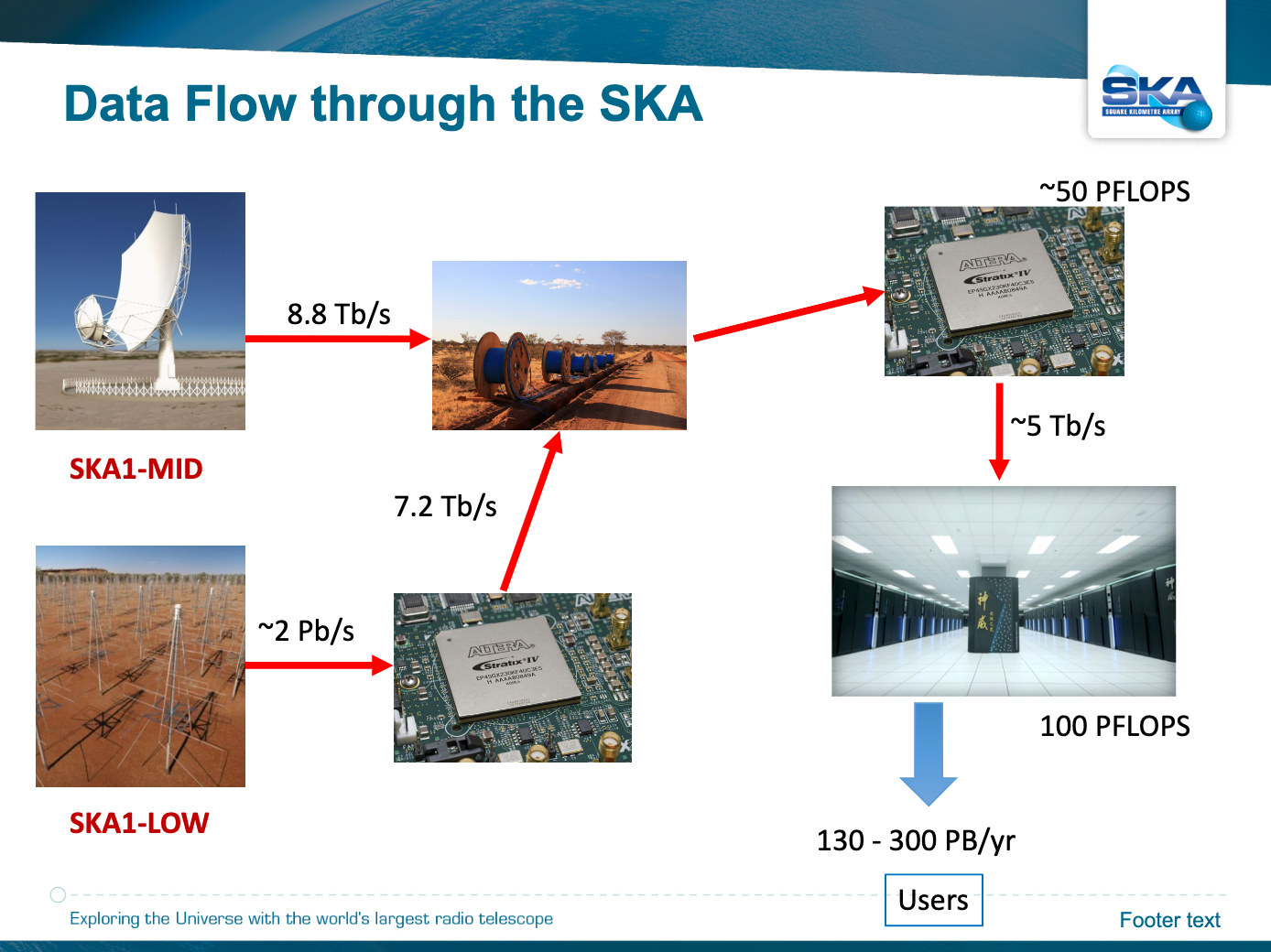

Data rate: 0.5-1 TB/s.

Data from the individual antennas of the SKA1-MID, or the individual stations of the SKA1-LOW, are transported to the central signal processing facility, where the data from each pair of antennas/stations are correlated to produce the visibility data.

300 PB/telescope/year = 8.5 EB

Typical images will have spatial axes with $2^{15} \times 2^{15}$ pixels and up to $2^{16}$ frequency channels. This dimensionality results in petabyte-scale volumes for individual data products.

Performing multiple Fourier transforms on data with image sizes as large as $2^{15}$ or even $2^{16}$ pixels on a side is computationally prohibitive.

[...] In spite of these challenges, the computing for the SKA over the coming decade is certainly achievable, and it is likely that its implementation will be instrumental in driving the next generation of global e-infrastructure.

The computers must be able to make decisions on objects of interest, and remove data which is of no scientific benefit, such as radio interference from things like mobile phones or similar devices, even with the remote locations which will host the SKA.
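The SKA numbers above can be checked with back-of-the-envelope arithmetic. A minimal sketch under stated assumptions: image cubes stored as float32 (4 bytes per value) with a single polarisation, and the 0.5-1 TB/s rate treated as if it were sustained for a full year and written out in full (the slide does not say at which point in the processing chain that rate applies). Neither assumption is an SKA specification.

```python
NX = NY = 2 ** 15      # spatial pixels per side, as quoted above
N_CHAN = 2 ** 16       # frequency channels, as quoted above
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def cube_bytes(nx, ny, nchan, bytes_per_value=4, n_pol=1):
    """Size of one image cube. float32 values (4 bytes) and a single
    polarisation are assumptions, not SKA specifications."""
    return nx * ny * nchan * bytes_per_value * n_pol


def yearly_bytes(rate_bytes_per_s):
    """Volume accumulated in one year at a constant data rate."""
    return rate_bytes_per_s * SECONDS_PER_YEAR


print(f"single cube: {cube_bytes(NX, NY, N_CHAN) / 1e15:.2f} PB")
for rate_tb_s in (0.5, 1.0):
    eb = yearly_bytes(rate_tb_s * 1e12) / 1e18
    print(f"{rate_tb_s} TB/s sustained for a year: {eb:.1f} EB")
```

One float32 cube is already ~0.28 PB (about 1.1 PB with four polarisation products), and a year at 0.5-1 TB/s is ~16-32 EB, roughly 50-100 times the 300 PB/telescope/year quoted above: under these assumptions, most of the data must be reduced or discarded on the fly.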


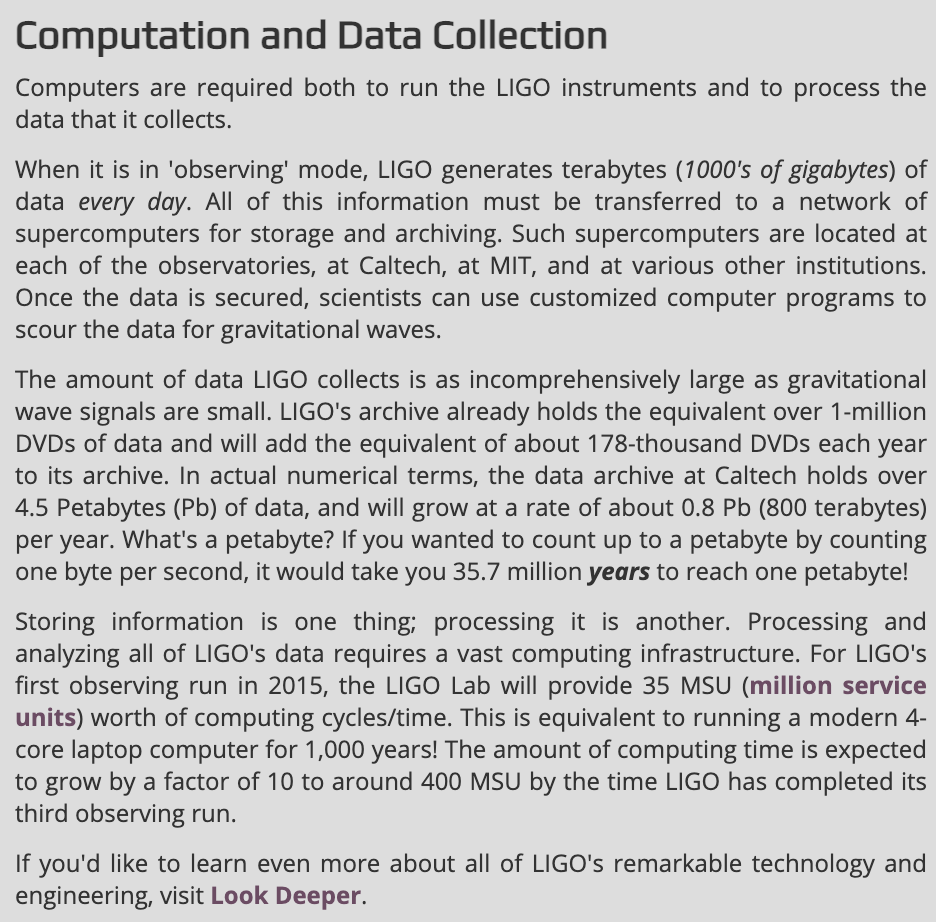

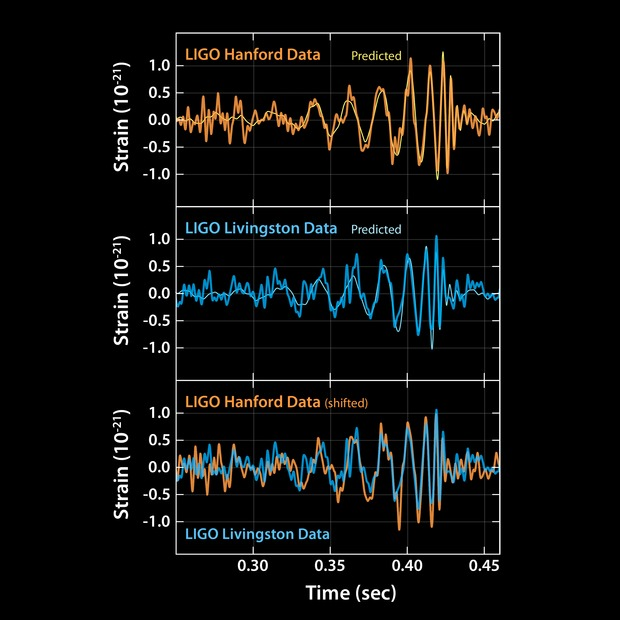

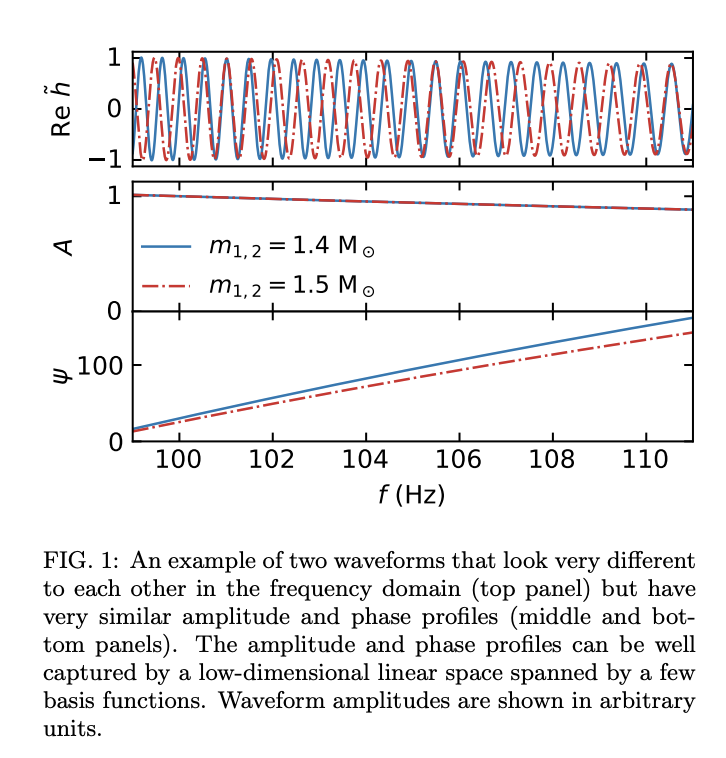

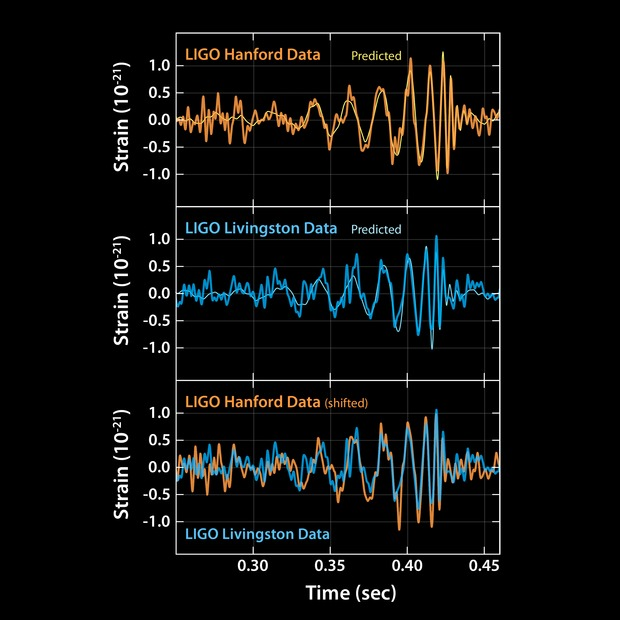

Gravitational waves and multi-messenger astronomy (MMA)

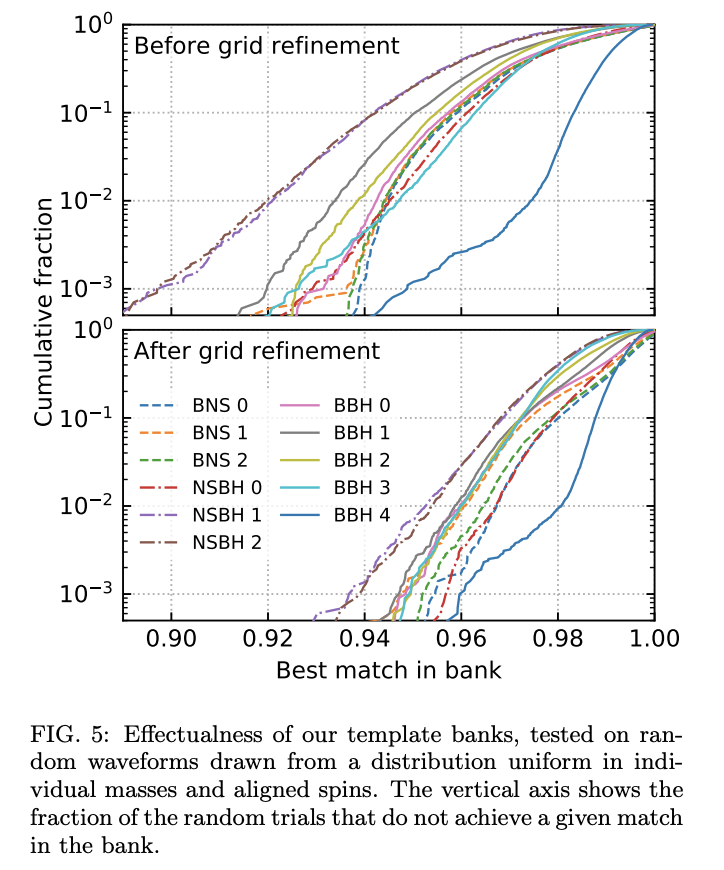

Gravitational waves and MMA

To search for binaries with components more massive than $m_{\rm min} = 0.2\,M_\odot$ while losing no more than 10% of events, the initial LIGO interferometers will require $1.0 \times 10^{11}$ flops for data analysis to keep up with data acquisition.

Advanced LIGO will require $7.8 \times 10^{11}$ flops, and VIRGO $4.8 \times 10^{12}$ flops.

If the templates are stored rather than generated as needed, storage requirements range from $1.5 \times 10^{11}$ (TAMA) to $6.2 \times 10^{14}$ (VIRGO).

Owen+1999: https://journals.aps.org/prd/abstract/10.1103/PhysRevD.60.022002

Roulet+2019: https://arxiv.org/pdf/1904.01683.pdf
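The flops and storage figures above come from template-based searches: the data stream is correlated against many waveform templates. A toy sketch of that single step, using FFT-based matched filtering with one template in white noise. The chirp shape, sample rate, and injection offset are illustrative assumptions; this is not LIGO's pipeline, which whitens the data by the detector noise spectrum and uses physical waveforms.

```python
import numpy as np

rng = np.random.default_rng(7)
fs = 1024                        # samples per second (toy value)
t = np.arange(0, 1.0, 1 / fs)    # 1 s long template


def toy_chirp(t, f0=30.0, f1=200.0):
    """Hypothetical stand-in for an inspiral waveform: frequency rises linearly
    from f0 to f1 and amplitude grows with time. Not a physical GW template."""
    duration = t[-1]
    phase = 2 * np.pi * (f0 * t + 0.5 * (f1 - f0) * t ** 2 / duration)
    return (t / duration) * np.sin(phase)


def matched_filter_snr(data, template):
    """Correlate the data with the template at every time lag via FFTs.
    Assumes white, unit-variance noise, so no whitening step is applied."""
    n = len(data)
    padded = np.zeros(n)
    padded[:len(template)] = template
    corr = np.fft.irfft(np.fft.rfft(data) * np.conj(np.fft.rfft(padded)), n)
    # Normalise so that pure noise gives SNR values ~ N(0, 1)
    return corr / np.sqrt(np.sum(template ** 2))


template = toy_chirp(t)
n = 8 * fs                        # 8 s of data
offset = 3 * fs + 100             # where the signal is injected (toy choice)
data = rng.normal(0.0, 1.0, n)
data[offset:offset + len(template)] += template

snr = matched_filter_snr(data, template)
print("peak lag:", snr.argmax(), "injected at:", offset, "peak SNR:", round(float(snr.max()), 1))
```

A real search repeats this correlation for every template in a bank covering the mass parameter space, which is why the compute (or the storage, if templates are precomputed) scales with the number of templates.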

Gravitational waves and MMA

space-based astronomy BD problems

3/6

4-V of Big Data in astronomy

V1: Volume

Number of bytes

Number of pixels

For catalogs: number of rows x number of columns in the data table

V2: Variety

Diverse science return from the same dataset.

Multiwavelength

Multimessenger

Images and spectra

V3: Velocity

Real-time analysis, edge computing, data transfer

space-based

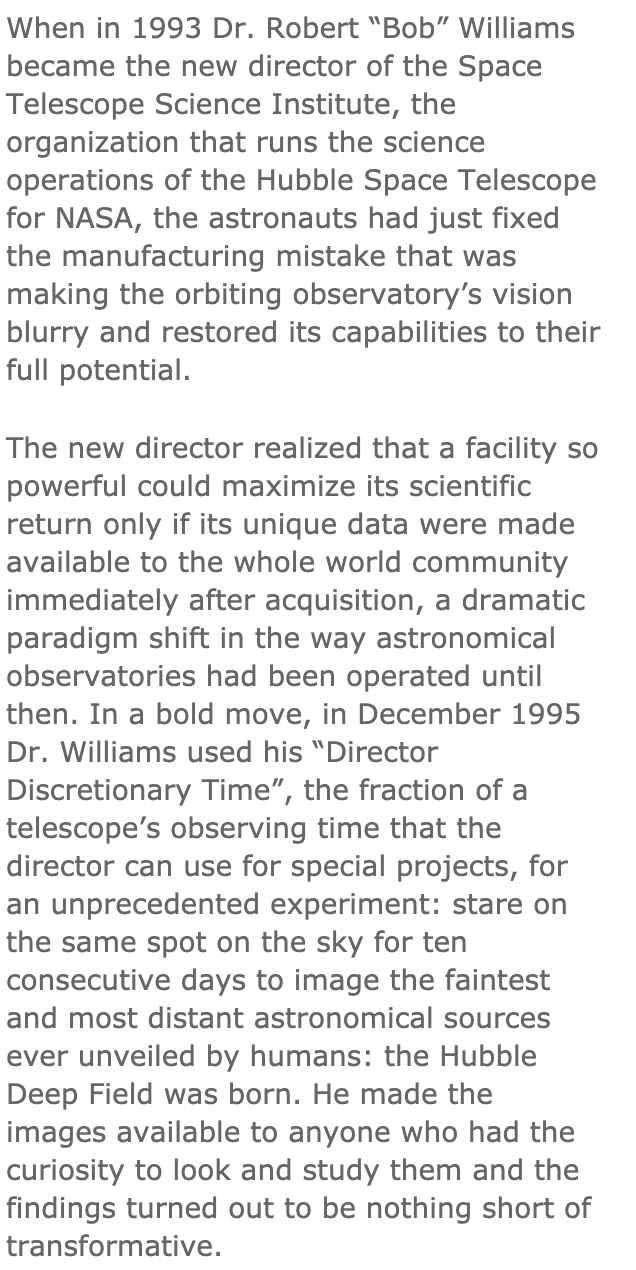

Hubble deep field:

https://www.umass.edu/events/talk-robert-williams-probing

Bob decided that this data was so overwhelmingly powerful, in terms of what it was telling us about the universe, that it was worth it for the community to be able to get their hands on the data immediately. And so the original deep field team processed the data, found the objects in it, and then catalogued each of them, so that every object in the deep field had a description in terms of size, distance, color, brightness and so forth. And that catalogue was available to researchers from the very start. It started a whole new model, where the archive does all the work.

γ- and X-ray

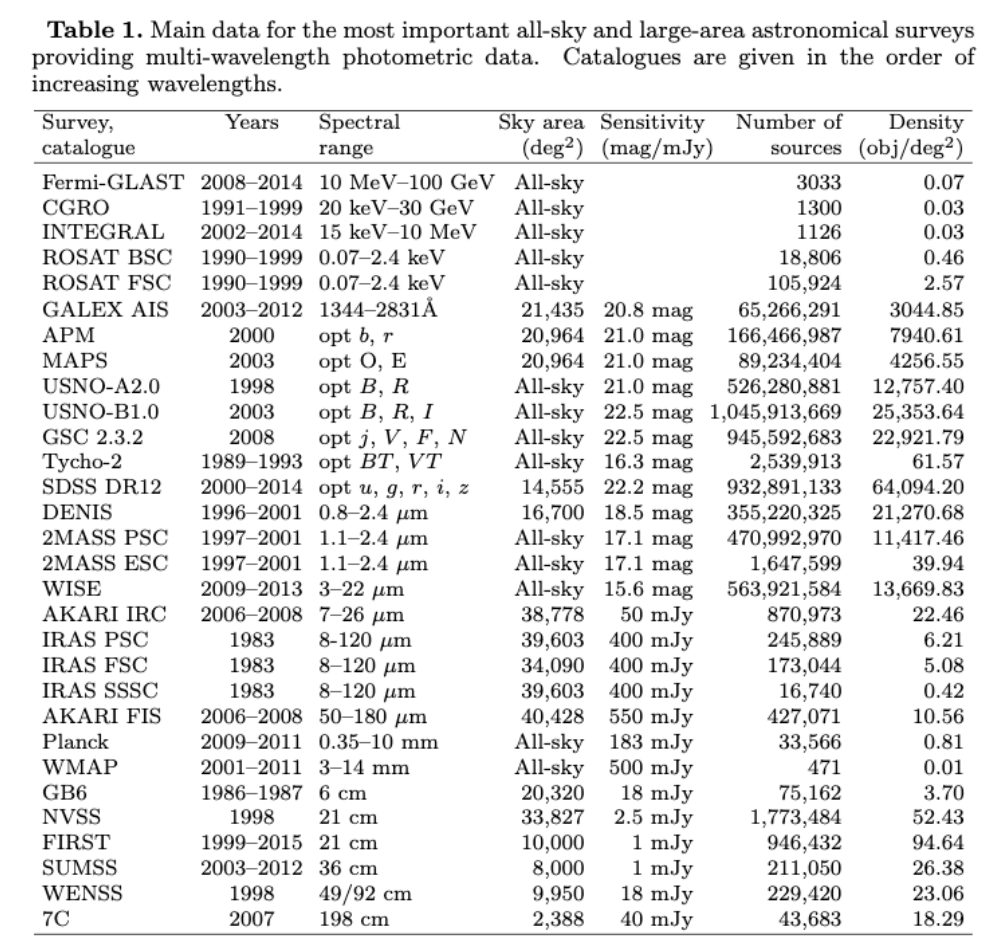

Gaia: big data and telemetry do not get along...


crowd-sourcing

4/6

4-V of Big Data in astronomy

V1: Volume

Number of bytes

Number of pixels

For catalogs: number of rows x number of columns in the data table

V2: Variety

Diverse science return from the same dataset.

Multiwavelength

Multimessenger

Images and spectra

V3: Velocity

Real-time analysis, edge computing, data transfer

V4: Veracity

This V refers to both data quality and availability (added in 2012).

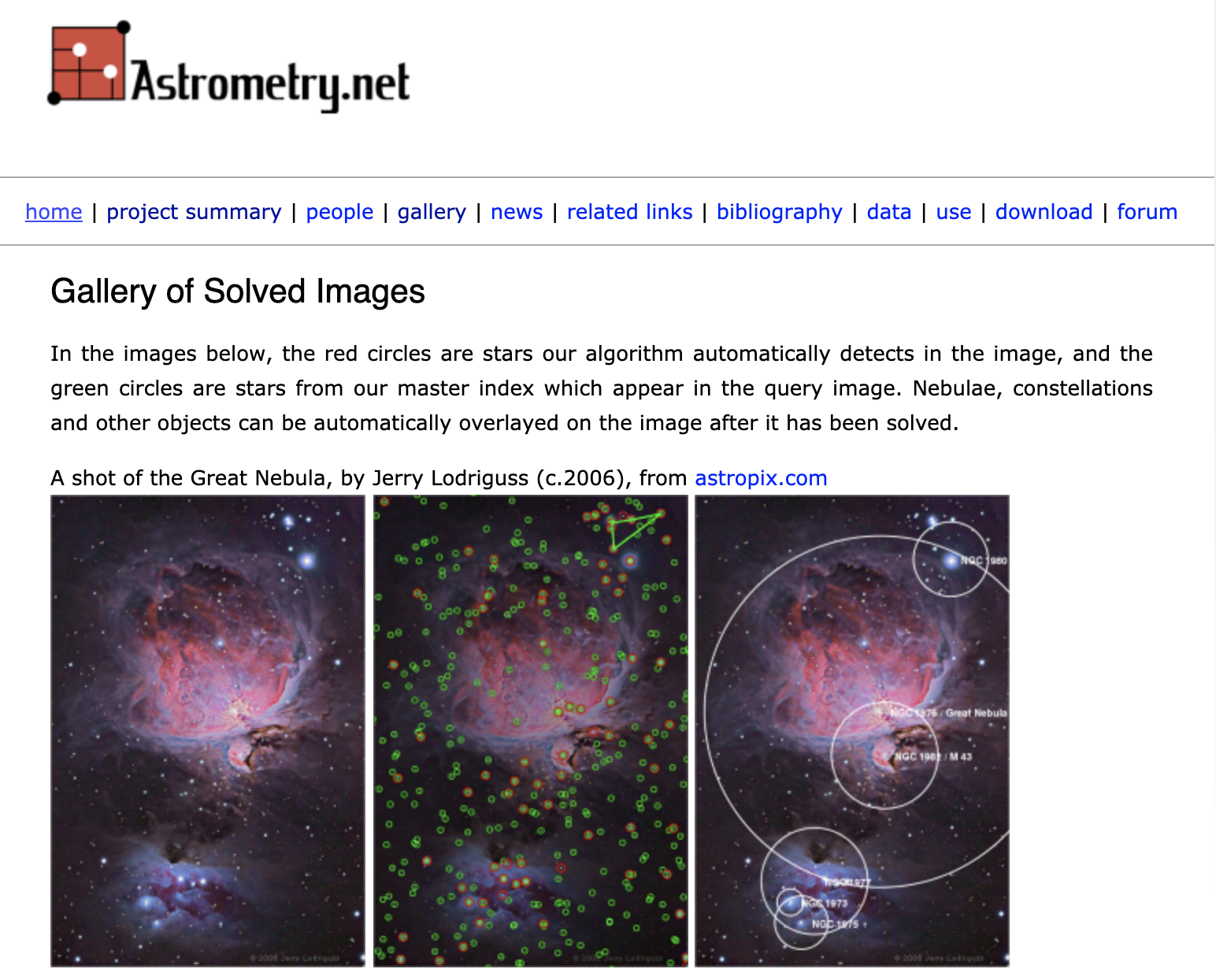

crowd sourcing

LSST: two 3.2 Gpix images per minute for 10 years.

Scaling up the Galaxy Zoo approach, the entire population of the Earth would be insufficient to study the full dataset.
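To get a feel for the raw numbers behind the LSST figure above, here is a quick count of images and pixels. The rate (two 3.2 Gpix images per minute) and the 10-year span come from the slide; the observing hours per night and nights per year are hypothetical round numbers chosen for illustration, not LSST cadence specifications.

```python
EXPOSURES_PER_MINUTE = 2     # from the slide
PIXELS_PER_IMAGE = 3.2e9     # 3.2 Gpix camera
YEARS = 10                   # from the slide

# Hypothetical observing assumptions, not LSST cadence specifications:
HOURS_PER_NIGHT = 10
NIGHTS_PER_YEAR = 300


def survey_totals(hours=HOURS_PER_NIGHT, nights=NIGHTS_PER_YEAR, years=YEARS):
    """Return (number of images, number of pixels) collected over the survey."""
    n_images = EXPOSURES_PER_MINUTE * 60 * hours * nights * years
    return n_images, n_images * PIXELS_PER_IMAGE


n_images, n_pixels = survey_totals()
print(f"{n_images:.2e} images, {n_pixels:.2e} pixels")
```

Under these assumptions that is a few million full-camera images and of order 10^16 pixels, each image containing many thousands of sources that would each need classifications.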

crowd sourcing

An all-sky search for continuous-wave signals in the frequency range 50-1190 Hz, with frequency derivatives from $-2 \times 10^{-9}$ Hz/s to $1.1 \times 10^{-10}$ Hz/s, using data collected in 2005-2007 during the fifth LIGO science run.

Hundreds of thousands of host machines, that contributed a total of approximately 25000 CPU

crowd sourcing

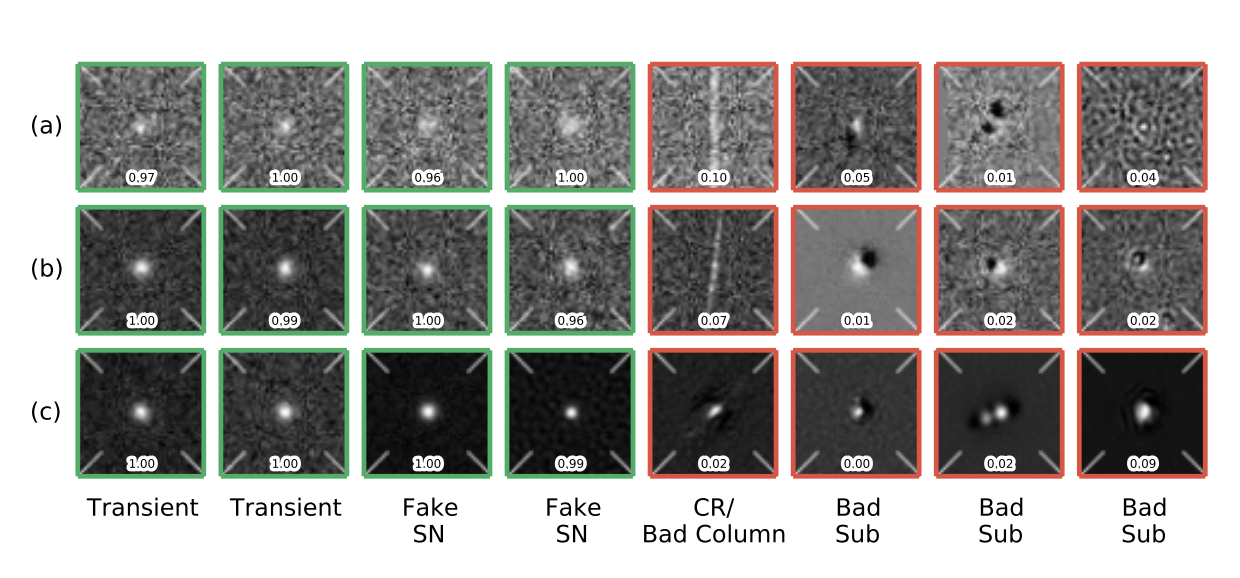

But some of the most crucial problems of veracity are ... boring

Goldstein et al 2015 https://arxiv.org/pdf/1504.02936.pdf

time-domain astronomy BD problems

5/6

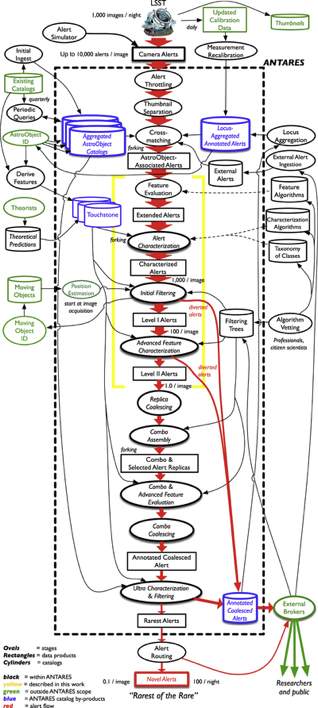

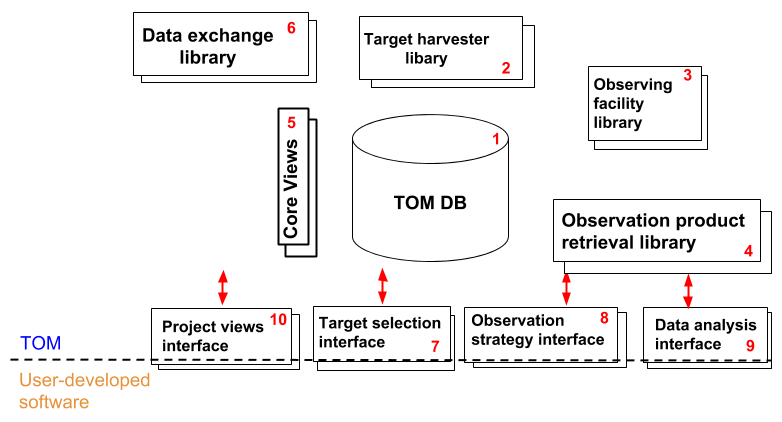

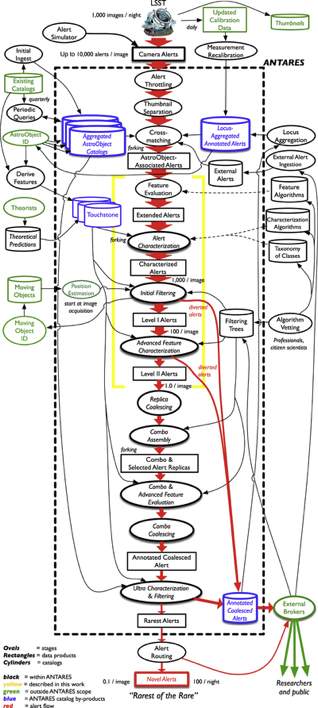

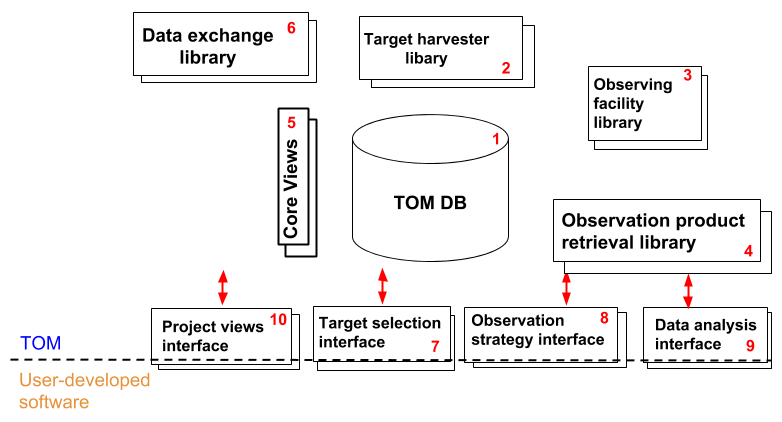

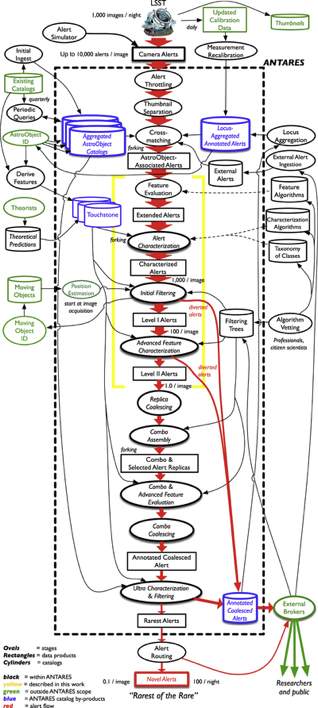

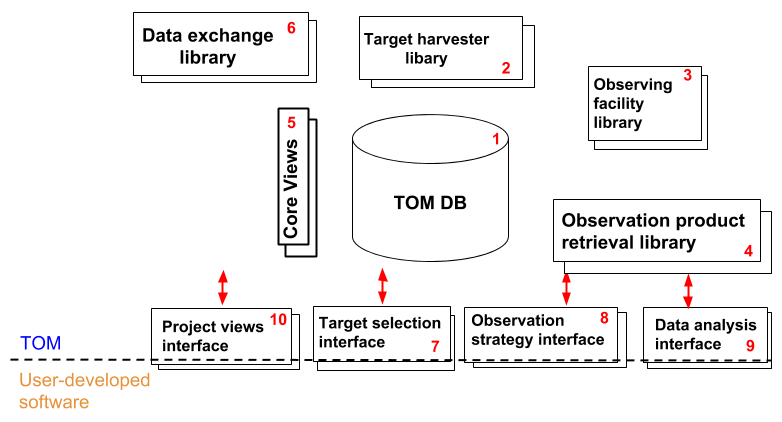

Astronomy’s Discovery Chain

Discovery Engine (10M alerts/night) → Community Brokers → target and observation managers (TOMs)

the astronomy discovery chain

Big data and time domain astrophysics
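The 10M alerts/night figure translates into a sustained ingestion rate that brokers must handle, plus a filtering step that reduces the stream to what a given science case cares about. A minimal sketch: the 10-hour night is an assumption, and `toy_filter` with its `mag` and `real_score` keys is a hypothetical illustration, not any broker's API or a real alert schema.

```python
ALERTS_PER_NIGHT = 10_000_000  # from the slide
NIGHT_HOURS = 10               # assumption: length of an observing night


def mean_alert_rate(alerts_per_night=ALERTS_PER_NIGHT, night_hours=NIGHT_HOURS):
    """Average number of alerts per second a broker must ingest over one night."""
    return alerts_per_night / (night_hours * 3600)


def toy_filter(alerts, max_mag=19.0, min_real_score=0.8):
    """Hypothetical broker-style filter: keep bright alerts that look real.
    The keys 'mag' and 'real_score' are illustrative, not a real alert schema."""
    return [a for a in alerts if a["mag"] <= max_mag and a["real_score"] >= min_real_score]


print(f"{mean_alert_rate():.0f} alerts/s on average")
stream = [
    {"id": 1, "mag": 18.2, "real_score": 0.95},
    {"id": 2, "mag": 21.0, "real_score": 0.99},
    {"id": 3, "mag": 17.5, "real_score": 0.30},
]
print([a["id"] for a in toy_filter(stream)])  # -> [1]
```

Under the 10-hour assumption the average is close to 280 alerts per second, all night, which is why the filtering and classification happen in automated brokers rather than by eye.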

Discovery


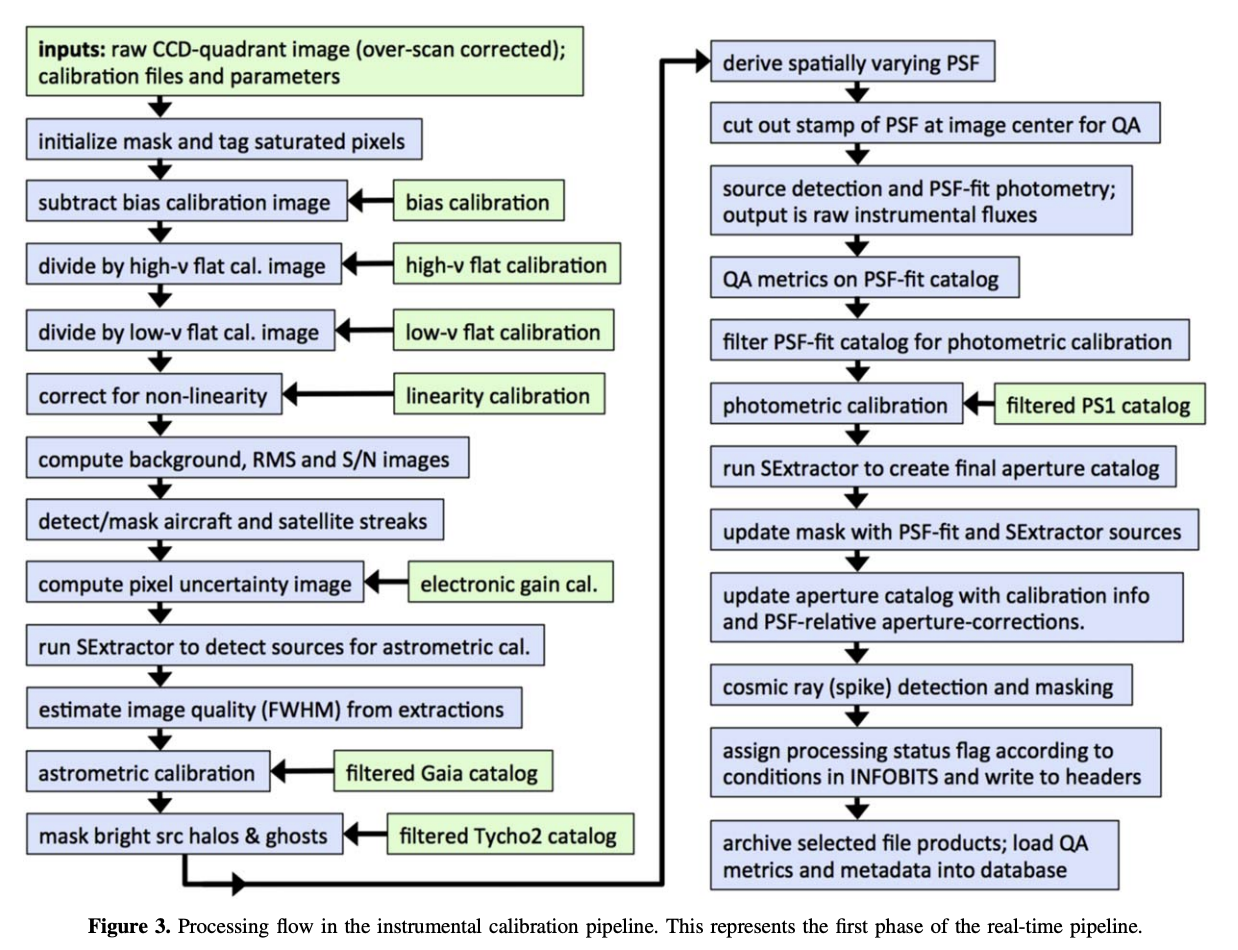

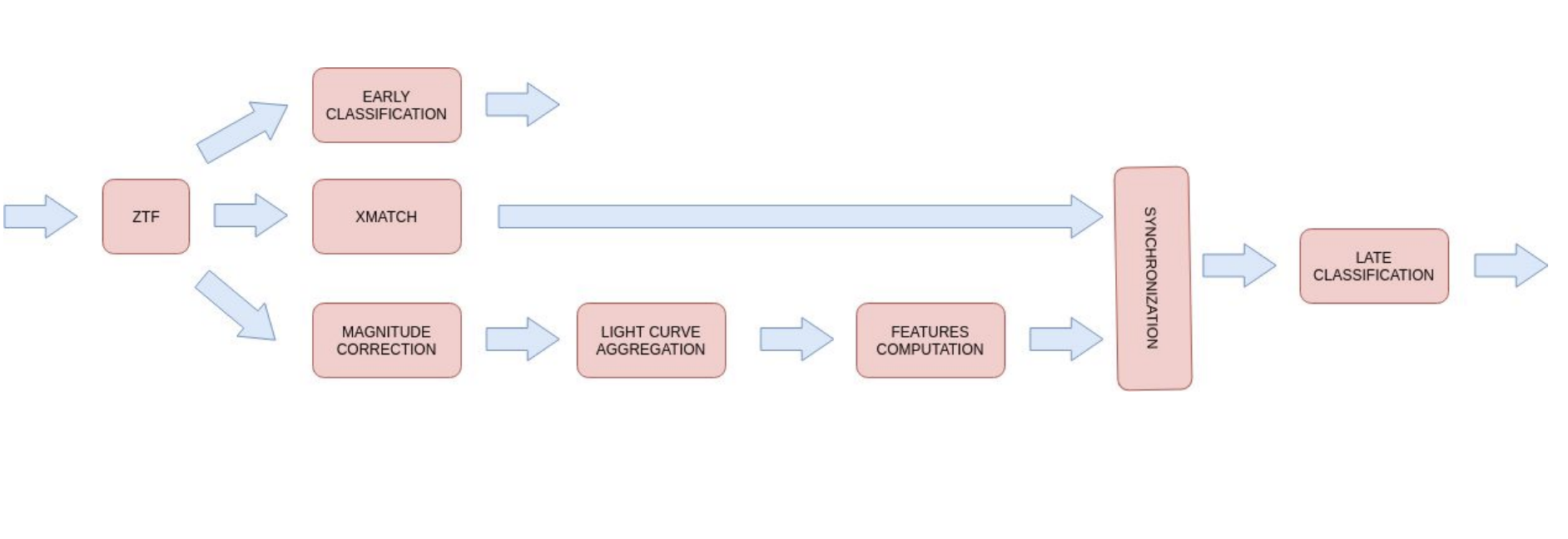

Big data and time domain astrophysics

ZTF realtime pipeline


Discovery

Big data and time domain astrophysics

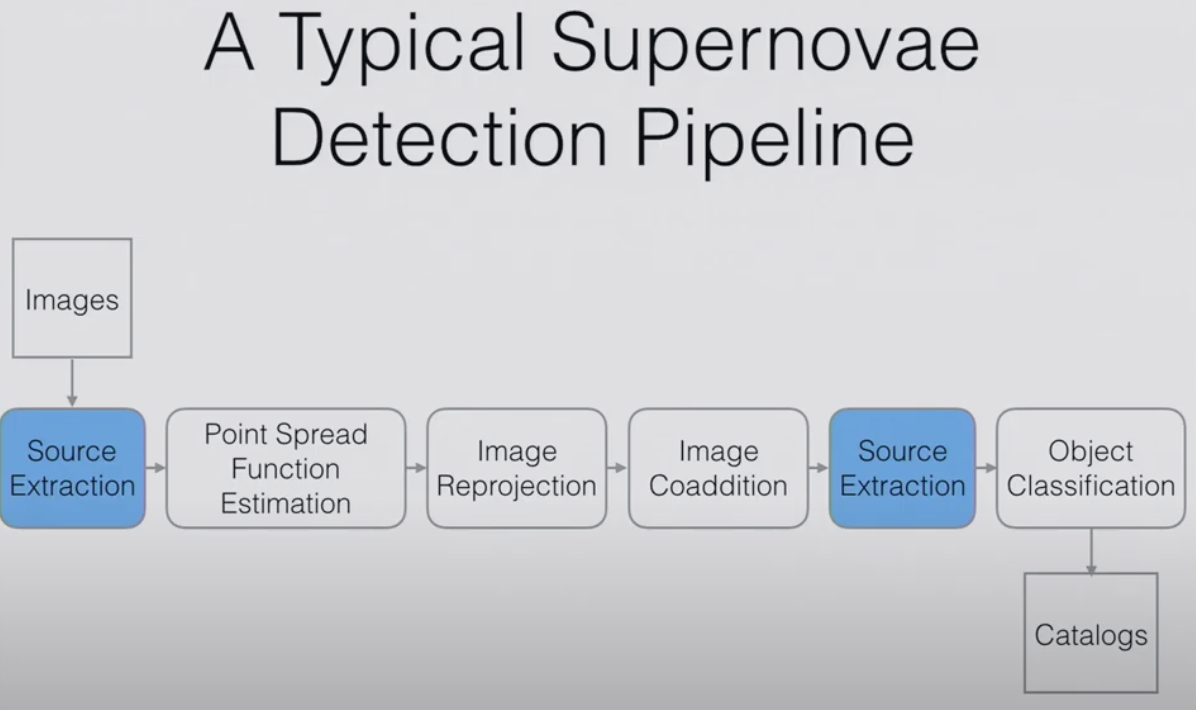

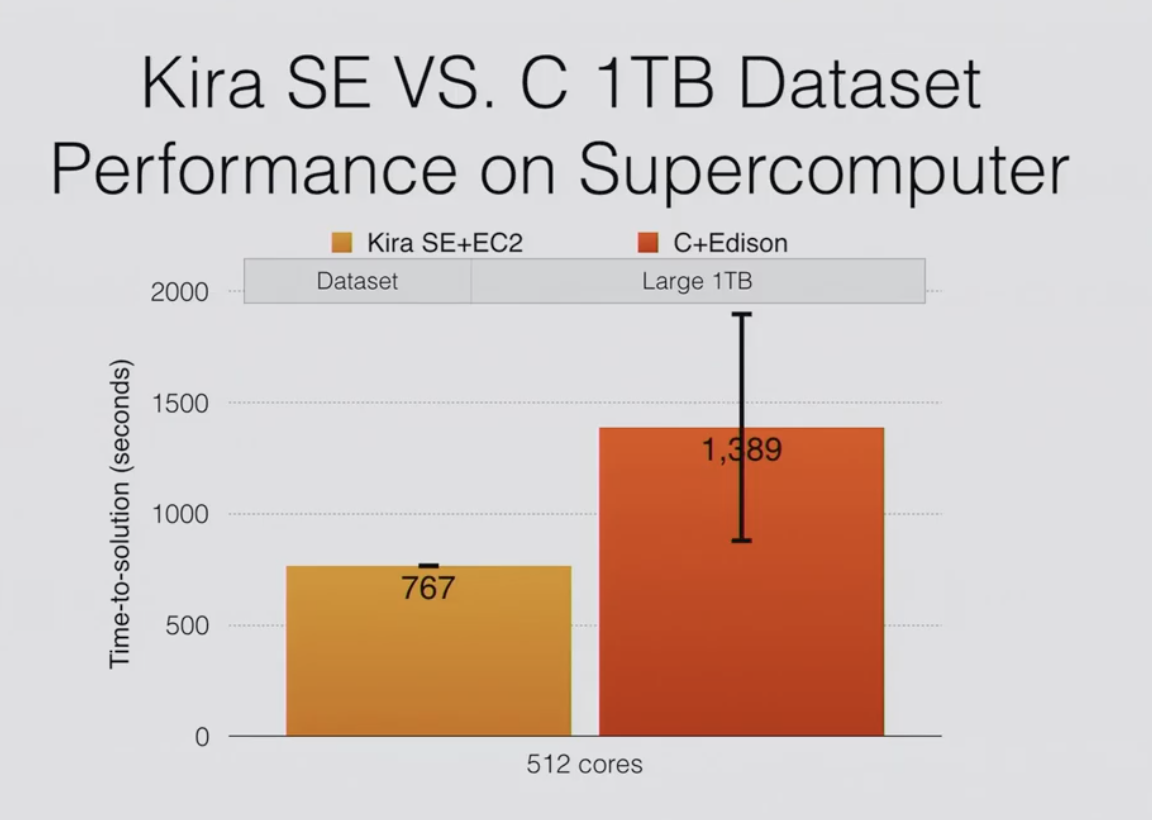

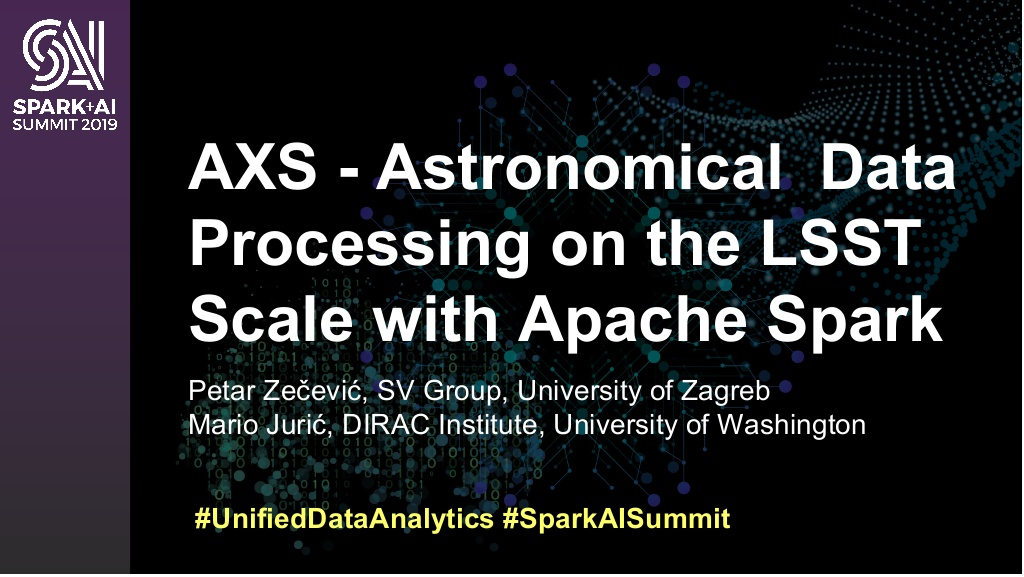

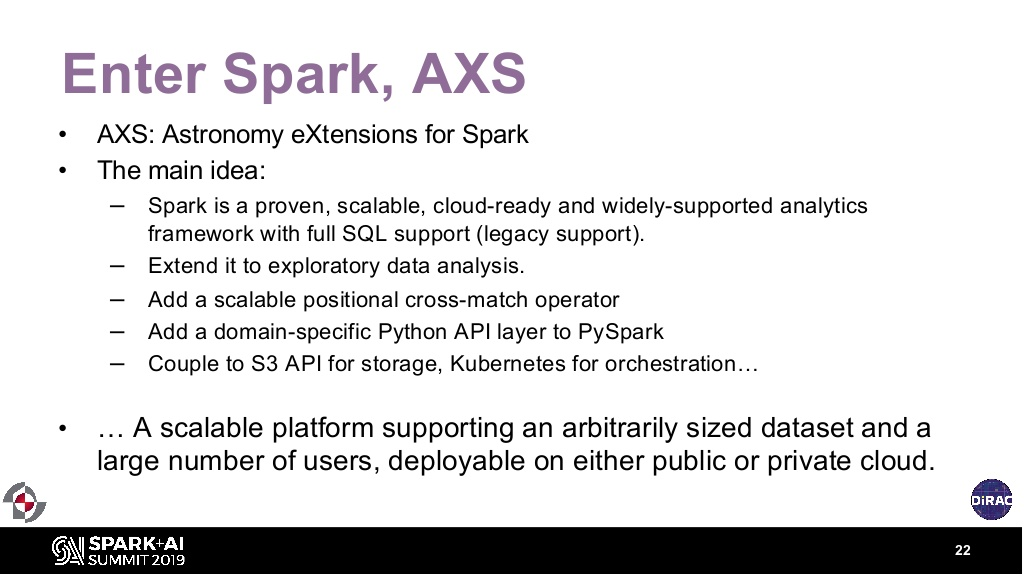

Kira: Processing Astronomy Imagery Using Big Data Technology

BIDS Spring 2016 Data Science Faire | May 3, 2016 UC Berkeley Zhao Zhang

Particularly because the data (IO) intensive applications are

Demonstrated computational gain by using Big Data platforms


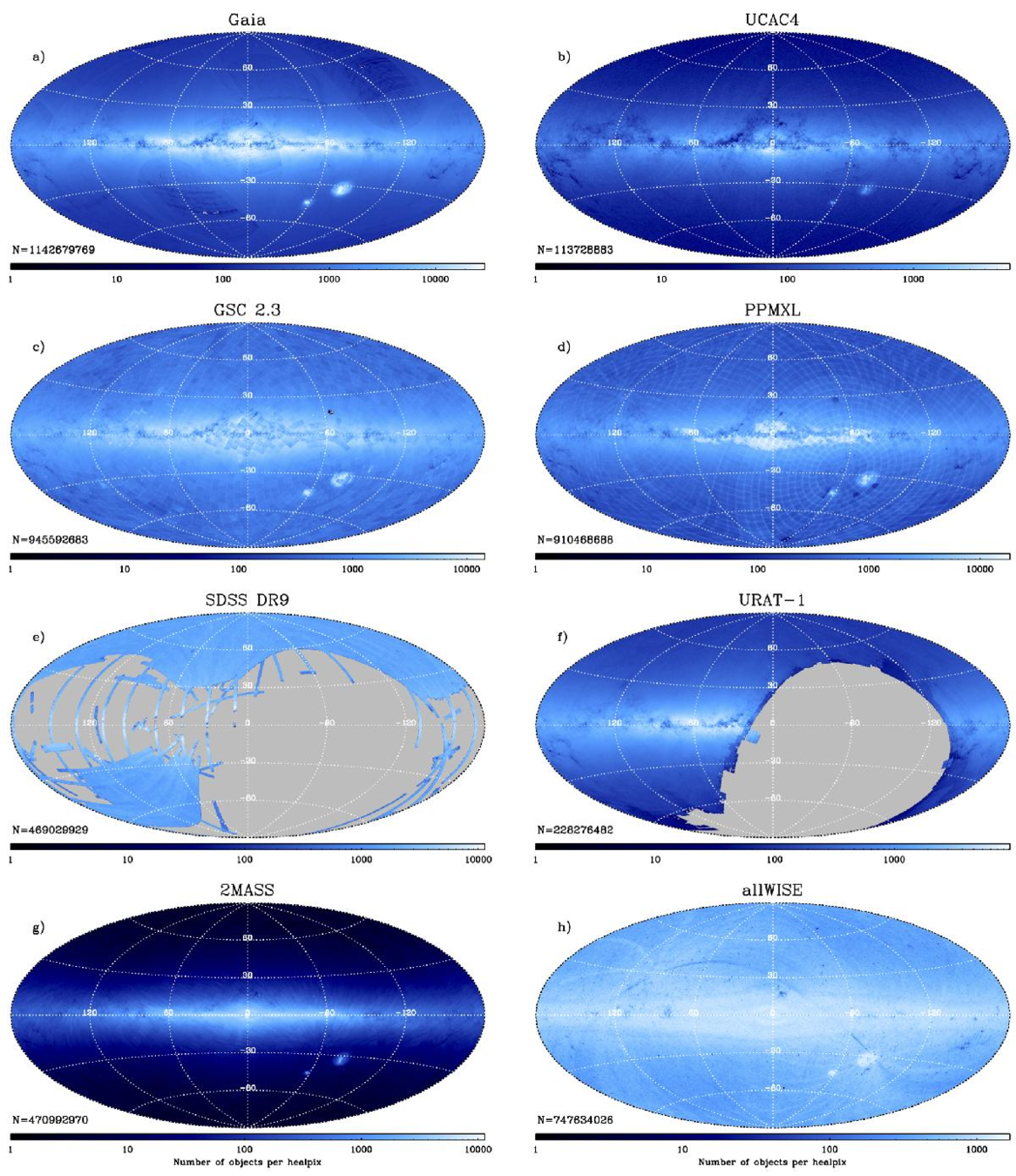

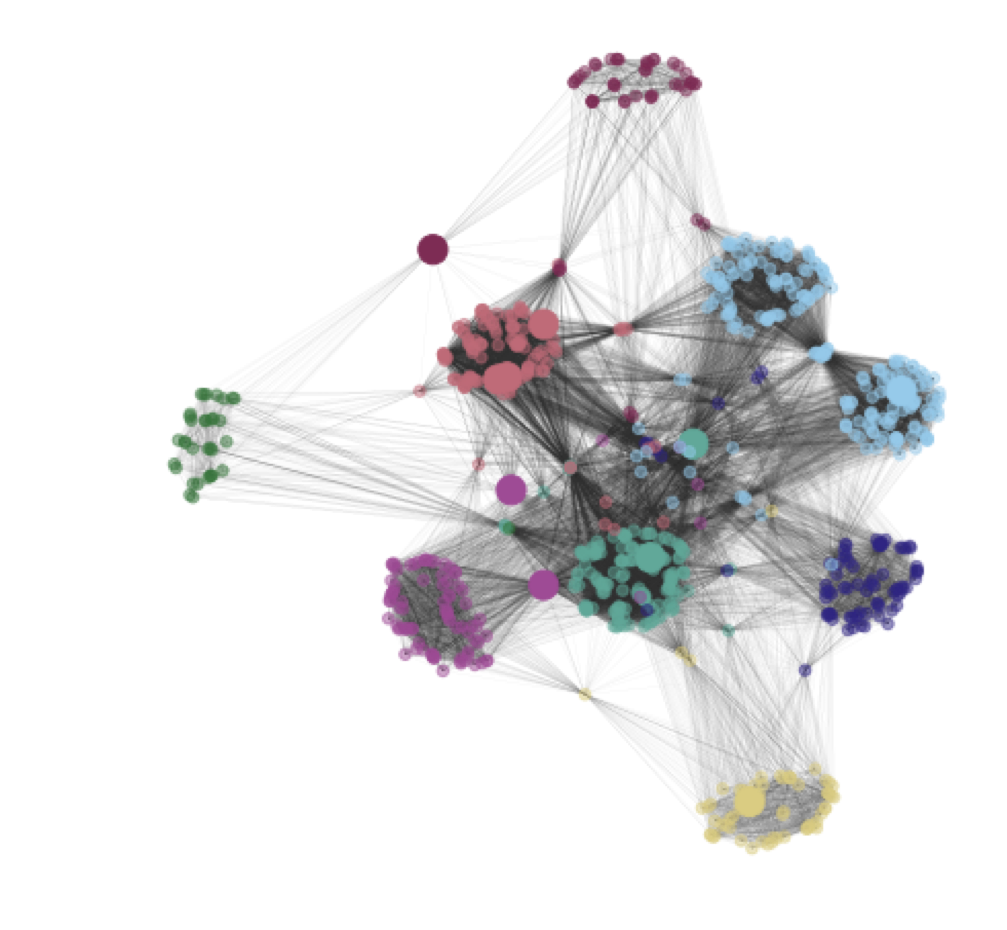

Cross-matching

Cross-matching catalogs is vital for physical inference. The complexity of the problem scales roughly with the square of the number of data sources.

Big data and time domain astrophysics
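The quadratic scaling of naive cross-matching, and one standard way around it, can be sketched in NumPy. The brute-force version compares every pair of sources (O(N x M)); the second version sorts one catalog by declination and only compares sources inside a declination window, which is valid because the angular separation is never smaller than the declination difference. Function names and the 1-arcsec radius in the example are illustrative; production tools typically use spatial indices (trees, HEALPix, zones) built on the same idea.

```python
import numpy as np


def ang_sep_arcsec(ra1, dec1, ra2, dec2):
    """Great-circle separation (haversine formula); inputs in degrees, output in arcsec."""
    ra1, dec1, ra2, dec2 = map(np.radians, (ra1, dec1, ra2, dec2))
    h = (np.sin((dec2 - dec1) / 2) ** 2
         + np.cos(dec1) * np.cos(dec2) * np.sin((ra2 - ra1) / 2) ** 2)
    return np.degrees(2 * np.arcsin(np.sqrt(np.clip(h, 0.0, 1.0)))) * 3600.0


def crossmatch_brute(ra1, dec1, ra2, dec2, radius_arcsec):
    """O(N*M): compare every source of catalog 1 with every source of catalog 2."""
    sep = ang_sep_arcsec(ra1[:, None], dec1[:, None], ra2[None, :], dec2[None, :])
    i, j = np.nonzero(sep <= radius_arcsec)
    return set(zip(i.tolist(), j.tolist()))


def crossmatch_dec_sorted(ra1, dec1, ra2, dec2, radius_arcsec):
    """Sort catalog 2 by declination once (O(M log M)). The angular separation is
    never smaller than |Delta Dec|, so each source only needs to be compared with
    the catalog-2 sources inside a +/- radius declination window."""
    r_deg = radius_arcsec / 3600.0
    order = np.argsort(dec2)
    dec2_sorted = dec2[order]
    matches = set()
    for i in range(len(ra1)):
        lo = np.searchsorted(dec2_sorted, dec1[i] - r_deg, side="left")
        hi = np.searchsorted(dec2_sorted, dec1[i] + r_deg, side="right")
        cand = order[lo:hi]
        sep = ang_sep_arcsec(ra1[i], dec1[i], ra2[cand], dec2[cand])
        matches.update((i, int(j)) for j in cand[sep <= radius_arcsec])
    return matches
```

Both functions return the same set of `(index_in_catalog_1, index_in_catalog_2)` pairs, but the sorted version never builds the full N x M separation matrix, so memory and time grow roughly as N log N for catalogs of similar size and density.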


augmentation and classification

Big data and time domain astrophysics

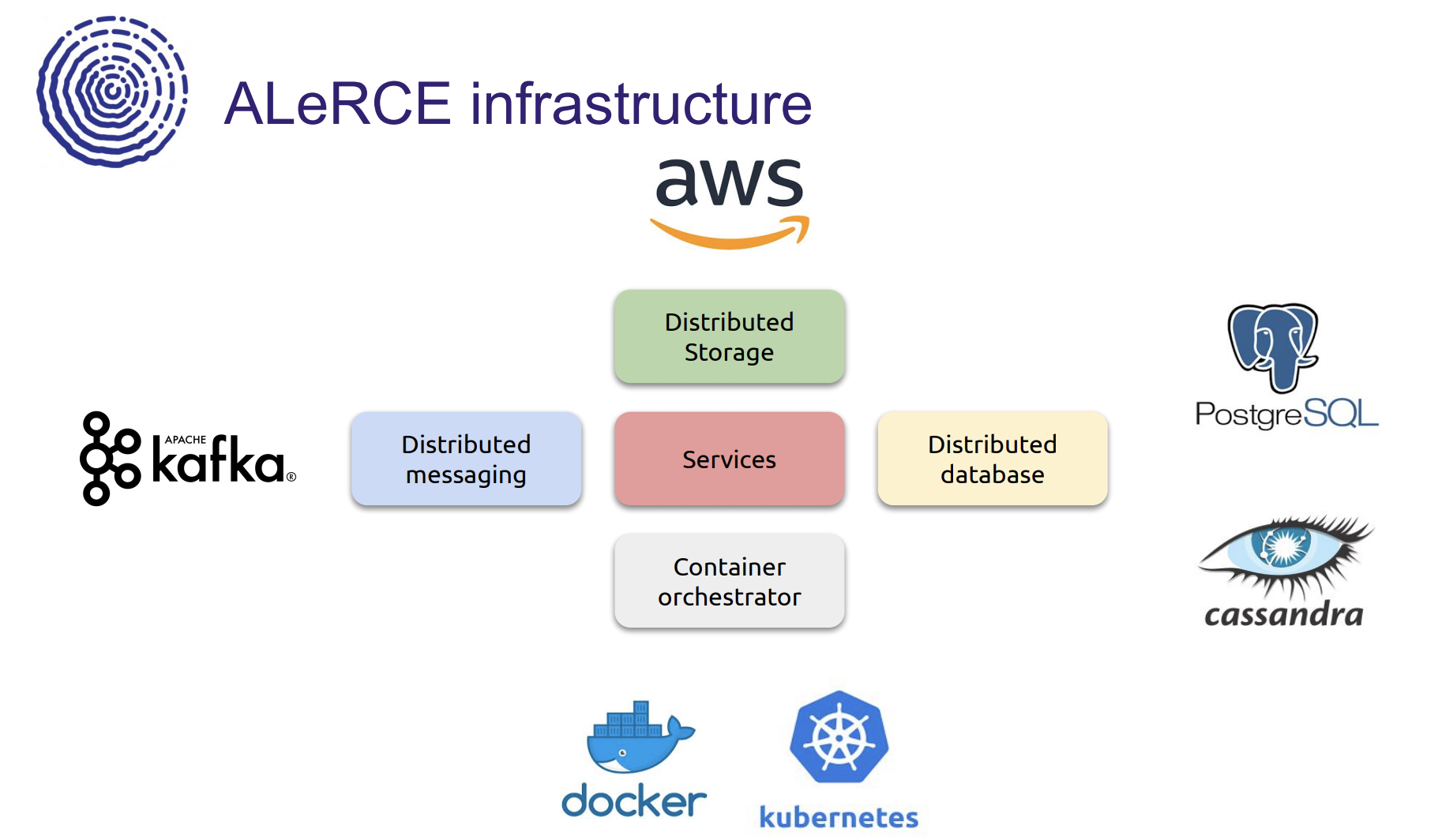

Alert brokers


platforms

6/6

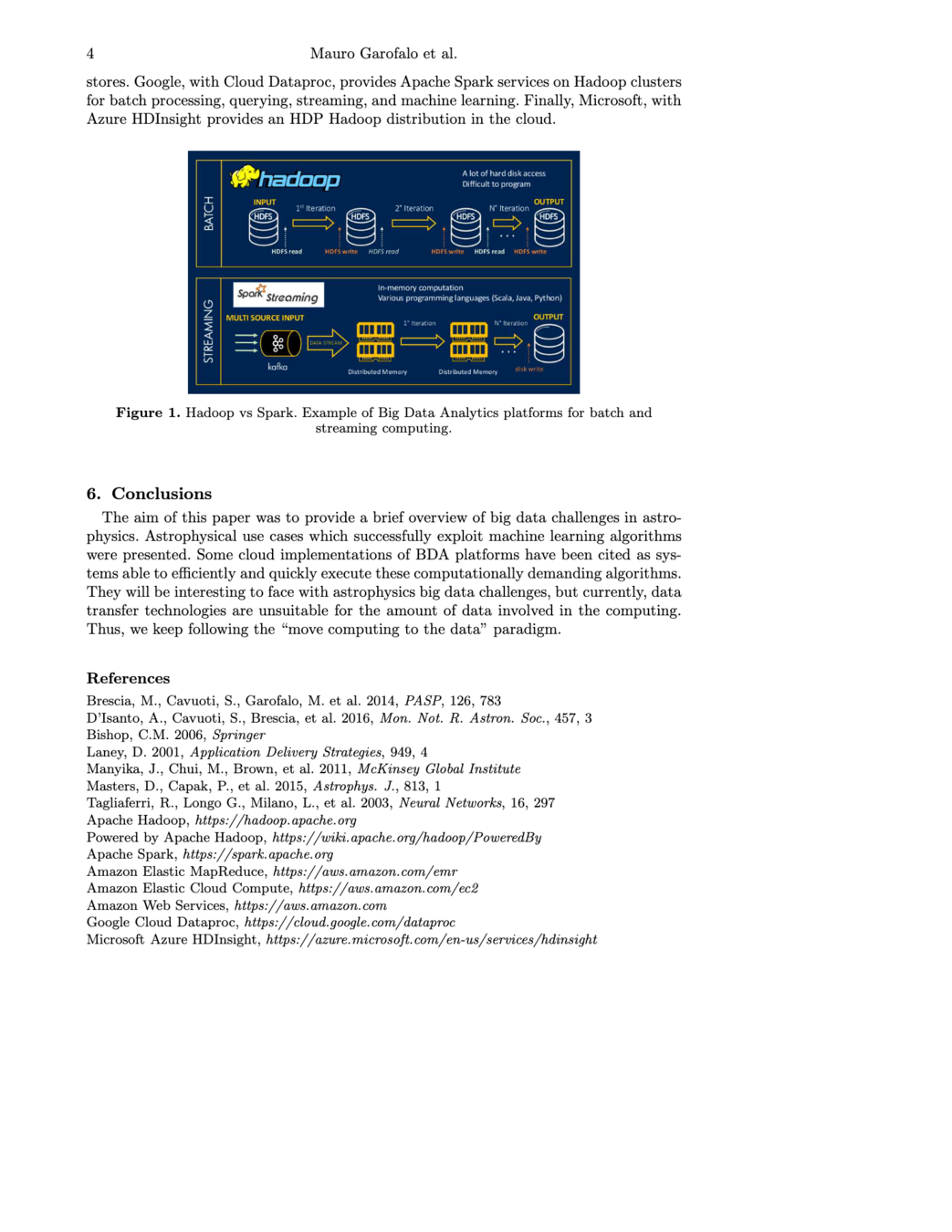

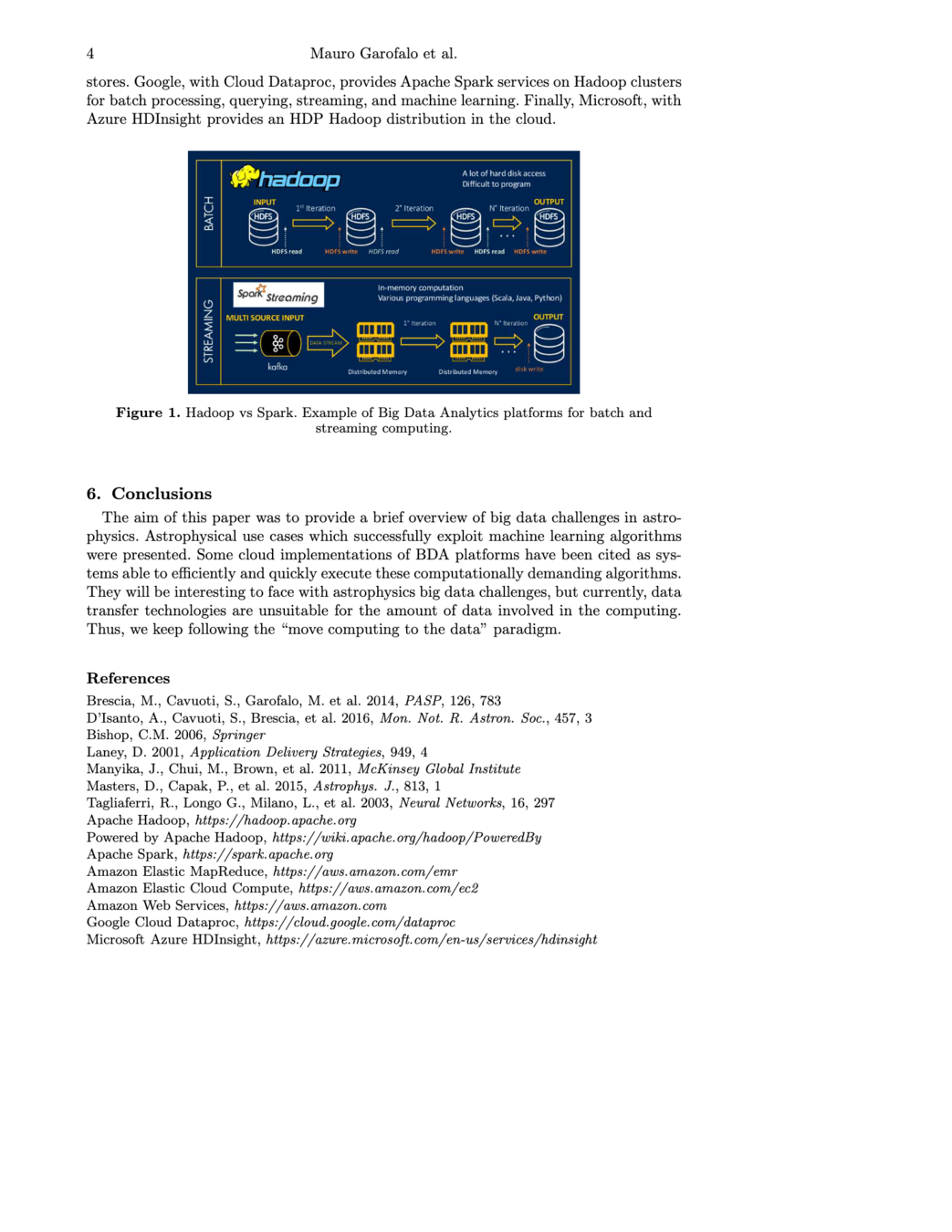

data platforms


https://www.youtube.com/watch?v=4irmRLrNGeE&t=151s

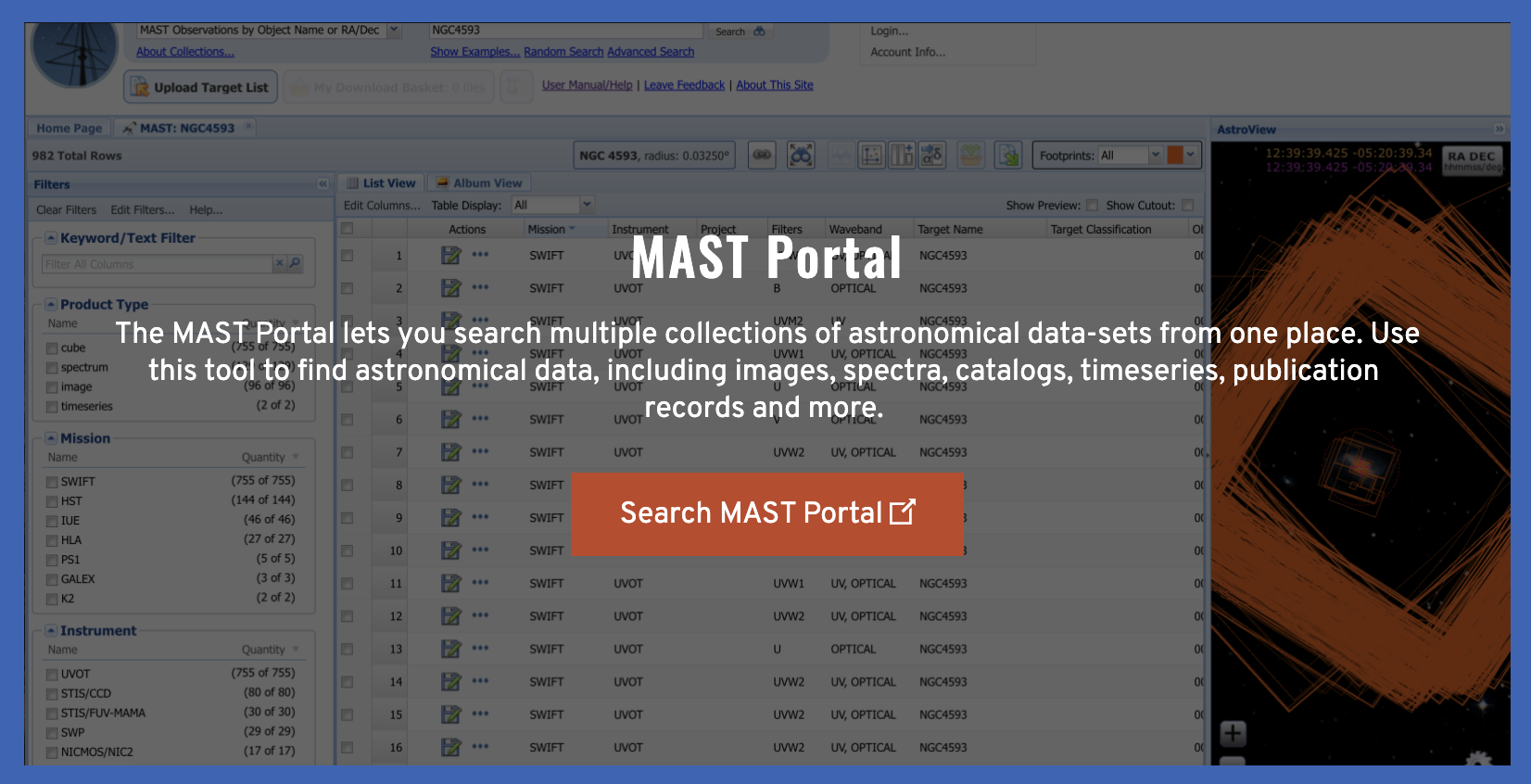

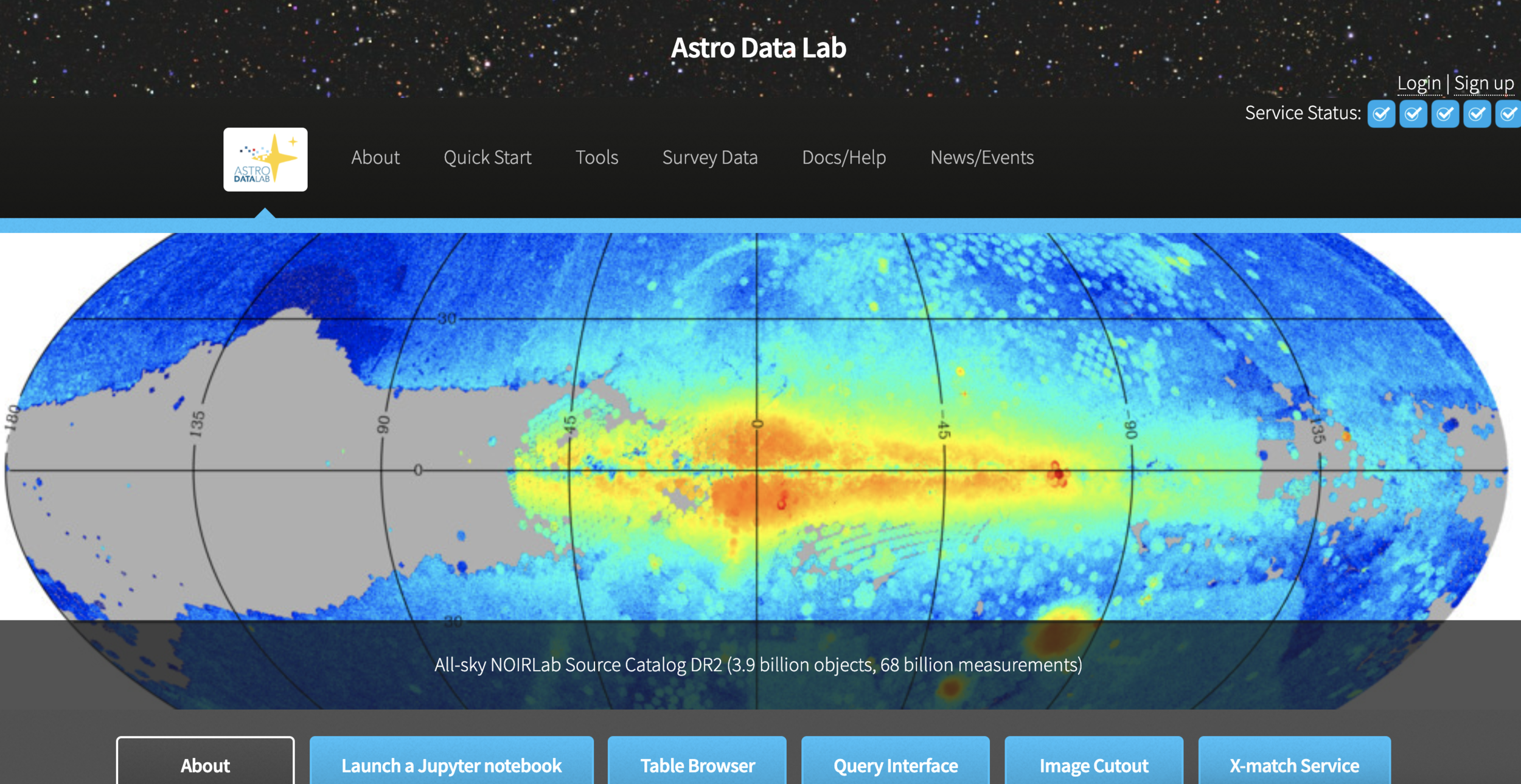

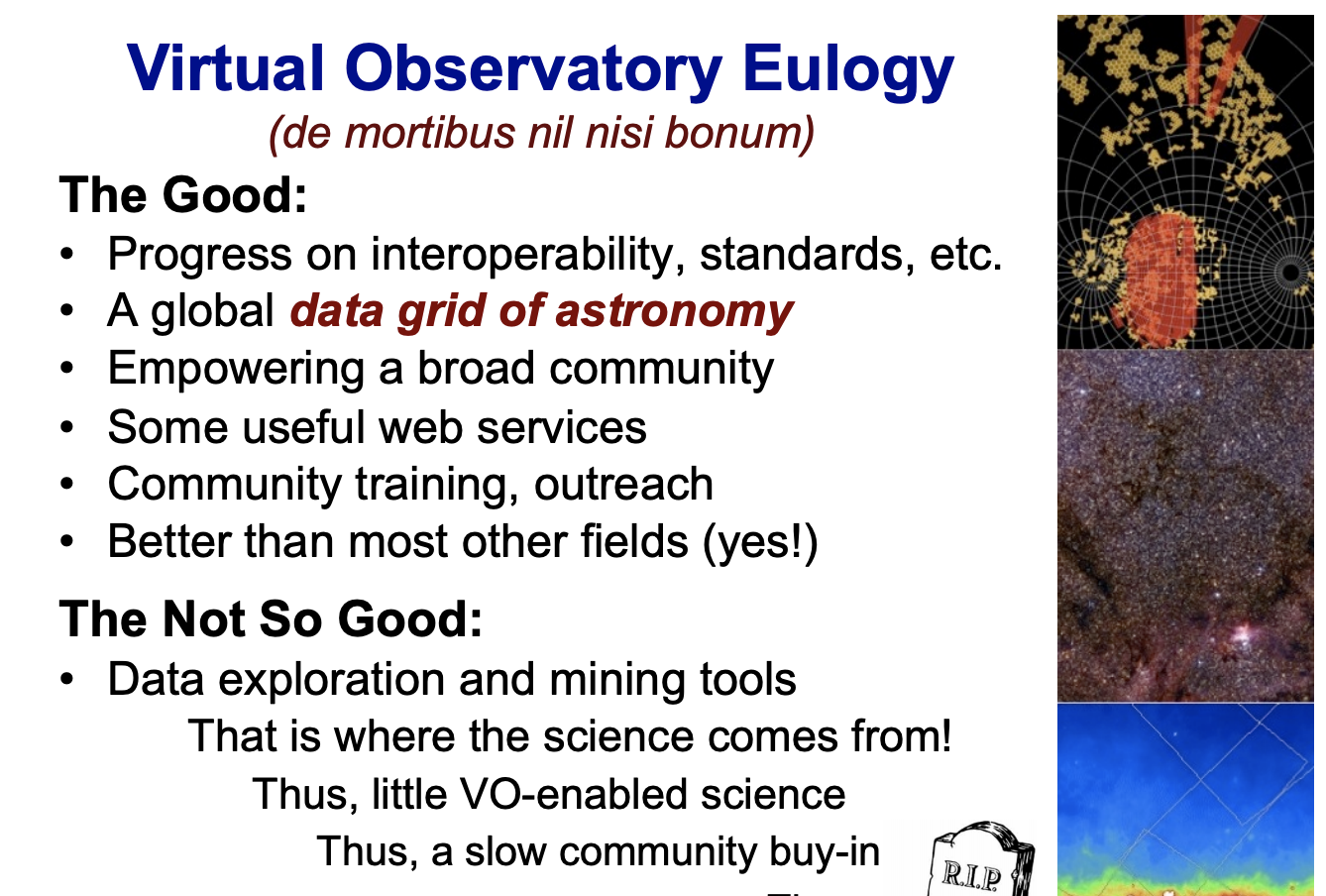

VO

Provide and federate content (data, metadata) services, standards, and analysis/compute services – Develop and provide data exploration and discovery tools

Djorgovski (Caltech) 2017

Are VOs successful in other fields...

Google Earth Engine

OPINION:

what astro did right about BD

FITS files: universal data storage

Strong pressure on making data public

Strong tradition of collaboration

OPINION:

what astro did wrong about BD

Still a lack of trust in cloud services

sparse collaboration among the institutes developing solutions, resulting in a ton of platforms that work differently

slow integration of methods

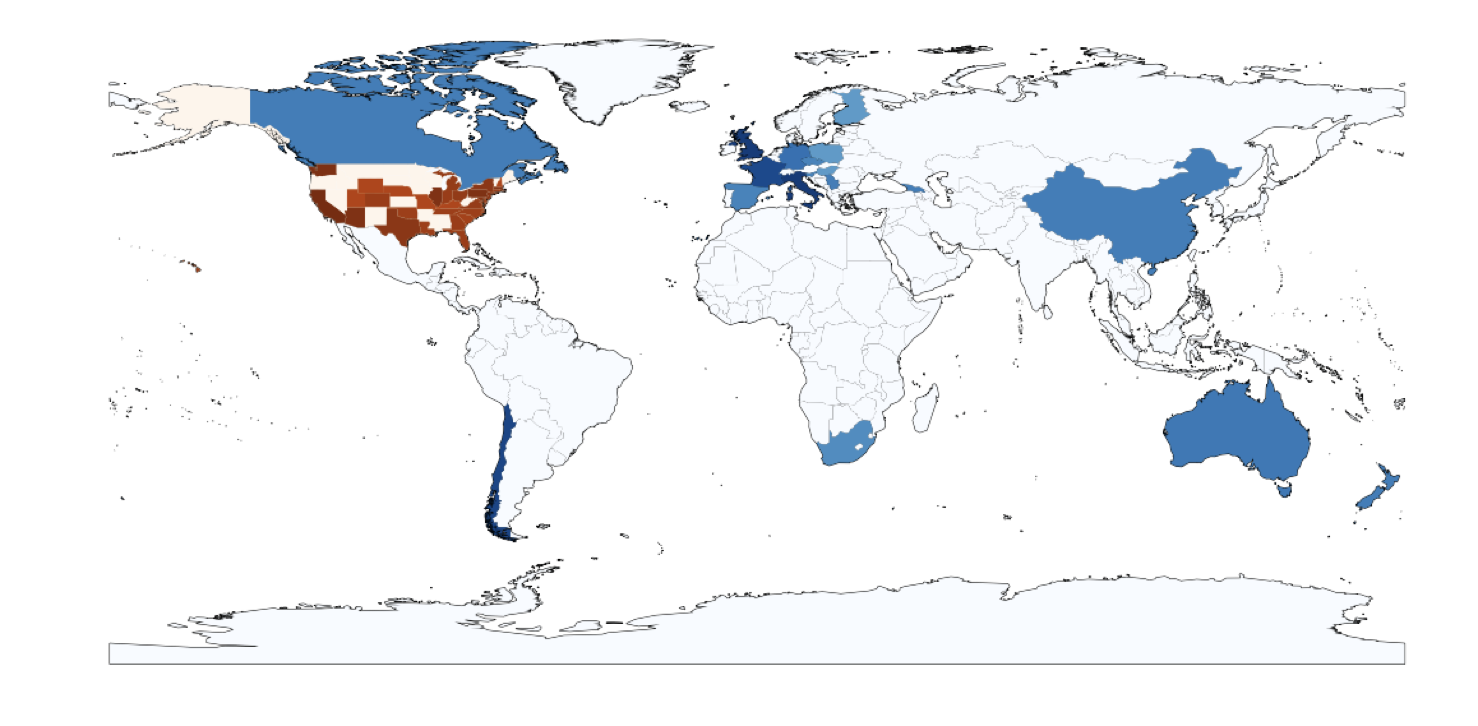

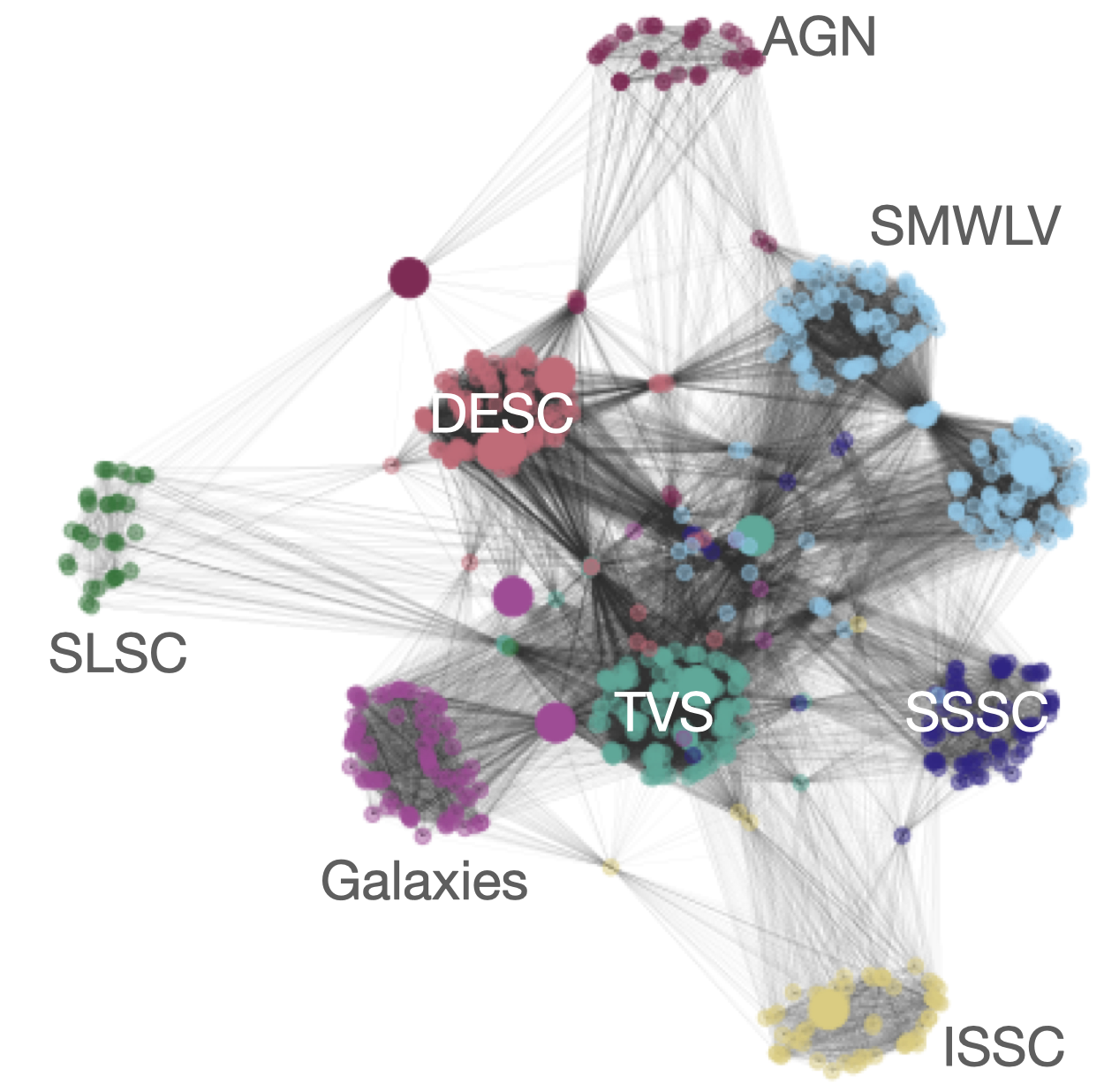

Shameless plug: Rubin LSST Science Collaborations

No federally funded LSST science

No science is reserved for any one group

1500+ members

Rubin LSST Science Collaborations

federica bianco fbianco@udel.edu


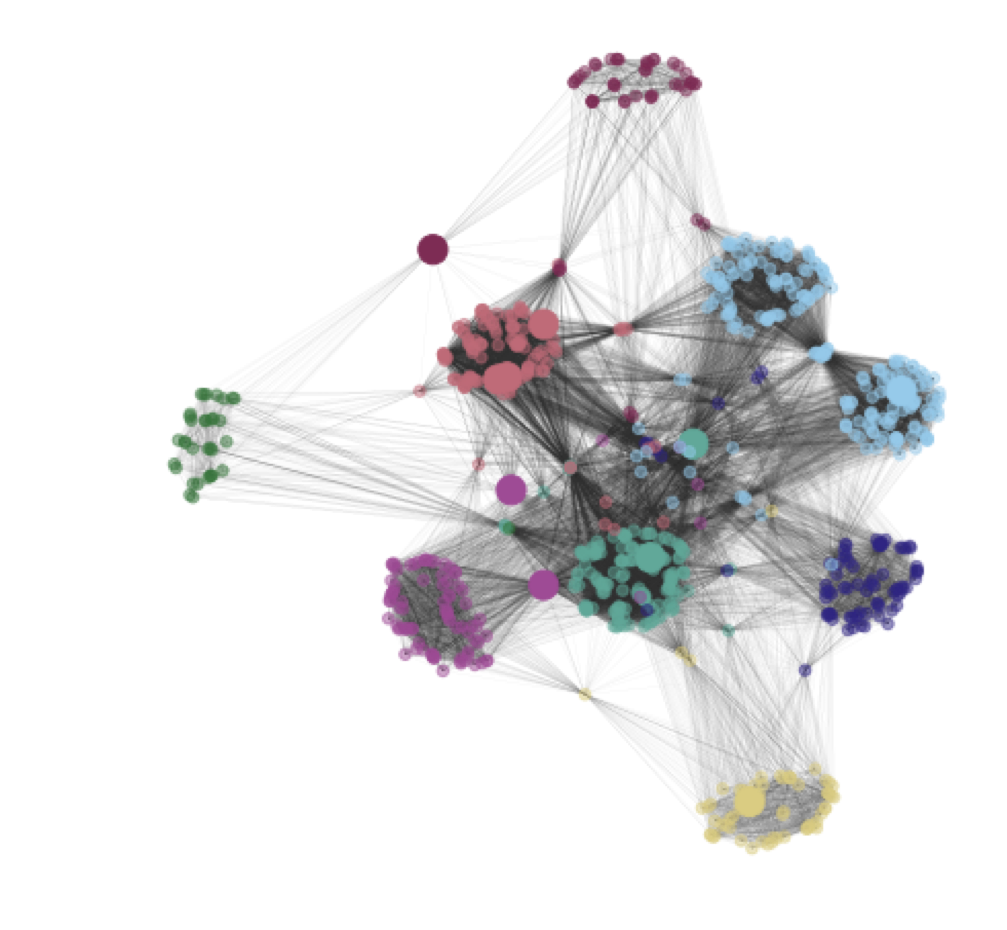

Science Collaborations

Rubin Observatory LSST SCs


Science Collaborations

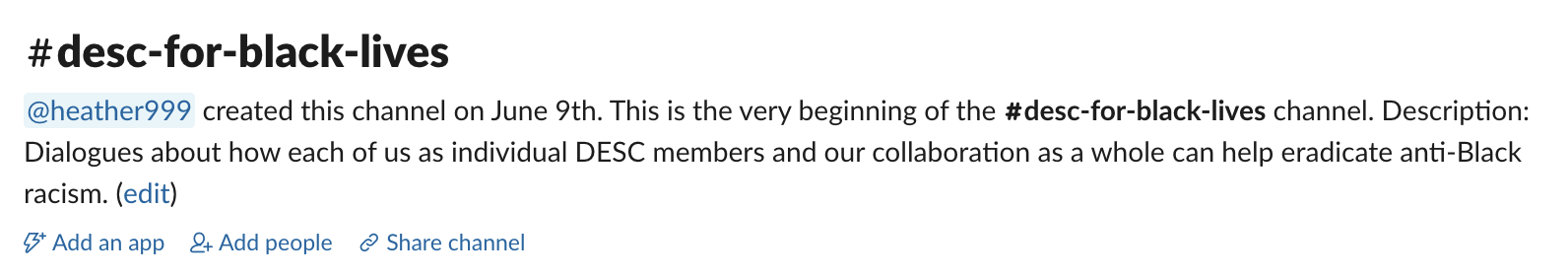

Rubin LSST Science Collaborations

We aspire to be an inclusive, equitable, and ultimately just group, and in the wake of the recent event that exposed inequity and racism in our society, we are working with renewed vigor to turn this aspiration into action.

Diversity, Equity, and Inclusion council of the SCs

in the works

Thank you!


please email me if you have questions! fbianco@udel.edu