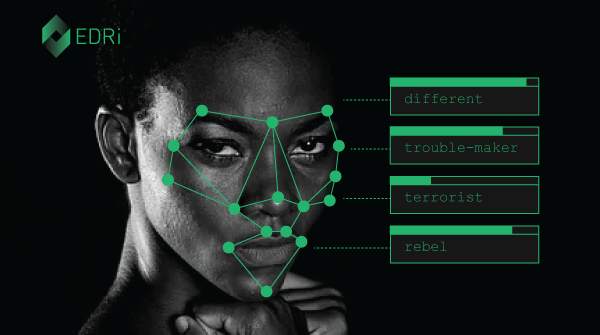

what's behind facial recognition: technology and bias

University of Delaware

Department of Physics and Astronomy

federica bianco

Biden School of Public Policy and Administration

Data Science Institute

who needs to learn

Educate Policy makers

without understanding how ML works policy makers do not have the instruments to regulate it

Education for the people

but does this put the burden on the victims?

Educating DS practitioners in communicating DS concepts

this puts the burden back on the practitioners

Datascience Education to Help and Protect us

Jack Dorsey (Twitter CEO) at TED 2019

boring the TED audience with details

Zuckerberg (Facebook CEO) deflecting questions at senate hearing

#UDCSS2020

@fedhere

Data Science is a black box

Models are neutral, data is biased

two dangerous data-ethics myths


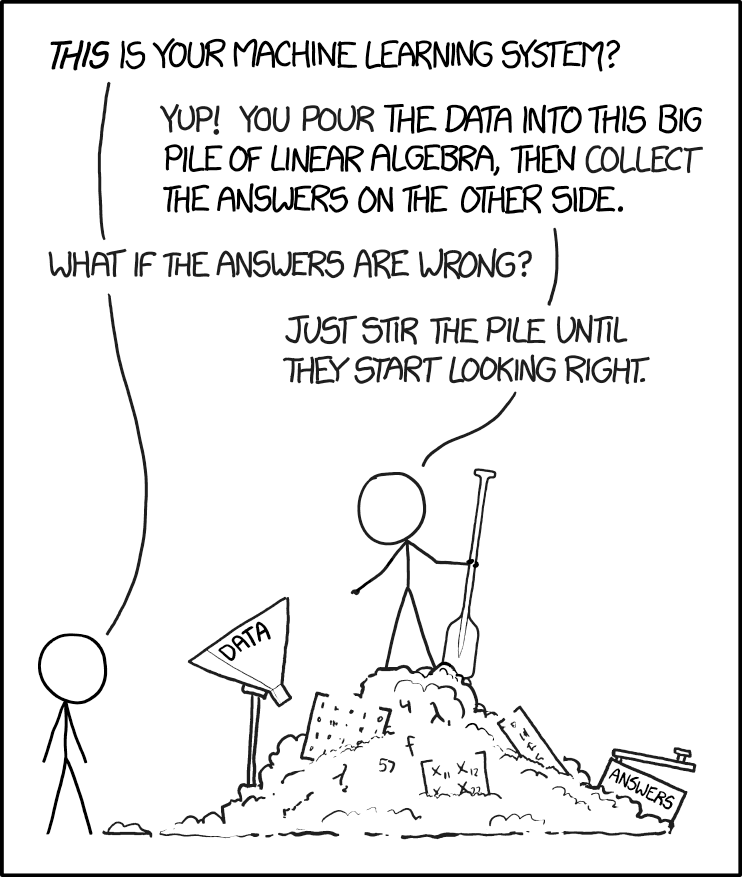

Data Science is a black box

machine learning models are

Epistemic transparency

Illustration by Hanne Morstad

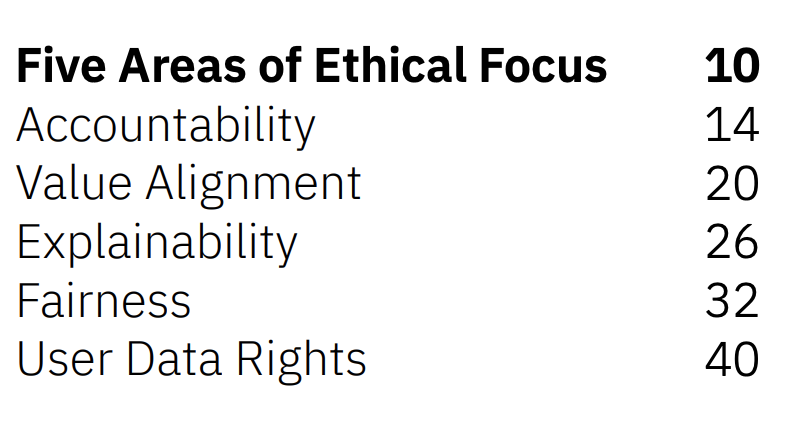

Accountability: who is responsible if an algorithm does harm

[Machine Learning is the] field of study that gives computers the ability to learn without being explicitly programmed.

Arthur Samuel, 1959

what is ML?

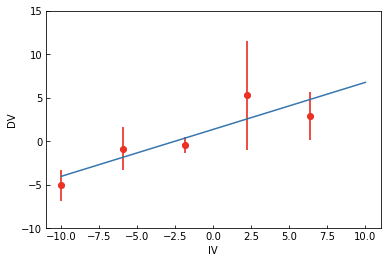

a model is a low dimensional representation of a higher dimensionality dataset

what is a "model" in ML?

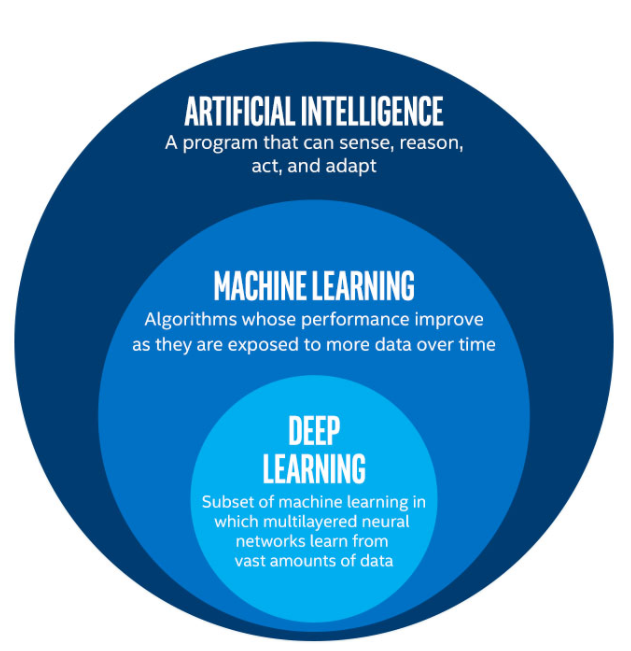

what is machine learning?


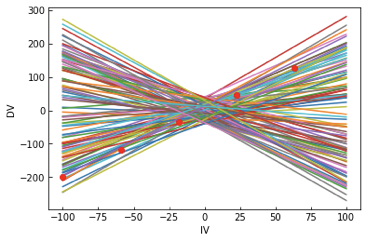

model parameters: slope, intercept

data

mathematical formula

what is machine learning?

ML: study, development, and application of any model with parameters learned from the data

Objective Function


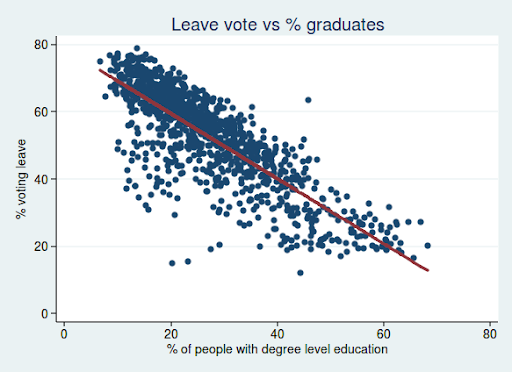

which is the "best fit" line: A, B, C, or D?

to select the best fit parameters we define a function of the parameters to minimize or maximize
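A minimal sketch of this idea, assuming the objective function is the sum of squared residuals (one common choice, not necessarily the one used in the figure). The data points and the four candidate lines standing in for A, B, C, D are invented for illustration.

```python
# Hypothetical data: a few (time, value) points, invented for illustration.
times = [0.0, 1.0, 2.0, 3.0, 4.0]
values = [1.1, 2.9, 5.2, 7.1, 8.8]

def sum_squared_residuals(slope, intercept, xs, ys):
    """Objective function: how badly the line slope*x + intercept fits the data."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))

# Four candidate lines (slope, intercept), standing in for A, B, C, D.
candidates = {"A": (0.0, 5.0), "B": (1.0, 2.0), "C": (2.0, 1.0), "D": (3.0, 0.0)}
scores = {name: sum_squared_residuals(m, b, times, values)
          for name, (m, b) in candidates.items()}
best_candidate = min(scores, key=scores.get)

def least_squares_line(xs, ys):
    """Closed-form slope and intercept that minimize the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

best_slope, best_intercept = least_squares_line(times, values)
print(best_candidate, scores)
print(best_slope, best_intercept)
```

Picking among a handful of candidates and solving for the minimum directly are the same operation at different scales: both rank parameter choices by the value of the objective function.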


Machine Learning models are parametrized representations of "reality" where the parameters are learned from finite sets of realizations of that reality

(note: learning by instance, e.g. nearest neighbours, may not comply with this definition)

Machine Learning is the discipline that conceptualizes, studies, and applies those models.

Key Concept

what is machine learning?

used to:

- classify based on examples

- understand structure of feature space

- regression (classification with infinitely small classes)

- understand which features are important in prediction (to get close to causality)

General ML usage

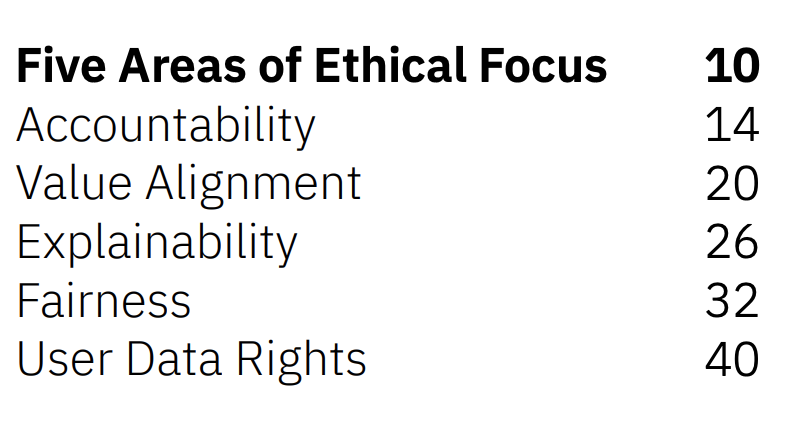

Accountability

Explainability

Fairness

Privacy and data rights

What drives inference

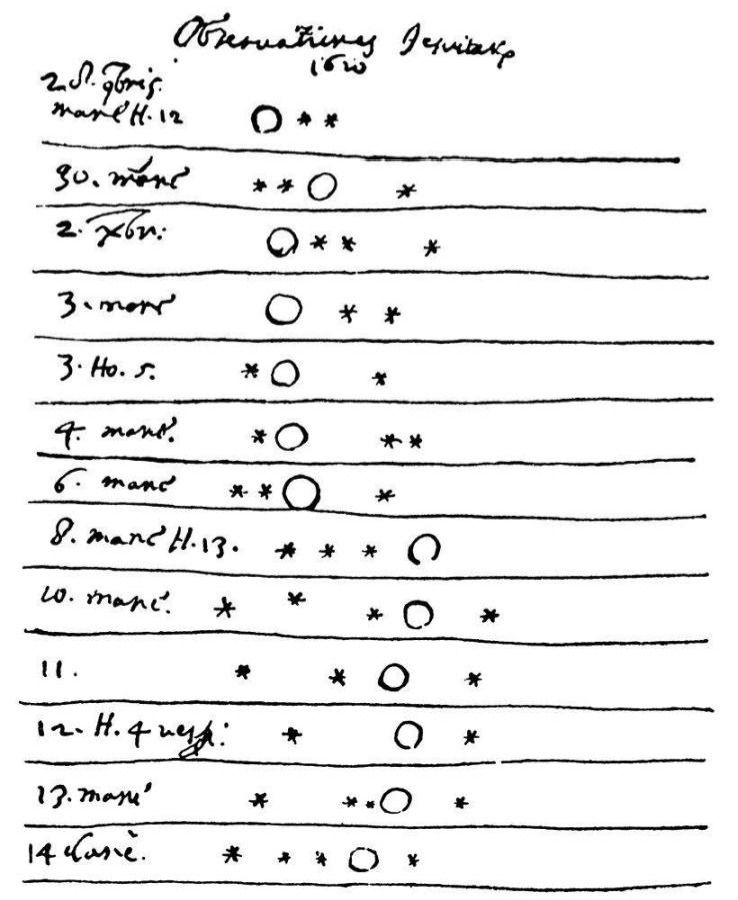

Inference has different drivers in different times depending on the resources available and dominant culture

Accountability will look different in these different

Galileo Galilei 1610

Following: Djorgovski

https://events.asiaa.sinica.edu.tw/school/20170904/talk/djorgovski1.pdf

Experiment driven

what drives

inference


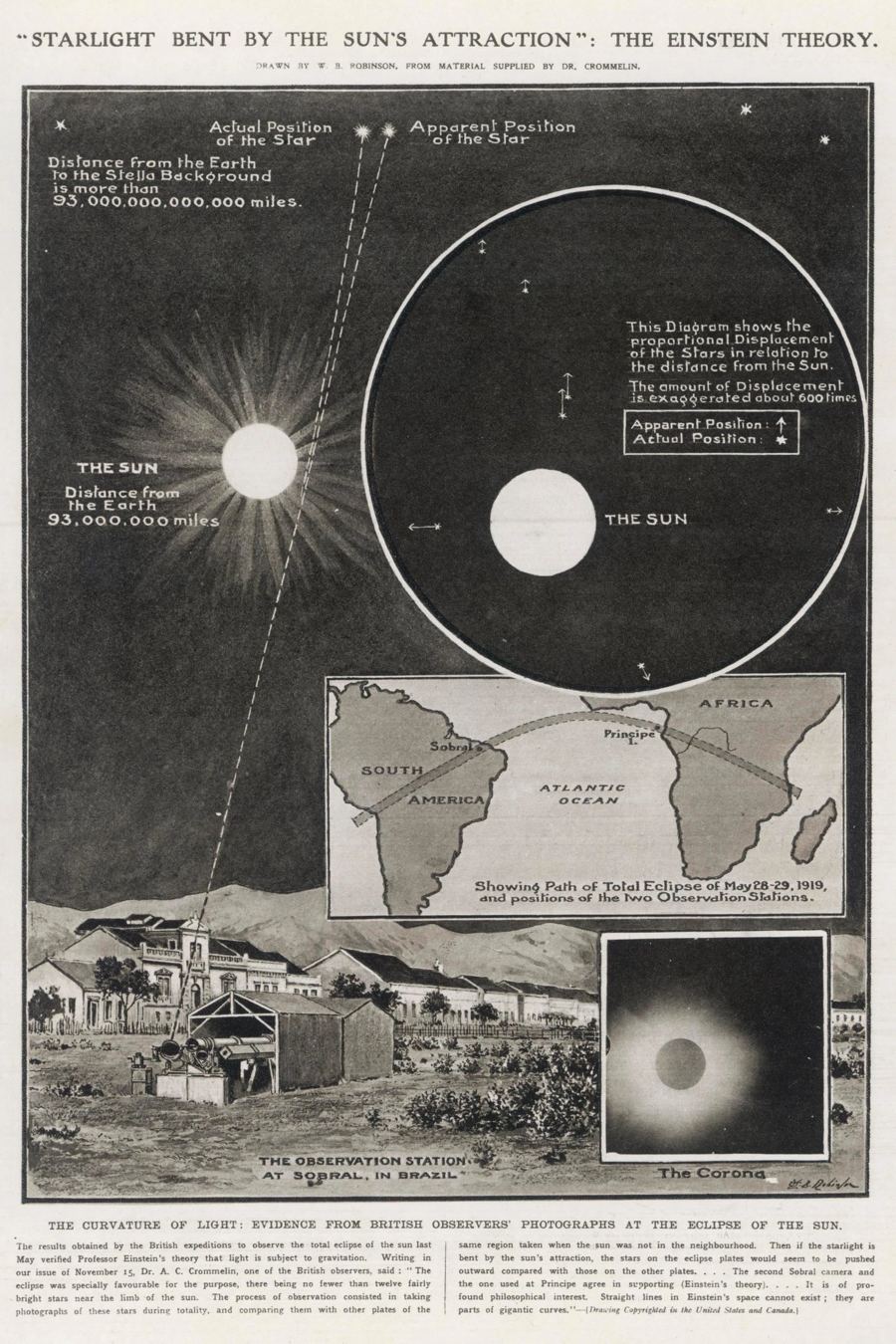

Einstein 1916

what drives

inference

Theory driven | Falsifiability

Experiment driven


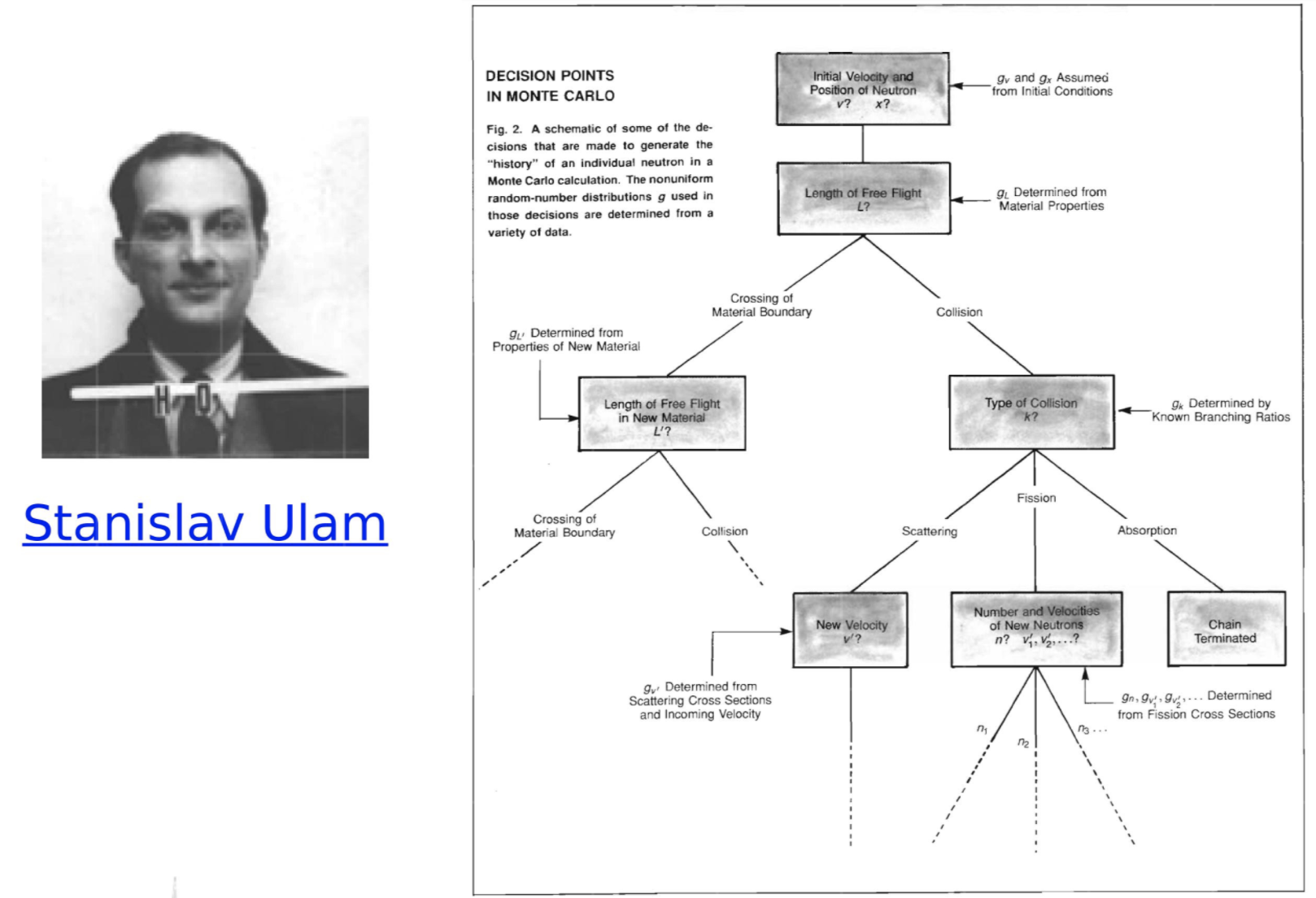

Ulam 1947

Theory driven | Falsifiability

Experiment driven

Simulations | Probabilistic inference | Computation

http://www-star.st-and.ac.uk/~kw25/teaching/mcrt/MC_history_3.pdf


what drives

inference

what drives

astronomy

the 2000s

Theory driven | Falsifiability

Experiment driven

Simulations | Probabilistic inference | Computation

Big Data + Computation | pattern discovery | predict by association


data driven: lots of data, drop theory and use associations

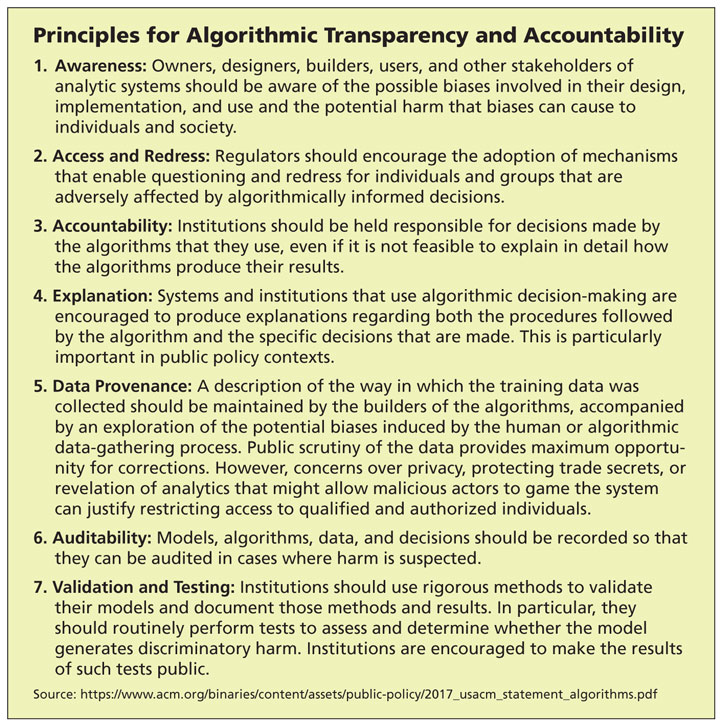

algorithmic transparency

strictly policy issues:

proprietary algorithms + auditability

technical + policy issues:

data access and redress + data provenance

algorithmic transparency

https://www.darpa.mil/attachments/XAIProgramUpdate.pdf

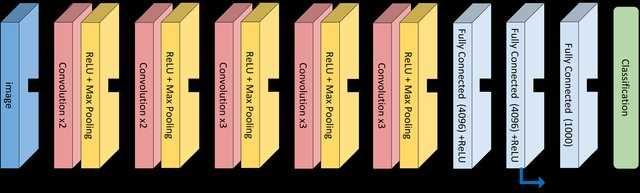

trivially intuitive

generalized additive models

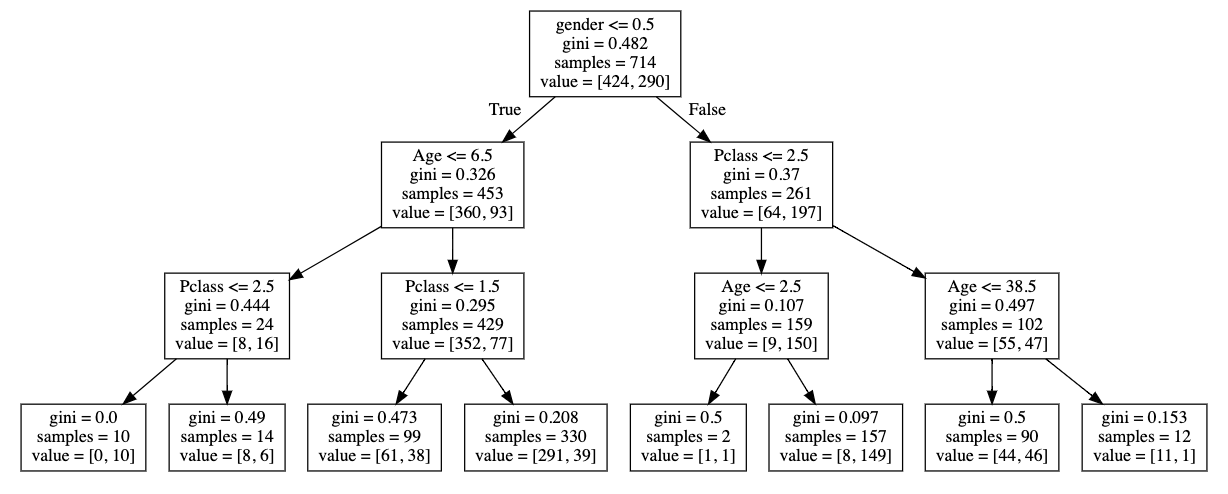

decision trees

SVM

Random Forest

Deep Learning

Accuracy

univaraite

linear

regression

algorithmic transparency

#UDCSS2020

@fedhere

we're still trying to figure it out

algorithmic transparency

https://www.darpa.mil/attachments/XAIProgramUpdate.pdf

trivially intuitive

generalized additive models

decision trees

SVM

Random Forest

Deep Learning

Accuracy in solving complex problems

univaraite

linear

regression

algorithmic transparency

#UDCSS2020

@fedhere

we're still trying to figure it out

algorithmic transparency

trivially intuitive

generalized additive models

decision trees

Deep Learning

number of features that can be effectively included in the model

thousands

1

SVM

Random Forest

univaraite

linear

regression

https://www.darpa.mil/attachments/XAIProgramUpdate.pdf

algorithmic transparency

#UDCSS2020

@fedhere

Accuracy in solving complex problems

we're still trying to figure it out

algorithmic transparency

trivially intuitive

univaraite

linear

regression

generalized additive models

decision trees

Deep Learning

SVM

Random Forest

https://www.darpa.mil/attachments/XAIProgramUpdate.pdf

time

algorithmic transparency

#UDCSS2020

@fedhere

Accuracy in solving complex problems

we're still trying to figure it out

algorithmic transparency

1

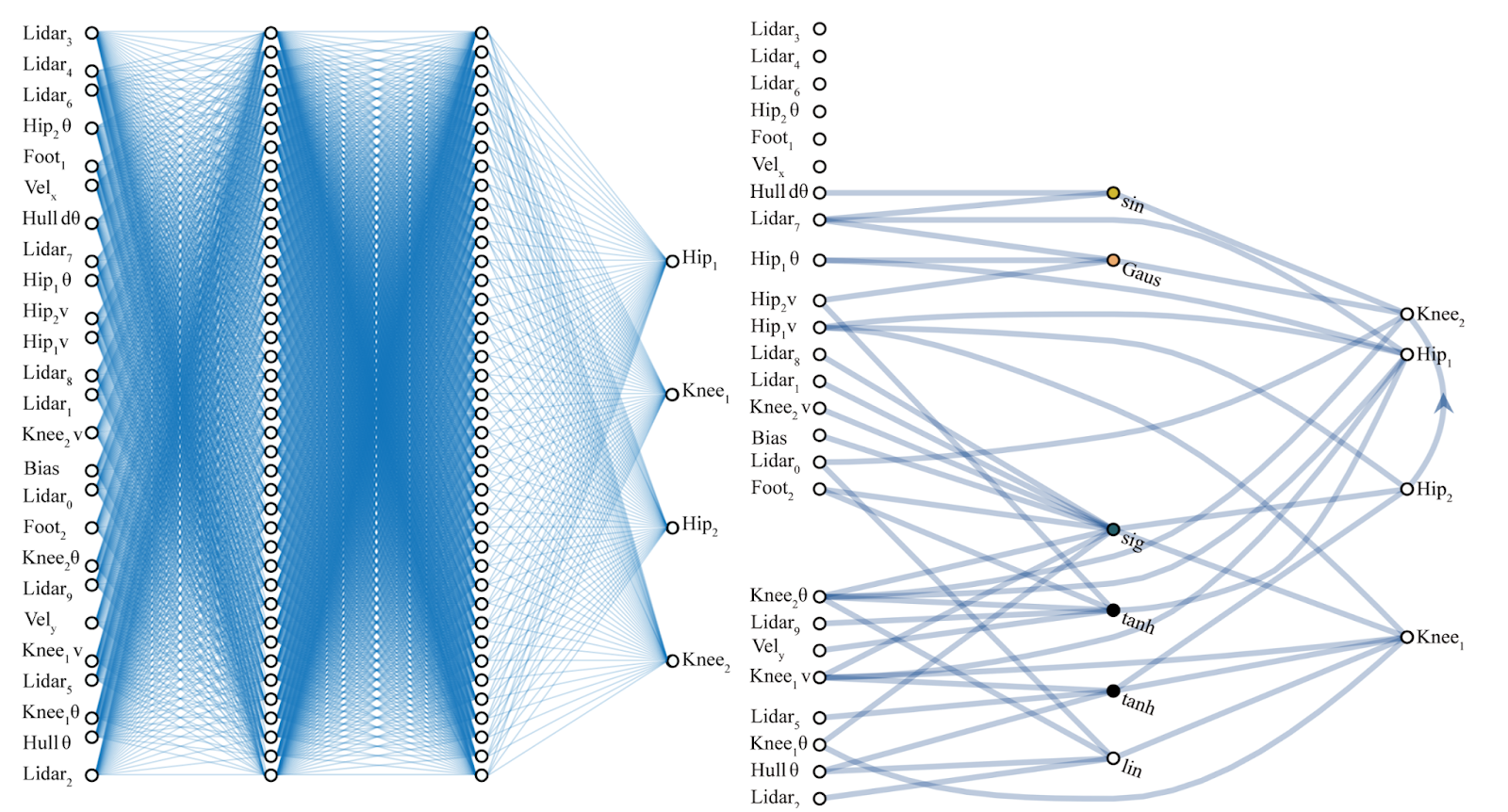

Machine learning: any method that learns parameters from the data

2

The transparency of an algorithm is inversely related to its complexity and the complexity of the data space

3

The transparency of an algorithm is limited by our own ability and preparedness to interpret it

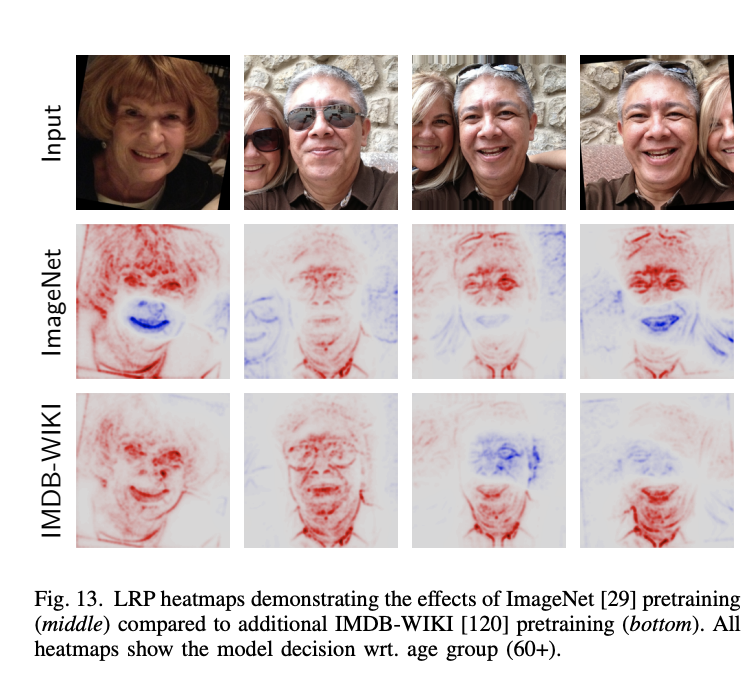

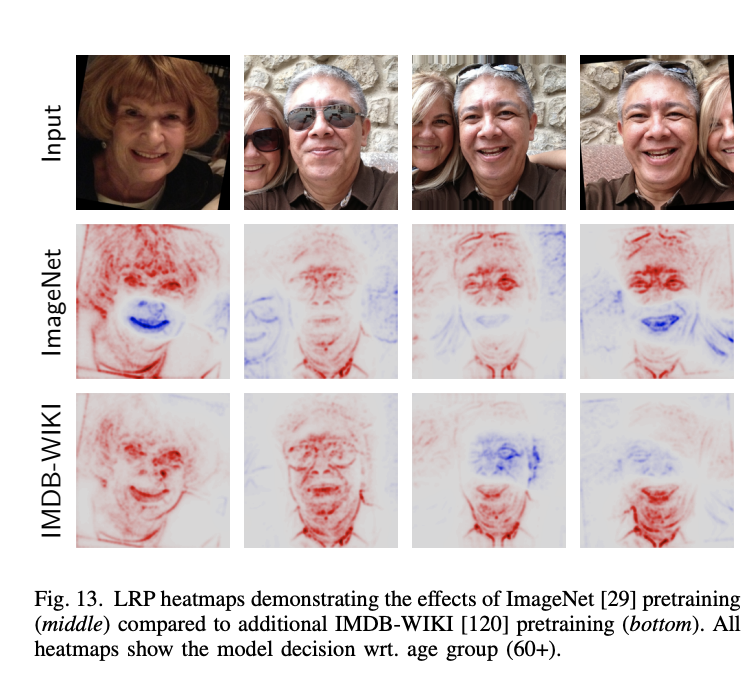

Toward Interpretable Machine Learning, Samek+2020

algorithmic transparency


linear regression

algorithmic transparency

A single tree model

algorithmic transparency

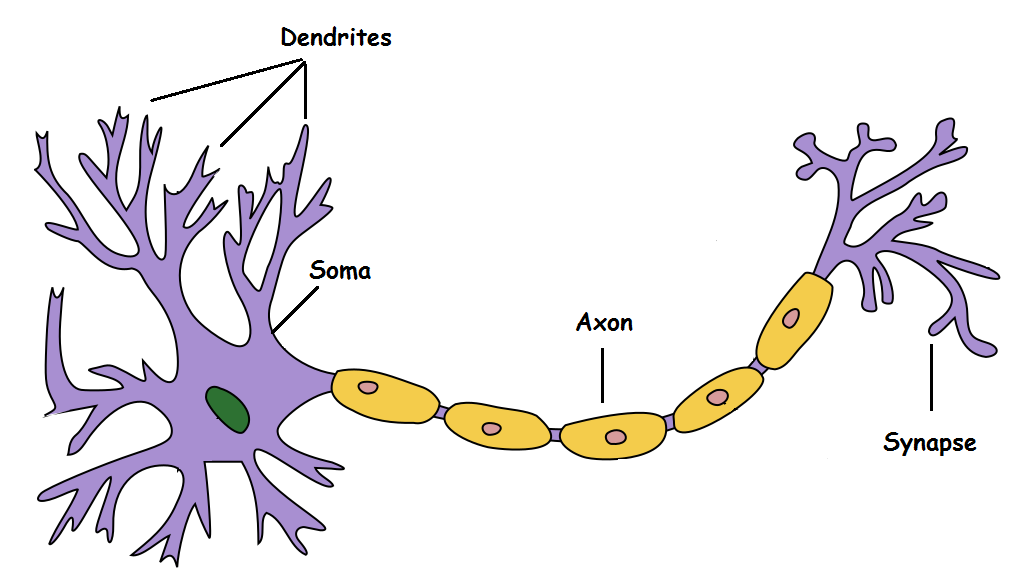

1943

M-P Neuron McCulloch & Pitts 1943

M-P Neuron

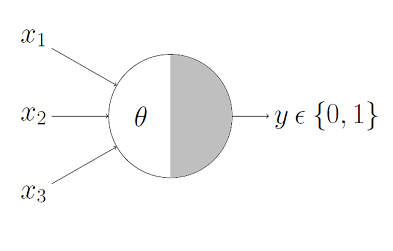

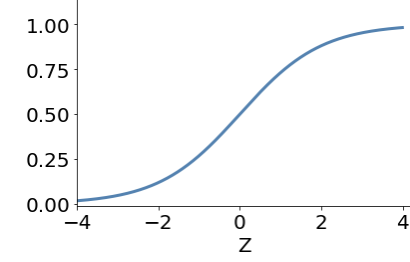

Perceptrons are linear classifiers: they make their predictions based on a linear predictor function combining a set of weights (= parameters) with the feature vector.

The perceptron algorithm: 1958, Frank Rosenblatt

Figure labels: x, y; weights; bias; activation function (sigmoid); output
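The linear predictor described above can be sketched in a few lines. This is an illustration under stated assumptions: the weights and bias are hypothetical, the input has two features, and the activation is the sigmoid named in the figure labels (Rosenblatt's original perceptron used a hard threshold instead).

```python
import math

def sigmoid(z):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def perceptron_output(features, weights, bias):
    """Linear predictor (weights . features + bias) passed through an activation."""
    linear = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(linear)

# Hypothetical parameters for a two-feature input (x, y).
weights = [2.0, -1.0]
bias = 0.5

# The decision boundary 2x - y + 0.5 = 0 is a straight line:
# points on one side score above 0.5, points on the other side below.
print(perceptron_output([1.0, 0.0], weights, bias))   # linear part = 2.5
print(perceptron_output([0.0, 3.0], weights, bias))   # linear part = -2.5
```

Because the only thing the activation sees is a weighted sum, the boundary between the two classes is always a line (or a plane, in more dimensions). That is what "linear classifier" means here.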

Perceptron

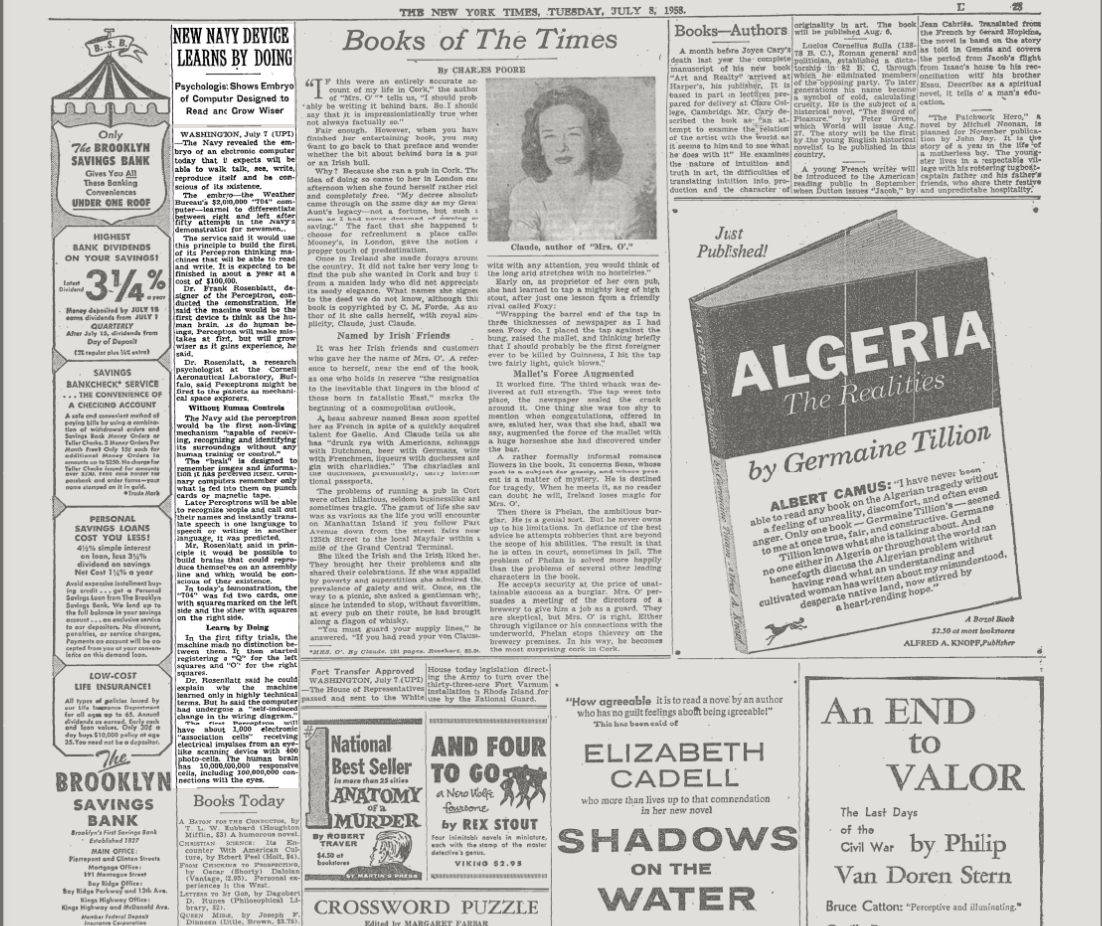

The Navy revealed the embryo of an electronic computer today that it expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence.

The embryo - the Weather Bureau's $2,000,000 "704" computer - learned to differentiate between left and right after 50 attempts in the Navy demonstration.

NEW NAVY DEVICE LEARNS BY DOING; Psychologist Shows Embryo of Computer Designed to Read and Grow Wiser

July 8, 1958

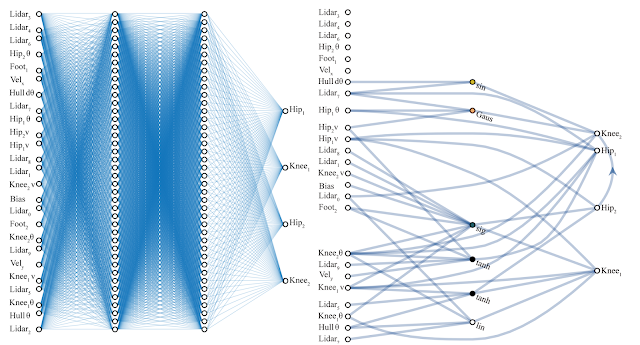

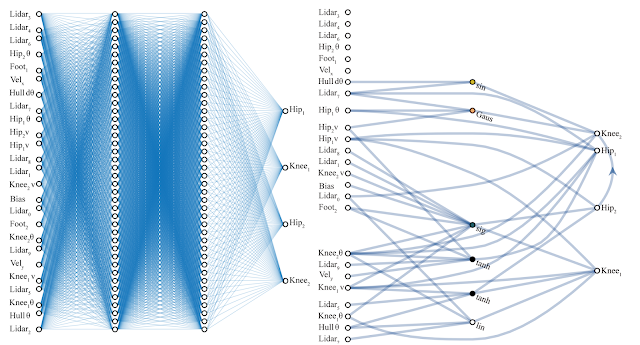

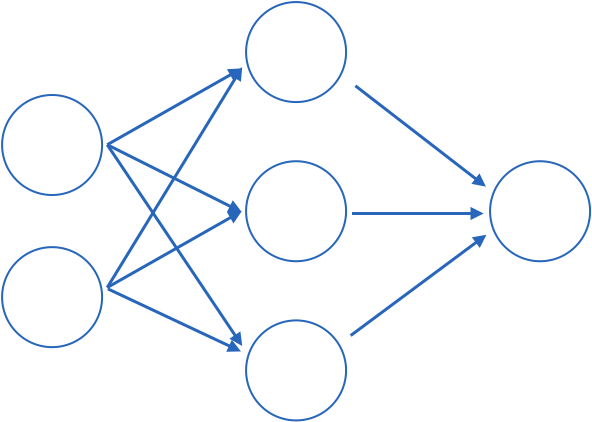

multilayer perceptron

Figure labels: inputs x1, x2; weights w11, w12, w13, w21, w22, w23; biases b1, b2, b3, b

w: weight, sets the sensitivity of a neuron

b: bias, shifts a neuron's weighted input up or down

EXERCISE

output

how many parameters?

input layer

hidden layer

output layer

hidden layer
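One way to check an answer to this exercise is to count weights and biases layer by layer. The sketch below assumes a fully connected network; the layer sizes in the usage line (2 inputs, 3 hidden neurons, 1 output) are an assumption, since the architecture is only shown in the figure.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of neurons per layer, input first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out   # one weight per connection
        total += n_out          # one bias per neuron in the receiving layer
    return total

# Assumed architecture: 2 inputs, one hidden layer of 3 neurons, 1 output.
print(count_parameters([2, 3, 1]))
```

Adding a hidden layer only means adding one more entry to the list, for example `count_parameters([2, 3, 3, 1])`.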

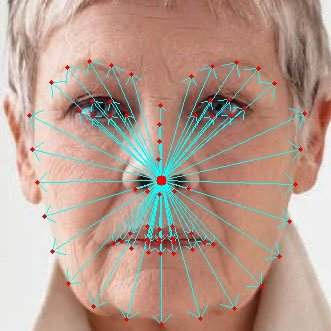

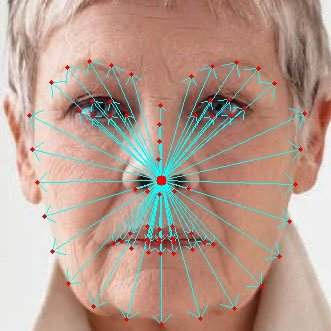

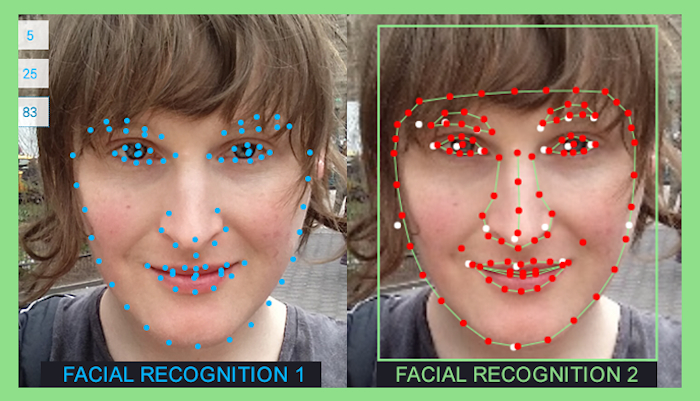

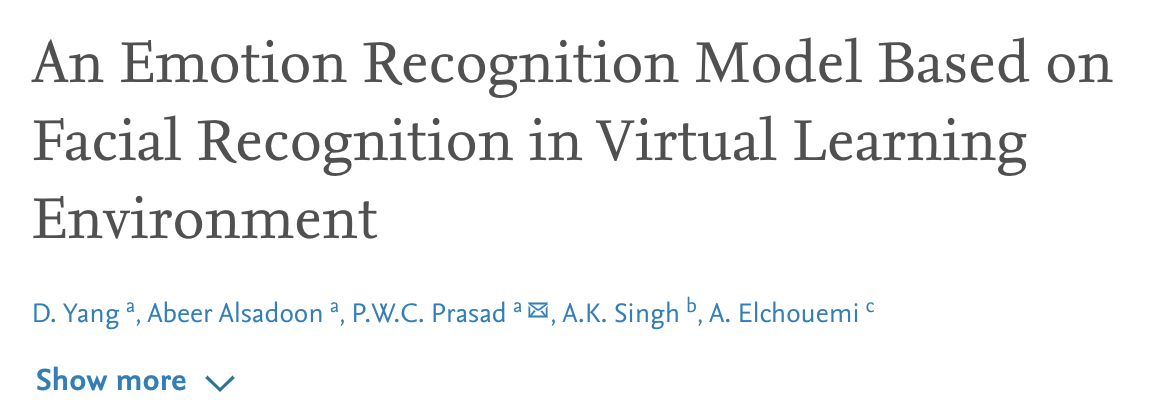

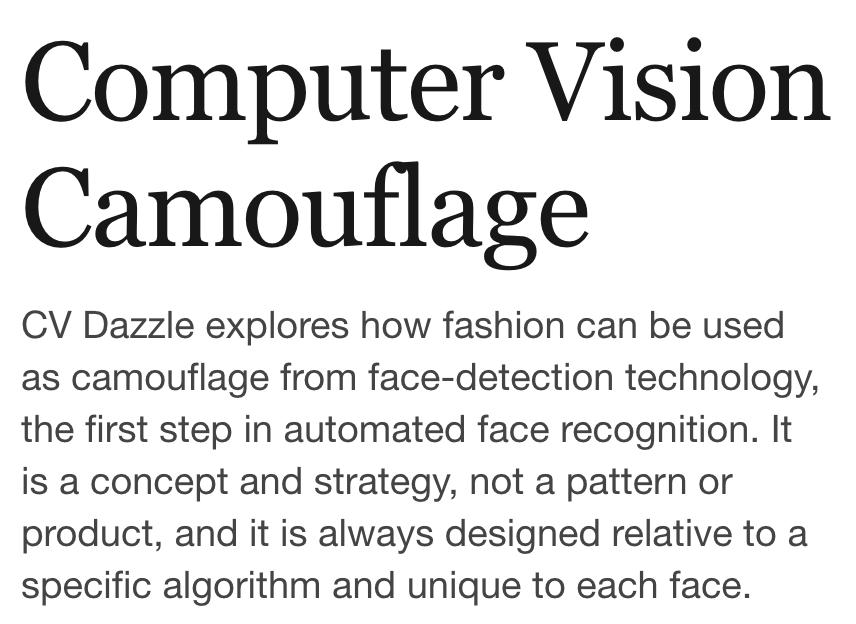

facial recognition

how it works


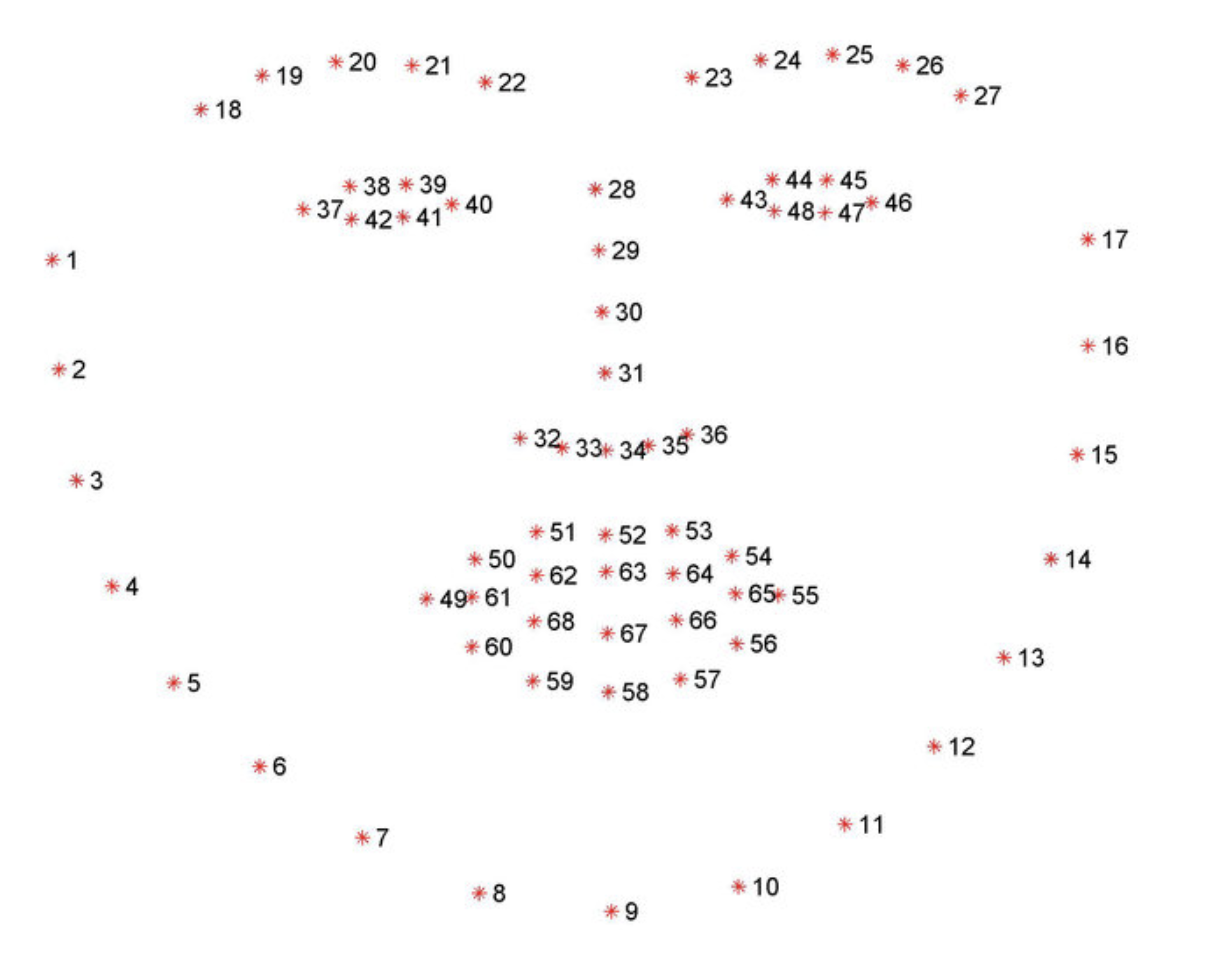

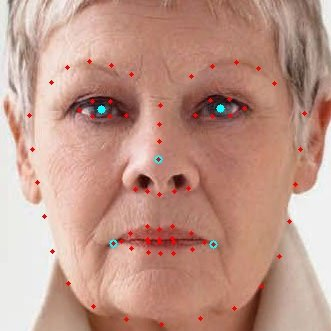

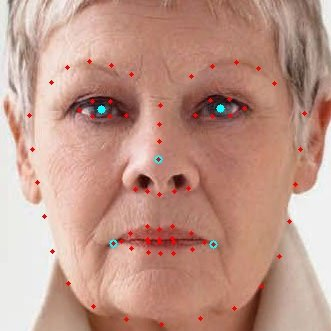

facial recognition

typically 68 landmarks

how it works

facial recognition

what it's not:

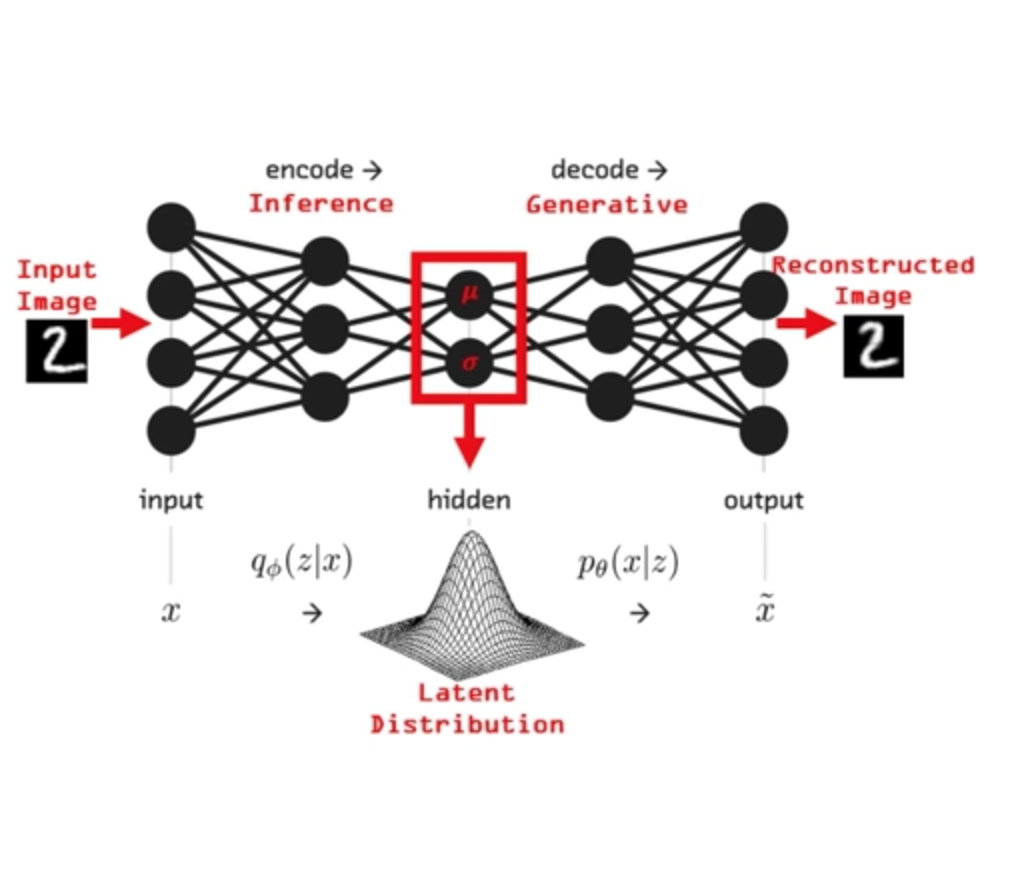

Autoencoders


Autoencoder Architecture

Feed Forward DNN:

the size of the input is 5,

the size of the last layer is 2

Unsupervised learning with

Neural Networks

What do NNs do? They approximate complex functions with a series of linear functions, each followed by a nonlinear activation

To do that they extract information from the data

Each layer of the DNN produces a representation of the data, a "latent representation".

The dimensionality of that latent representation is determined by the size of the layer (and its connectivity, but we will ignore this bit for now)

.... so if my layers are smaller what I have is a compact representation of the data

- Encoder: outputs a lower dimensional representation z of the data x (similar to PCA, tSNE...)

- Decoder: Learns how to reconstruct x given z: learns p(x|z)
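The encoder and decoder roles can be sketched without any deep learning library. This is a toy illustration, not a trained model: the weights are random, the layer sizes (5 inputs, 2 latent values) follow the feed-forward example above, and the helper names `dense` and `random_layer` are made up for this sketch.

```python
import random

def dense(inputs, weights, biases):
    """One fully connected layer with a ReLU activation."""
    outputs = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(max(0.0, z))
    return outputs

def random_layer(n_in, n_out, rng):
    """Untrained layer: random weights, zero biases."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
enc_w, enc_b = random_layer(5, 2, rng)   # encoder: 5 features -> 2 latent values
dec_w, dec_b = random_layer(2, 5, rng)   # decoder: 2 latent values -> 5 features

x = [0.2, 0.4, 0.1, 0.9, 0.5]            # one hypothetical data point
z = dense(x, enc_w, enc_b)               # latent representation
x_reconstructed = dense(z, dec_w, dec_b)

# Training would adjust the weights to shrink this reconstruction error.
error = sum((a - b) ** 2 for a, b in zip(x, x_reconstructed))
print(len(x), len(z), len(x_reconstructed), error)
```

The point of the sketch is the shapes: whatever the decoder reconstructs, it has to reconstruct from the two numbers in `z`.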

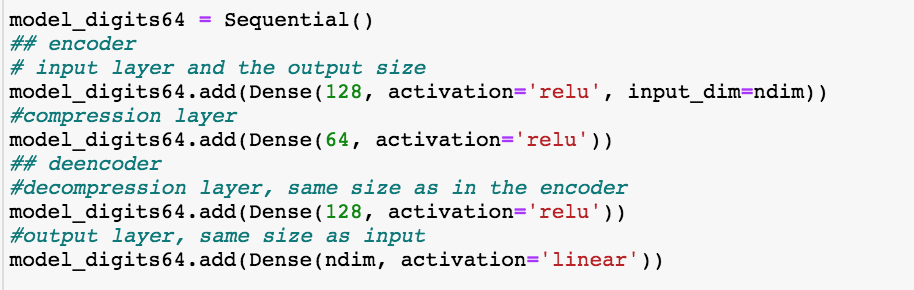

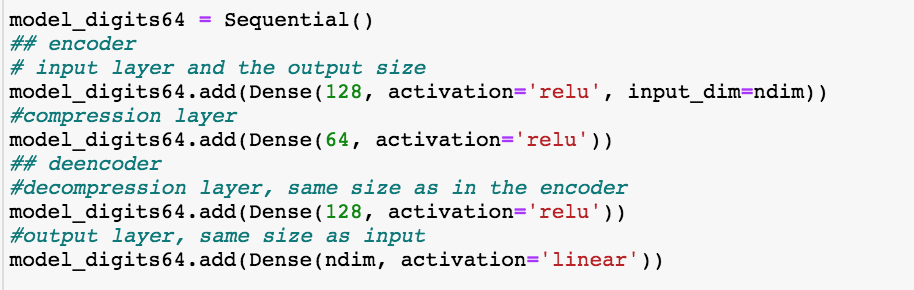

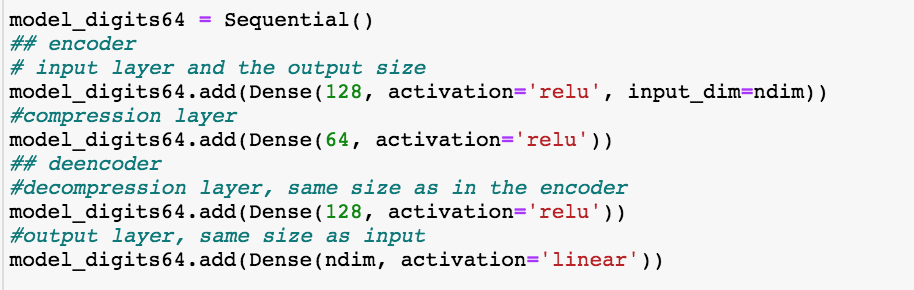

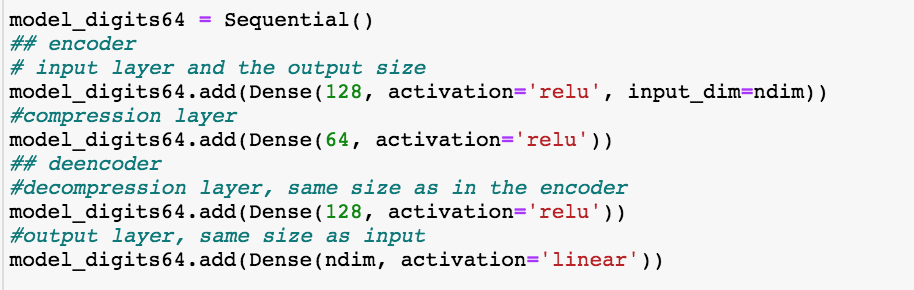

Building a DNN

with keras and tensorflow

Trivial to build, but the devil is in the details!


```python
from keras.models import Sequential
# pretrained models can also be loaded with keras.models.load_model
from keras.layers import Dense

# x_train, y_train, x_val, y_val, x_test are assumed to be
# already-prepared NumPy arrays; n_cols is the number of input features
n_cols = x_train.shape[1]

# create model
model = Sequential()
# create the model architecture by adding model layers
model.add(Dense(10, activation='relu', input_shape=(n_cols,)))
model.add(Dense(10, activation='relu'))
model.add(Dense(1))

# need to choose the loss function, metric, optimization scheme
model.compile(optimizer='adam', loss='mean_squared_error')

# need to learn what to look for - always plot the loss function!
history = model.fit(x_train, y_train, validation_data=(x_val, y_val),
                    epochs=20, batch_size=100, verbose=1)
# note that fit accepts a validation set: this supports a
# three-way split of the data into train, validation, and test

# predict on the held-out test set
test_y_predictions = model.predict(x_test)
```

Building a DNN

with keras and tensorflow

autoencoder for image reconstruction

The architecture has three parts: encoder, bottleneck, decoder.

This autoencoder model has a 64-neuron bottleneck. This means it will generate a compressed representation of the data out of that layer which is 64-dimensional (the original size is 784 pixels)

This simple model has 200000 parameters!

My original choice is to train it with "adadelta" with a mean squared loss function; all activation functions are relu, appropriate for a linear regression

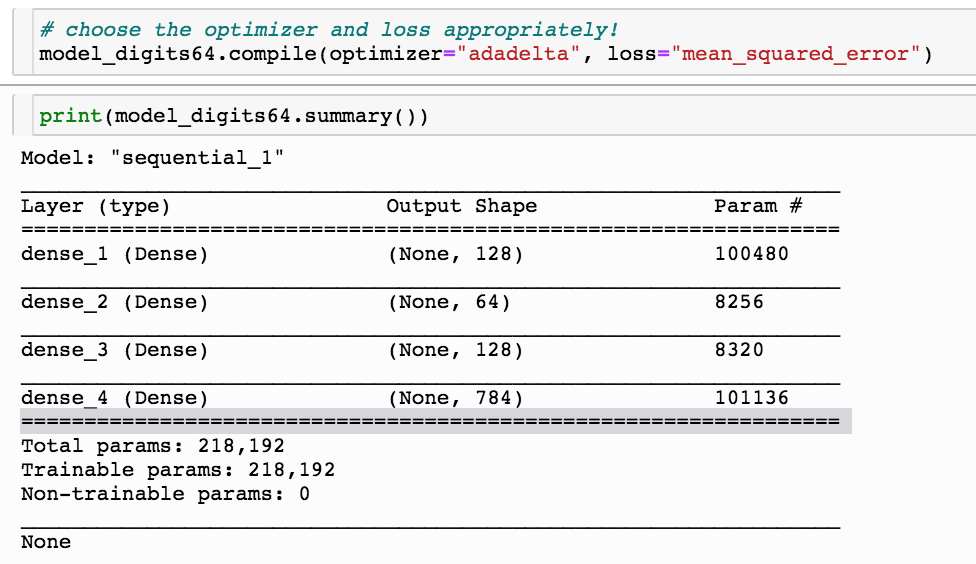

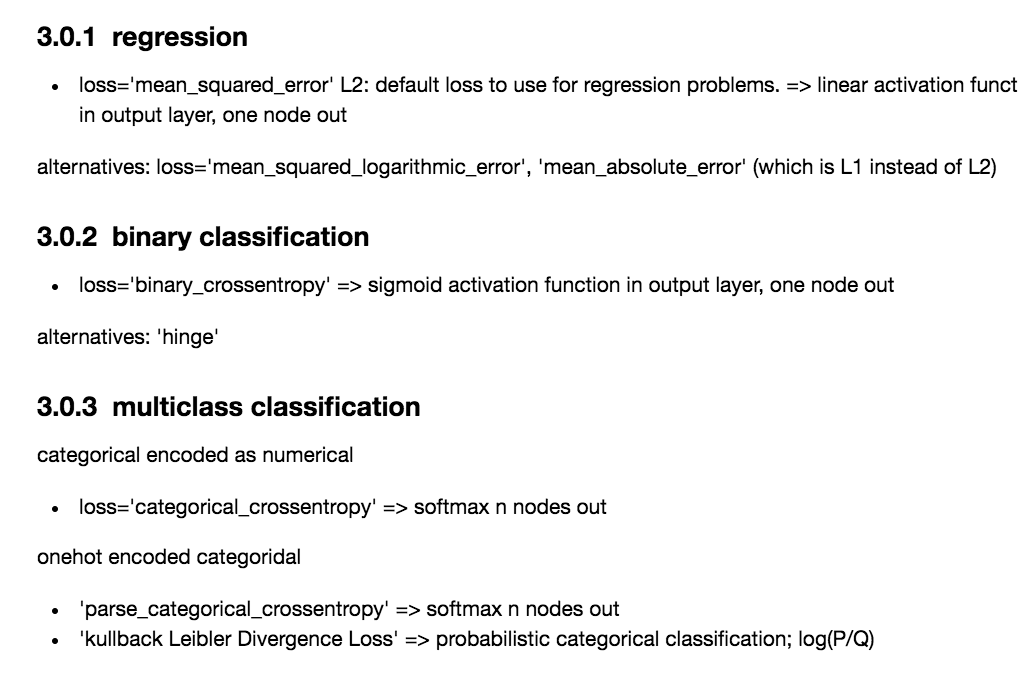

Building a DNN

with keras and tensorflow

autoencoder for image reconstruction

What should I choose for the loss function and how does that relate to the activation function and optimization?


| loss | good for | activation last layer | size last layer |
|---|---|---|---|
| mean_squared_error | regression | linear | one node |
| mean_absolute_error | regression | linear | one node |
| mean_squared_logarithmic_error | regression | linear | one node |
| binary_crossentropy | binary classification | sigmoid | one node |
| categorical_crossentropy | multiclass classification | softmax | N nodes |
| kullback_leibler_divergence | multiclass classification, probabilistic interpretation | softmax | N nodes |
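To make the table concrete, here are plain-Python versions of four of these losses. They are written for illustration and are not Keras's own implementations. The multiclass case assumes a softmax output layer, the usual pairing with categorical cross-entropy, and all the numbers are made up.

```python
import math

def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_crossentropy(y_true, p_pred):
    """y_true holds 0/1 labels, p_pred the sigmoid outputs in (0, 1)."""
    return -sum(t * math.log(p) + (1 - t) * math.log(1 - p)
                for t, p in zip(y_true, p_pred)) / len(y_true)

def softmax(scores):
    """Turn N raw scores into N probabilities that sum to 1."""
    shifted = [s - max(scores) for s in scores]   # for numerical stability
    exps = [math.exp(s) for s in shifted]
    return [e / sum(exps) for e in exps]

def categorical_crossentropy(one_hot_true, probs):
    """Cross-entropy for a single example with a one-hot target."""
    return -sum(t * math.log(p) for t, p in zip(one_hot_true, probs))

print(mean_squared_error([1.0, 2.0], [1.5, 2.5]))
print(mean_absolute_error([1.0, 2.0], [1.5, 2.5]))
print(binary_crossentropy([1, 0], [0.9, 0.2]))
print(categorical_crossentropy([0, 1, 0], softmax([1.0, 3.0, 0.5])))
```

The last-layer activation matters because each loss expects a particular kind of output: any real number for the regression losses, a single probability for binary cross-entropy, and N probabilities that sum to one for the multiclass losses.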

resources

Neural Network and Deep Learning

an excellent and free book on NN and DL

http://neuralnetworksanddeeplearning.com/index.html

Deep Learning An MIT Press book in preparation

Ian Goodfellow, Yoshua Bengio and Aaron Courville

https://www.deeplearningbook.org/lecture_slides.html

History of NN

https://cs.stanford.edu/people/eroberts/courses/soco/projects/neural-networks/History/history2.html

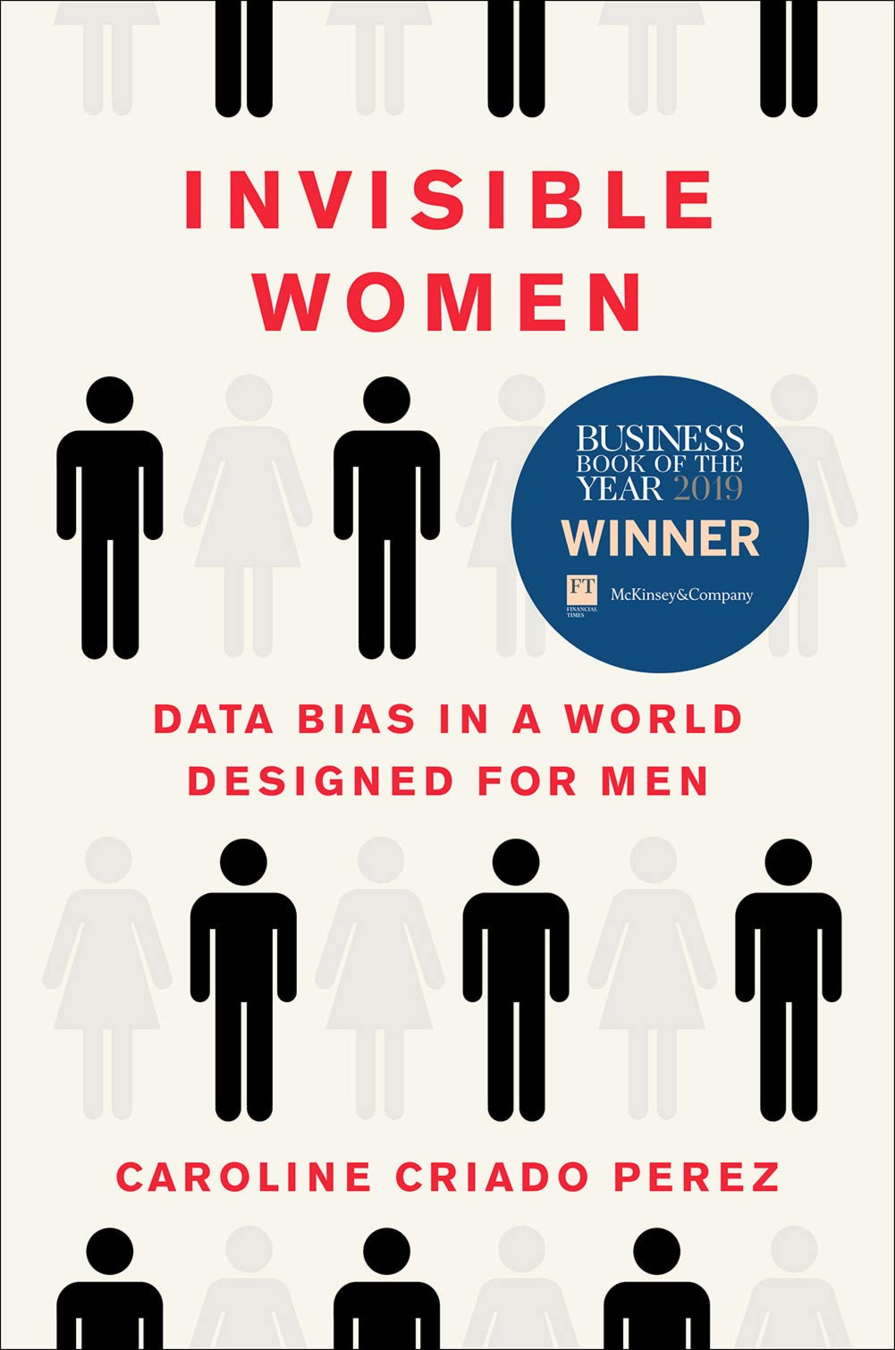

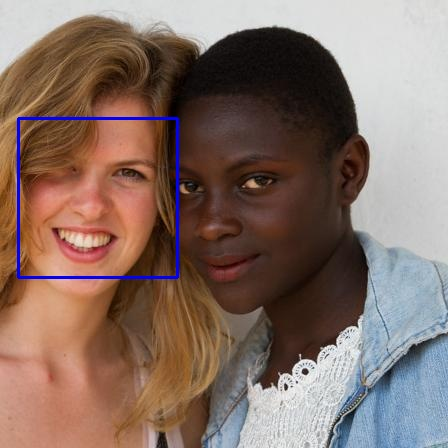

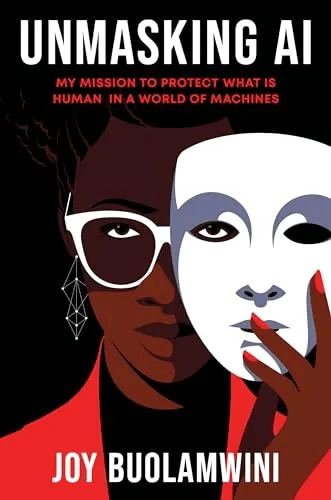

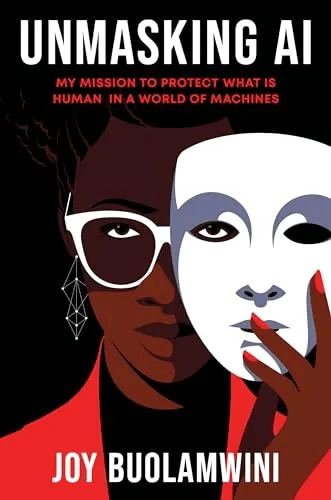

models are neutral, the bias is in the data (or is it?)

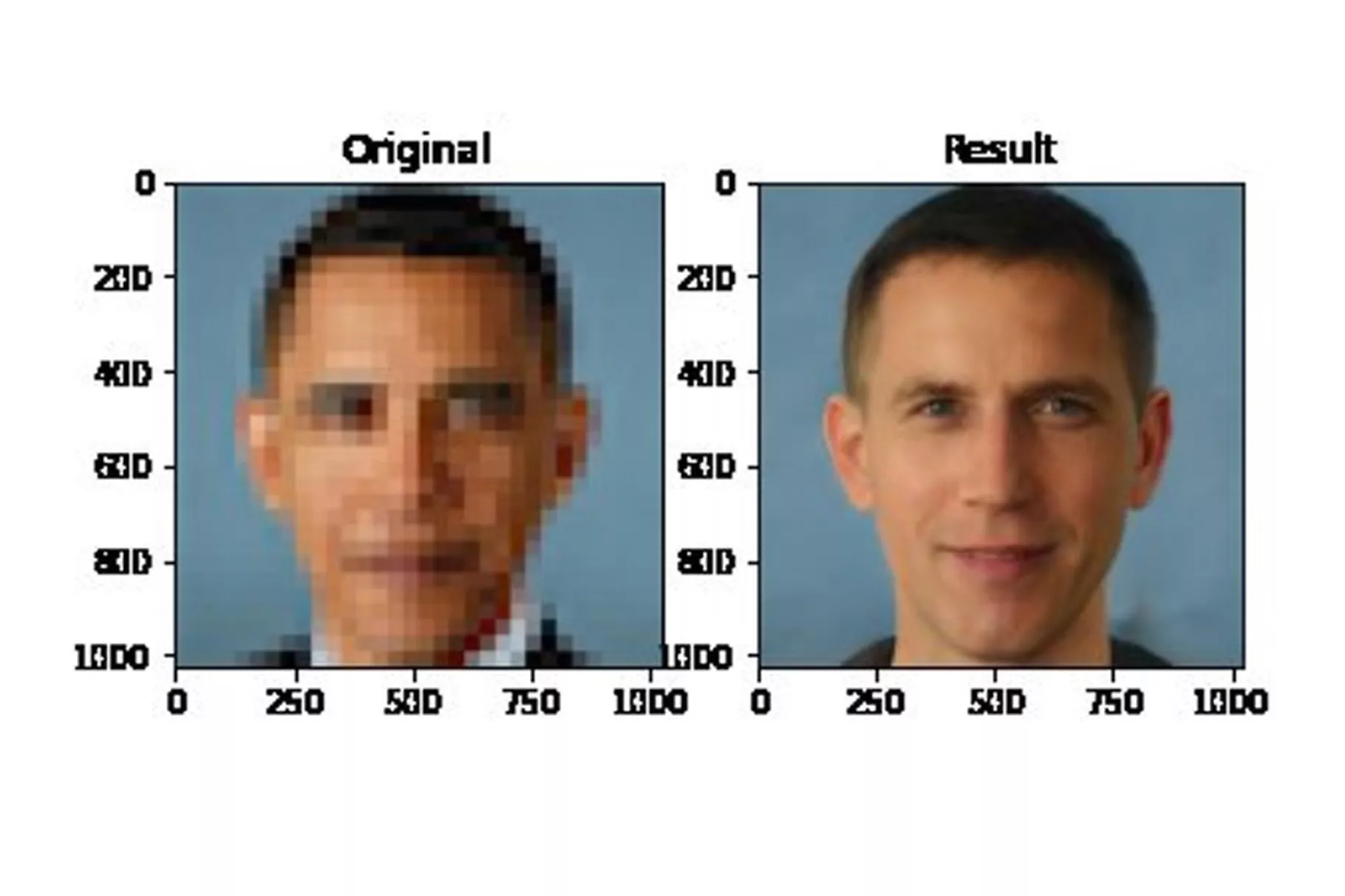

Why does this AI model whiten Obama's face?

Simple answer: the data is biased. The algorithm is fed more images of white people


But really, would the opposite have been acceptable? The bias is in society

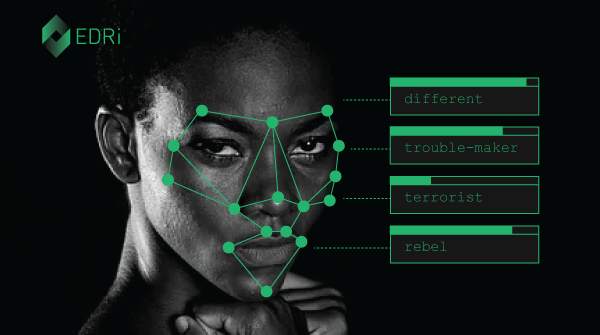

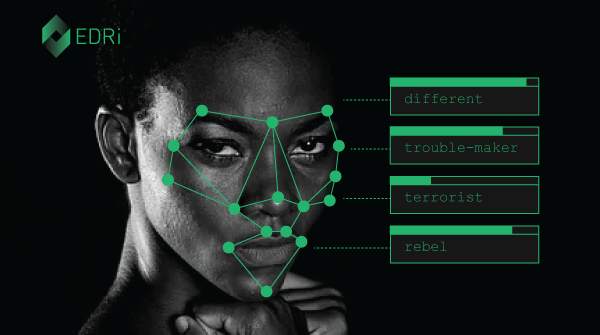

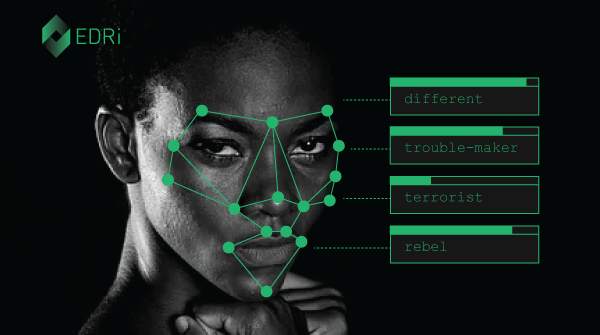

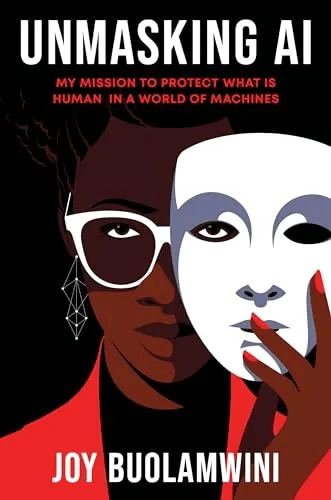

Joy Buolamwini

models are neutral, the bias is in the data (or is it?)

accountability

- can scientists be held responsible?

- should whoever commissions be responsible?

- is nobody responsible under the premise that decisions are objective? Are they objective? What does objective mean? How can we objectively measure objectivity?

accountability

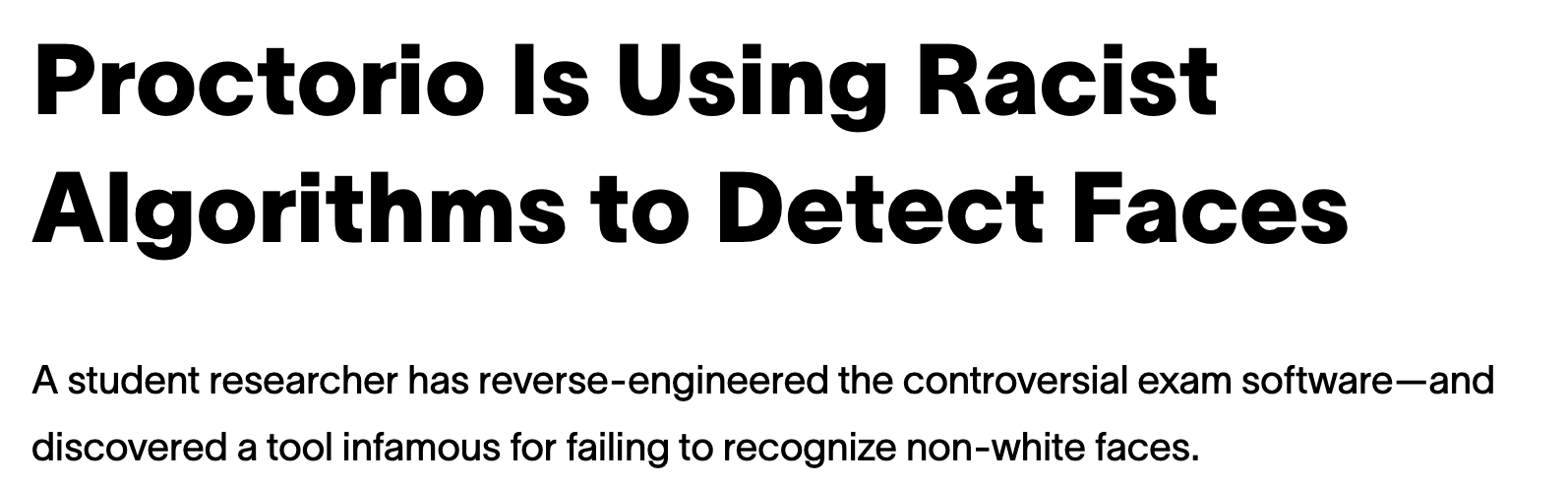

because of its complexity FR is commissioned to specialists

accountability

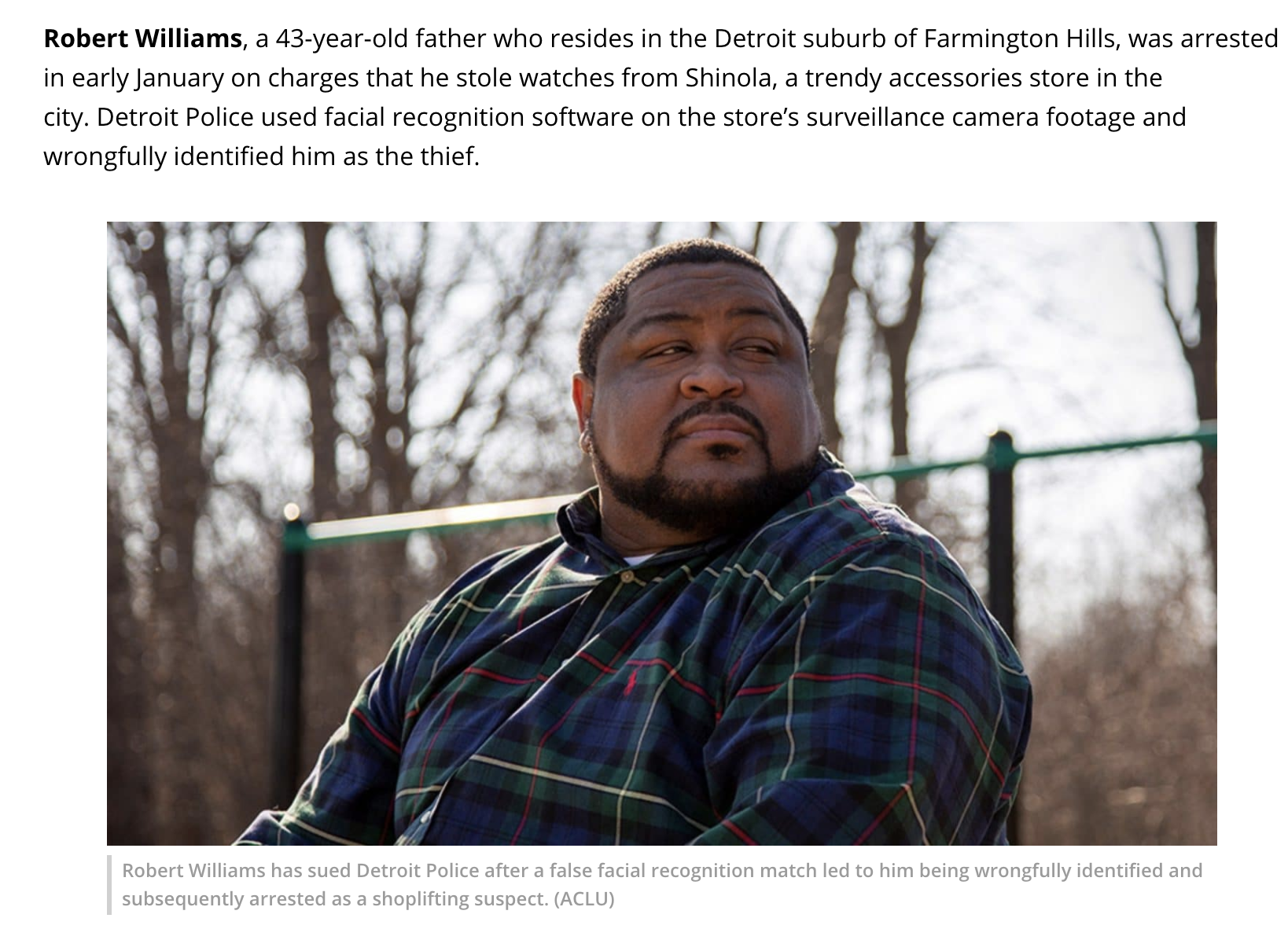

In a press release, the ACLU wrote, “Mr. Williams’ experience was the first case of wrongful arrest due to facial recognition technology to come to light in the United States.”


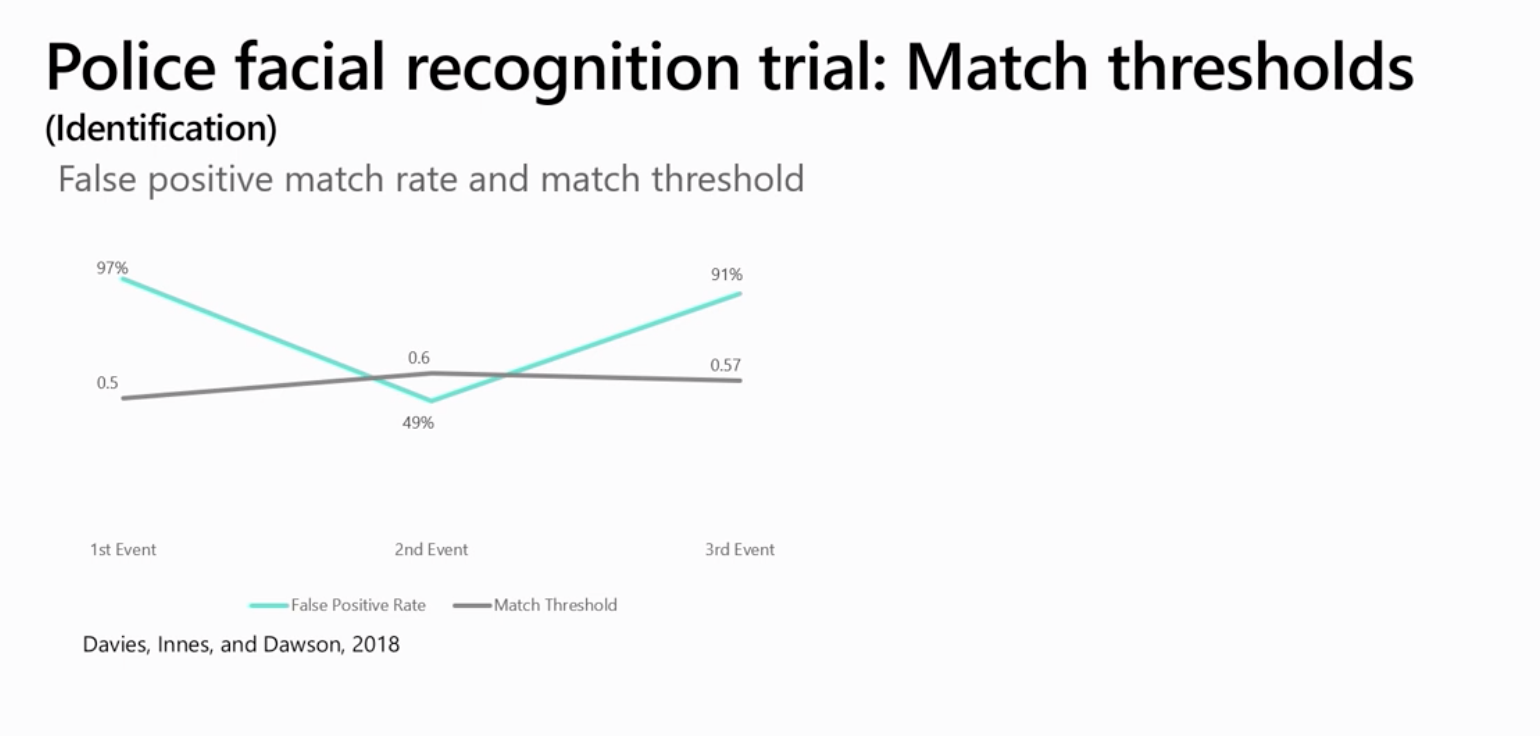

Who is responsible for setting the threshold?

FR returns a probabilistic result

a threshold is chosen to turn it into a T/F match for decision making
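The effect of that threshold can be sketched with a handful of made-up similarity scores. Everything here is hypothetical: the scores, the ground truth, and the thresholds are invented for illustration, not taken from any real facial recognition system.

```python
# Hypothetical similarity scores returned by a face-matching model,
# paired with whether the two images really show the same person.
scored_pairs = [
    (0.97, True), (0.91, True), (0.88, False), (0.82, True),
    (0.75, False), (0.64, True), (0.58, False), (0.31, False),
]

def confusion_counts(pairs, threshold):
    """Turn scores into match / no-match decisions and count the outcomes."""
    counts = {"true_match": 0, "false_match": 0,
              "missed_match": 0, "true_non_match": 0}
    for score, same_person in pairs:
        declared_match = score >= threshold
        if declared_match and same_person:
            counts["true_match"] += 1
        elif declared_match and not same_person:
            counts["false_match"] += 1
        elif same_person:
            counts["missed_match"] += 1
        else:
            counts["true_non_match"] += 1
    return counts

for threshold in (0.6, 0.8, 0.9):
    print(threshold, confusion_counts(scored_pairs, threshold))
```

The model and its scores never change in this sketch. Only the threshold moves, and with it the number of people falsely declared a match. Whoever picks that number is making the decision.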

Decide which model is appropriate (depends on data and question)

where is the bias?


1 - model selection

Figure: models ranging from "trivially intuitive" to "we are still trying to figure it out", shown against accuracy: univariate linear regression, generalized additive models, decision trees, SVM, Random Forest, Deep Learning.

where is the bias?

Decide what your target function is

Machine learning models are functions that "learn" their parameters from the data.

They "learn" by minimizing or maximizing some quantity.

What should you minimize?


https://towardsdatascience.com/machine-learning-fundamentals-via-linear-regression-41a5d11f5220

2 - cost function

where is the bias?


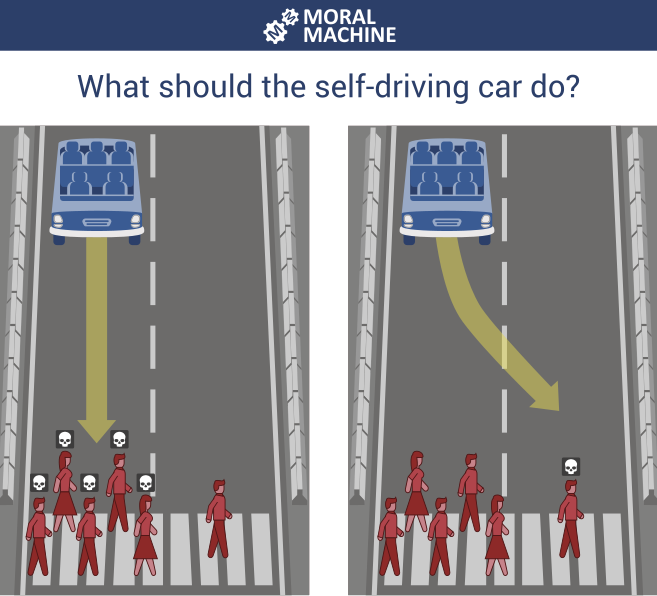

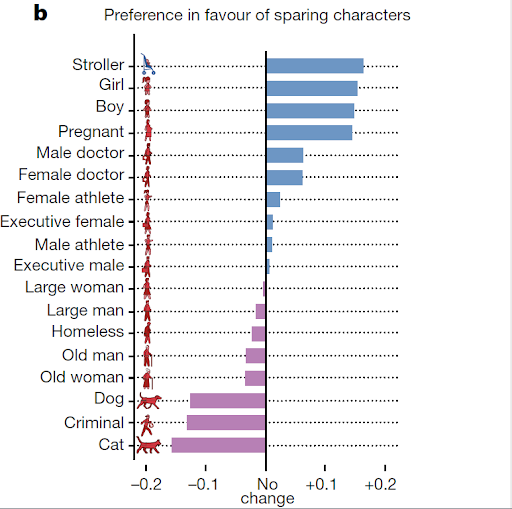

the hypothetical trolley problem suddenly is real

self-driving cars


2 - cost function

where is the bias?


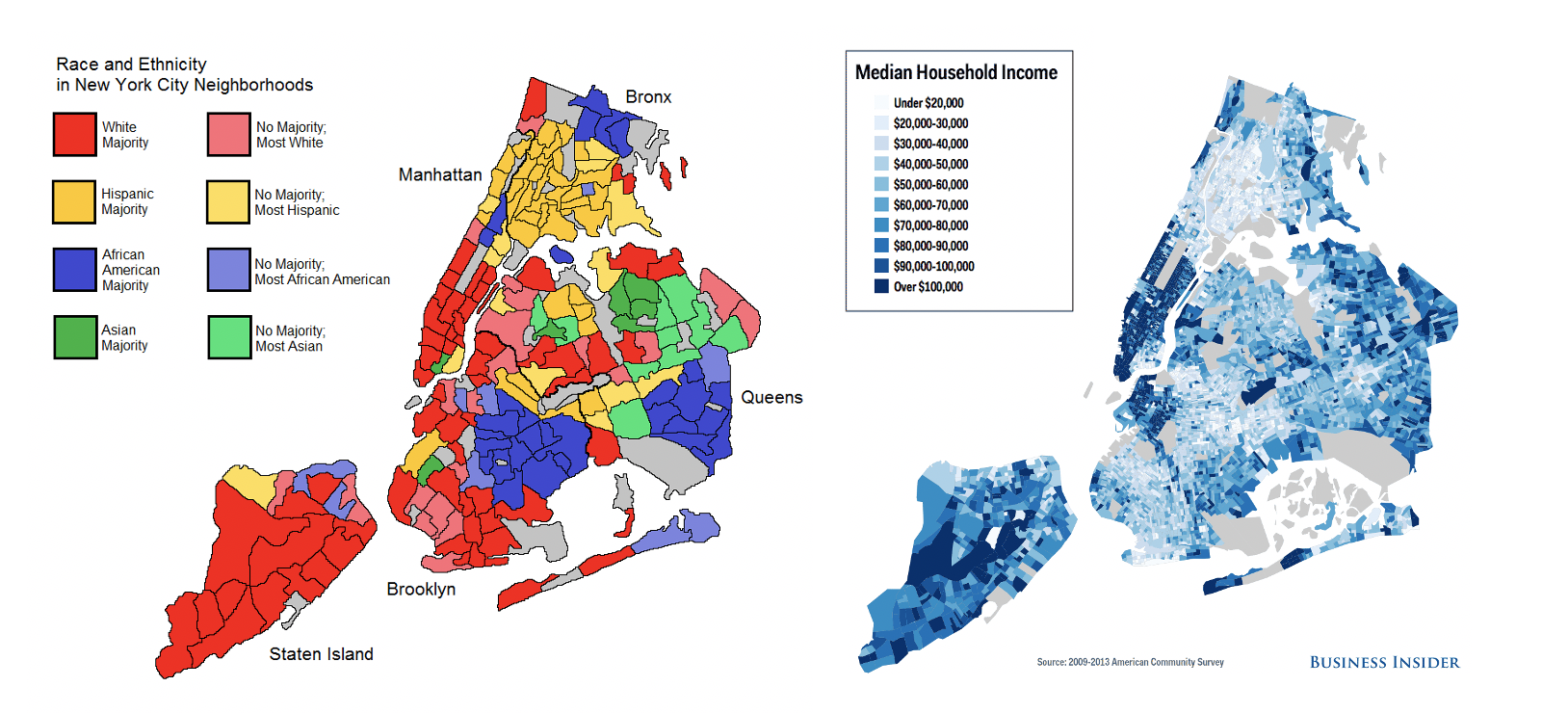

prosecutorial justice

minimize number of people incarcerated unjustly

OR

maximize public safety
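A toy sketch of how the two goals pull the same model toward different decisions. All numbers are invented: the risk scores, the outcomes, the candidate thresholds, and the two cost weights, which stand in for the value judgment of how much worse one kind of error is than the other.

```python
# Hypothetical risk scores paired with the true outcome
# (True = the person really did pose a risk).
cases = [
    (0.95, True), (0.85, True), (0.80, False), (0.70, True),
    (0.60, False), (0.45, True), (0.30, False), (0.10, False),
]

def weighted_cost(threshold, cost_unjust_detention, cost_missed_risk):
    """Total cost of flagging everyone whose score is at or above the threshold."""
    cost = 0.0
    for score, truly_risky in cases:
        flagged = score >= threshold
        if flagged and not truly_risky:
            cost += cost_unjust_detention      # person detained unjustly
        elif not flagged and truly_risky:
            cost += cost_missed_risk           # risk to public safety missed
    return cost

def best_threshold(cost_unjust_detention, cost_missed_risk):
    """Pick the candidate threshold with the lowest total cost."""
    thresholds = [0.0, 0.2, 0.4, 0.5, 0.65, 0.75, 0.9, 1.0]
    return min(thresholds,
               key=lambda t: weighted_cost(t, cost_unjust_detention, cost_missed_risk))

# Same model, same data, two different value judgments:
print(best_threshold(cost_unjust_detention=10.0, cost_missed_risk=1.0))
print(best_threshold(cost_unjust_detention=1.0, cost_missed_risk=10.0))
```

The optimizer does exactly what it is told in both cases. The bias, if there is one, entered when someone chose the weights.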

2 - cost function

Explore the data

discover some of the bias

(trust me, there is more!)

it's not easy

there's covariance

missing data

where is the bias?


3 - data selection and preparation
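A first step in exploring data for bias is to look at how each group is represented and how the model performs on each group separately. The sketch below uses invented evaluation records and hypothetical group labels; a real audit would need far more care (covariance between features, missing data).

```python
from collections import defaultdict

# Hypothetical evaluation records: (group label, prediction was correct?).
records = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", True),
    ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False),
]

def per_group_summary(rows):
    """Return, for each group, its share of the data and its error rate."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, correct in rows:
        totals[group] += 1
        if not correct:
            errors[group] += 1
    n = len(rows)
    return {g: {"share_of_data": totals[g] / n,
                "error_rate": errors[g] / totals[g]}
            for g in totals}

summary = per_group_summary(records)
overall_error = sum(not correct for _, correct in records) / len(records)
print(overall_error)
print(summary)
```

A single overall error rate can hide a much higher error rate for an under-represented group, which is why the breakdown is worth computing before anything else.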

remove the bias...

(few try)


where is the bias?

3 - data selection and preparation

Machine learning learns from examples... what if the examples are ... racist?

GPT-3

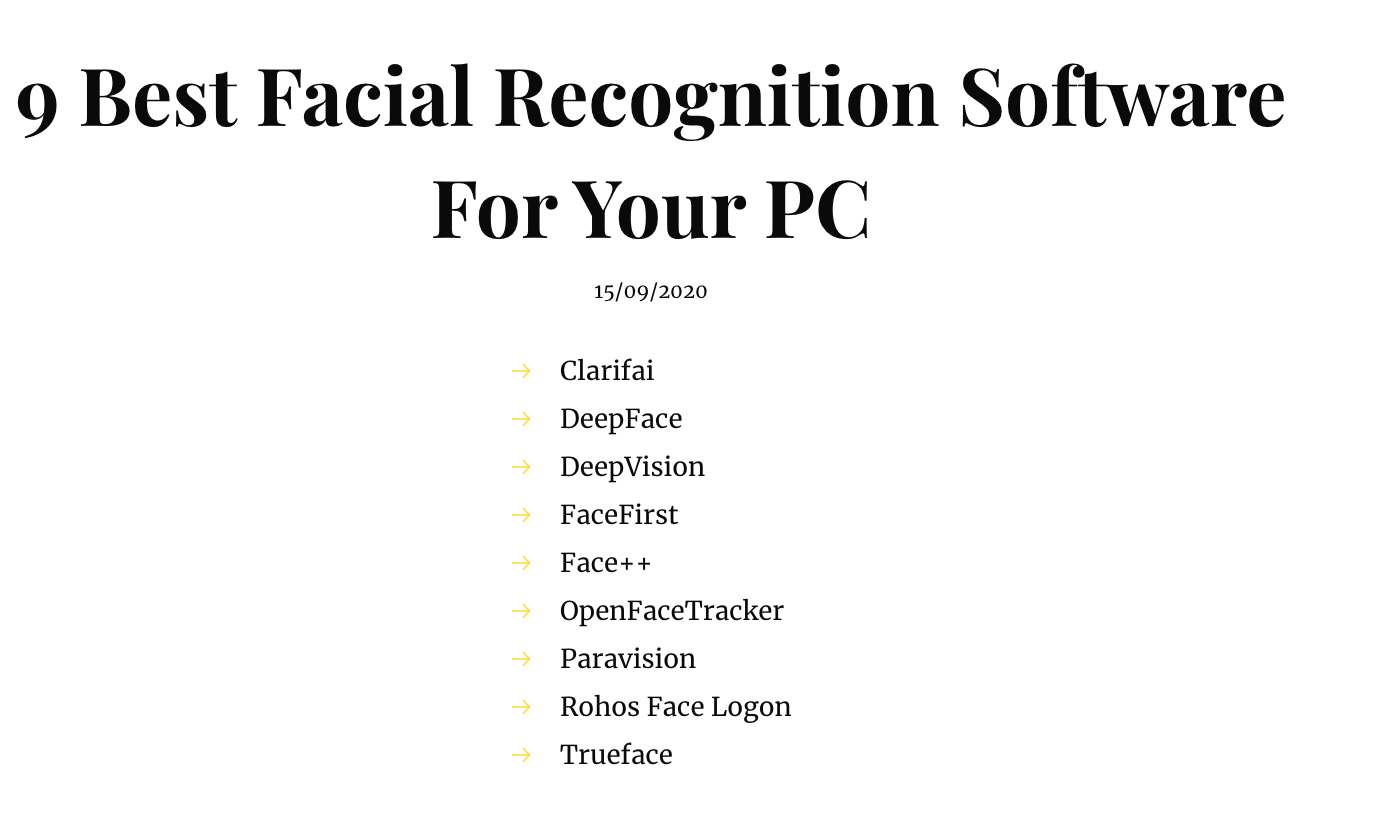

unethical applications of FR

https://modelviewculture.com/pieces/the-hidden-dangers-of-ai-for-queer-and-trans-people


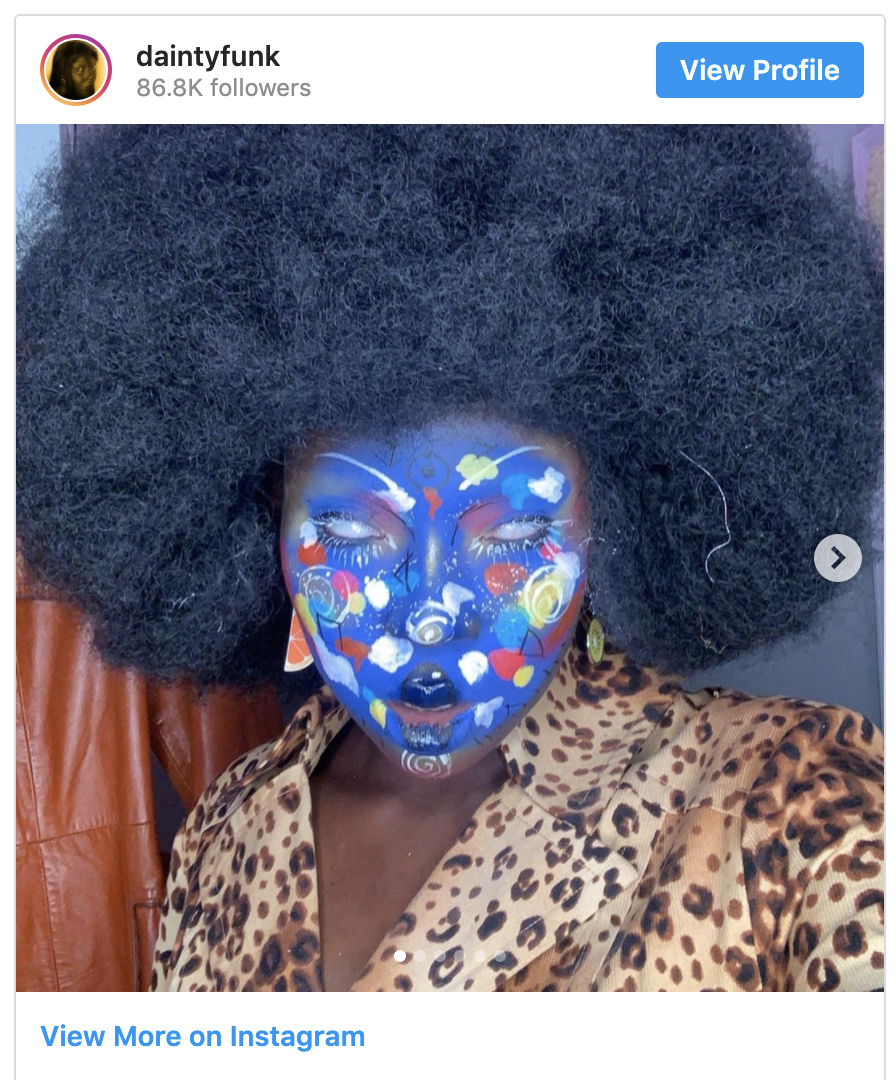

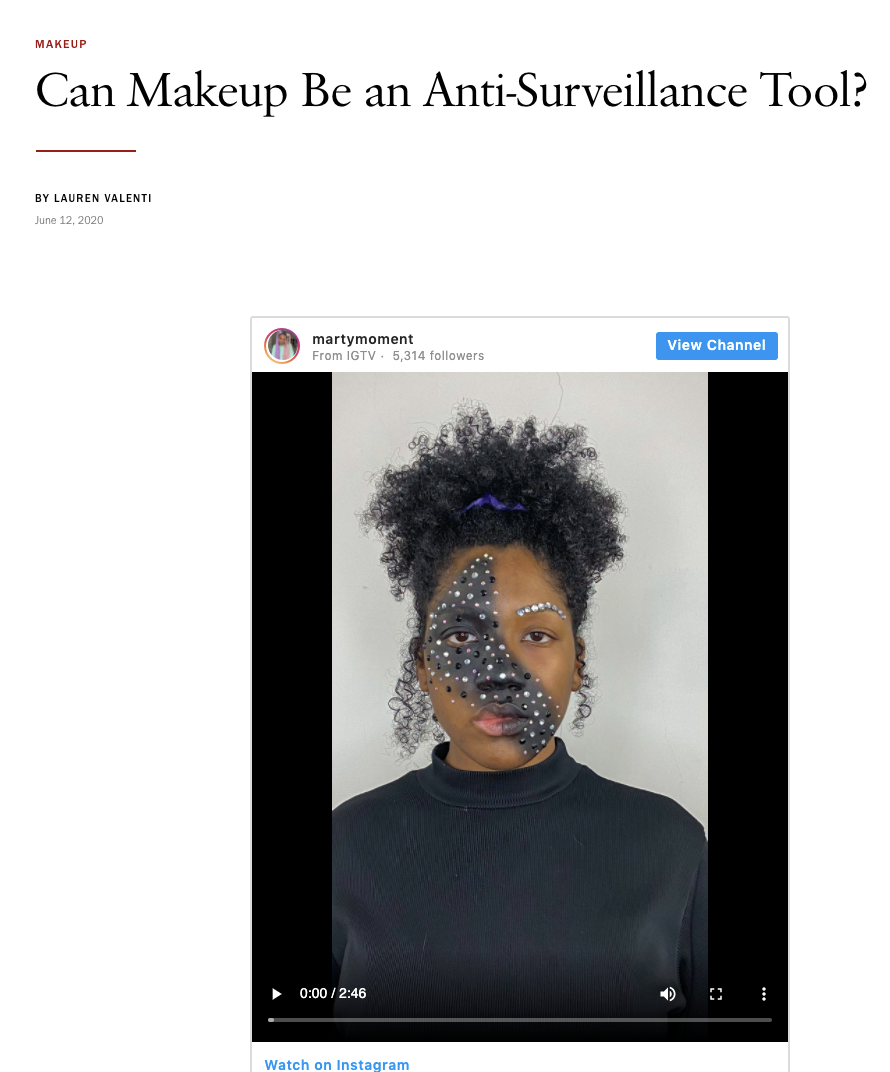

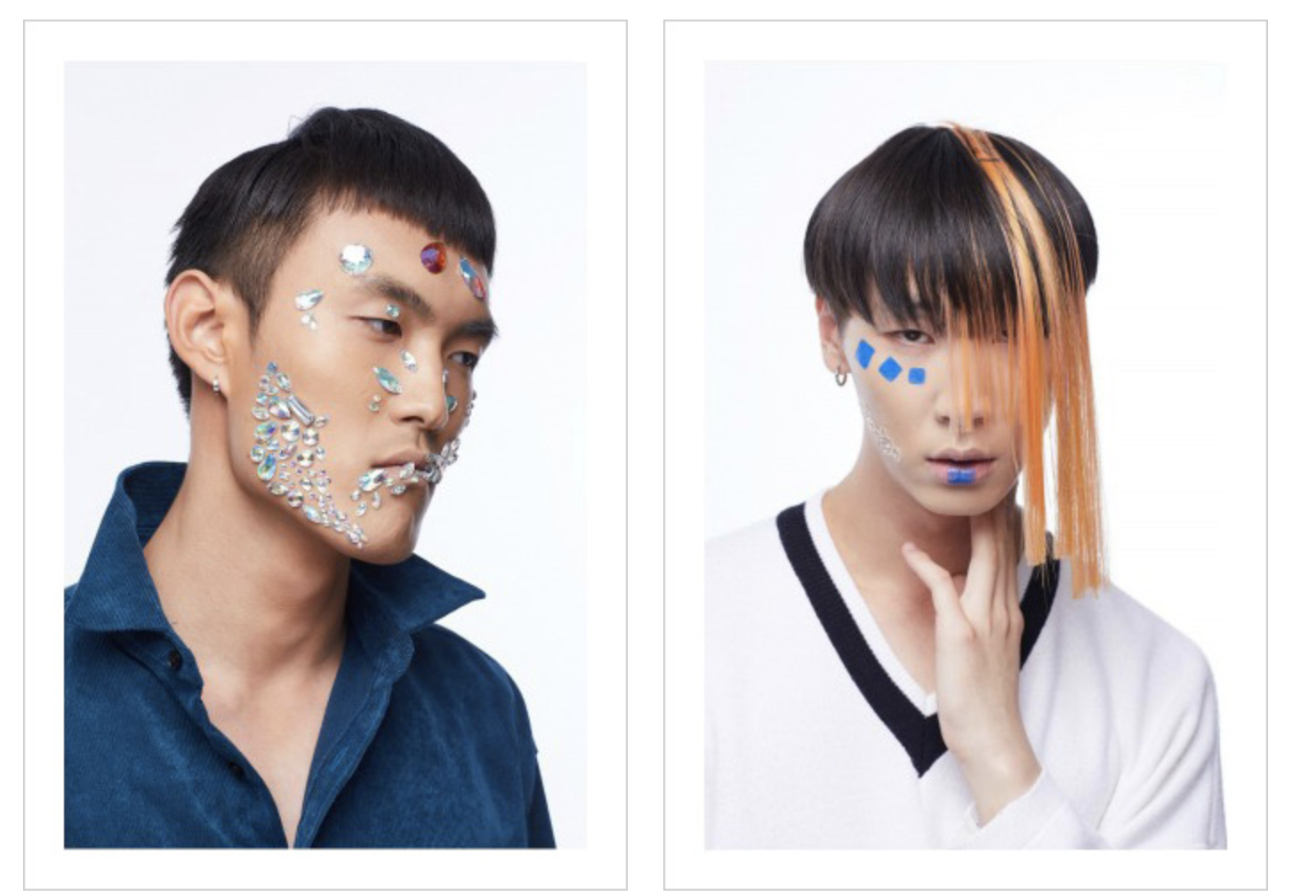

social protests

https://www.washingtonpost.com/technology/2020/06/12/facial-recognition-ban/

Policy and Resistance

https://www.washingtonpost.com/technology/2021/02/17/facial-recognition-biden/

https://www.washingtonpost.com/technology/2019/05/22/blasting-facial-recognition-technology-lawmakers-urge-regulation-before-it-gets-out-control/

ethics of AI

Challenge + Opportunity

Knowledge is power

- Astrophysical data is a sandbox. It has no social value, no privacy risk. We can safely learn about how bias builds into algorithms and how to correct it

With great power comes great responsibility

- Ethics of AI is a critical element of the education of a technologist

"Sharing is caring"

- AI is a transferable skill - use it for good!

the butterfly effect

We use astrophysics as a neutral and safe sandbox to learn how to develop and apply powerful tools.

Deploying these tools in the real world can do harm.

Ethics of AI is essential training that all data scientists should receive.

Joy Buolamwini

models are neutral, the bias is in the data (or is it?)

US-TOPIA

is a word I am borrowing from Margaret Atwood to describe the fact that the future is us.

However loathsome or loving we are, so will we be.

Whereas utopias are the stuff of dreams and dystopias are the stuff of nightmares, ustopias are what we create together when we are wide awake

key concepts

MACHINE LEARNING

- Machine Learning models are parametrized representations of "reality" where the parameters are learned from finite sets of realizations of that reality

- Unsupervised learning: all variables observed for all data, looking for natural grouping of datapoints in the N-dim space

- Supervised learning: a target variable is known for (a subset of) the data and the goal is to predict it for new (the rest of the) data

DATA ETHICS

- epistemic transparency: not all models are the same

- there is a tradeoff between epistemic transparency and the ability to handle complex data

- bias enters data science through (at least): data; model selection; target function and optimization choices; validation

thank you!

#UDCSS2020

@fedhere


fbianco@udel.edu

https://www.tandfonline.com/doi/full/10.1080/1369118X.2018.1477967

https://medium.com/payoff/transparency-in-data-science-9a8778083b3

https://www.americanscientist.org/article/a-peek-at-proprietary-algorithms


https://weaponsofmathdestructionbook.com/

The Ethics of the Ethics of AI https://www.oxfordhandbooks.com/view/10.1093/oxfordhb/9780190067397.001.0001/oxfordhb-9780190067397-e-2 , Thomas M. Powers and Jean-Gabriel Ganascia