Assignment 5

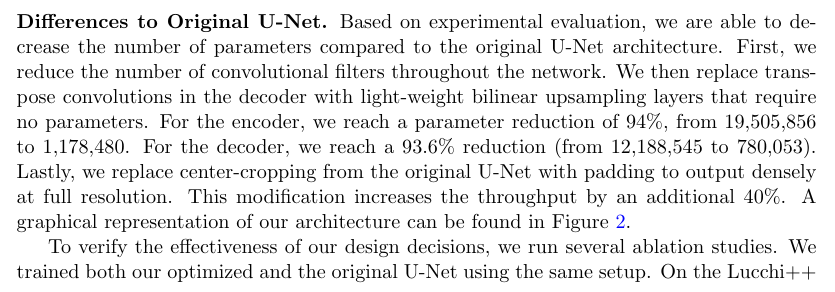

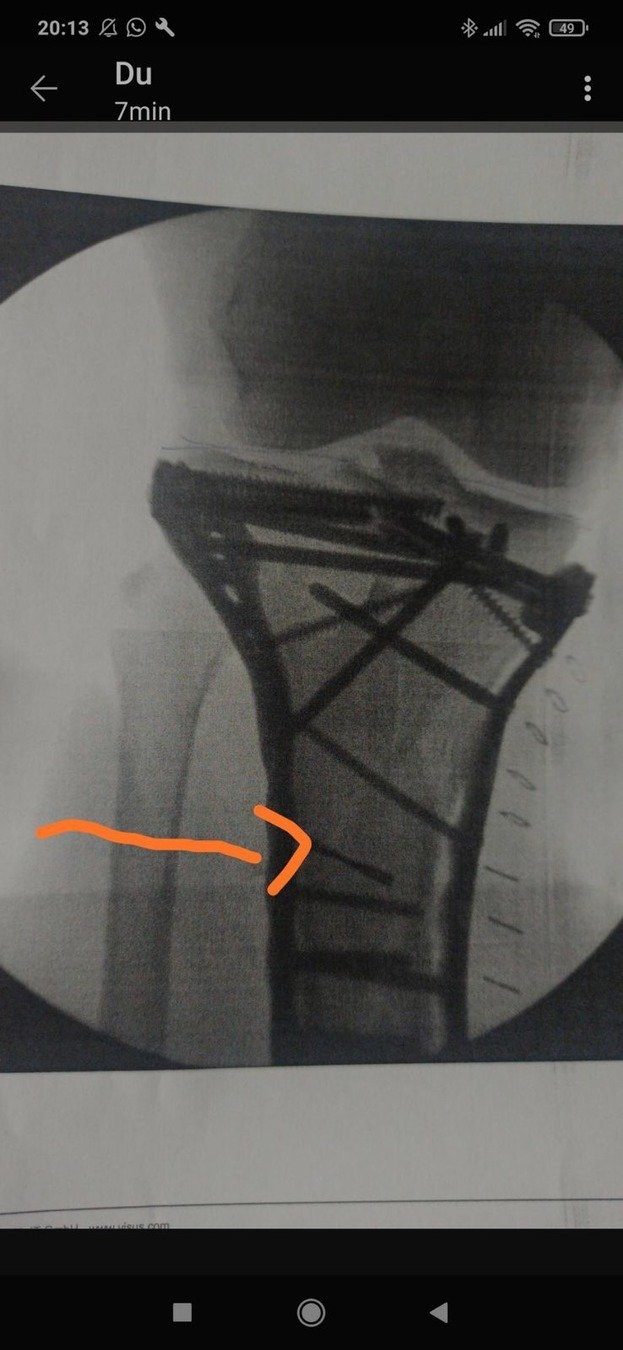

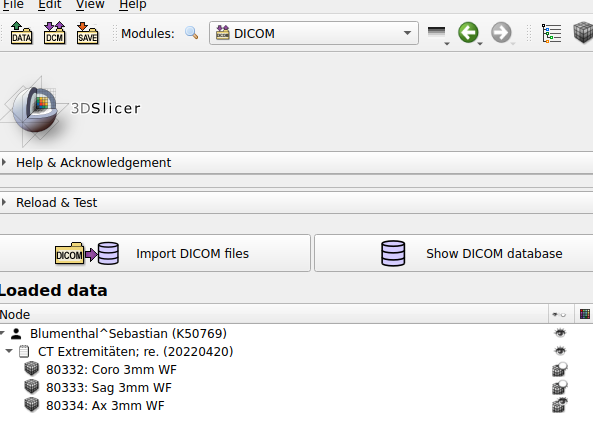

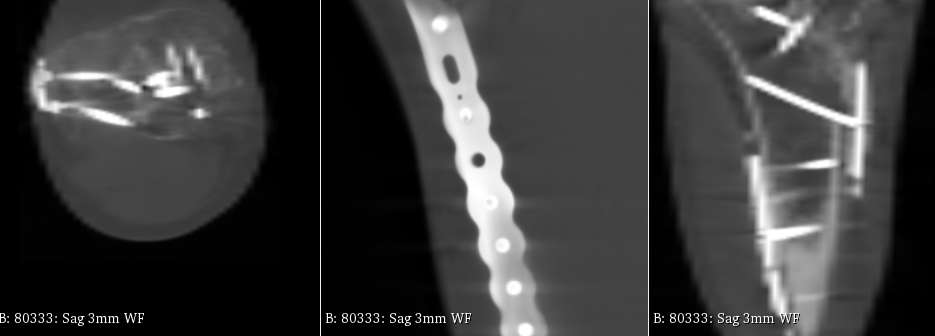

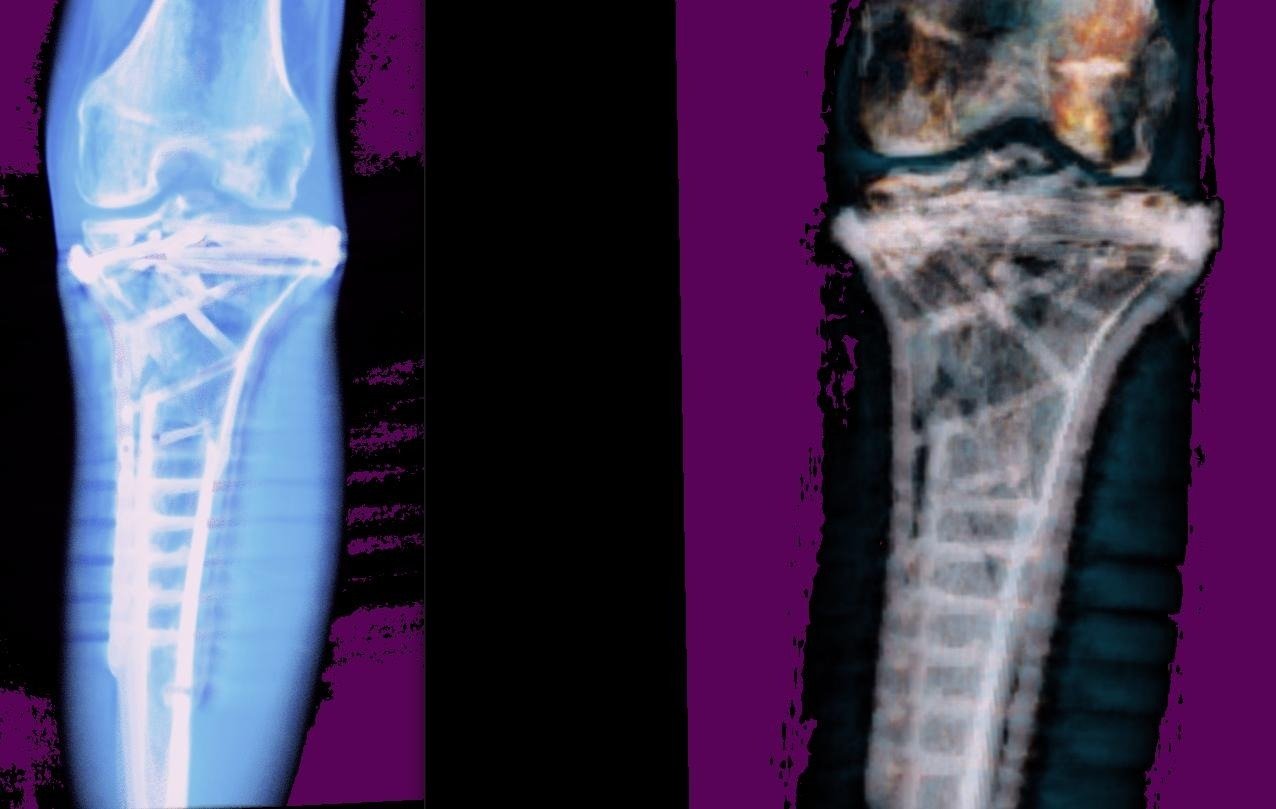

PyDicom

load CT volume, slice it, adjust window/level
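A minimal sketch of the three steps named above (load, slice, window/level). `pydicom.dcmread` and `pixel_array` are real pydicom features, but the helper names `load_ct_volume` and `apply_window`, the synthetic volume, and the window values are assumptions made for illustration, not the assignment's solution.

```python
import numpy as np


def load_ct_volume(paths):
    """Read DICOM slices with pydicom and stack them into a volume in Hounsfield units.

    Assumes every file is one slice of the same series and carries
    InstanceNumber, RescaleSlope and RescaleIntercept.
    """
    import pydicom  # imported here so the rest of the sketch runs without it

    slices = sorted((pydicom.dcmread(p) for p in paths),
                    key=lambda ds: int(ds.InstanceNumber))
    return np.stack([
        ds.pixel_array.astype(np.float32) * float(ds.RescaleSlope)
        + float(ds.RescaleIntercept)
        for ds in slices
    ])


def apply_window(hu, level, width):
    """Map Hounsfield units to 0..1 for display; values outside the window are clipped."""
    low = level - width / 2.0
    high = level + width / 2.0
    return (np.clip(hu, low, high) - low) / (high - low)


# Synthetic stand-in for a CT volume (slices, rows, columns), values in HU.
volume = np.linspace(-1000.0, 1000.0, 4 * 8 * 8, dtype=np.float32).reshape(4, 8, 8)

axial = volume[2, :, :]      # one slice along the first axis (assuming axial acquisition)
coronal = volume[:, 4, :]    # one slice along the second axis

# Soft-tissue style window: level 40 HU, width 400 HU (a common choice, not from the slides).
display = apply_window(axial, level=40, width=400)
```

With real data, `volume = load_ct_volume(list_of_dcm_paths)` would replace the synthetic array; the slicing and windowing lines stay the same.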

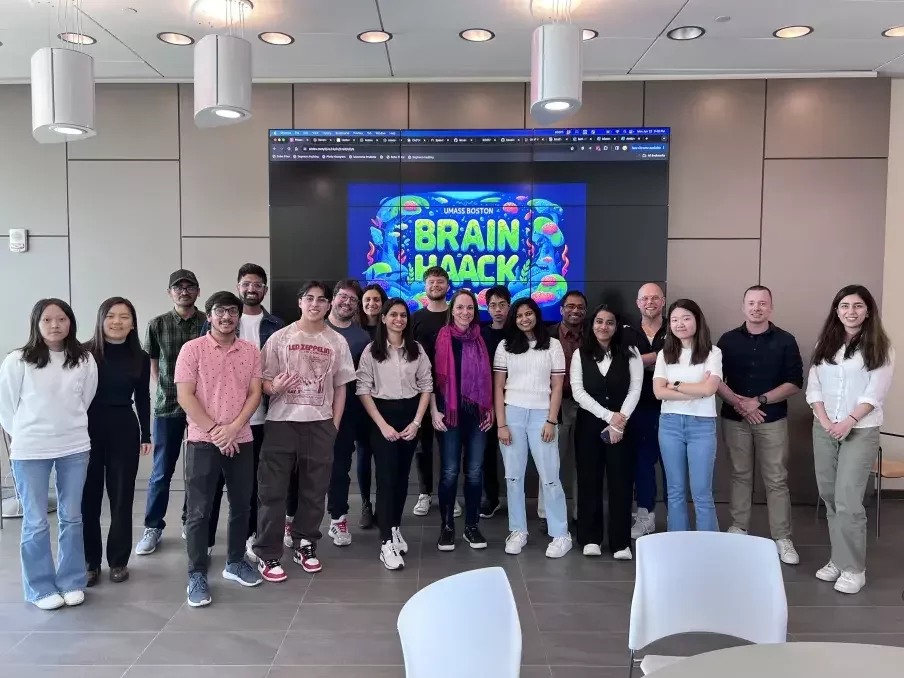

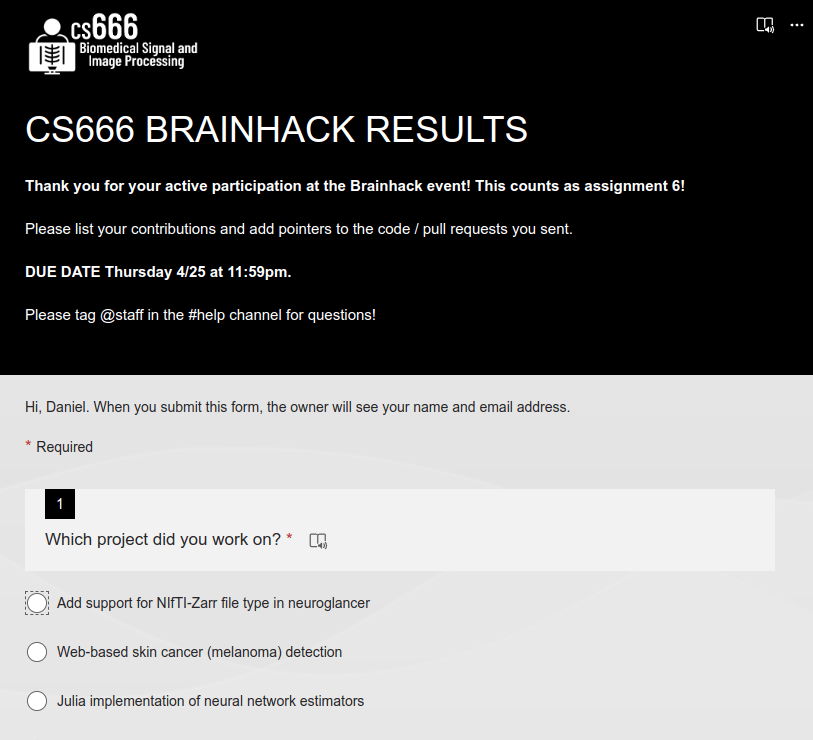

Assignment 6

3 Lectures

1 Assignment

2 Quizzes

4x Journal Clubs

Nuclear Imaging

Biometrics + Future

Final Recap

MEGAQUIZ

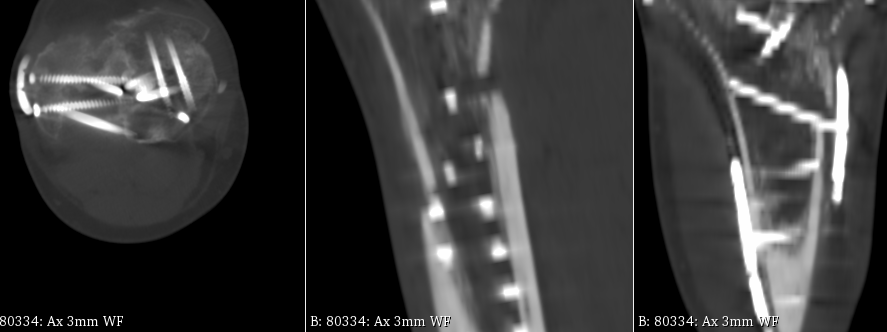

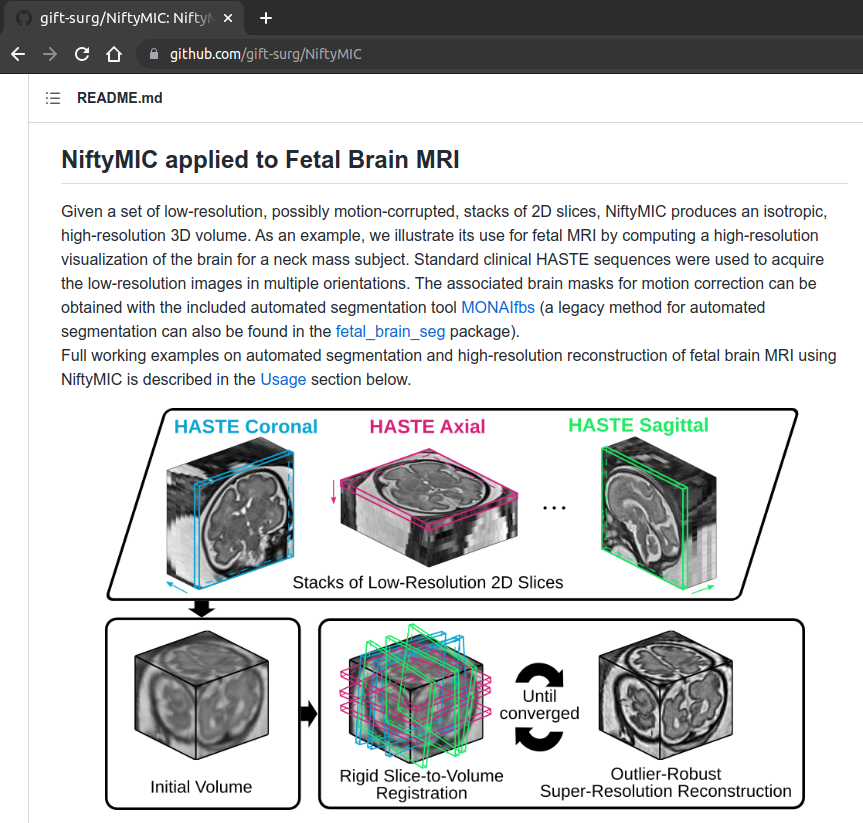

MIDL 2020

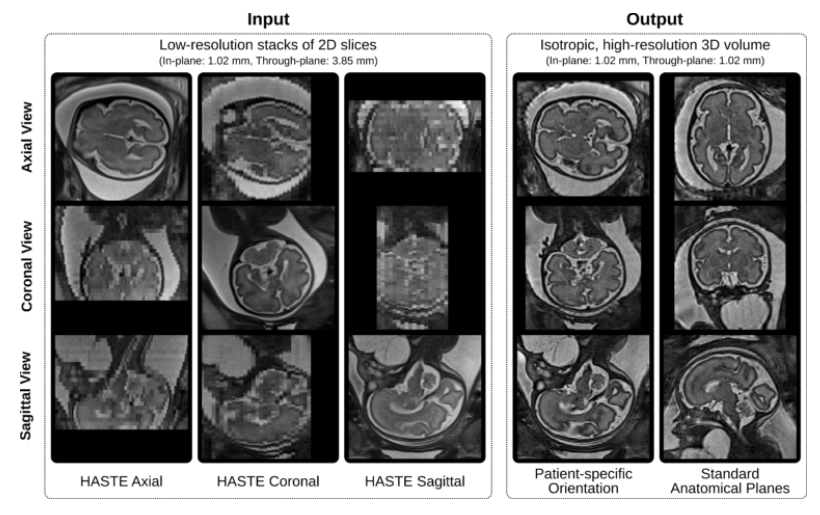

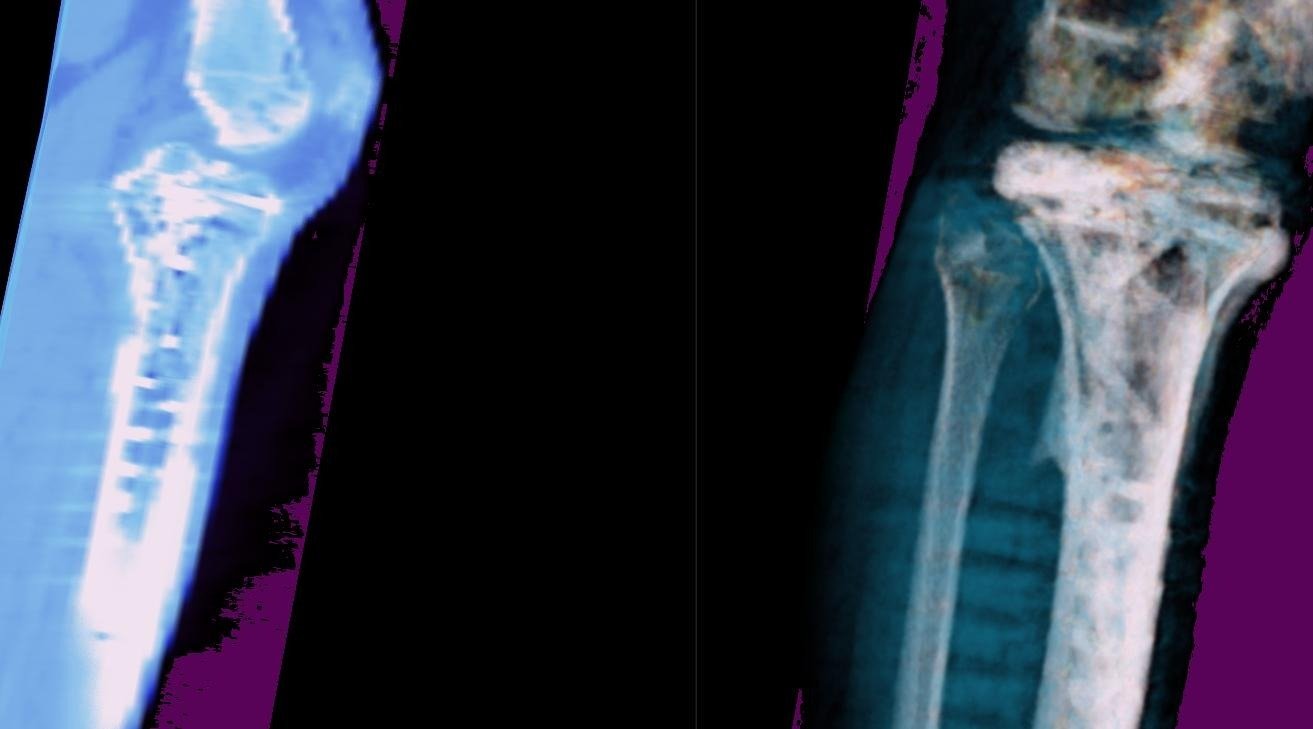

Coronal

Super-Resolution

1. Load Data

2. Setup Network

3. Train Network

4. Predict!

4 Steps

Data

Training

Testing

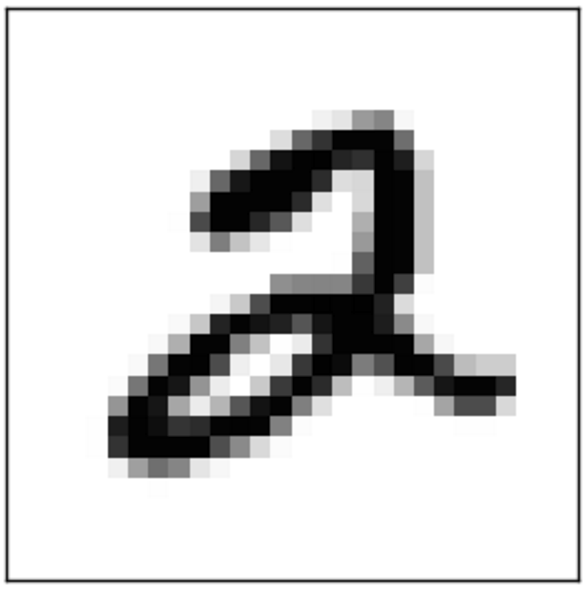

Training label: 2

Testing label: ?

But we know the answer!

X_train

y_train

X_test

y_test
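A small sketch of how the four arrays relate. In Keras, `keras.datasets.mnist.load_data()` returns them directly as `(X_train, y_train), (X_test, y_test)`; to stay self-contained, this example uses random arrays of the same shape as MNIST instead. The 80/20 split and the helper names `prepare_images` and `one_hot` are assumptions.

```python
import numpy as np

NUMBER_OF_CLASSES = 10
rng = np.random.default_rng(0)

# Synthetic stand-in for MNIST: 100 grayscale images of 28x28 pixels with digit labels.
images = rng.integers(0, 256, size=(100, 28, 28), dtype=np.uint8)
labels = rng.integers(0, NUMBER_OF_CLASSES, size=100)

# Hold out the last 20% for testing; the network never sees it during training.
split = int(0.8 * len(images))
X_train, X_test = images[:split], images[split:]
y_train, y_test = labels[:split], labels[split:]


def prepare_images(x):
    """Scale pixels to 0..1 and add the channel axis that Conv2D expects."""
    return (x.astype(np.float32) / 255.0)[..., np.newaxis]


def one_hot(y, number_of_classes):
    """Turn integer labels into one-hot rows, as categorical cross-entropy expects."""
    return np.eye(number_of_classes, dtype=np.float32)[y]


X_train, X_test = prepare_images(X_train), prepare_images(X_test)
y_train, y_test = one_hot(y_train, NUMBER_OF_CLASSES), one_hot(y_test, NUMBER_OF_CLASSES)

first_image = X_train[0]
print(X_train.shape, y_train.shape, X_test.shape, y_test.shape, first_image.shape)
```

The test labels `y_test` are known ("we know the answer") but are only used to check the predictions, never to train.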

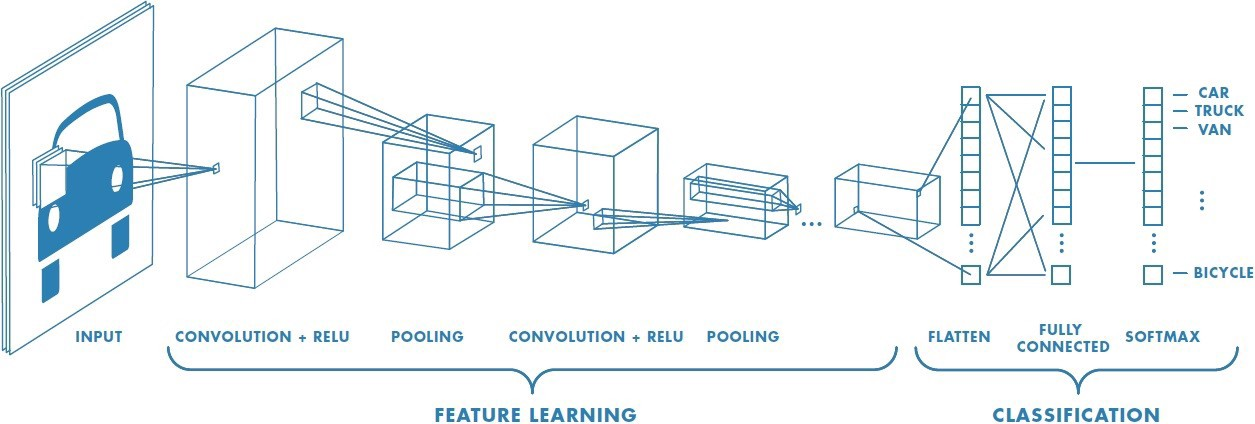

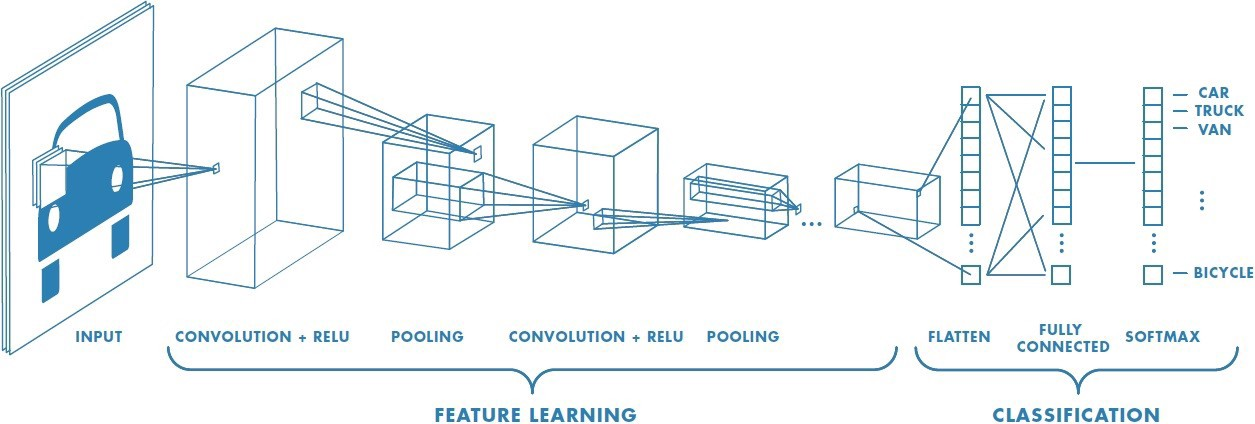

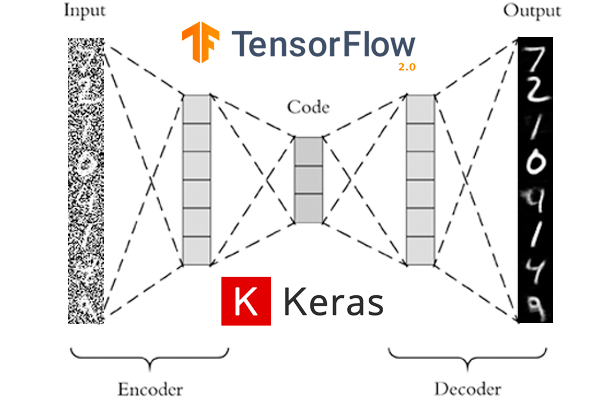

Setup Network

import keras

NUMBER_OF_CLASSES = 10

model = keras.models.Sequential()

model.add(keras.layers.Conv2D(32, kernel_size=(3, 3),
                              activation='relu',
                              input_shape=first_image.shape))

model.add(keras.layers.Conv2D(64, (3, 3), activation='relu'))

model.add(keras.layers.MaxPooling2D(pool_size=(2, 2)))

model.add(keras.layers.Dropout(0.25))

model.add(keras.layers.Flatten())

model.add(keras.layers.Dense(128, activation='relu'))

model.add(keras.layers.Dropout(0.5))

model.add(keras.layers.Dense(NUMBER_OF_CLASSES, activation='softmax'))
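To see what each layer of the network above does to the data, the sketch below tracks the output shape and the number of trainable parameters by hand. It assumes `first_image.shape` is `(28, 28, 1)` (MNIST) and the Keras defaults of stride 1 and no padding; the helper functions are hypothetical and not part of Keras. Dropout and Flatten add no parameters.

```python
def conv2d(shape, filters, kernel=3):
    """Output shape and parameter count of an unpadded convolution with stride 1."""
    h, w, c = shape
    params = kernel * kernel * c * filters + filters  # weights + biases
    return (h - kernel + 1, w - kernel + 1, filters), params


def max_pool(shape, pool=2):
    """Pooling shrinks height and width and has no parameters."""
    h, w, c = shape
    return (h // pool, w // pool, c), 0


def dense(units_in, units_out):
    """A fully connected layer: one weight per input/output pair plus biases."""
    return units_out, units_in * units_out + units_out


shape = (28, 28, 1)  # assumed first_image.shape for MNIST
total = 0

shape, params = conv2d(shape, 32)      # -> (26, 26, 32)
total += params
shape, params = conv2d(shape, 64)      # -> (24, 24, 64)
total += params
shape, params = max_pool(shape)        # -> (12, 12, 64)
total += params

flat = shape[0] * shape[1] * shape[2]  # Flatten: 12 * 12 * 64 values
units, params = dense(flat, 128)
total += params
units, params = dense(units, 10)       # one output per class
total += params

print(shape, flat, units, total)
```

Almost all parameters sit in the first Dense layer, which is why the pooling step before Flatten matters.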

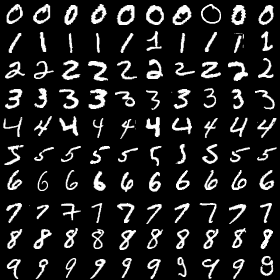

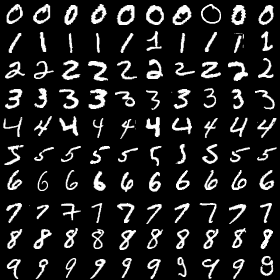

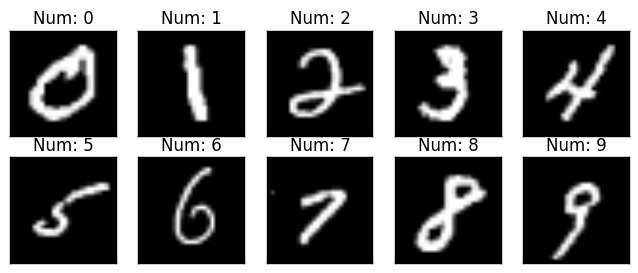

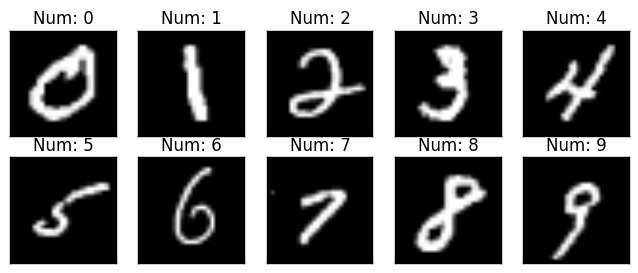

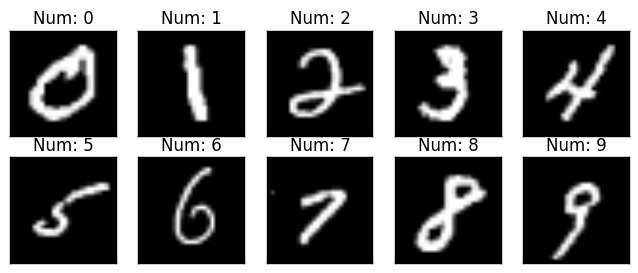

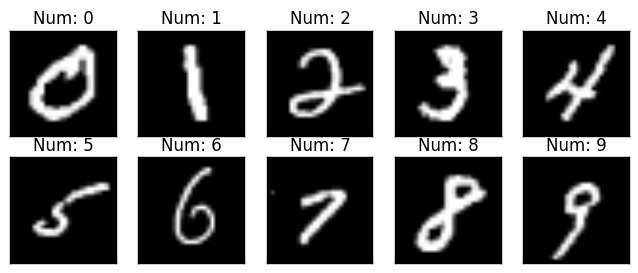

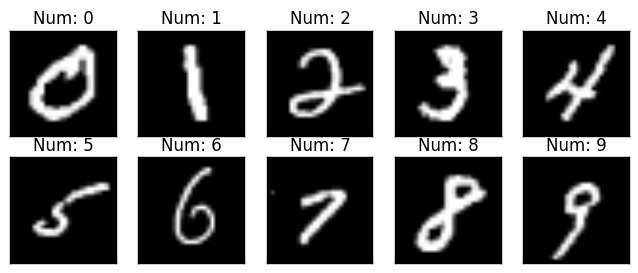

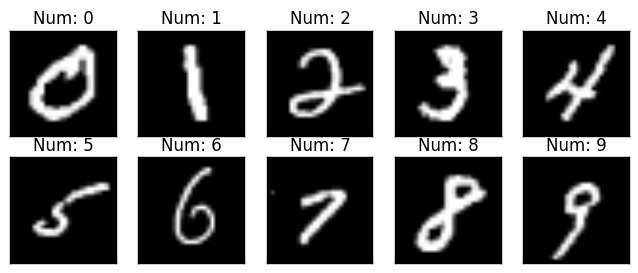

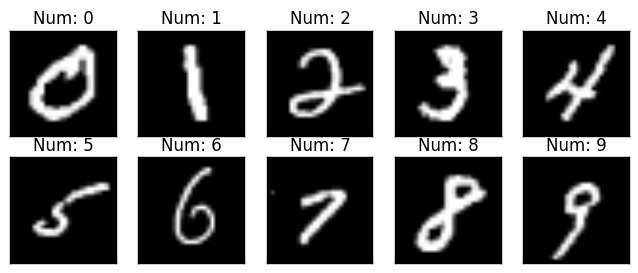

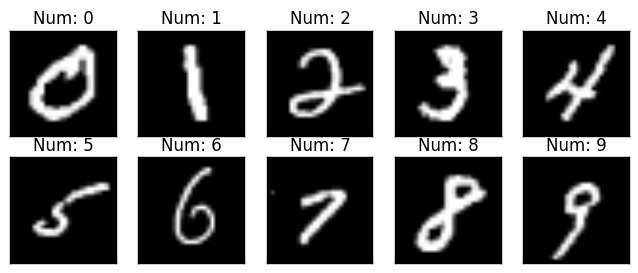

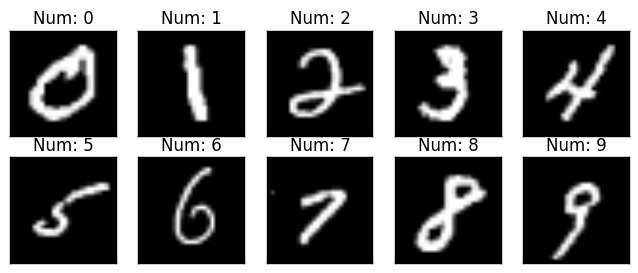

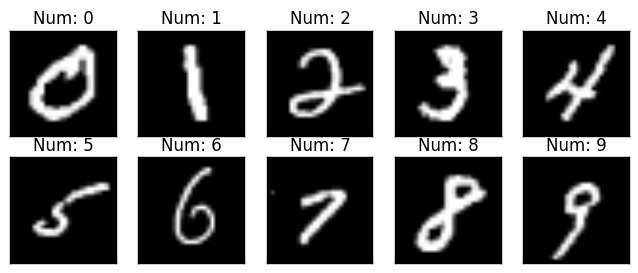

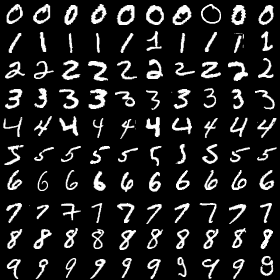

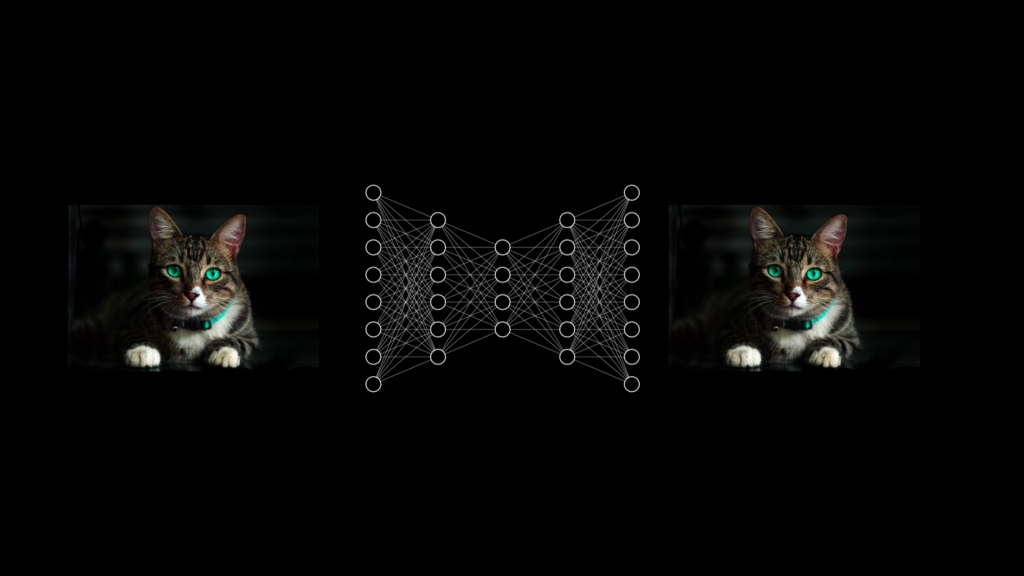

MNIST: NUMBER_OF_CLASSES = 10

Cats vs. Dogs: NUMBER_OF_CLASSES = 2

Setup Network

NUMBER_OF_CLASSES = 10

model = keras.models.Sequential()

model.add(keras.layers.Conv2D(32, kernel_size=(3, 3),
                              activation='relu',
                              input_shape=first_image.shape))

model.add(keras.layers.Conv2D(64, (3, 3), activation='relu'))

model.add(keras.layers.MaxPooling2D(pool_size=(2, 2)))

model.add(keras.layers.Dropout(0.25))

model.add(keras.layers.Flatten())

model.add(keras.layers.Dense(128, activation='relu'))

model.add(keras.layers.Dropout(0.5))

model.add(keras.layers.Dense(NUMBER_OF_CLASSES, activation='softmax'))

model.compile(loss=keras.losses.categorical_crossentropy,
              optimizer=keras.optimizers.Adadelta(),
              metrics=['accuracy'])

Train Network

9

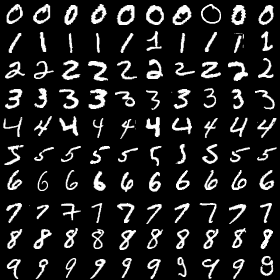

Training Data

Then we check how well the network predicts the evaluation data!

?

Loss should go down!

Repeated... (one pass over the training data is called an epoch)
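A hedged illustration of "loss goes down over epochs". Instead of the CNN, this sketch trains a single softmax layer with plain mini-batch gradient descent on synthetic 2-D points, so it runs in NumPy alone. The data, the learning rate and the batch size are assumptions; the loss is the same categorical cross-entropy used with the Keras model.

```python
import numpy as np

rng = np.random.default_rng(0)
NUMBER_OF_CLASSES = 3

# Synthetic data: three well-separated 2-D clusters, one per class.
centers = np.array([[0.0, 4.0], [4.0, 0.0], [-4.0, -4.0]])
y = rng.integers(0, NUMBER_OF_CLASSES, size=300)
X = centers[y] + rng.normal(size=(300, 2))
Y = np.eye(NUMBER_OF_CLASSES)[y]  # one-hot labels


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the row maximum for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def categorical_crossentropy(y_true, y_pred):
    return float(-np.mean(np.sum(y_true * np.log(y_pred + 1e-12), axis=1)))


W = np.zeros((2, NUMBER_OF_CLASSES))
b = np.zeros(NUMBER_OF_CLASSES)
learning_rate = 0.05
batch_size = 32
losses = []

for epoch in range(20):  # one epoch = one pass over all training samples
    for start in range(0, len(X), batch_size):
        xb = X[start:start + batch_size]
        yb = Y[start:start + batch_size]
        # Gradient of the mean cross-entropy with respect to the logits.
        grad = (softmax(xb @ W + b) - yb) / len(xb)
        W -= learning_rate * (xb.T @ grad)
        b -= learning_rate * grad.sum(axis=0)
    losses.append(categorical_crossentropy(Y, softmax(X @ W + b)))

print(f"loss after epoch 1: {losses[0]:.3f}, after epoch 20: {losses[-1]:.3f}")
```

In Keras the same loop is hidden inside `model.fit(X_train, y_train, epochs=..., batch_size=...)`, which reports the loss after every epoch.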

Predict!

Testing Data

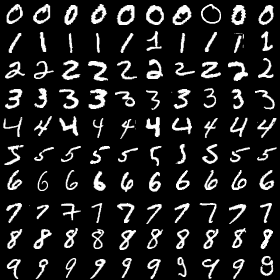

Digit classes: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9

Measure how well the CNN does...
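One way to measure this, sketched with made-up numbers: take the most likely class per test image and compare it with the known label. The probabilities below are hypothetical stand-ins for what `model.predict(X_test)` would return; the `accuracy` and `confusion_matrix` helpers are written here for illustration.

```python
import numpy as np

NUMBER_OF_CLASSES = 10


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class matches the true class."""
    return float(np.mean(y_true == y_pred))


def confusion_matrix(y_true, y_pred, number_of_classes):
    """Rows are true classes, columns are predicted classes."""
    matrix = np.zeros((number_of_classes, number_of_classes), dtype=int)
    np.add.at(matrix, (y_true, y_pred), 1)
    return matrix


# Hypothetical softmax outputs for five test images (each row sums to 1).
y_test = np.array([3, 7, 7, 0, 9])
probabilities = np.full((5, NUMBER_OF_CLASSES), 0.05)
for row, predicted_class in enumerate([3, 7, 1, 0, 9]):
    probabilities[row, predicted_class] = 0.55

y_pred = probabilities.argmax(axis=1)  # most likely class per image
test_accuracy = accuracy(y_test, y_pred)
cm = confusion_matrix(y_test, y_pred, NUMBER_OF_CLASSES)

print(test_accuracy)
print(cm[7])  # how the true 7s were classified
```

The confusion matrix shows which digits get mixed up (here one 7 was read as a 1), which a single accuracy number hides.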

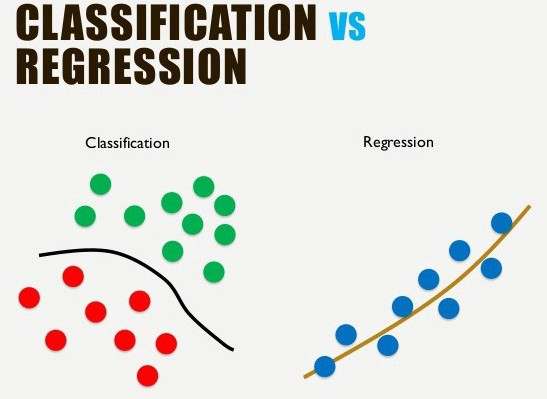

Classification: put samples into different classes

Regression: estimate values, "fitting"

Regression example: an output in 0..1 corresponds to an angle in 0..90 degrees
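A short sketch of that mapping, assuming the angle is scaled linearly into the 0..1 range for training and scaled back after prediction. The function names and the clipping of out-of-range outputs are assumptions.

```python
import numpy as np

MAX_ANGLE_DEGREES = 90.0


def angle_to_target(degrees):
    """Scale an angle in 0..90 degrees to a regression target in 0..1."""
    return np.asarray(degrees, dtype=np.float32) / MAX_ANGLE_DEGREES


def target_to_angle(target):
    """Map a network output back to degrees, clipping to the valid range."""
    clipped = np.clip(np.asarray(target, dtype=np.float32), 0.0, 1.0)
    return clipped * MAX_ANGLE_DEGREES


targets = angle_to_target([0, 45, 90])   # what the network is trained to output
angles = target_to_angle([0.25, 1.2])    # what we report after prediction
print(targets, angles)
```

Unlike classification, the network then needs only one output value per sample, typically trained with a loss such as mean squared error.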

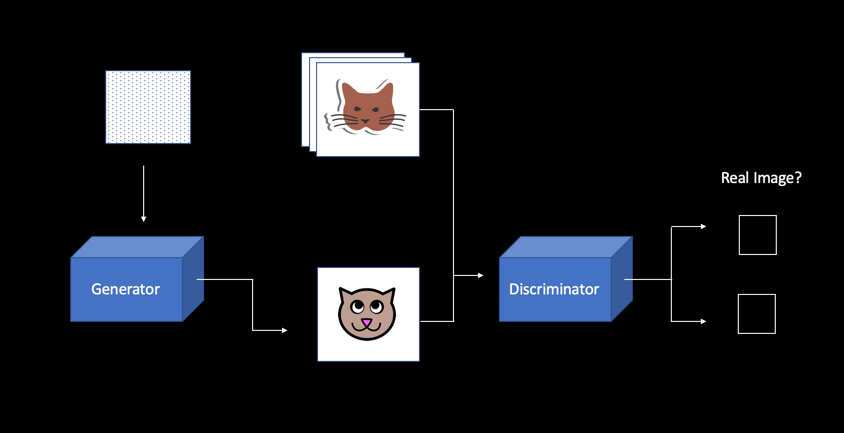

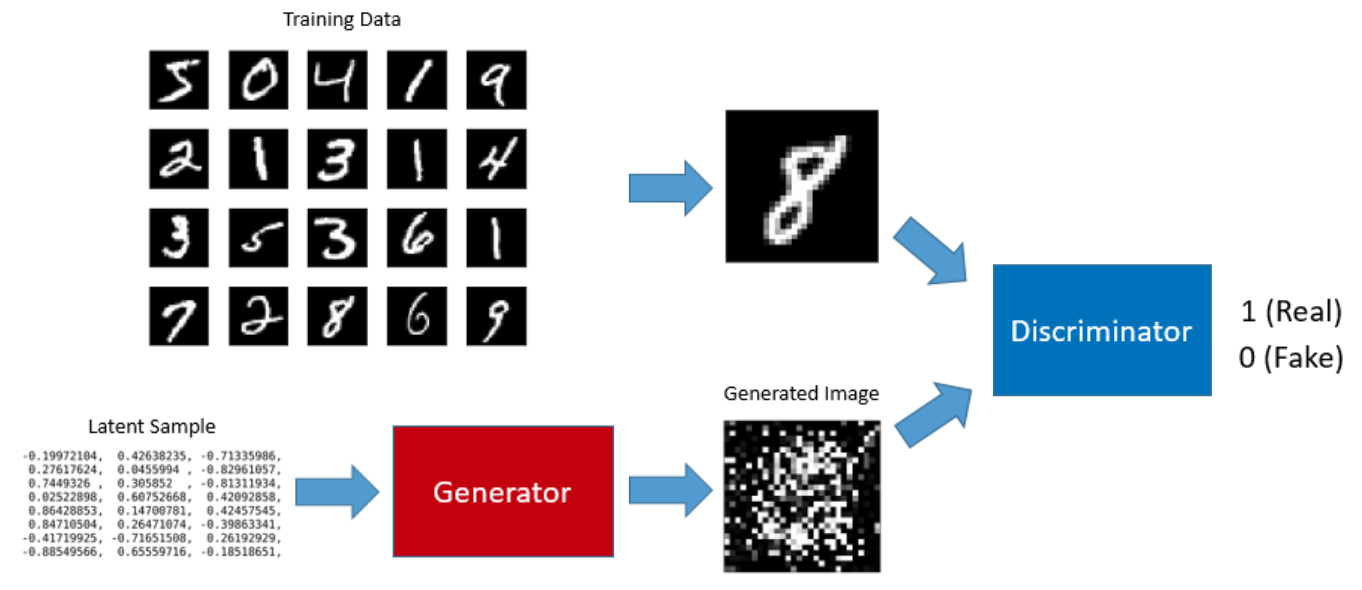

GANs

Generative Adversarial Networks

Create fake images and tune them to look real!

Finetuning

Noise

Ian Goodfellow

Director of Machine Learning

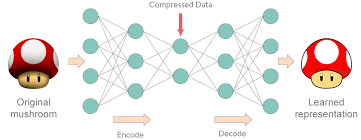

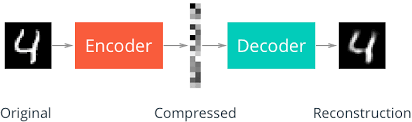

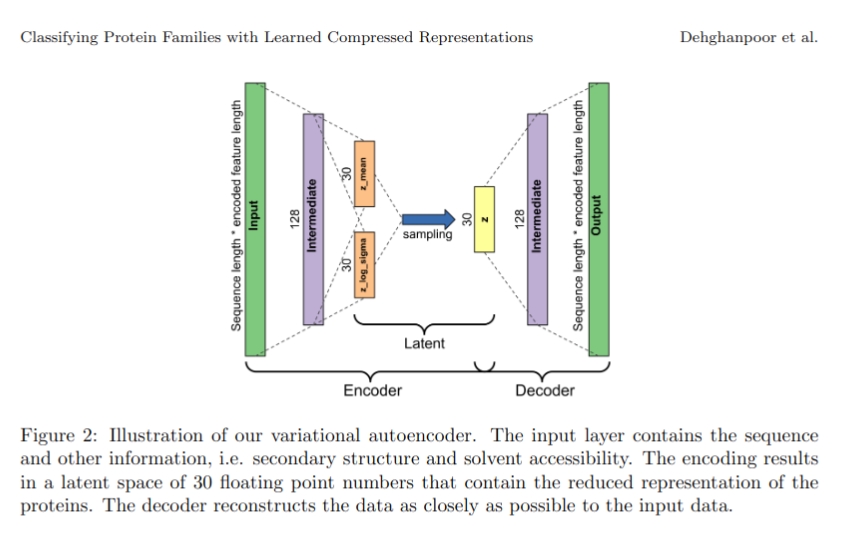

Autoencoders

Compressed representations allow more efficient processing!

80%

Latent Space

Denoising
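To make "latent space" and "denoising" concrete without a deep learning framework, the sketch below swaps the neural autoencoder for its simplest relative: a linear encoder/decoder obtained in closed form with PCA (via SVD). The data is synthetic and all sizes (64 features, 8 latent values, noise level) are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 500 samples with 64 features that really live in an 8-D subspace.
LATENT_DIM = 8
clean = rng.normal(size=(500, LATENT_DIM)) @ rng.normal(size=(LATENT_DIM, 64))
noisy = clean + 0.5 * rng.normal(size=clean.shape)

# "Train" a linear autoencoder in closed form: the top singular vectors of the
# centered data give the best linear encoder/decoder pair (this is PCA).
mean = noisy.mean(axis=0)
_, _, vt = np.linalg.svd(noisy - mean, full_matrices=False)
components = vt[:LATENT_DIM]  # shape (8, 64)


def encode(x):
    """Compress 64 values per sample into 8 latent values."""
    return (x - mean) @ components.T


def decode(z):
    """Reconstruct 64 values per sample from 8 latent values."""
    return z @ components + mean


latent = encode(noisy)
denoised = decode(latent)

noisy_error = float(np.mean((noisy - clean) ** 2))
denoised_error = float(np.mean((denoised - clean) ** 2))
print(latent.shape, noisy_error, denoised_error)
```

Squeezing the data through the small latent space keeps the structure and discards most of the noise; a neural autoencoder does the same with nonlinear encoder and decoder layers.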