New Zealand Summer Workshop

10th November 2023

Japan-New Zealand Catalyst Grant

Assoc Professor Ian Welch

ian.welch@vuw.ac.nz

https://www.linkedin.com/in/ianswelch

Te Herenga Waka, Victoria University of Wellington

Who am I?

- Associate Professor @ Victoria University of Wellington (VUW)

- PhD and Masters (Newcastle UK), BCom (VUW)

- Application security policies, malicious- and fault-tolerant systems, loosely coupled self-adaptive software systems

- Lead the cybersecurity teaching stream at VUW

- Focus on network applications and people

Owhiti research group

Prior work

Privacy-ensuring e-commerce transactions (Ben Palmer) - multiparty computation, interactive zero-knowledge proofs, formal modeling

Localisation of Attacks, Combating Browser-Based Geo-Information and IP Tracking Attacks (Masood Mansoori) - empirical research, HAZOP for experiments

Early detection of ransomware (Shabbir Abbasi) - evolutionary computing, sequence-aware machine learning

One-shot learning for malware detection (Jinting Zhu) - Siamese neural networks, adversarial machine learning

Automated threat analysis for IoT devices (Junaid Haseeb) - unsupervised machine learning, autoencoders, semantic information extraction

Projects soon to finish

- Detection of advanced persistent attacks (Abdullah Al-Mamoun) - long-lasting, slow attacks with few examples; hybrid fusion of data sources, feature selection, evolutionary computing

- Adaptable network application honeypots (Maryam Van Naseri) - optimisation problem, formal modeling, empirical experiments

- Understanding and influencing home users' cybersecurity (Lisa Patterson) - quantitative and qualitative research, social nudges to improve cybersecurity

New and ongoing projects

- Explainable AI for cybersecurity (Dedy Hendro, 2027) - xAI, intrusion detection

- Trusted computing without hardware support (Paul Dagger, part-time, 2028) - multiparty computation

- Mobile-first disease detection for native NZ flora (Jamey Hepi, 2026)

- Safety and security for consumer cyberphysical systems (interdisciplinary)

- Effective cybersecurity programmes in the Pacific (interdisciplinary)

- Indigicloud - edge clouds for digital marae, working with Te Tii Marae; applying for a grant related to zero-knowledge architecture and continuous authentication

- Intrusion detection for CPS using digital twins (waiting)

- Improving Tor anonymity (visitor)

Collaboration ideas

- Malware detection, intrusion detection - feature construction/evolutionary computing (w/ Harith Al-Sahaf).

- Use of intelligent transport systems as case studies for new and incoming students working on explainable AI (w/ Yi Mei, Andrew Lensen) and digital twins (w/ Masood Mansoori, Arman Khouzani).

- Deployment of honeypots in other organisations (w/ Masood Mansoori)

- Adversarial machine learning (no current students; an opportunity to explore with honours students).

Catalyst grant

Potential avenues.

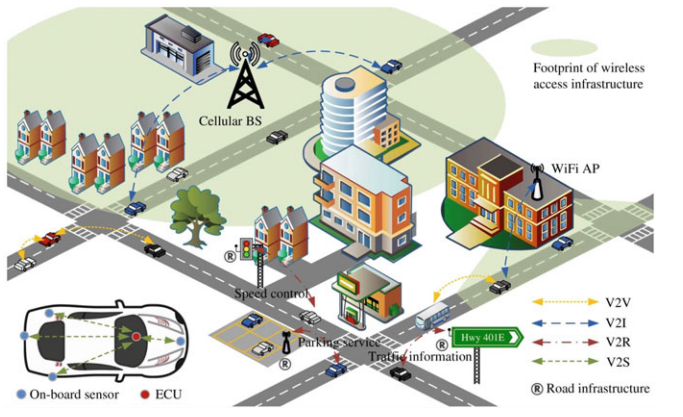

Cybersecurity for Intelligent Transport Systems.

- cars, pedestrians, (cycles)

- smart city interaction

Two recent survey papers:

- threats from malware

- adversarial machine learning

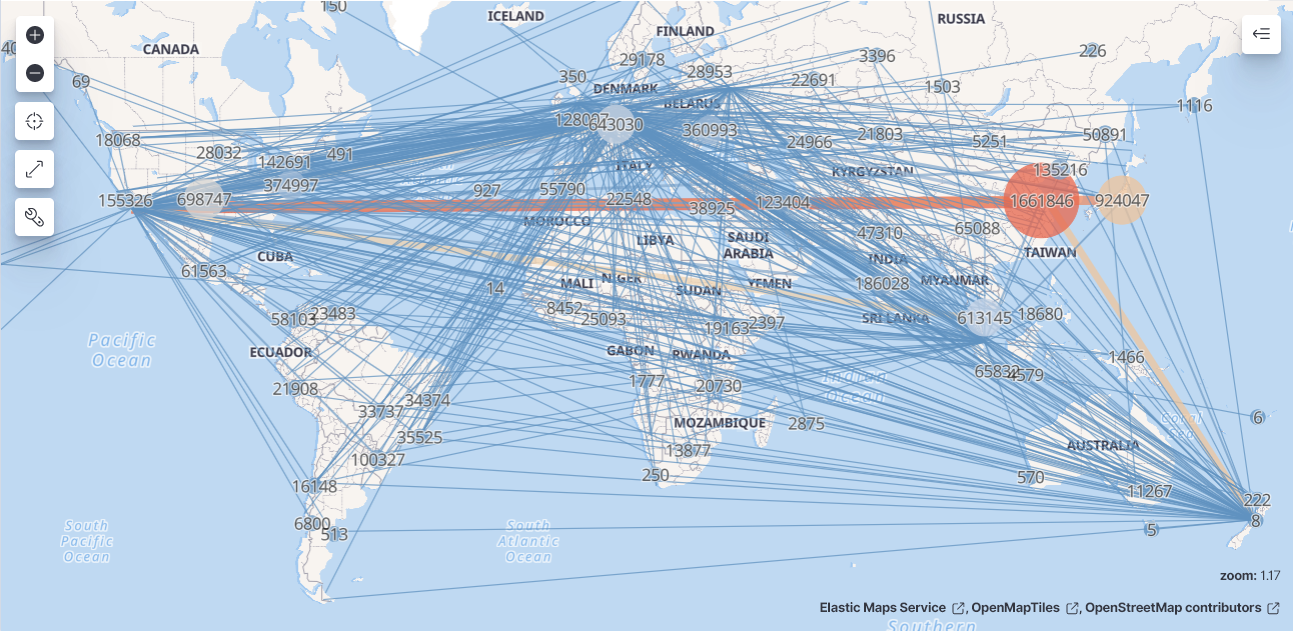

Malware

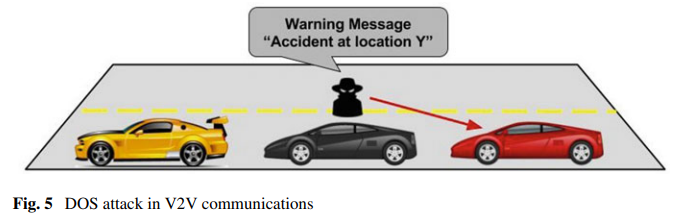

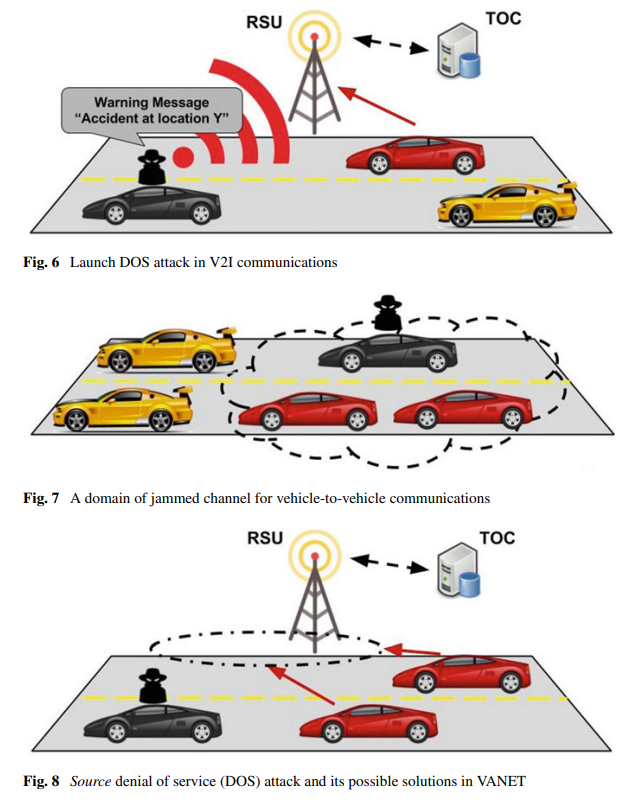

Inter-vehicle denial-of-service attacks.

Targets vehicles or infrastructure.

Basic - resource exhaustion.

Extended - jam channels.

Distributed denial of service - vehicles and infrastructure.

Solutions (voting to ignore bad actors, blockchain)


Al-Sabaawi, A., Al-Dulaimi, K., Foo, E., & Alazab, M. (2021). Addressing Malware Attacks on Connected and Autonomous Vehicles: Recent Techniques and Challenges. In M. Stamp, M. Alazab, & A. Shalaginov (Eds.), Malware Analysis Using Artificial Intelligence and Deep Learning (pp. 97–119). Springer International Publishing. https://doi.org/10.1007/978-3-030-62582-5_4
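The voting defence listed above ("voting to ignore bad actors") can be illustrated with a minimal sketch, assuming each vehicle collects boolean reports about the same event (e.g. "hazard ahead") from its neighbours and stops counting any neighbour that has been outvoted too often. The class `MajorityVoteFilter` and its `max_strikes` threshold are hypothetical illustrations, not the scheme described in the survey.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


class MajorityVoteFilter:
    """Hypothetical sketch: majority voting that ignores repeatedly outvoted nodes."""

    def __init__(self, max_strikes: int = 2) -> None:
        self.max_strikes = max_strikes
        self.strikes = defaultdict(int)  # node id -> number of times outvoted

    def is_trusted(self, node: str) -> bool:
        return self.strikes[node] < self.max_strikes

    def decide(self, reports: Dict[str, bool]) -> Optional[bool]:
        """Return the majority verdict of trusted reporters, or None if no votes or a tie."""
        trusted = {node: vote for node, vote in reports.items() if self.is_trusted(node)}
        counts = Counter(trusted.values())
        if counts[True] == counts[False]:  # covers both "no trusted votes" and ties
            return None
        verdict = counts[True] > counts[False]
        # Penalise trusted reporters that disagreed with the majority.
        for node, vote in trusted.items():
            if vote != verdict:
                self.strikes[node] += 1
        return verdict


# Usage: a misbehaving node "M" is outvoted twice and is then ignored.
f = MajorityVoteFilter(max_strikes=2)
for _ in range(2):
    f.decide({"A": True, "B": True, "M": False})
print(f.is_trusted("M"))  # False
```

A simple majority only helps while honest vehicles outnumber colluding ones, which is one reason Sybil attacks (one attacker posing as many vehicles) are a concern for voting-based schemes.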

Malware

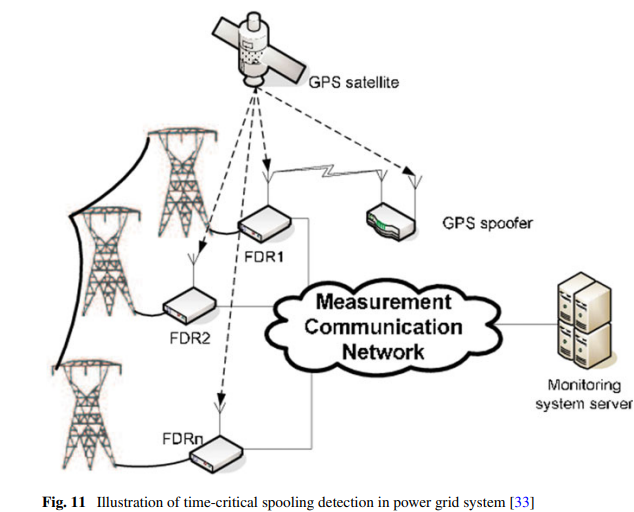

Other inter-vehicle attacks.

GPS spoofing - solution (as in the power grid) is spoofing detection.

Masquerading and Sybil attacks - concealment and duplication.

Impersonation - steal identity.

Effects - fake damaging roadway, traffic congestion, crashes.
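One simple form of GPS spoofing detection is a kinematic plausibility check: if two consecutive position fixes imply a speed no road vehicle could reach, the newer fix is suspect. The sketch below assumes fixes arrive as (time, latitude, longitude) and uses a hypothetical 70 m/s (252 km/h) ceiling; it is an illustration only, not the detection method used in the power grid or in the cited survey.

```python
import math
from dataclasses import dataclass
from typing import List

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


@dataclass
class Fix:
    t: float    # timestamp in seconds
    lat: float  # degrees
    lon: float  # degrees


def haversine_m(a: Fix, b: Fix) -> float:
    """Great-circle distance between two fixes, in metres."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def flag_implausible_jumps(fixes: List[Fix], max_speed_mps: float = 70.0) -> List[int]:
    """Return indices of fixes whose implied speed from the previous fix exceeds max_speed_mps."""
    flagged = []
    for i in range(1, len(fixes)):
        dt = fixes[i].t - fixes[i - 1].t
        if dt <= 0:
            flagged.append(i)  # non-increasing timestamps are themselves suspicious
            continue
        if haversine_m(fixes[i - 1], fixes[i]) / dt > max_speed_mps:
            flagged.append(i)
    return flagged


wellington = [
    Fix(0.0, -41.2865, 174.7762),
    Fix(10.0, -41.2855, 174.7762),  # about 111 m in 10 s: plausible
    Fix(11.0, -36.8485, 174.7633),  # "jumps" to Auckland in 1 s: implausible
]
print(flag_implausible_jumps(wellington))  # [2]
```

Because each fix is compared only with its predecessor, a single jump is flagged once; cross-checking against other on-board sensors would be needed to keep rejecting a consistent fake track.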

Malware

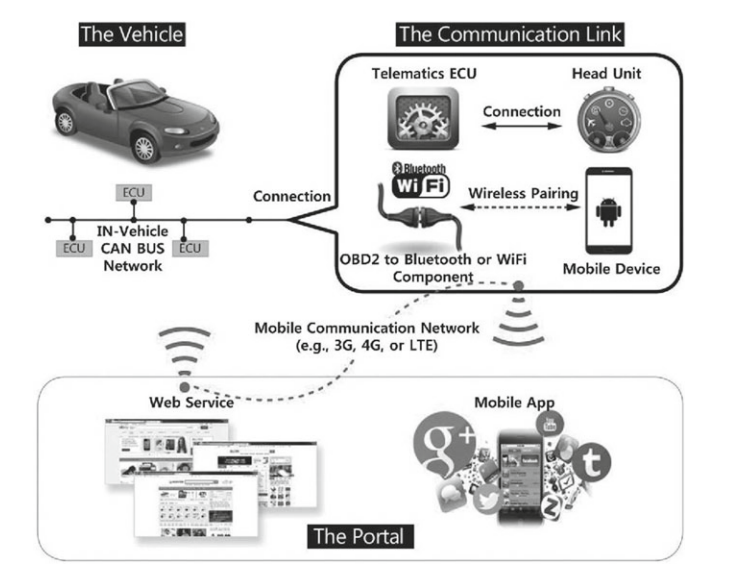

Intra-vehicle communication.

- indirect physical access (media system via MP3 files, docking ports, CAN bus via OBD port)

- short-range wireless access (Bluetooth, keyless entry, tyre pressure monitoring)

- long-range wireless (remote telematics, cellular access)

Defences

Inter-vehicle communication.

- voting to manage VANET membership

- trust-based sensor networks

- authentication
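For the authentication defence, a minimal sketch of vehicle-to-vehicle message authentication is shown below, assuming a pre-shared symmetric key and a one-second freshness window to limit replays. This is a deliberate simplification: deployed V2X systems typically rely on public-key signatures and certificates rather than a shared HMAC key, and `sign_message`/`verify_message` are hypothetical helpers.

```python
import hashlib
import hmac
import json


def _canonical(payload: dict, timestamp: float) -> bytes:
    """Serialise payload and timestamp deterministically so both sides MAC the same bytes."""
    return json.dumps({"payload": payload, "ts": timestamp}, sort_keys=True).encode()


def sign_message(key: bytes, payload: dict, timestamp: float) -> dict:
    tag = hmac.new(key, _canonical(payload, timestamp), hashlib.sha256).hexdigest()
    return {"payload": payload, "ts": timestamp, "tag": tag}


def verify_message(key: bytes, msg: dict, now: float, max_age_s: float = 1.0) -> bool:
    expected = hmac.new(key, _canonical(msg["payload"], msg["ts"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, msg["tag"]):  # constant-time comparison
        return False  # forged or tampered message
    return 0.0 <= now - msg["ts"] <= max_age_s  # reject stale (possibly replayed) messages


key = b"hypothetical-shared-key"
msg = sign_message(key, {"vehicle": "car-42", "speed_mps": 13.9}, timestamp=100.0)
print(verify_message(key, msg, now=100.5))  # True
```

A message replayed inside the freshness window would still verify; a fuller protocol would also track nonces or sequence numbers.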

Intra-vehicle communication.

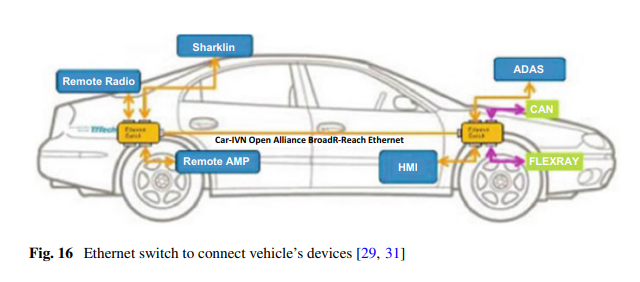

- CAN replaces multiple wires

- Only one way to access it in the past, now multiple

- Move towards Ethernet and more interoperability

Intrusion detection

- CVguard (DDoS prevention)

- Anomaly detection approaches
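As an illustration of the anomaly-detection approaches listed above, the sketch below uses one commonly exploited property of CAN traffic: most CAN IDs are sent periodically, so injected or flooding frames show up as IDs arriving much faster than their learned period, or as IDs never seen during training. The `CanTimingDetector` class, the (timestamp, CAN ID) input format, and the 0.5 ratio threshold are hypothetical choices, not CVguard or any specific published detector.

```python
from collections import defaultdict
from statistics import mean
from typing import List, Tuple

Frame = Tuple[float, int]  # (timestamp in seconds, CAN ID)


class CanTimingDetector:
    """Hypothetical sketch: flag CAN frames that arrive much faster than their ID's learned period."""

    def __init__(self, ratio: float = 0.5) -> None:
        self.ratio = ratio
        self.period = {}  # CAN ID -> mean inter-arrival time (s) learned from clean traffic

    def fit(self, frames: List[Frame]) -> None:
        """Learn the mean inter-arrival time of each CAN ID from attack-free traffic."""
        last = {}
        gaps = defaultdict(list)
        for t, can_id in frames:
            if can_id in last:
                gaps[can_id].append(t - last[can_id])
            last[can_id] = t
        # IDs seen only once have no gaps, so they get no period and count as unknown later.
        self.period = {cid: mean(g) for cid, g in gaps.items()}

    def detect(self, frames: List[Frame]) -> List[int]:
        """Return indices of anomalous frames: unknown ID, or arriving too soon after the last one."""
        last = {}
        alerts = []
        for i, (t, can_id) in enumerate(frames):
            if can_id not in self.period:
                alerts.append(i)
            elif can_id in last and t - last[can_id] < self.ratio * self.period[can_id]:
                alerts.append(i)
            last[can_id] = t
        return alerts


clean = sorted([(i * 0.01, 0x100) for i in range(10)] + [(i * 0.02, 0x200) for i in range(5)])
det = CanTimingDetector()
det.fit(clean)
attack = [(1.00, 0x100), (1.001, 0x100), (1.01, 0x100), (1.02, 0x300)]
print(det.detect(attack))  # [1, 3]
```

Timing alone cannot catch an attacker who replaces a frame's payload at the normal rate, which is why payload-aware detectors are also studied.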

What's the takeaway?

Anomaly detection looks like a promising area, mirroring its use in other systems.

But there are challenges specific to transport:

- secure vs safe

- real-time constraints

Adversarial Attacks and Defence

- High-profile projects by Google, General Motors, Toyota, and Tesla have marked milestones in AV development.

- Despite advancements, AVs' safety is challenged by cybersecurity threats and adversarial attacks.

- Driving automation for cars relies upon deep learning, used for image recognition and sensor fusion.

M. Girdhar, J. Hong and J. Moore, "Cybersecurity of Autonomous Vehicles: A Systematic Literature Review of Adversarial Attacks and Defense Models," in IEEE Open Journal of Vehicular Technology, vol. 4, pp. 417-437, 2023, doi: 10.1109/OJVT.2023.3265363.

Adversarial Attacks and Defence

- AVs use ML in four key areas: perception, prediction, planning, and control.

- ML algorithms handle tasks like object detection, traffic sign recognition, and decision-making.

- AVs' ML pipeline links sensory inputs to actuator controls, enabling self-driving capabilities.

- Intentional threats include attacks exploiting AI weaknesses to impair AV operations.

- Unintentional risks involve algorithm biases, design flaws, and limitations of AI and ML techniques.

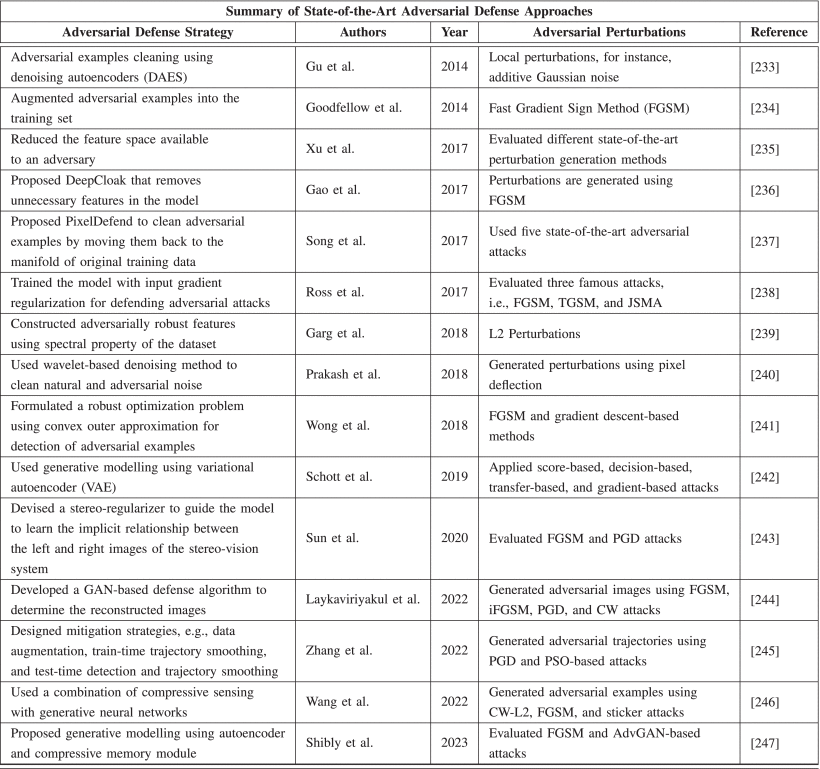

Adversarial Attacks and Defence

- ML models can be manipulated by adversarial attacks during training or post-training.

- Adversarial ML poses a risk because attackers can deceive AI systems into making incorrect predictions.

- Defences proposed in other areas, both proactive and reactive, could be applicable in this context.
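To make the post-training (evasion) case concrete, the sketch below applies the Fast Gradient Sign Method (FGSM), a standard way of crafting adversarial examples, to a tiny logistic-regression classifier in plain Python. The weights, input, and `eps` value are made up for illustration; a real AV perception model would be a deep network attacked through an ML framework's gradients, but the idea of nudging each input feature by ±eps in the direction that increases the loss is the same.

```python
import math
from typing import List


def predict(w: List[float], b: float, x: List[float]) -> float:
    """Probability of class 1 under logistic regression."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w: List[float], b: float, x: List[float], y: int, eps: float) -> List[float]:
    """FGSM: x_adv = x + eps * sign(dLoss/dx).

    For logistic regression with binary cross-entropy loss, dLoss/dx_i = (p - y) * w_i.
    """
    p = predict(w, b, x)
    adv = []
    for xi, wi in zip(x, w):
        g = (p - y) * wi
        adv.append(xi + eps * ((g > 0) - (g < 0)))  # (g > 0) - (g < 0) is sign(g)
    return adv


w, b = [2.0, -1.0], 0.0  # hypothetical "trained" weights
x, y = [0.5, 0.2], 1     # correctly classified as class 1
x_adv = fgsm(w, b, x, y, eps=0.5)
print(round(predict(w, b, x), 2), round(predict(w, b, x_adv), 2))  # 0.69 0.33
```

A perturbation of at most 0.5 per feature is enough to flip the prediction from class 1 to class 0 here.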

Adversarial Attacks and Defence

- Proactive defences attempt to strengthen a neural network's resistance to adversarial examples in advance of an attack.

- Reactive defences aim to detect adversarial inputs after the neural network model has been trained.

- These techniques can also be broadly categorised into three groups: 1) manipulating data, 2) introducing auxiliary models, and 3) altering models.
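Adversarial training is a common example of a proactive defence that manipulates data: the model is trained on adversarially perturbed copies of its inputs alongside the clean ones. The sketch below does this for a toy logistic-regression model in plain Python, using FGSM to generate the perturbed copies; the dataset, learning rate, epoch count, and `eps` are hypothetical, and real defences for AV perception would apply the idea to deep networks.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w, b, x):
    """Probability of class 1 under logistic regression."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """Perturb x by eps in the sign direction of the BCE loss gradient, (p - y) * w."""
    p = predict(w, b, x)
    adv = []
    for xi, wi in zip(x, w):
        g = (p - y) * wi
        adv.append(xi + eps * ((g > 0) - (g < 0)))
    return adv


def train(data, eps=0.0, epochs=200, lr=0.1, seed=0):
    """SGD logistic regression; with eps > 0, each step also fits an FGSM copy of the sample."""
    rng = random.Random(seed)
    data = list(data)  # avoid shuffling the caller's list
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            batch = [(x, y)]
            if eps > 0:
                batch.append((fgsm(w, b, x, y, eps), y))  # adversarial copy, same label
            for xb, yb in batch:
                err = predict(w, b, xb) - yb  # gradient of BCE loss w.r.t. the logit
                w = [wi - lr * err * xi for wi, xi in zip(w, xb)]
                b -= lr * err
    return w, b


def robust_accuracy(w, b, data, eps):
    """Fraction of samples still classified correctly after an FGSM perturbation of size eps."""
    correct = 0
    for x, y in data:
        p = predict(w, b, fgsm(w, b, x, y, eps))
        correct += (p >= 0.5) == (y == 1)
    return correct / len(data)


data = [([2.0, 1.0], 1), ([1.5, 2.0], 1), ([2.5, 1.5], 1),
        ([-2.0, -1.0], 0), ([-1.5, -2.0], 0), ([-2.5, -1.5], 0)]
w, b = train(data, eps=0.5)
print(robust_accuracy(w, b, data, eps=0.0), robust_accuracy(w, b, data, eps=0.5))  # 1.0 1.0
```

Because the FGSM copies are regenerated against the current weights at every step, the model is pushed to classify each training point correctly even after an eps-sized perturbation, which is the intent of adversarial training.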

What's the takeaway?

There are many state-of-the-art defences.

But they have not necessarily been validated in this context.

They must also meet safety requirements that other contexts might not have.