GW Data Analysis & Deep Learning: Advanced

2023 Summer School on GW @TianQin

He Wang (王赫)

2023/08/22

ICTP-AP, UCAS

Deep Generative Model

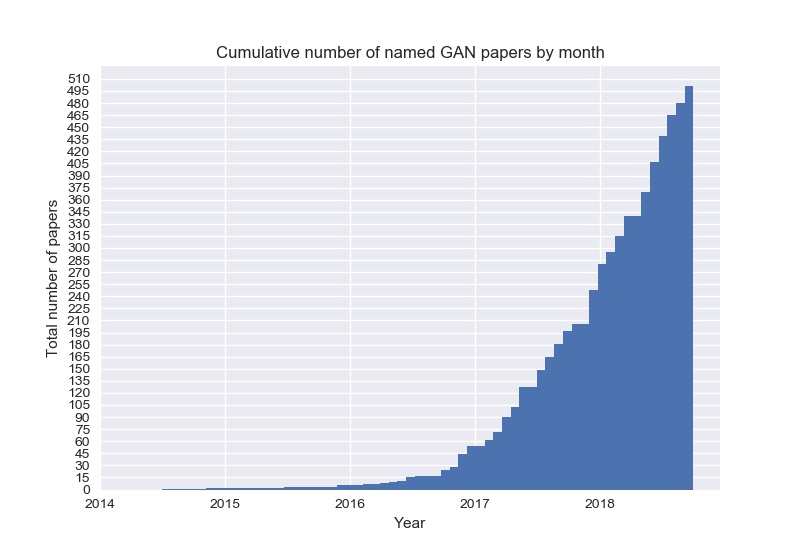

GAN

Transformer

Flow

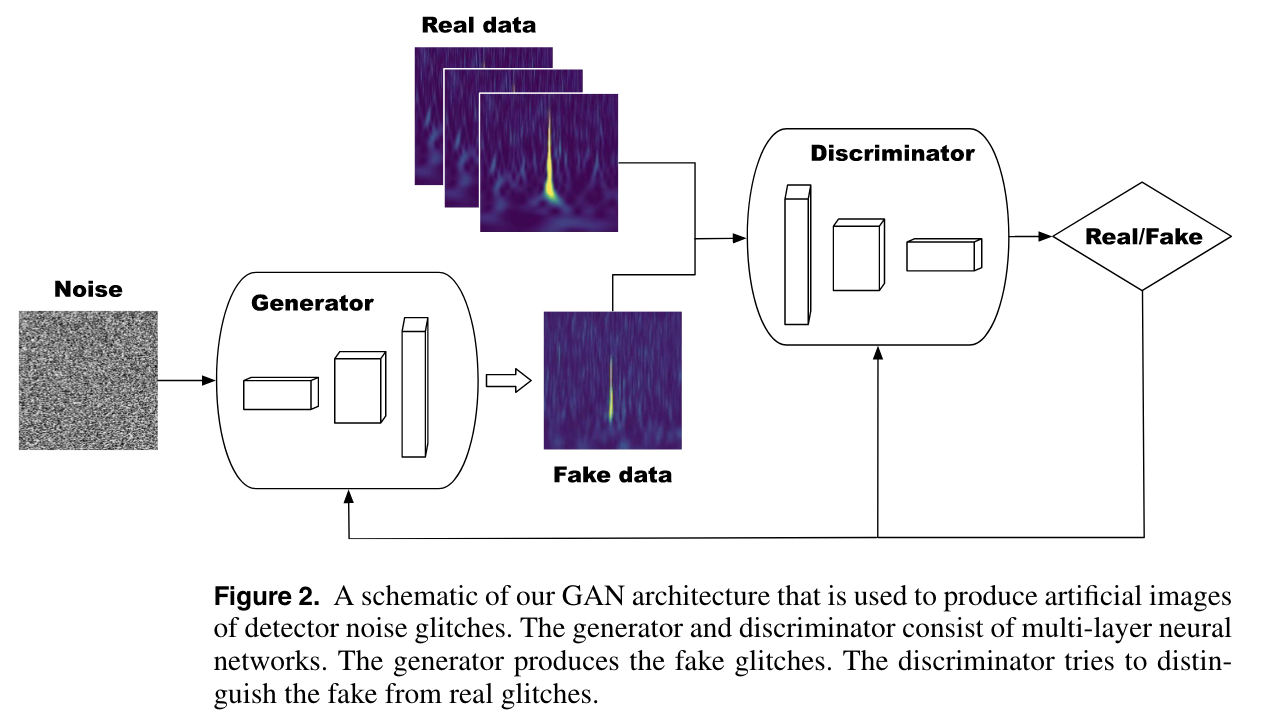

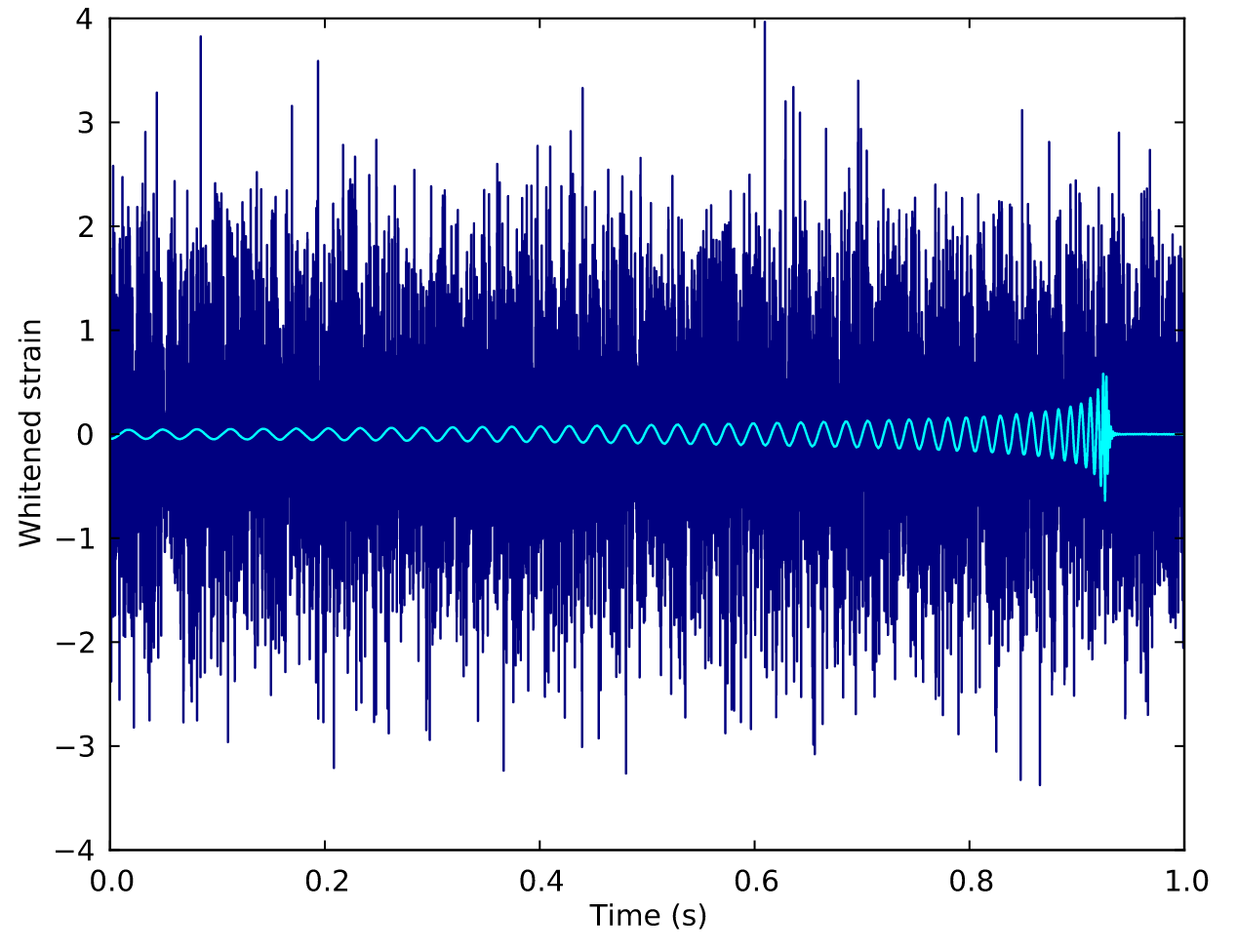

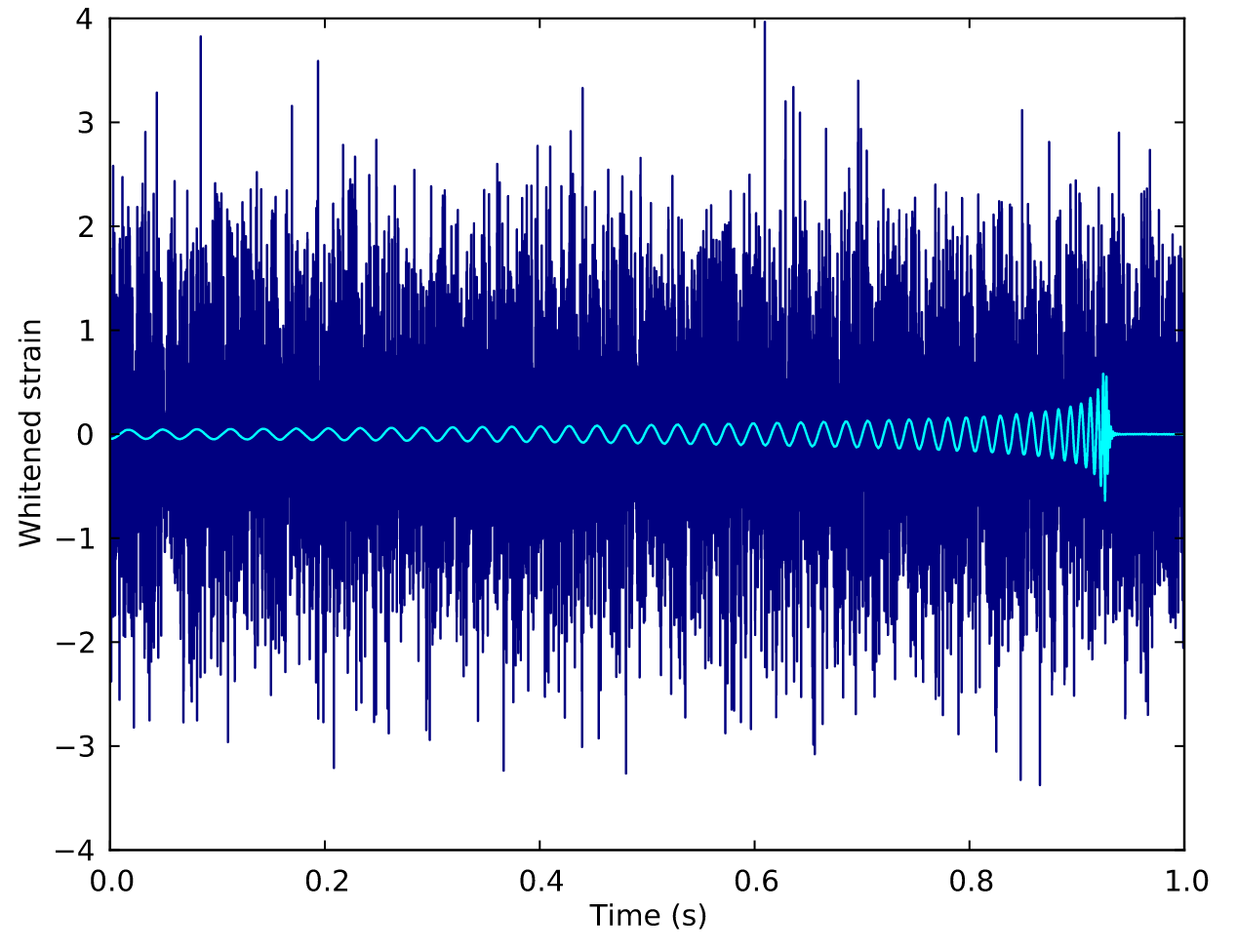

# GWDA: GAN

Generative Adversarial Networks

-

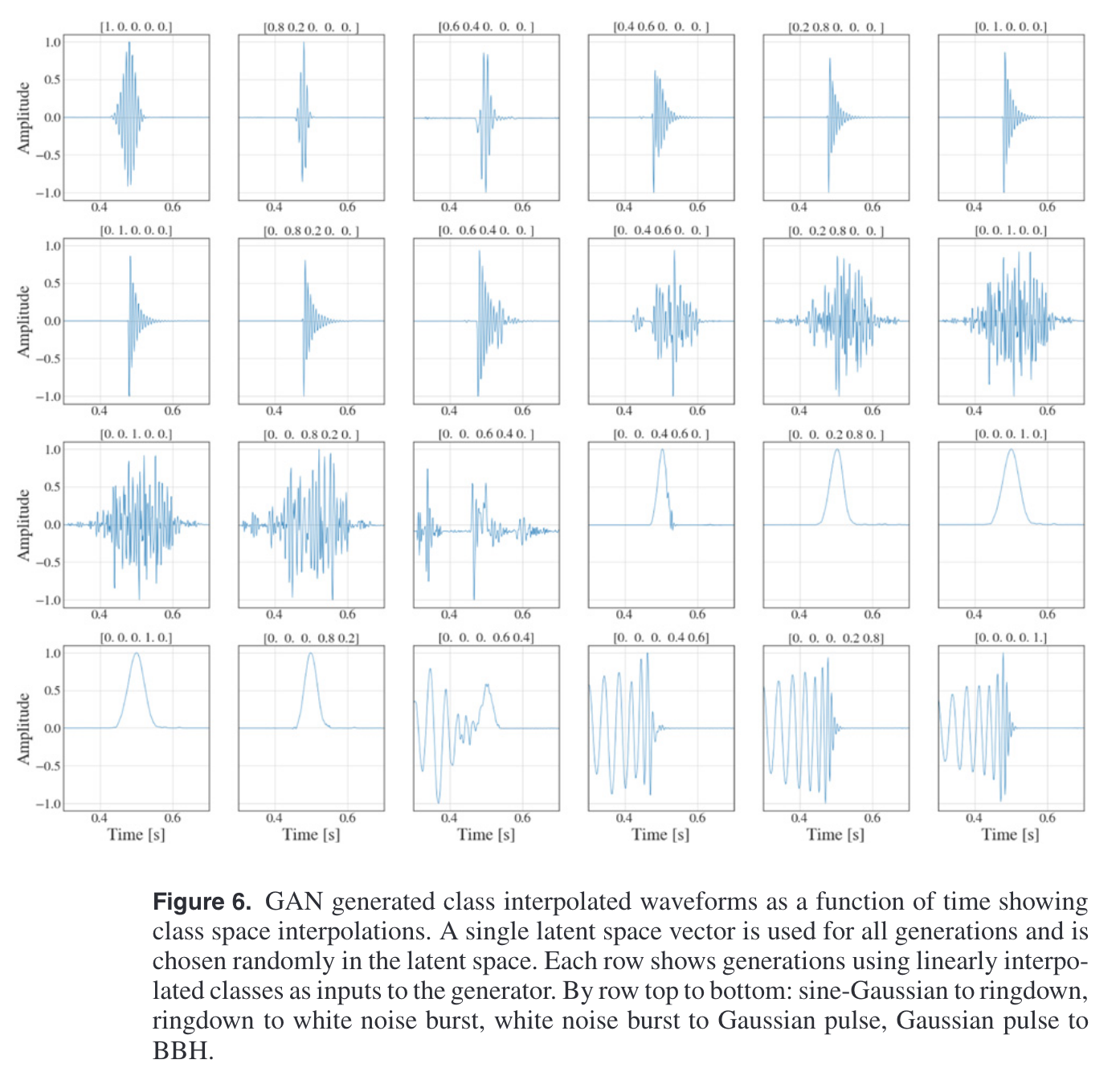

McGinn, J, C Messenger, M J Williams, and I S Heng. “Generalised Gravitational Wave Burst Generation with Generative Adversarial Networks.” Classical and Quantum Gravity 38, no. 15 (June 30, 2021): 155005.

-

Lopez, Melissa, Vincent Boudart, Kerwin Buijsman, Amit Reza, and Sarah Caudill. “Simulating Transient Noise Bursts in LIGO with Generative Adversarial Networks.” arXiv:2203.06494, March 12, 2022.

-

Lopez, Melissa, Vincent Boudart, Stefano Schmidt, and Sarah Caudill. “Simulating Transient Noise Bursts in LIGO with Gengli.” arXiv:2205.09204, May 18, 2022.

-

Yan, Jianqi, Alex P Leung, and C Y Hui. “On Improving the Performance of Glitch Classification for Gravitational Wave Detection by Using Generative Adversarial Networks.” Monthly Notices of the Royal Astronomical Society, July 27, 2022, stac1996.

-

Dooney, Tom, Stefano Bromuri, and Lyana Curier. “DVGAN: Stabilize Wasserstein GAN Training for Time-Domain Gravitational Wave Physics.” arXiv:2209.13592, September 29, 2022.

-

Powell, Jade, Ling Sun, Katinka Gereb, Paul D Lasky, and Markus Dollmann. “Generating Transient Noise Artefacts in Gravitational-Wave Detector Data with Generative Adversarial Networks.” Classical and Quantum Gravity 40, no. 3 (January 13, 2023): 035006.

-

Jadhav, Shreejit, Mihir Shrivastava, and Sanjit Mitra. “Towards a Robust and Reliable Deep Learning Approach for Detection of Compact Binary Mergers in Gravitational Wave Data.” arXiv:2306.11797, June 20, 2023.

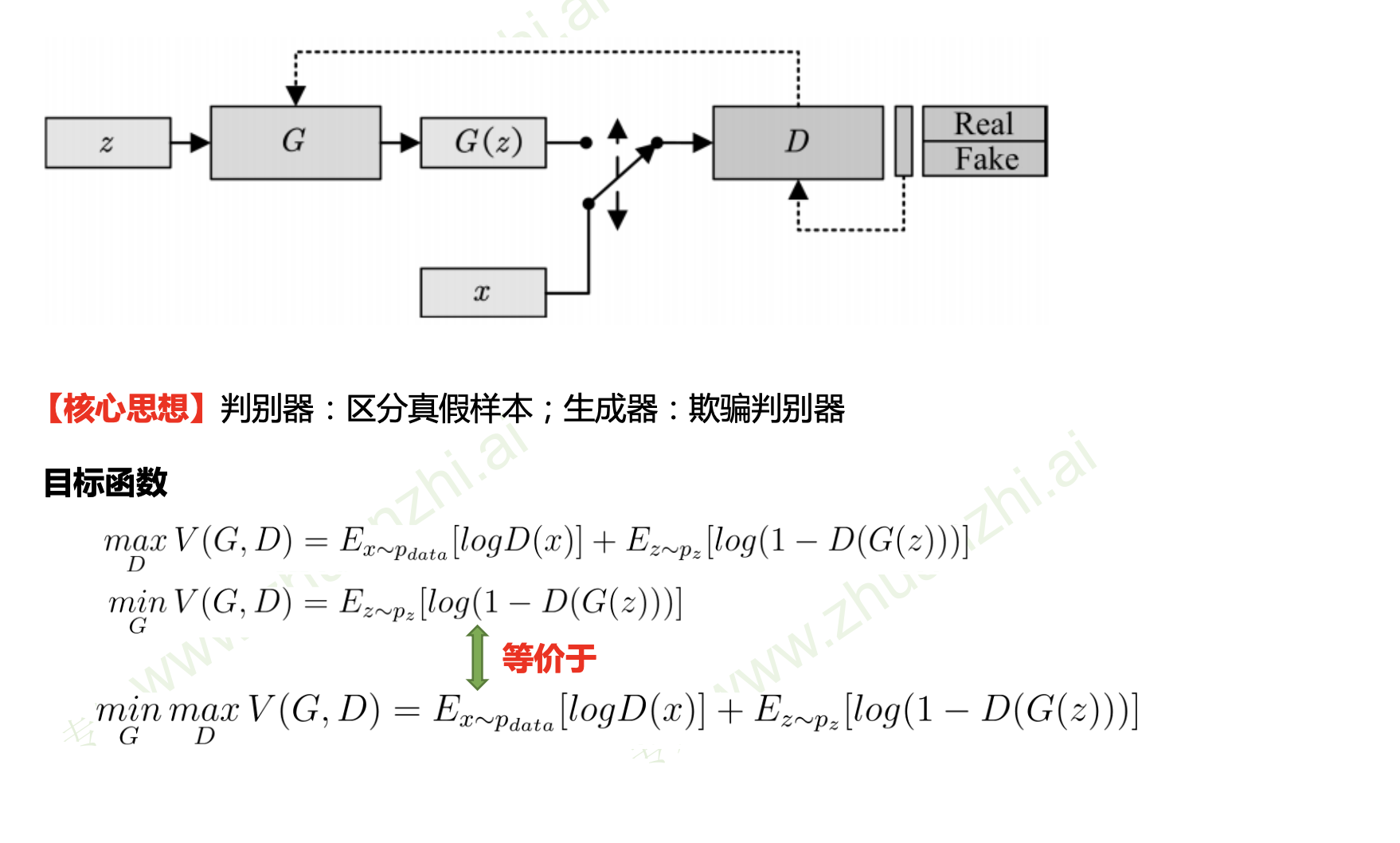


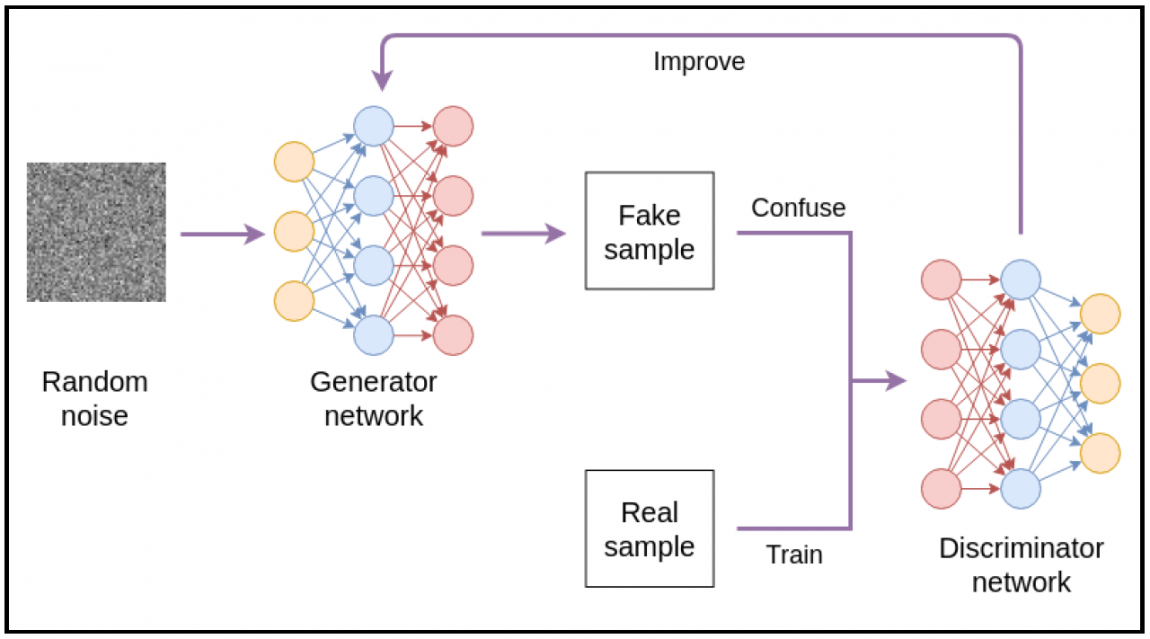

# GWDA: GAN


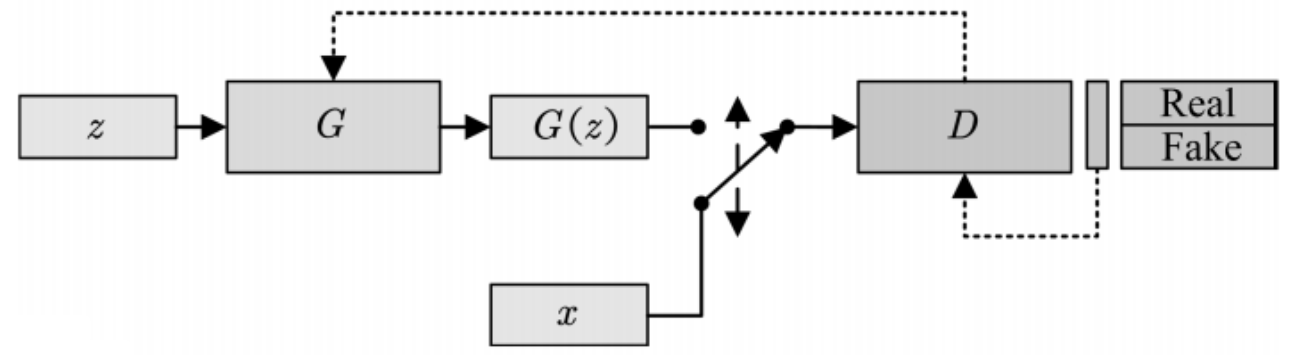

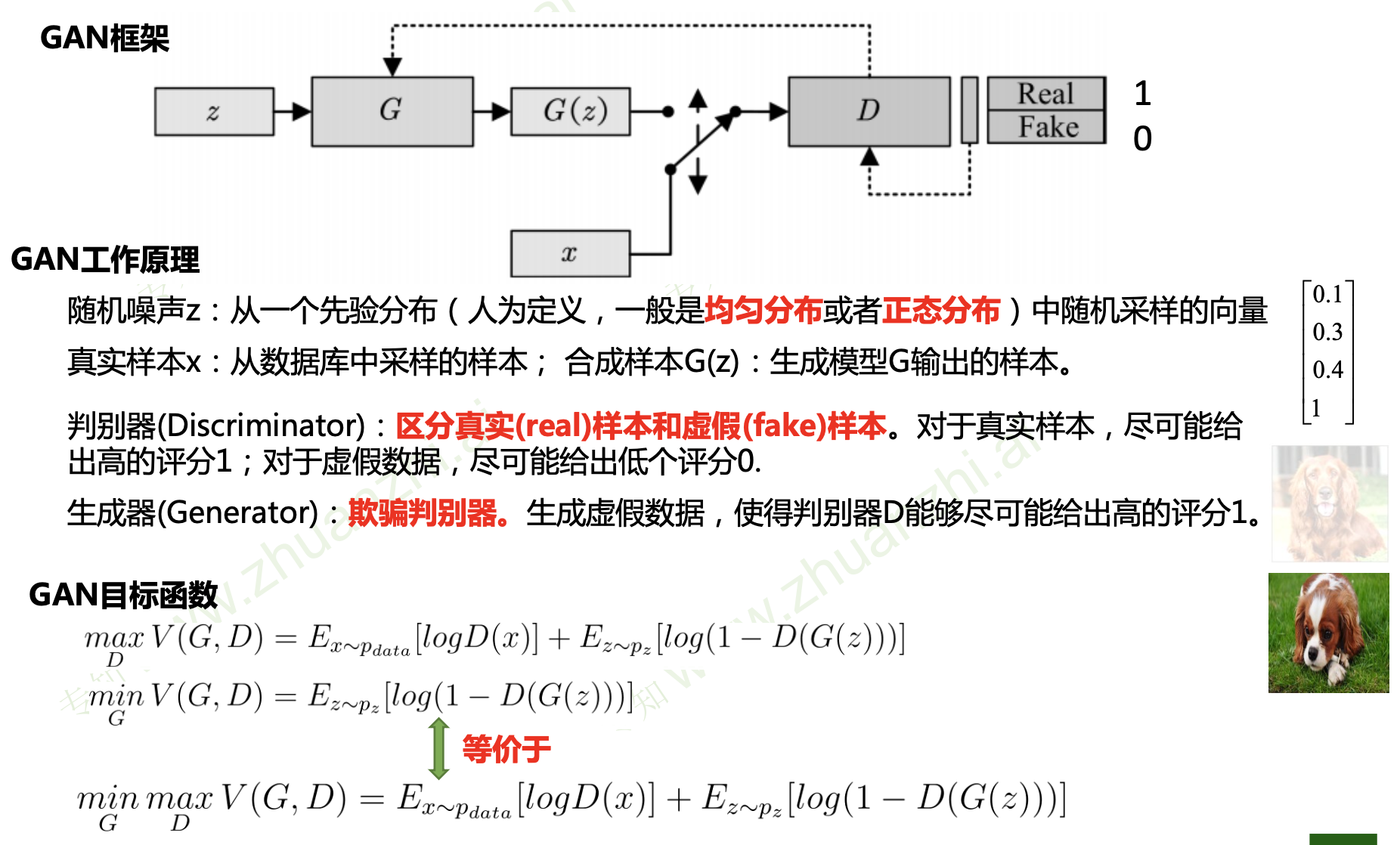

The GAN concept was first proposed by Ian Goodfellow at NIPS 2014.

- Model

- Objective function
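For reference, the minimax objective of Goodfellow et al. (2014) is \(\min_G \max_D V(D,G) = \mathbb{E}_{x\sim p_{\text{data}}}[\log D(x)] + \mathbb{E}_{z\sim p_z}[\log(1-D(G(z)))]\). The following minimal NumPy sketch estimates \(V\) by Monte Carlo from the discriminator outputs on one batch. The function name `gan_value` is hypothetical and does not come from the slides.

```python
import numpy as np

def gan_value(d_real, d_fake, eps=1e-12):
    """Monte Carlo estimate of V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real samples, values in (0, 1)
    d_fake: discriminator outputs D(G(z)) on generated samples, values in (0, 1)
    """
    # Clip away from 0 and 1 so the logarithms stay finite
    d_real = np.clip(np.asarray(d_real, dtype=float), eps, 1.0 - eps)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1.0 - eps)
    return np.mean(np.log(d_real)) + np.mean(np.log1p(-d_fake))

# D tries to maximize V and G tries to minimize it. When p_g = p_data the optimal D
# outputs 1/2 everywhere, and V reaches its global optimum -log 4.
print(gan_value([0.5] * 8, [0.5] * 8), -np.log(4.0))
```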

# GWDA: GAN


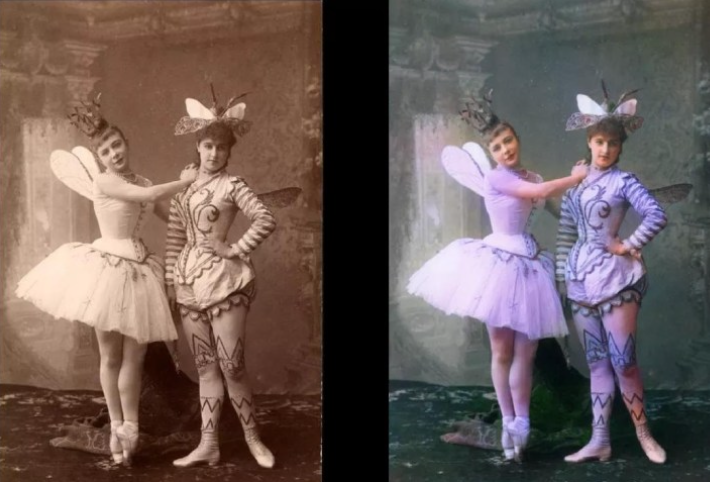

Image colorization

Image super-resolution

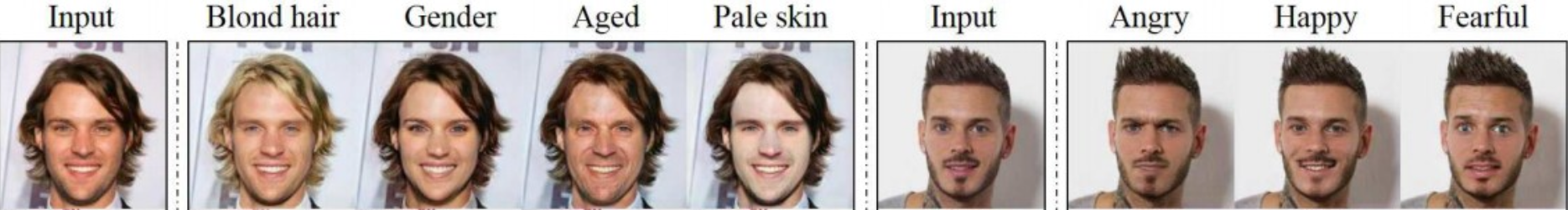

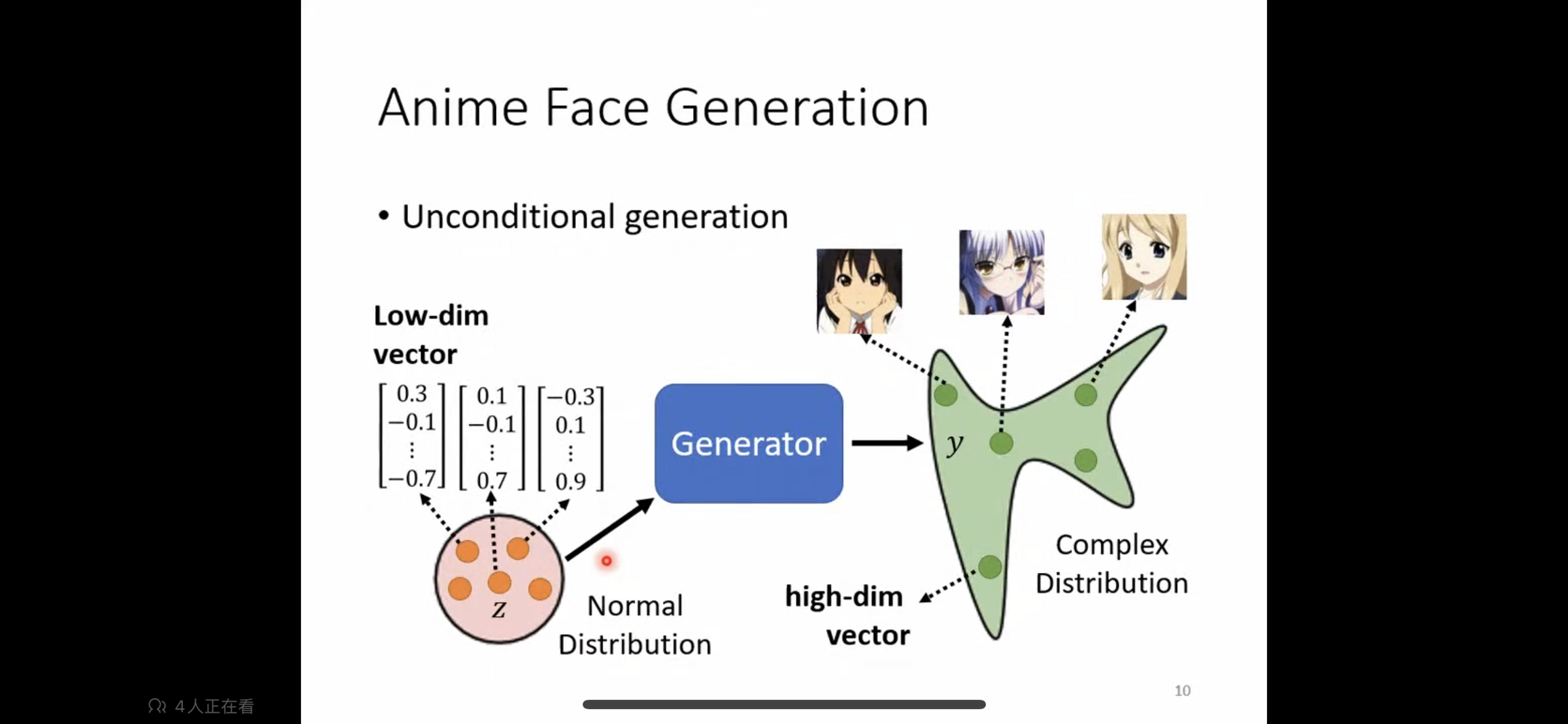

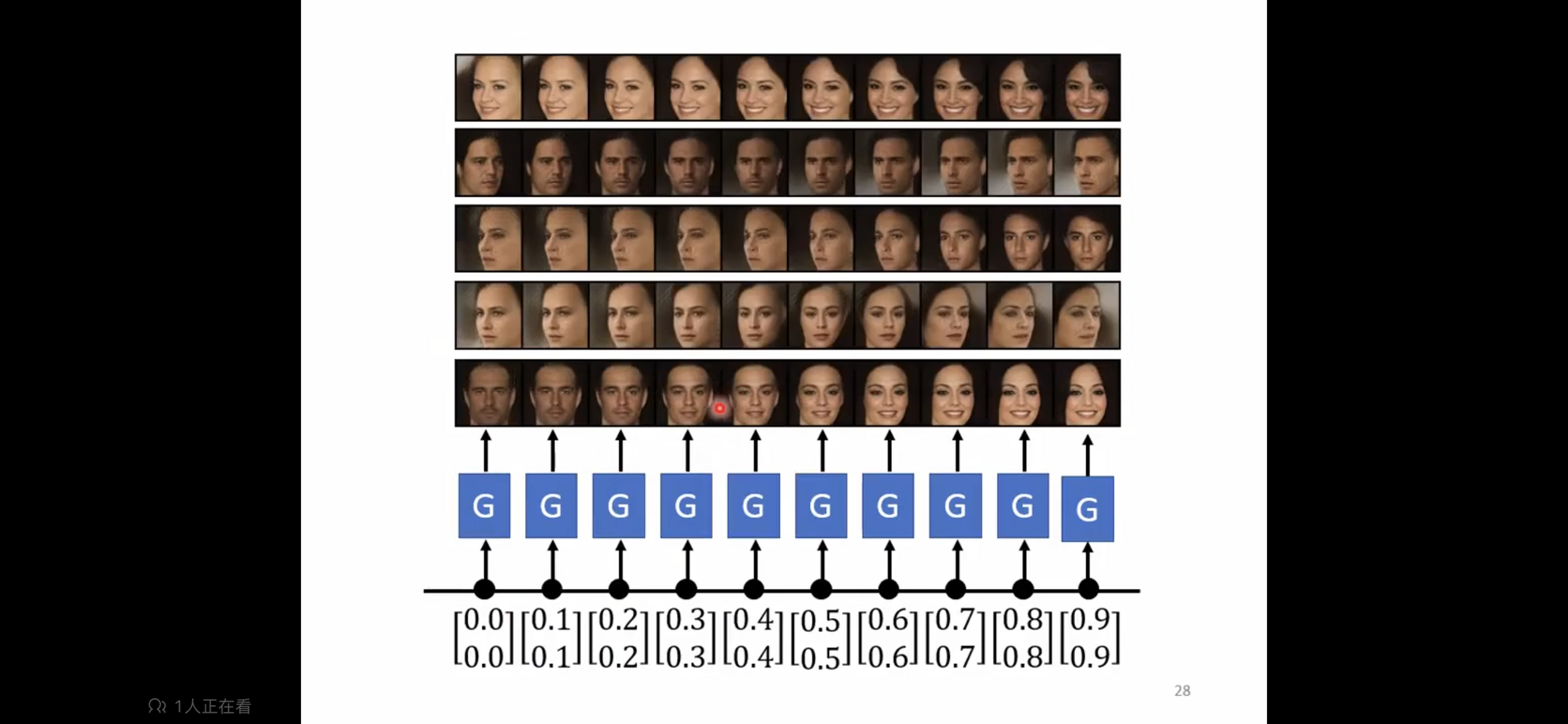

Face generation

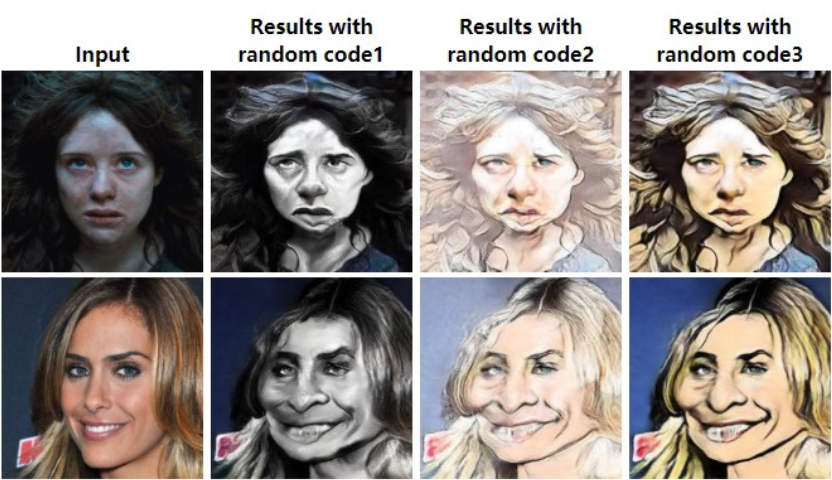

Cartoon image generation

# GWDA: GAN


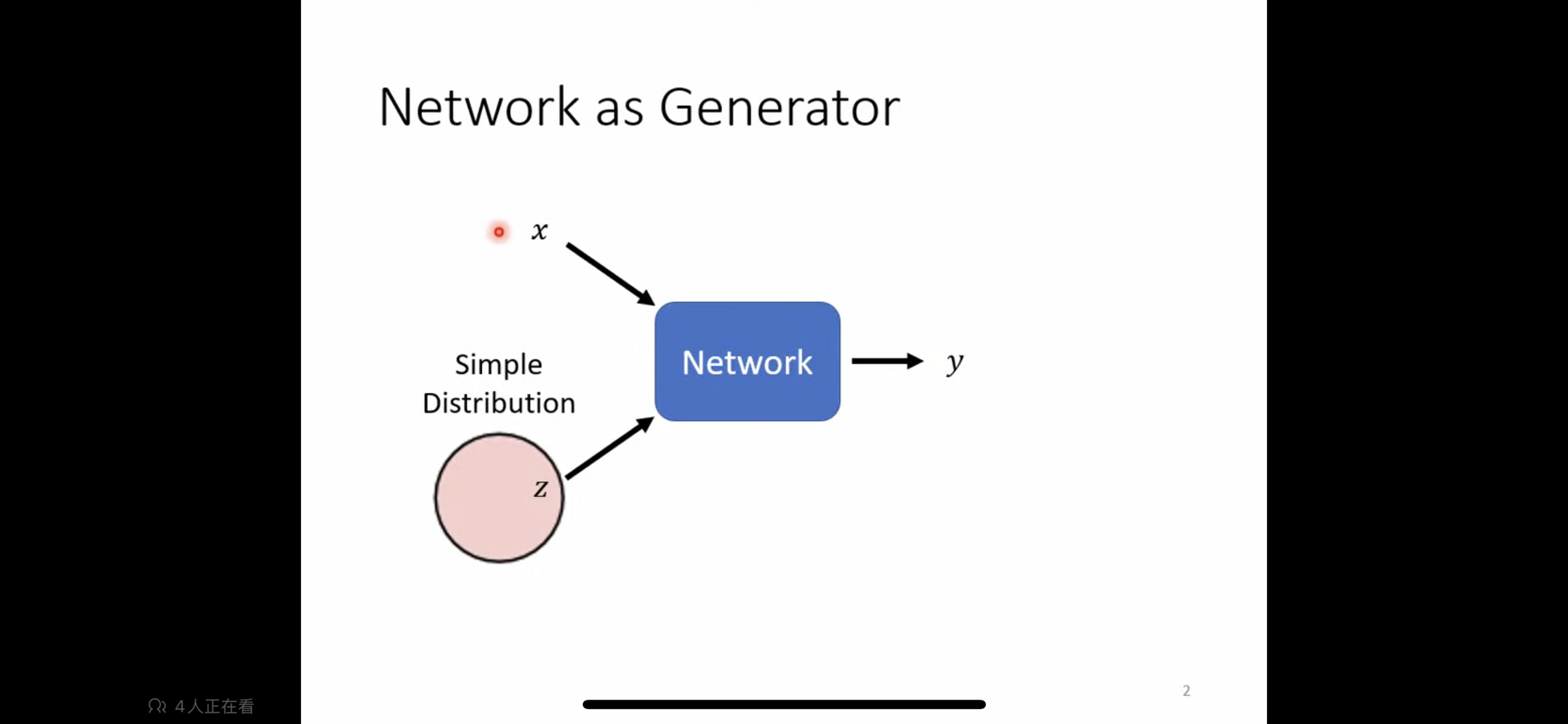


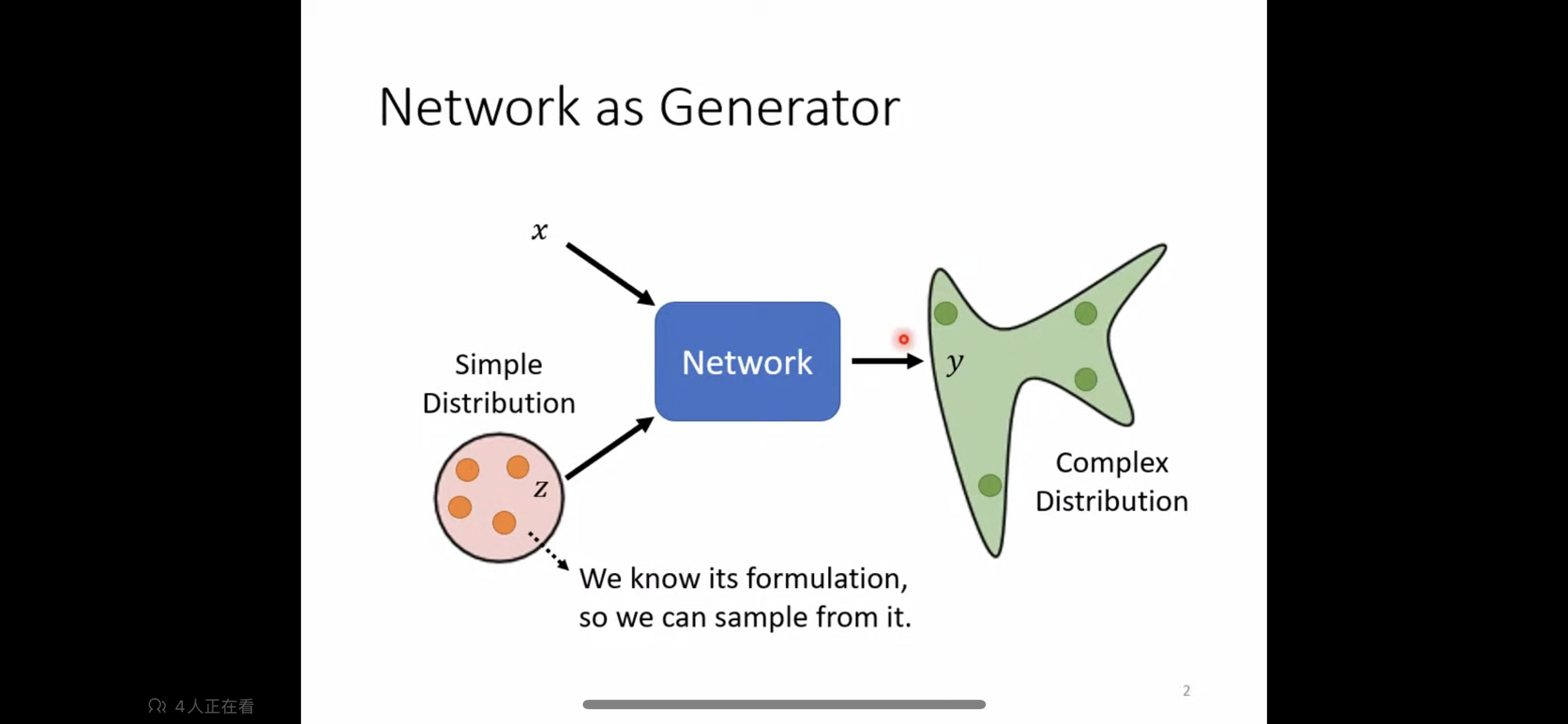

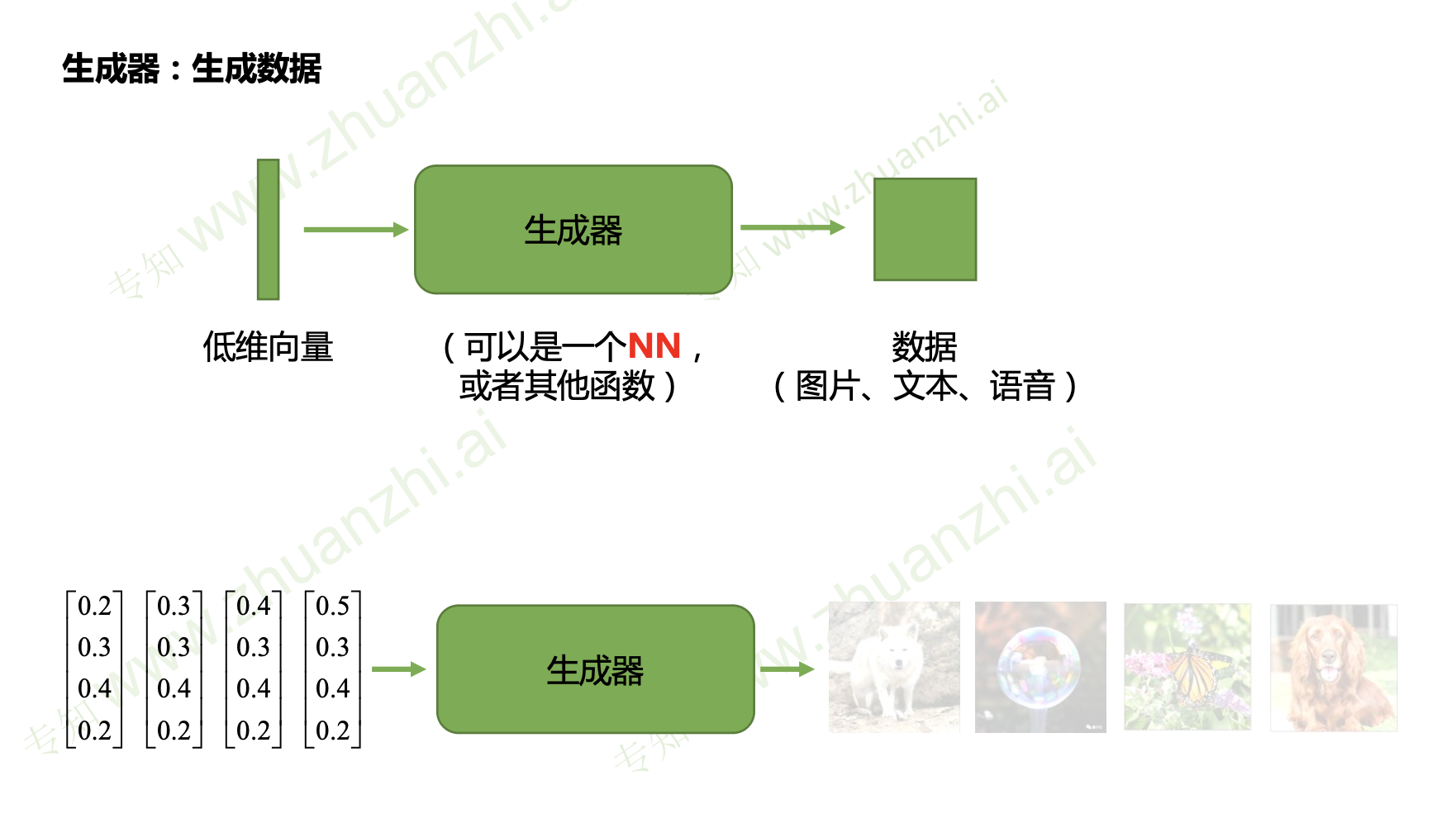

Generative models

# GWDA: GAN

Generative models


# GWDA: GAN


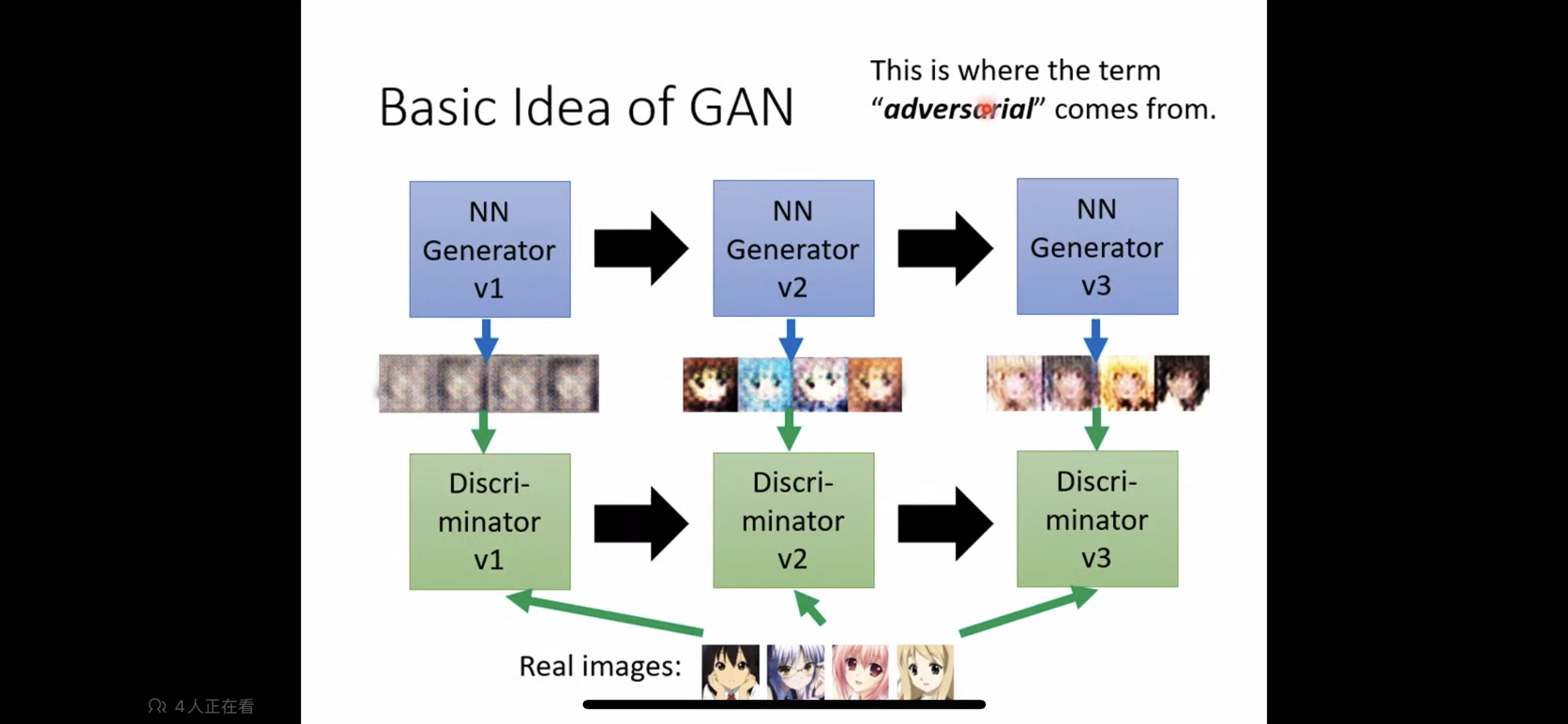

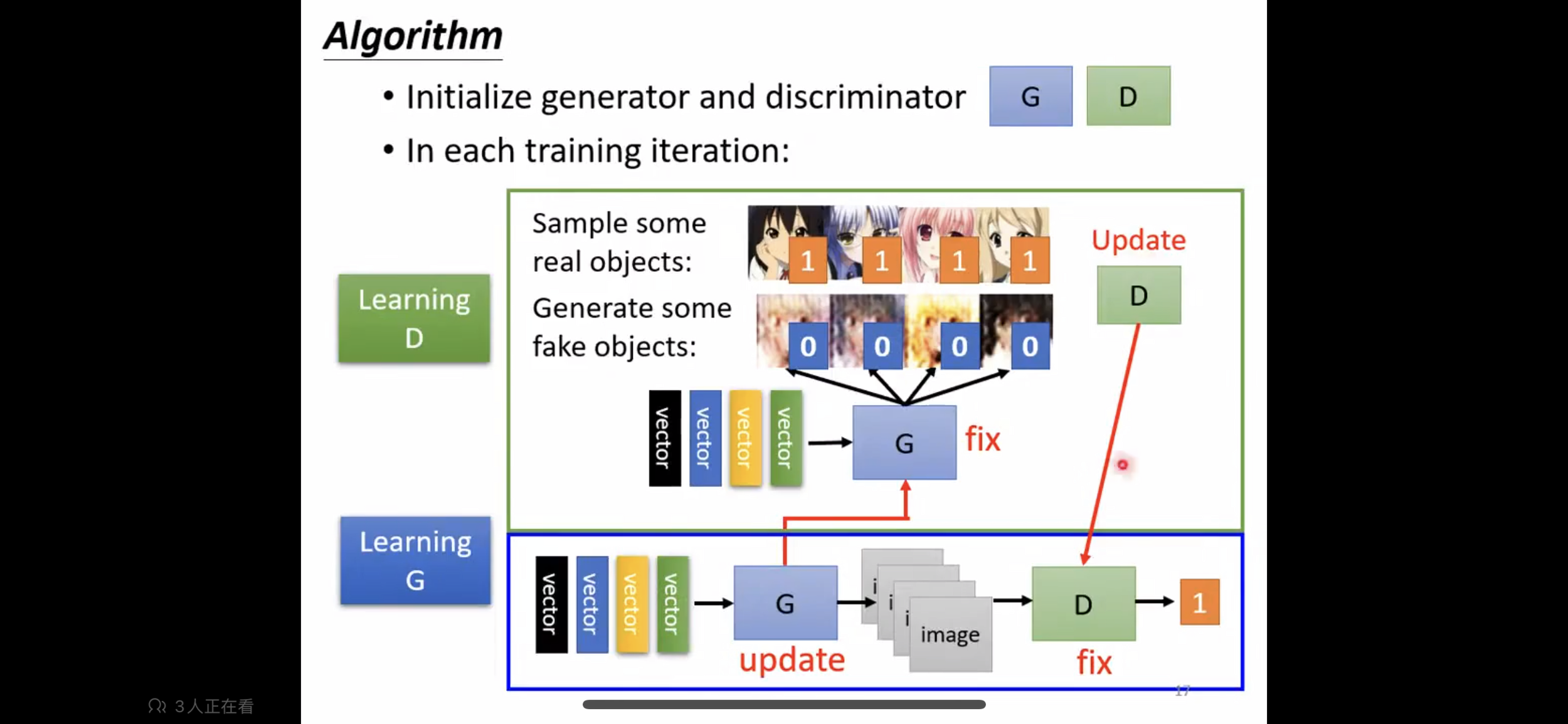

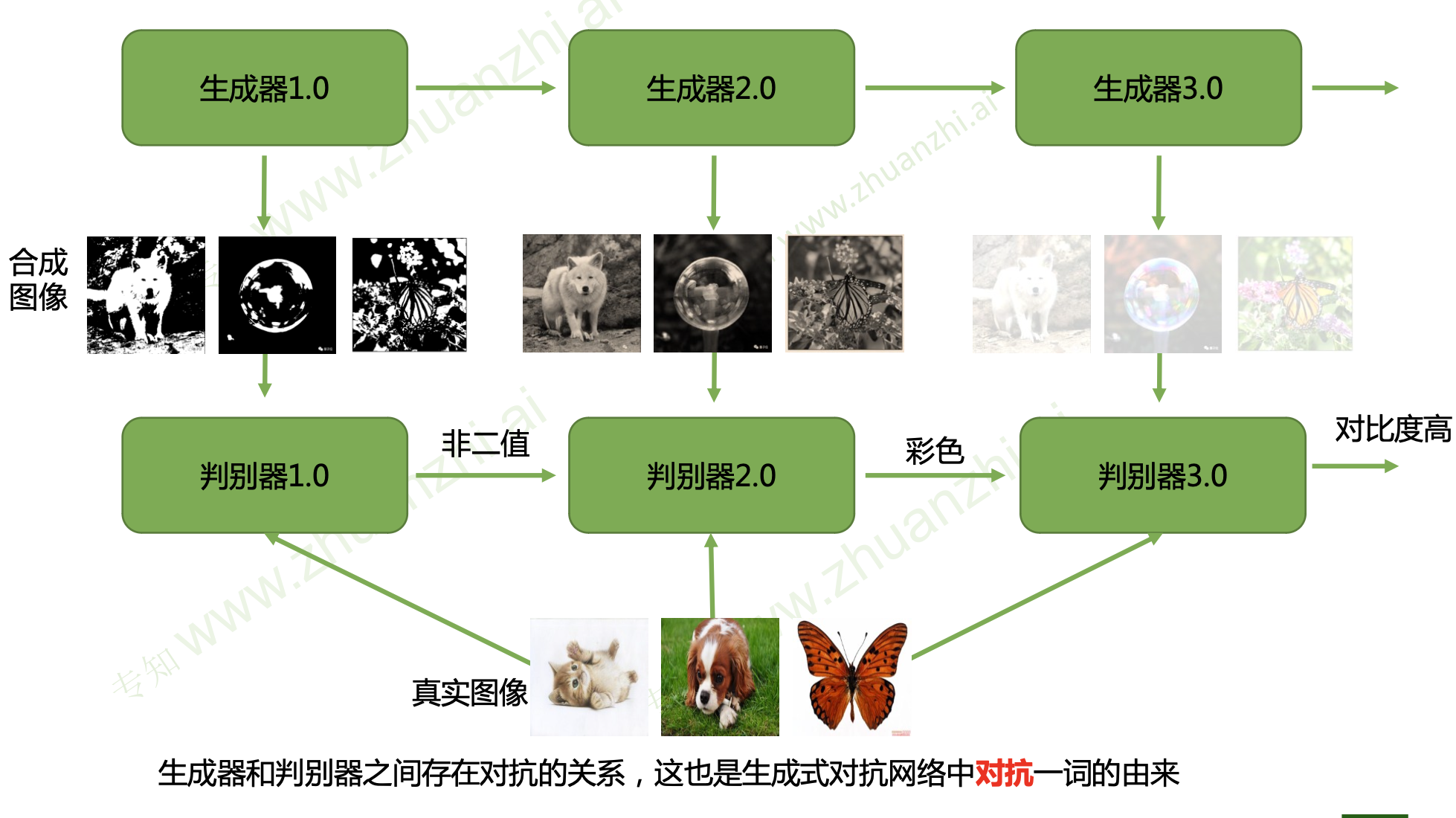

GAN training logic

# GWDA: GAN


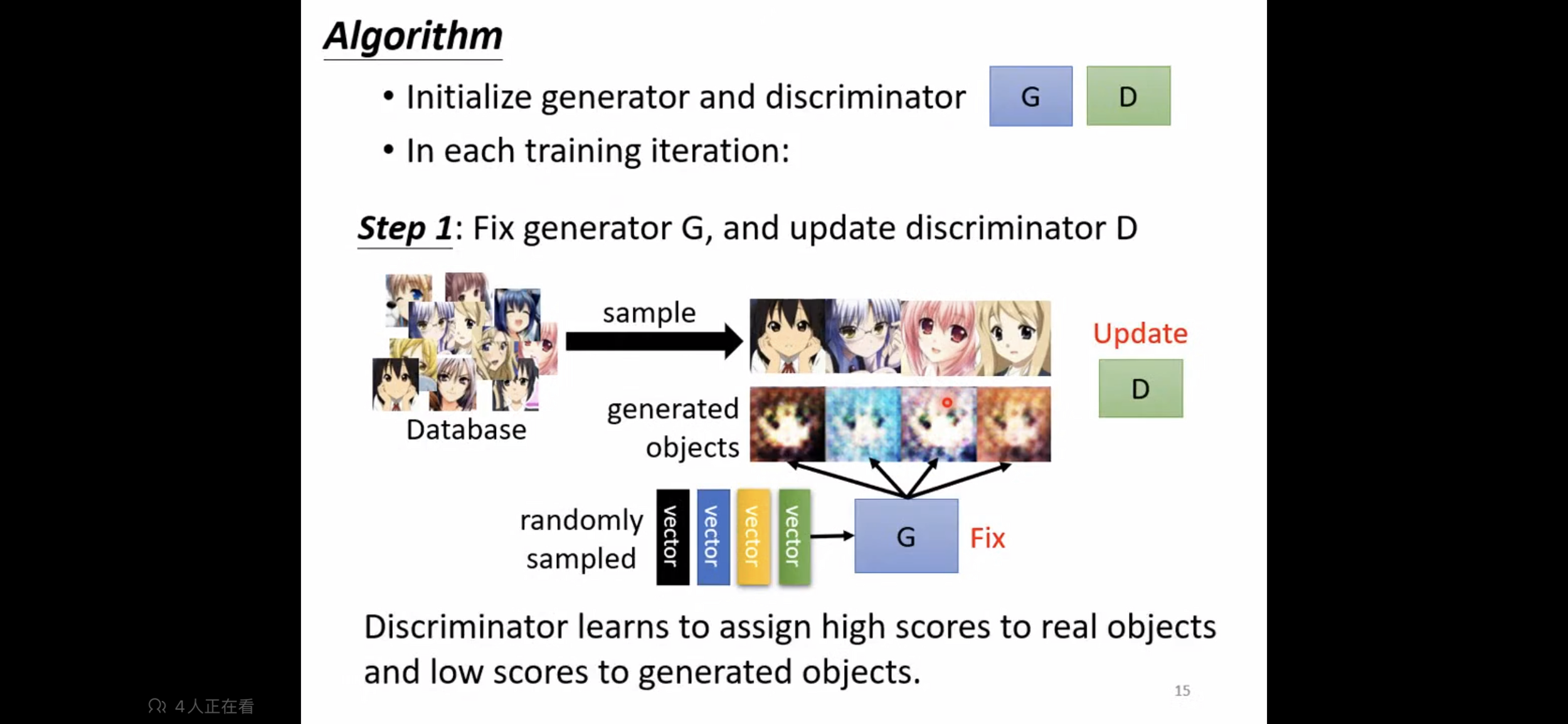

GAN training logic: Step 1

# GWDA: GAN


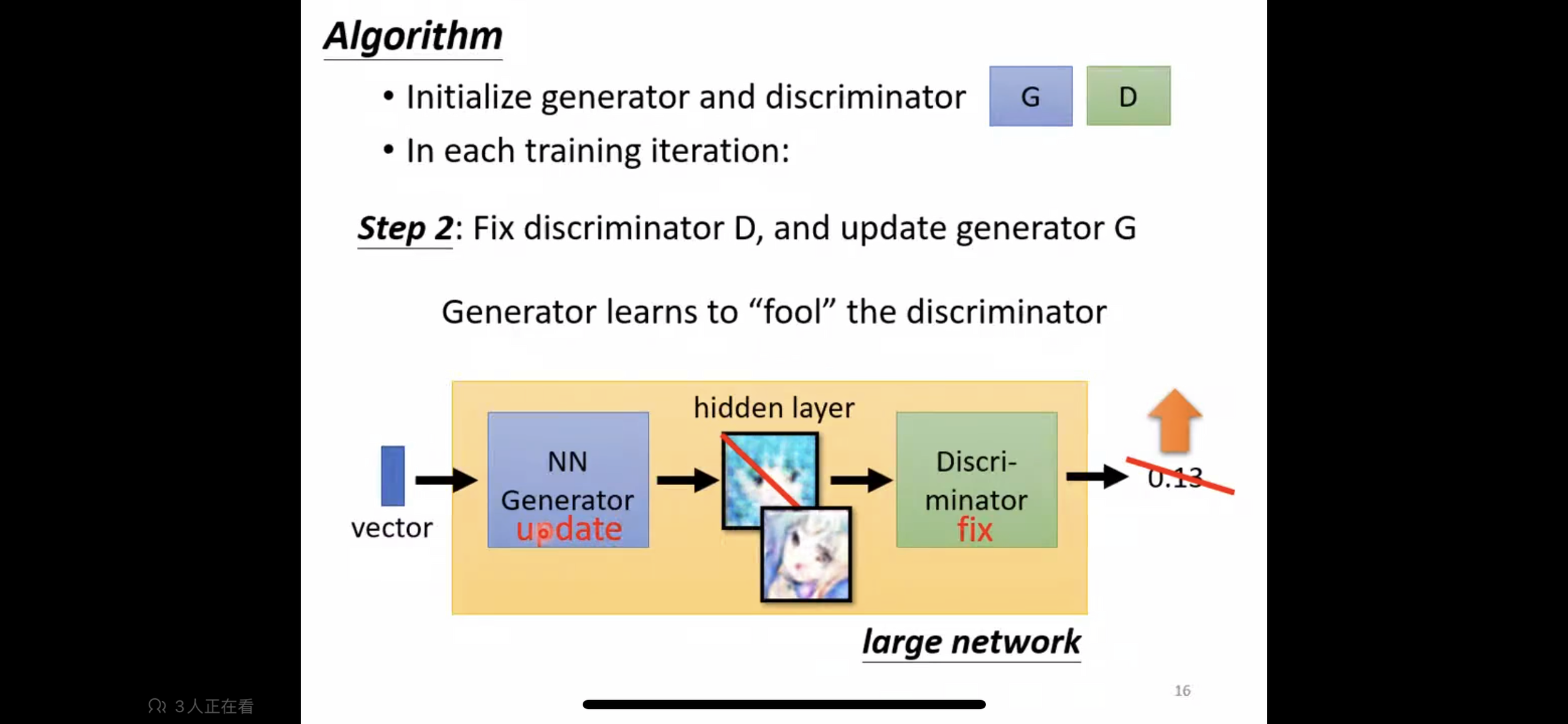

GAN training logic: Step 2

# GWDA: GAN

GAN training logic: Iteration
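The GAN training logic (Step 1, Step 2, then iterate) is usually implemented as alternating updates, and a toy 1-D example makes this concrete. This minimal NumPy sketch rests on several assumptions that do not come from the slides. The generator is affine, \(G(z)=az+b\), and the discriminator is logistic, \(D(x)=\sigma(wx+c)\). Gradients are derived by hand. Step 1 is taken as one gradient step on \(D\) with \(G\) fixed, and Step 2 as one step on \(G\) with \(D\) fixed. \(G\) minimizes the common non-saturating loss \(-\log D(G(z))\). The function name `train_toy_gan` is hypothetical.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def train_toy_gan(steps=3000, batch=256, lr=0.05, data_mean=2.0, data_std=0.5, seed=0):
    rng = np.random.default_rng(seed)
    a, b = 1.0, 0.0      # generator G(z) = a * z + b
    w, c = 0.0, 0.0      # discriminator D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        # Step 1: fix G, take one gradient step on the D loss
        # L_D = -mean(log D(x_real)) - mean(log(1 - D(x_fake)))
        x_real = rng.normal(data_mean, data_std, batch)
        x_fake = a * rng.normal(size=batch) + b
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = np.mean((d_real - 1.0) * x_real) + np.mean(d_fake * x_fake)
        grad_c = np.mean(d_real - 1.0) + np.mean(d_fake)
        w -= lr * grad_w
        c -= lr * grad_c
        # Step 2: fix D, take one gradient step on the non-saturating G loss
        # L_G = -mean(log D(G(z)))
        z = rng.normal(size=batch)
        d_fake = sigmoid(w * (a * z + b) + c)
        dloss_dx = (d_fake - 1.0) * w          # dL_G / dx_fake for each sample
        a -= lr * np.mean(dloss_dx * z)        # chain rule: dx_fake / da = z
        b -= lr * np.mean(dloss_dx)            # chain rule: dx_fake / db = 1
    return a, b, w, c

a, b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f} (data mean 2.0)")
```

A linear discriminator can only compare means, so only \(b\) is expected to approach the data mean. The spread \(a\) is not constrained in this toy setup.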

# GWDA: GAN


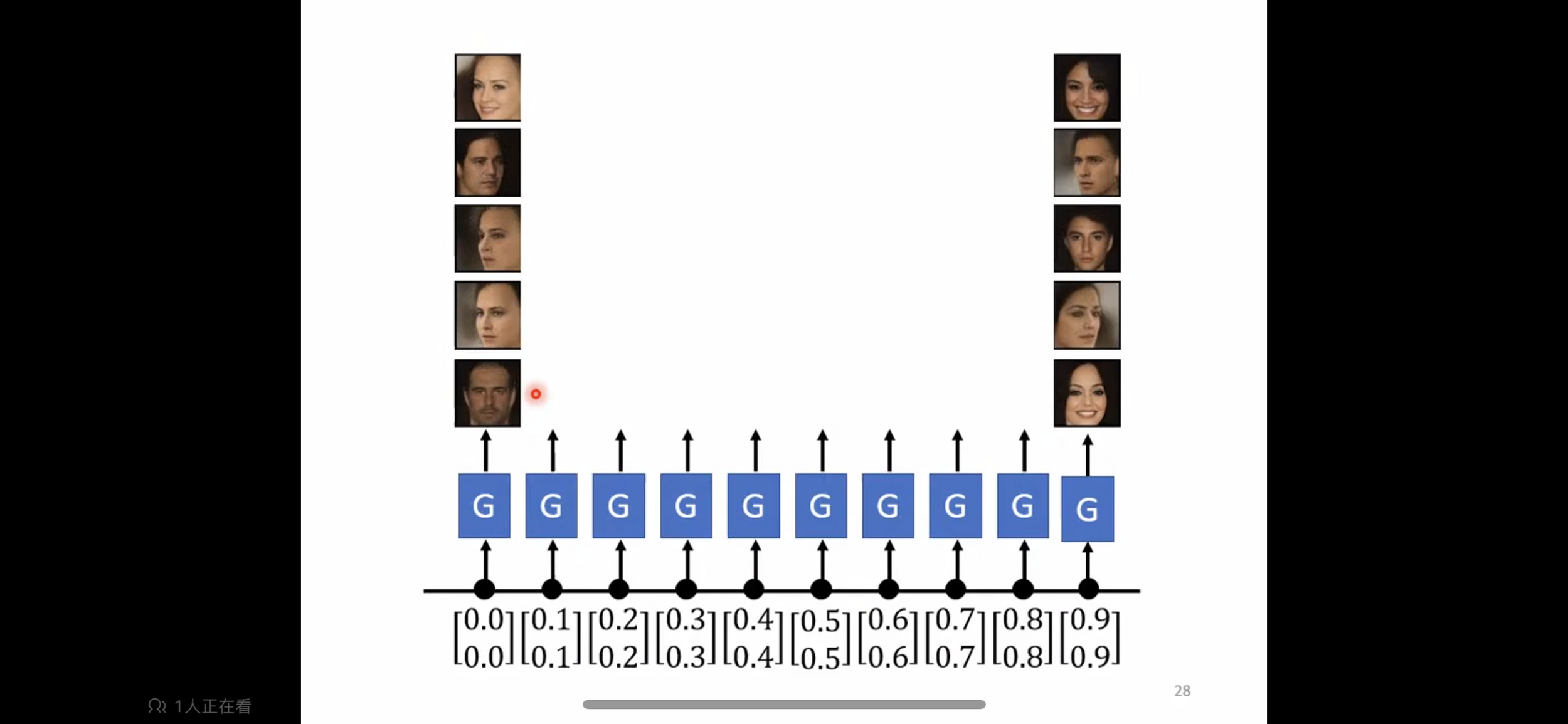

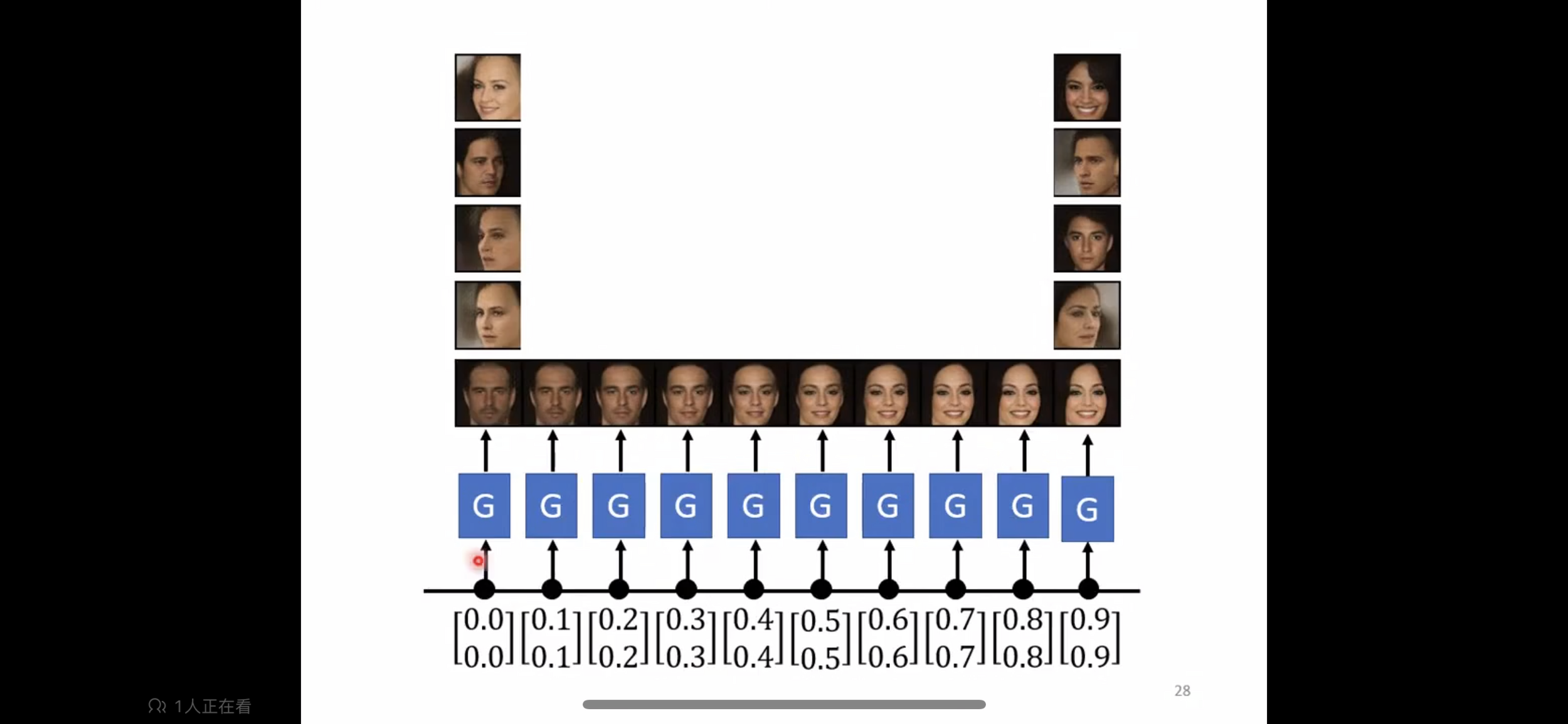

Conditional GAN (CGAN)
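A conditional GAN feeds the same condition \(y\) to both networks, giving \(G(z \mid y)\) and \(D(x \mid y)\). A common implementation concatenates a one-hot encoding of \(y\) to each network's input. The sketch below only builds those inputs. The sizes (100-dim noise, 256-sample series, 3 classes), the idea of using glitch classes as the condition, and the function names are all hypothetical.

```python
import numpy as np

def one_hot(labels, num_classes):
    # Integer class indices [batch] -> one-hot rows [batch, num_classes]
    return np.eye(num_classes)[labels]

def generator_input(z, labels, num_classes):
    # G(z | y): noise and condition concatenated along the feature axis
    return np.concatenate([z, one_hot(labels, num_classes)], axis=1)

def discriminator_input(x, labels, num_classes):
    # D(x | y): the same condition is appended to the real or generated sample
    return np.concatenate([x, one_hot(labels, num_classes)], axis=1)

rng = np.random.default_rng(0)
labels = rng.integers(0, 3, size=8)    # hypothetical condition, e.g. one of 3 glitch classes
z = rng.normal(size=(8, 100))          # noise vectors
x = rng.normal(size=(8, 256))          # stand-in for real or generated time series
print(generator_input(z, labels, 3).shape, discriminator_input(x, labels, 3).shape)
```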


# GWDA: GAN

Generative Adversarial Network (GAN)

# GWDA: GAN

Generator

# GWDA: GAN


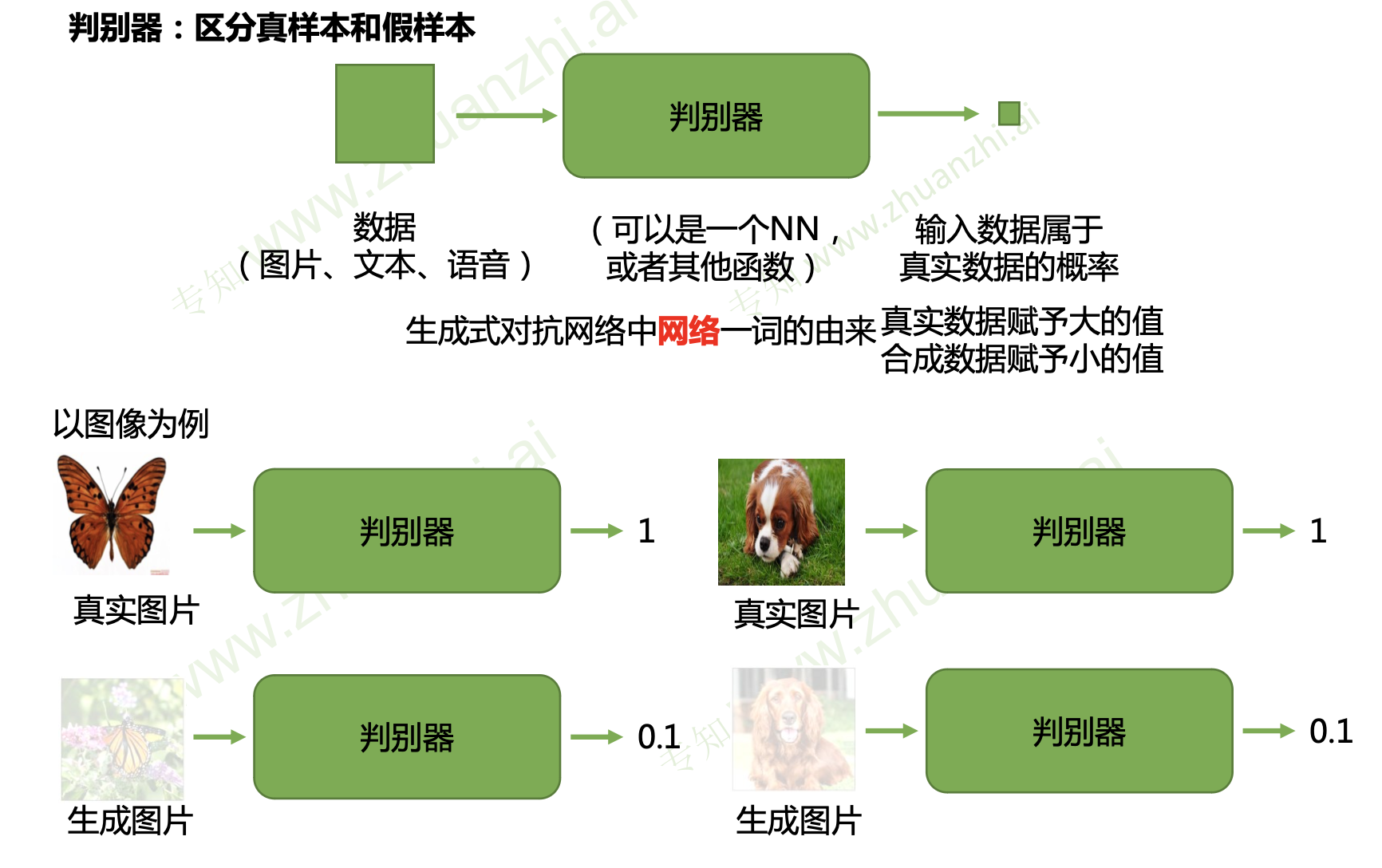

Discriminator

# GWDA: GAN


GAN

# GWDA: GAN

Adversarial training

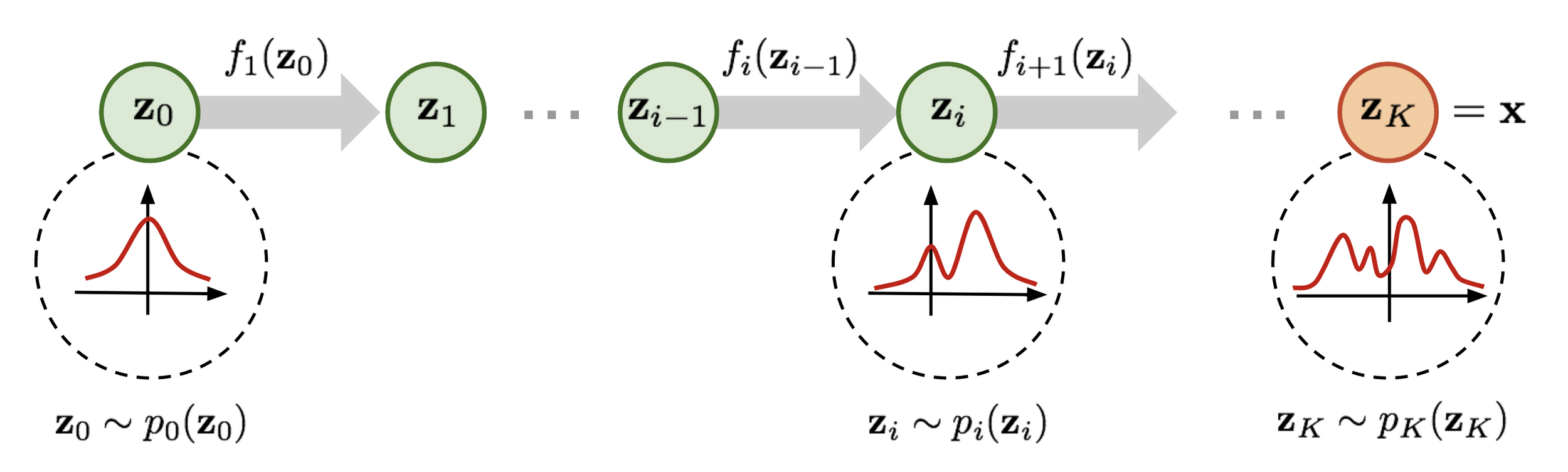

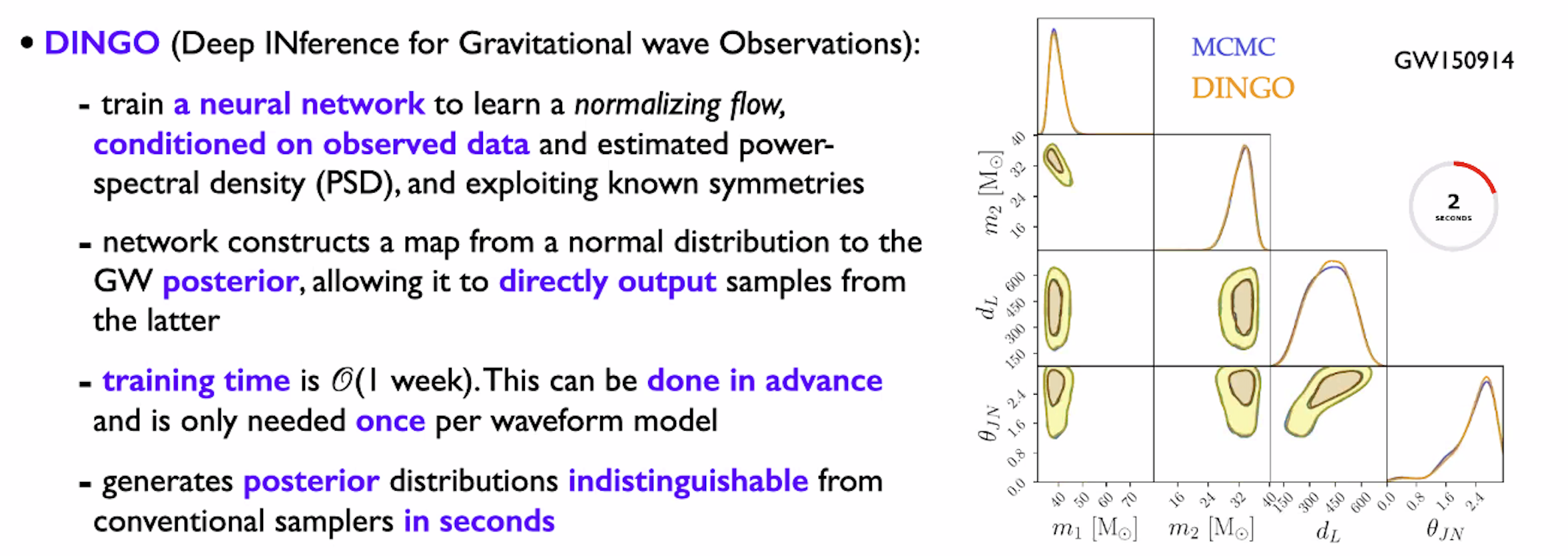

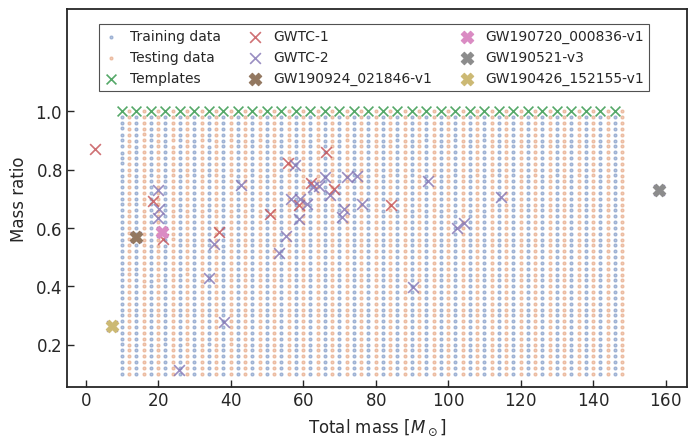

# GWDA: Flow

Flow Model

-

Green, Stephen Roland, and Jonathan Gair. “Complete Parameter Inference for GW150914 Using Deep Learning.” Machine Learning: Science and Technology 2, no. 3 (June 16, 2021): 03LT01.

-

Dax, Maximilian, Stephen R. Green, Jonathan Gair, Jakob H. Macke, Alessandra Buonanno, and Bernhard Schölkopf. “Real-Time Gravitational Wave Science with Neural Posterior Estimation.” Physical Review Letters 127, no. 24 (December 2021): 241103.

-

Shen, Hongyu, E A Huerta, Eamonn O’Shea, Prayush Kumar, and Zhizhen Zhao. “Statistically-Informed Deep Learning for Gravitational Wave Parameter Estimation.” Machine Learning: Science and Technology 3, no. 1 (November 30, 2021): 015007.

-

Khan, Asad, E.A. Huerta, and Prayush Kumar. “AI and Extreme Scale Computing to Learn and Infer the Physics of Higher Order Gravitational Wave Modes of Quasi-Circular, Spinning, Non-Precessing Black Hole Mergers.” Physics Letters B 835 (December 10, 2022): 137505.

-

Williams, Michael J., John Veitch, and Chris Messenger. “Nested Sampling with Normalizing Flows for Gravitational-Wave Inference.” Physical Review D 103, no. 10 (May 2021): 103006.

-

Cheung, Damon H. T., Kaze W. K. Wong, Otto A. Hannuksela, Tjonnie G. F. Li, and Shirley Ho. “Testing the Robustness of Simulation-Based Gravitational-Wave Population Inference.” ArXiv Preprint ArXiv:2112.06707, December 2021.

-

Karamanis, Minas, Florian Beutler, John A. Peacock, David Nabergoj, and Uros Seljak. “Accelerating Astronomical and Cosmological Inference with Preconditioned Monte Carlo.” arXiv:2207.05652, July 12, 2022.

-

Chatterjee, Chayan, and Linqing Wen. “Pre-Merger Sky Localization of Gravitational Waves from Binary Neutron Star Mergers Using Deep Learning.” arXiv:2301.03558, December 30, 2022.

-

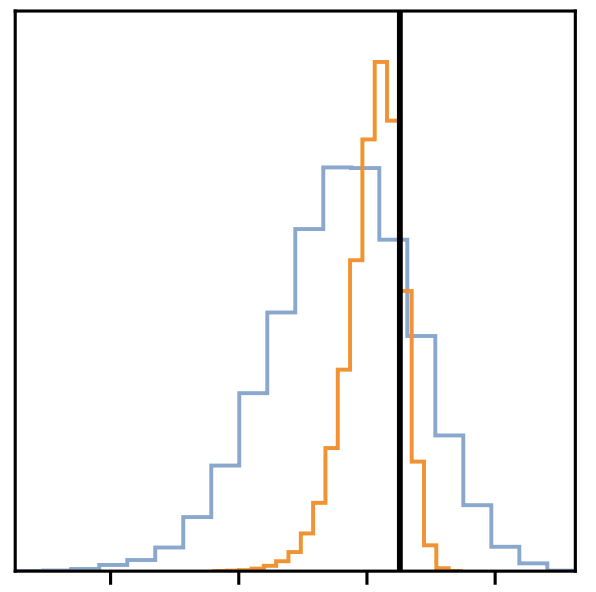

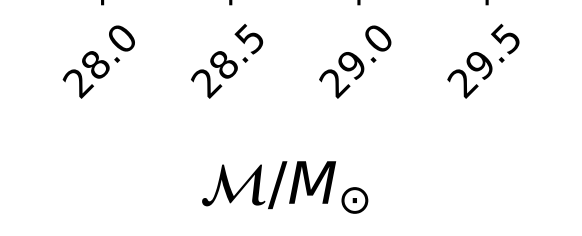

Langendorff, Jurriaan, Alex Kolmus, Justin Janquart, and Chris Van Den Broeck. “Normalizing Flows as an Avenue to Studying Overlapping Gravitational Wave Signals.” Physical Review Letters 130, no. 17 (April 2023): 171402.

-

...

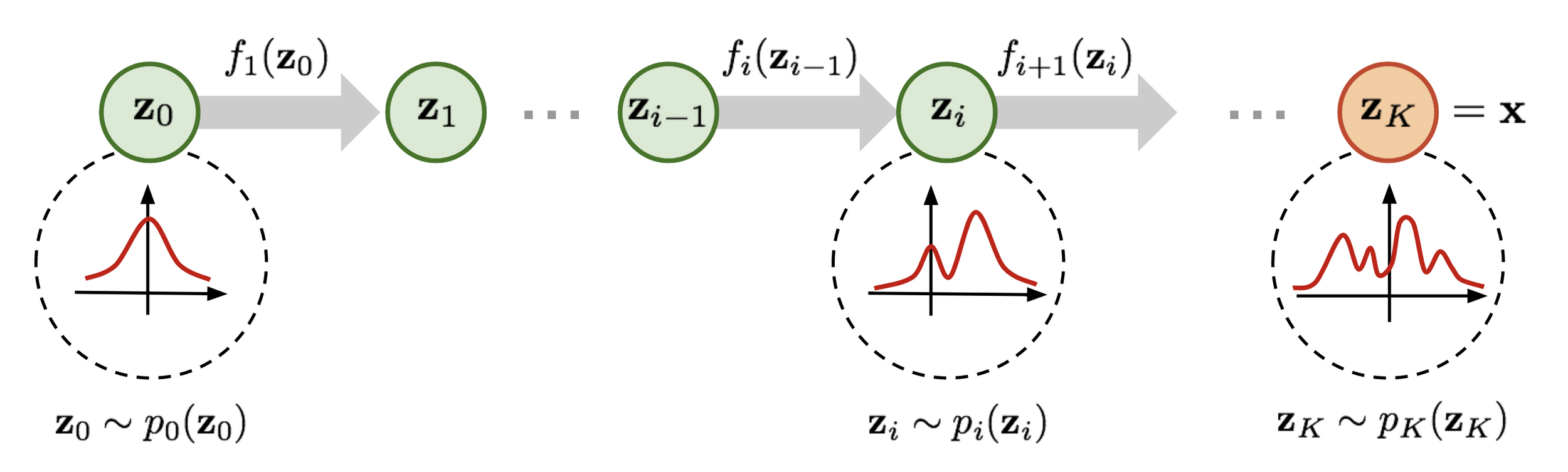

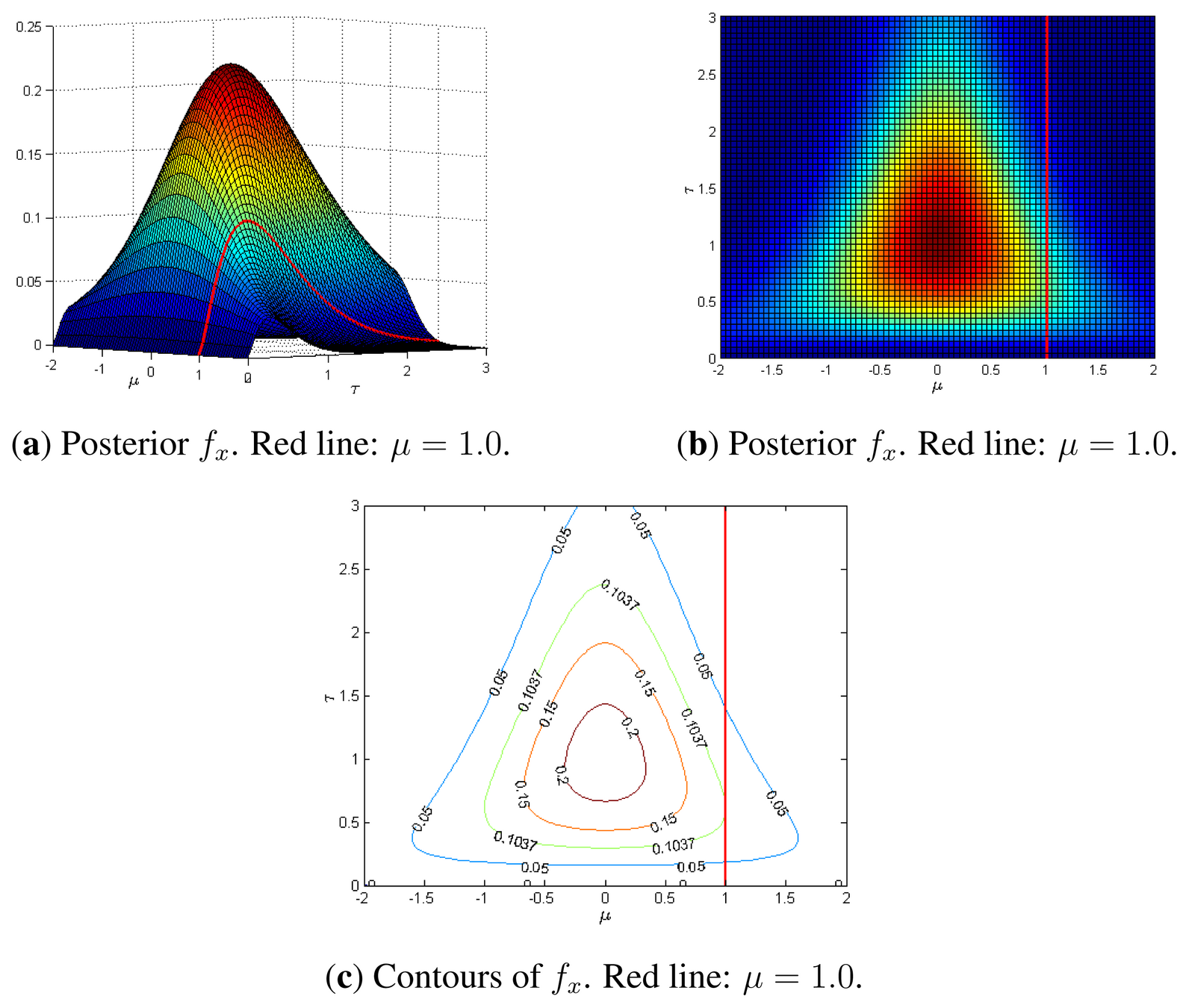

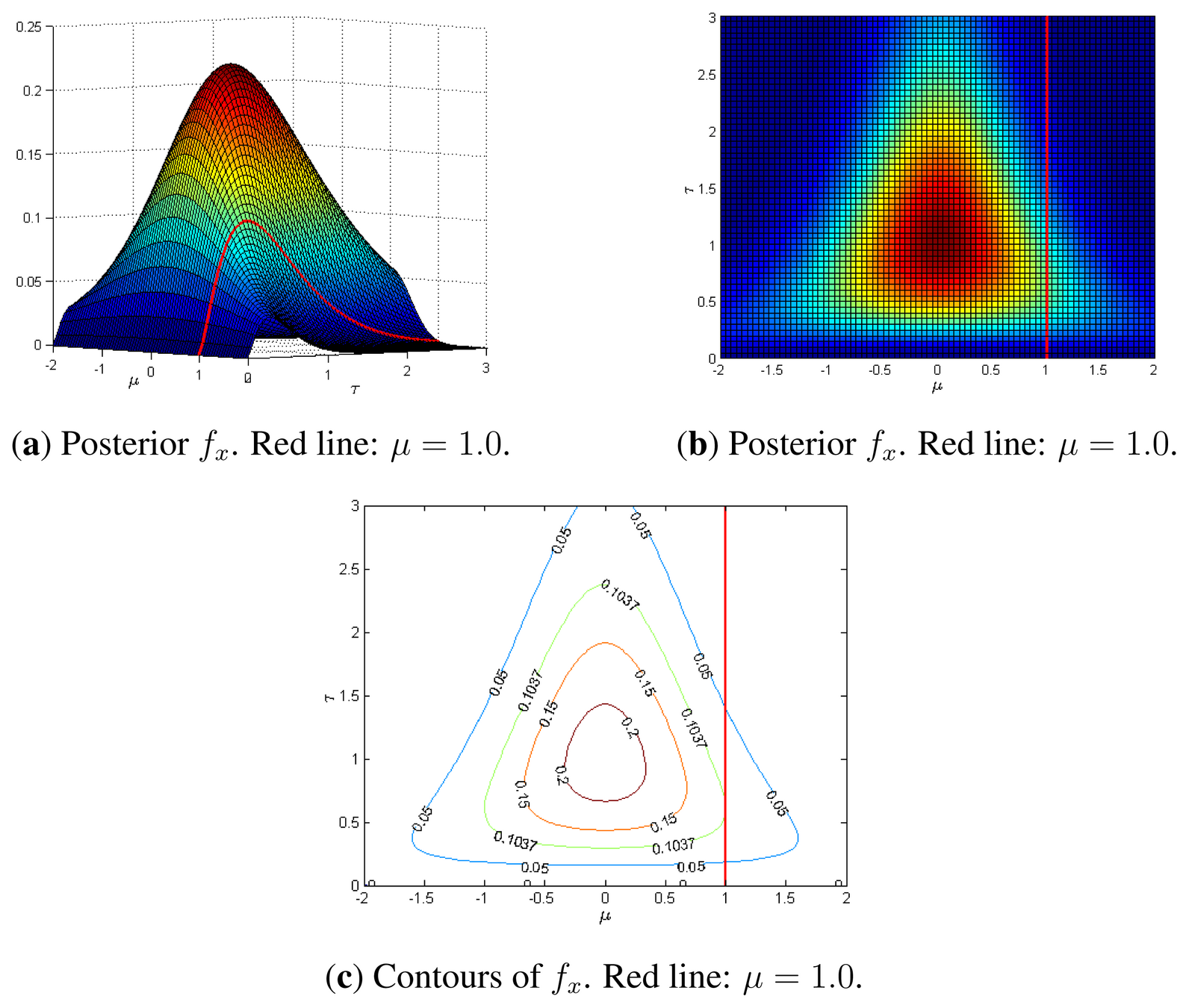


Assume \(x=f(z)\) with \(z, x \in \mathbb{R}^p\), \(z \sim P_z(z)\), and \(x \sim P_x(x)\), where \(f\) is continuous and invertible.

Figure labels: base density \(P_z(z)\); target density \(P_x(x)\).


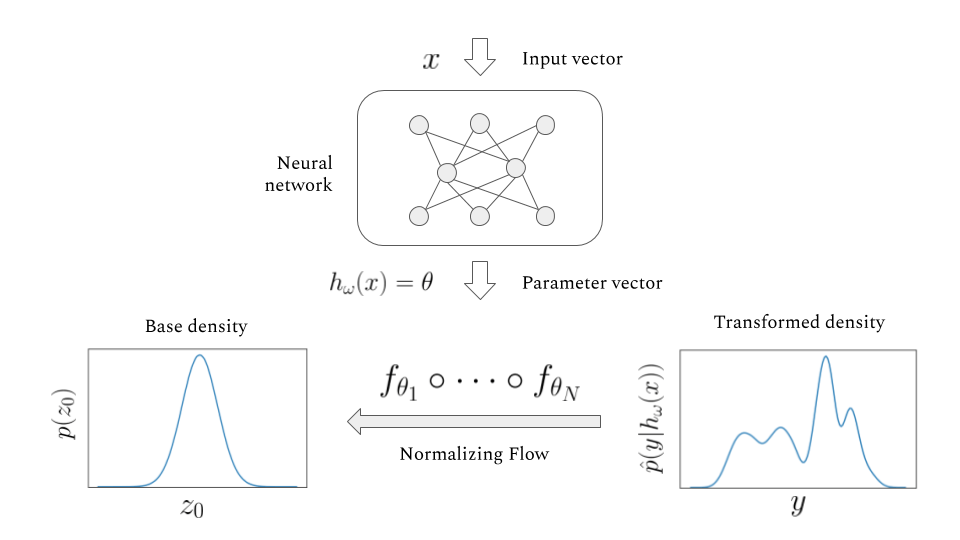

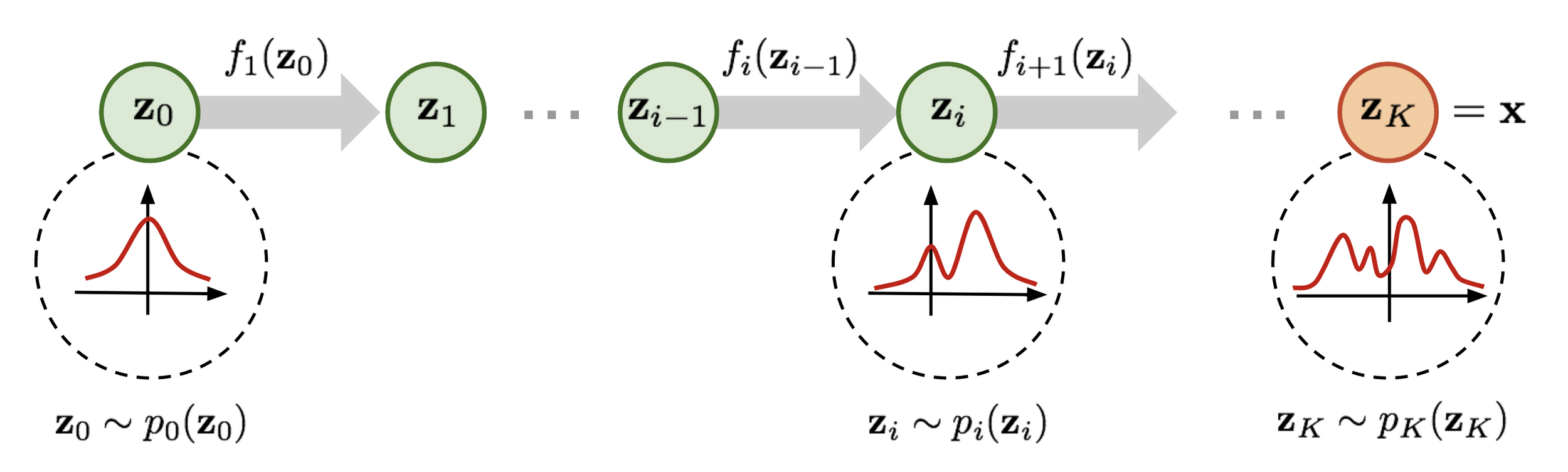

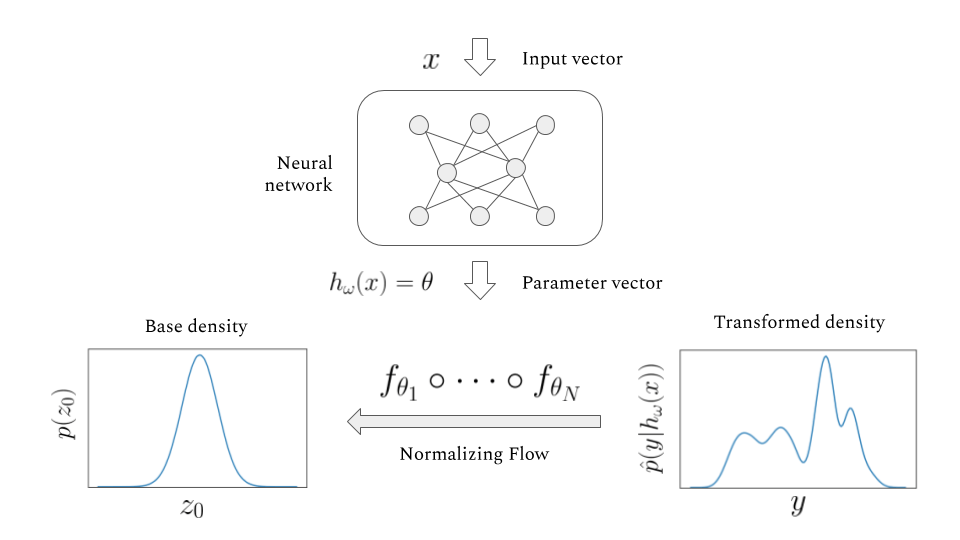

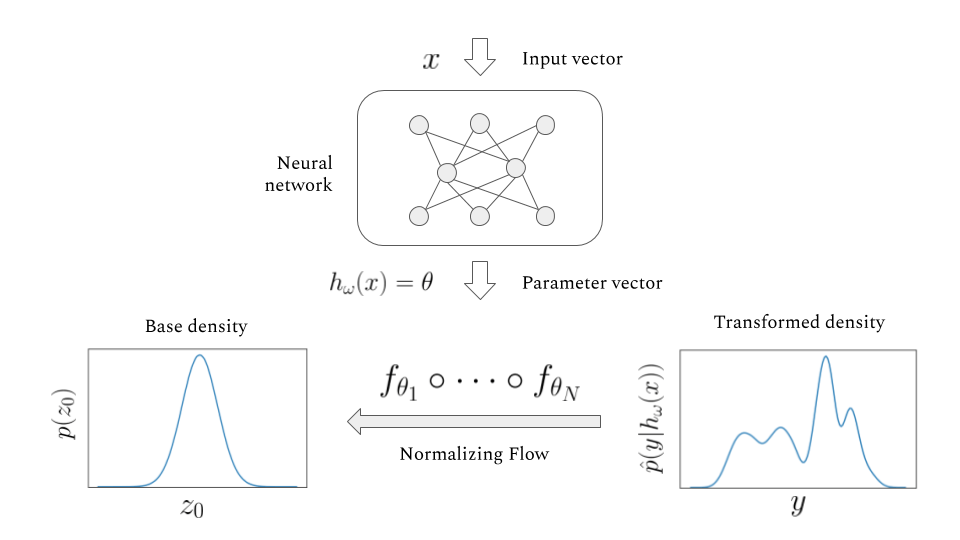

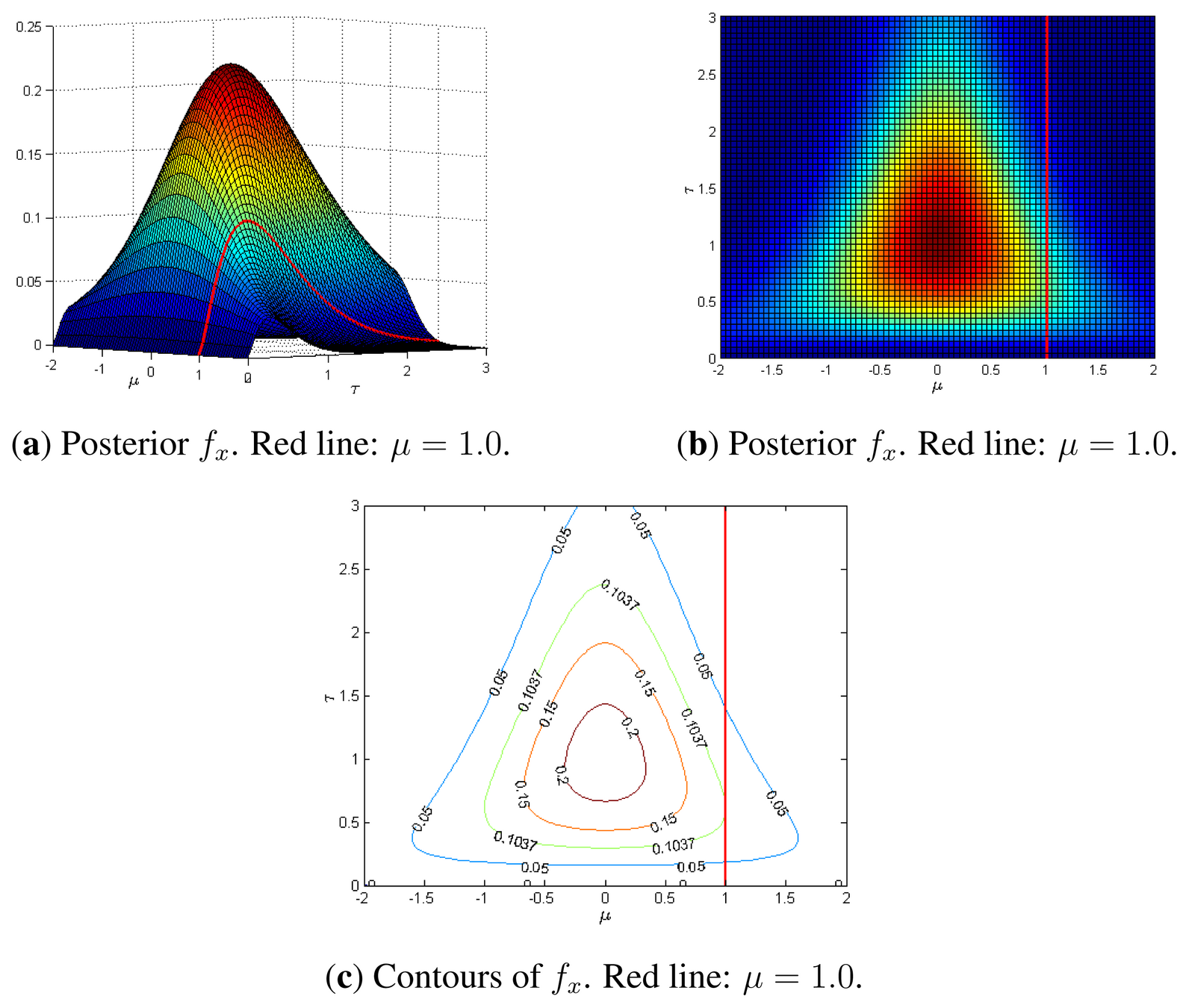

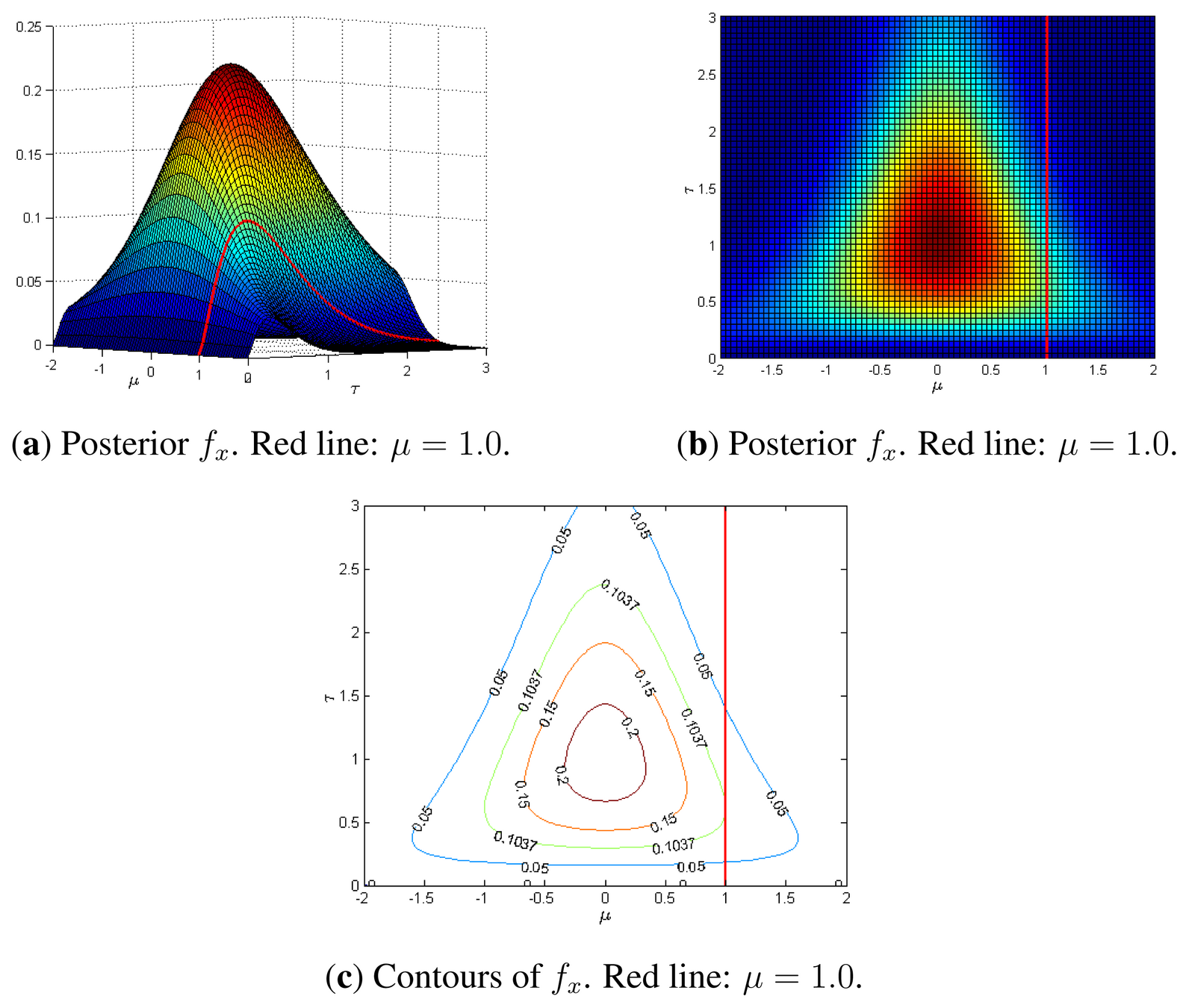

# GWDA: Flow

The main idea of flow-based modeling is to express \(\mathbf{y}\in\mathbb{R}^D\) as a transformation \(T\) of a real vector \(\mathbf{z}\in\mathbb{R}^D\) sampled from \(p_{\mathrm{z}}(\mathbf{z})\):

\[
\mathbf{y} = T(\mathbf{z}), \quad \mathbf{z} \sim p_{\mathrm{z}}(\mathbf{z}).
\]

Note: The invertible and differentiable transformation \(T\) and the base distribution \(p_{\mathrm{z}}(\mathbf{z})\) can have parameters \(\{\boldsymbol{\phi}, \boldsymbol{\psi}\}\) of their own, i.e. \(T_\boldsymbol{\phi} \) and \(p_{\mathrm{z},\boldsymbol{\psi}}(\mathbf{z})\).

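Change of variables:

\[
p_{\mathrm{y}}(\mathbf{y}) = p_{\mathrm{z}}(\mathbf{z})\left|\det J_{T}(\mathbf{z})\right|^{-1}, \quad \text{where } \mathbf{z} = T^{-1}(\mathbf{y}).
\]

Equivalently,

\[
p_{\mathrm{y}}(\mathbf{y}) = p_{\mathrm{z}}\left(T^{-1}(\mathbf{y})\right)\left|\det J_{T^{-1}}(\mathbf{y})\right|.
\]

The Jacobian \(J_{T}(\mathbf{z})\) is the \(D \times D\) matrix of all partial derivatives of \(T\):

\[
J_{T}(\mathbf{z}) = \begin{bmatrix} \frac{\partial T_1}{\partial z_1} & \cdots & \frac{\partial T_1}{\partial z_D} \\ \vdots & \ddots & \vdots \\ \frac{\partial T_D}{\partial z_1} & \cdots & \frac{\partial T_D}{\partial z_D} \end{bmatrix}.
\]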
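The change-of-variables formula can be checked numerically on the simplest invertible map. This minimal sketch assumes an elementwise affine flow, \(T(\mathbf{z}) = e^{\mathbf{s}} \odot \mathbf{z} + \mathbf{t}\), with a standard-normal base. That choice is for illustration only and is not the transformation used in the papers above. Because \(J_T\) is diagonal, \(\log|\det J_{T^{-1}}| = -\sum_i s_i\). The function names are hypothetical.

```python
import numpy as np

def log_std_normal(z):
    # log N(z; 0, I), summed over the last (feature) axis
    return -0.5 * np.sum(z**2 + np.log(2.0 * np.pi), axis=-1)

def affine_forward(z, s, t):
    # T(z) = exp(s) * z + t, elementwise, so J_T is diagonal with entries exp(s)
    return np.exp(s) * z + t

def affine_inverse(y, s, t):
    # T^{-1}(y) = (y - t) * exp(-s)
    return (y - t) * np.exp(-s)

def affine_log_prob(y, s, t):
    # log p_y(y) = log p_z(T^{-1}(y)) + log|det J_{T^{-1}}(y)|, with log|det J_{T^{-1}}| = -sum(s)
    return log_std_normal(affine_inverse(y, s, t)) - np.sum(s)

rng = np.random.default_rng(0)
D = 15
s, t = 0.3 * rng.normal(size=D), rng.normal(size=D)
z = rng.normal(size=(4, D))        # samples from the base density
y = affine_forward(z, s, t)        # corresponding samples from the target density
# Cross-check: an affine map of N(0, I) is N(t, diag(exp(2s)))
sigma = np.exp(s)
direct = -0.5 * np.sum(((y - t) / sigma) ** 2 + np.log(2.0 * np.pi * sigma**2), axis=-1)
print(np.allclose(affine_log_prob(y, s, t), direct))
```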


(Based on 1912.02762)

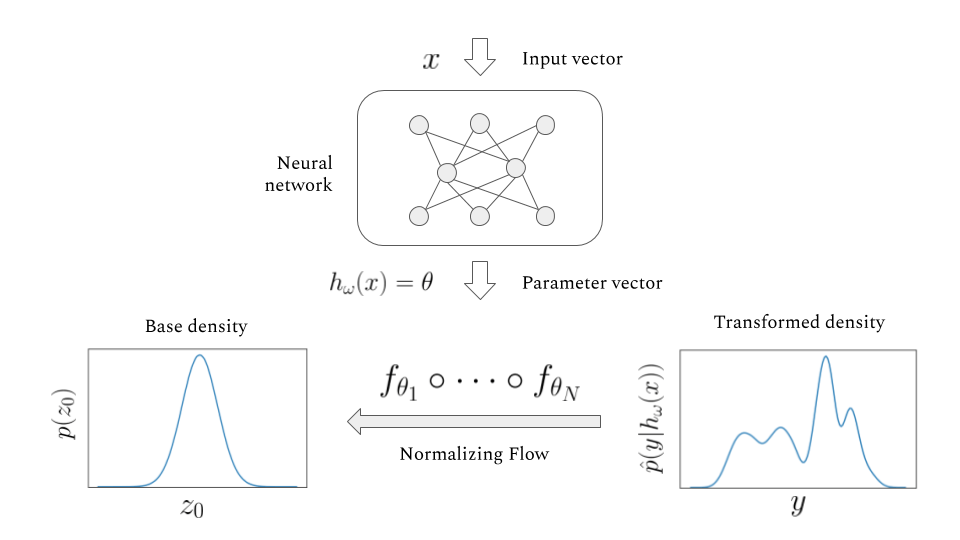

# GWDA: Flow


- Data: target data \(\mathbf{y}\in\mathbb{R}^{15}\) with condition data \(\mathbf{x}\).

- Task: fit a flow-based model \(p_{\mathrm{y}}(\mathbf{y} ; \boldsymbol{\theta})\) to a target distribution \(p_{\mathrm{y}}^{*}(\mathbf{y})\) by minimizing the KL divergence with respect to the model’s parameters \(\boldsymbol{\theta}=\{\boldsymbol{\phi}, \boldsymbol{\psi}\}\), where \(\boldsymbol{\phi}\) are the parameters of \(T\) and \(\boldsymbol{\psi}\) are the parameters of the base distribution \(p_{\mathrm{z}}(\mathbf{z})=\mathcal{N}(0,\mathbb{I})\).

- Loss function: given a set of samples \(\left\{\mathbf{y}_{n}\right\}_{n=1}^{N}\sim p_{\mathrm{y}}^{*}(\mathbf{y})\),

\[
\mathcal{L}(\boldsymbol{\theta}) = D_{\mathrm{KL}}\left[p_{\mathrm{y}}^{*}(\mathbf{y}) \,\|\, p_{\mathrm{y}}(\mathbf{y};\boldsymbol{\theta})\right] \approx -\frac{1}{N}\sum_{n=1}^{N}\left[\log p_{\mathrm{z}}\left(T^{-1}(\mathbf{y}_n;\boldsymbol{\phi});\boldsymbol{\psi}\right) + \log\left|\det J_{T^{-1}}(\mathbf{y}_n;\boldsymbol{\phi})\right|\right] + \mathrm{const.}
\]

Minimizing this Monte Carlo approximation of the KL divergence is equivalent to fitting the flow-based model to the samples \(\left\{\mathbf{y}_{n}\right\}_{n=1}^{N}\) by maximum likelihood estimation.
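The equivalence with maximum likelihood can be seen on a toy case. This sketch minimizes the Monte Carlo loss above by plain gradient descent for the elementwise affine flow \(\mathbf{y} = e^{\mathbf{s}} \odot \mathbf{z} + \mathbf{t}\), \(\mathbf{z} \sim \mathcal{N}(0, \mathbb{I})\). The toy flow, the hand-derived gradients, the 3-D synthetic data, and the function names are assumptions for illustration only. A real GW posterior model such as RQ-NSF would be trained with automatic differentiation. For this flow the optimum is known in closed form: \(\mathbf{t}\) equals the sample mean and \(e^{\mathbf{s}}\) equals the sample standard deviation.

```python
import numpy as np

def nll_and_grads(y, s, t):
    # Monte Carlo NLL of the affine flow y = exp(s) * z + t, z ~ N(0, I)
    z = (y - t) * np.exp(-s)                                   # T^{-1}(y)
    nll = np.mean(0.5 * np.sum(z**2 + np.log(2.0 * np.pi), axis=1) + np.sum(s))
    grad_t = np.mean(-z * np.exp(-s), axis=0)                  # dNLL/dt
    grad_s = np.mean(1.0 - z**2, axis=0)                       # dNLL/ds (the +1 comes from log|det|)
    return nll, grad_s, grad_t

def fit_affine_flow(y, lr=0.05, steps=3000):
    s = np.zeros(y.shape[1])
    t = np.zeros(y.shape[1])
    for _ in range(steps):
        _, grad_s, grad_t = nll_and_grads(y, s, t)
        s -= lr * grad_s
        t -= lr * grad_t
    return s, t

rng = np.random.default_rng(1)
y = rng.normal(loc=[1.0, -2.0, 0.5], scale=[0.5, 2.0, 1.0], size=(5000, 3))
s, t = fit_affine_flow(y)
print(np.round(t, 3), np.round(np.exp(s), 3))
```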

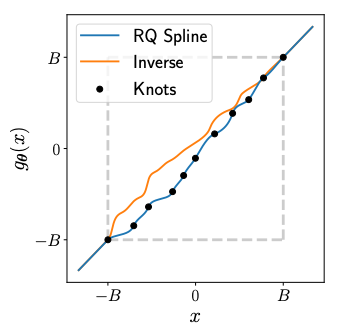

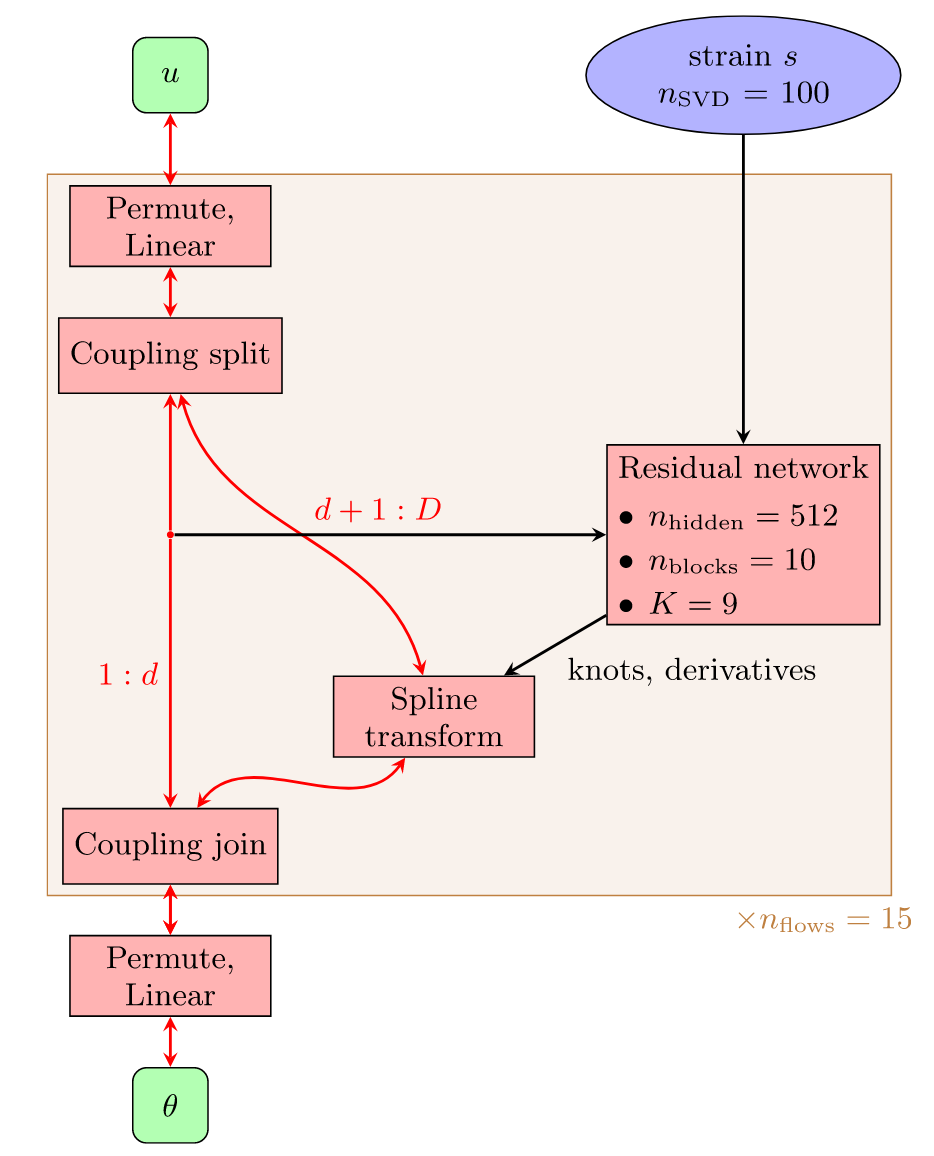

Rational Quadratic Neural Spline Flows (RQ-NSF)

# GWDA: Flow


Rational Quadratic Neural Spline Flows (RQ-NSF)
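The RQ-NSF building block can be made concrete with a sketch of the monotonic rational-quadratic spline of Durkan et al. (2019) for a fixed set of knots. In an actual RQ-NSF, a neural network produces the knot widths, heights, and derivatives, conditioned on other dimensions and, for inference, on the data. Here those are hard-coded hypothetical values. Identity tails outside the interval are assumed, and the function name is hypothetical.

```python
import numpy as np

def rq_spline_forward(x, xk, yk, dk):
    """Monotonic rational-quadratic spline y = g(x).

    xk, yk: strictly increasing knot coordinates, shape [K + 1]
    dk:     positive derivatives at the knots, shape [K + 1]
    Inputs outside [xk[0], xk[-1]] pass through unchanged (identity tails).
    Returns y and dy/dx (the 1-D Jacobian entering the log-determinant).
    """
    x = np.asarray(x, dtype=float)
    y = x.copy()
    dydx = np.ones_like(x)
    inside = (x >= xk[0]) & (x <= xk[-1])
    xin = x[inside]
    # Index k of the bin [xk[k], xk[k+1]] that contains each input
    k = np.clip(np.searchsorted(xk, xin, side="right") - 1, 0, len(xk) - 2)
    width = xk[k + 1] - xk[k]
    height = yk[k + 1] - yk[k]
    s = height / width                      # bin slope s_k
    xi = (xin - xk[k]) / width              # relative position inside the bin, in [0, 1]
    d0, d1 = dk[k], dk[k + 1]
    denom = s + (d1 + d0 - 2.0 * s) * xi * (1.0 - xi)
    y[inside] = yk[k] + height * (s * xi**2 + d0 * xi * (1.0 - xi)) / denom
    dydx[inside] = s**2 * (d1 * xi**2 + 2.0 * s * xi * (1.0 - xi) + d0 * (1.0 - xi) ** 2) / denom**2
    return y, dydx

# Hypothetical knots on [-3, 3]; boundary derivatives of 1 match the identity tails
xk = np.array([-3.0, -1.0, 0.5, 3.0])
yk = np.array([-3.0, -2.0, 1.0, 3.0])
dk = np.array([1.0, 0.5, 2.0, 1.0])
y, dydx = rq_spline_forward(np.linspace(-4.0, 4.0, 9), xk, yk, dk)
print(np.round(y, 3), np.round(dydx, 3))
```

Because every bin is a ratio of quadratics with positive derivatives at the knots, the map is strictly increasing. It therefore has an analytic inverse, which is what makes it usable as a flow layer.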

# GWDA: Flow

Green, Stephen Roland, and Jonathan Gair. “Complete Parameter Inference for GW150914 Using Deep Learning.” Machine Learning: Science and Technology 2, no. 3 (June 16, 2021): 03LT01.

Figure labels: base density; target density.

# GWDA: Flow

The advance of nflow models in GW inference:

- 2002.07656: 5D toy model [1] (PRD)

- 2008.03312: 15D binary black hole inference [1] (MLST)

- 2106.12594: Amortized inference and group-equivariant neural posterior estimation [2] (PRL)

- 2111.13139: Group-equivariant neural posterior estimation [2]

- 2210.05686: Importance sampling [2]

- 2211.08801: Noise forecasting [2]

- https://github.com/dingo-gw/dingo (2023.03)

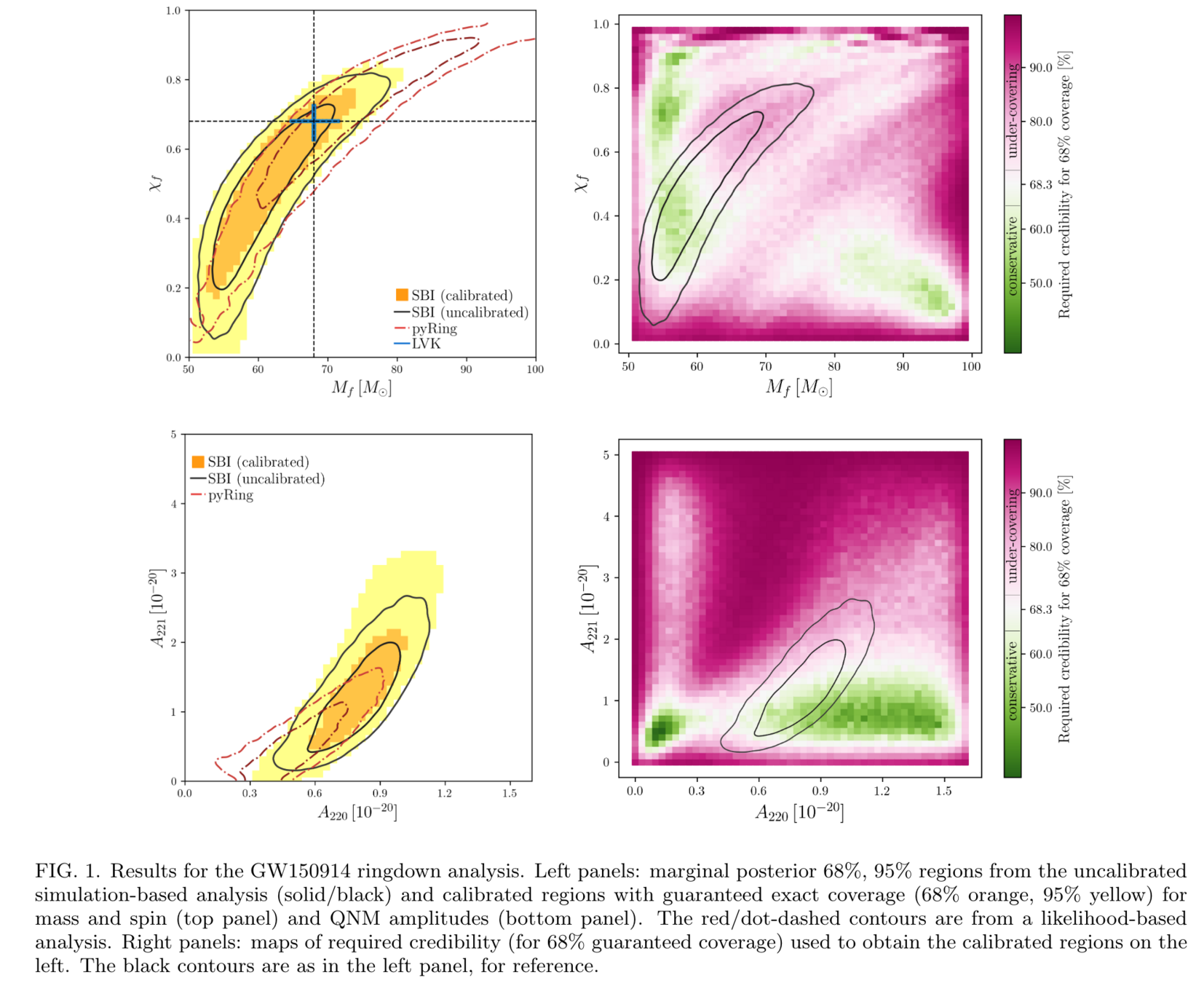

# GWDA: Flow

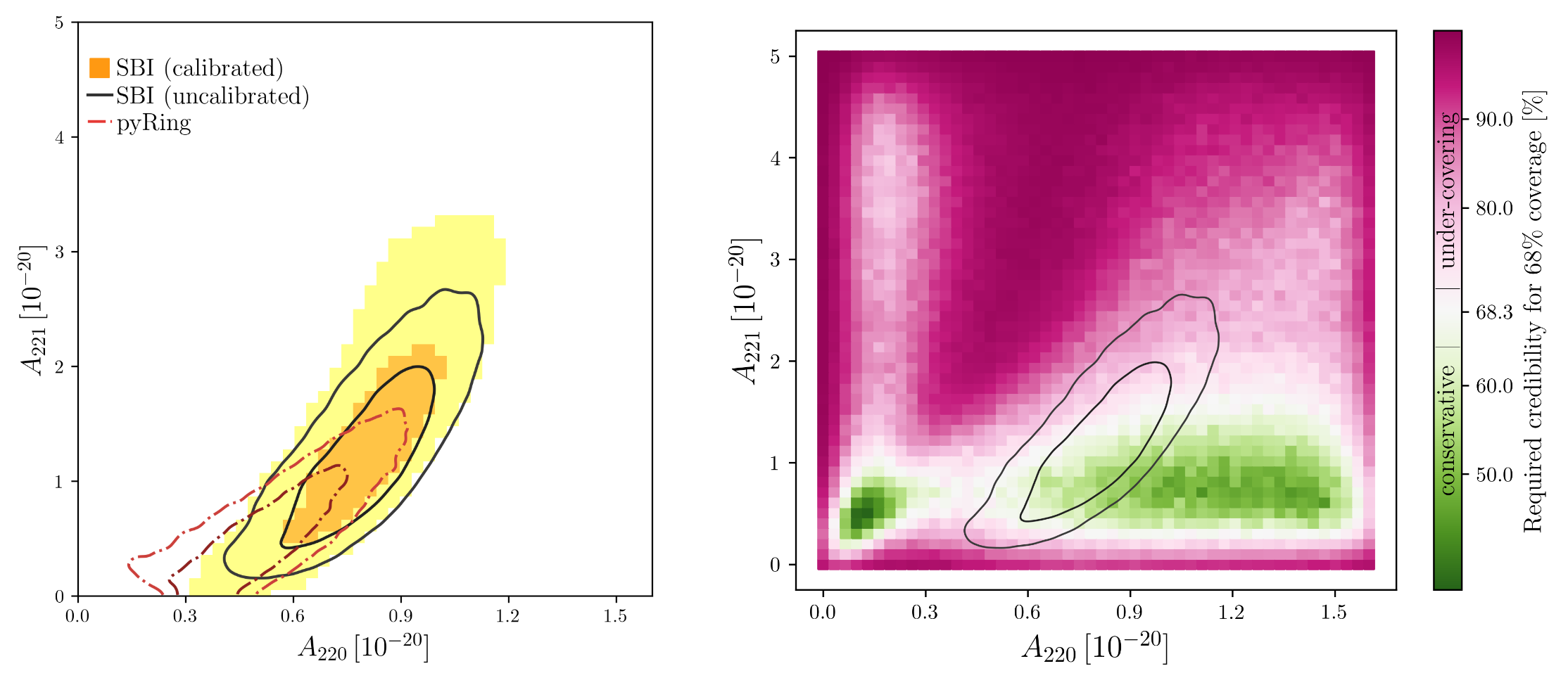

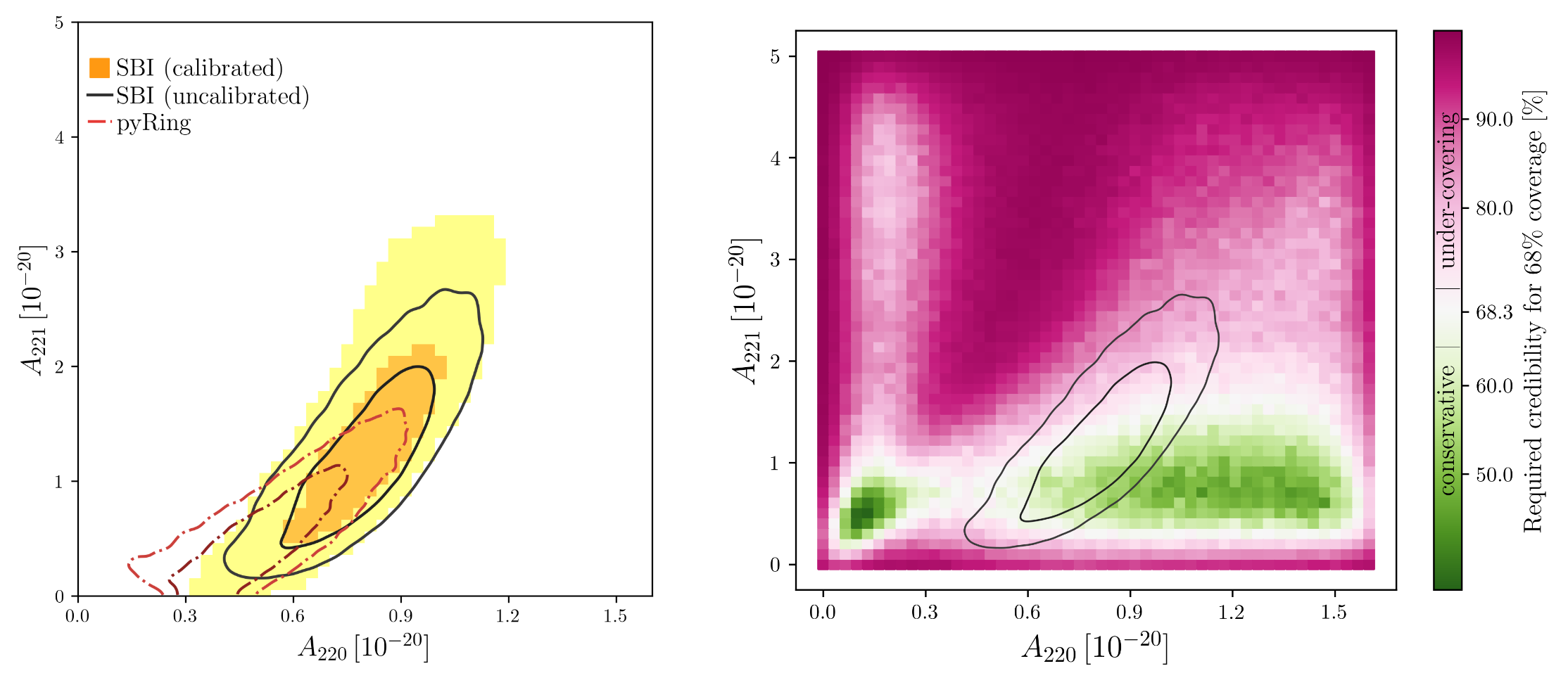

Neural Posterior Estimation with guaranteed exact coverage: the ringdown of GW150914

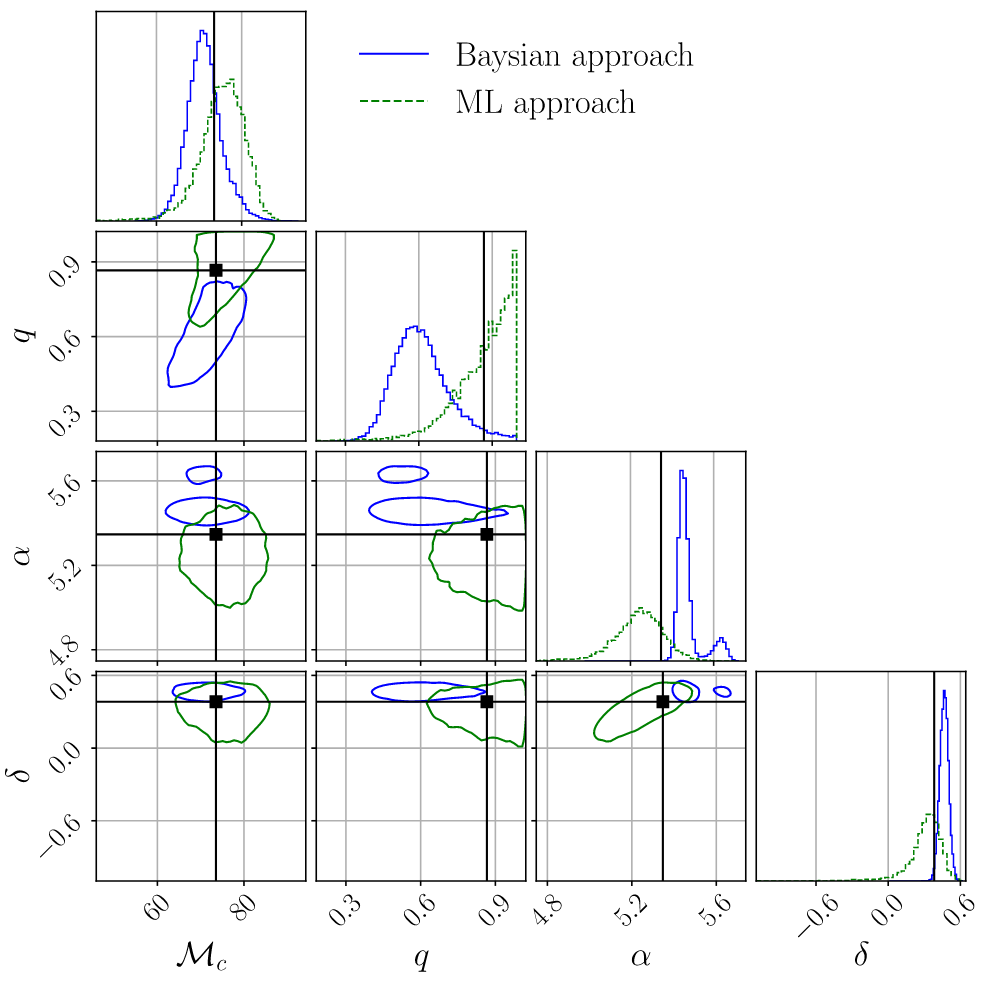

Normalizing Flows as an Avenue to Studying Overlapping Gravitational Wave Signals

LIGO-P2300197

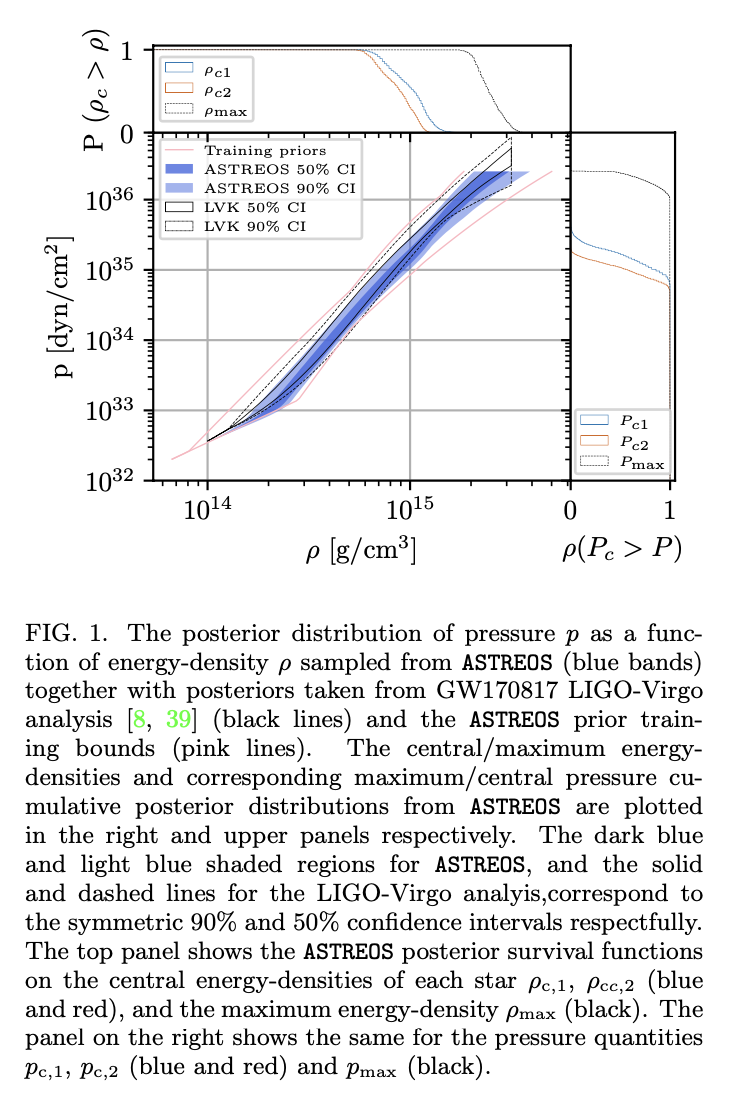

Rapid neutron star equation of state inference with Normalising Flows

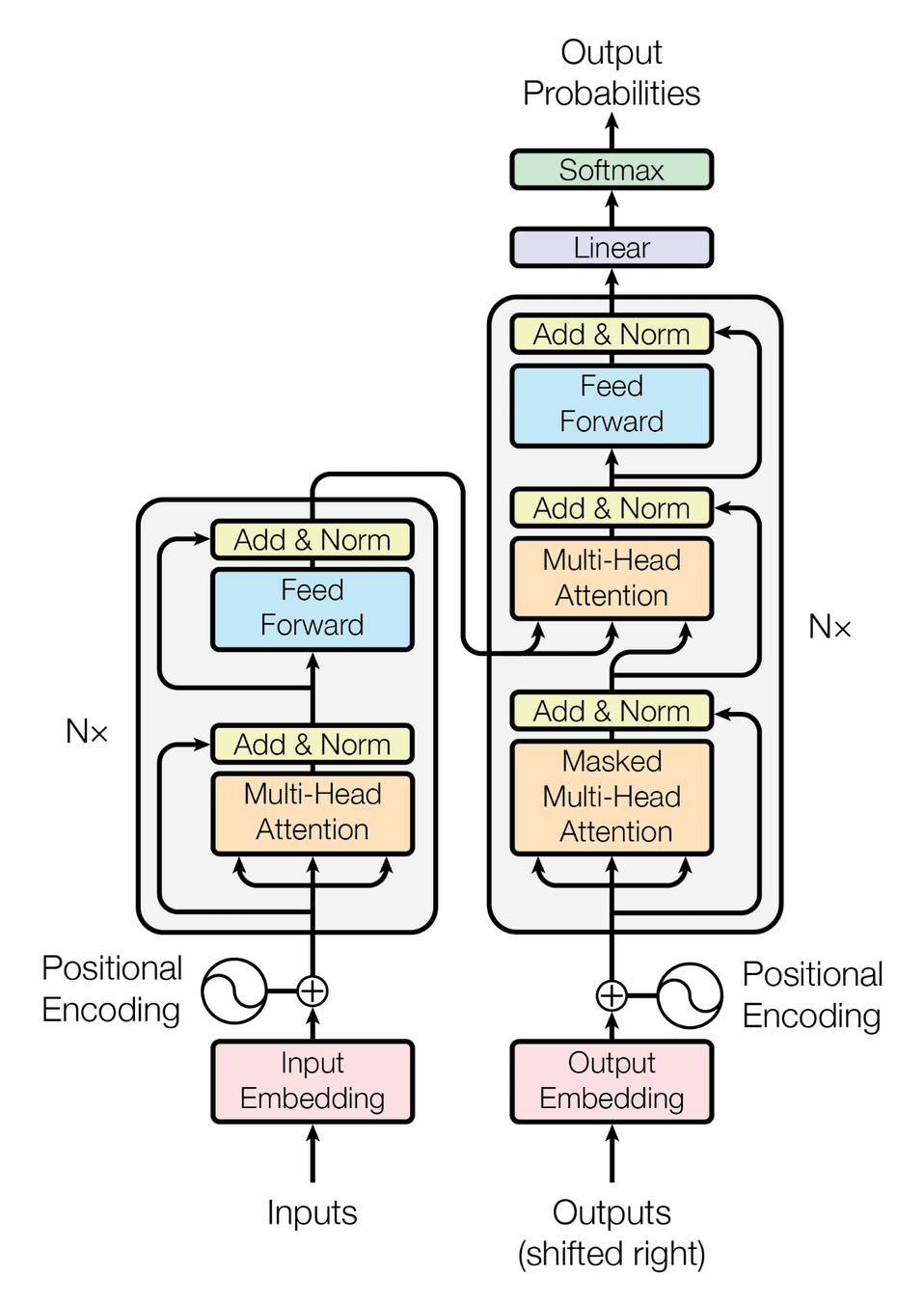

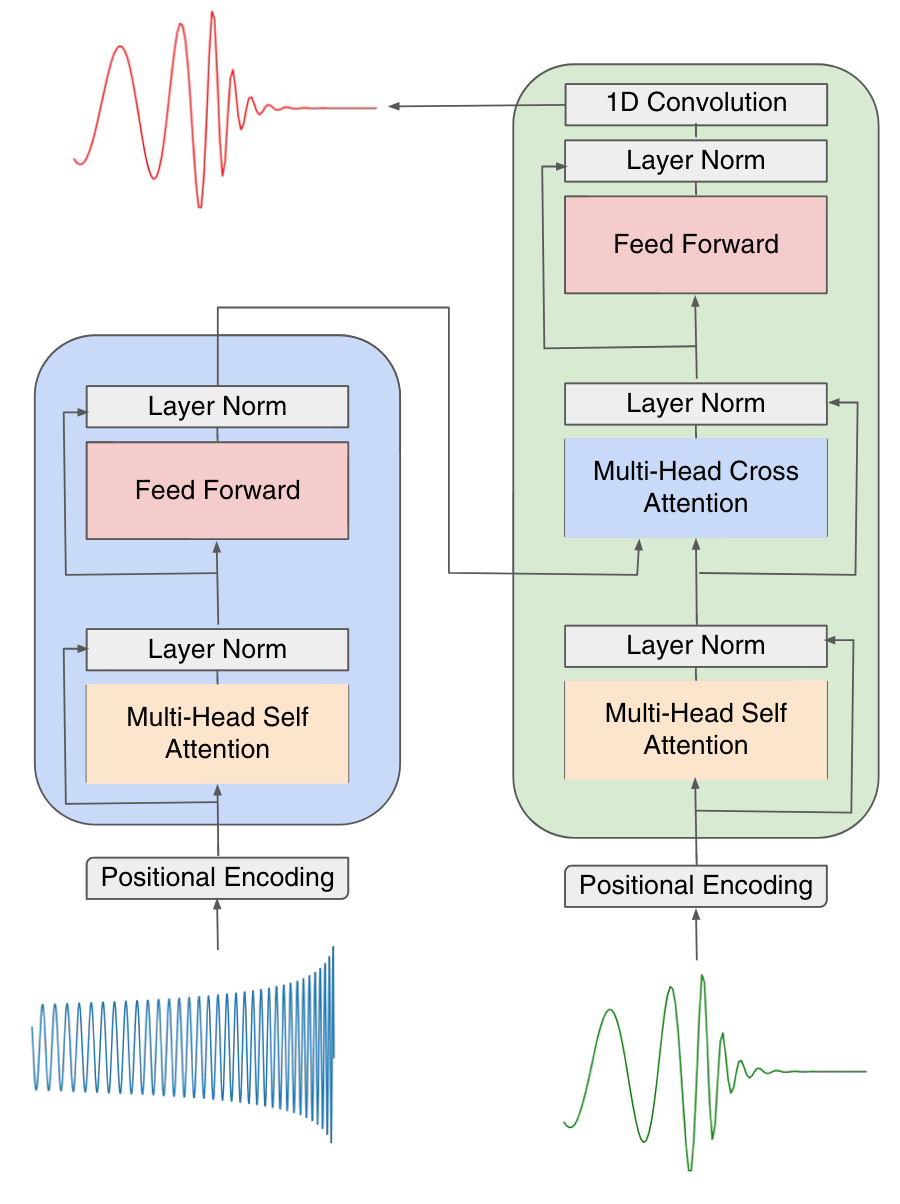

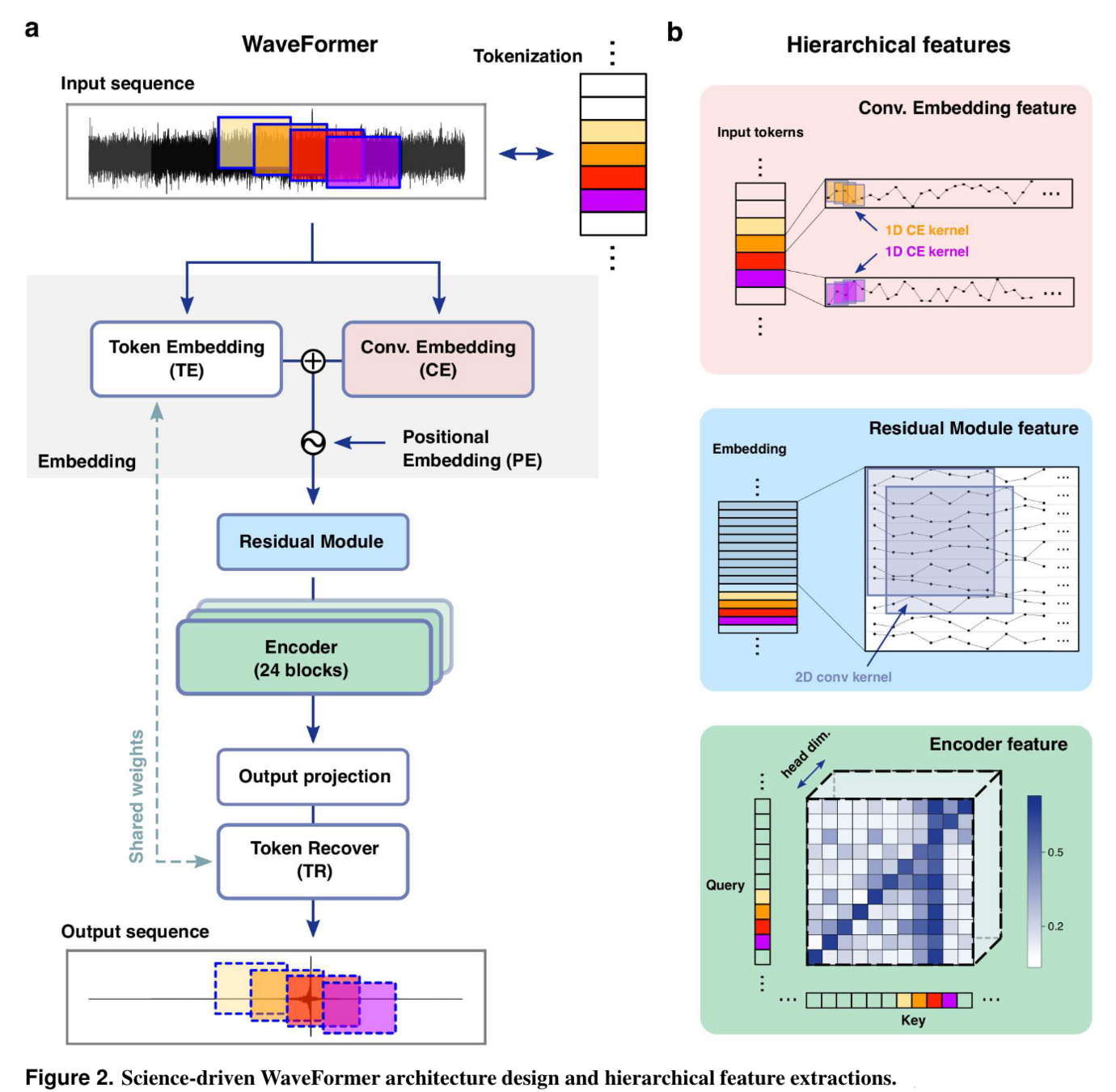

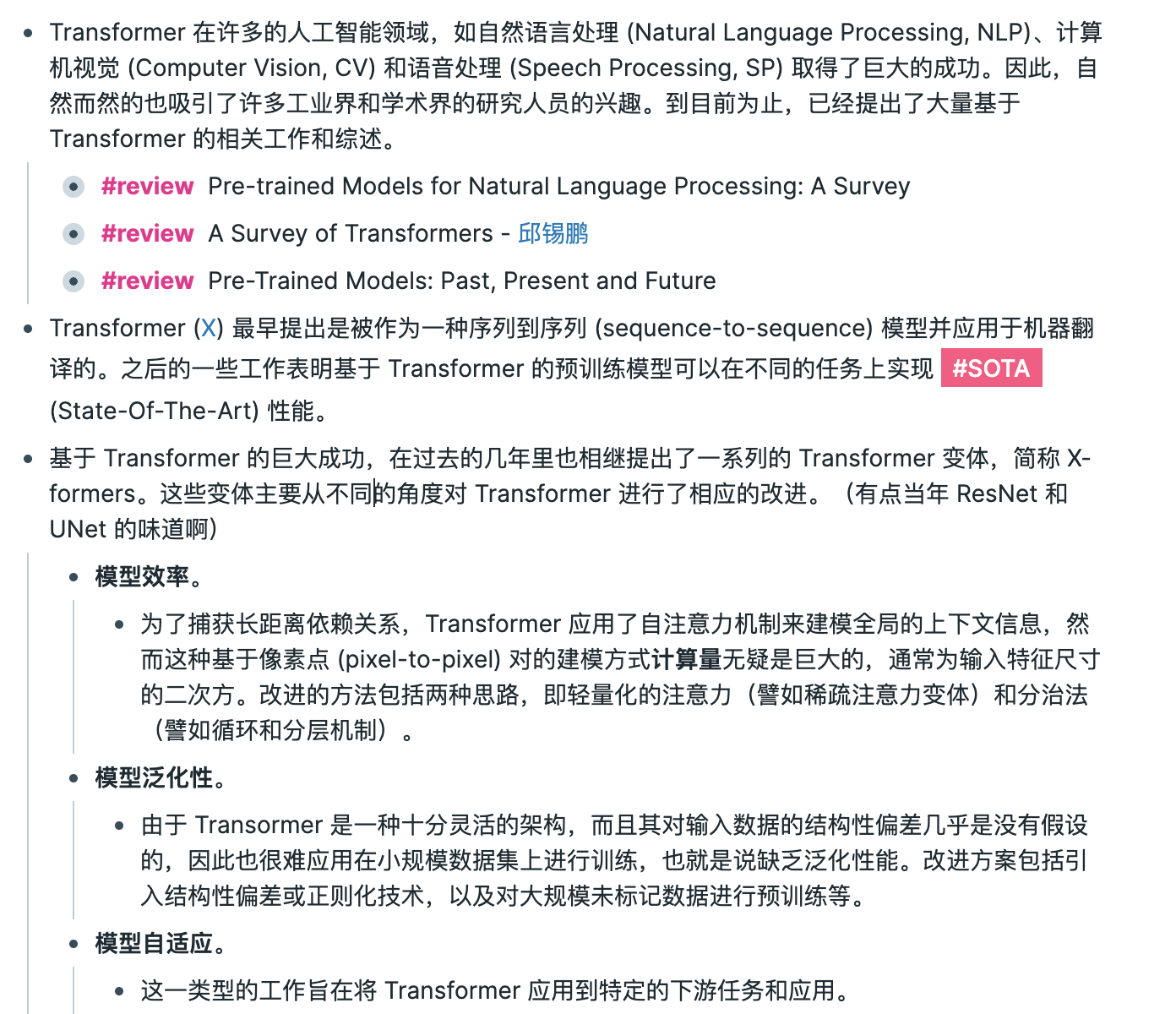

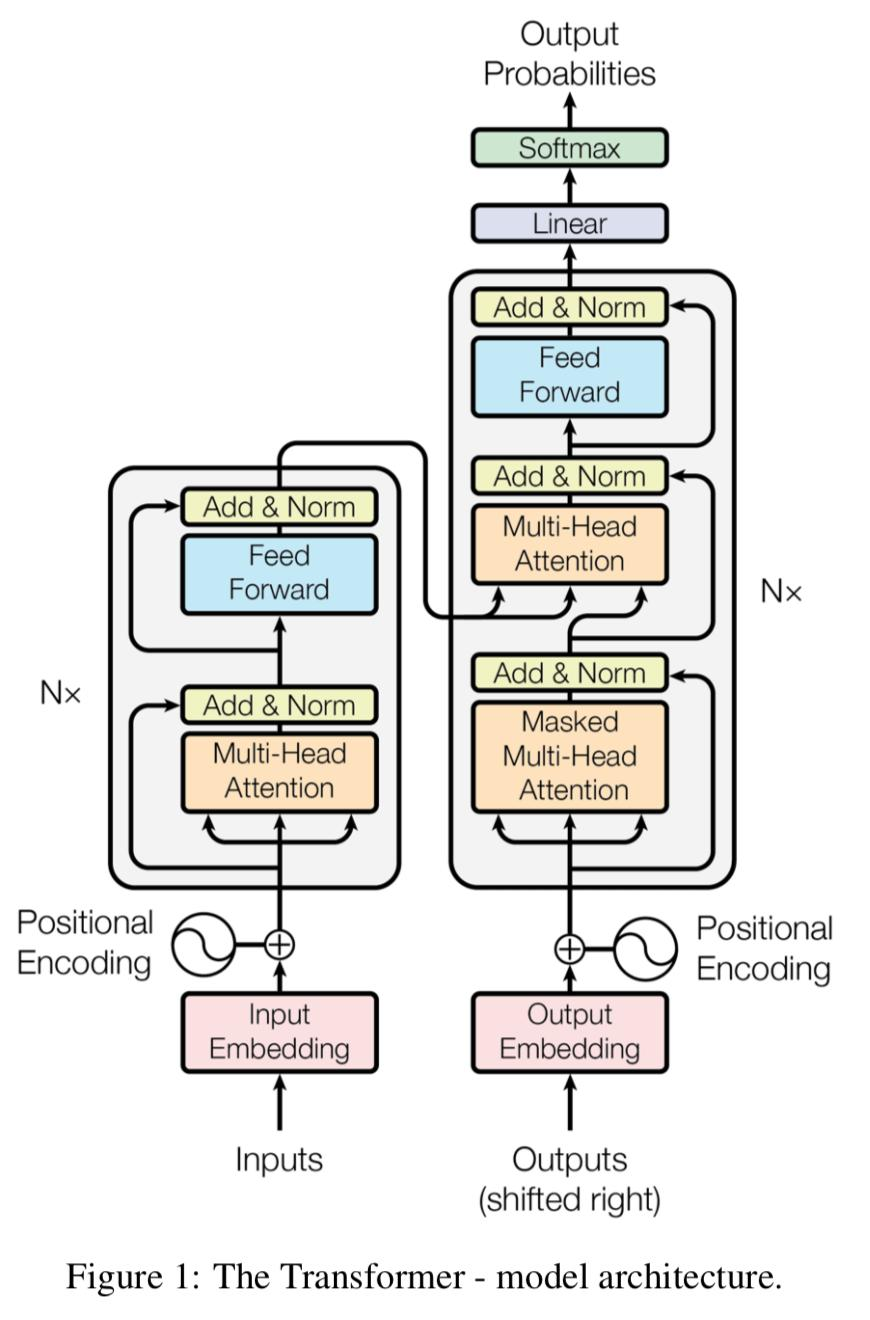

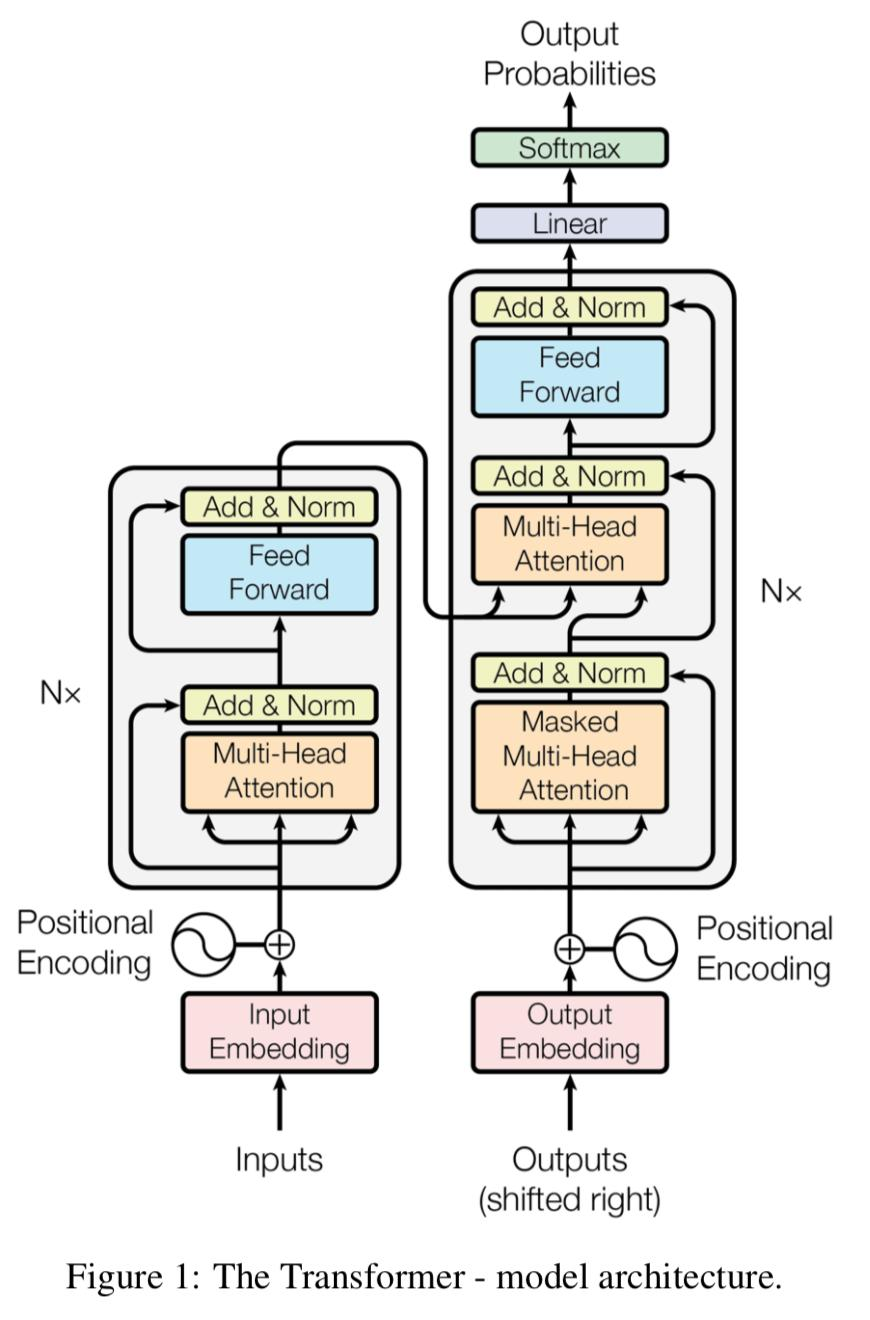

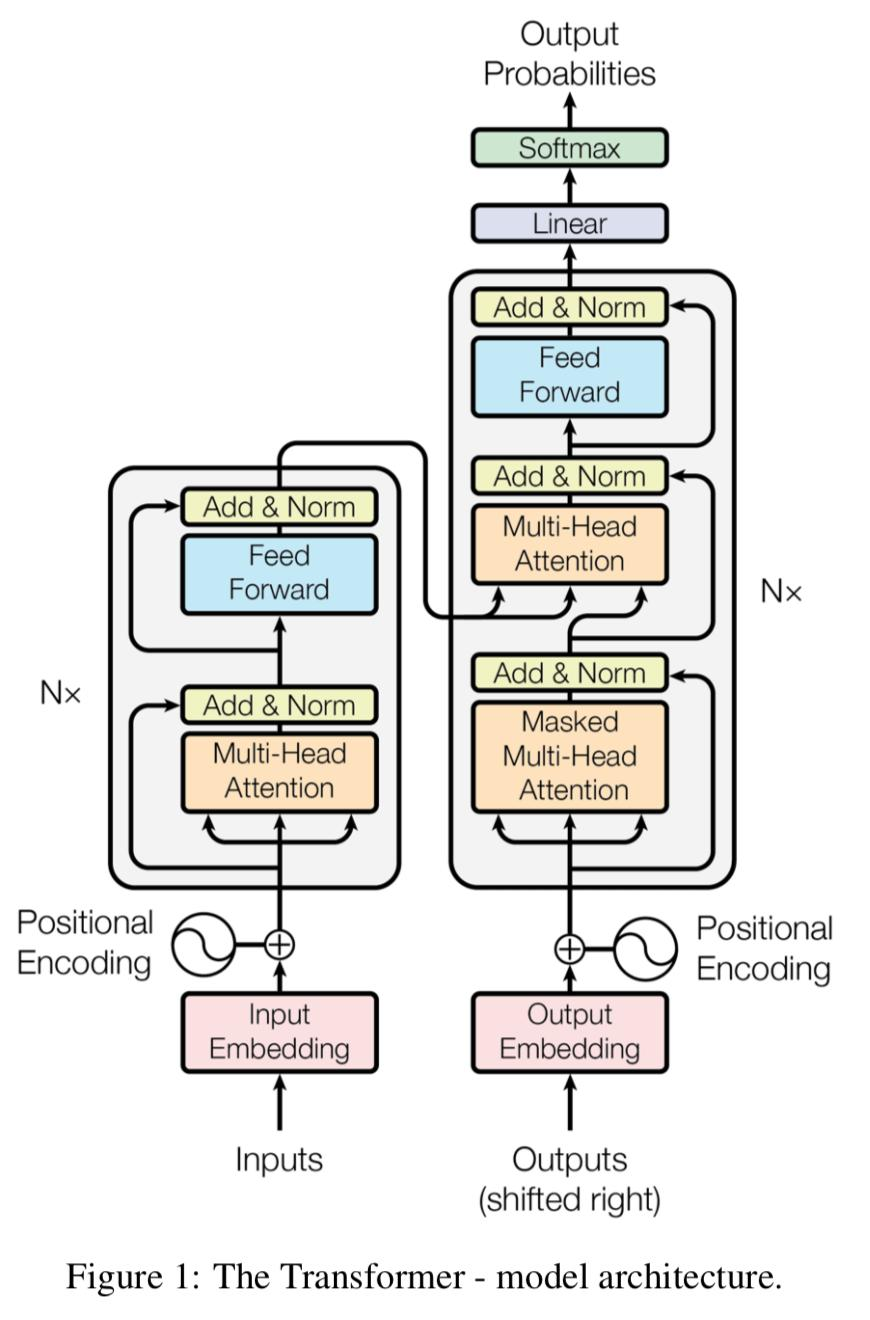

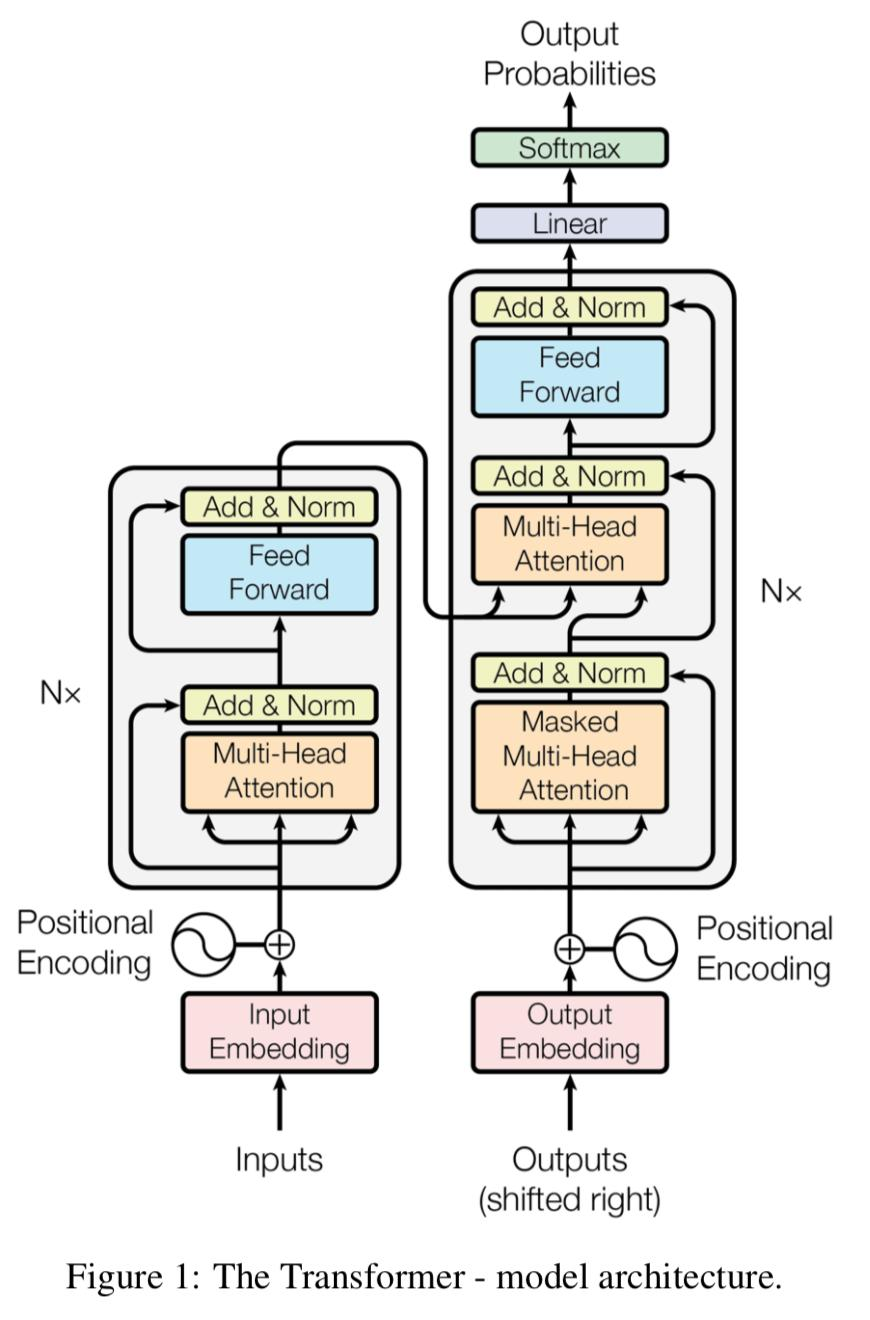

# GWDA: Transformer

Transformer

- Khan, Asad, E. A. Huerta, and Huihuo Zheng. “Interpretable AI Forecasting for Numerical Relativity Waveforms of Quasicircular, Spinning, Nonprecessing Binary Black Hole Mergers.” Physical Review D 105, no. 2 (January 2022): 024024.

- Jiang, Letian, and Yuan Luo. “Convolutional Transformer for Fast and Accurate Gravitational Wave Detection.” In 2022 26th International Conference on Pattern Recognition (ICPR), 46–53, 2022.

- Ren, Zhixiang, He Wang, Yue Zhou, Zong-Kuan Guo, and Zhoujian Cao. “Intelligent Noise Suppression for Gravitational Wave Observational Data.” arXiv:2212.14283, December 29, 2022.

# GWDA: Transformer

Background

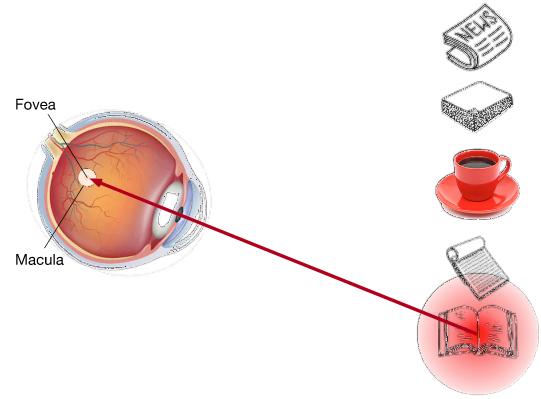

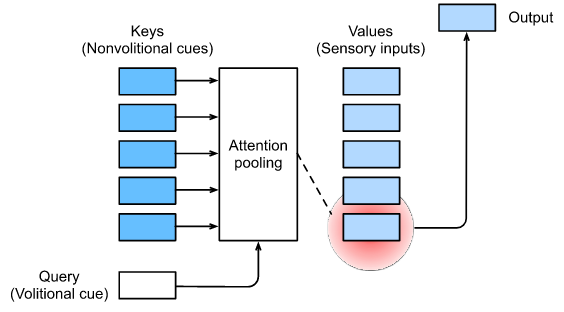

# GWDA: Transformer


Vanilla Transformer: attention

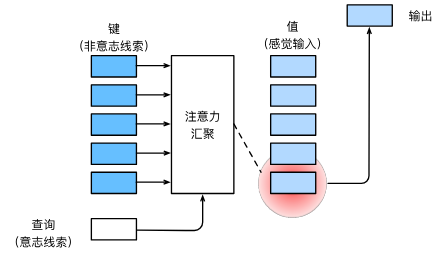

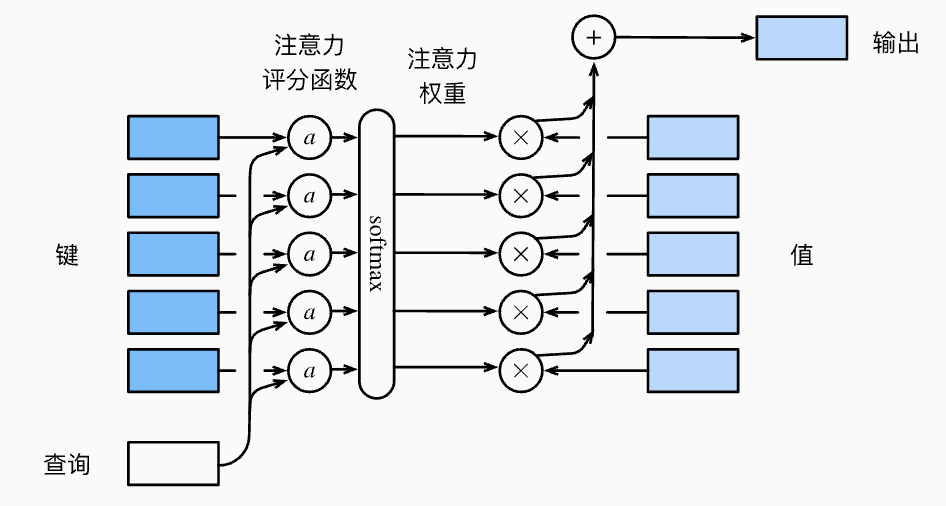

Attention mechanisms bias the selection over values (sensory inputs) through attention pooling, which combines queries (volitional cues) and keys (nonvolitional cues).

# GWDA: Transformer


Vanilla Transformer: attention

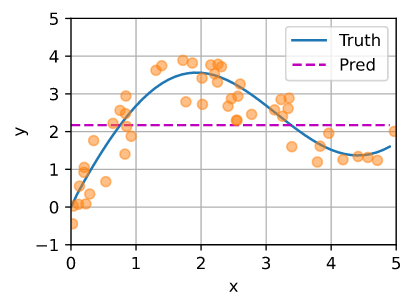

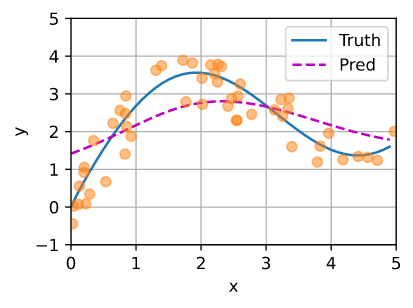

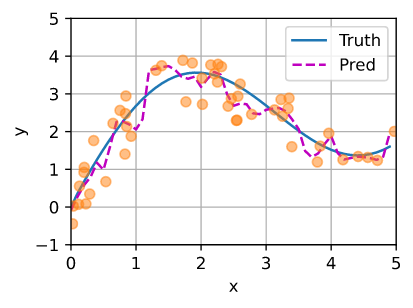

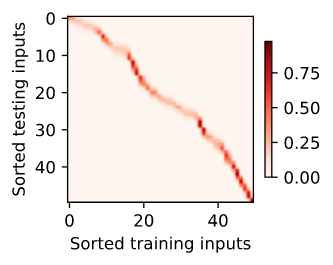

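For simplicity, consider the following regression problem: given a dataset of input-output pairs \(\left\{\left(x_{1}, y_{1}\right), \ldots,\left(x_{n}, y_{n}\right)\right\}\), how can we learn \(f\) so that it predicts the output \(\hat{y}=f(x)\) for any new input \(x\)?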

Case 1: Average Pooling

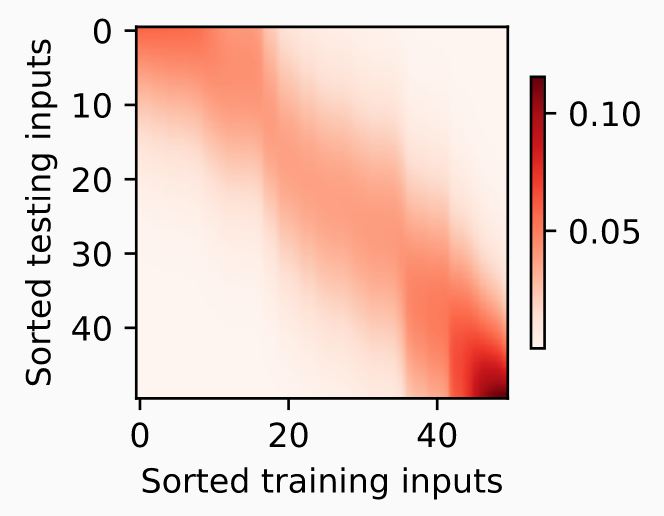

Case 2: Nonparametric Attention Pooling

Case 3: Parametric Attention Pooling

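Attention pooling formula: \(f(x) = \sum_{i=1}^{n} \alpha(x, x_i)\, y_i\), where the attention weights \(\alpha(x, x_i)\) are non-negative and sum to 1 over \(i\).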

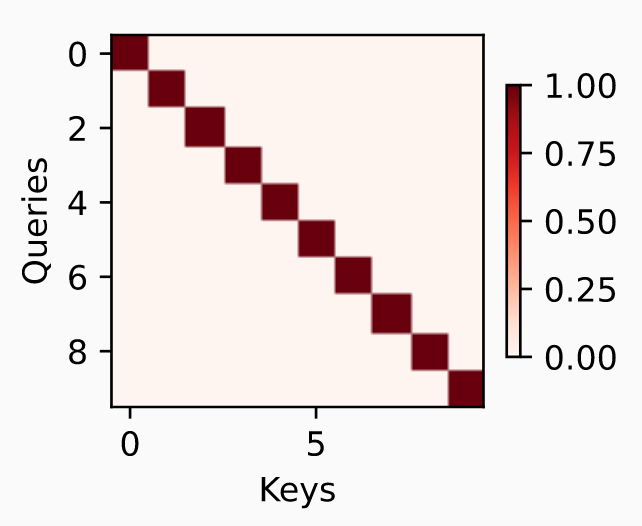
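The three cases can be compared on the regression problem above. The formulas for Cases 1 to 3 appear only as images on the slides, so this sketch assumes the common Nadaraya-Watson illustration (as in *Dive into Deep Learning*). Case 1 predicts the mean of all \(y_i\). Cases 2 and 3 use Gaussian-kernel scores \(a(x, x_i) = -\tfrac{1}{2}((x - x_i)w)^2\), with \(w = 1\) fixed (nonparametric) or \(w\) learnable (parametric). The toy target \(2\sin x + x\) and the function names are hypothetical.

```python
import numpy as np

def average_pooling(x_query, y_train):
    # Case 1: ignore the query and predict the mean of all training outputs
    return np.full(len(x_query), y_train.mean())

def attention_pooling(x_query, x_train, y_train, w=1.0):
    # Cases 2/3: f(x) = sum_i alpha(x, x_i) y_i with scores a(x, x_i) = -((x - x_i) * w)**2 / 2
    scores = -0.5 * ((x_query[:, None] - x_train[None, :]) * w) ** 2   # [n_query, n_train]
    scores -= scores.max(axis=1, keepdims=True)                        # numerical stability
    alpha = np.exp(scores)
    alpha /= alpha.sum(axis=1, keepdims=True)                          # softmax: each row sums to 1
    return alpha @ y_train, alpha

x_train = np.linspace(0.0, 5.0, 50)
y_train = 2.0 * np.sin(x_train) + x_train       # noise-free toy target
y_avg = average_pooling(x_train, y_train)
y_att, alpha = attention_pooling(x_train, x_train, y_train, w=5.0)
print(np.mean((y_avg - y_train) ** 2), np.mean((y_att - y_train) ** 2))
```

A larger \(w\) narrows the kernel so each query attends mostly to nearby keys. In Case 3 this width is what gets learned.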

# GWDA: Transformer


Vanilla Transformer: attention

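Let \(a\) denote the attention scoring function. The output of attention pooling is computed as a weighted sum of the values. Because the attention weights form a probability distribution, this weighted sum is essentially a weighted average.

- Mathematically, suppose we have a query \(q \in \mathbb{R}^{q}\) and \(m\) key-value pairs \(\left(k_{1}, v_{1}\right), \ldots,\left(k_{m}, v_{m}\right)\), where each \(k_{i} \in \mathbb{R}^{k}\) and \(v_{i} \in \mathbb{R}^{v}\). The attention pooling \(f\) is instantiated as a weighted sum of the values:

\[
f\left(q, (k_1, v_1), \ldots, (k_m, v_m)\right) = \sum_{i=1}^{m} \alpha(q, k_i)\, v_i \in \mathbb{R}^{v},
\]

where the attention weight (a scalar) between the query \(q\) and the key \(k_{i}\) is computed by applying a softmax to the attention scoring function \(a\), which maps two vectors to a scalar:

\[
\alpha(q, k_i) = \mathrm{softmax}\left(a(q, k_i)\right) = \frac{\exp\left(a(q, k_i)\right)}{\sum_{j=1}^{m}\exp\left(a(q, k_j)\right)} \in \mathbb{R}.
\]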

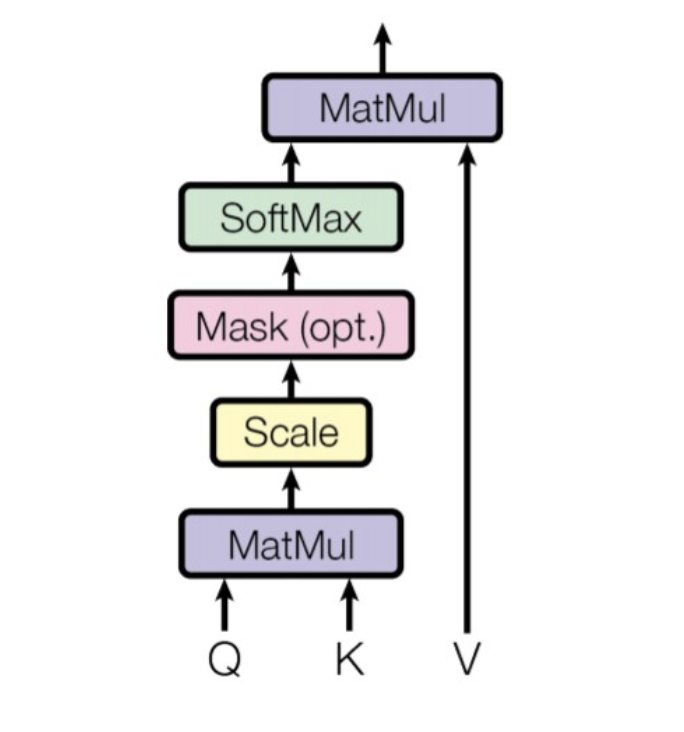

- Scaled Dot-Product Attention

For queries \(Q \in \mathbb{R}^{n \times d}\), keys \(K \in \mathbb{R}^{m \times d}\), and values \(V \in \mathbb{R}^{m \times v}\):

\[
\mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d}}\right)V \in \mathbb{R}^{n \times v}.
\]

Q/K/V ~ [batch_size, len_tokens, dim_features]. Shape example: Q [5, 15, 10], K [5, 13, 10], V [5, 13, 11] → \(QK^{\top}\) [5, 15, 13] → output [5, 15, 11].
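The shape bookkeeping of scaled dot-product attention can be reproduced with a minimal batched NumPy sketch, using the example sizes from the slide (batch 5, 15 queries, 13 keys, \(d = 10\), \(v = 11\)). The function names are hypothetical and this is not a specific library's API.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)    # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    d = Q.shape[-1]
    scores = Q @ np.swapaxes(K, -1, -2) / np.sqrt(d)   # [batch, n, m]
    weights = softmax(scores, axis=-1)                  # each query's weights over the m keys sum to 1
    return weights @ V, weights                         # output [batch, n, v]

rng = np.random.default_rng(0)
Q = rng.normal(size=(5, 15, 10))
K = rng.normal(size=(5, 13, 10))
V = rng.normal(size=(5, 13, 11))
out, weights = scaled_dot_product_attention(Q, K, V)
print(weights.shape, out.shape)
```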

# GWDA: Transformer


Vanilla Transformer: attention

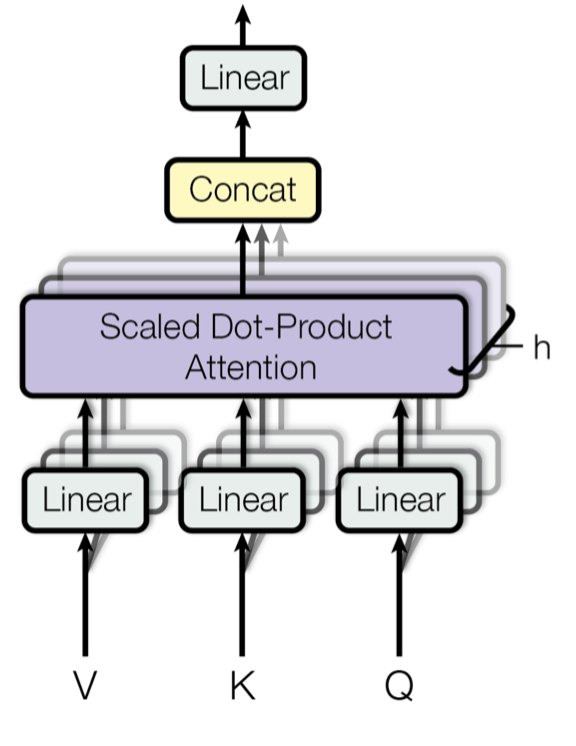

Multi-Head Attention

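Rather than applying a single attention function, the Transformer uses multi-head attention. Each attention head is computed independently, and the outputs of all heads are concatenated to form the final output of the multi-head attention module:

\[
\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O}, \quad \mathrm{head}_i = \mathrm{Attention}\left(QW_i^{Q}, KW_i^{K}, VW_i^{V}\right).
\]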

Multi-head attention combines knowledge of the same attention pooling via different representation subspaces of queries, keys, and values.

Shapes: Q, K, V ~ [batch_size, len_tokens, dim_features] → split into heads: [batch_size * num_heads, len_tokens, dim_features / num_heads] → concatenated output: [batch_size, len_tokens, dim_features].

Self-Attention

In the Transformer encoder, the queries, keys, and values all come from the same input, i.e. \(Q=K=V\).

Figure shapes: [1, 5, 10] (input) and [2, 5, 5] (attention weights).
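The split/concatenate steps of multi-head self-attention are sketched below in NumPy. The projection matrices are random placeholders rather than trained weights, and the function names are hypothetical. With batch_size = 1, 5 tokens, 10 features, and 2 heads, the attention weights have shape [2, 5, 5], which is one reading of the shapes in the figure.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    weights = softmax(Q @ np.swapaxes(K, -1, -2) / np.sqrt(Q.shape[-1]), axis=-1)
    return weights @ V, weights

def split_heads(X, num_heads):
    # [batch_size, len_tokens, dim_features] -> [batch_size * num_heads, len_tokens, dim_features / num_heads]
    b, n, d = X.shape
    X = X.reshape(b, n, num_heads, d // num_heads).transpose(0, 2, 1, 3)
    return X.reshape(b * num_heads, n, d // num_heads)

def merge_heads(X, num_heads):
    # Inverse of split_heads: concatenate the heads back along the feature axis
    bh, n, dh = X.shape
    X = X.reshape(bh // num_heads, num_heads, n, dh).transpose(0, 2, 1, 3)
    return X.reshape(bh // num_heads, n, num_heads * dh)

def multi_head_attention(Q, K, V, W_q, W_k, W_v, W_o, num_heads):
    q = split_heads(Q @ W_q, num_heads)
    k = split_heads(K @ W_k, num_heads)
    v = split_heads(V @ W_v, num_heads)
    out, weights = scaled_dot_product_attention(q, k, v)   # every head attends independently
    return merge_heads(out, num_heads) @ W_o, weights

rng = np.random.default_rng(0)
dim_features, num_heads = 10, 2
W_q, W_k, W_v, W_o = (rng.normal(size=(dim_features, dim_features)) / np.sqrt(dim_features) for _ in range(4))
X = rng.normal(size=(1, 5, dim_features))     # self-attention: Q = K = V = X
out, weights = multi_head_attention(X, X, X, W_q, W_k, W_v, W_o, num_heads)
print(out.shape, weights.shape)
```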

# GWDA: Transformer


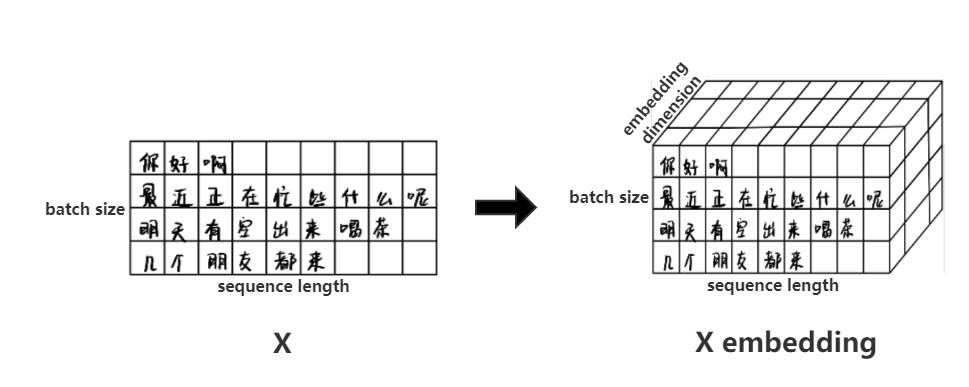

Vanilla Transformer: Embedding & Encoding

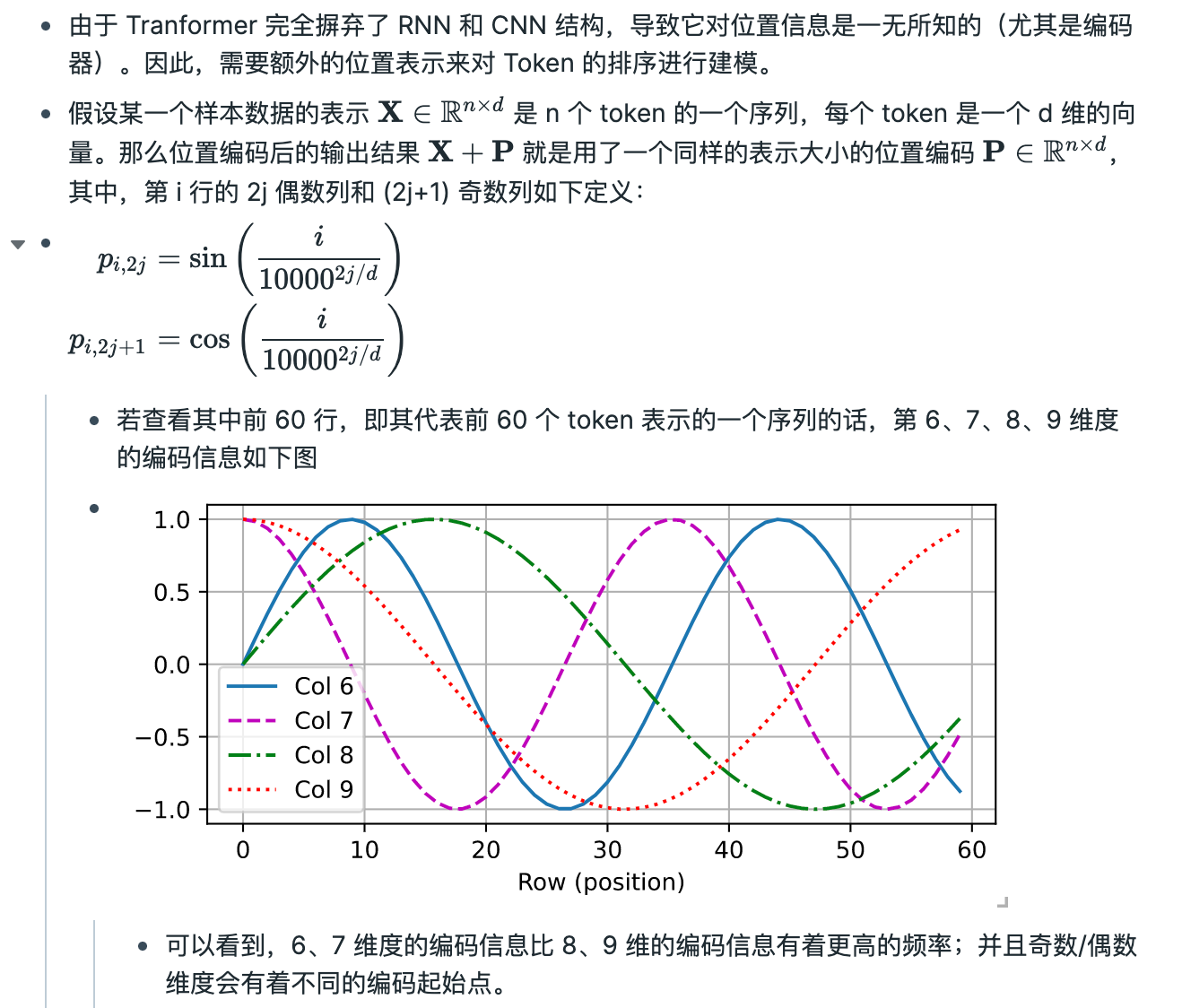

Embedding: token IDs [batch_size, len_tokens] → embedded tokens [batch_size, len_tokens, dim_features].

Positional Encoding: [batch_size, len_tokens, dim_features], added to the embedded tokens.
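The embedding step and the positional encoding can be sketched as follows. The slides do not specify the encoding, so this assumes the sinusoidal one from Vaswani et al. (2017), \(PE_{(pos,2i)}=\sin(pos/10000^{2i/d})\) and \(PE_{(pos,2i+1)}=\cos(pos/10000^{2i/d})\). It also assumes an embedding lookup table (random here, learned in practice) scaled by \(\sqrt{d}\) as in that paper. The vocabulary size, dimensions, and function name are hypothetical.

```python
import numpy as np

def sinusoidal_positional_encoding(len_tokens, dim_features):
    # PE[pos, 2i] = sin(pos / 10000^(2i/d)), PE[pos, 2i+1] = cos(pos / 10000^(2i/d))
    assert dim_features % 2 == 0, "this sketch assumes an even feature dimension"
    pos = np.arange(len_tokens)[:, None]                   # [len_tokens, 1]
    two_i = np.arange(0, dim_features, 2)[None, :]         # [1, dim_features / 2]
    angles = pos / np.power(10000.0, two_i / dim_features)
    pe = np.zeros((len_tokens, dim_features))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

rng = np.random.default_rng(0)
vocab_size, dim_features = 100, 16
embedding_table = rng.normal(size=(vocab_size, dim_features))   # learned in practice
token_ids = rng.integers(0, vocab_size, size=(2, 7))           # [batch_size, len_tokens]
x = embedding_table[token_ids] * np.sqrt(dim_features)         # [batch_size, len_tokens, dim_features]
x = x + sinusoidal_positional_encoding(7, dim_features)        # broadcast over the batch
print(token_ids.shape, x.shape)
```

For GW time series there is no vocabulary. A linear projection of signal segments would take the place of the lookup table, but the positional encoding is added in the same way.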

# GWDA: Transformer


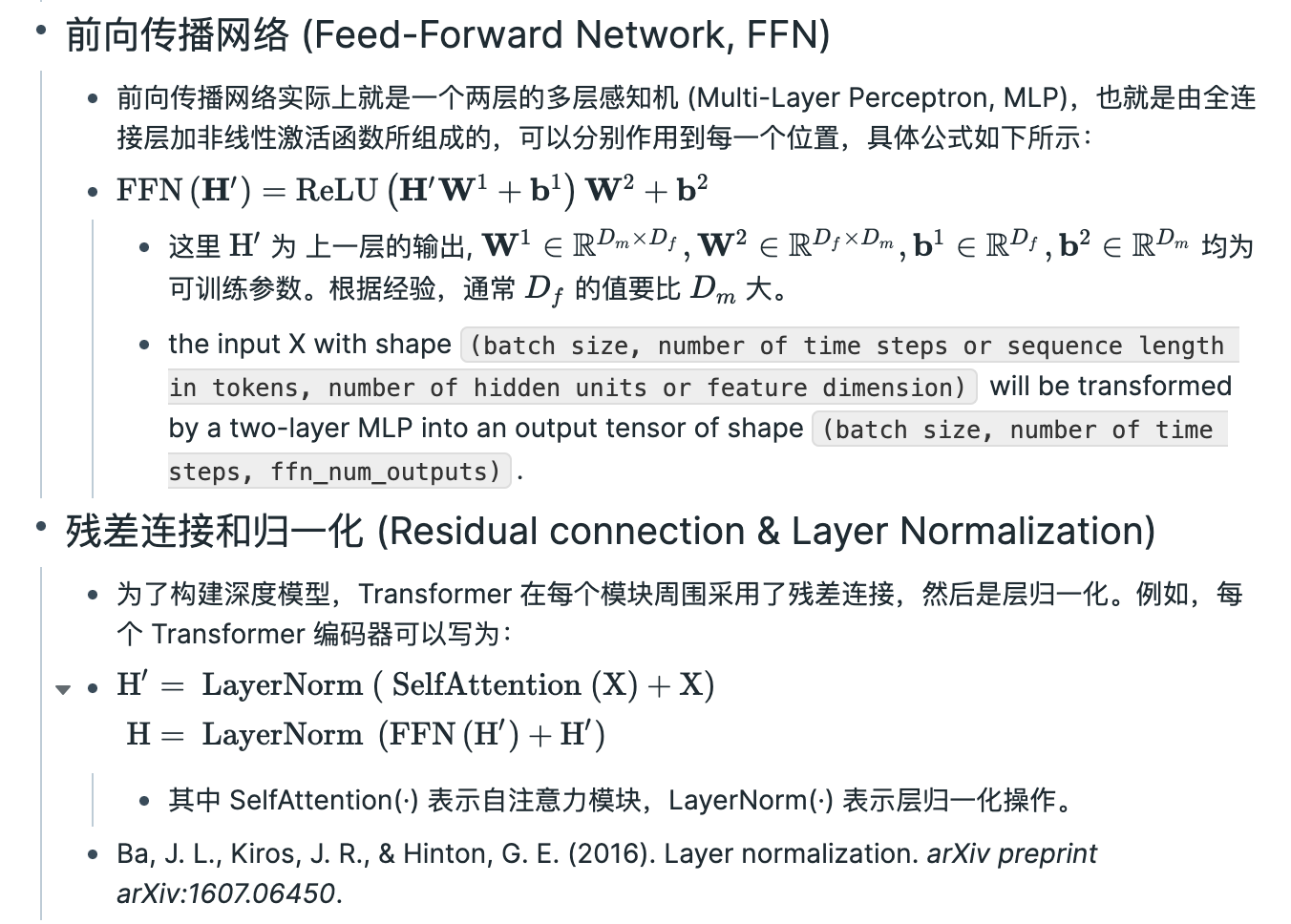

Vanilla Transformer: Modularity

Feed-Forward + Add & Norm: the attention sublayer (inputs Q, K, V) and the feed-forward sublayer both map [batch_size, len_tokens, dim_features] to [batch_size, len_tokens, dim_features].

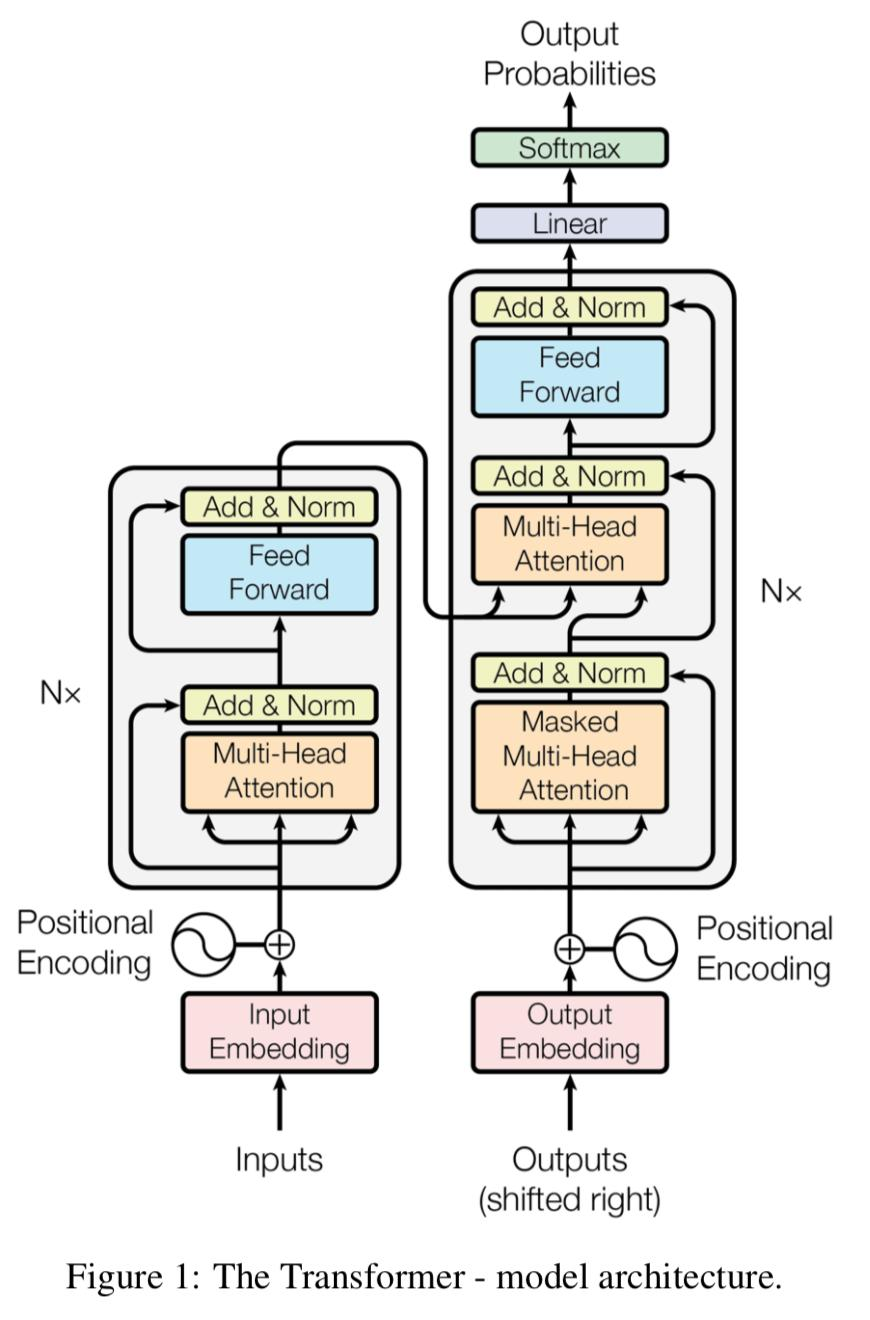

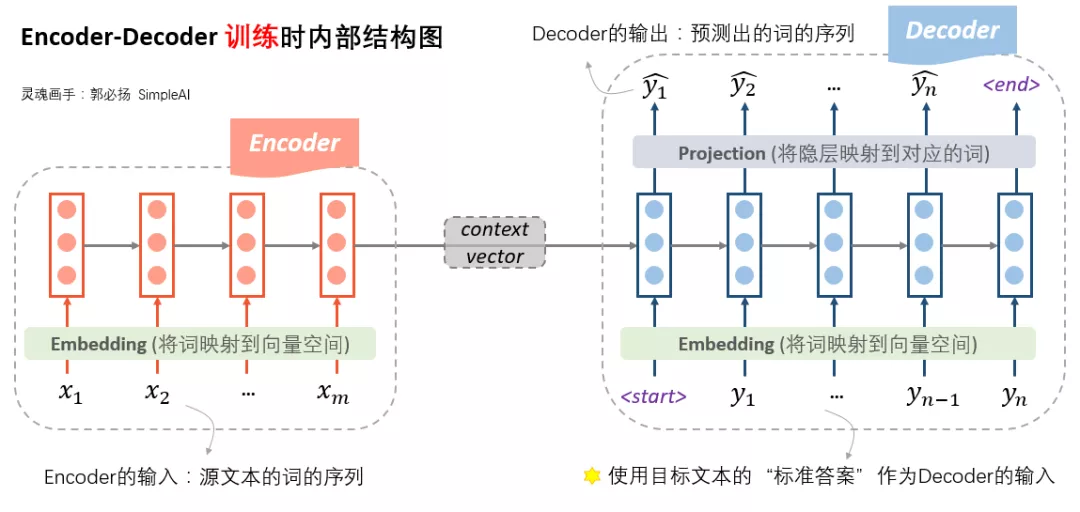

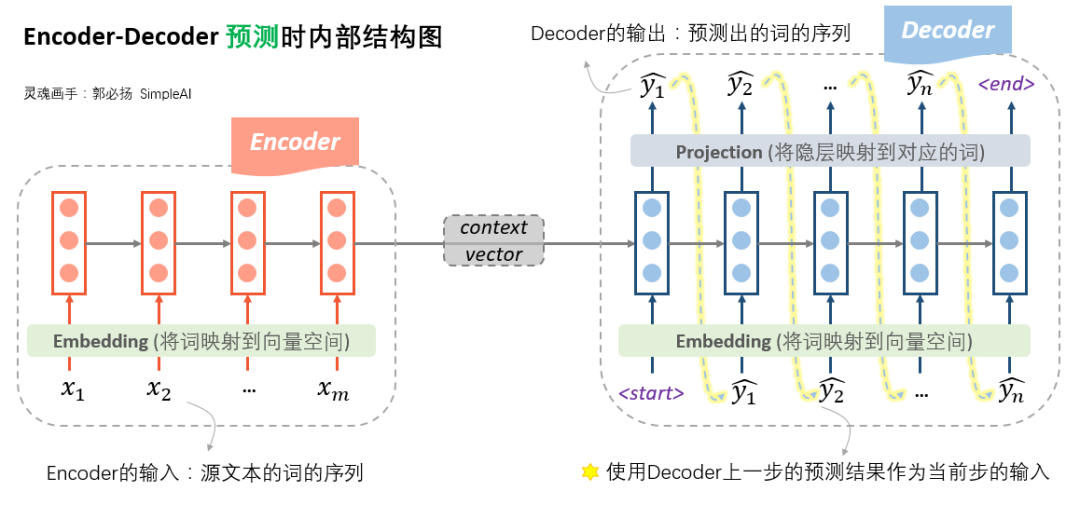
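Because each sublayer preserves the shape, encoder blocks can be stacked. The following minimal sketch shows a position-wise feed-forward sublayer (two-layer ReLU MLP) wrapped in "Add & Norm" (residual connection followed by layer normalization), following Vaswani et al. (2017). The learnable LayerNorm gain and bias are omitted, the weights are random placeholders, and the function names are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Normalize each token over the feature axis (learnable gain/bias omitted in this sketch)
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def feed_forward(x, W1, b1, W2, b2):
    # Position-wise FFN: the same two-layer ReLU MLP is applied to every token
    return np.maximum(0.0, x @ W1 + b1) @ W2 + b2

def add_and_norm(x, sublayer_out):
    # Residual connection followed by layer normalization
    return layer_norm(x + sublayer_out)

rng = np.random.default_rng(0)
batch_size, len_tokens, dim_features, dim_hidden = 2, 5, 8, 32
x = rng.normal(size=(batch_size, len_tokens, dim_features))
W1, b1 = rng.normal(size=(dim_features, dim_hidden)) * 0.1, np.zeros(dim_hidden)
W2, b2 = rng.normal(size=(dim_hidden, dim_features)) * 0.1, np.zeros(dim_features)
out = add_and_norm(x, feed_forward(x, W1, b1, W2, b2))
print(out.shape)
```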

# GWDA: Transformer


Vanilla Transformer: train for translation task

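Train Stage:

- Encoder: source tokens [batch_size, len_tokens1] → embedded [batch_size, len_tokens1, dim_features1] → encoder output [batch_size, len_tokens1, dim_features1], which supplies K and V to the decoder.

- Decoder: target tokens [batch_size, len_tokens2] → embedded [batch_size, len_tokens2, dim_features2], which supplies Q.

- Output: [batch_size, len_tokens2, vocab_size].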
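During training, all len_tokens2 target positions are processed in parallel. In the standard Transformer decoder (Vaswani et al., 2017), a causal mask in the self-attention stops position \(i\) from seeing later tokens. The slide does not show this mask, so treat it as an assumption. The sketch uses the embedded target directly as Q and K, without learned projections, and the function names are hypothetical.

```python
import numpy as np

def causal_mask(len_tokens):
    # True above the diagonal: position i must not attend to later positions j > i
    return np.triu(np.ones((len_tokens, len_tokens), dtype=bool), k=1)

def masked_attention_weights(Q, K):
    d = Q.shape[-1]
    scores = Q @ np.swapaxes(K, -1, -2) / np.sqrt(d)              # [batch, len, len]
    scores = np.where(causal_mask(scores.shape[-1]), -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)         # diagonal is never masked, so max is finite
    weights = np.exp(scores)                                     # exp(-inf) = 0 for masked entries
    return weights / weights.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
tgt = rng.normal(size=(2, 6, 8))     # embedded decoder input [batch_size, len_tokens2, dim]
weights = masked_attention_weights(tgt, tgt)
print(np.round(weights[0], 2))
```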

# GWDA: Transformer


Vanilla Transformer: test for translation task

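Test Stage:

- Encoder: source tokens [1, len_tokens1] → [1, len_tokens1, dim_features1], which supplies K and V.

- Decoder: the target sequence grows one token at a time, [1, 1], [1, 2], ..., embedded as [1, len_tokens2, dim_features2], which supplies Q.

- Output per step: [1, 1, vocab_size], the scores for the next token.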
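The test-stage loop can be written as greedy autoregressive decoding. In this sketch, `step_fn` is a hypothetical stand-in for a trained Transformer that returns logits of shape [1, t, vocab_size]. Only the last position is used, matching the [1, 1, vocab_size] per-step output. `toy_step_fn` is a dummy model that predicts "previous token + 1", included purely so the loop runs. Greedy selection is one choice among several; beam search is another.

```python
import numpy as np

def greedy_decode(step_fn, src_ids, bos_id, eos_id, max_len):
    """step_fn(src_ids [1, len1], tgt_ids [1, t]) -> logits [1, t, vocab_size]."""
    tgt = np.array([[bos_id]])                               # [1, 1]
    for _ in range(max_len - 1):
        logits = step_fn(src_ids, tgt)                       # [1, t, vocab_size]
        next_id = int(np.argmax(logits[0, -1]))              # only the newest position matters
        tgt = np.concatenate([tgt, [[next_id]]], axis=1)     # [1, t + 1]
        if next_id == eos_id:
            break
    return tgt

def toy_step_fn(src_ids, tgt_ids, vocab_size=10):
    # Dummy model: always predicts (last token + 1) mod vocab_size
    logits = np.zeros((1, tgt_ids.shape[1], vocab_size))
    logits[0, -1, (tgt_ids[0, -1] + 1) % vocab_size] = 1.0
    return logits

src = np.array([[7, 3, 9]])                                  # [1, len_tokens1]
out = greedy_decode(toy_step_fn, src, bos_id=0, eos_id=4, max_len=10)
print(out)
```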

Insights

-

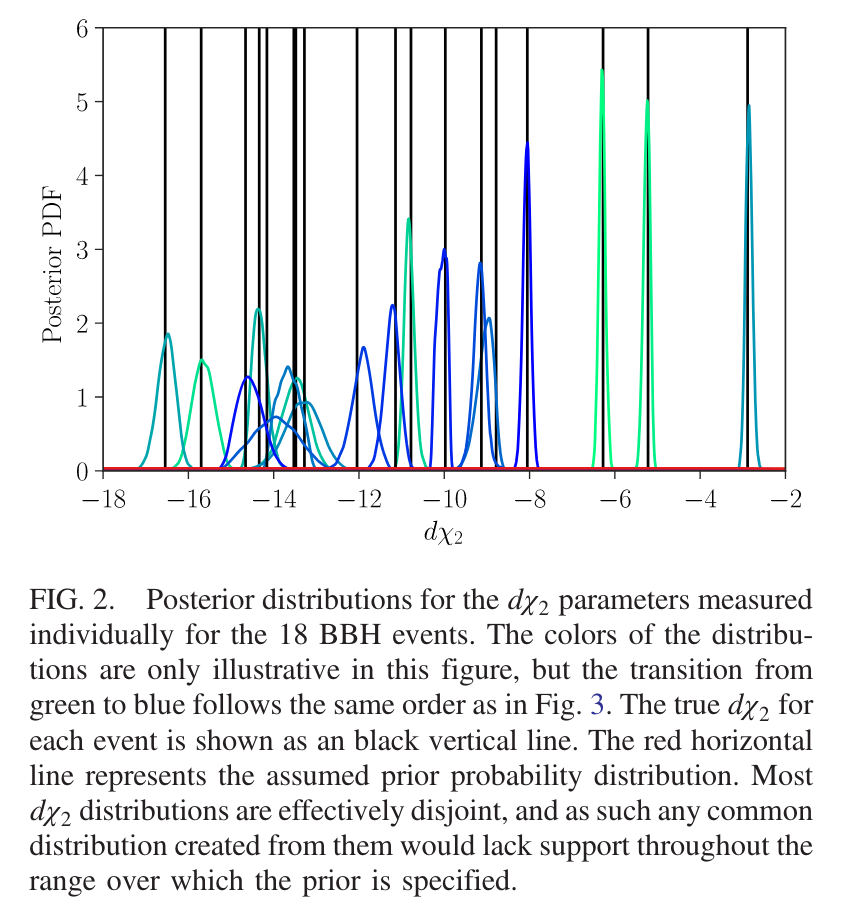

AI serves as a valuable tool in gravitational wave astronomy:

(Big data & Computational Complexity)- Enhancing data analysis,

- Noise reduction, and

- Parameter estimation.

- It streamlines the research process and allows scientists to focus on the most relevant information.

-

Beyond a Tool: AI transcends its role as a mere tool by enabling scientific discovery in GW astronomy.

- Characterization of GW signals involves

- Exploring beyond the scope of GR ,

- Enabling real-time inference

- Test of GR

- Tighter parameter constraints of variance

- Guaranteed exact coverage

- "Curse of Dimensionality" in inference

- Overlapping signal

- Hierarchical Bayesian Analysis

- ...

- Characterization of GW signals involves

Figure labels: GW170817, GW190412, GW190814. Reference: PRD 101, no. 10 (2020): 104003.

# GWDA: Insights


arXiv:2305.18528 (ICML 2023)


# GWDA: Insights


Combining inferences from multiple sources


# GWDA: Insights

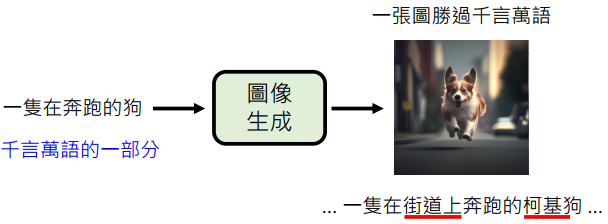

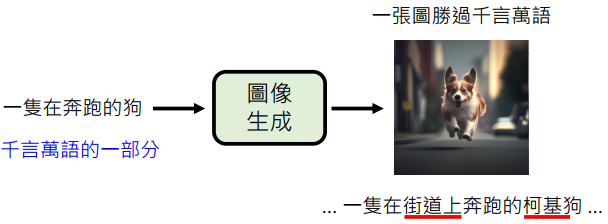

AI for Science: GW Astronomy

# GWDA: AI4Sci

- Exploring the importance of understanding how AI models make predictions in scientific research.

- The critical role of generative models

- Quantifying uncertainty: a key aspect

- Fostering controllable and trustworthy models

Bayes

AI

Credit: Hung-yi Lee (李宏毅)

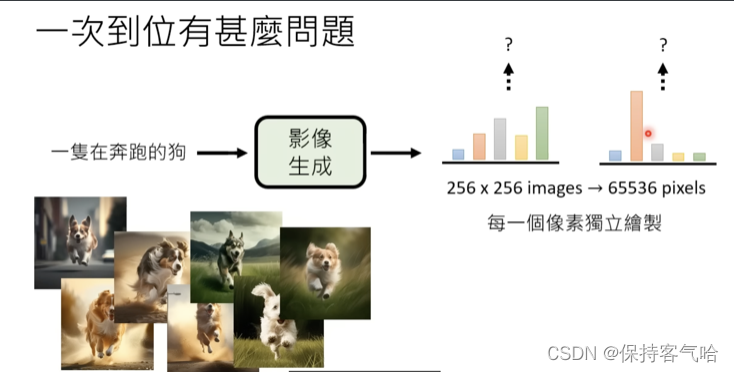

Text-to-image


# GWDA: ML

GWDA: Machine Learning

Gravitational-Wave Data Analysis and Machine Learning