Responsible ML

Interpretable and fair machine learning models

Jeremias Sulam

Responsible ML

- Reproducibility
- Practical Accuracy
- Explainability
- Fairness
- Privacy


E.U.: “right to an explanation” of decisions made on individuals by algorithms [M. E. Kaminski, 2019]

F.D.A.: “interpretability of the model outputs” [FDA Guiding principles]

Explanations in ML

- What parts of the image are important for this prediction?

- What are the subsets of the input so that

-

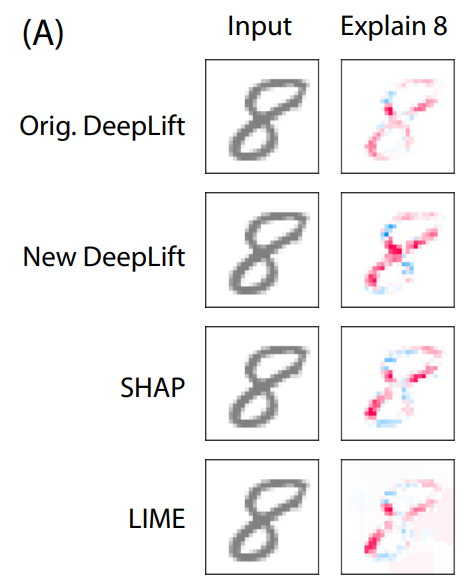

Sensitivity or Gradient-based perturbations

-

Shapley coefficients

-

Variational formulations

LIME [Ribeiro et al, '16], CAM [Zhou et al, '16], Grad-CAM [Selvaraju et al, '17]

Shap [Lundberg & Lee, '17], ...

RDE [Macdonald et al, '19], ...

- Adebayo et al, Sanity checks for saliency maps, 2018
- Ghorbani et al, Interpretation of neural networks is fragile, 2019
- Shah et al, Do input gradients highlight discriminative features? 2021


Shapley Value

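How important is each player for the outcome of the game?

Let $([n], v)$ be an $n$-person cooperative game with characteristic function $v : \mathcal{P}([n]) \to \mathbb{R}$. The Shapley value of player $i$ is

$$\phi_i(v) = \sum_{S \subseteq [n] \setminus \{i\}} \frac{|S|!\,(n - |S| - 1)!}{n!} \big[ v(S \cup \{i\}) - v(S) \big],$$

where $v(S \cup \{i\}) - v(S)$ is the marginal contribution of player $i$ with coalition $S$. It satisfies:

- efficiency
- nullity
- symmetry

Drawback: exponential complexity, since the sum runs over all $2^{n-1}$ coalitions that exclude $i$.

Lloyd S Shapley. A value for n-person games. Contributions to the Theory of Games, 2(28):307–317, 1953.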
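The Shapley value can be evaluated directly by enumerating, for each player $i$, every coalition $S$ that excludes $i$, and weighting the marginal contribution $v(S \cup \{i\}) - v(S)$ by $|S|!\,(n-|S|-1)!/n!$. The sketch below assumes a game is given as a Python function on frozensets of player indices; `shapley_values` and the toy `unanimity_game` are hypothetical names chosen for illustration. The inner loops visit all $2^{n-1}$ coalitions per player, which is exactly the exponential cost that makes exact computation impractical for many features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, FrozenSet, List

# A game maps each coalition (a set of player indices) to a real-valued payoff.
Game = Callable[[FrozenSet[int]], float]


def shapley_values(v: Game, n: int) -> List[float]:
    """Exact Shapley values by enumerating all coalitions S that exclude each player."""
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # |S| ranges over 0, ..., n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for members in combinations(others, size):
                S = frozenset(members)
                # Marginal contribution of player i with coalition S.
                phi[i] += weight * (v(S | {i}) - v(S))
    return phi  # n * 2^(n-1) marginal contributions were evaluated in total


def unanimity_game(S: FrozenSet[int]) -> float:
    """Toy game: payoff 1 only if players 0 and 1 both join; player 2 is a null player."""
    return 1.0 if {0, 1} <= S else 0.0


phi = shapley_values(unanimity_game, n=3)
print(phi)
```

In this toy game players 0 and 1 are interchangeable, so symmetry gives them equal values; player 2 never changes the payoff, so nullity gives it zero; and efficiency forces the values to sum to $v(\{0,1,2\}) - v(\emptyset) = 1$, so each of the first two players receives 0.5.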

Shap-Explanations

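The players are the inputs (features) of a predictor, and the payoffs are the predictor's responses when only a subset of the inputs is available.

Scott Lundberg and Su-In Lee. A Unified Approach to Interpreting Model Predictions, NeurIPS, 2017

Computing these values needs approximations, which are largely ad hoc.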
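One widely used generic approximation (not specific to this talk) is permutation sampling: average each feature's marginal contribution over random orderings of the features instead of over all coalitions. The sketch below assumes that a feature "leaves" the coalition by being set to a fixed baseline value; this masking rule is only one of several choices (conditional or marginal expectations over background data are common alternatives). `permutation_shapley` and `linear_model` are hypothetical names.

```python
import random
from typing import Callable, List, Sequence


def permutation_shapley(f: Callable[[List[float]], float], x: Sequence[float],
                        baseline: Sequence[float], num_perms: int = 200,
                        seed: int = 0) -> List[float]:
    """Monte Carlo Shapley estimate: average marginal contributions over random orderings.

    Features outside the current coalition are replaced by `baseline` (an assumption).
    """
    rng = random.Random(seed)
    n = len(x)
    phi = [0.0] * n
    for _ in range(num_perms):
        order = list(range(n))
        rng.shuffle(order)
        z = list(baseline)  # start from the empty coalition
        previous = f(z)
        for i in order:
            z[i] = x[i]  # player i joins the coalition
            current = f(z)
            phi[i] += current - previous  # marginal contribution of player i
            previous = current
    return [p / num_perms for p in phi]


weights = [2.0, -1.0, 0.5]


def linear_model(z: List[float]) -> float:
    """Toy predictor: a fixed linear function of the features."""
    return sum(w * zi for w, zi in zip(weights, z))


x = [1.0, 3.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = permutation_shapley(linear_model, x, baseline)
print(phi)
```

For a linear model with baseline masking every ordering yields the same marginal contributions, so the estimate equals $w_i (x_i - b_i)$ exactly; for models with interactions the estimate is noisy, and its Monte Carlo error shrinks as `num_perms` grows. Because each ordering's contributions telescope to $f(x) - f(b)$, efficiency holds for every estimate.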

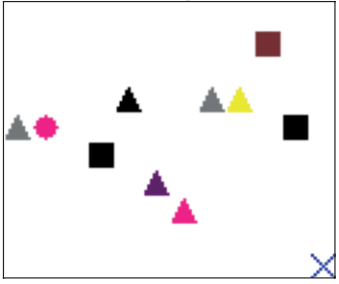

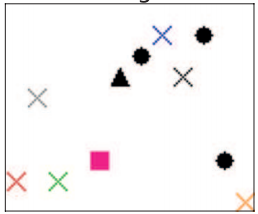

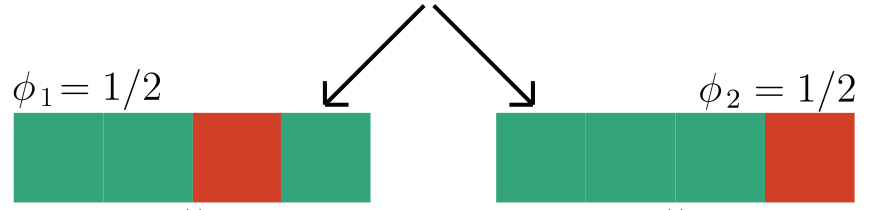

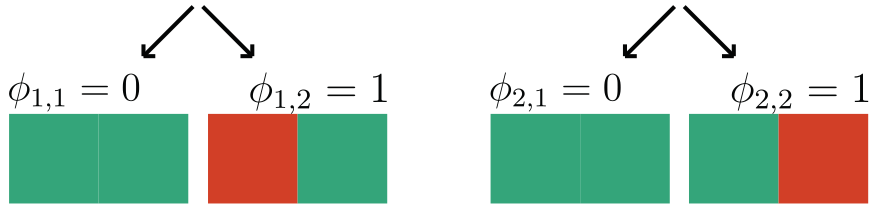

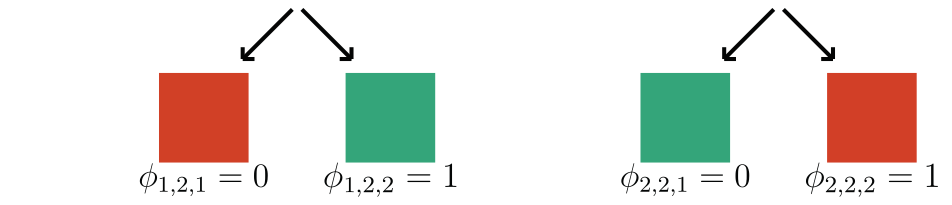

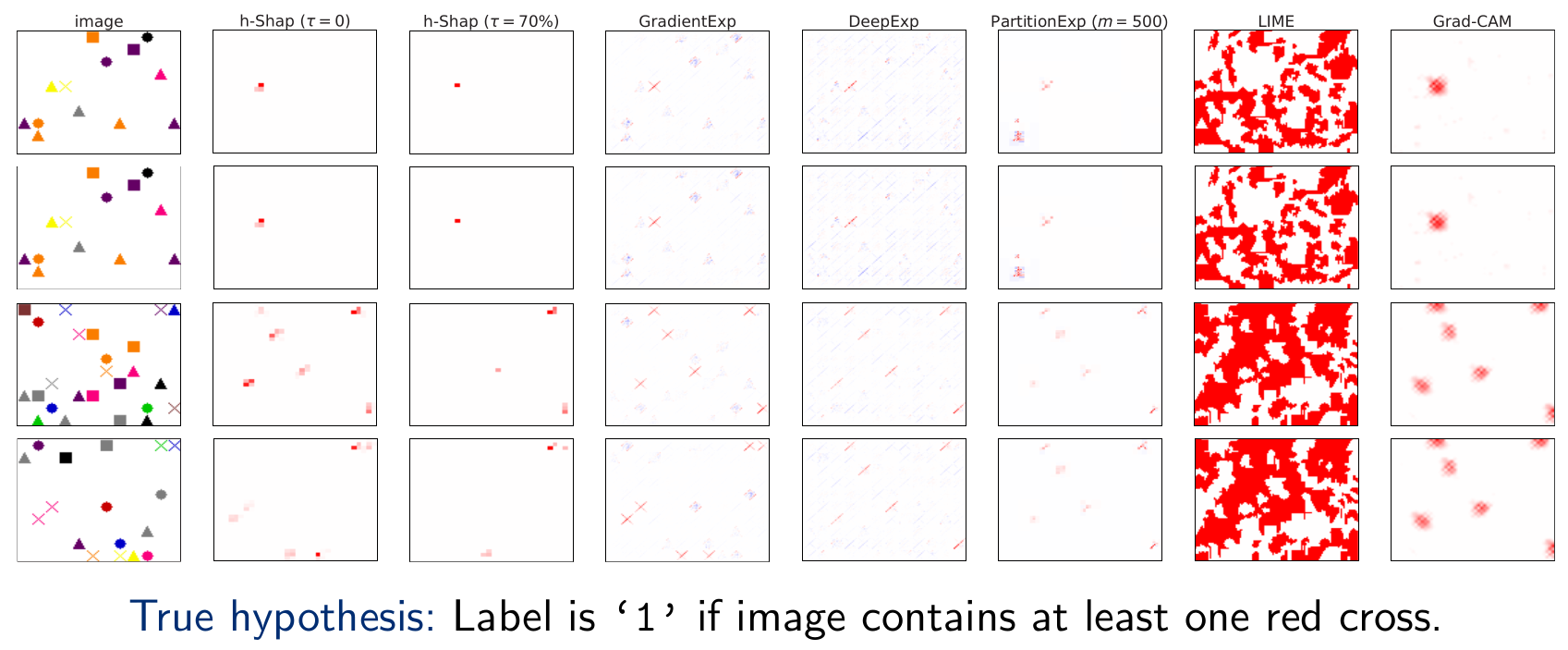

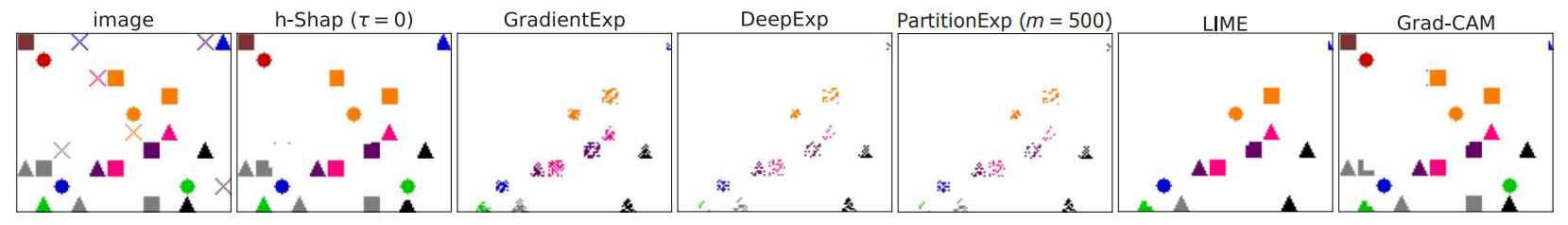

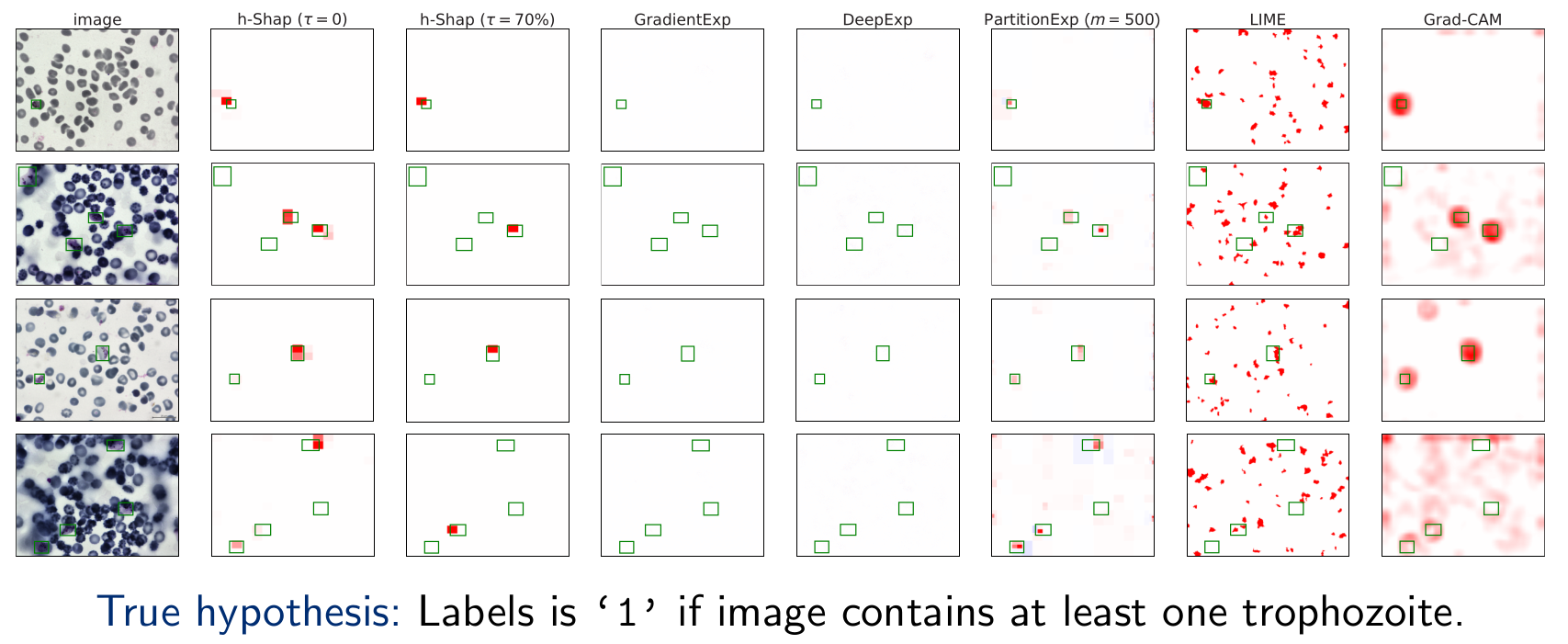

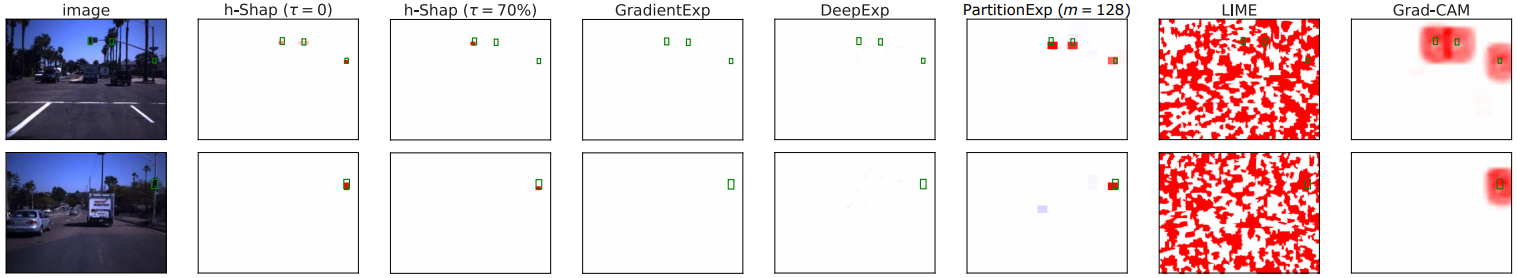

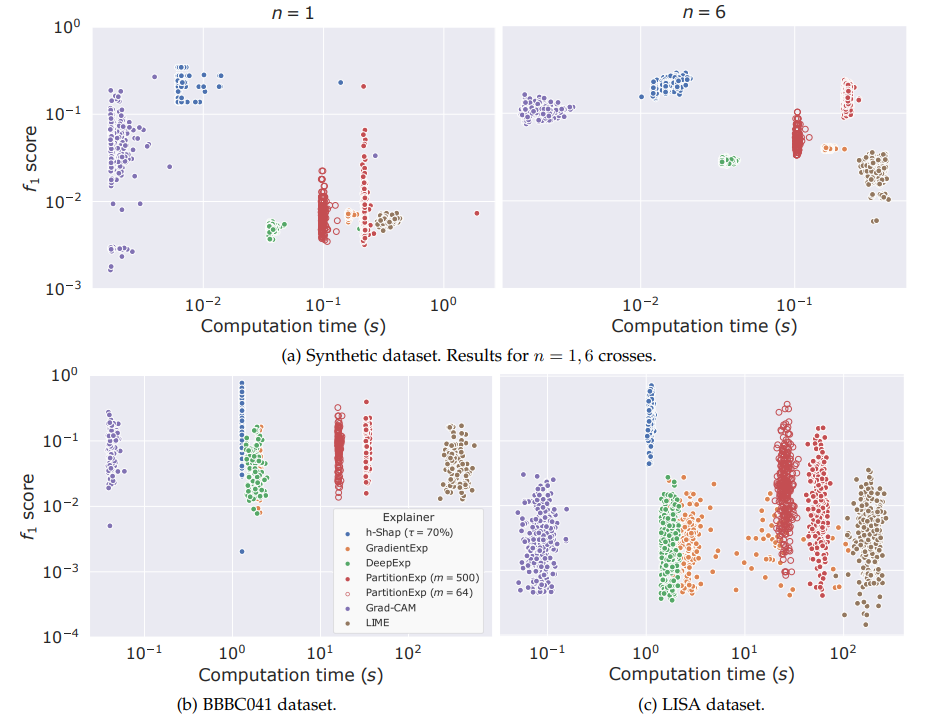

h-Shap: fast hierarchical games

We focus on data with a certain structure:

Example: $f(x) = 1$ if $x$ contains a cross

Theorem 1 (informal):

- h-Shap runs in

- Under A1, h-Shap -> Shapley
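The hierarchical idea can be illustrated with a small sketch: instead of one game over all features, split the input into a few regions, compute exact Shapley values among those regions (cheap, since there are few players), and only descend into regions with positive value. This toy version is a simplification under loudly stated assumptions and not the authors' implementation: it works on a 1-D signal with `branching` equal splits (the talk concerns images), masks everything outside the chosen regions with a baseline of 0, uses "positive Shapley value" as the pruning rule, and requires the length to be a power of `branching`. `hierarchical_shap` and `contains_cross` are hypothetical names.

```python
from itertools import combinations
from math import factorial
from typing import Callable, FrozenSet, List, Sequence, Tuple

Interval = Tuple[int, int]


def shapley(v: Callable[[FrozenSet[int]], float], n: int) -> List[float]:
    """Exact Shapley values of an n-player game (affordable because n is small)."""
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            w = factorial(size) * factorial(n - size - 1) / factorial(n)
            for members in combinations(others, size):
                coalition = frozenset(members)
                phi[i] += w * (v(coalition | {i}) - v(coalition))
    return phi


def hierarchical_shap(f: Callable[[List[float]], float], x: Sequence[float],
                      branching: int = 2, min_size: int = 1,
                      baseline: float = 0.0) -> List[Interval]:
    """Return leaf intervals reached by descending only into children with positive
    Shapley value. Assumes len(x) is a power of `branching` (hypothetical sketch)."""
    important: List[Interval] = []

    def explore(lo: int, hi: int) -> None:
        step = (hi - lo) // branching
        children = [(lo + k * step, lo + (k + 1) * step) for k in range(branching)]

        def v(coalition: FrozenSet[int]) -> float:
            # Keep only the children in the coalition; everything else is masked.
            z = [baseline] * len(x)
            for k in coalition:
                a, b = children[k]
                z[a:b] = x[a:b]
            return f(z)

        for (a, b), phi_k in zip(children, shapley(v, branching)):
            if phi_k > 0:  # prune children that do not contribute
                if b - a <= min_size:
                    important.append((a, b))
                else:
                    explore(a, b)

    explore(0, len(x))
    return important


def contains_cross(z: List[float]) -> float:
    """Toy binary predictor: 1 if any entry is 'on' (stands in for 'contains a cross')."""
    return 1.0 if any(value > 0 for value in z) else 0.0


signal = [0, 1, 0, 0, 0, 1, 0, 0]
print(hierarchical_shap(contains_cross, signal))
```

In this sketch, when only a few regions carry signal, the number of small games grows with the number of important regions times the depth of the hierarchy, rather than exponentially in the number of features.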


Fast hierarchical games for image explanations, Teneggi, Luster & Sulam, IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022

Jacopo T. (JHU), Alex L. (EPFL)

From Shapley back to Pearson

You are telling me that pixels play games?

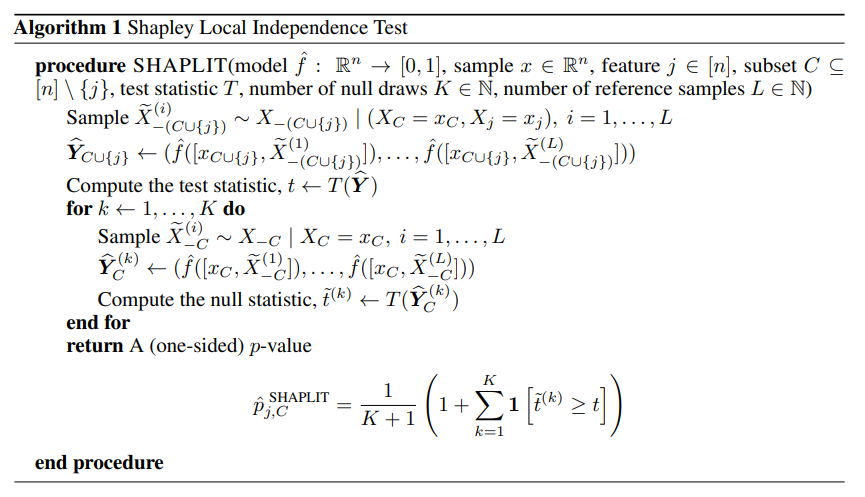

Formal Feature Importance

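[Candes et al, 2018]: feature $X_i$ is important for $Y$ if the conditional independence null $H_0^i:\; Y \perp\!\!\!\perp X_i \mid X_{-i}$ is false.

There exists a procedure that tests for this null and returns a valid p-value, i.e. $\mathbb{P}_{H_0^i}(p_i \le \alpha) \le \alpha$ for all $\alpha \in [0, 1]$.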
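One procedure of this kind is the conditional randomization test (CRT) from the model-X framework of Candès et al.: resample $X_i$ from its conditional distribution given $X_{-i}$, recompute a test statistic, and report the rank of the observed statistic among the resampled ones. The sketch below assumes that conditional distribution is known and can be sampled (here the toy features are independent standard Gaussians, so it is simply $\mathcal{N}(0, 1)$); `crt_pvalue`, `sample_standard_normal`, and the statistic $|\langle y, x_i \rangle|$ are illustrative choices, not the talk's method.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def crt_pvalue(X: Sequence[Row], y: Sequence[float], i: int,
               sample_xi: Callable[[Row, random.Random], float],
               num_resamples: int = 200, seed: int = 0) -> float:
    """CRT p-value for H0: Y independent of X_i given X_{-i}.

    `sample_xi(row, rng)` must draw X_i from its conditional law given the other
    entries of `row` (assumed known, as in the model-X setting).
    """
    rng = random.Random(seed)

    def statistic(column: Sequence[float]) -> float:
        # Any statistic keeps the test valid; |<y, x_i>| has power against linear effects.
        return abs(sum(yk * ck for yk, ck in zip(y, column)))

    t_obs = statistic([row[i] for row in X])
    exceed = 0
    for _ in range(num_resamples):
        resampled = [sample_xi(row, rng) for row in X]
        if statistic(resampled) >= t_obs:
            exceed += 1
    # The +1 in numerator and denominator makes the p-value valid in finite samples.
    return (1 + exceed) / (1 + num_resamples)


# Toy data: three independent standard Gaussian features; Y depends only on X_0.
gen = random.Random(1)
X = [[gen.gauss(0.0, 1.0) for _ in range(3)] for _ in range(200)]
y = [2.0 * row[0] + gen.gauss(0.0, 1.0) for row in X]


def sample_standard_normal(row: Row, rng: random.Random) -> float:
    """Features are independent here, so X_i | X_{-i} is just N(0, 1)."""
    return rng.gauss(0.0, 1.0)


p_important = crt_pvalue(X, y, 0, sample_standard_normal)
p_null = crt_pvalue(X, y, 1, sample_standard_normal)
print(p_important, p_null)
```

Validity comes from exchangeability: under $H_0^i$ the observed column is just another draw from the same conditional law, so its rank among the resamples is uniform.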

Local Feature Importance

Lemma:


Theorem 2 (informal):

Large values imply importance in a statistical sense:

Given the Shapley coefficient of any feature

Then

and the p-value obtained for , i.e. ,

From Shapley back to Pearson: Hypothesis Testing via the Shapley Value, J Teneggi, B Bharti, Y Romano, J Sulam, arXiv preprint arXiv:2207.07038

Beepul B. (JHU), Yaniv R. (Technion), Jacopo T. (JHU)

What does the Shap Value test for?

Theorem 3 (informal):

Given the Shapley value for the i-th feature, and

Then, under , is a valid p-value and


Partial summary

- Shapley values are popular among practitioners because of their "theoretical (game theoretic) foundations"
- Despite their exponential computational cost, one can often leverage structure in the data to compute or approximate them efficiently
- Unbeknownst to users, these coefficients do convey statistical meaning, with controlled Type I error

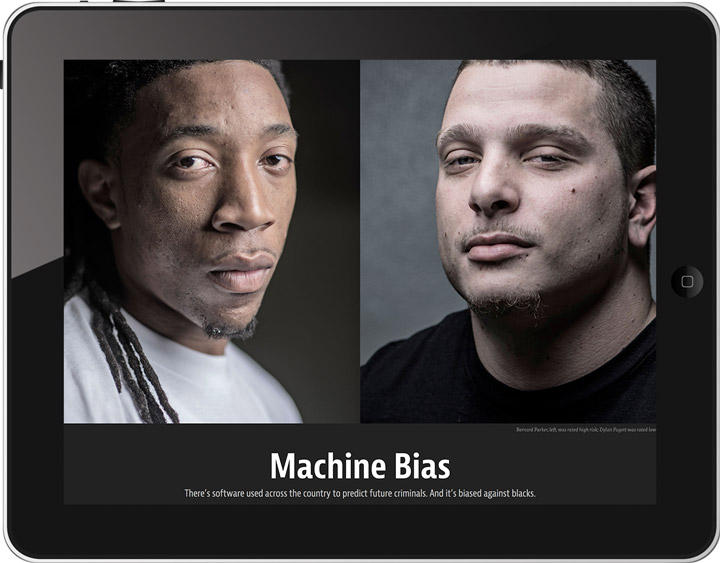

Fairness in ML

Formal Fairness in ML

- Demographic Parity: the prediction should not be correlated with the protected attribute
- Equal Opportunity: TPR should be equal for both groups
- Equalized Odds: TPR and FPR should be equal for both groups

Estimating (and controlling) bias requires a dataset $\{(X, A, Y)\}$ that includes the protected attribute $A$.

What if we only have $\{(X, Y)\}$?!
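With binary labels $Y$, binary predictions $\hat{Y}$ and a binary protected attribute $A \in \{0, 1\}$, the three criteria compare group-wise rates: demographic parity compares $P(\hat{Y}=1 \mid A=a)$, equal opportunity compares the TPRs $P(\hat{Y}=1 \mid Y=1, A=a)$, and equalized odds compares both the TPRs and the FPRs $P(\hat{Y}=1 \mid Y=0, A=a)$. The sketch below computes the empirical gaps; `fairness_gaps` is a hypothetical name, and summarizing equalized odds by the larger of the TPR and FPR gaps is one common convention, assumed here.

```python
from typing import Dict, Optional, Sequence


def _positive_rate(y_pred: Sequence[int], mask: Sequence[bool]) -> float:
    """Fraction of positive predictions among the samples selected by `mask`."""
    selected = [p for p, keep in zip(y_pred, mask) if keep]
    if not selected:
        raise ValueError("empty subgroup: rate is undefined")
    return sum(selected) / len(selected)


def fairness_gaps(y_true: Sequence[int], y_pred: Sequence[int],
                  a: Sequence[int]) -> Dict[str, float]:
    """Empirical group gaps for binary labels, predictions and attribute a in {0, 1}."""

    def rate(group: int, label: Optional[int] = None) -> float:
        mask = [g == group and (label is None or t == label) for g, t in zip(a, y_true)]
        return _positive_rate(y_pred, mask)

    dp_gap = abs(rate(0) - rate(1))         # P(Yhat=1 | A=a)
    tpr_gap = abs(rate(0, 1) - rate(1, 1))  # P(Yhat=1 | Y=1, A=a)
    fpr_gap = abs(rate(0, 0) - rate(1, 0))  # P(Yhat=1 | Y=0, A=a)
    return {
        "demographic_parity": dp_gap,
        "equal_opportunity": tpr_gap,
        # Assumed scalar summary of equalized odds: the larger of the two gaps.
        "equalized_odds": max(tpr_gap, fpr_gap),
    }


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 0]
a = [0, 0, 0, 0, 1, 1, 1, 1]
gaps = fairness_gaps(y_true, y_pred, a)
print(gaps)
```

Note that every gap needs `a` for each sample. Passing predicted attributes $\hat{A} = h(X)$ in place of `a` still runs, but then returns only an estimate of the bias whose error depends on how accurate $h$ is.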


Solution?

- Gupta et al., Proxy fairness, 2018
- Prost et al., Measuring model fairness under noisy covariates, 2021
- Kallus et al., Assessing algorithmic fairness with unobserved protected class using data combination, 2022
- Awasthi et al., Evaluating fairness of machine learning models under uncertain and incomplete information, 2021

Fair Predictors with inaccurate sensitive attributes

Assumption 2:

e.g. if h and f use features that are conditionally independent

* only needs the base rates

Can I make

Theorem 4:

Under Assumption 2,

where k is an analytical function of the error of h and the base rates

Controlling for Fairness with predicted attributes

Theorem 5:

Algorithm (informal)

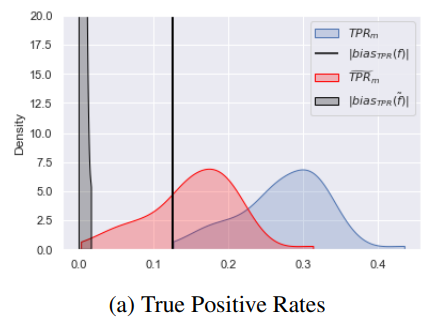

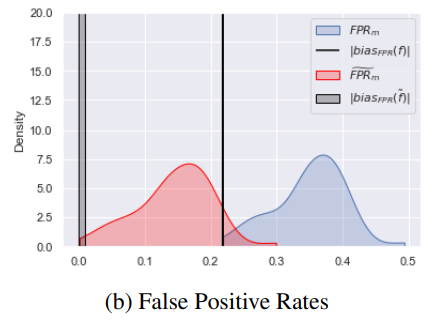

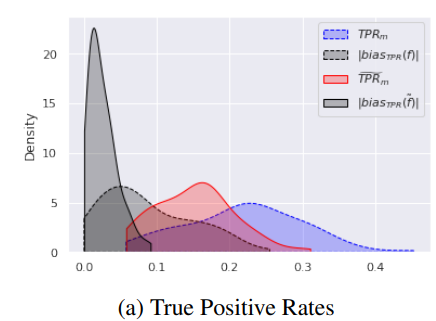

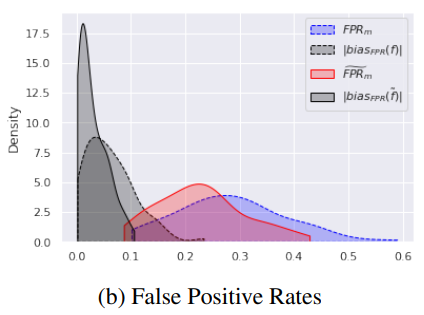

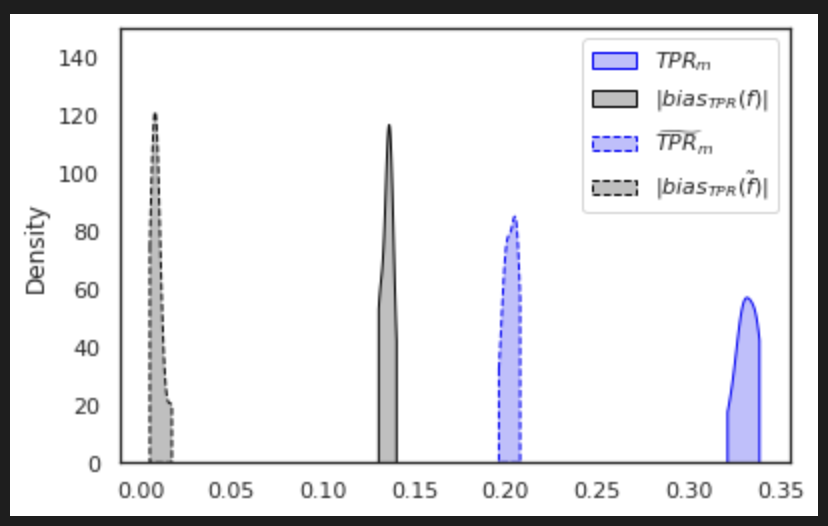

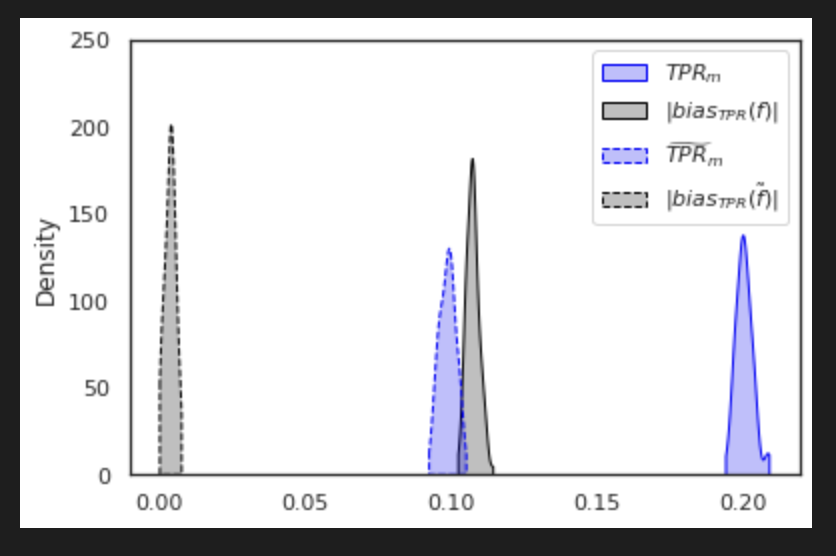

Experiments


FIFA 20 dataset

A: nationality = {Argentina, England}

Y: salary = {above median, below median}

X: player features = {quality score, name}


Estimating and Controlling for Fairness via Sensitive Attribute Predictors, B Bharti, P Yi, J Sulam, arXiv preprint arXiv:2207.12497

Beepul B. (JHU), Paul Yi (UMD)

Final Thoughts

- Fairness can be estimated and controlled for even when the data are not fully observed
- There is a huge opportunity to develop methods that rigorously enforce responsible constraints on machine learning predictors