A Generative Model for Volume Rendering

Matthew Berger, Jixian Li, and Joshua A. Levine

Introduction

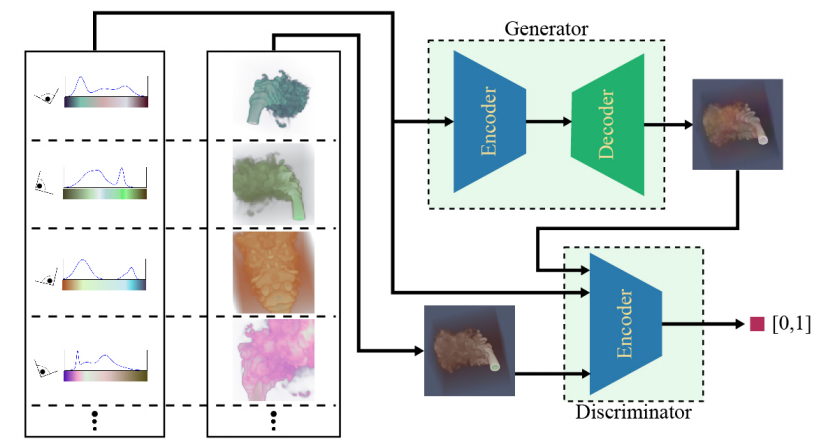

Similar to InSituNet

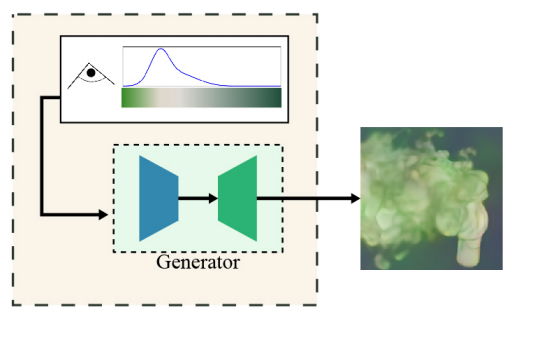

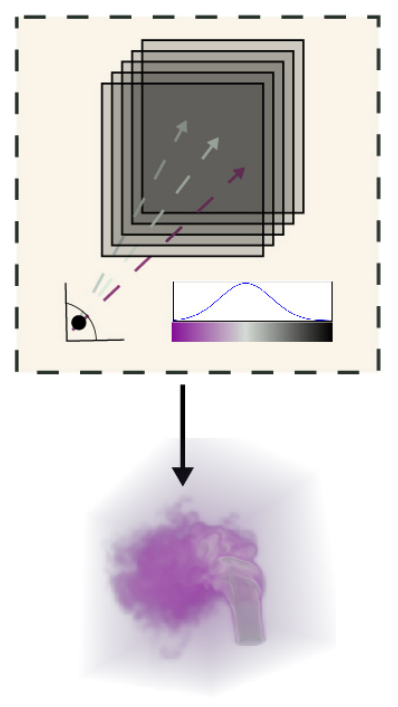

The general idea is to take a "view" and a discretized transfer function as input and produce a volume-rendered image.
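To make "discretized transfer function" concrete, here is a minimal sketch that samples a 1D opacity transfer function at evenly spaced scalar values. The piecewise-linear form, the control points, and the name `discretize_transfer_function` are assumptions for illustration; the slides do not say how the transfer function is parameterized or how many bins are used.

```python
def discretize_transfer_function(control_points, n_bins):
    """Sample a piecewise-linear opacity transfer function at n_bins
    evenly spaced scalar values in [0, 1].

    control_points: list of (scalar, opacity) pairs with distinct scalars
    (a hypothetical parameterization, not taken from the paper).
    """
    pts = sorted(control_points)
    samples = []
    for i in range(n_bins):
        s = i / (n_bins - 1)  # evenly spaced scalar value
        if s <= pts[0][0]:
            # Clamp to the first control point.
            samples.append(pts[0][1])
            continue
        if s >= pts[-1][0]:
            # Clamp to the last control point.
            samples.append(pts[-1][1])
            continue
        # Linear interpolation inside the segment that contains s.
        for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
            if x0 <= s <= x1:
                t = (s - x0) / (x1 - x0)
                samples.append(y0 + t * (y1 - y0))
                break
    return samples


# A single "tent" centered on scalar value 0.5, sampled at 5 bins.
tent = discretize_transfer_function([(0.0, 0.0), (0.5, 1.0), (1.0, 0.0)], 5)
print(tent)
```

The resulting fixed-length vector is the kind of input a network can consume directly, alongside the view parameters.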

The network learns a representation of the volume

Introduction

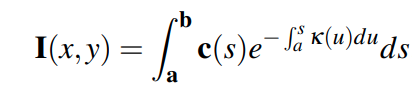

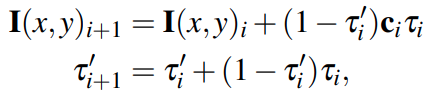

Volume Rendering

The General Idea

Generative Adversarial Networks (GANs) again

\(\displaystyle\min_G \max_D L_{adv}(G, D)\)
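For reference, in the standard (unconditional) GAN formulation the adversarial loss has the form below, where \(x\) is a real image and \(z\) is the generator input. The slides do not spell out \(L_{adv}\), so this is the textbook form rather than the exact variant used here; in this setting the generator input would be the view and transfer function rather than random noise.

\(\displaystyle L_{adv}(G, D) = \mathbb{E}_{x}\left[\log D(x)\right] + \mathbb{E}_{z}\left[\log\left(1 - D(G(z))\right)\right]\)

The discriminator \(D\) maximizes this quantity by scoring real images high and generated images low, while the generator \(G\) minimizes it by producing images that \(D\) scores as real.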

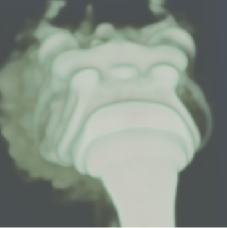

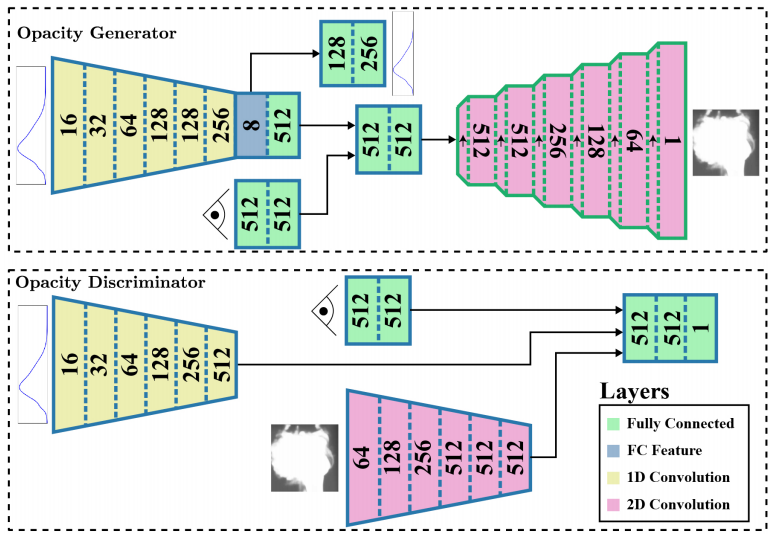

A Tale of Two GANs

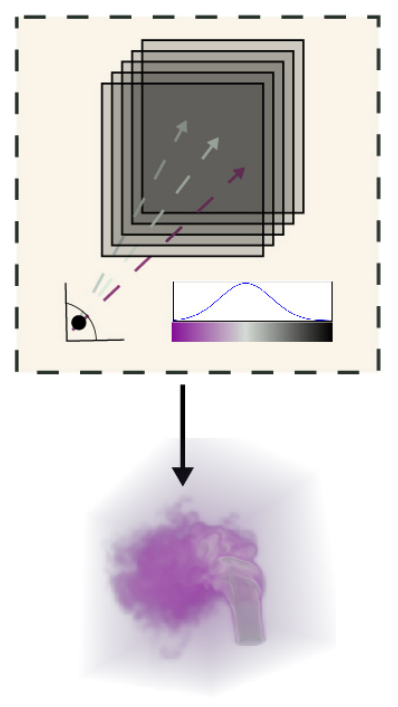

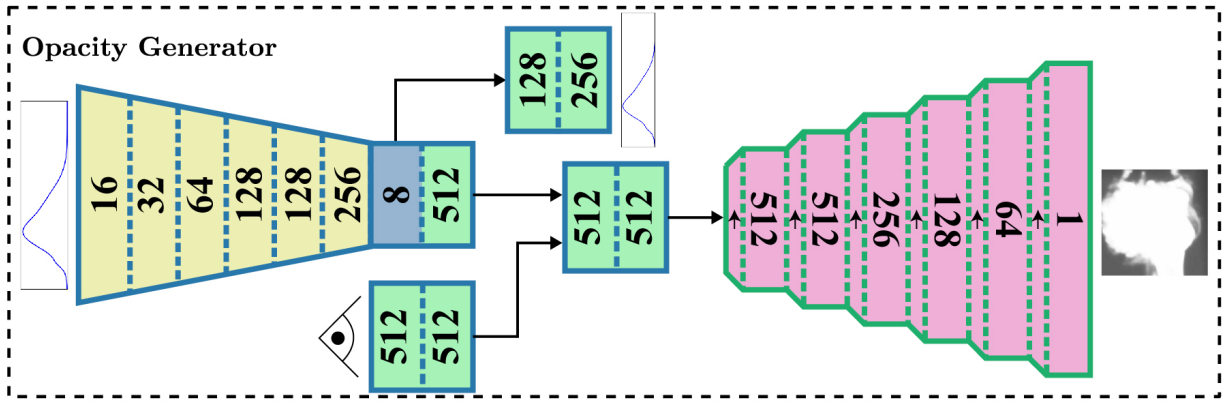

Opacity GAN

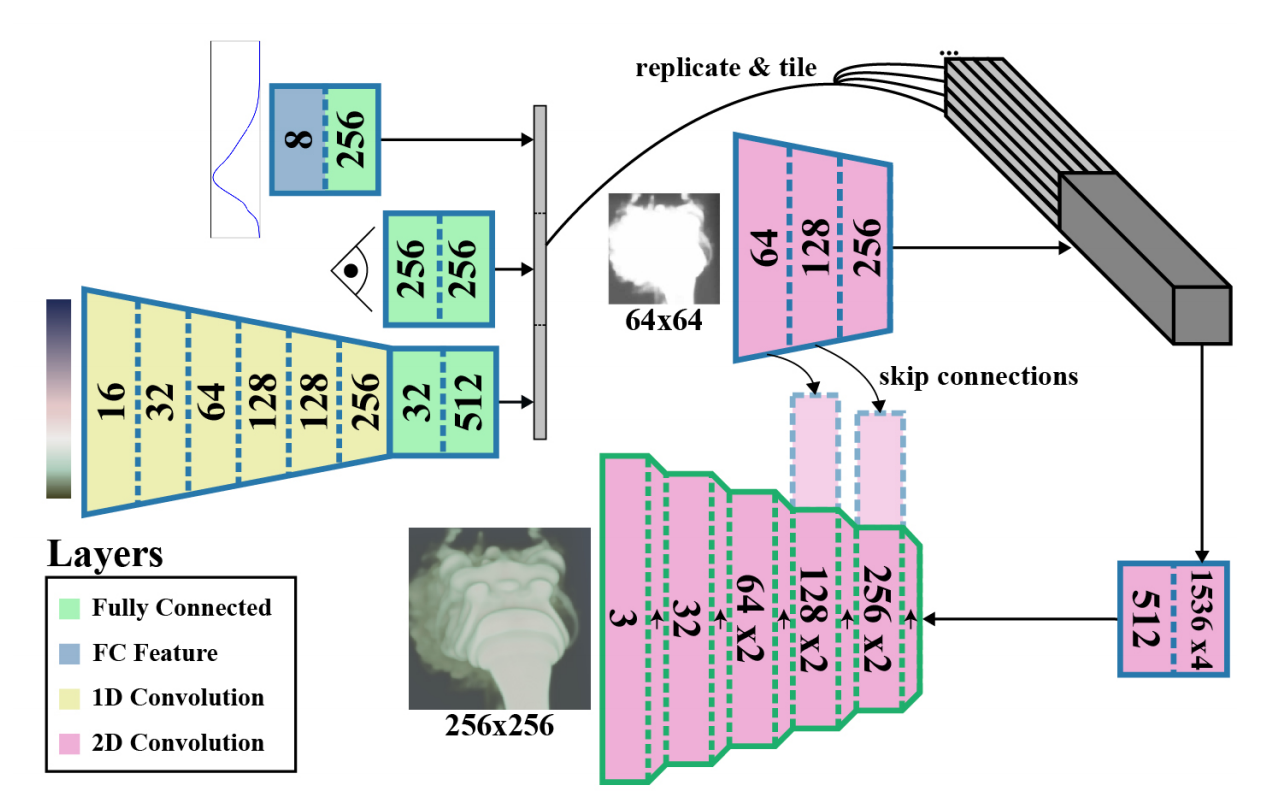

Color GAN

Composing these gives the full generator

input:

output:

A Tale of Two GANs

Why two GANs?

- The complex relationship between parameters makes it hard to stabilize training. This is a common problem for GANs of this structure (largely 'fixed' by Progressive GANs)

- It is relatively easy to train a GAN to generate a low-resolution image, and that training is often stable

- Image-to-image upscaling DCGANs are also quite stable
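The two-stage design above can be sketched as a plain function composition: a first stage produces a low-resolution opacity image, and a second stage upscales it into a color image. Everything below is a placeholder under stated assumptions: the function names, the resolutions, the inputs passed to each stage, and the bodies (which are trivial stand-ins, not learned networks) are all hypothetical.

```python
LOW_RES = 4  # hypothetical low-resolution side length
SCALE = 2    # hypothetical upscaling factor of the second stage


def opacity_generator(view, opacity_tf):
    """Stand-in for the opacity GAN generator.

    Returns a LOW_RES x LOW_RES opacity image. The constant fill is a
    placeholder, not a learned mapping.
    """
    level = sum(opacity_tf) / len(opacity_tf)
    return [[level for _ in range(LOW_RES)] for _ in range(LOW_RES)]


def color_generator(view, opacity_tf, color_tf, opacity_image):
    """Stand-in for the color GAN generator.

    Upscales the opacity image by SCALE (nearest neighbor) and tints it
    with the first color of the transfer function.
    """
    r, g, b = color_tf[0]
    image = []
    for row in opacity_image:
        up_row = []
        for a in row:
            up_row.extend([(r * a, g * a, b * a)] * SCALE)
        image.extend([list(up_row) for _ in range(SCALE)])
    return image


def full_generator(view, opacity_tf, color_tf):
    """Compose the two stages: low-res opacity first, then upscaled color."""
    opacity_image = opacity_generator(view, opacity_tf)
    return color_generator(view, opacity_tf, color_tf, opacity_image)


image = full_generator(
    view=[0.0, 1.0, 0.0, 0.0, 1.0],
    opacity_tf=[0.0, 1.0],
    color_tf=[(1.0, 0.5, 0.0)],
)
print(len(image), len(image[0]))
```

The point of the split is that each stage is an easier, more stable training problem than generating the full-resolution color image in one step.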

Training

Training Data

Acquiring data is relatively easy.

- Fix the model

- A view is randomly chosen

- A color image and opacity image are rendered

view: (sine of azimuth, cosine of azimuth, elevation, in-plane rotation, distance to camera)

200,000 samples generated per model
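A minimal sketch of how a randomly chosen view could be packed into the 5-component vector listed above. The sampling ranges and the function names are assumptions; the slides only give the components. Encoding the azimuth as a sine/cosine pair presumably avoids the discontinuity at the wrap-around, since angles 0 and 2π map to the same point.

```python
import math
import random


def encode_view(azimuth, elevation, in_plane_rotation, distance):
    """Pack camera parameters (angles in radians) into the view vector."""
    return (
        math.sin(azimuth),
        math.cos(azimuth),
        elevation,
        in_plane_rotation,
        distance,
    )


def sample_view(rng):
    """Draw one random view. All ranges here are hypothetical."""
    azimuth = rng.uniform(0.0, 2.0 * math.pi)
    elevation = rng.uniform(-math.pi / 2.0, math.pi / 2.0)
    in_plane_rotation = rng.uniform(-math.pi, math.pi)
    distance = rng.uniform(1.0, 3.0)
    return encode_view(azimuth, elevation, in_plane_rotation, distance)


rng = random.Random(0)
views = [sample_view(rng) for _ in range(3)]
for v in views:
    print(v)
```

Each sampled view would then be handed to the volume renderer to produce the matching color and opacity images for one training sample.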

Training

Procedure

Not much interesting here

Opacity GAN:

- Learning rate = \(2\times10^{-4}\), halved every 5 epochs

- Typical Wasserstein loss

- Unsure about discriminator-generator ratio

Color GAN:

- Learning rate = \(8\times10^{-6}\), halved every 8 epochs

- Color transfer function represented in the CIELAB color space

- Typical DCGAN loss

- Unsure about discriminator-generator ratio
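The two learning-rate schedules above are both step decays. A minimal sketch, assuming epochs are counted from 0 and the rate is halved at the start of every `halve_every`-th epoch (the slides do not say exactly when the halving is applied); `halved_lr` is a hypothetical helper name.

```python
def halved_lr(initial_lr, epoch, halve_every):
    """Learning rate at a given 0-indexed epoch under step decay by 1/2."""
    return initial_lr * 0.5 ** (epoch // halve_every)


# Opacity GAN: 2e-4, halved every 5 epochs.
opacity_lrs = [halved_lr(2e-4, e, 5) for e in range(0, 15, 5)]

# Color GAN: 8e-6, halved every 8 epochs.
color_lrs = [halved_lr(8e-6, e, 8) for e in range(0, 24, 8)]

print(opacity_lrs)
print(color_lrs)
```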

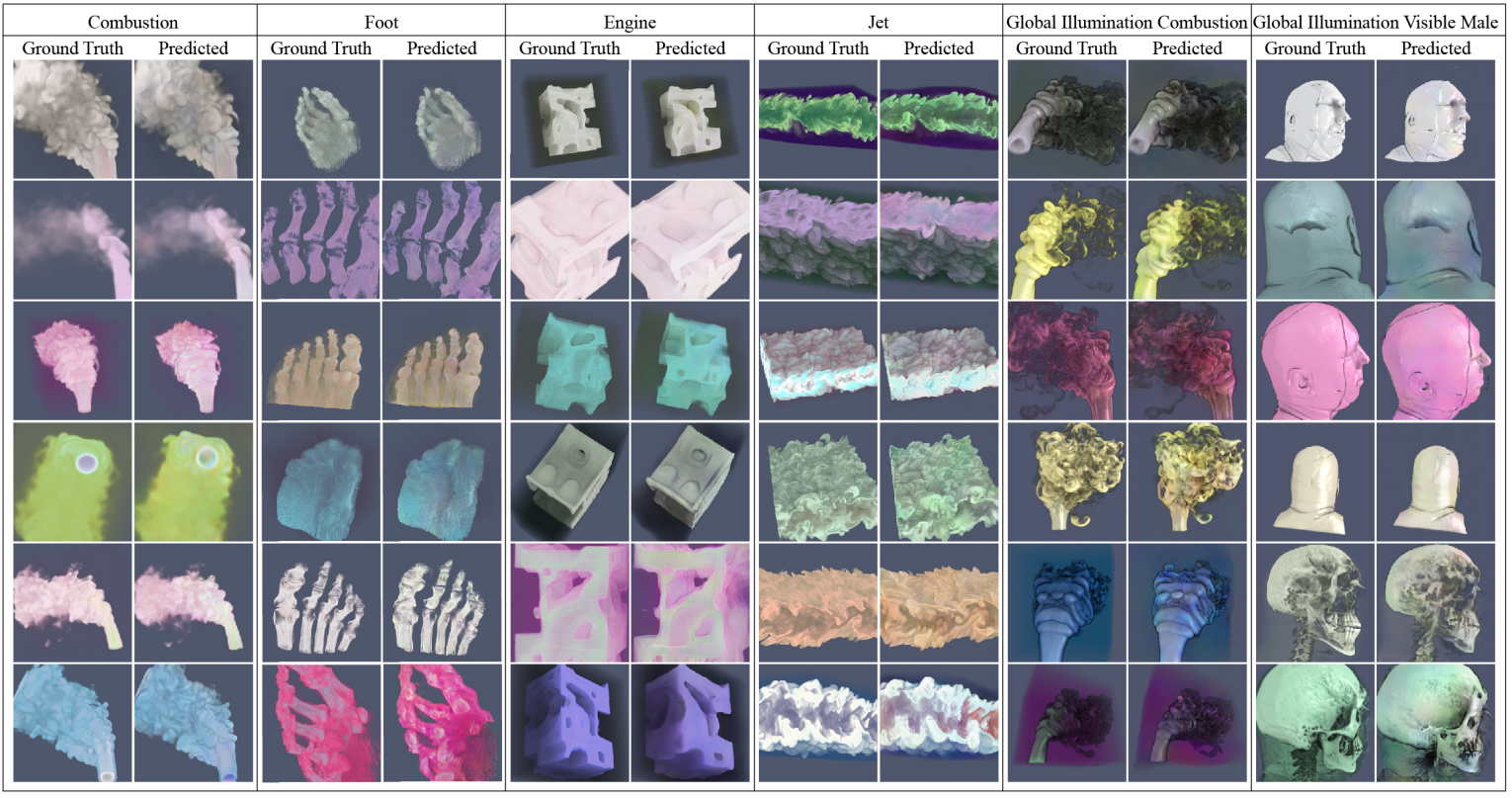

Results

Other Experiments

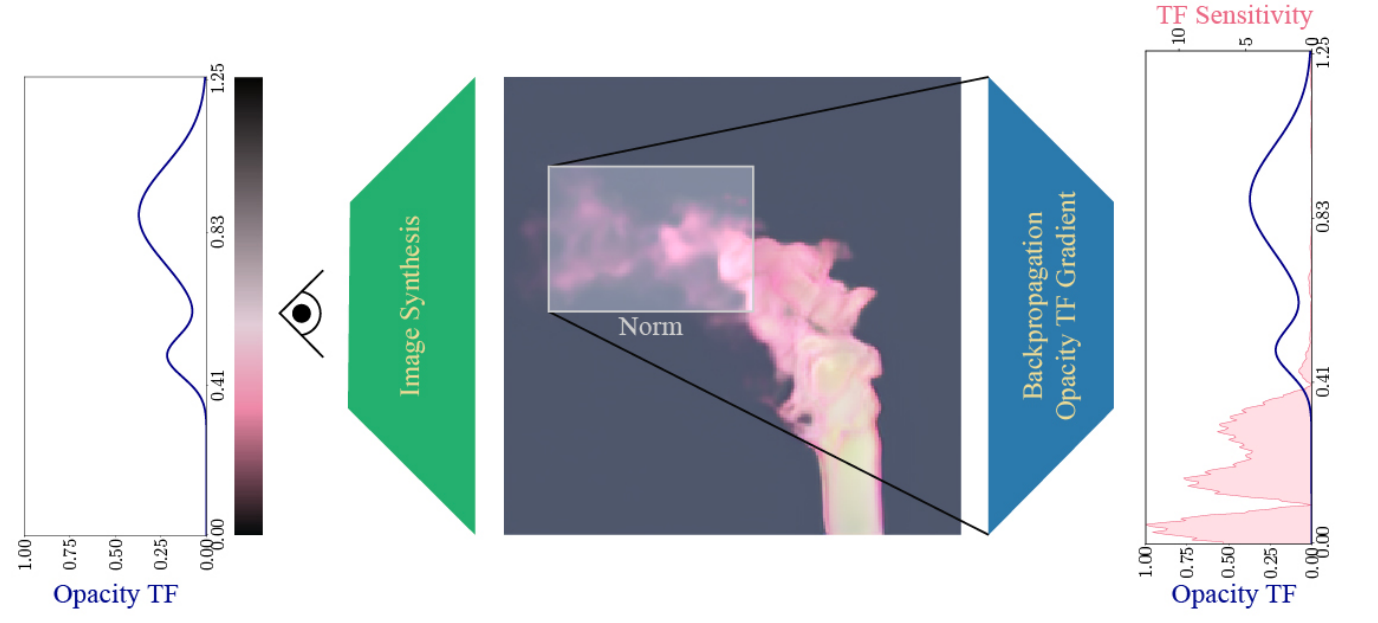

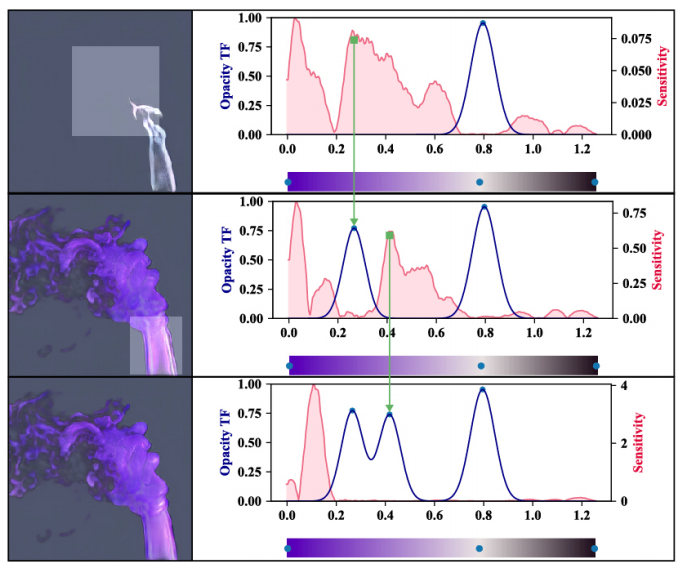

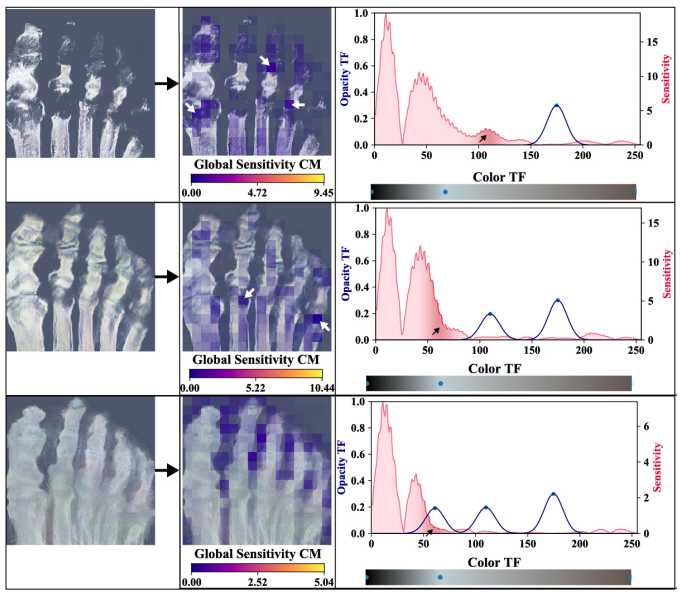

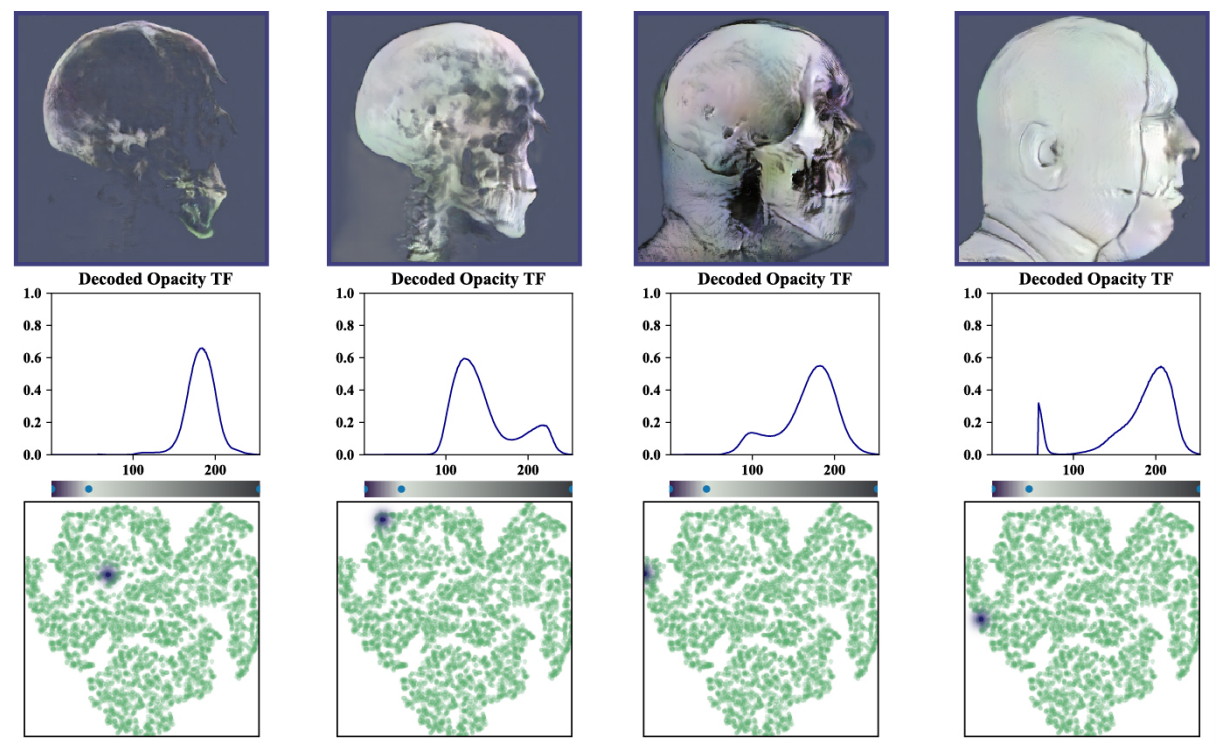

Transfer function sensitivity

Other Experiments

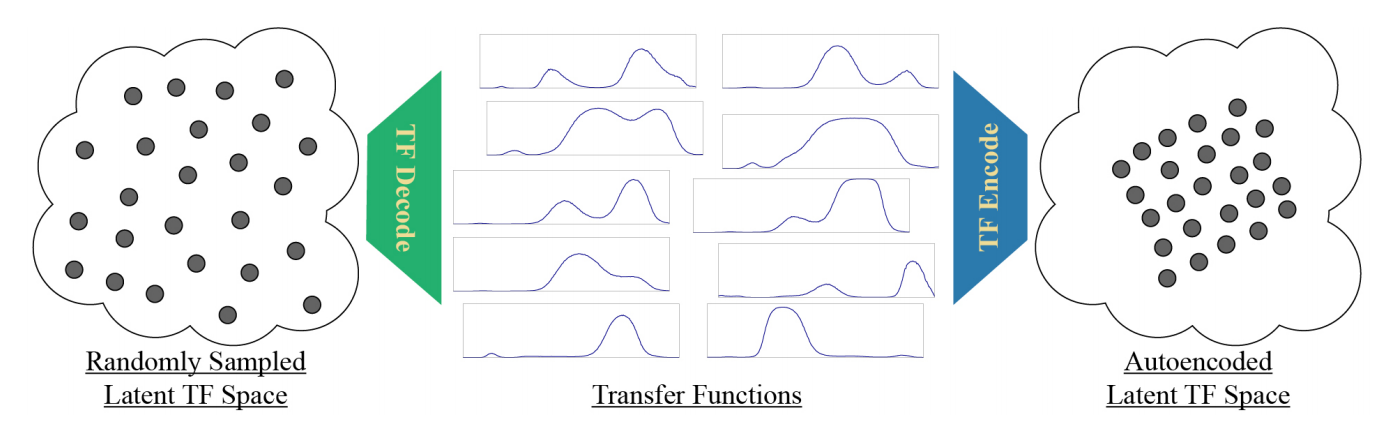

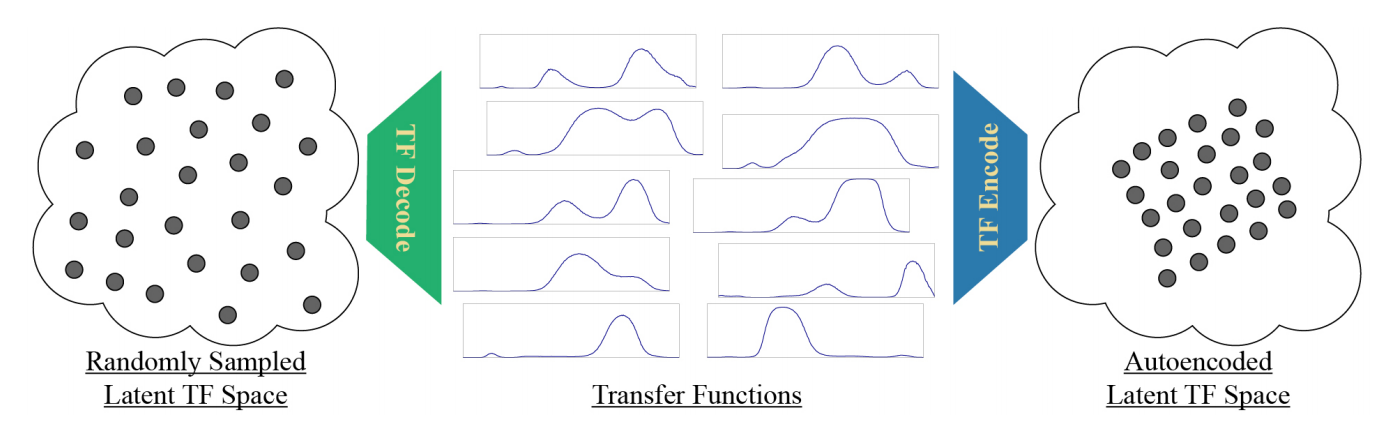

Latent space exploration

Other Experiments

Latent space exploration

The latent points are high-dimensional, so t-SNE is used to reduce them to 2D

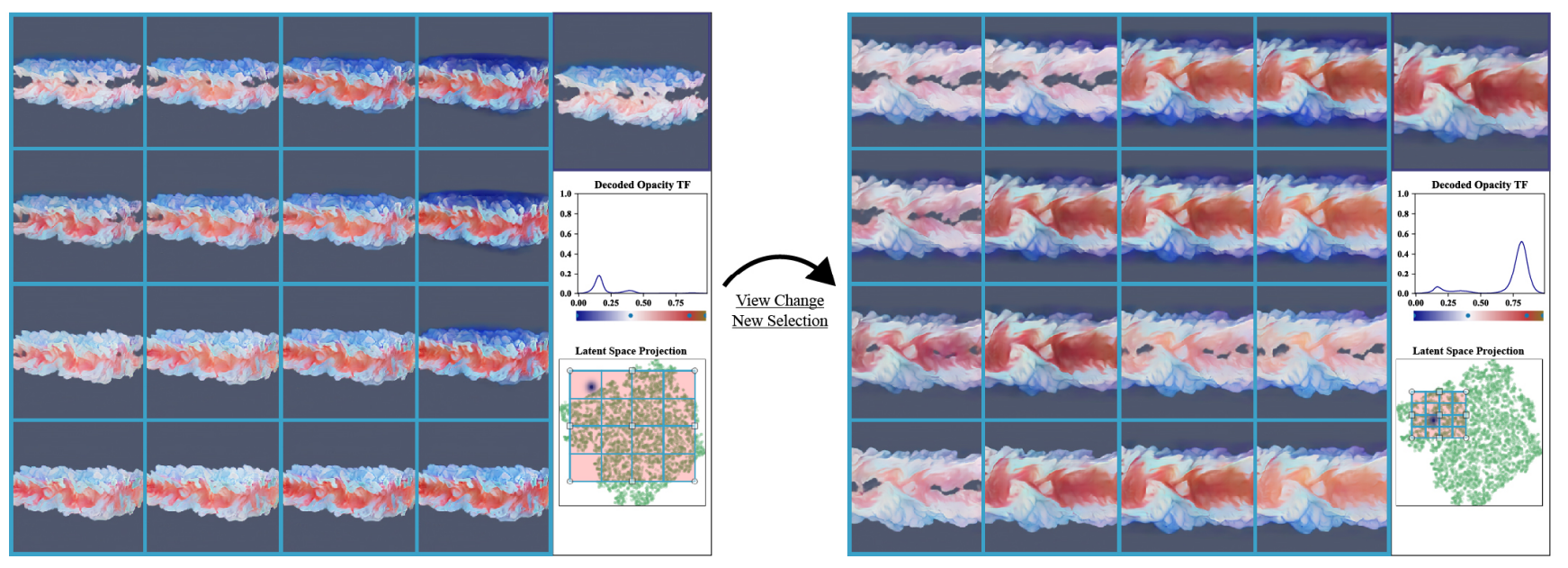
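A minimal sketch of the t-SNE projection step using scikit-learn. The latent vectors here are random placeholders, and the latent dimension, sample count, and perplexity are assumptions; in practice the rows would be the latent codes extracted from the trained network.

```python
import numpy as np
from sklearn.manifold import TSNE

# Placeholder data: 100 latent vectors of (hypothetical) dimension 64.
rng = np.random.default_rng(0)
latent = rng.normal(size=(100, 64))

# Project to 2D. Perplexity must be smaller than the number of samples.
tsne = TSNE(n_components=2, perplexity=30.0, init="pca", random_state=0)
embedding = tsne.fit_transform(latent)

print(embedding.shape)
```

Each row of `embedding` is a 2D point that can be drawn in a scatter plot, so that nearby points correspond to latent codes the network treats as similar.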
