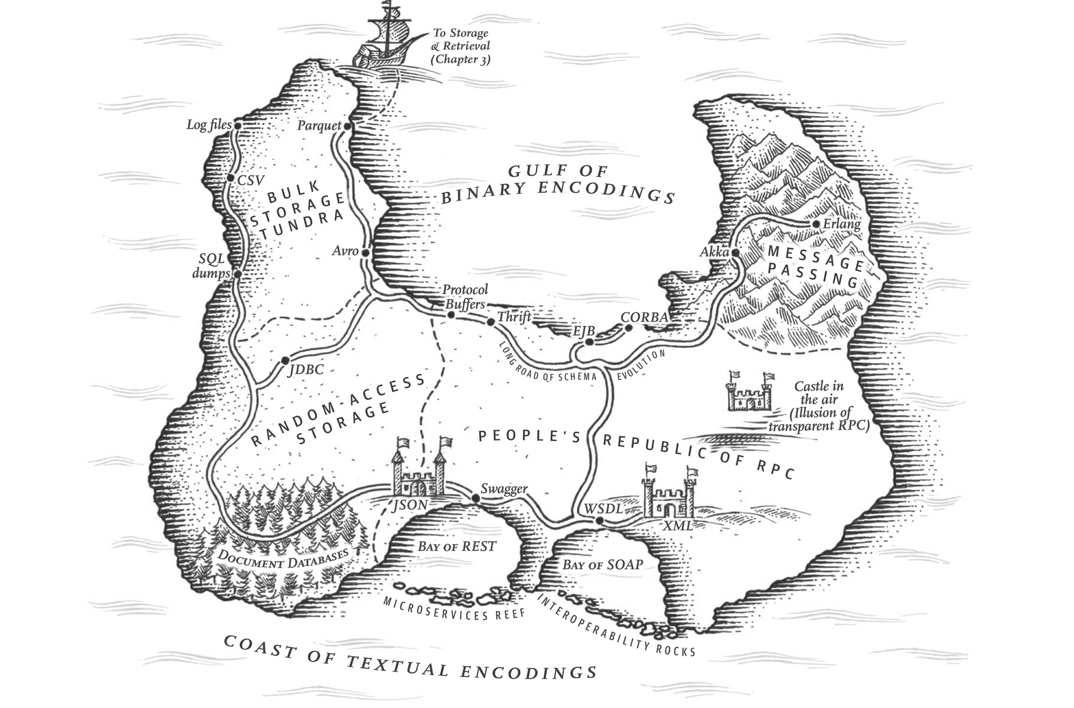

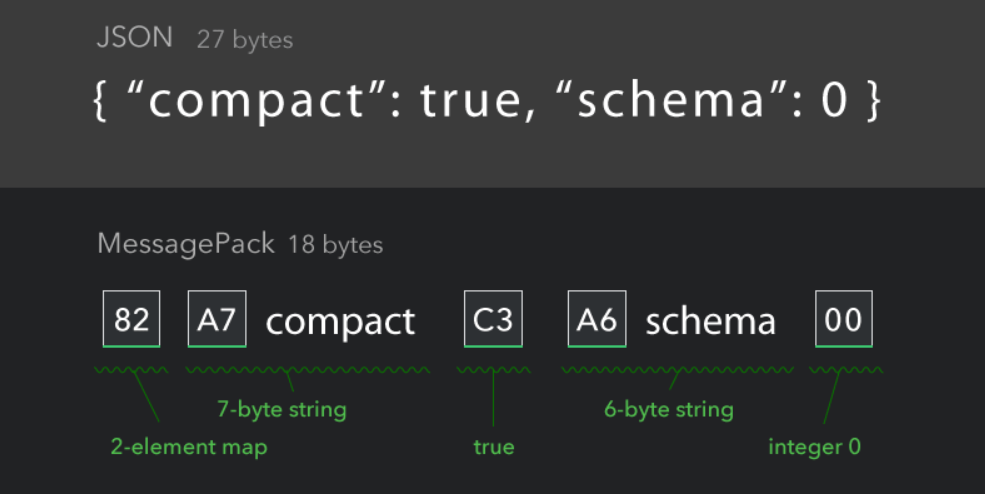

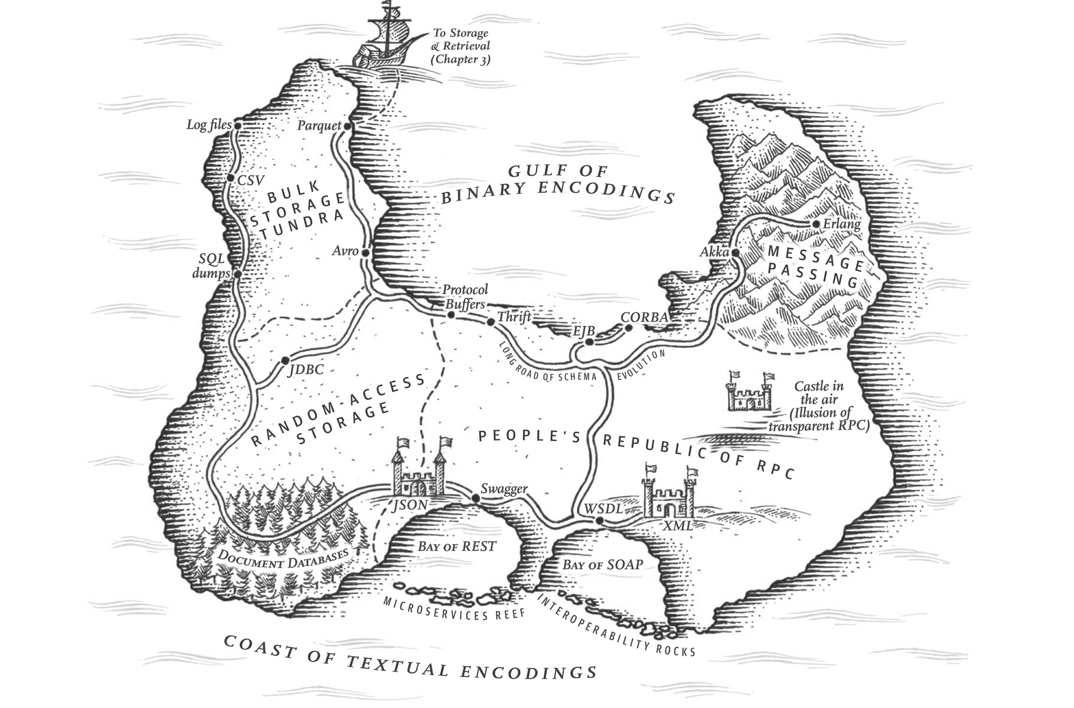

Image source: Designing Data-Intensive Applications, by Martin Kleppmann

In the beginning...

everything was chaos (and proprietary)

no standard ruled the network...

until ASN.1 appeared

1984

more standards became popular...

Sun Microsystems developed XDR for Sun RPC

1987

and a revolution started...

the World Wide Web was born and became available to the public

1993

and with it...

XML

1996

in need of web friendly RPC protocols...

XML-RPC was born

1998

becoming a widely used standard...

SOAP

2000

and everything was chaos...

a widely-used chaos

2000

in need of a better and more intuitive web...

REST was born...

2000

and following it, our favorite...

{ JSON }

2001

and JSON became Queen 👑 ...

2023

and still is...

but there is...

Life Beyond JSON

Exploring protocols and binary formats in Node.js

Image source: Designing Data-Intensive Applications, by Martin Kleppmann

Encoding / Decoding

Serialization / Deserialization

🗄️Databases

📦Networking

✉️Message Passing

JSON

✔ Text-based

✔ Schema-less (optional validation with JSON Schema)

✔ Broad support (native to the web)

✔ Widely used in REST APIs, GraphQL, and JSON-RPC

```json
{
  "name": "Baal",
  "player": "Julian Duque",
  "profession": "Developer and Educator",
  "species": "Human",
  "playing": true,
  "level": 14,
  "attributes": {
    "strength": 12,
    "dexterity": 9,
    "constitution": 10,
    "intelligence": 13,
    "wisdom": 9,
    "charisma": 14
  },
  "proficiencies": [
    "node",
    "backend",
    "rpgs",
    "education"
  ]
}
```

JavaScript Object Notation
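Because JSON is schema-less, every object carries its own field names as quoted strings, repeated in every payload. The sketch below (the `keyBytes` helper is hypothetical, written only for illustration) walks a value and totals the UTF-8 bytes a compact `JSON.stringify` output spends on keys, counting each quoted key plus its colon:

```javascript
// Hypothetical helper: bytes spent on object keys in compact JSON,
// counting each quoted key plus the ':' that follows it
function keyBytes(value) {
  if (Array.isArray(value)) {
    return value.reduce((sum, item) => sum + keyBytes(item), 0)
  }
  if (value !== null && typeof value === 'object') {
    let sum = 0
    for (const [key, child] of Object.entries(value)) {
      // JSON.stringify(key) adds the surrounding quotes and any escaping
      sum += Buffer.byteLength(JSON.stringify(key)) + 1
      sum += keyBytes(child)
    }
    return sum
  }
  return 0 // strings, numbers, booleans and null carry no keys
}

// Same data as the character example above
const character = {
  name: 'Baal',
  player: 'Julian Duque',
  profession: 'Developer and Educator',
  species: 'Human',
  playing: true,
  level: 14,
  attributes: { strength: 12, dexterity: 9, constitution: 10, intelligence: 13, wisdom: 9, charisma: 14 },
  proficiencies: ['node', 'backend', 'rpgs', 'education']
}

const total = Buffer.byteLength(JSON.stringify(character))
console.log(`JSON size: ${total} bytes, keys: ${keyBytes(character)} bytes`)
```

For this sample character, the keys account for more than half of the compact JSON bytes. That is the overhead schema-based formats such as Protocol Buffers and Avro avoid by keeping field names in the schema instead of the payload.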

Binary Serialization Formats

MsgPack

Protobuf

Avro

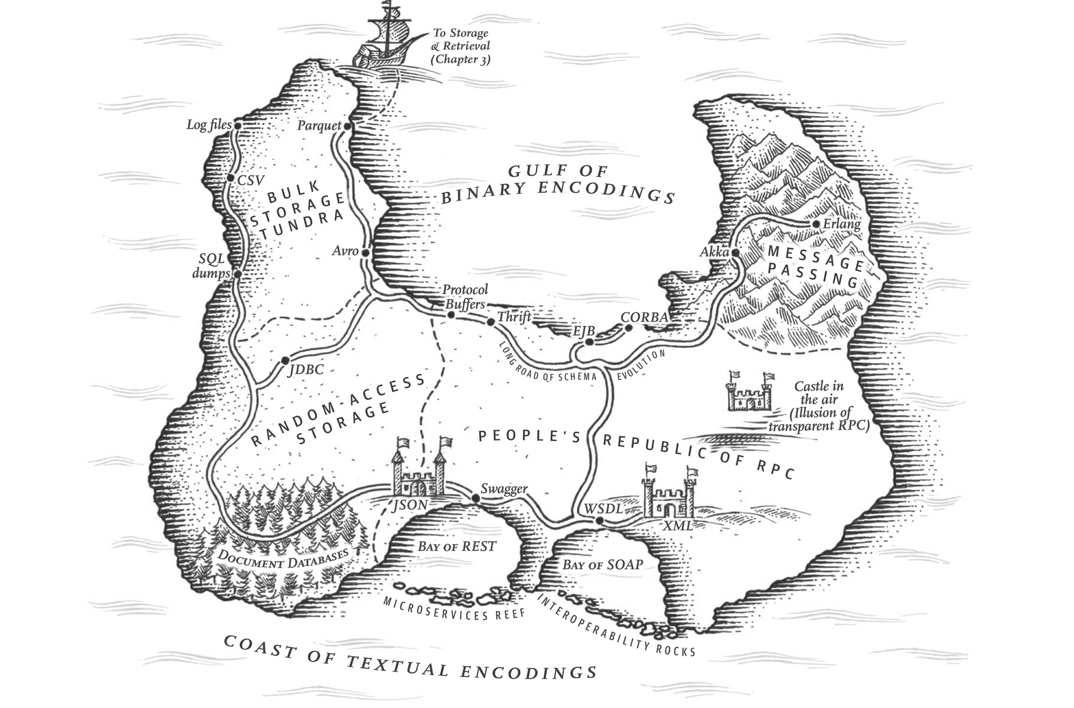

MsgPack

✔ Schema-less

✔ Smaller than JSON

✔ Not human-readable

✔ Supported by over 50 programming languages and environments

Image source: msgpack.org

$ npm install @msgpack/msgpack

ℹ️ In Node.js, the built-in JSON encoding/decoding (JSON.stringify / JSON.parse) is faster than MsgPack's

MsgPack

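```javascript
const fs = require('fs')
const path = require('path')
const assert = require('assert')
const { encode, decode } = require('@msgpack/msgpack')

const character = require('./character.json')

const jsonString = JSON.stringify(character)
// encode() returns a Uint8Array holding the MsgPack bytes
const characterEncoded = encode(character)

console.log('JSON Size: ', Buffer.byteLength(jsonString))
console.log('MsgPack Size: ', Buffer.byteLength(characterEncoded))

// Round trip: decoding must give back an equivalent object
assert.deepStrictEqual(character, decode(characterEncoded))

// Create the output directory if needed before writing the binary file
const outDir = path.join(__dirname, 'out')
fs.mkdirSync(outDir, { recursive: true })
fs.writeFileSync(path.join(outDir, 'character-msgpack.dat'), characterEncoded)
```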
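MsgPack keeps JSON's data model but replaces quotes, colons, commas and decimal digits with compact type headers. As a rough illustration, here is a deliberately tiny encoder for a subset of the MessagePack spec (nil, booleans, positive fixint, fixstr, fixarray, fixmap). `encodeSubset` is a hypothetical helper, not part of `@msgpack/msgpack`, and it throws on anything outside that subset:

```javascript
// Hypothetical minimal MsgPack encoder covering only the "fix" formats
function encodeSubset(value, out = []) {
  if (value === null) {
    out.push(0xc0) // nil
  } else if (value === true || value === false) {
    out.push(value ? 0xc3 : 0xc2) // true / false
  } else if (Number.isInteger(value) && value >= 0 && value <= 0x7f) {
    out.push(value) // positive fixint: the byte is the value itself
  } else if (typeof value === 'string') {
    const bytes = Buffer.from(value, 'utf8')
    if (bytes.length > 31) throw new RangeError('only fixstr (up to 31 bytes) is supported')
    out.push(0xa0 | bytes.length, ...bytes) // fixstr header, then UTF-8 bytes
  } else if (Array.isArray(value)) {
    if (value.length > 15) throw new RangeError('only fixarray (up to 15 items) is supported')
    out.push(0x90 | value.length)
    for (const item of value) encodeSubset(item, out)
  } else if (typeof value === 'object') {
    const entries = Object.entries(value)
    if (entries.length > 15) throw new RangeError('only fixmap (up to 15 entries) is supported')
    out.push(0x80 | entries.length)
    for (const [key, child] of entries) {
      encodeSubset(key, out) // keys are still sent: MsgPack is schema-less
      encodeSubset(child, out)
    }
  } else {
    throw new TypeError(`unsupported value: ${value}`)
  }
  return out
}

const sample = { name: 'Baal', level: 14, playing: true }
const packed = Buffer.from(encodeSubset(sample))
console.log(packed.length, Buffer.byteLength(JSON.stringify(sample))) // 27 41
```

Each string costs one header byte plus its UTF-8 bytes, and small integers and booleans take a single byte, which is where the savings over the 41-byte JSON come from.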

Protocol Buffers

✔ Created by Google

✔ Schema-based

✔ Super fast and small

✔ Support for code generation

✔ Can define a protocol for RPC

```protobuf
syntax = "proto3";

message Character {
  message Attribute {
    int32 strength = 1;
    int32 dexterity = 2;
    int32 constitution = 3;
    int32 intelligence = 4;
    int32 wisdom = 5;
    int32 charisma = 6;
  }

  string name = 1;
  string player = 2;
  string profession = 3;
  string species = 4;
  int32 level = 5;
  bool playing = 6;
  Attribute attributes = 7;
  repeated string proficiencies = 8;
}
```

$ npm install protobufjs
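Only field numbers and wire types travel on the wire, never field names, which is why the `= 1`, `= 2`, ... numbers in the schema matter. A hand-rolled sketch of the proto3 wire format for the `Character` message above may make this concrete. The helper names are hypothetical, the sketch only handles non-negative integers, and in practice protobufjs does this work for you:

```javascript
// Hypothetical hand-rolled proto3 encoder for the Character message.
// Illustration only: negative int32 values are not supported here.
const VARINT = 0 // wire type for int32 and bool
const LEN = 2 // wire type for strings and embedded messages

// Base-128 varint: 7 bits per byte, least significant group first,
// with the high bit set on every byte except the last
function varint(n) {
  if (!Number.isSafeInteger(n) || n < 0) throw new RangeError('non-negative integers only')
  const out = []
  while (n > 0x7f) {
    out.push((n & 0x7f) | 0x80)
    n = Math.floor(n / 128)
  }
  out.push(n)
  return out
}

// Every field starts with a key: (field number << 3) | wire type
const key = (field, wireType) => varint((field << 3) | wireType)
const lengthDelimited = (field, bytes) => [...key(field, LEN), ...varint(bytes.length), ...bytes]
const utf8 = (s) => [...Buffer.from(s, 'utf8')]

const ATTRIBUTE_FIELDS = ['strength', 'dexterity', 'constitution', 'intelligence', 'wisdom', 'charisma']

function encodeAttribute(attr) {
  const out = []
  ATTRIBUTE_FIELDS.forEach((name, i) => {
    const value = attr[name] ?? 0
    // proto3 does not write scalar fields that hold their default value (0)
    if (value !== 0) out.push(...key(i + 1, VARINT), ...varint(value))
  })
  return out
}

function encodeCharacter(c) {
  const out = []
  // Fields 1 to 4 are strings; '' is the default and is not written
  ;['name', 'player', 'profession', 'species'].forEach((name, i) => {
    if (c[name]) out.push(...lengthDelimited(i + 1, utf8(c[name])))
  })
  if (c.level) out.push(...key(5, VARINT), ...varint(c.level))
  if (c.playing) out.push(...key(6, VARINT), 1)
  if (c.attributes) out.push(...lengthDelimited(7, encodeAttribute(c.attributes)))
  // repeated string: one key/length/bytes entry per element
  for (const p of c.proficiencies ?? []) out.push(...lengthDelimited(8, utf8(p)))
  return Buffer.from(out)
}

console.log(encodeCharacter({ name: 'Baal', level: 14, playing: true }).toString('hex'))
// prints 0a044261616c280e3001
```

Reading that output: key `0a` is field 1 with wire type 2, then length `04` and the bytes of `Baal`; `28 0e` is field 5 (`level`) with value 14; `30 01` is field 6 (`playing`) set to true.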

Protocol Buffers

```javascript
const fs = require('fs')
const path = require('path')
const assert = require('assert')
const protobufjs = require('protobufjs')

const character = require('./character.json')

const main = async () => {
  // Load the .proto schema and look up the message type
  const root = await protobufjs.load(path.join(__dirname, 'schema', 'Character.proto'))
  const Character = root.lookupType('Character')

  // verify() returns an error message string, or null if the object is valid
  const err = Character.verify(character)
  if (err) throw new Error(err)

  const message = Character.create(character)
  const buffer = Character.encode(message).finish()

  console.log('JSON Size: ', Buffer.byteLength(JSON.stringify(character)))
  console.log('Protobuf Size: ', Buffer.byteLength(buffer))

  // Decode, then convert back to a plain object. Note: toObject() omits
  // fields holding proto3 defaults (e.g. playing: false) unless it is
  // called with { defaults: true }
  const decodeMsg = Character.decode(buffer)
  const obj = Character.toObject(decodeMsg)
  assert.deepStrictEqual(character, obj)

  const outDir = path.join(__dirname, 'out')
  fs.mkdirSync(outDir, { recursive: true })
  fs.writeFileSync(path.join(outDir, 'character-protobuf.dat'), buffer)
}

main().catch((err) => console.error(err))
```

Avro

```avdl
protocol Tabletop {
  record Attribute {
    int strength;
    int dexterity;
    int constitution;
    int intelligence;
    int wisdom;
    int charisma;
  }

  record Character {
    string name;
    string player;
    string profession;
    string species;
    boolean playing = true;
    int level;
    Attribute attributes;
    array<string> proficiencies;
  }
}
```

$ npm install avsc

✔ Apache Software Foundation project

✔ Started as part of Hadoop

✔ Schema-based (JSON / IDL)

✔ Super fast and small 🚀

✔ Can define a protocol for RPC

Avro

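```javascript
const fs = require('fs')
const path = require('path')
const assert = require('assert')
const avro = require('avsc')

const character = require('./character.json')

// Character.avsc holds the JSON form of the Character schema
const schema = fs.readFileSync(path.join(__dirname, 'schema', 'Character.avsc'), 'utf8')
const type = avro.Type.forSchema(JSON.parse(schema))

// Check the object against the schema before encoding it
assert(type.isValid(character))

const buffer = type.toBuffer(character)
const value = type.fromBuffer(buffer)

console.log('JSON Size: ', Buffer.byteLength(JSON.stringify(character)))
console.log('Avro Size: ', Buffer.byteLength(buffer))

// fromBuffer() returns an instance of a generated record class, so use the
// loose deepEqual (which ignores prototypes) instead of deepStrictEqual
assert.deepEqual(value, character)

const outDir = path.join(__dirname, 'out')
fs.mkdirSync(outDir, { recursive: true })
fs.writeFileSync(path.join(outDir, 'character-avro.dat'), buffer)
```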
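Avro goes one step further: its binary encoding carries neither field names nor field numbers. A record is just its fields concatenated in schema order, so a reader needs the writer's schema to make sense of the bytes. The following sketch encodes the `Character` record by hand, assuming the field order of the IDL above; the helpers are hypothetical and ints are limited to 32 bits:

```javascript
// Hypothetical hand-rolled encoder following Avro's binary encoding rules

// int/long: zigzag (0 -> 0, -1 -> 1, 1 -> 2, -2 -> 3, ...) then a base-128 varint
function zigzagVarint(n) {
  if (!Number.isInteger(n) || n < -(2 ** 31) || n >= 2 ** 31) throw new RangeError('32-bit ints only')
  let z = ((n << 1) ^ (n >> 31)) >>> 0
  const out = []
  while (z > 0x7f) {
    out.push((z & 0x7f) | 0x80)
    z >>>= 7
  }
  out.push(z)
  return out
}

// string: byte length as a zigzag varint, then the UTF-8 bytes
function encodeString(s) {
  const bytes = Buffer.from(s, 'utf8')
  return [...zigzagVarint(bytes.length), ...bytes]
}

// boolean: a single byte, 0 or 1
const encodeBoolean = (b) => [b ? 1 : 0]

// array: blocks of (item count, items), terminated by a block with count 0
function encodeStringArray(items) {
  if (items.length === 0) return [0]
  return [...zigzagVarint(items.length), ...items.flatMap((s) => encodeString(s)), 0]
}

// record: fields in schema order, with no names or tags
function encodeAttribute(a) {
  return ['strength', 'dexterity', 'constitution', 'intelligence', 'wisdom', 'charisma']
    .flatMap((name) => zigzagVarint(a[name]))
}

function encodeCharacter(c) {
  return Buffer.from([
    ...encodeString(c.name),
    ...encodeString(c.player),
    ...encodeString(c.profession),
    ...encodeString(c.species),
    ...encodeBoolean(c.playing),
    ...zigzagVarint(c.level),
    ...encodeAttribute(c.attributes),
    ...encodeStringArray(c.proficiencies)
  ])
}

const character = {
  name: 'Baal',
  player: 'Julian Duque',
  profession: 'Developer and Educator',
  species: 'Human',
  playing: true,
  level: 14,
  attributes: { strength: 12, dexterity: 9, constitution: 10, intelligence: 13, wisdom: 9, charisma: 14 },
  proficiencies: ['node', 'backend', 'rpgs', 'education']
}

console.log('Avro (hand-encoded) size:', encodeCharacter(character).length) // 85
```

Because nothing in the bytes identifies a field, changing the field order or types in the schema without telling the reader would silently misread the data; Avro relies on the reader knowing the writer's schema.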

🗄️Databases

✔ MsgPack is supported by Redis (in Lua scripts, via cmsgpack) and Treasure Data

✔ Avro is used in many databases and data-heavy services:

BigQuery

Hadoop

Cloudera Impala

AWS Athena

📦 Networking

✔ MsgPack is supported by ZeroRPC

✔ Protocol Buffers is gRPC's default interface definition language and message format

✔ Avro has its own RPC implementation
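Underneath the generated stubs, gRPC sends each Protocol Buffers message over an HTTP/2 stream as a length-prefixed message: a 1-byte compressed flag, a 4-byte big-endian length, then the encoded bytes (for example, the output of `Character.encode(message).finish()`). The `frame` and `unframe` helpers below are hypothetical and cover only this framing; HTTP/2 itself, headers and trailers are left to a library such as `@grpc/grpc-js`:

```javascript
// Hypothetical helpers for gRPC's Length-Prefixed-Message framing

// Prefix an encoded message with the 5-byte gRPC message header
function frame(message, compressed = false) {
  const header = Buffer.alloc(5)
  header.writeUInt8(compressed ? 1 : 0, 0) // Compressed-Flag
  header.writeUInt32BE(message.length, 1) // Message-Length, big-endian
  return Buffer.concat([header, message])
}

// Split received bytes back into messages, stopping at an incomplete
// trailing message (a real reader would wait for more data)
function* unframe(buffer) {
  let offset = 0
  while (offset + 5 <= buffer.length) {
    const compressed = buffer.readUInt8(offset) === 1
    const length = buffer.readUInt32BE(offset + 1)
    const start = offset + 5
    if (start + length > buffer.length) return
    yield { compressed, message: buffer.subarray(start, start + length) }
    offset = start + length
  }
}

// Proto3 bytes for a Character whose only field set is name: 'Baal'
const payload = Buffer.from('0a044261616c', 'hex')
const wire = frame(payload)
console.log(wire.toString('hex')) // 00000000060a044261616c

for (const { message } of unframe(wire)) {
  console.log(message.equals(payload)) // true
}
```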

✉️ Messaging

✔ Both Protocol Buffers and Avro are widely supported in Apache Kafka

✔ Confluent Schema Registry, commonly deployed alongside Kafka, keeps a repository of schemas and tracks their evolution across versions

✔ Salesforce uses both Protocol Buffers (gRPC) and Avro for its Pub/Sub API

Protocol Buffers over gRPC for the underlying message interchange

Avro for the event payload
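When Avro messages are produced to Kafka with Confluent Schema Registry's serializers, the schema itself is not embedded in each record. Instead the value starts with a 5-byte header: a magic byte `0` followed by the 4-byte big-endian schema ID, which consumers use to fetch the writer's schema. A minimal sketch of that framing, with hypothetical helper names and an in-memory `Map` standing in for the registry's REST API:

```javascript
// Hypothetical helpers for the Confluent Schema Registry wire format (Avro)
const MAGIC_BYTE = 0

// Prepend the magic byte and schema ID to an Avro-encoded payload
function withSchemaId(schemaId, avroPayload) {
  const header = Buffer.alloc(5)
  header.writeUInt8(MAGIC_BYTE, 0)
  header.writeInt32BE(schemaId, 1) // 4-byte schema ID, big-endian
  return Buffer.concat([header, avroPayload])
}

// Split a record value back into its schema ID and Avro payload
function readSchemaId(value) {
  if (value.length < 5 || value.readUInt8(0) !== MAGIC_BYTE) {
    throw new Error('value is not in the Schema Registry wire format')
  }
  return { schemaId: value.readInt32BE(1), payload: value.subarray(5) }
}

// Hypothetical in-memory stand-in for the registry: a real client would
// fetch unknown IDs from the registry's REST API and cache them
const schemasById = new Map([
  [1, 'Character (v1)'],
  [2, 'Character (v2)']
])

// 0x08 0x42 0x61 0x61 0x6c is the Avro encoding of the string 'Baal'
const recordValue = withSchemaId(2, Buffer.from([0x08, 0x42, 0x61, 0x61, 0x6c]))
const { schemaId, payload } = readSchemaId(recordValue)
console.log(schemasById.get(schemaId), payload.length) // Character (v2) 5
```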

🚀 Benchmarks

Last thoughts

✔️ Optimal for internal integrations and controlled microservices

✔️ Perfect when throughput, bandwidth, and/or performance are key

✔️ Maintain backward and forward compatibility through schema evolution
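Schema evolution works because readers tolerate data written with a different schema version. In Protocol Buffers, for instance, a reader that meets a field number it does not know can skip it using the wire type, so old code can still read data from newer writers (Avro achieves the same through writer/reader schema resolution, not shown here). A minimal sketch of such a reader; `decodeKnown` is hypothetical, and field 9 stands for an imagined string field added in a later schema version:

```javascript
// Hypothetical "old" reader that knows only two fields of Character

// Read a base-128 varint starting at pos; returns [value, nextPos]
function readVarint(buf, pos) {
  let result = 0
  let shift = 0
  for (;;) {
    if (pos >= buf.length) throw new RangeError('truncated varint')
    const byte = buf[pos++]
    result += (byte & 0x7f) * 2 ** shift
    if ((byte & 0x80) === 0) return [result, pos]
    shift += 7
  }
}

// Field number -> [property name, how to interpret the raw value]
const KNOWN_FIELDS = {
  1: ['name', (bytes) => bytes.toString('utf8')],
  5: ['level', (n) => n]
}

function decodeKnown(buf) {
  const out = {}
  let pos = 0
  while (pos < buf.length) {
    const [fieldKey, afterKey] = readVarint(buf, pos)
    const fieldNumber = Math.floor(fieldKey / 8)
    const wireType = fieldKey % 8
    let raw
    if (wireType === 0) {
      const [n, next] = readVarint(buf, afterKey) // varint
      raw = n
      pos = next
    } else if (wireType === 2) {
      const [len, start] = readVarint(buf, afterKey) // length-delimited
      if (start + len > buf.length) throw new RangeError('truncated field')
      raw = buf.subarray(start, start + len)
      pos = start + len
    } else if (wireType === 1 || wireType === 5) {
      pos = afterKey + (wireType === 1 ? 8 : 4) // fixed64 / fixed32: skip
    } else {
      throw new Error(`unsupported wire type ${wireType}`)
    }
    const known = KNOWN_FIELDS[fieldNumber]
    // Unknown field numbers (such as a field added later) are skipped
    if (known && raw !== undefined) out[known[0]] = known[1](raw)
  }
  return out
}

// Written with a newer schema: name = 1, level = 5, plus a new string field 9
const newerBytes = Buffer.from('0a044261616c280e4a0442617264', 'hex')
console.log(decodeKnown(newerBytes)) // { name: 'Baal', level: 14 }
```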

Thank you RenderATL!

Image source: Designing Data-Intensive Applications, by Martin Kleppmann