Unified Task Parallelism

Julian Samaroo (MIT)

What if every function you called was parallel and scalable?

What if every function you called was ready and able to run on GPUs?

What if every function you called adapted itself to run optimally on your hardware?

What if you didn't have to rewrite your code each time to run on one thread, 100 threads, or 16 GPUs?

What if this library already exists in Julia?

What is this vision, really?

A Bold VISION!

Building a scalable, heterogeneous computing library that has all the APIs users need, with a sensible and consistent design, all built on a single, simple task API.
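Here is a minimal sketch of what "a single, simple task API" looks like in practice. It assumes a recent Dagger.jl and uses only `Dagger.@spawn` and `fetch`; the arithmetic is arbitrary. Each spawn returns a task handle immediately. When you pass one task as an argument to another, the second task depends on the first.

```julia
using Dagger

# Dagger.@spawn creates a task and returns a handle right away
a = Dagger.@spawn 1 + 2
# Passing the task `a` as an argument makes this task depend on it;
# Dagger waits for a's result and substitutes it before running `*`
b = Dagger.@spawn a * 10
# fetch blocks until the task finishes and returns its result
result = fetch(b)   # 30
```

The higher-level APIs shown in the rest of the talk (arrays, datadeps, SPMD) all build on this spawn/fetch pattern.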

A show of hands

This is easy with Dagger.jl

Datadeps

```julia
# Cholesky
using Dagger, LinearAlgebra

# M is a grid of matrix tiles with mt tile rows and nt tile columns (mt == nt);
# the Cholesky factor overwrites the lower tiles of M in place
Dagger.spawn_datadeps() do
    for k in 1:mt
        Dagger.@spawn LAPACK.potrf!('L', ReadWrite(M[k, k]))
        for m in k+1:mt
            Dagger.@spawn BLAS.trsm!('R', 'L', 'T', 'N', 1.0,
                                     Read(M[k, k]), ReadWrite(M[m, k]))
        end
        for n in k+1:nt
            Dagger.@spawn BLAS.syrk!('L', 'N', -1.0,
                                     Read(M[n, k]), 1.0, ReadWrite(M[n, n]))
            for m in n+1:mt
                Dagger.@spawn BLAS.gemm!('N', 'T', -1.0,
                                         Read(M[m, k]), Read(M[n, k]),
                                         1.0, ReadWrite(M[m, n]))
            end
        end
    end
end
```

SPMD

```julia
# Start an SPMD region with threads
X_all = spmd(Threads.nthreads()) do
    # Have a rank per thread
    rank = spmd_rank()
    X = rand(4, 4)
    for iter in 1:niters
        # Do a local thing on each rank
        X .*= 3
        # Do a collective op across all ranks
        spmd_reduce!(+, X)
    end
    return X
end
```

Tasks

```julia
# Distribution Analysis
using Dagger, DataFrames, Distributions, Statistics

# max_mean(dist, L, K, σ) is a user-defined helper not shown on the slide
function analysis(dists, lens, K=1000)
    res = DataFrame()
    @sync for T in dists
        dist = T()
        σ = Dagger.@spawn std(dist)
        for L in lens
            z = Dagger.@spawn max_mean(dist, L, K, σ)
            push!(res, (;T, σ, L, z))
        end
    end
    # Fetch the results of the task columns (fetch returns non-task values unchanged)
    mapcols!(col -> fetch.(col), res)
    return res
end
```

Arrays

```julia
using Dagger, LinearAlgebra

# Allocate a DArray
A = rand(AutoBlocks(), 1024, 1024)
# Matmul
B = A * A
# Broadcast
C = B .* A ./ 3
# QR (the upper-triangular factor is the R property)
D = qr(C).R
# Triangular solve
X = rand(AutoBlocks(), 1024)
ldiv!(UpperTriangular(D), X)
# Broadcast (in-place)
X .+= 2
```

APIs

- Arrays

- Tables

- Graphs

Data Flow

- Streaming

- SPMD

Acceleration

- Multithreading

- Multiprocessing

- GPUs

Dagger meets you at your problem

Devices

- Workers

- CPU threads

- GPU devices

- Memory spaces

- Disk devices

Measured Metrics

- Execution time

- Allocations

- Network transfer time

- Data locality

Dagger knows your hardware

Features

- Built-in dynamic scheduler

- Work-stealing load balancer

- Automatic data movement

- Out-of-core and checkpointing

- Lazy file loading

- Fault tolerance

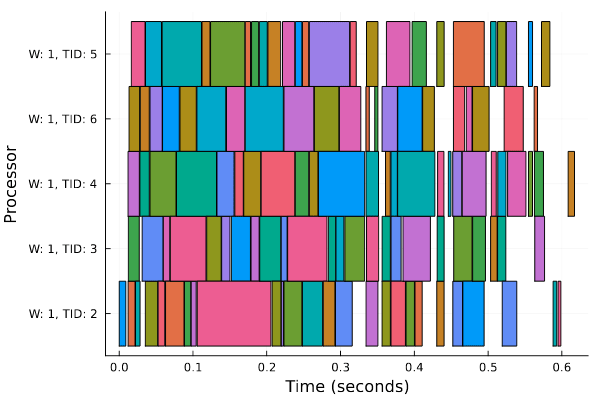

- Logging and visualization

Dagger is convenient

Why not that other parallelism package XYZ.jl?

- Most packages try to solve one or a few problems well, and roll their own parallelism

- This is OK for domain-specific functionality, but it is not composable with other parallel packages

- The domain-specific code and the parallelism implementation become inseparable

- Many different infrastructure solutions == many re-implementations of the same algorithms

- Users get confused when choosing between the various parallelism implementations

- Can we settle on one solid foundation?
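As a small illustration of composability on a shared foundation, here are two hypothetical "packages" that both use Dagger tasks internally. One of them calls the other from inside its own tasks. The names `chunked_sum` and `sums_of_columns` are invented for this example. The sketch also assumes a recent Dagger.jl, where tasks can spawn and fetch other tasks.

```julia
using Dagger

# Hypothetical "package A": a parallel sum over chunks, built on Dagger tasks
function chunked_sum(v::AbstractVector; nchunks::Int=4)
    isempty(v) && return zero(eltype(v))
    chunks = Iterators.partition(v, cld(length(v), nchunks))
    parts = [Dagger.@spawn sum(c) for c in chunks]   # one task per chunk
    return sum(fetch.(parts))                         # combine partial sums
end

# Hypothetical "package B": runs one Dagger task per column, and each of
# those tasks calls package A, which spawns its own nested tasks
function sums_of_columns(M::AbstractMatrix)
    tasks = [Dagger.@spawn chunked_sum(M[:, j]) for j in axes(M, 2)]
    return fetch.(tasks)
end

M = reshape(collect(1.0:12.0), 3, 4)
col_sums = sums_of_columns(M)
```

Because both layers submit work to the same scheduler, nesting them does not create two competing thread pools.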

Barriers to adoption

Overhead/memory usage

- Dagger does have overhead, and always will

- ...but most of it is incidental, and can be fixed!

- Fixing it requires applying basic software engineering practices (memoization, memory reuse, etc.)

- Very likely, >95% of the overhead can be removed

- Help wanted!
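To make the "basic software engineering practices" bullet concrete, here is a generic Julia illustration of memoization and memory reuse. It is not taken from Dagger's internals. The names `expensive_lookup`, `_cache`, and `scaled!` are hypothetical.

```julia
# 1. Memoization: compute a pure, costly result once and cache it.
# (A plain Dict is not thread-safe; a real scheduler would need a lock.)
const _cache = Dict{Int,Int}()
function expensive_lookup(n::Int)
    return get!(_cache, n) do
        sum(abs2, 1:n)   # stand-in for expensive work
    end
end

# 2. Memory reuse: write into a caller-provided buffer instead of
# allocating a fresh array on every call
function scaled!(out::Vector{Float64}, x::Vector{Float64}, a::Float64)
    out .= a .* x
    return out
end

first_call  = expensive_lookup(10)   # computes and stores 385
second_call = expensive_lookup(10)   # served from the cache
buf = zeros(3)
res = scaled!(buf, [1.0, 2.0, 3.0], 2.0)
```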

Missing algorithms

- Users want a certain set of algorithms available (and fast, efficient, and scalable)

- Implementing these algorithms in Dagger is easy, *but* requires some domain expertise

- We need more contributors!
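Here is a rough sketch of what implementing an algorithm on Dagger's task API can look like: a pairwise (binary-tree) reduction. `tree_reduce` is a hypothetical helper written for this example, not a Dagger function. It relies only on `Dagger.@spawn` and `fetch`, and on Dagger resolving task arguments as dependencies.

```julia
using Dagger

# Hypothetical helper (not part of Dagger): combine values pairwise in a
# binary tree so that independent combines at each level can run in parallel
function tree_reduce(op, leaves::AbstractVector)
    isempty(leaves) && throw(ArgumentError("tree_reduce needs at least one leaf"))
    level = collect(Any, leaves)
    while length(level) > 1
        next_level = Any[]
        for i in 1:2:length(level)
            if i == length(level)
                # Odd element out: carry it up to the next level unchanged
                push!(next_level, level[i])
            else
                # Passing tasks as arguments makes this task wait for both inputs
                push!(next_level, Dagger.@spawn op(level[i], level[i+1]))
            end
        end
        level = next_level
    end
    # fetch waits on a task; on a plain value it returns the value as-is
    return fetch(only(level))
end

leaves = [Dagger.@spawn abs2(i) for i in 1:10]
total = tree_reduce(+, leaves)   # 385 == sum(abs2, 1:10)
```

The domain expertise lies in choosing the decomposition (here, a tree of independent combines). Dagger handles scheduling and data movement.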

Documentation

- A universal API should be well documented so users know how to use it

- Yet Dagger's documentation is currently lacking, and examples are few and far between

- Without them, users feel lost and confused, and don't stay around long enough to benefit

- This is something I'd love help with!

Community consensus

- The community isn't yet sure which foundation to build upon

- This limits progress, as users implement their algorithms on different (read: incompatible) foundational packages

- If the community settles on one solution, we limit duplication and can make more progress

CONCLUSION

Give Dagger a try for your problem, and reach out if you have any trouble! And, contributions are always welcome :)