Neural Compression and Neural Density Estimation for Cosmological Inference

Justine Zeghal

justine.zeghal@umontreal.ca

Bayesian Deep Learning for Cosmology and Time Domain Astrophysics, 3rd edition, Paris, May 22

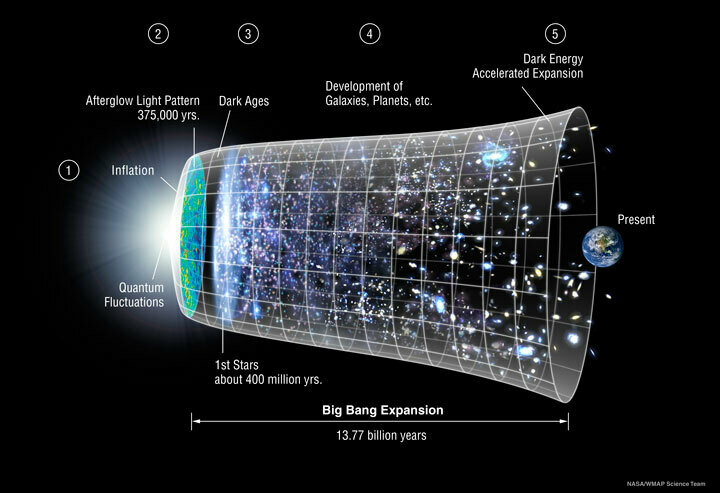

Lambda Cold Dark Matter (ΛCDM)

The simplest model that best describes our observations is ΛCDM.

It relies on only a few parameters.

It posits ordinary matter, cold dark matter (CDM), and dark energy (Λ), the latter explaining the accelerated expansion.

Goal: determine the value of those parameters based on our observations.

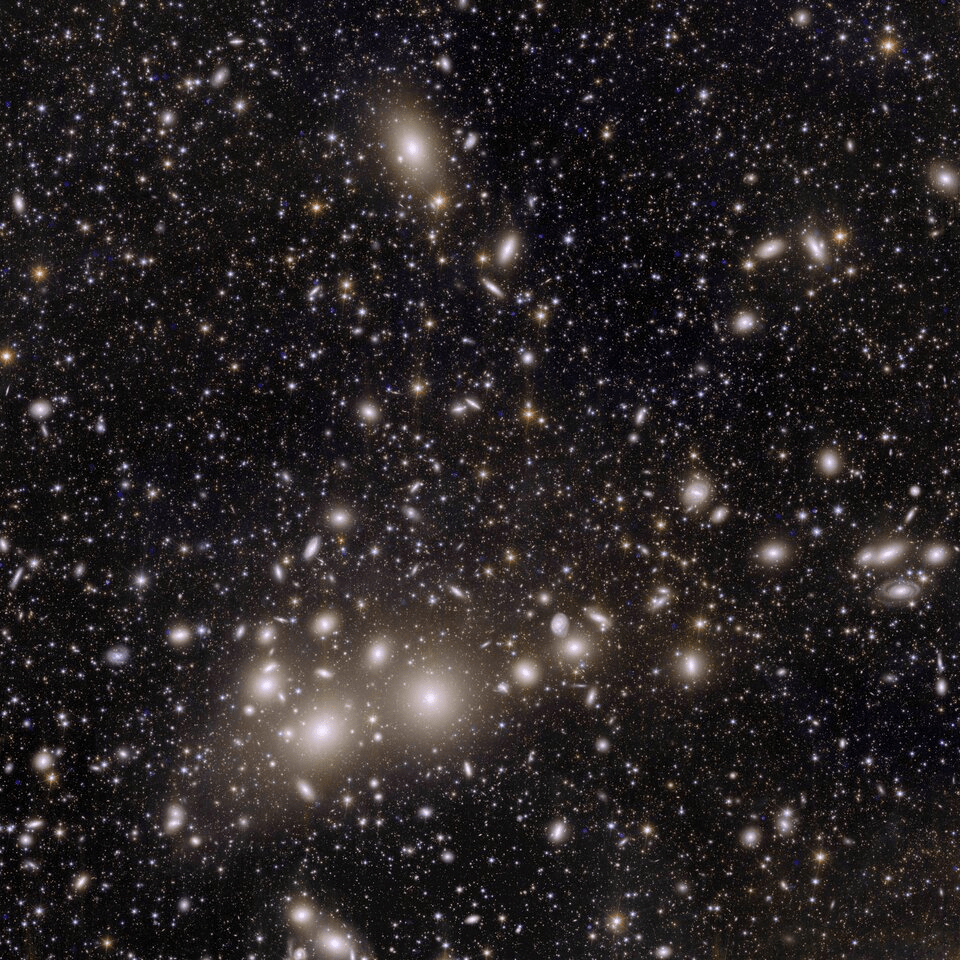

Credit: ESA

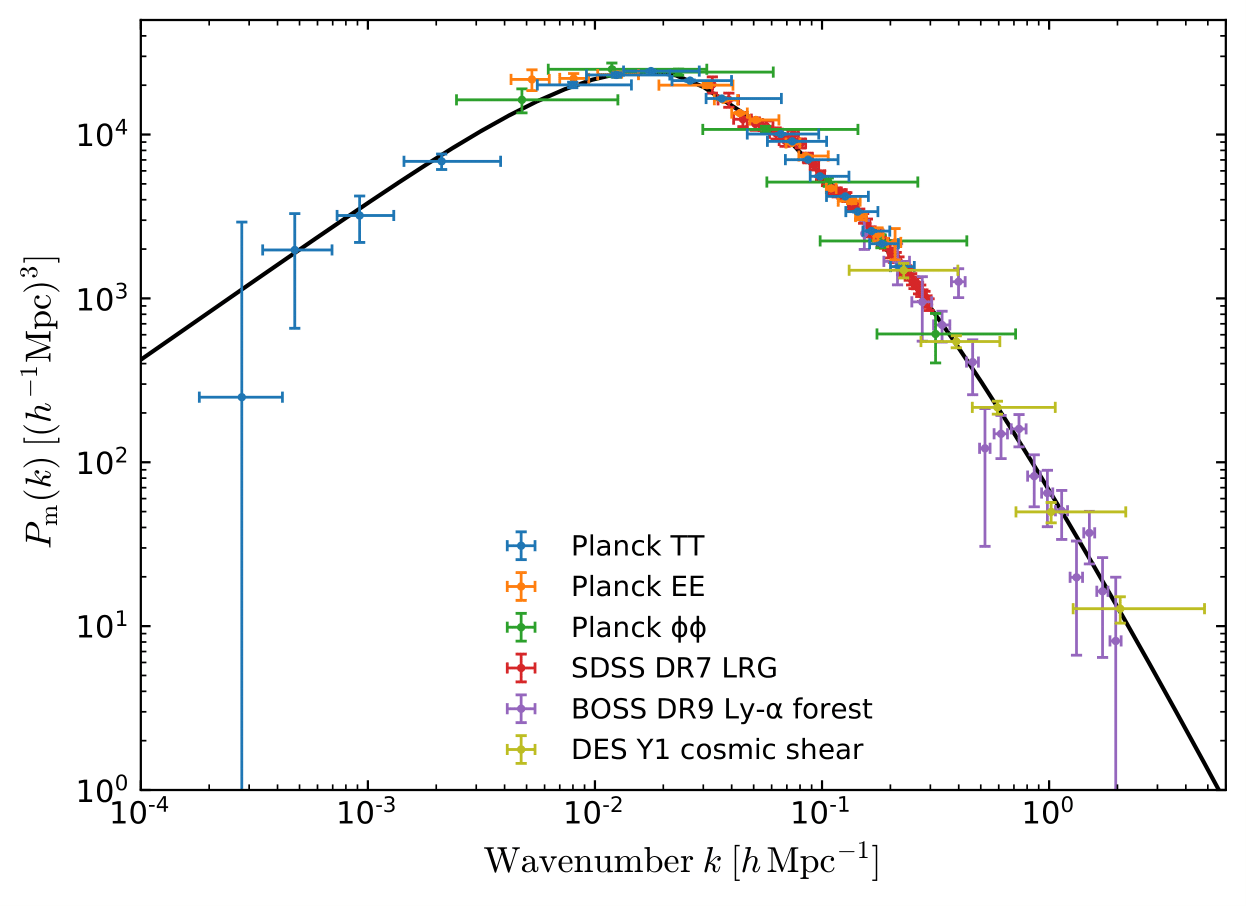

How to constrain cosmological parameters?

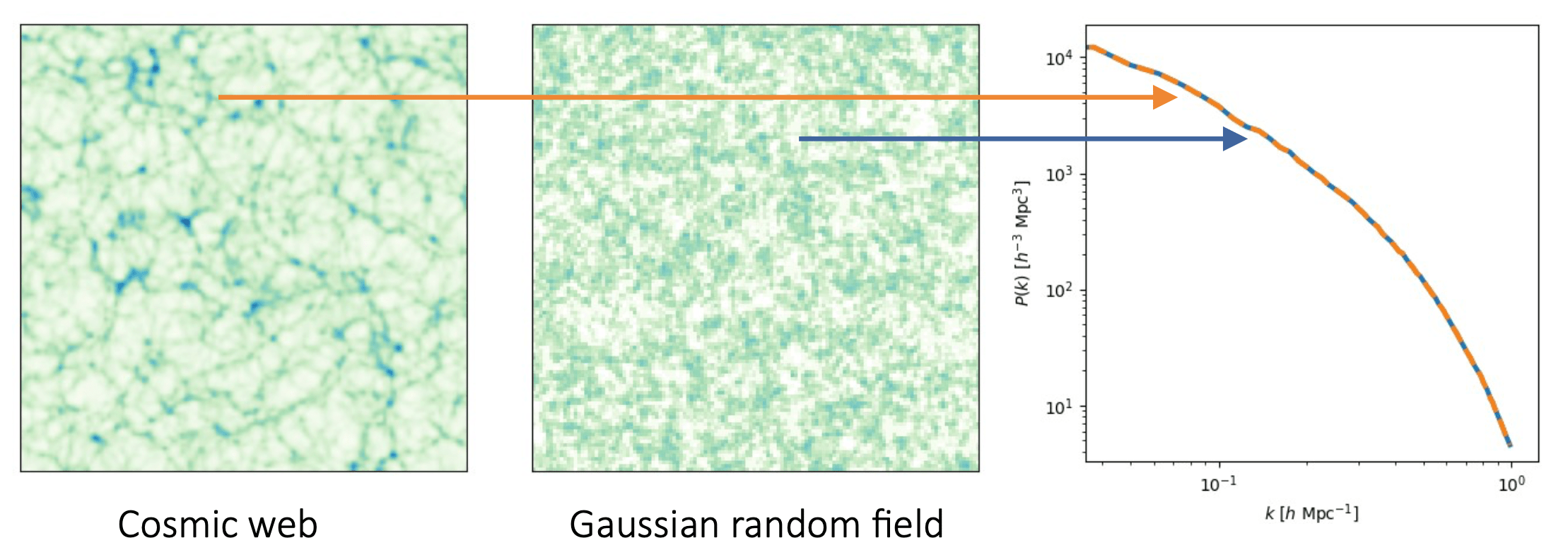

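Traditionally, the observations are compressed into summary statistics (e.g. two-point statistics), for which we have an analytical likelihood function.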

This likelihood function connects our compressed observations to the cosmological parameters.

Bayes' theorem:

$$p(\theta \mid x) = \frac{p(x \mid \theta)\, p(\theta)}{p(x)}$$
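For a single parameter, Bayes' theorem can be evaluated directly on a grid. This is a minimal sketch on a hypothetical toy problem (a standard normal prior and a Gaussian likelihood with noise 0.5), not a cosmological model; the evidence $p(x)$ is simply the normalization constant.

```python
import numpy as np

# Hypothetical toy problem: one parameter theta, one observation x ~ N(theta, 0.5^2)
theta_grid = np.linspace(-5.0, 5.0, 2001)
dtheta = theta_grid[1] - theta_grid[0]

def log_prior(theta):
    # Standard normal prior p(theta) = N(0, 1), up to a constant
    return -0.5 * theta**2

def log_likelihood(x_obs, theta, sigma=0.5):
    # Gaussian likelihood p(x | theta), up to a constant
    return -0.5 * ((x_obs - theta) / sigma) ** 2

def grid_posterior(x_obs):
    # Unnormalized log posterior: log p(x | theta) + log p(theta)
    log_post = log_likelihood(x_obs, theta_grid) + log_prior(theta_grid)
    post = np.exp(log_post - log_post.max())  # subtract the max for numerical stability
    # Dividing by the evidence p(x), approximated here by a Riemann sum
    return post / (post.sum() * dtheta)

posterior = grid_posterior(x_obs=1.0)
```

For this conjugate toy case the posterior is Gaussian with mean 0.8 and variance 0.2, which the grid reproduces.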

We need to update our inference methods

The traditional way of constraining cosmological parameters misses information.

This results in less precise constraints on cosmological parameters.

Credit: Natalia Porqueres

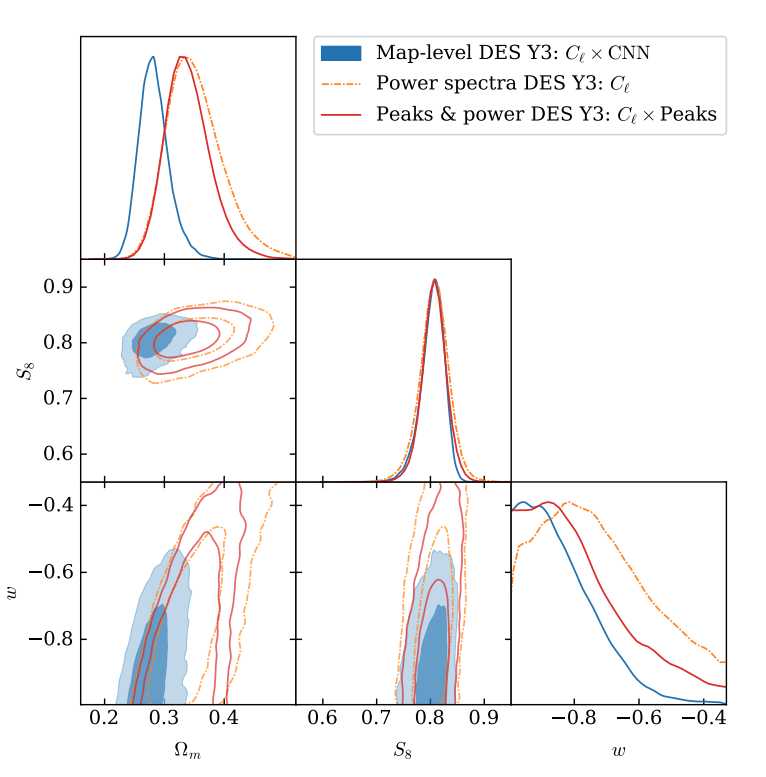

DES Y3 Results (with SBI).


We can build a simulator to map the cosmological parameters to the data.

Prediction (parameters → data) and inference (data → parameters).

Full-field inference: extracting all cosmological information


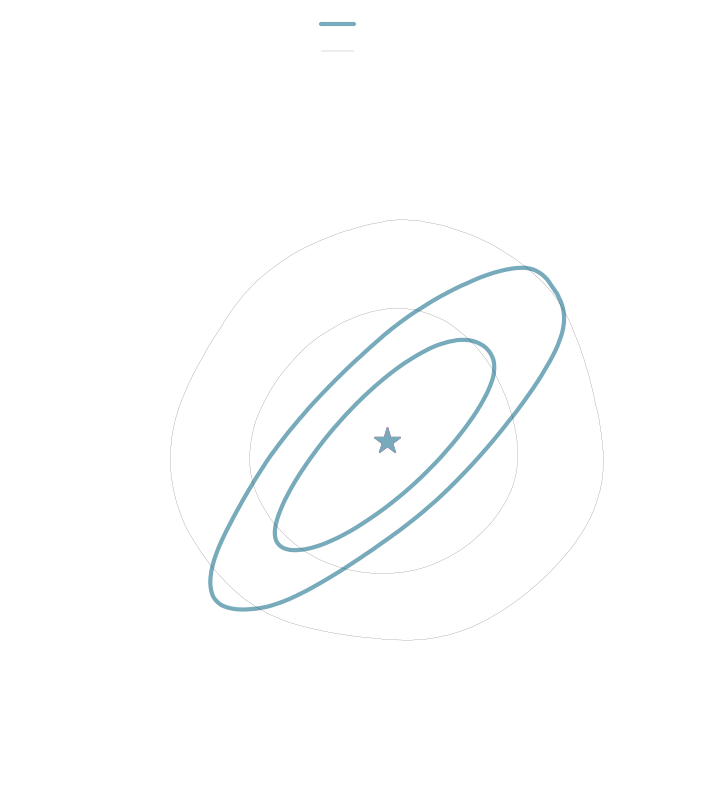

Depending on the simulator's nature, we can perform either:

- Explicit inference

- Implicit inference


Explicit inference

Explicit joint likelihood

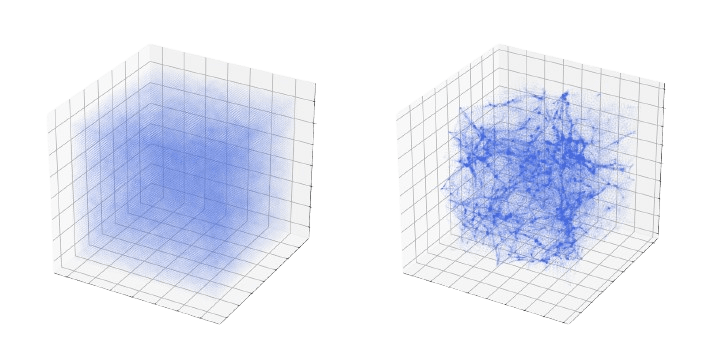

Initial conditions of the Universe

Large Scale Structure

Needs an explicit simulator to sample the joint posterior through MCMC:

We need to sample in extremely

high-dimension

→ gradient-based sampling schemes.
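To make "gradient-based sampling" concrete, here is a minimal Hamiltonian Monte Carlo (HMC) sketch on a hypothetical toy hierarchical model: one parameter $\theta$ and 50 latent variables $z$ standing in for the initial conditions, with $z_i \sim \mathcal{N}(\theta, 1)$ and $x_i \sim \mathcal{N}(z_i, \sigma^2)$. The gradients are written by hand here; with a differentiable simulator they would come from automatic differentiation. Keeping only the $\theta$ coordinate of the joint samples marginalizes over $z$.

```python
import numpy as np

rng = np.random.default_rng(0)
d, sigma = 50, 0.5  # hypothetical: 50 latent variables, observation noise 0.5

# Simulate a hypothetical observation from the toy model
theta_true = 0.7
z_true = rng.normal(theta_true, 1.0, size=d)
x_obs = rng.normal(z_true, sigma)

def log_joint(q):
    # q = [theta, z_1, ..., z_d]; log p(x | theta, z) + log p(z | theta) + log p(theta)
    theta, z = q[0], q[1:]
    return (-0.5 * theta**2
            - 0.5 * np.sum((z - theta) ** 2)
            - 0.5 * np.sum((x_obs - z) ** 2) / sigma**2)

def grad_log_joint(q):
    # Analytic gradient; autodiff would provide this for a real differentiable simulator
    theta, z = q[0], q[1:]
    g_theta = -theta + np.sum(z - theta)
    g_z = -(z - theta) + (x_obs - z) / sigma**2
    return np.concatenate([[g_theta], g_z])

def hmc(q0, n_samples=2000, step=0.05, n_leapfrog=20):
    q, samples = q0.copy(), []
    for _ in range(n_samples):
        p = rng.normal(size=q.shape)                   # resample the momentum
        q_new, p_new = q.copy(), p.copy()
        p_new += 0.5 * step * grad_log_joint(q_new)    # leapfrog: half momentum step
        for i in range(n_leapfrog):
            q_new += step * p_new                      # full position step
            if i < n_leapfrog - 1:
                p_new += step * grad_log_joint(q_new)  # full momentum step
        p_new += 0.5 * step * grad_log_joint(q_new)    # final half momentum step
        # Metropolis correction with the Hamiltonian H = -log p(q) + |p|^2 / 2
        log_accept = (log_joint(q_new) - 0.5 * p_new @ p_new) - (log_joint(q) - 0.5 * p @ p)
        if np.log(rng.uniform()) < log_accept:
            q = q_new
        samples.append(q[0])                           # keep theta only (marginalizes z)
    return np.array(samples)

theta_samples = hmc(np.zeros(d + 1))
```

The toy model is conjugate, so the marginal posterior of $\theta$ is Gaussian with precision $1 + d/(1+\sigma^2)$, which the HMC samples should match after burn-in.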


Implicit inference

Here it does not matter whether the simulator is explicit or implicit, because all we need are simulations.

This approach typically involves two steps:

1) Compression of the high-dimensional data into summary statistics, without losing cosmological information!

2) Implicit inference on these summary statistics to approximate the posterior.


Outline

Which full-field inference methods require the fewest simulations?

How to build sufficient statistics?

Can we perform implicit inference with fewer simulations?

How to deal with model misspecification?


Neural Posterior Estimation with Differentiable Simulators

ICML 2022 Workshop on Machine Learning for Astrophysics

Justine Zeghal, François Lanusse, Alexandre Boucaud,

Benjamin Remy and Eric Aubourg

Implicit Inference

Algorithm:

1) Draw N parameters $\theta_i \sim p(\theta)$.

2) Draw N simulations $x_i \sim p(x \mid \theta_i)$.

3) Train a neural density estimator on $\{(\theta_i, x_i)\}_{i=1}^{N}$ to approximate the quantity of interest.

4) Approximate the posterior from the learned quantity.
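The four steps can be sketched end to end on a hypothetical one-parameter problem. To keep the example short, a linear-Gaussian conditional density $q(\theta \mid x) = \mathcal{N}(a x + b, s^2)$, fitted by maximum likelihood, stands in for the neural density estimator (a normalizing flow in practice), and the learned quantity is the posterior itself.

```python
import numpy as np

rng = np.random.default_rng(1)

def simulator(theta):
    # Hypothetical stochastic simulator: x = theta + Gaussian noise (std 0.5)
    return theta + 0.5 * rng.normal(size=theta.shape)

# 1) Draw N parameters from the prior p(theta) = N(0, 1)
N = 5000
theta = rng.normal(size=N)
# 2) Draw N simulations x_i ~ p(x | theta_i)
x = simulator(theta)
# 3) Fit q(theta | x) = N(a * x + b, s^2) by maximum likelihood.
#    For this linear-Gaussian model the solution is least squares plus the residual std;
#    a normalizing flow trained by gradient descent would replace it in practice.
X = np.stack([x, np.ones(N)], axis=1)
(a, b), *_ = np.linalg.lstsq(X, theta, rcond=None)
s = np.std(theta - (a * x + b))

# 4) Approximate the posterior at an observation from the learned density
def approx_posterior(x_obs):
    return a * x_obs + b, s  # posterior mean and standard deviation

mean, std = approx_posterior(1.0)
```

For this toy model the exact posterior at $x = 1$ is $\mathcal{N}(0.8, 0.2)$, so the estimate can be checked directly.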

Normalizing Flows


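Change of Variable Formula: for a learned bijection $x = f_\varphi(z)$, where $z \sim p_z$ follows a simple base distribution (e.g. a standard Gaussian),

$$p_\varphi(x) = p_z\big(f_\varphi^{-1}(x)\big)\left|\det \frac{\partial f_\varphi^{-1}(x)}{\partial x}\right|$$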


We need to learn the mapping

to approximate the complex distribution.

From simulations only!

This requires a lot of simulations...
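The change of variable formula can be checked numerically on a one-dimensional "flow" with a fixed, hypothetical bijection $x = \exp(\mu + \sigma z)$ and a standard normal base. A real normalizing flow learns the parameters of a much more flexible bijection, but its log-density is computed with the same structure: base log-density at $f^{-1}(x)$ plus the log-Jacobian term.

```python
import numpy as np

mu, sigma = 0.0, 0.5  # hypothetical flow parameters

def f(z):
    # Bijection from the base space to the data space: x = exp(mu + sigma * z)
    return np.exp(mu + sigma * z)

def f_inv(x):
    return (np.log(x) - mu) / sigma

def log_det_jac_f_inv(x):
    # d f^{-1}(x) / dx = 1 / (sigma * x), which is positive for x > 0
    return -np.log(sigma * x)

def log_base(z):
    # Standard normal base density p_z
    return -0.5 * z**2 - 0.5 * np.log(2 * np.pi)

def log_prob(x):
    # Change of variables: log p(x) = log p_z(f^{-1}(x)) + log |d f^{-1}(x) / dx|
    return log_base(f_inv(x)) + log_det_jac_f_inv(x)

# Sanity checks: the density integrates to one, and its mean matches samples pushed through f
grid = np.linspace(1e-4, 20.0, 200_001)
dx = grid[1] - grid[0]
density = np.exp(log_prob(grid))
total_mass = np.sum(density) * dx
mean_from_density = np.sum(grid * density) * dx
samples = f(np.random.default_rng(0).normal(size=200_000))
```

Sampling only needs the forward map $f$, while density evaluation only needs $f^{-1}$ and its Jacobian determinant; flow architectures are designed so that both are cheap.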

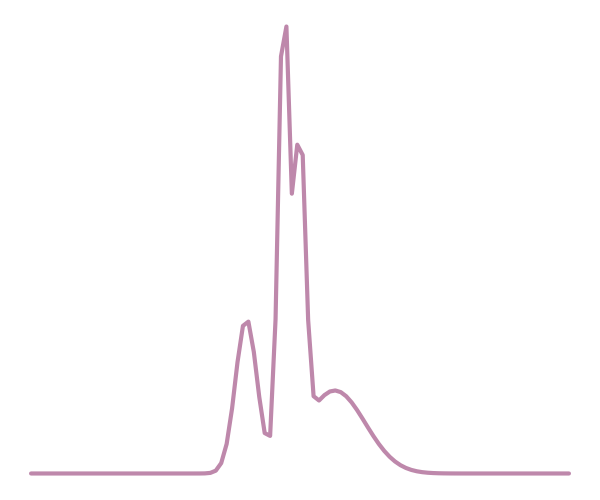

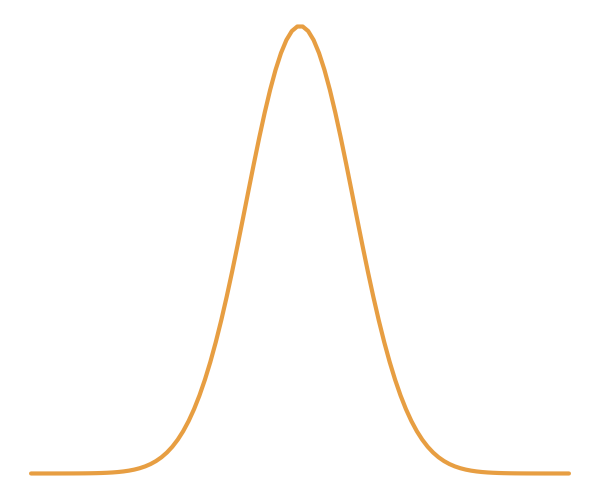

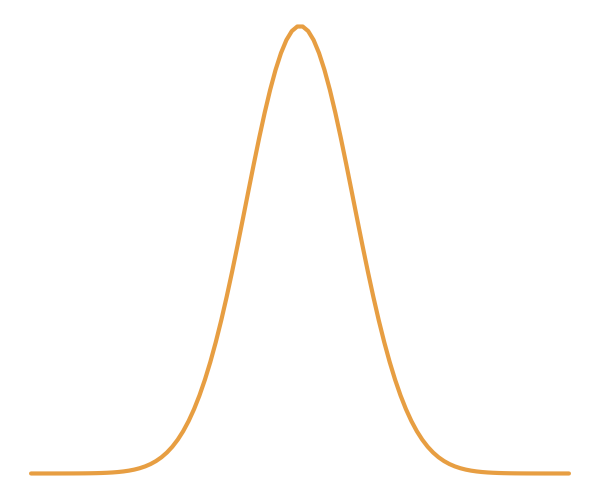

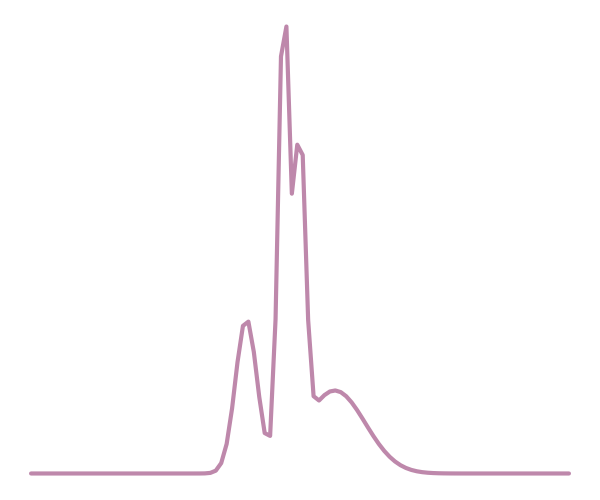

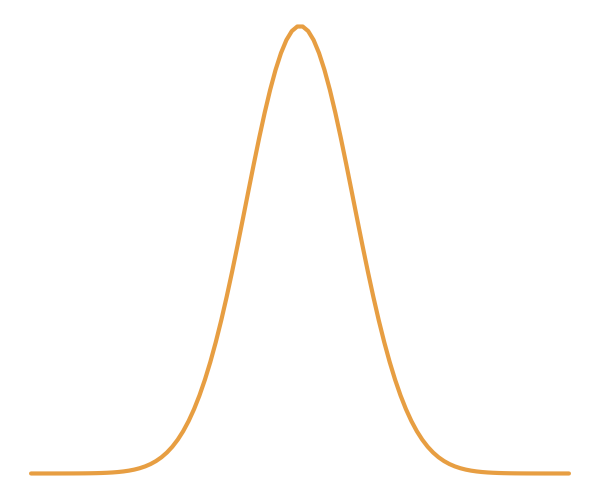

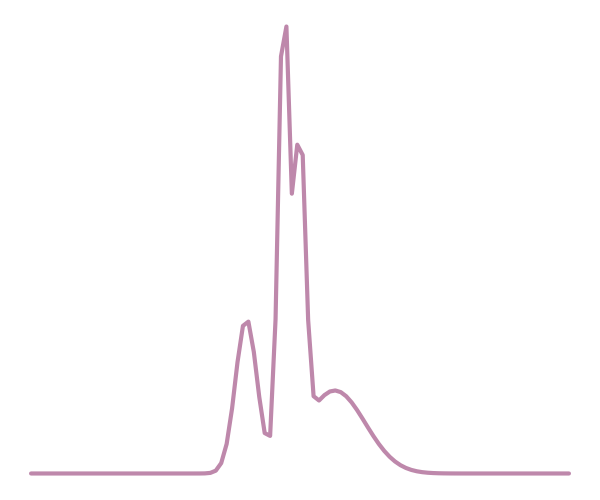

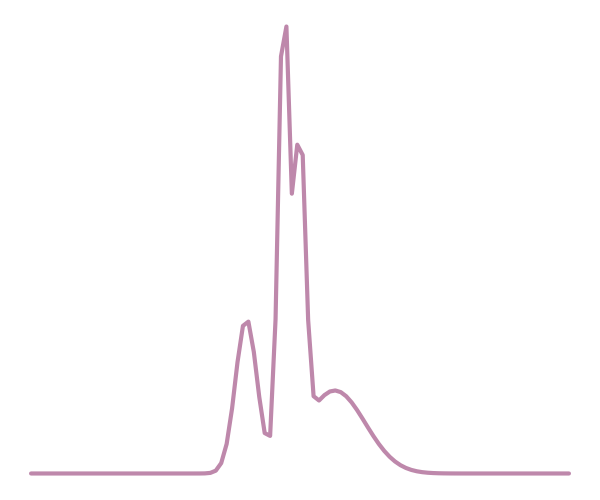

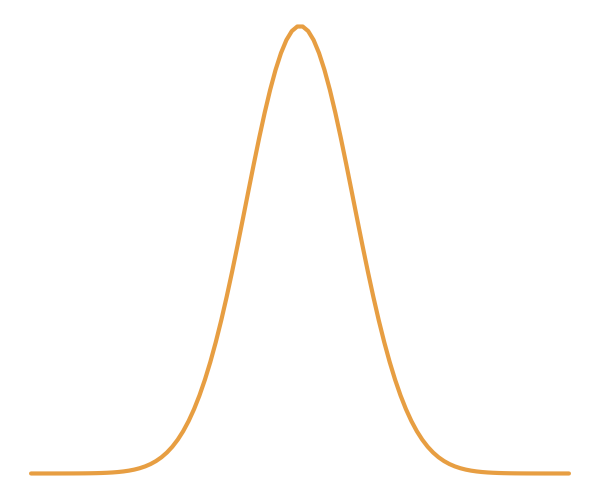

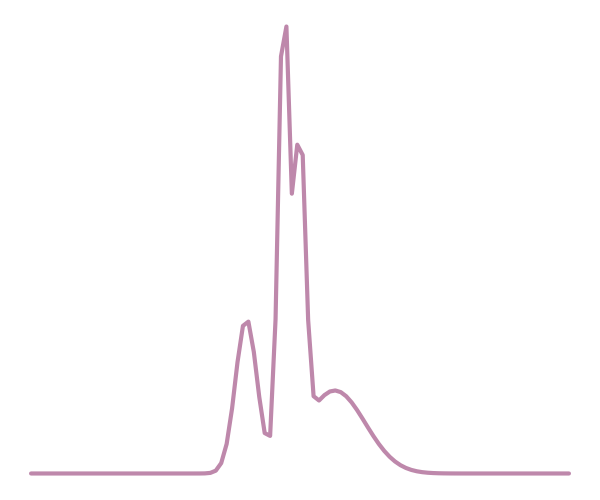

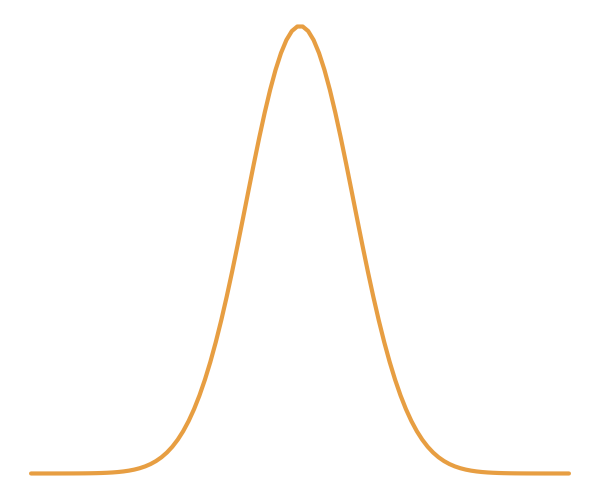

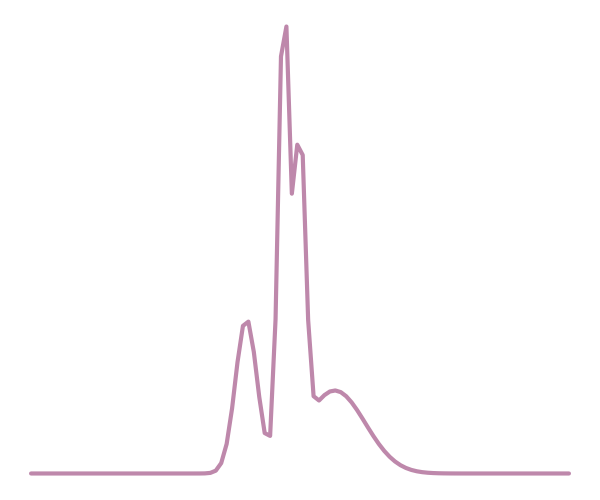

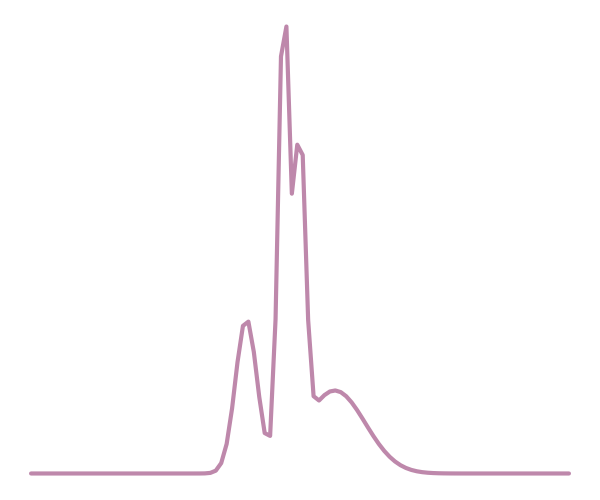

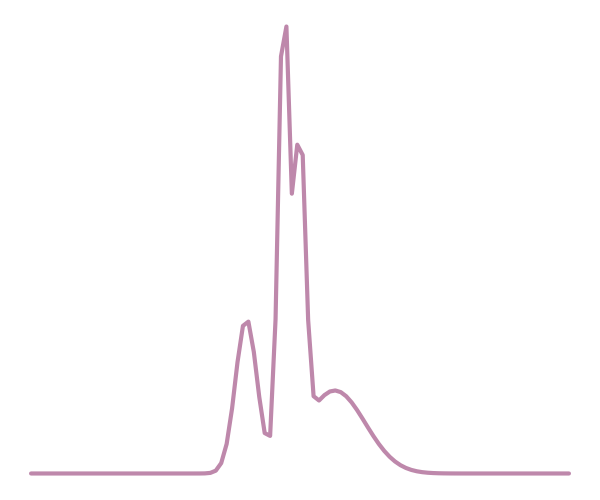

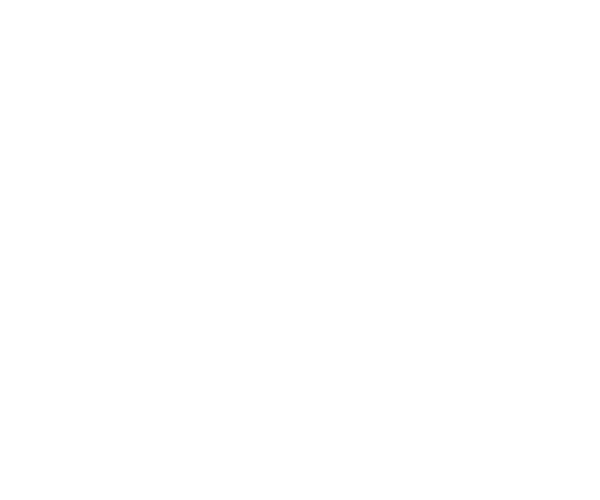

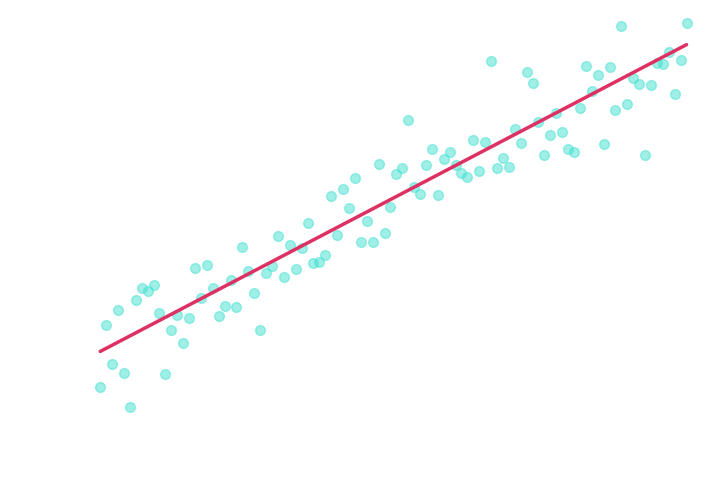

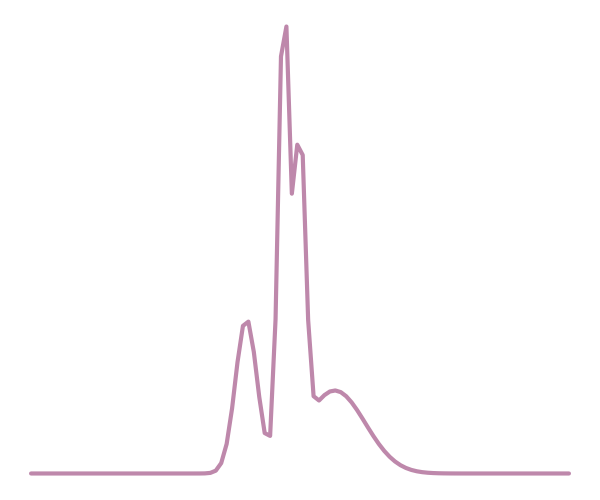

Truth vs. approximation.

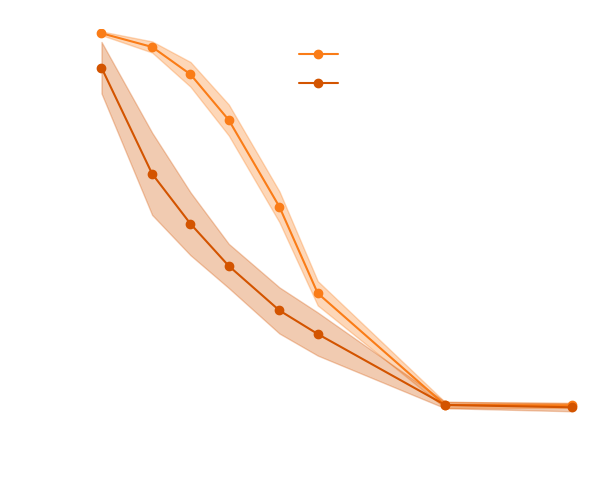

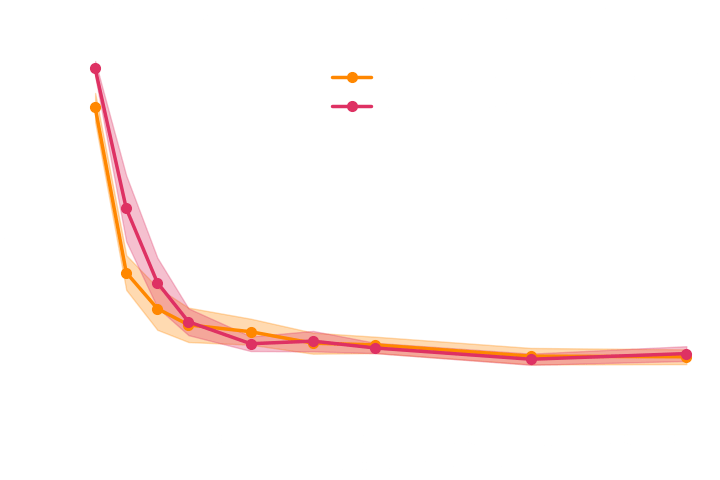

With only a few simulations, it is hard to approximate the posterior distribution.

→ We need more simulations.

BUT if we have a few simulations and the gradients of the log-density (also known as the score), then it is possible to get an idea of the shape of the distribution.

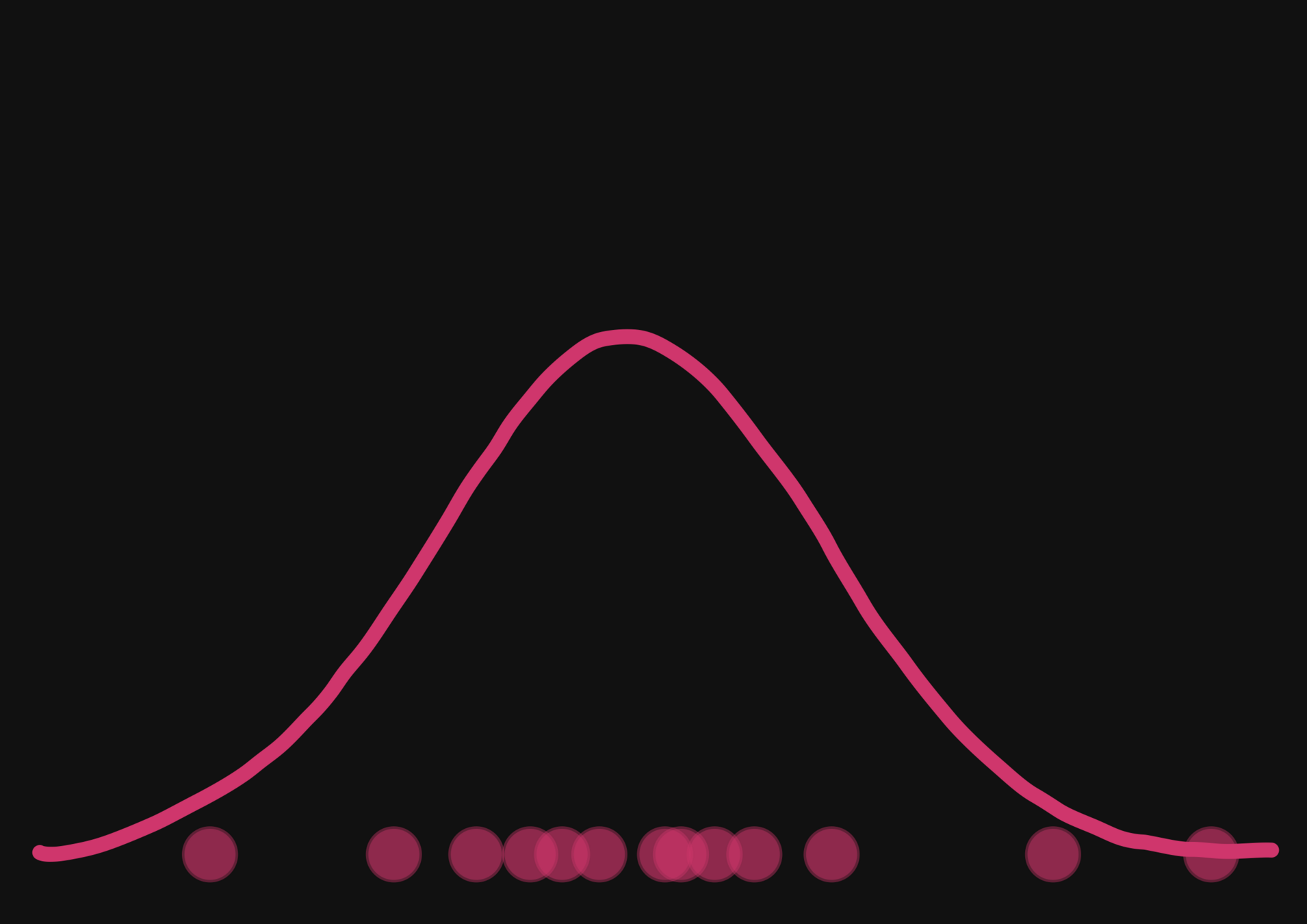

How can gradients help reduce the number of simulations?

How to train NFs with gradients?


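Normalizing flows are trained by minimizing the negative log-likelihood:

$$\mathcal{L}_{\mathrm{NLL}} = -\mathbb{E}_{p(\theta, x)}\left[\log p_\varphi(\theta \mid x)\right]$$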


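But to train the NF, we want to use both the simulations and the gradients from the simulator:

$$\mathcal{L} = -\mathbb{E}_{p(\theta, x, z)}\left[\log p_\varphi(\theta \mid x)\right] + \lambda\, \mathbb{E}_{p(\theta, x, z)}\left[\left\|\nabla_\theta \log p(\theta \mid x, z) - \nabla_\theta \log p_\varphi(\theta \mid x)\right\|_2^2\right]$$

where $z$ denotes the latent variables of the simulator and $\lambda$ weights the gradient term.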
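The two-term loss can be evaluated on a hypothetical toy simulator $x = \theta + z + \sigma\epsilon$ with latent variable $z$, for which the score $\nabla_\theta \log p(\theta \mid x, z)$ is available in closed form. A Gaussian $q(\theta \mid x) = \mathcal{N}(w x, s^2)$ stands in for the normalizing flow, and the weight $\lambda = 0.1$ is arbitrary. Since the simulator score averages (over $z$) to the true posterior score, both terms are minimized by the true posterior, so perturbing its parameters should increase the loss.

```python
import numpy as np

rng = np.random.default_rng(2)
sigma, lam, N = 0.5, 0.1, 100_000  # hypothetical noise level, loss weight, batch size

# Hypothetical simulator with a latent variable z: x = theta + z + noise
theta = rng.normal(size=N)
z = rng.normal(size=N)
x = theta + z + sigma * rng.normal(size=N)

# Score of p(theta | x, z), as a differentiable simulator would provide it
sim_score = (x - z - theta) / sigma**2 - theta

def loss(w, s):
    # Gaussian stand-in for the flow: q(theta | x) = N(w * x, s^2)
    nll = np.mean(0.5 * ((theta - w * x) / s) ** 2 + np.log(s) + 0.5 * np.log(2 * np.pi))
    q_score = -(theta - w * x) / s**2              # d/dtheta log q(theta | x)
    score_term = np.mean((sim_score - q_score) ** 2)
    return nll + lam * score_term

# Exact posterior for this toy model: p(theta | x) = N(x / (2 + sigma^2), (1 + sigma^2) / (2 + sigma^2))
w_opt, s_opt = 1 / (2 + sigma**2), np.sqrt((1 + sigma**2) / (2 + sigma**2))
```

Note that the score term never reaches zero: the per-simulation score fluctuates around the marginal posterior score, which is exactly the "noisy gradients" issue discussed later for weak lensing.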


Problem: the gradients of current NFs lack expressivity.


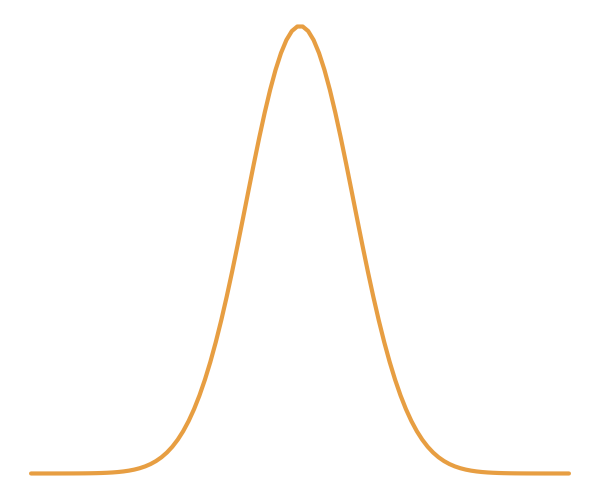

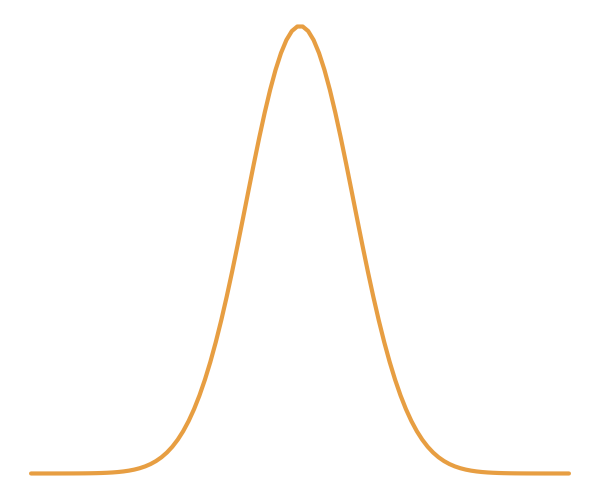

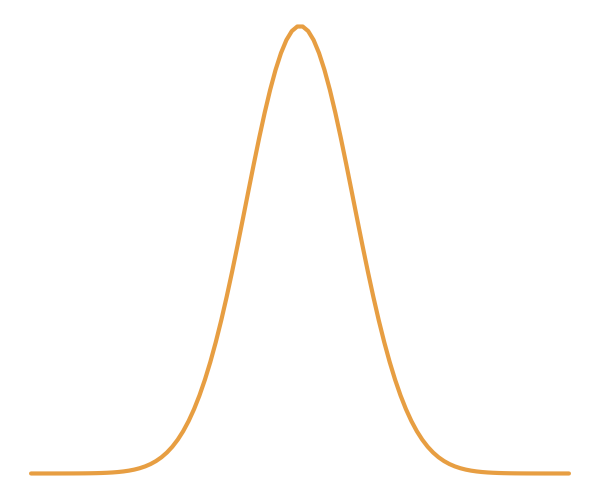

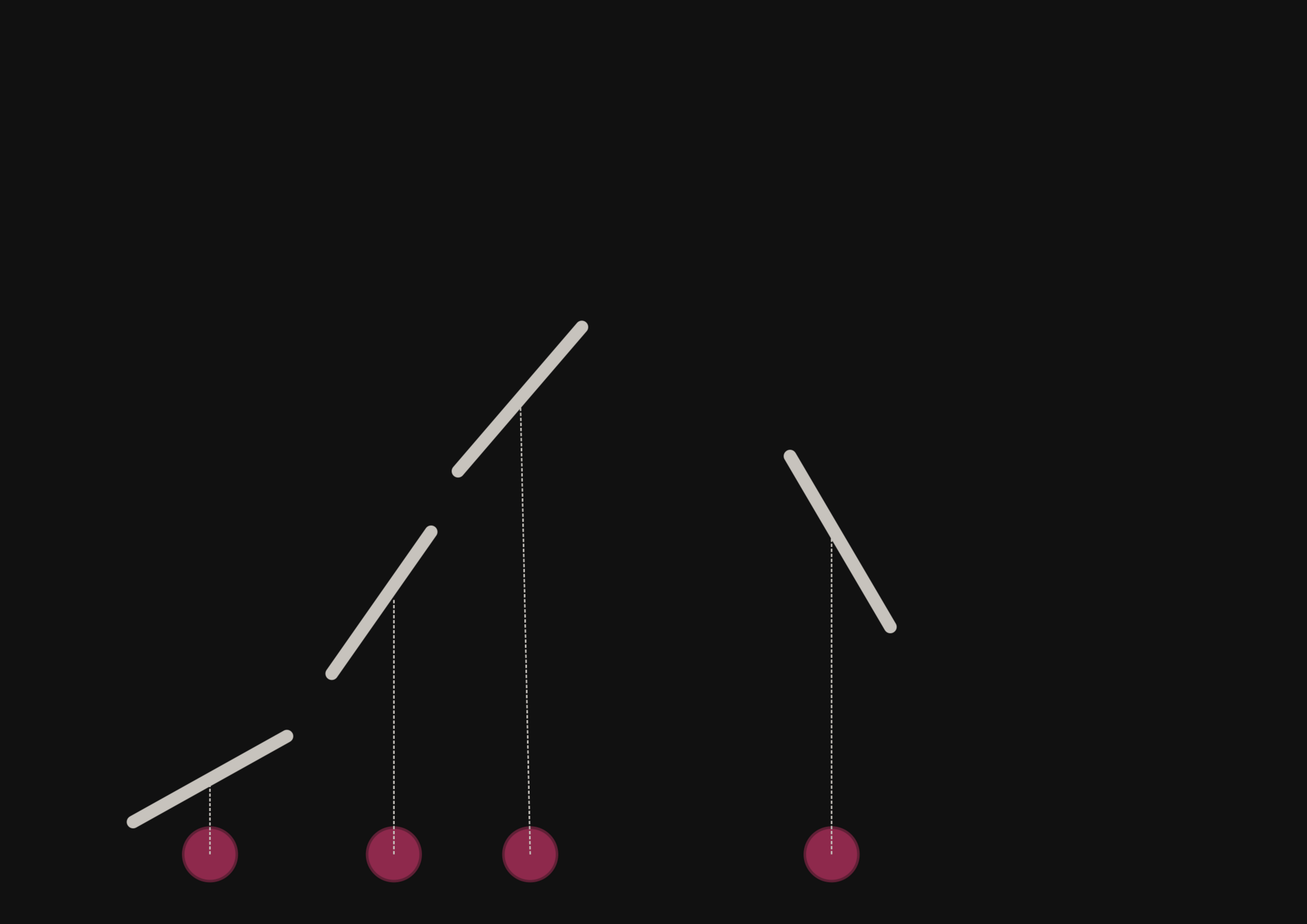

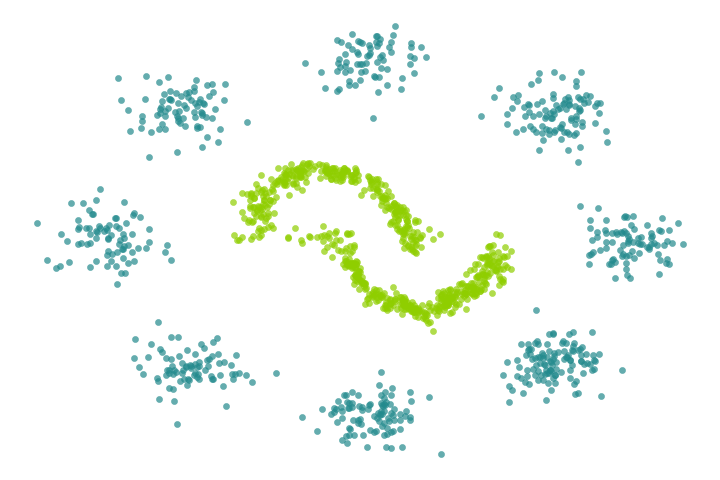

Benchmark Metric

We use the Classifier 2-Sample Test (C2ST) metric: a classifier is trained to distinguish samples of distribution 1 from samples of distribution 2, and its accuracy on held-out samples is the score.

- C2ST = 0.5 (i.e. “Impossible to differentiate 👍🏼”)

- C2ST = 1 (i.e. “Too easy to differentiate 👎🏻”)

Requirement: the true distribution is needed.
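A minimal C2ST sketch: a classifier is trained on half of the pooled samples and its accuracy on the other half is returned. A logistic regression on linear and quadratic features, trained by plain gradient descent, stands in here for the neural-network classifier usually used; this choice is an assumption for illustration, not the benchmark's exact setup.

```python
import numpy as np

def c2st(samples_p, samples_q, n_steps=2000, lr=0.1, seed=0):
    """Classifier two-sample test with a logistic-regression classifier.

    samples_p, samples_q: arrays of shape (n, d). Returns held-out accuracy:
    about 0.5 if the samples are indistinguishable, close to 1.0 if easy to separate.
    """
    rng = np.random.default_rng(seed)
    X = np.concatenate([samples_p, samples_q])
    y = np.concatenate([np.zeros(len(samples_p)), np.ones(len(samples_q))])
    # Quadratic features let the linear classifier also detect differences in spread
    X = np.concatenate([X, X**2], axis=1)
    X = (X - X.mean(0)) / X.std(0)
    idx = rng.permutation(len(y))
    train, test = idx[: len(y) // 2], idx[len(y) // 2 :]
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(n_steps):
        # Gradient descent on the binary cross-entropy loss
        prob = 1.0 / (1.0 + np.exp(-(X[train] @ w + b)))
        w -= lr * X[train].T @ (prob - y[train]) / len(train)
        b -= lr * np.mean(prob - y[train])
    pred = (X[test] @ w + b) > 0
    return np.mean(pred == y[test])
```

With a more flexible classifier the test is more sensitive, but the interpretation of the score stays the same.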

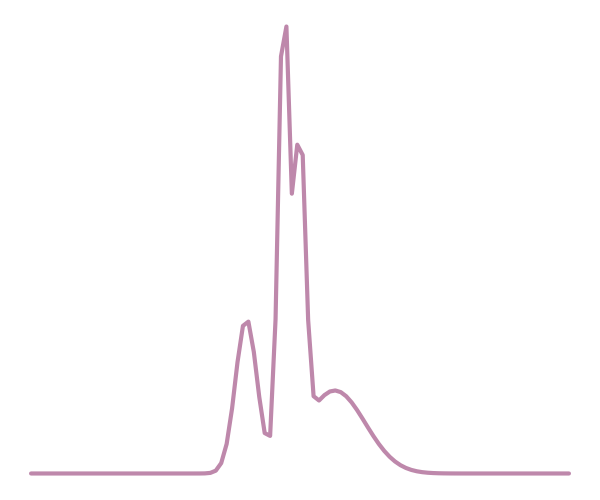

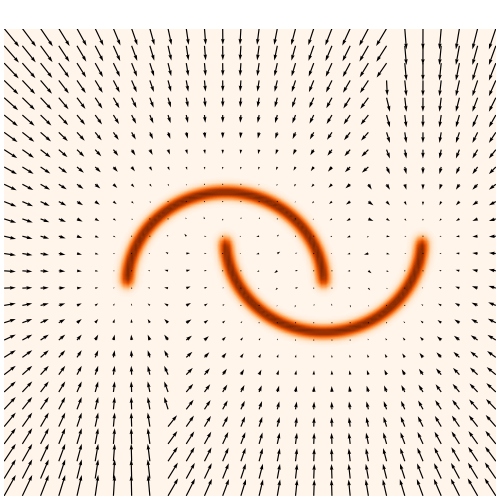

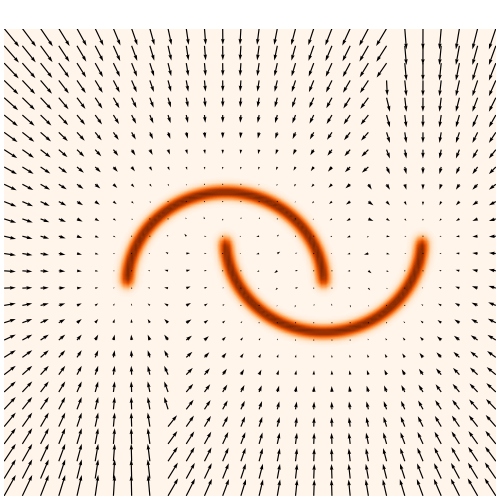

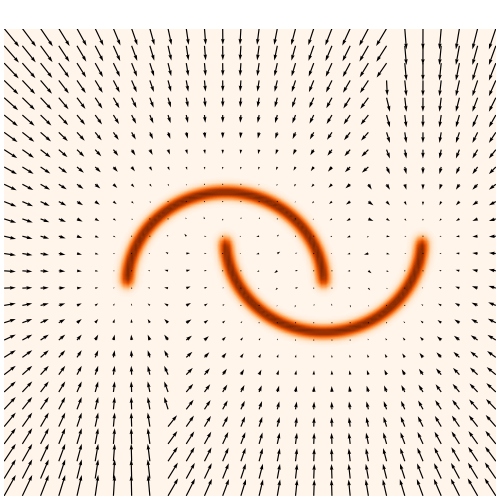

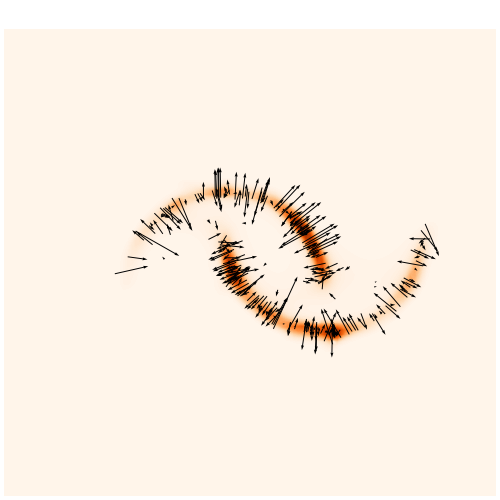

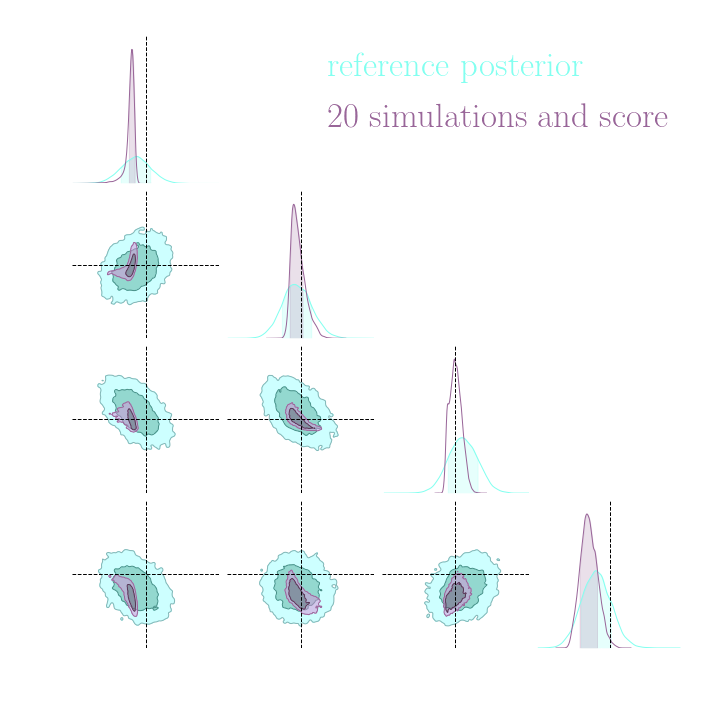

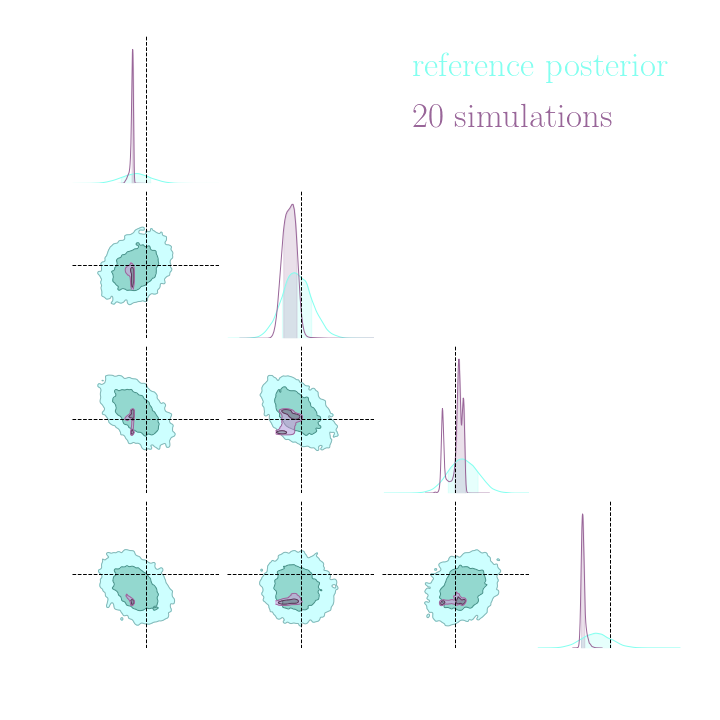

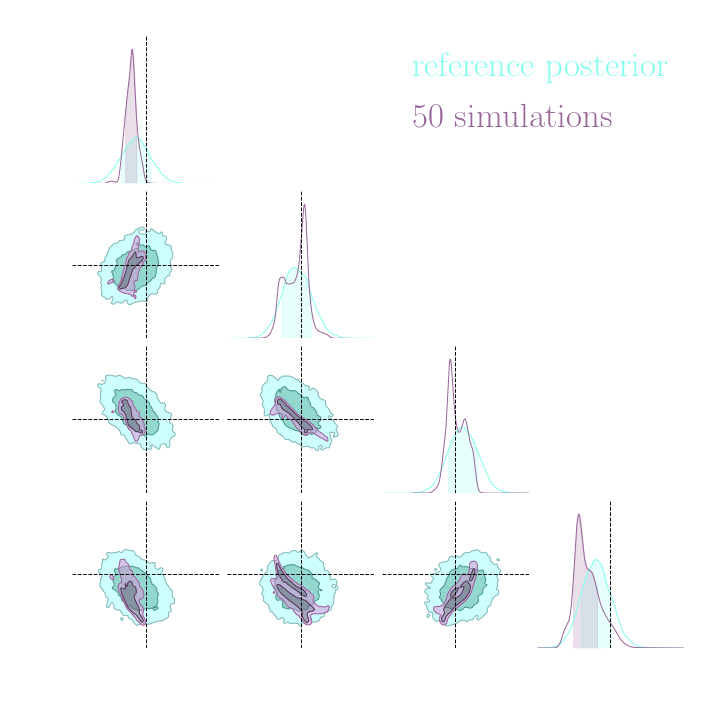

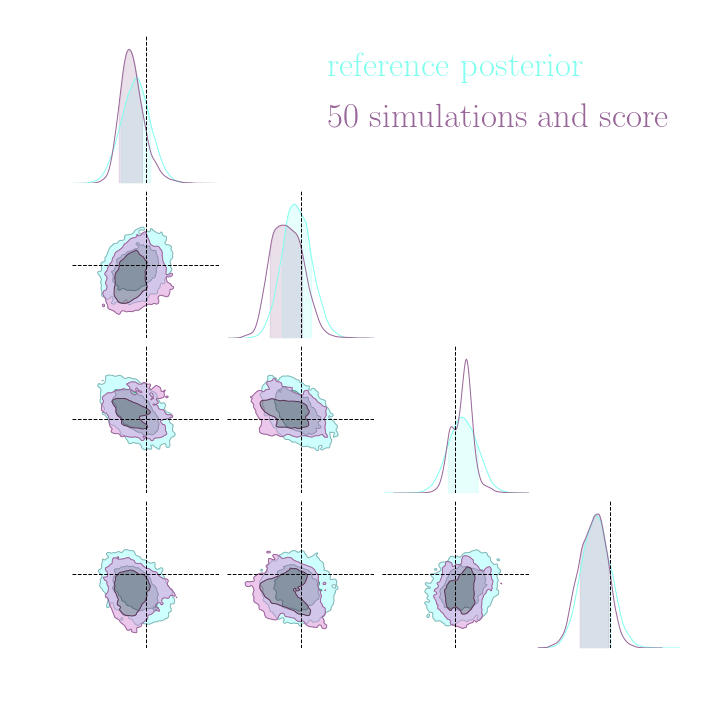

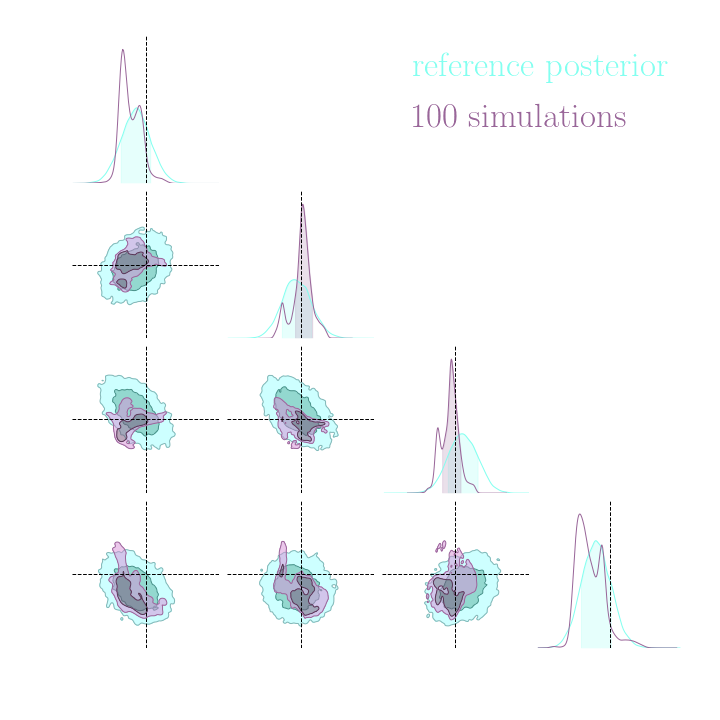

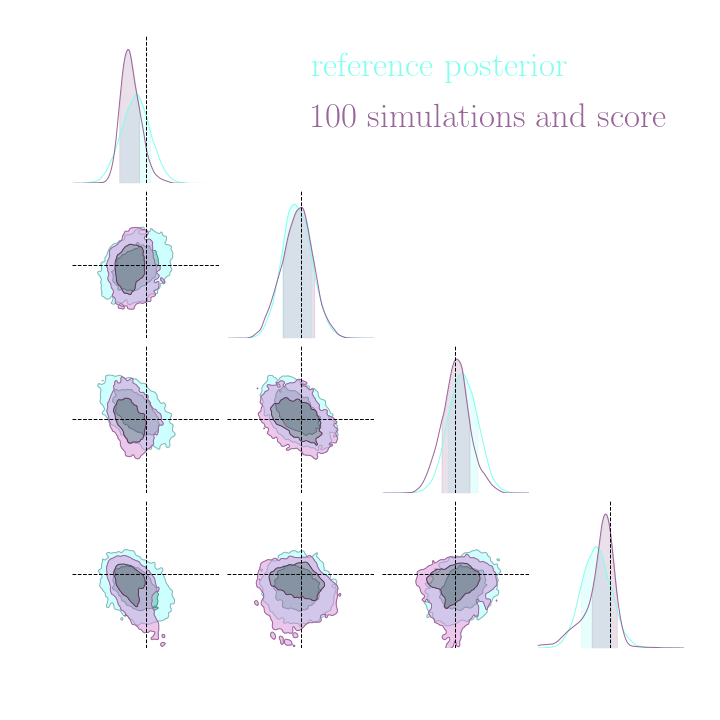

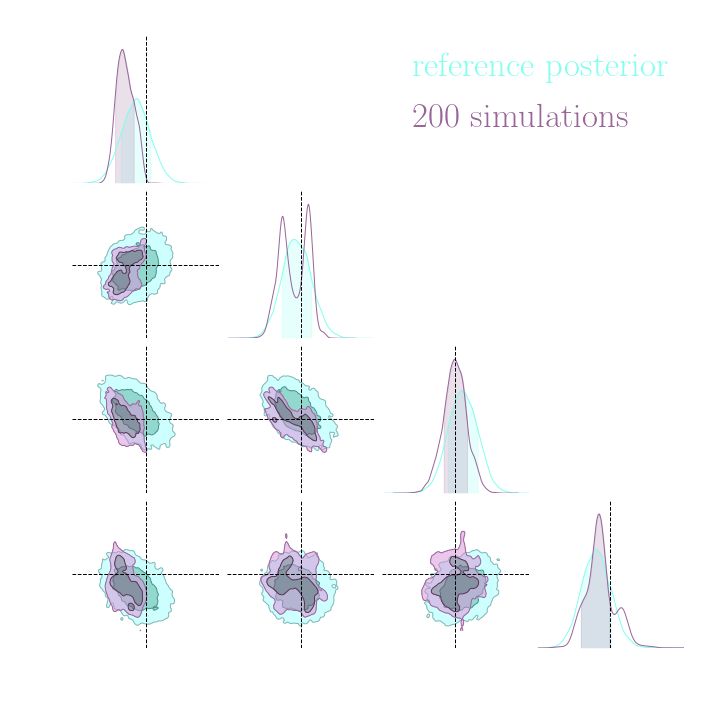

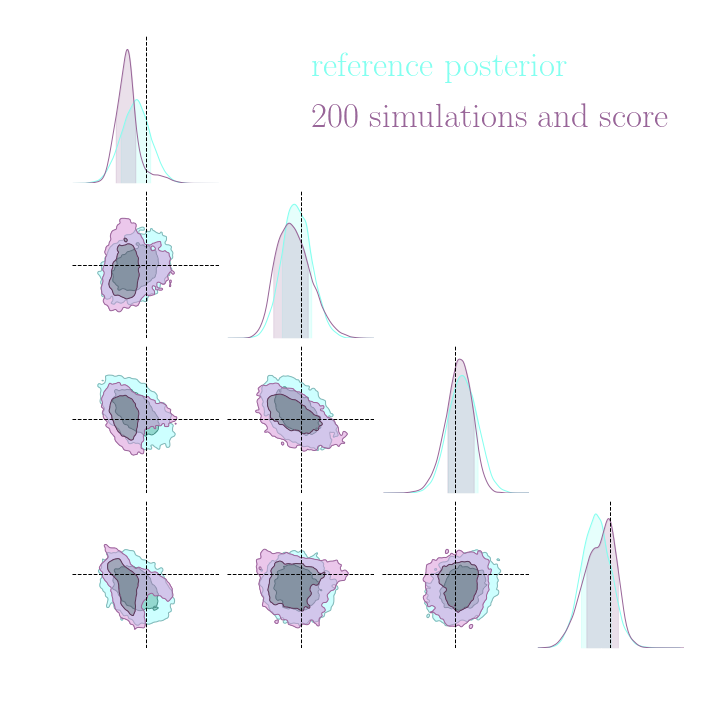

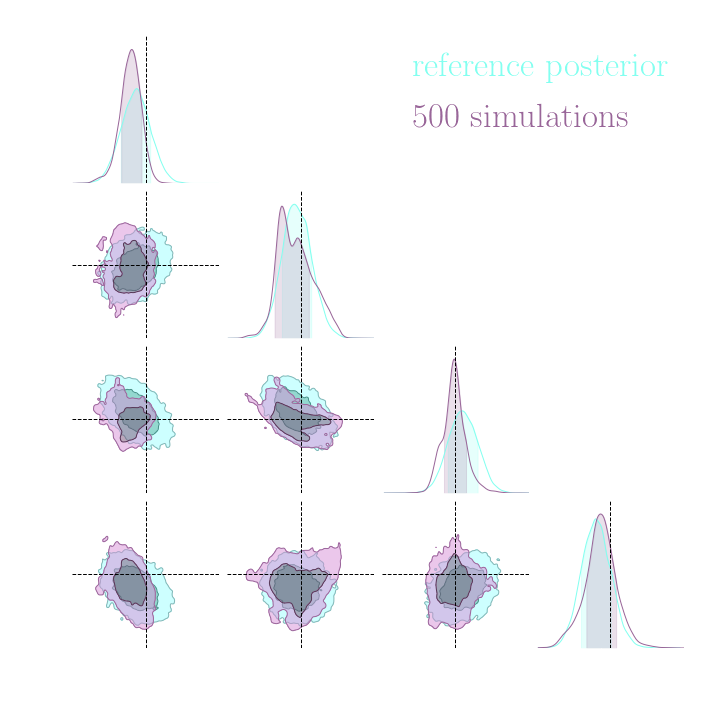

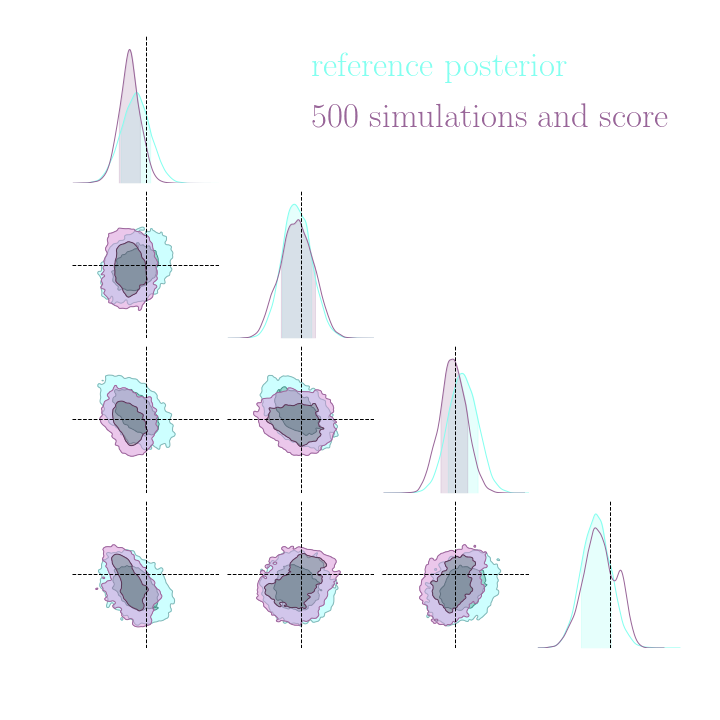

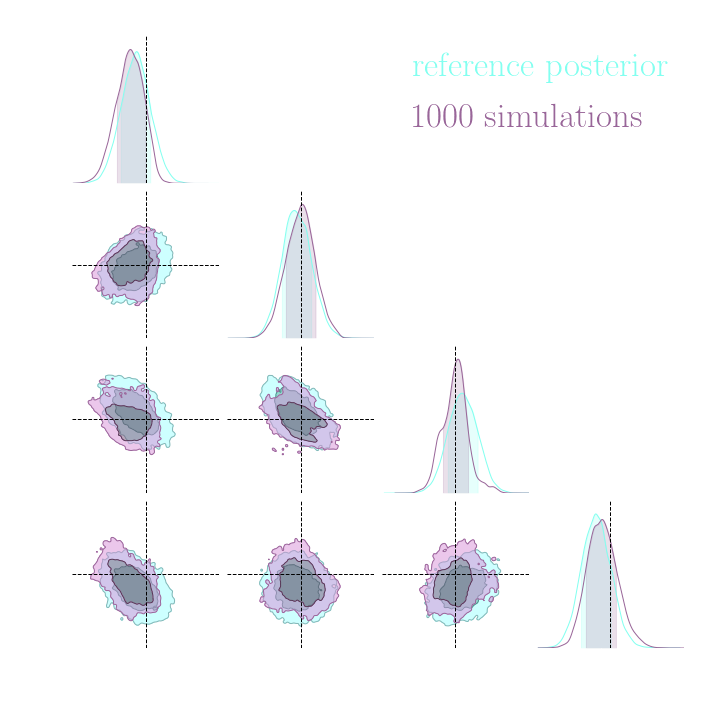

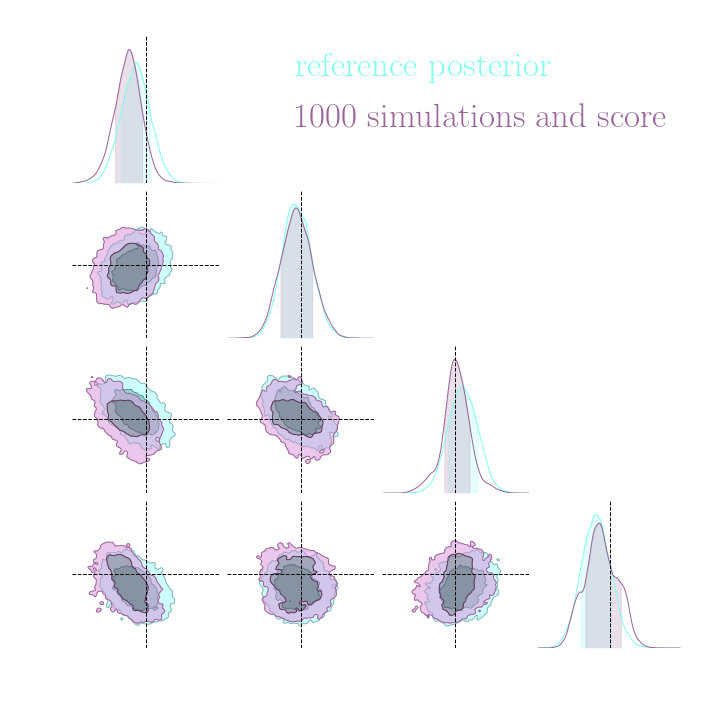

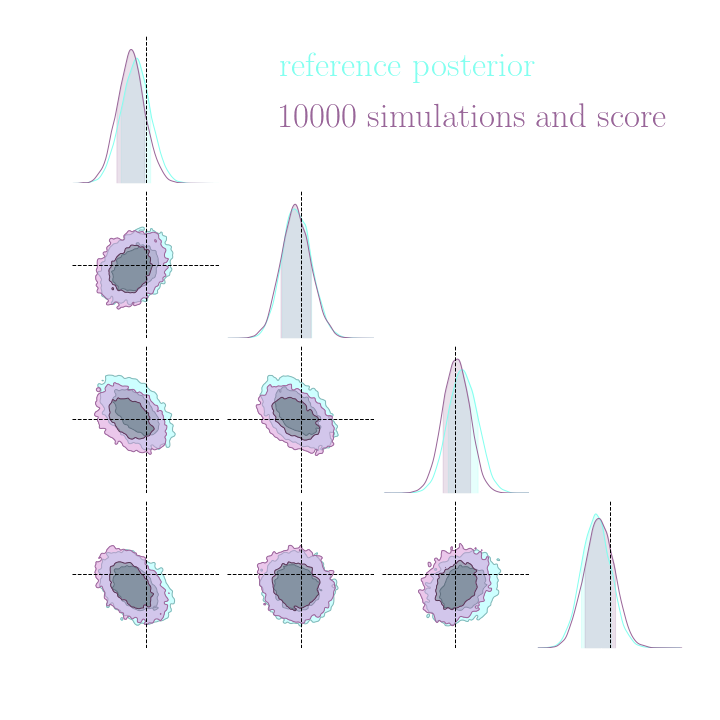

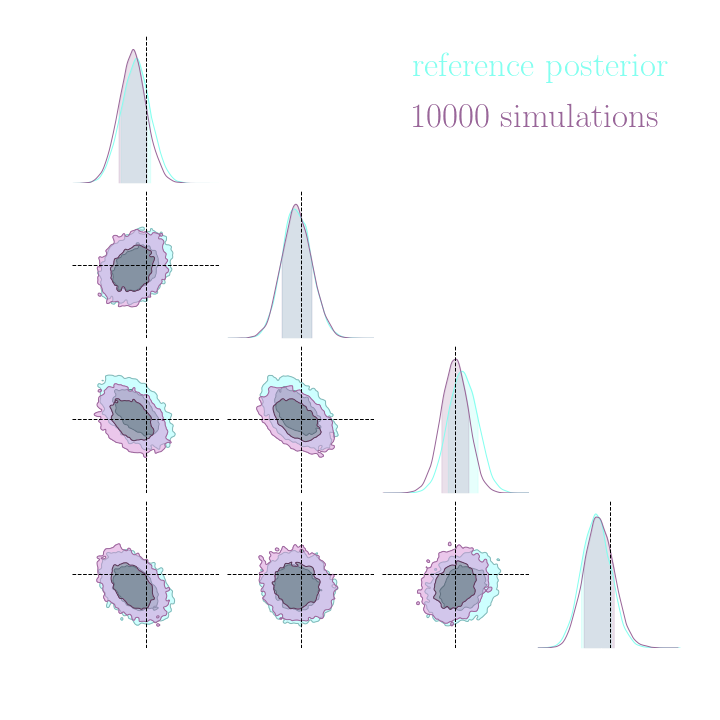

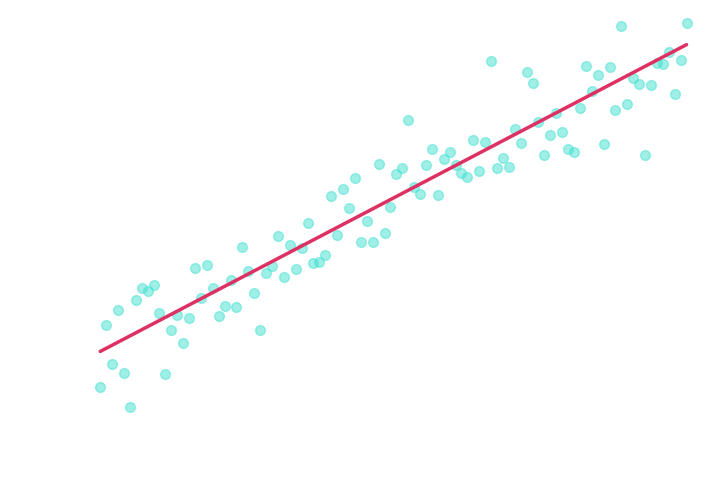

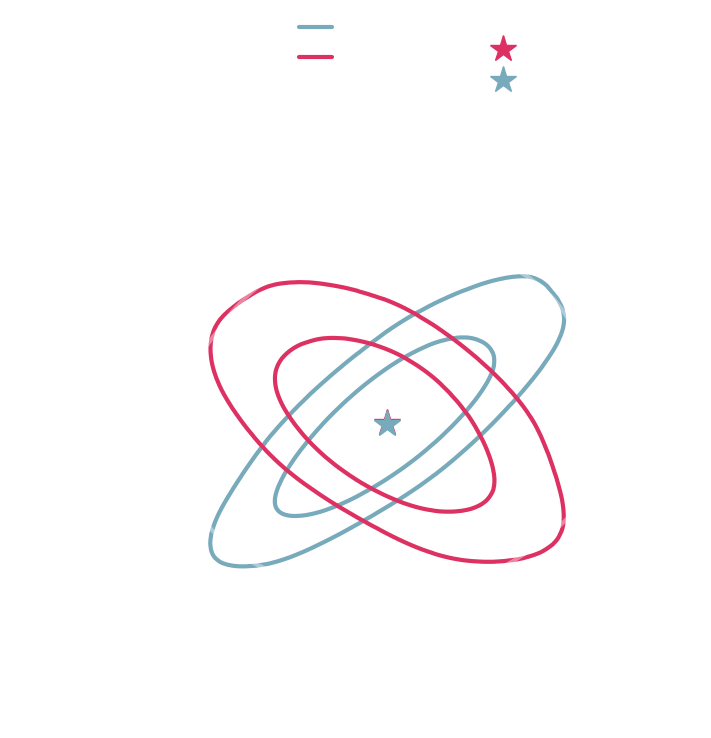

Results on a toy model

→ On a toy Lotka-Volterra model, the gradients help constrain the shape of the distribution.

Without gradients vs. with gradients.


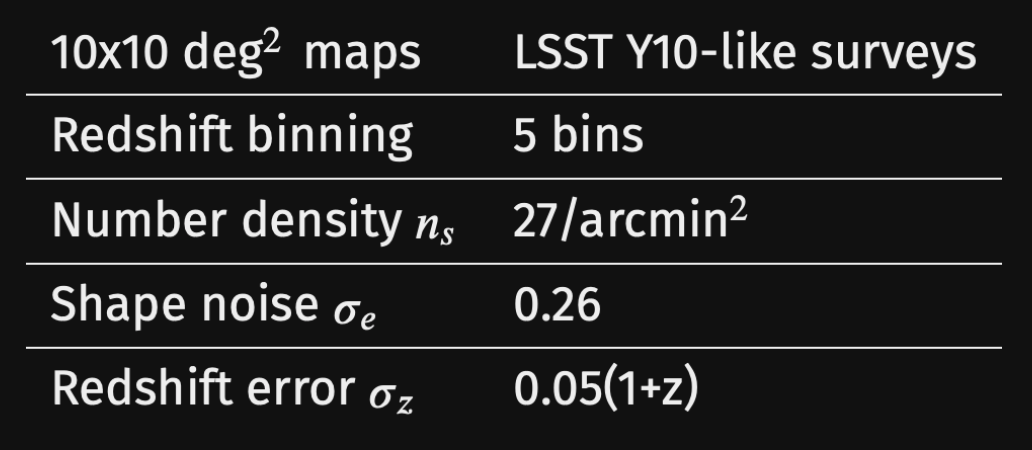

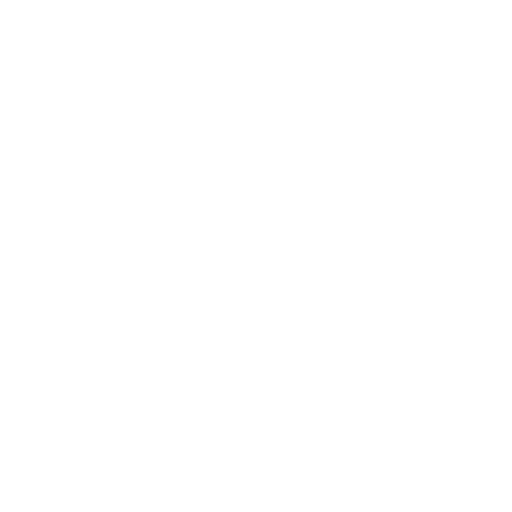

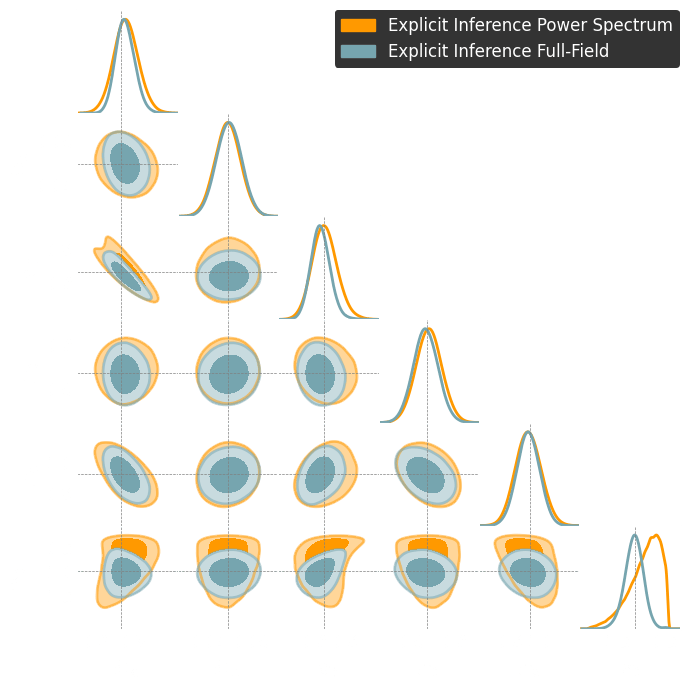

Simulation-Based Inference Benchmark for LSST Weak Lensing Cosmology

Justine Zeghal, Denise Lanzieri, François Lanusse, Alexandre Boucaud, Gilles Louppe, Eric Aubourg, Adrian E. Bayer

and The LSST Dark Energy Science Collaboration (LSST DESC)

-

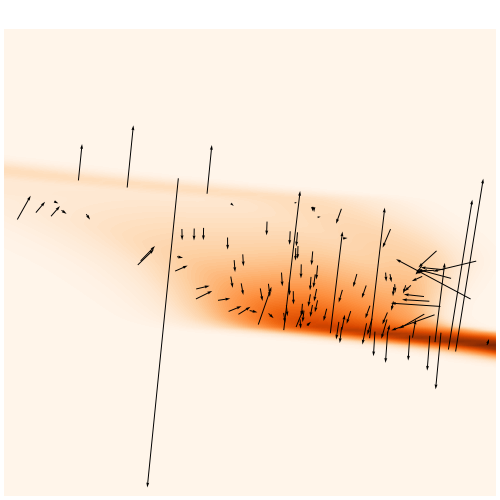

do gradients help implicit inference methods?

In the case of weak lensing full-field analysis,

-

which inference method requires the fewest simulations?

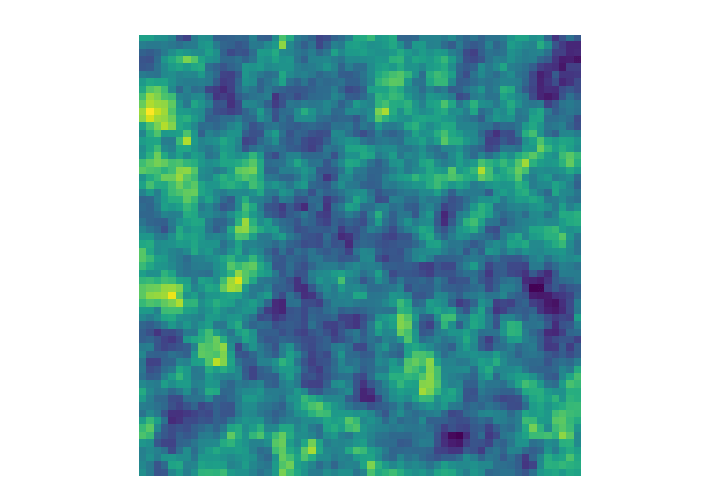

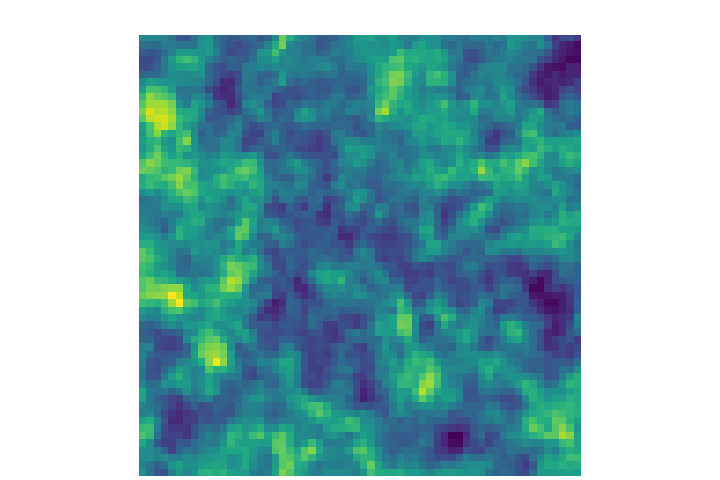

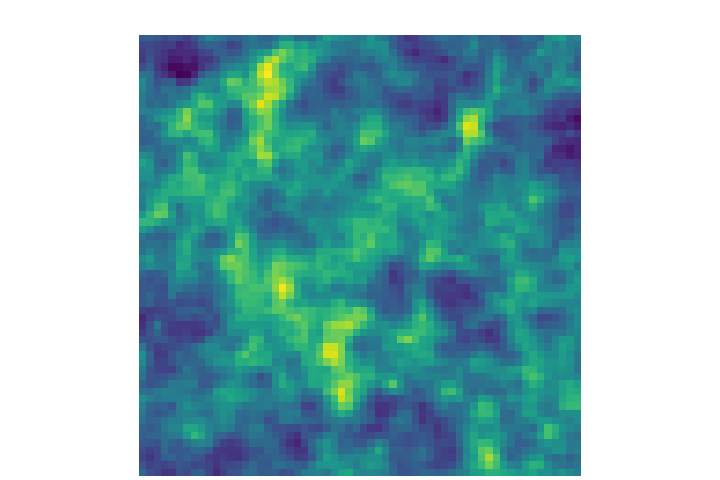

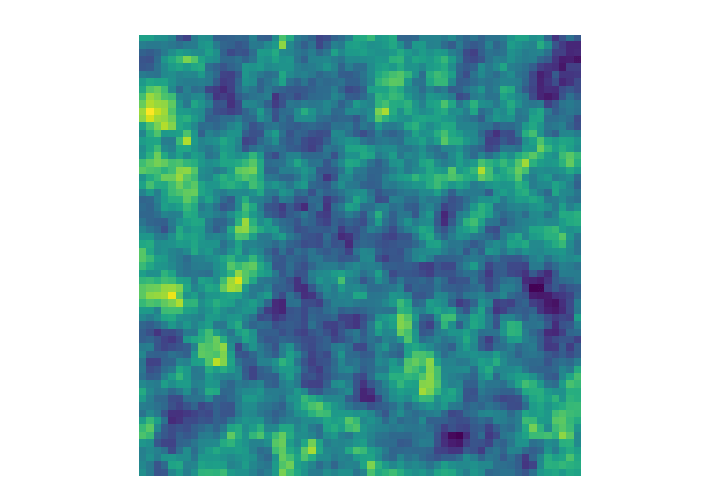

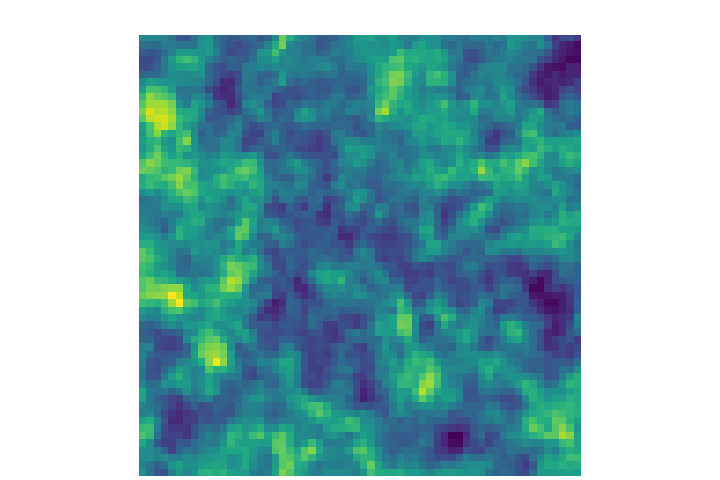

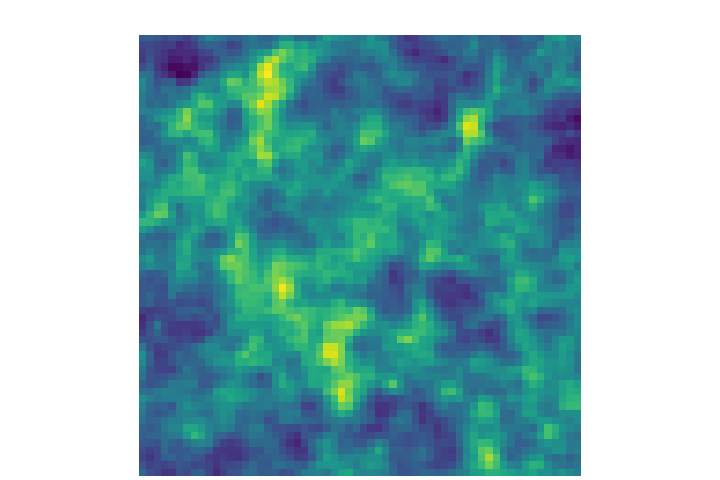

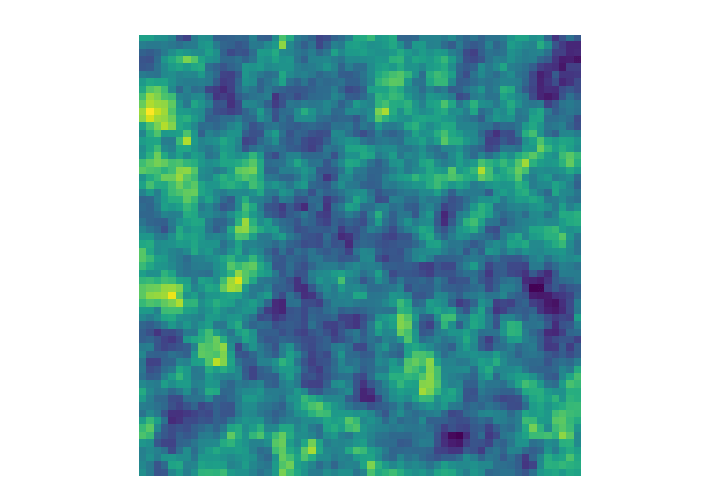

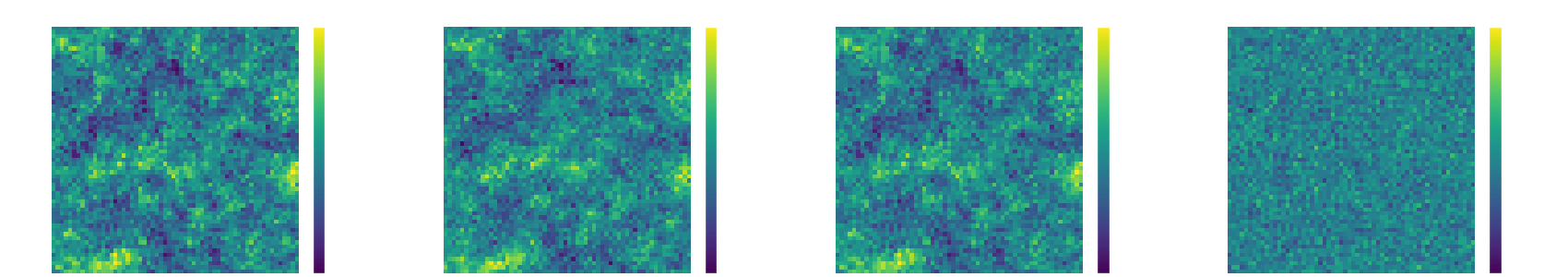

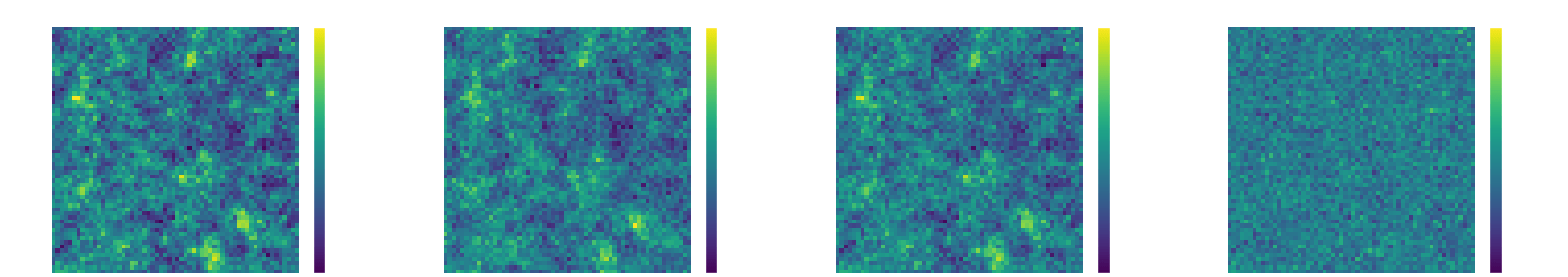

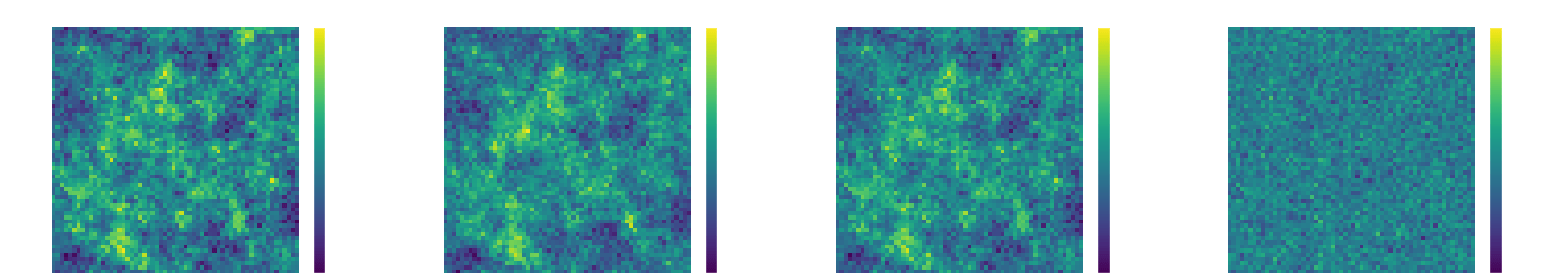

We developed a fast and differentiable (JAX) log-normal mass maps simulator.

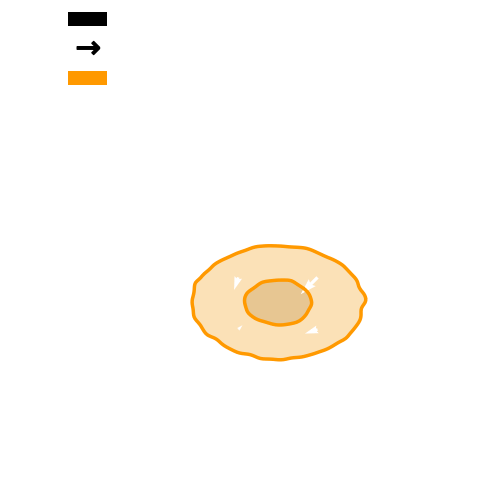

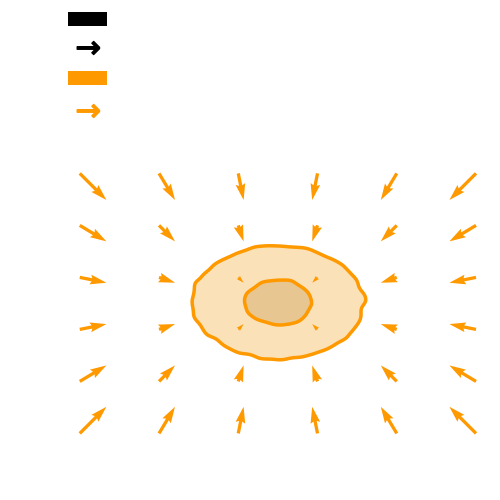

For our benchmark: a Differentiable Mass Maps Simulator
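The idea behind a log-normal mass map can be sketched in a few lines: draw a Gaussian random field with a power-law power spectrum, then exponentiate it so the field becomes skewed and bounded from below. This NumPy sketch is hypothetical (the power-law slope, field standard deviation, and shift are placeholders, not values derived from cosmology) and is not the benchmark simulator; writing the same steps with `jax.numpy` would make the map differentiable with respect to its inputs.

```python
import numpy as np

def lognormal_map(n_pix=64, sigma_g=0.4, slope=-2.0, shift=1.0, seed=0):
    """Toy log-normal convergence-like map (hypothetical parameters)."""
    rng = np.random.default_rng(seed)
    # 1) Gaussian random field: filter white noise in Fourier space by sqrt(P(k))
    k1d = np.fft.fftfreq(n_pix)
    k = np.sqrt(k1d[:, None] ** 2 + k1d[None, :] ** 2)
    k[0, 0] = np.inf                  # zero power at k = 0, so the field has zero mean
    power = k ** slope                # power-law spectrum P(k) ~ k^slope
    white = np.fft.fft2(rng.normal(size=(n_pix, n_pix)))
    g = np.real(np.fft.ifft2(white * np.sqrt(power)))
    g *= sigma_g / g.std()            # rescale to the target Gaussian standard deviation
    # 2) Log-normal transform: zero mean in expectation, bounded below by -shift
    return shift * (np.exp(g - sigma_g**2 / 2) - 1.0)

kappa = lognormal_map()
```

In a real simulator, the Gaussian field's power spectrum and the shift would both be computed from the cosmological parameters, which is what makes gradients with respect to those parameters meaningful.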

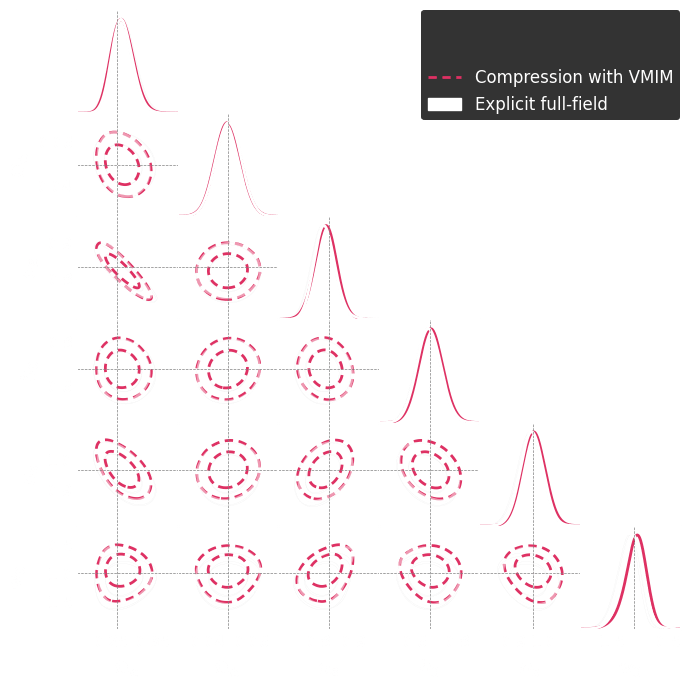

Benchmark metric

To use the C2ST, we need the true posterior distribution.

→ We use the explicit full-field posterior.

Why?

- Explicit inference theoretically and asymptotically converges to the truth.

- Explicit inference and implicit inference yield comparable constraints (C2ST = 0.6!).

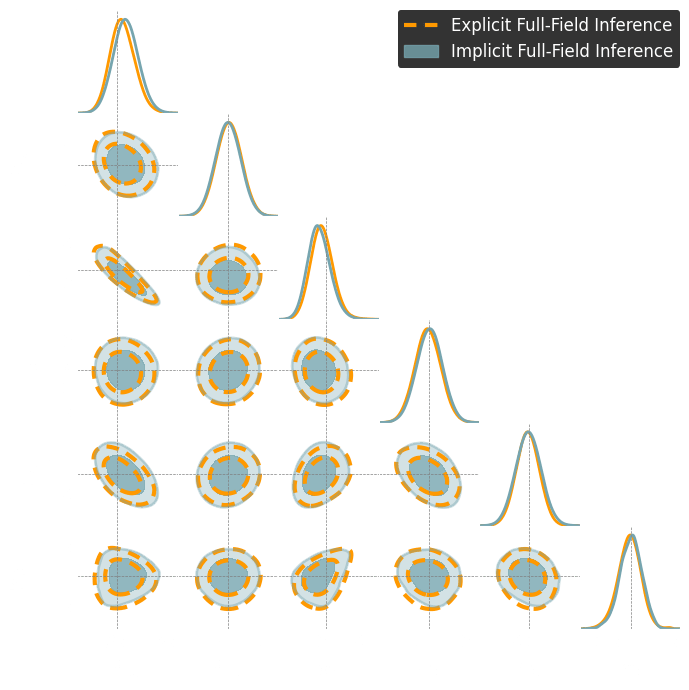

Do gradients help implicit inference methods?

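Training the NF with simulations and gradients:

Loss = $-\mathbb{E}_{p(\theta, x, z)}\left[\log p_\varphi(\theta \mid x)\right] + \lambda\, \mathbb{E}_{p(\theta, x, z)}\left[\left\|\nabla_\theta \log p(\theta \mid x, z) - \nabla_\theta \log p_\varphi(\theta \mid x)\right\|_2^2\right]$

where $z$ denotes the latent variables of the simulator.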

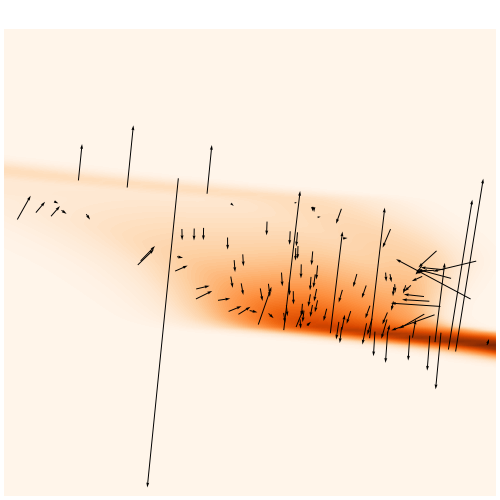


→ For this particular problem, the gradients from the simulator are too noisy to help.


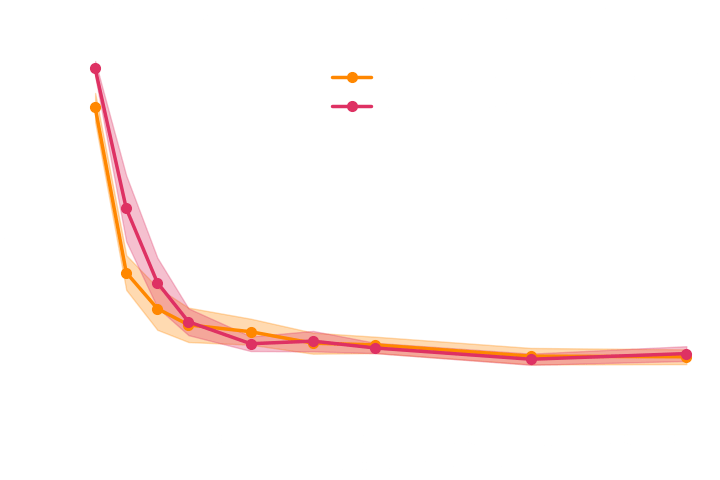

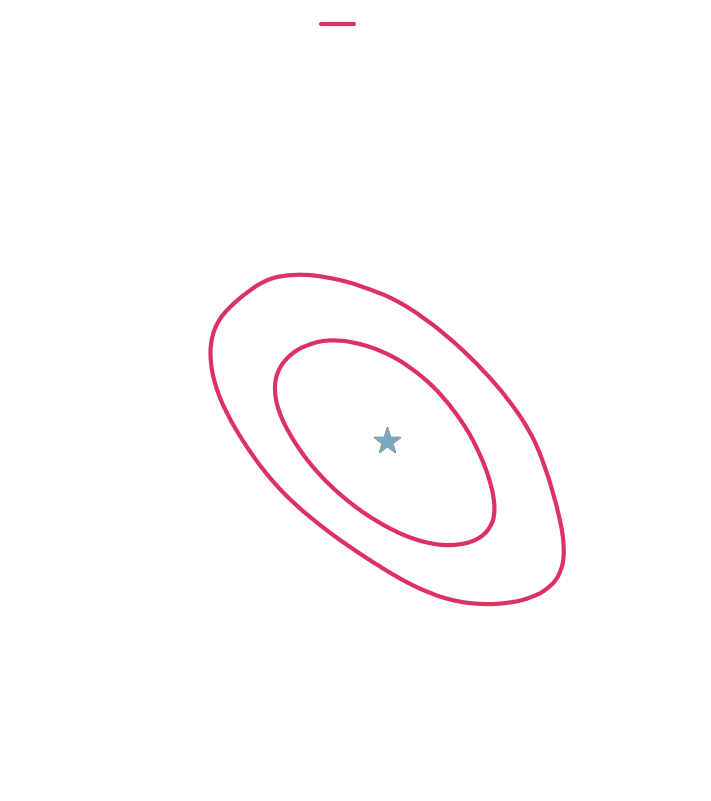

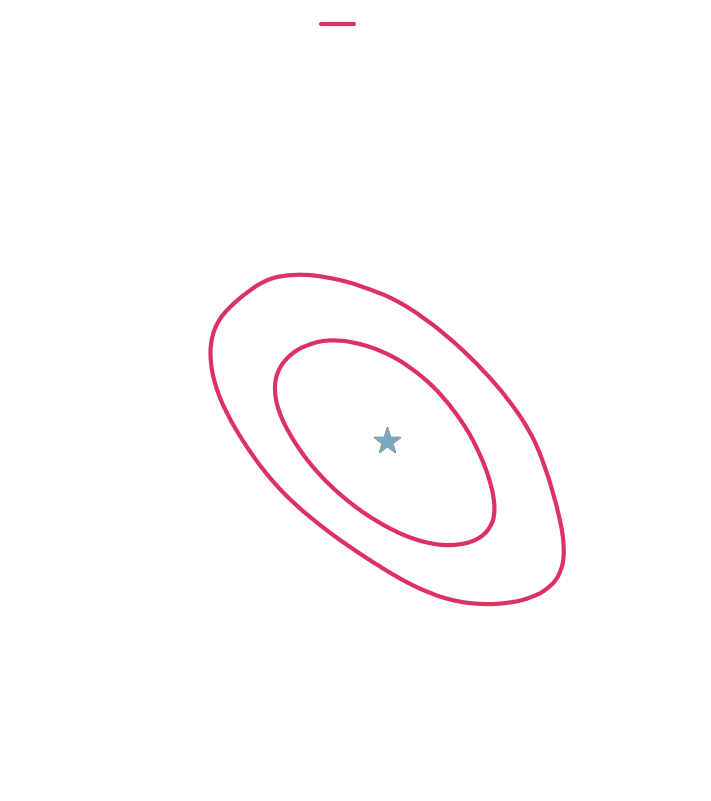


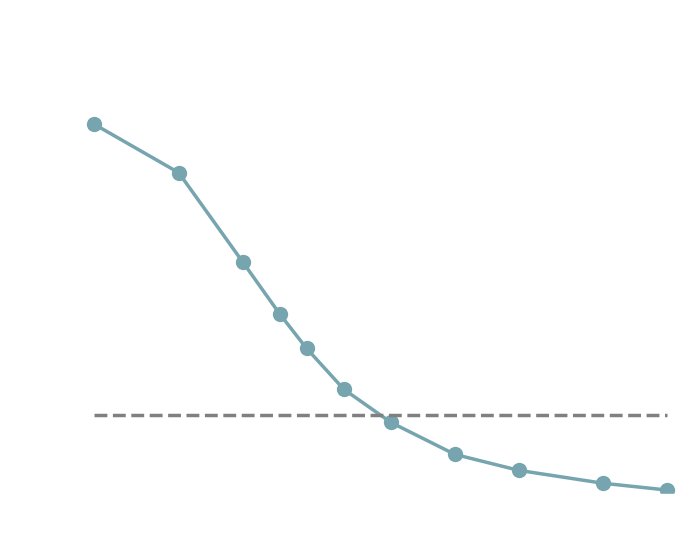

→ Implicit inference requires 1500 simulations.

→ Even with perfect gradients, the gain is not significant.

→ The posterior is a simple distribution: the simulations alone seem sufficient to locate it.

In the case of weak lensing full-field analysis:

Do gradients help implicit inference methods?

→ No, they do not help reduce the number of simulations, because the gradients from the simulator are too noisy.

→ Even with marginal gradients, the gain is not significant.

Which inference method requires the fewest simulations?

→ For now, we know that implicit inference requires 1500 simulations.

What about explicit inference?

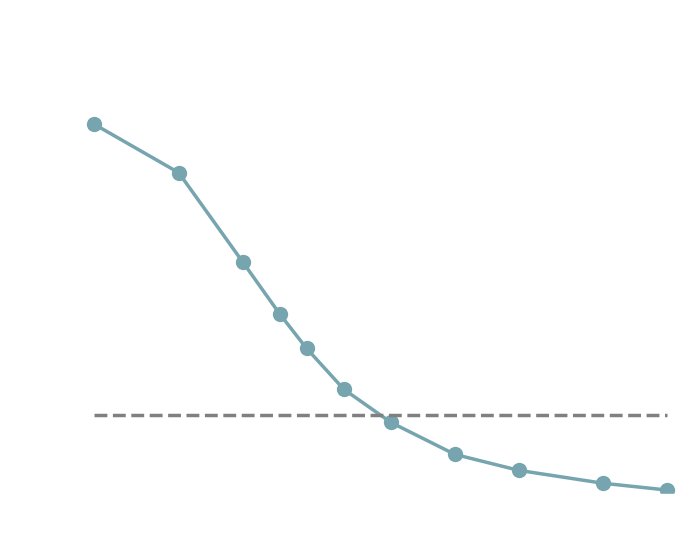

→ Explicit inference requires 100 times more simulations than implicit inference.


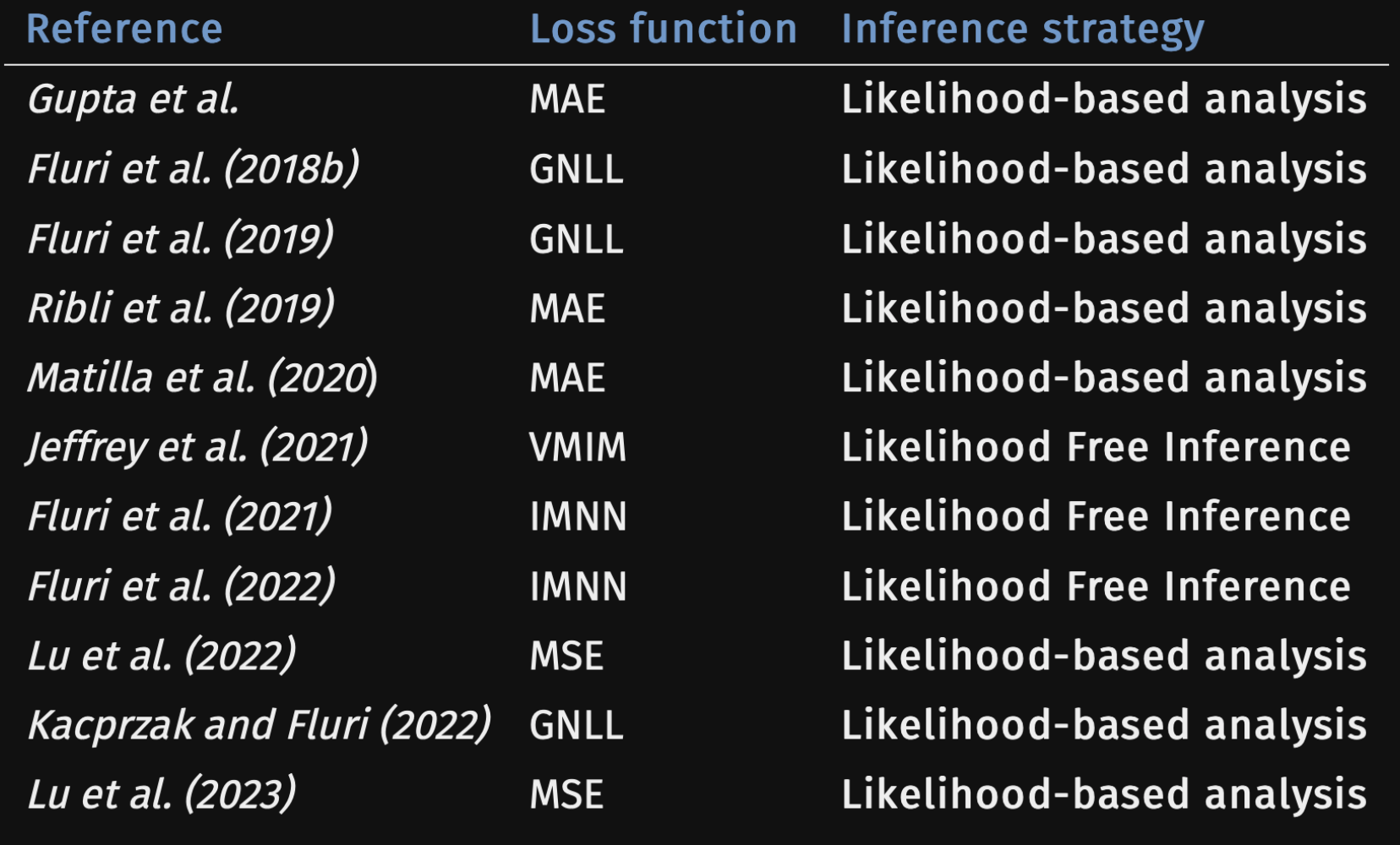

Optimal Neural Summarisation for Full-Field Weak Lensing Cosmological Implicit Inference

Denise Lanzieri*, Justine Zeghal*, T. Lucas Makinen, François Lanusse, Alexandre Boucaud and Jean-Luc Starck

* equal contributions

How to extract all the information?

It is only a matter of the loss function used to train the compressor.

Definition: Sufficient Statistic

A statistic $t = f(x)$ is sufficient for $\theta$ if $p(\theta \mid x) = p(\theta \mid t)$.
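A classic instance of the definition: for Gaussian data with known variance, the sample mean is a sufficient statistic for the mean $\theta$. The sketch below (hypothetical data and a $\mathcal{N}(0, 1)$ prior) checks on a grid that the posterior computed from the full data equals the posterior computed from the sample mean alone, while a non-sufficient summary, the first data point, gives a different posterior.

```python
import numpy as np

rng = np.random.default_rng(3)
sigma, n = 1.0, 20
x = rng.normal(0.5, sigma, size=n)          # hypothetical data with true theta = 0.5
theta_grid = np.linspace(-3, 3, 1201)

def normalize(log_p):
    p = np.exp(log_p - log_p.max())
    return p / p.sum()

log_prior = -0.5 * theta_grid**2              # N(0, 1) prior
# Posterior from the full data: product of n Gaussian likelihoods
log_lik_full = -0.5 * np.sum((x[None, :] - theta_grid[:, None]) ** 2, axis=1) / sigma**2
post_full = normalize(log_lik_full + log_prior)
# Posterior from the summary t = mean(x) alone: t ~ N(theta, sigma^2 / n)
t = x.mean()
log_lik_t = -0.5 * (t - theta_grid) ** 2 / (sigma**2 / n)
post_t = normalize(log_lik_t + log_prior)
# Posterior from a non-sufficient summary: the first data point only
log_lik_first = -0.5 * (x[0] - theta_grid) ** 2 / sigma**2
post_first = normalize(log_lik_first + log_prior)
```

The equality holds because $\sum_i (x_i - \theta)^2 = \sum_i (x_i - t)^2 + n (t - \theta)^2$, and the first term does not depend on $\theta$.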

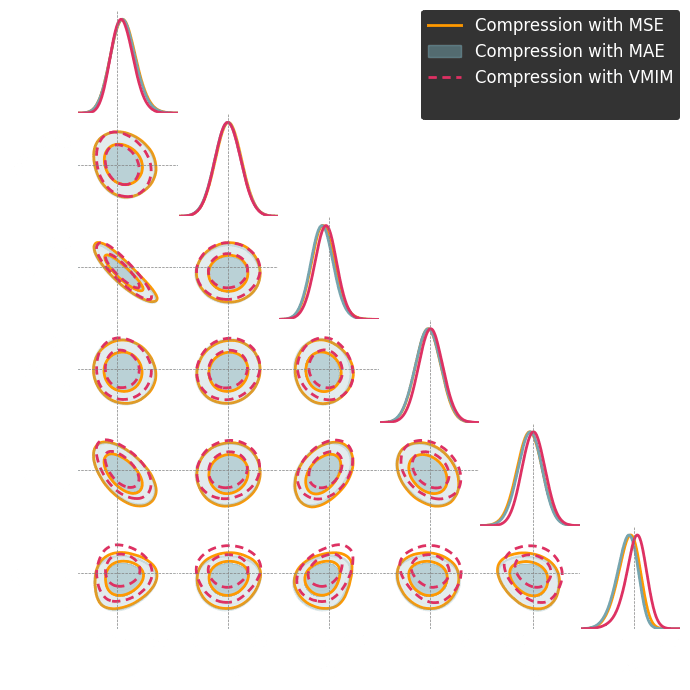

Two main compression schemes

Regression Losses

These learn a point estimate of the posterior distribution, such as its mean or median.

Mean Squared Error (MSE) loss:

$$\mathcal{L}_{\mathrm{MSE}} = \mathbb{E}_{p(\theta, x)}\left[\left\|\theta - f_\varphi(x)\right\|_2^2\right]$$

→ Approximates the mean of the posterior.

Mean Absolute Error (MAE) loss:

$$\mathcal{L}_{\mathrm{MAE}} = \mathbb{E}_{p(\theta, x)}\left[\left\|\theta - f_\varphi(x)\right\|_1\right]$$

→ Approximates the median of the posterior.
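For a fixed observation $x$, minimizing the expected loss over the compressor output reduces to choosing a single number $c$. This sketch uses hypothetical samples from a skewed (gamma) posterior and a brute-force search over $c$ to show that the MSE is minimized at the posterior mean and the MAE at the posterior median; a trained network $f_\varphi$ approximates these minimizers for every $x$ at once.

```python
import numpy as np

rng = np.random.default_rng(4)
# Hypothetical skewed posterior samples for one fixed observation x
theta_samples = rng.gamma(shape=2.0, scale=1.0, size=20_000)

candidates = np.linspace(0.0, 5.0, 2001)   # candidate values of the compressor output f(x)
mse = [np.mean((theta_samples - c) ** 2) for c in candidates]
mae = [np.mean(np.abs(theta_samples - c)) for c in candidates]
best_mse = candidates[np.argmin(mse)]
best_mae = candidates[np.argmin(mae)]
```

Because the posterior is skewed, the two losses produce different summaries (mean about 2, median about 1.68), which illustrates that the choice of loss decides what the compressor extracts.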


The mean is not guaranteed to be a sufficient statistic.

Mutual information maximization

By definition, the mutual information between the parameters and a summary $t = f_\varphi(x)$ is

$$I(\theta; t) = \mathbb{E}_{p(\theta, t)}\left[\log \frac{p(\theta \mid t)}{p(\theta)}\right]$$

and $I(\theta; t) \le I(\theta; x)$, with equality if and only if $p(\theta \mid t) = p(\theta \mid x)$, i.e. if and only if $t$ is sufficient.

→ Maximizing the mutual information should therefore build sufficient statistics, according to the definition.
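In practice, the mutual information is maximized through a variational bound: a conditional density $q(\theta \mid t)$ is fitted jointly with the compressor, and the loss $-\mathbb{E}[\log q(\theta \mid f_\varphi(x))]$ upper-bounds $H(\theta) - I(\theta; t)$, so minimizing it maximizes a lower bound on $I(\theta; t)$. To keep the sketch short, the compressor is not trained here: it compares three hypothetical fixed summaries of a toy Gaussian simulator, and a linear-Gaussian $q$ fitted by maximum likelihood stands in for the normalizing flow. A lower loss means more information about $\theta$ is retained.

```python
import numpy as np

rng = np.random.default_rng(5)
N, n_obs = 20_000, 10
theta = rng.normal(size=N)                               # prior N(0, 1)
x = theta[:, None] + rng.normal(size=(N, n_obs))         # hypothetical simulator

def vmim_loss(t):
    """-E[log q(theta | t)] for a linear-Gaussian q fitted by maximum likelihood."""
    T = np.stack([t, np.ones_like(t)], axis=1)
    coef, *_ = np.linalg.lstsq(T, theta, rcond=None)
    resid = theta - T @ coef
    s2 = resid.var()
    # Average Gaussian negative log-likelihood evaluated at the ML variance
    return 0.5 * np.log(2 * np.pi * s2) + 0.5

losses = {
    "mean (sufficient)": vmim_loss(x.mean(axis=1)),
    "first pixel only": vmim_loss(x[:, 0]),
    "pure noise": vmim_loss(rng.normal(size=N)),
}
```

In the full method, the gradient of this loss flows into the compressor's weights, pushing $f_\varphi$ towards summaries like the sample mean here.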

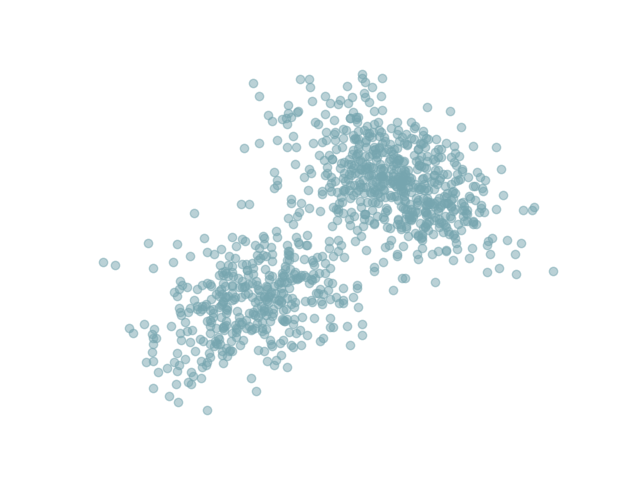

For our benchmark: a log-normal, LSST Y10-like, differentiable simulator.

Benchmark procedure:

1. We compress using one of the losses.

2. We compare their extraction power by comparing their posteriors. For this, we use implicit inference, which is fixed for all the compression strategies.

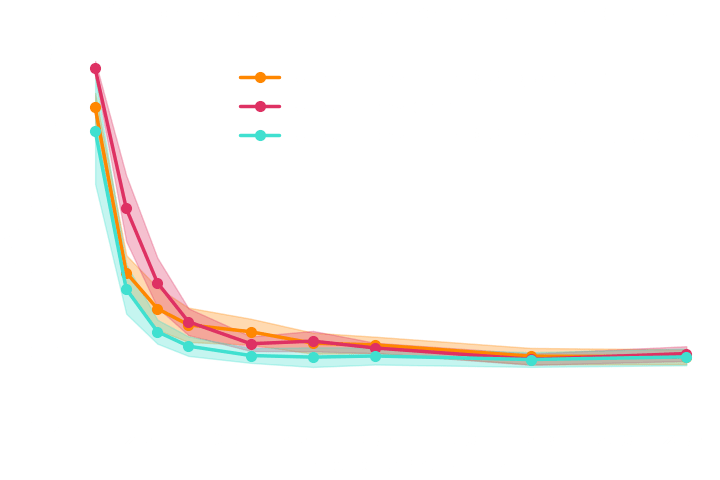

Numerical results


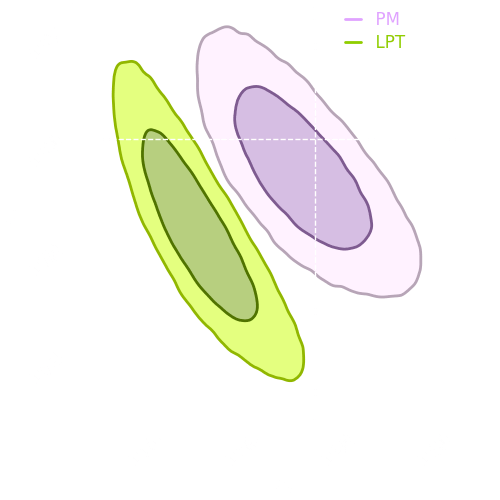

Correcting Model Misspecification with Conditional Optimal Transport

Justine Zeghal, Benjamin Remy, Laurence Perreault-Levasseur, Yashar Hezaveh

Preliminary results*

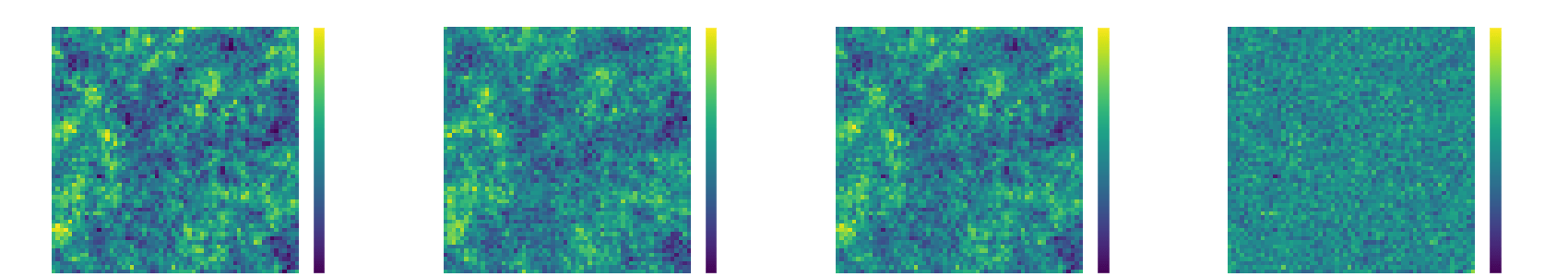

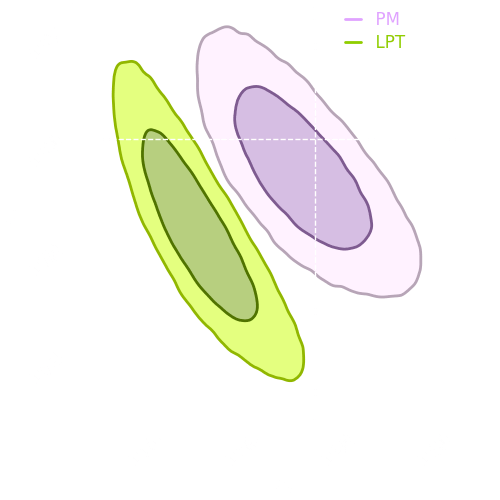

What happens when the simulation model differs from the true physical model?

With full-field inference, we now rely only on simulations, and we work at the pixel level.

We cannot escape this, as there may be physics that we do not understand or cannot model computationally.

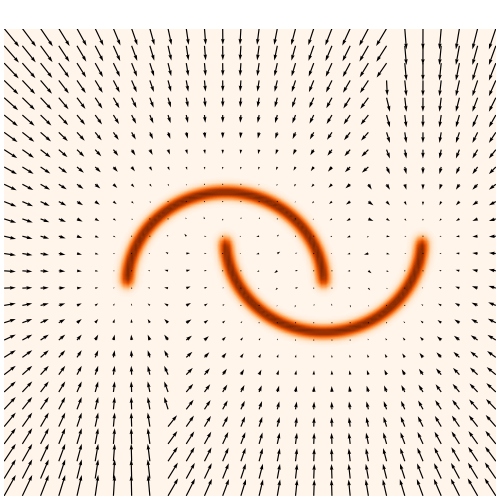

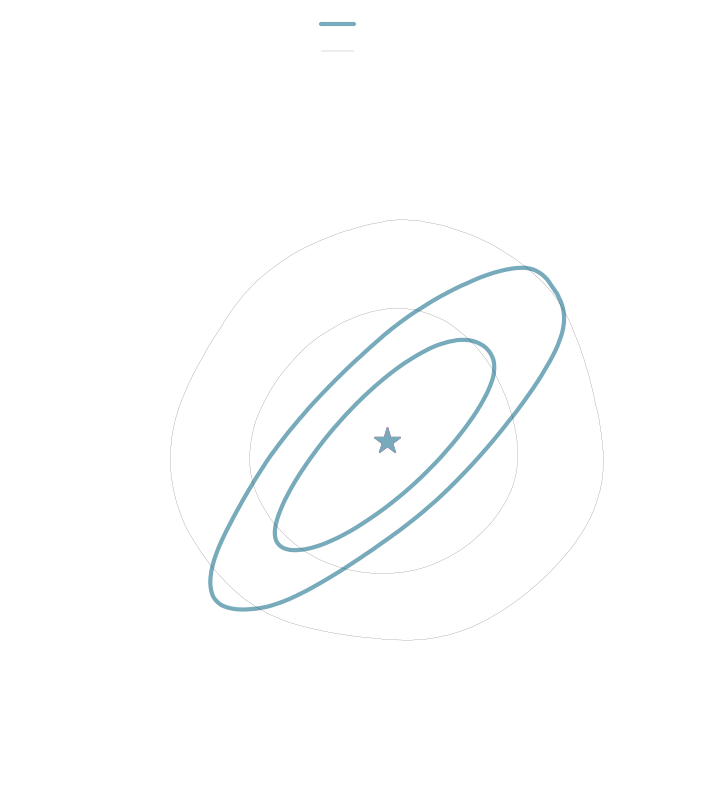

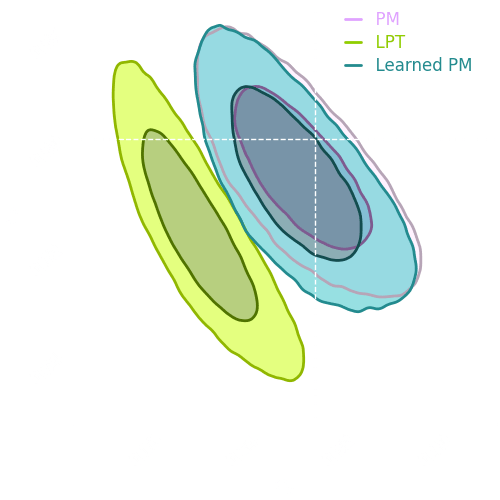

A way to correct this bias is to learn a mapping that transforms one simulation into another, and we would like it to be the optimal transport mapping, in the sense that each simulation is minimally transformed to match its PM counterpart.

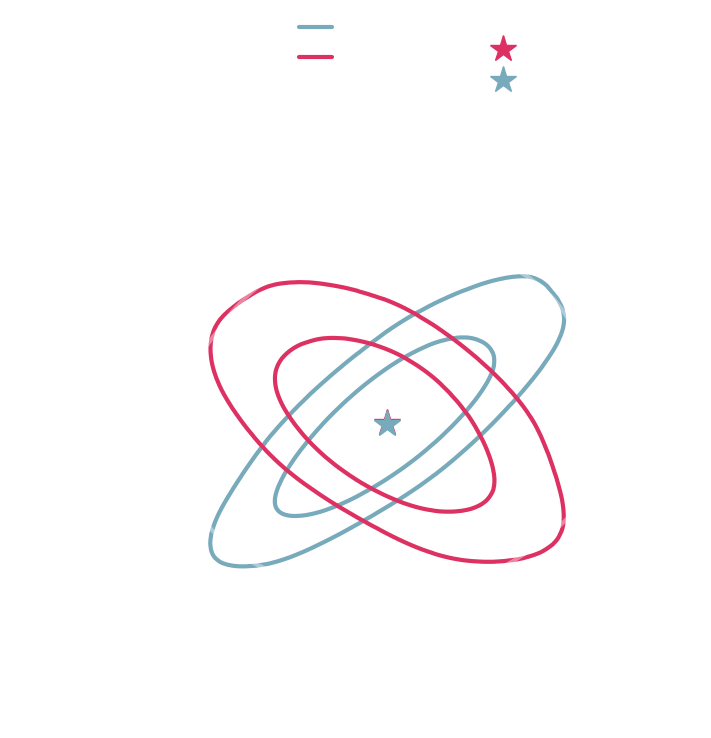

OT Flow Matching makes it possible to learn an OT mapping between two arbitrary distributions.

Need to learn discrete transformations

Need to learn a continuous transformation

Credit: https://mlg.eng.cam.ac.uk/blog/2024/01/20/flow-matching.html

Credit: Tong et al., 2023

Credit: Albergo et al., 2023

Flow Matching

Optimal Transport Flow Matching

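A minimal sketch of the two ingredients of OT flow matching, in the spirit of Tong et al. (2023): samples from the two distributions are paired within a minibatch by solving an exact assignment problem for the squared Euclidean cost (by brute force here, which only scales to tiny batches), and a velocity field is regressed onto the straight-line velocity $x_1 - x_0$ at random points $x_t = (1 - t)\,x_0 + t\,x_1$ along each path. The Gaussian "source" and "target" samples are hypothetical stand-ins for two kinds of simulations, and the conditional variant used in this work is not shown.

```python
import itertools
import numpy as np

rng = np.random.default_rng(6)
B, d = 6, 2   # tiny minibatch so that brute-force assignment is cheap

x0 = rng.normal(size=(B, d))              # hypothetical source samples
x1 = rng.normal(loc=2.0, size=(B, d))     # hypothetical target samples

def ot_pairing(a, b):
    """Exact minibatch OT assignment for the squared Euclidean cost (brute force)."""
    cost = ((a[:, None, :] - b[None, :, :]) ** 2).sum(-1)
    best = min(itertools.permutations(range(len(b))),
               key=lambda perm: cost[np.arange(len(a)), list(perm)].sum())
    return np.array(best)

def flow_matching_loss(v, a, b, t):
    """Flow matching regression loss for straight-line paths between paired samples."""
    xt = (1 - t)[:, None] * a + t[:, None] * b   # point on each path at time t
    target = b - a                                # constant velocity along each path
    return np.mean(np.sum((v(xt, t) - target) ** 2, axis=1))

perm = ot_pairing(x0, x1)
x1_ot = x1[perm]                               # x1_ot[i] is paired with x0[i]
t = rng.uniform(size=B)
cost_ot = np.sum((x0 - x1_ot) ** 2)
cost_naive = np.sum((x0 - x1) ** 2)
```

In training, `v` would be a neural network taking `(x_t, t)`; OT pairing gives straighter, non-crossing paths, so the learned transformation moves each sample as little as possible.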

Preliminary Results

Conclusion

Which full-field inference methods require the fewest simulations?

→ Explicit inference requires 100 times more simulations than implicit inference.

How to build sufficient statistics?

→ Mutual Information Maximization.

Can we perform implicit inference with fewer simulations?

→ Gradients can be beneficial, depending on your simulation model.

How to deal with model misspecification?

→ We can learn an optimal transport mapping.