Introduction to Data Science

Karl Ho

School of Economic, Political and Policy Sciences

University of Texas at Dallas

NCHU+UT Dallas Data Science Short Course Series

-

Karl Ho is:

- Professor of Instruction at University of Texas at Dallas (UTD) School of Economic, Political and Policy Sciences (EPPS)

- Co-founder of the UTD Social Data Analytics and Research program (SDAR)

- Projects

- Taiwan Studies Initiative at UTD EPPS funded by MOE

- Taiwan Research Academy funded by TFD

- UTD Math and Coding Camp (EPPS)

- Co-PI: Hong Kong Election Study

- Co-PI: North Texas Quality of Life

- Website: karlho.com (talks, lecture, publications)

Speaker bio.

NCHU-UTD

Dual Degree Program in Data Science

UTD Partnerships in Taiwan (EPPS)

- NCHU: MPA DDP

- NCCU: Diplomacy (in progress)

- NTU SPE: Student Exchange Mobility

Illustration: Collecting stock data

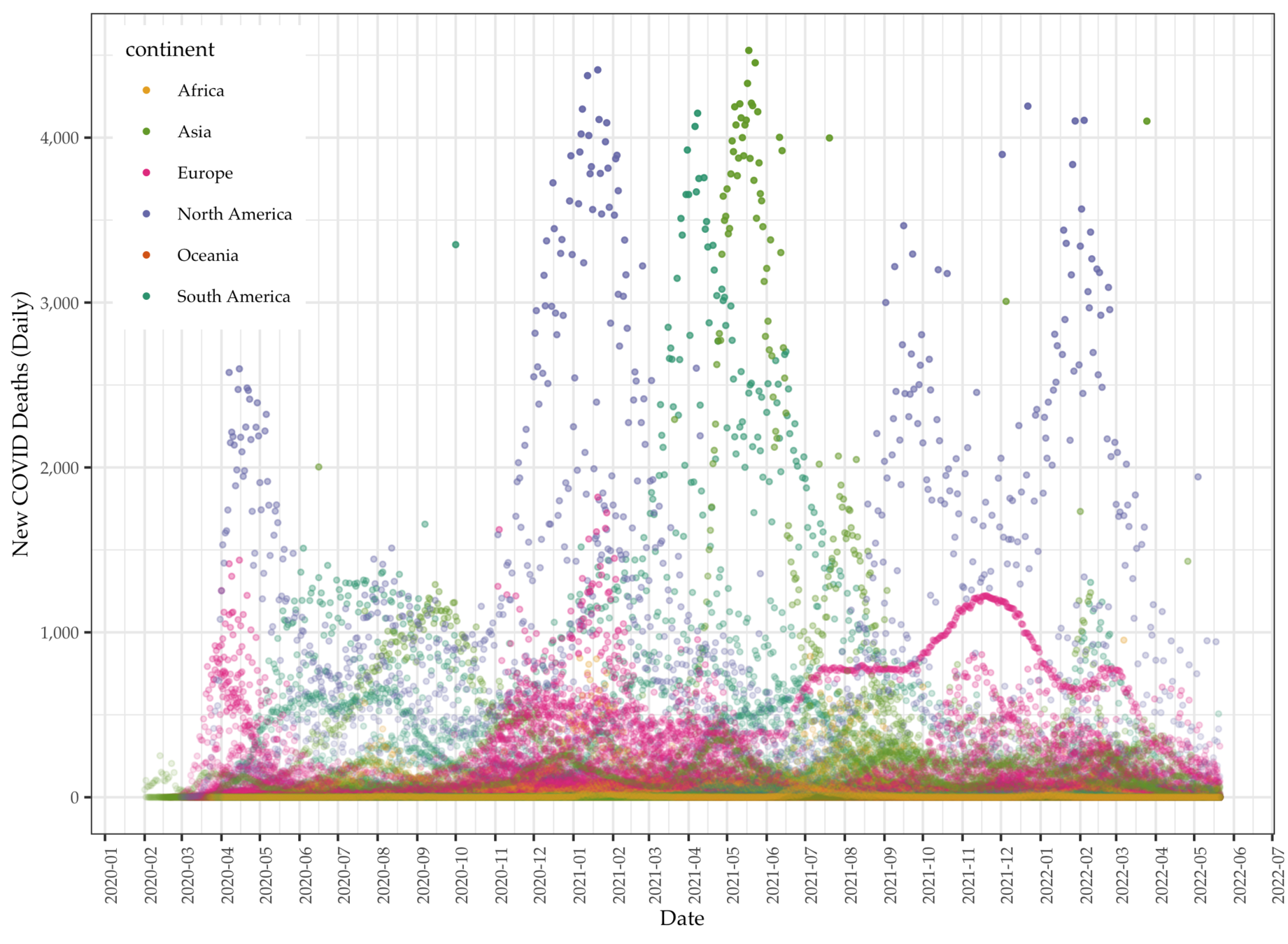

Data: Daily COVID deaths

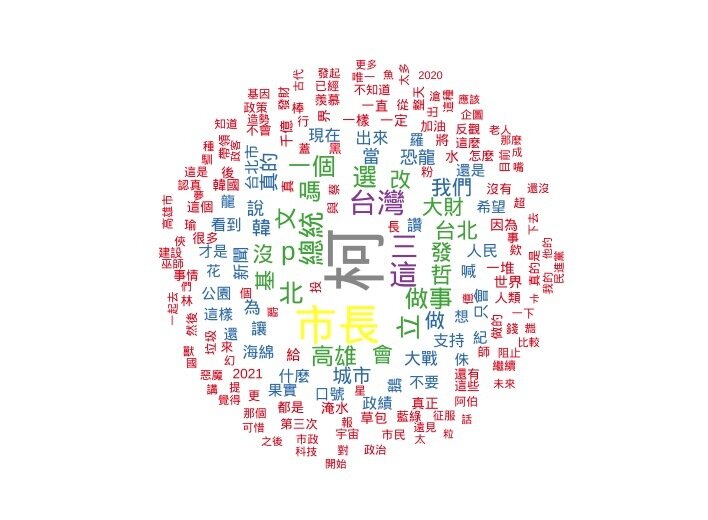

Wordcloud using YouTube data

Automated Machine Learning

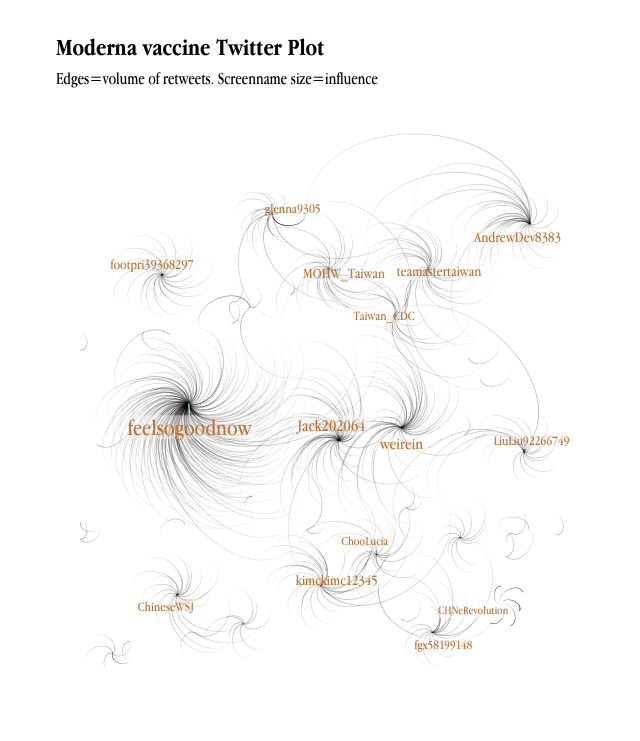

Analytics using Twitter data

Overview:

The certificate program is composed of short courses introducing students to data science and applications. Each course is delivered in three hours, giving overview and survey in subfields of data science with illustrations and hands-on practices. Students should follow pre-class instructions to prepare materials and own device before coming to class.

Whom is this course for?

-

Regular track: any students with no to any background in data programming and statistics

-

Advanced Progress (AP) track: students with some and intermediate backgrounds in data programming and statistical modeling

Course structure

-

In 30-minute segments

Lecture:

Workshop: Data Programming

Lecture

Workshop: Data Collection

Lecture

Workshop: Data Visualization and Modeling (Machine Learning)

Pre-class preparation

- Bring own device (Windows 10 or MacOS, no tablets)

- All software/applications used in this class are open-sourced

- Programming in cloud platforms (RStudio cloud, Google Colab)

- Recommended accounts: GitHub

In the beginning.....

This introductory course is an overview of Data Science. Students will learn:

- What is Data Science?

- What is Big Data?

- How to equip for data scientist

- Tools for professional data scientists

Prepare for class

Recommended software and IDE’s

- R version 4.x (https://cran.r-project.org)

- RStudio version 2023.06.x (https://posit.co/download/rstudio-desktop/)

Cloud websites/accounts:

- GitHub account (https://github.com)

- RStudio Cloud account (https://rstudio.cloud)

Optional software and IDE’s:

Text editor of own choice (e.g. Visual Studio Code, Sublime Text, Bracket)

Ask me anything!

Overview:

-

Why Data Science? Why now?

-

Data fluency (vs. Data literacy)

-

Types of Data Science

-

Data Science Roadmap

-

Data Programming

-

Data Acquisition

-

Data Visualization

Why Data Science? Why now?

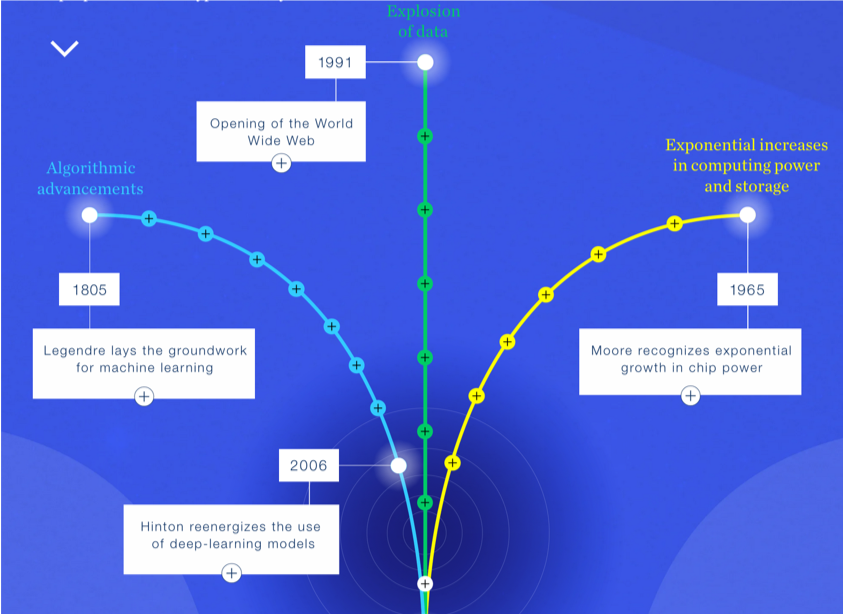

McKinsey & Co., An Executive’s Guide to AI

Data fluency

Hugo Bowne-Anderson. 2019. "What 300 L&D leaders have learned about building data fluency"

Data fluency

Hugo Bowne-Anderson. 2019. "What 300 L&D leaders have learned about building data fluency"

Data fluency

Hugo Bowne-Anderson. 2019. "What 300 L&D leaders have learned about building data fluency"

Data fluency

Everybody has the data skills and literacy to understand and perform data driven documents and tasks

Danger of immature data fluency

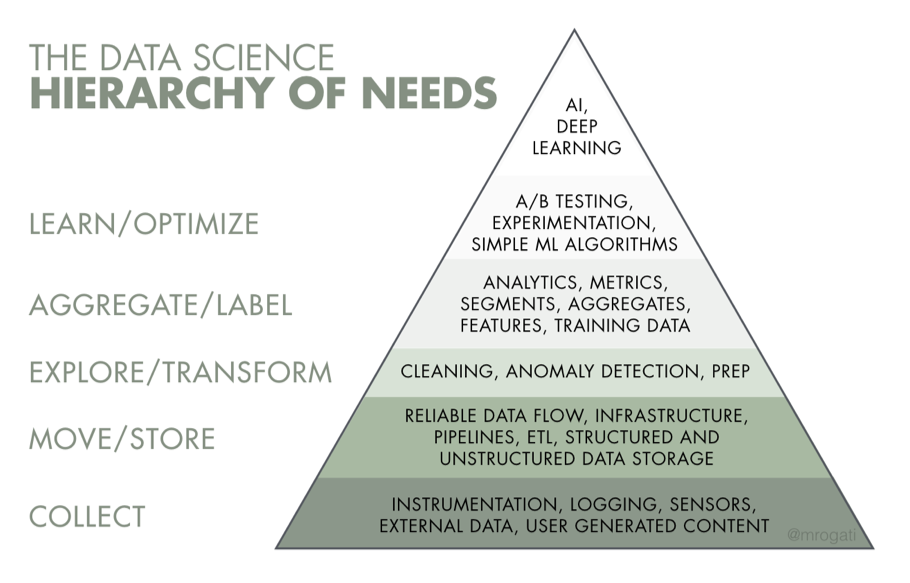

Types of Data Science

- Business intelligence (Descriptive analytics)

- Machine learning (Predictive analytics)

- Decision making (Prescriptive analytics)

Rogati AI hierarchy of needs

Data Science Roadmap

-

Introduction - Data theory

-

Data methods

-

Statistics

-

Programming

-

Data Visualization

-

Information Management

-

Data Curation

-

Spatial Models and Methods

-

Machine Learning

-

NLP/Text mining

What is data?

What is data?

-

Data generation

-

Made data vs. Found data

-

Structured vs. Semi/unstructured

-

Primary vs. secondary data

-

Derived data

-

metadata, paradata

-

-

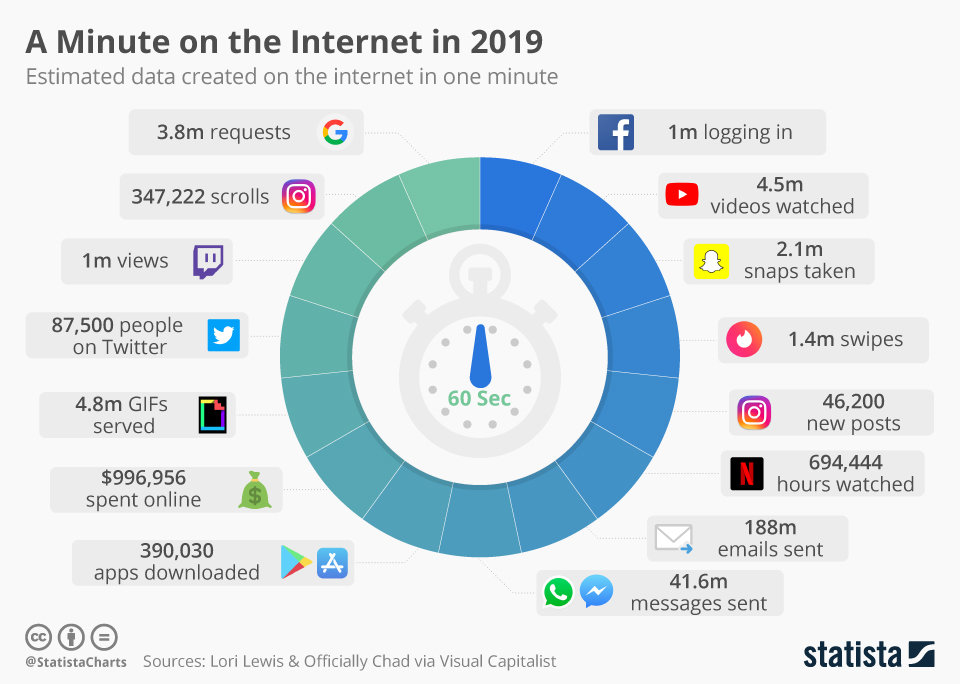

What is Big Data?

The Big data is about data that has huge volume, cannot be on one computer. Has a lot of variety in data types, locations, formats and form. It is also getting created very very fast (velocity) (Doug Laney 2001).

The Big data is about data that has huge volume, cannot be on one computer. Has a lot of variety in data types, locations, formats and form. It is also getting created very very fast (velocity) (Doug Laney 2001).

What is Big Data?

Burt Monroe (2012)

5Vs of Big data

Volume

Variety

Velocity

Vinculation

Validity

- Programming is a practice of using programming language to design, perform and evaluate tasks using a computer. These tasks include:

- Computation

- Data collection

- Data management

- Data visualization

- Data modeling

- In this course, we focus on data programming, which emphasizes programs dealing with and evolving with data.

What is programming?

Data programming

}

- Understand the differences between apparently similar constructs in different languages

- Be able to choose a suitable programming language for each application

- Enhance fluency in existing languages and ability to learn new languages

- Application development

Why learning programming Languages?

- Maribel Fernandez 2014

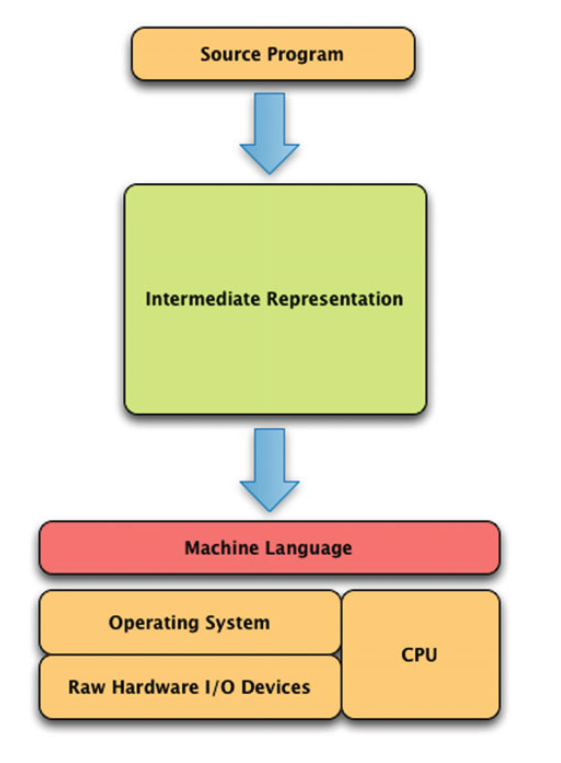

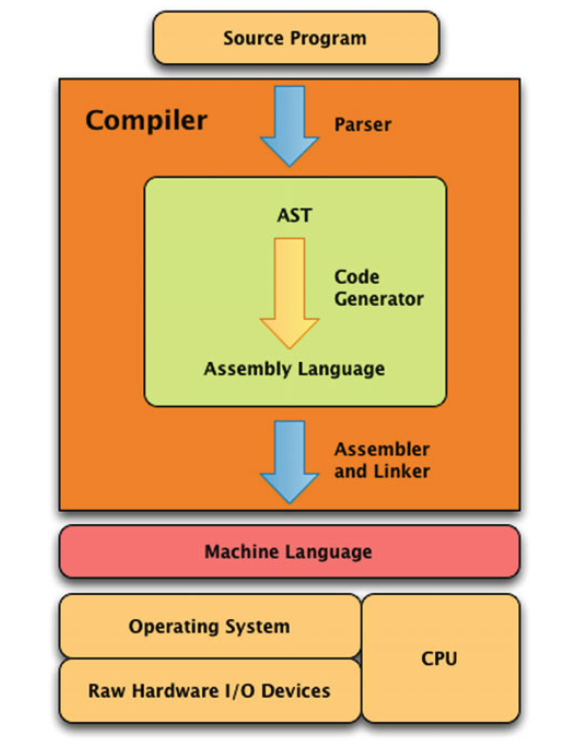

Language implementations

Compilation

Interpretation

- Machine language

- Assembly language

- C

Low-level languages

- BASIC

- REALbasic

- Visual Basic

- C++

- Objective-C

- Mac

- C#

- Windows

- Java

Systems languages

- Perl

- Tcl

- JavaScript

- Python

Scripting languages

DRY – Don’t Repeat Yourself

Write a function!

Function example

# Create preload function

# Check if a package is installed.

# If yes, load the library

# If no, install package and load the library

preload<-function(x)

{

x <- as.character(x)

if (!require(x,character.only=TRUE))

{

install.packages(pkgs=x, repos="http://cran.r-project.org")

require(x,character.only=TRUE)

}

}

- For social scientists, programming is more than just developing an application. It could introduce social injustice:

Why learning programming?

learning how to program can significantly enhance how social scientists can think about their studies, and especially those premised on the collection and analysis of digital data.

- Brooker 2019:

Chances are the language you learn today will quite likely not be the language you'll be using tomorrow.

What is R?

R is an integrated suite of software facilities for data manipulation, calculation and graphical display.

- Venables, Smith and the R Core team

- array

- interpreted

- impure

- interactive mode

- list-based

- object-oriented (prototype-based)

- scripting

R

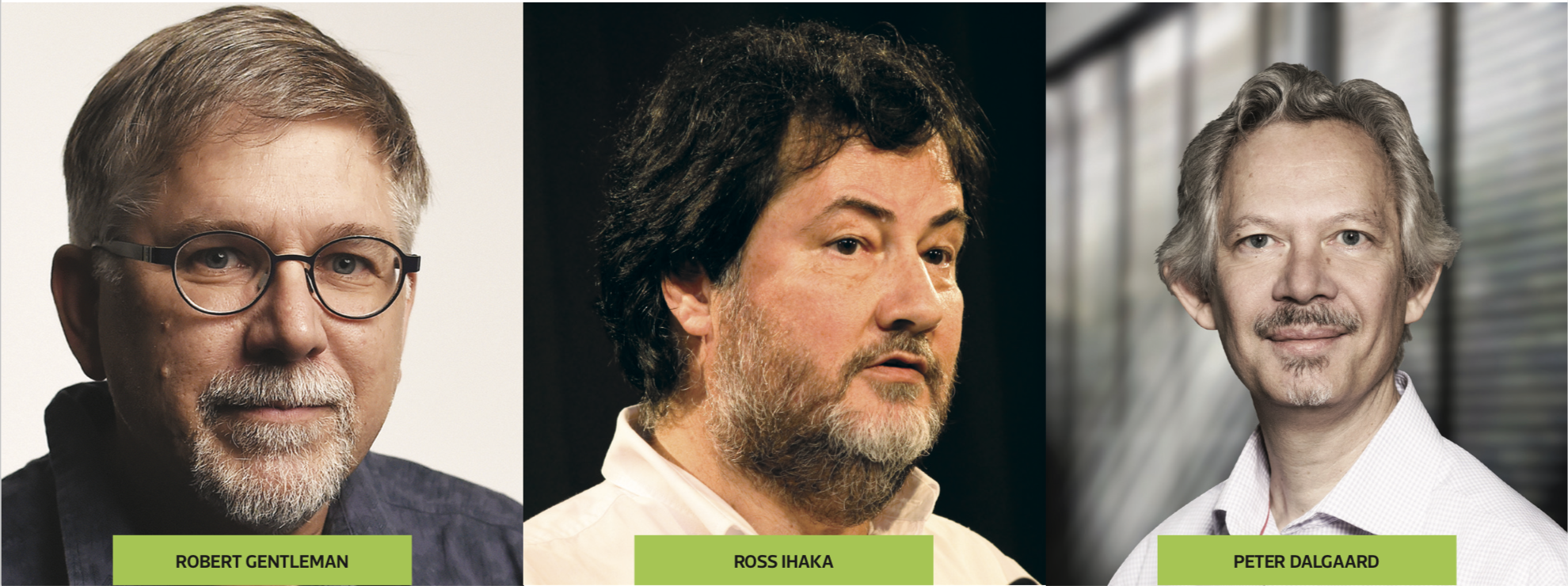

What is R?

-

The R statistical programming language is a free, open source package based on the S language developed by John Chambers.

-

Some history of R and S

-

S was further developed into R by Robert Gentlemen (Canada) and Ross Ihaka (NZ)

Source: Nick Thieme. 2018. R Generation: 25 years of R https://rss.onlinelibrary.wiley.com/doi/10.1111/j.1740-9713.2018.01169.x

What is R?

-

Ihaka, Ross and Robert Gentleman. 1996. R: A language for data analysis and graphics. Journal of Computational and Graphical Statistics, 5(3):299–314

-

Roger Peng's 2015 video of R history

What is R?

It is:

-

Large, probably one of the largest based on the user-written add-ons/procedures

-

Object-oriented

-

Interactive

-

Multiplatform: Windows, Mac, Linux

What is R?

According to John Chambers (2009), six facets of R :

-

an interface to computational procedures of many kinds;

-

interactive, hands-on in real time;

-

functional in its model of programming;

-

object-oriented, “everything is an object”;

-

modular, built from standardized pieces; and,

-

collaborative, a world-wide, open-source effort.

Why R?

-

A programming platform environment

-

Allow development of software/packages by users

-

Currently, the CRAN package repository features 12,108 available packages (as of 1/31/2018).

-

Graphics!!!

-

Comparing R with other software?

Getting the software

-

R home: https://www.r-project.org

-

Downloads: https://cran.r-project.org

-

Select Download R for Windows or Mac or Linux

-

R 4.x

-

Caveat:

-

Frequent updates/upgrades

-

Packages/library

-

Workshop I: Data Programming

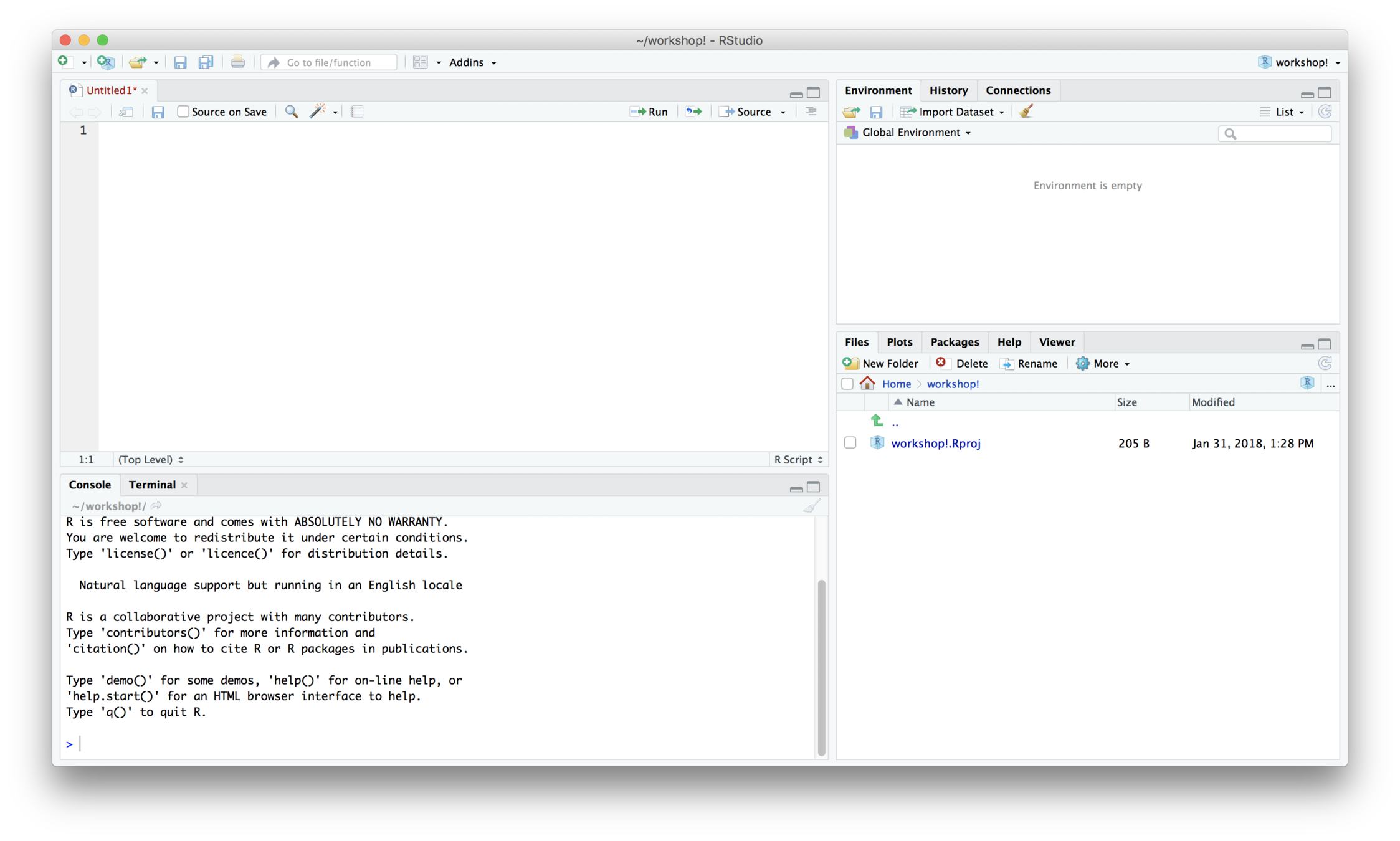

RStudio

RStudio is a user interface for the statistical programming software R.

-

Object-based environment

-

Window system

-

Point and click operations

-

Coding recommended

-

Expansions and development

Posit Cloud:

https://posit.cloud/content/6625059

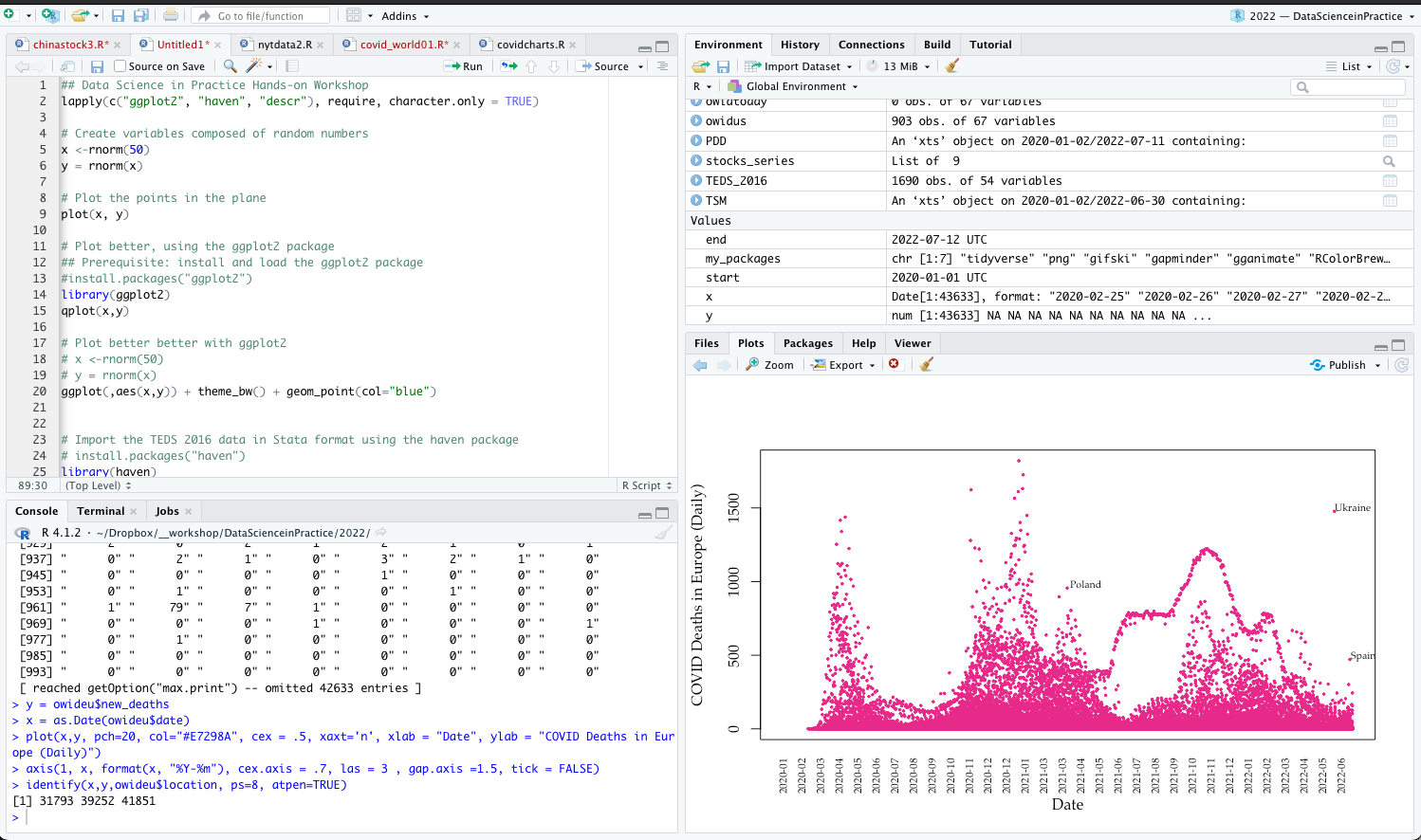

RStudio

The script window:

You can store a document of commands you used in R to reference later or repeat analyses

Environment:

Lists all of the objects

Console:

Output appears here. The > sign means R is ready to accept commands.

Plot/Help:

Plots appear in this window. You can resize the window if plots appear too small or do not fit.

RStudio

The script window:

You can store a document of commands you used in R to reference later or repeat analyses

Environment:

Lists all of the objects

Console:

Output appears here. The > sign means R is ready to accept commands.

Plot/Help:

Plots appear in this window. You can resize the window if plots appear too small or do not fit.

R Programming Basics

-

R code can be entered into the command line directly or saved to a script, which can be run as a script

-

Commands are separated either by a ; or by a newline.

-

R is case sensitive.

-

The # character at the beginning of a line signifies a comment, which is not executed.

-

Help can be accessed by preceding the name of the function with ? (e.g. ?plot).

Importing data

-

Can import from SPSS, Stata and text data file

Use a package called foreign:

First, install.packages(“foreign”), then you can use following codes to import data:

mydata <- read.csv(“path”,sep=“,”,header=TRUE)

mydata.spss <- read.spss(“path”,sep=“,”,header=TRUE)

mydata.dta <- read.dta(“path”,sep=“,”,header=TRUE)

Importing data

Note:

-

R is absolutely case-sensitive

-

R uses extra backslashes to recognize path

-

Read data directly from GitHub:

happy=read.csv("https://raw.githubusercontent.com/kho7/SPDS/master/R/happy.csv")

Accessing variables

To select a column use:

mydata$column

For example:

Manipulating variables

Recoding variables

For example:

mydata$Age.rec<-recode(mydata$Age, "18:19='18to19'; 20:29='20to29';30:39='30to39'")

Getting started

Beware of bugs in the above code; I have only proved it correct, not tried it."

- Donald Knuth, author of The Art of Computer Programming

Source: https://www.frontiersofknowledgeawards-fbbva.es/version/edition_2010/

Overview

In this module, we will help you:

-

Understand data generation process in big data age

-

Learn how to collect web data and social data

-

Illustration: Open data

-

collecting stock data

-

collecting COVID data

-

-

Illustration: API

Data Methods

-

Survey

-

Experiments

-

Qualitative Data

-

Text Data

-

Web Data

-

Machine Data

-

Complex Data

-

Network Data

-

Multiple-source linked Data

-

Made

Data

}

}

Found

Data

Data Methods

-

Small data or Made data emphasize design

-

Big data or Found data focus on algorithm

How Data are generated?

-

Computers

-

Web

-

Mobile devices

-

IoT (Internet of Things)

-

Further extension of human users (e.g. AI, avatars)

Web data

How do we take advantage of the web data?

-

Purpose of web data

-

Generation process of web data

-

What is data of data?

-

Why data scientists need to collect web data?

Data file formats

-

CSV (comma-separated values)

- CSVY with metadata (YAML)

-

JSON (JavaScript Object Notation)

-

XML (Extensible Markup Language)

-

Text (ASCII)

-

Tab-delimited data

-

Proprietary formats

- Stata

- SPSS

- SAS

- Database

YAML (Yet Another Markup Language or YAML Ain't Markup Language) is a data-oriented, human readable language mostly use for configuration files)

Open data

Open data refers to the type of data usually offered by government (e.g. Census), organization or research institutions (e.g. ICPSR, Johns Hopkins Coronavirus Resource Center). Some may require an application for access and others may be open for free access (usually via websites or GitHub).

Open data

Since open data are provided by government agencies or research institutions, these data files are often:

-

Structured

-

Well documented

-

Ready for data/research functions

API

-

API stands for Application Programming Interface. It is a web service that allows interactions with, and retrieval of, structured data from a company, organization or government agency.

-

Example:

-

Social media (e.g. Facebook, YouTube, Twitter)

-

Government agency (e.g. Congress)

-

-

APIs can take many different forms and be of varying quality and usefulness.

-

RESTful API (Representational State Transfer) is a means of transferring data using web protocols

- Example:

- Crossref API

http://api.crossref.org/works/10.1093/nar/gni170 - Taiwan Legislative Yuan API

https://www.ly.gov.tw/WebAPI/LegislativeBill.aspx?from=1050201&to=1050531&proposer=&mode=json

- Crossref API

API

API

Like open data, data available through API are generally:

-

Structured

-

Somewhat documented

-

Not necessary fully open

-

Subject to the discretion of data providers

-

E.g. Not all variables are available, rules may change without announcements, etc.

For the type of found data not available via API or open access, one can use non-API methods to collect this kind of data. These methods include scraping, which is to simulate web browsing but through automated scrolling and parsing to collect data. These data are usually non-structured and often times noisy. Researchers also have little control over data generation process and sampling design.

Non-API methods

Non-API methods

Non-API data are generally:

-

Non-structured

-

Noisy

-

Undocumented with no or little information on sampling

Illustration: Collecting stock data

This workshop demonstrates how to collect stock data using:

- an R package called quantmod

Illustration: Collecting stock data

This workshop demonstrates how to collect stock data using:

- an R package called quantmod

This workshop demonstrates how to collect stock data using:

Link to RStudio Cloud:

https://posit.cloud/content/6625059

- Need a GitHub and RStudio Account

Link to class GitHub:

Workshop II: Data collection

Assignment 1

-

Install R and RStudio

-

Download the R program in class GitHub (under codes)

-

DPR_stockdata.R

-

-

Can you download TSM's (台積電) data in the last three years?

-

Plot the TSM data in the last three years using the sample codes (plot and ggplot2)

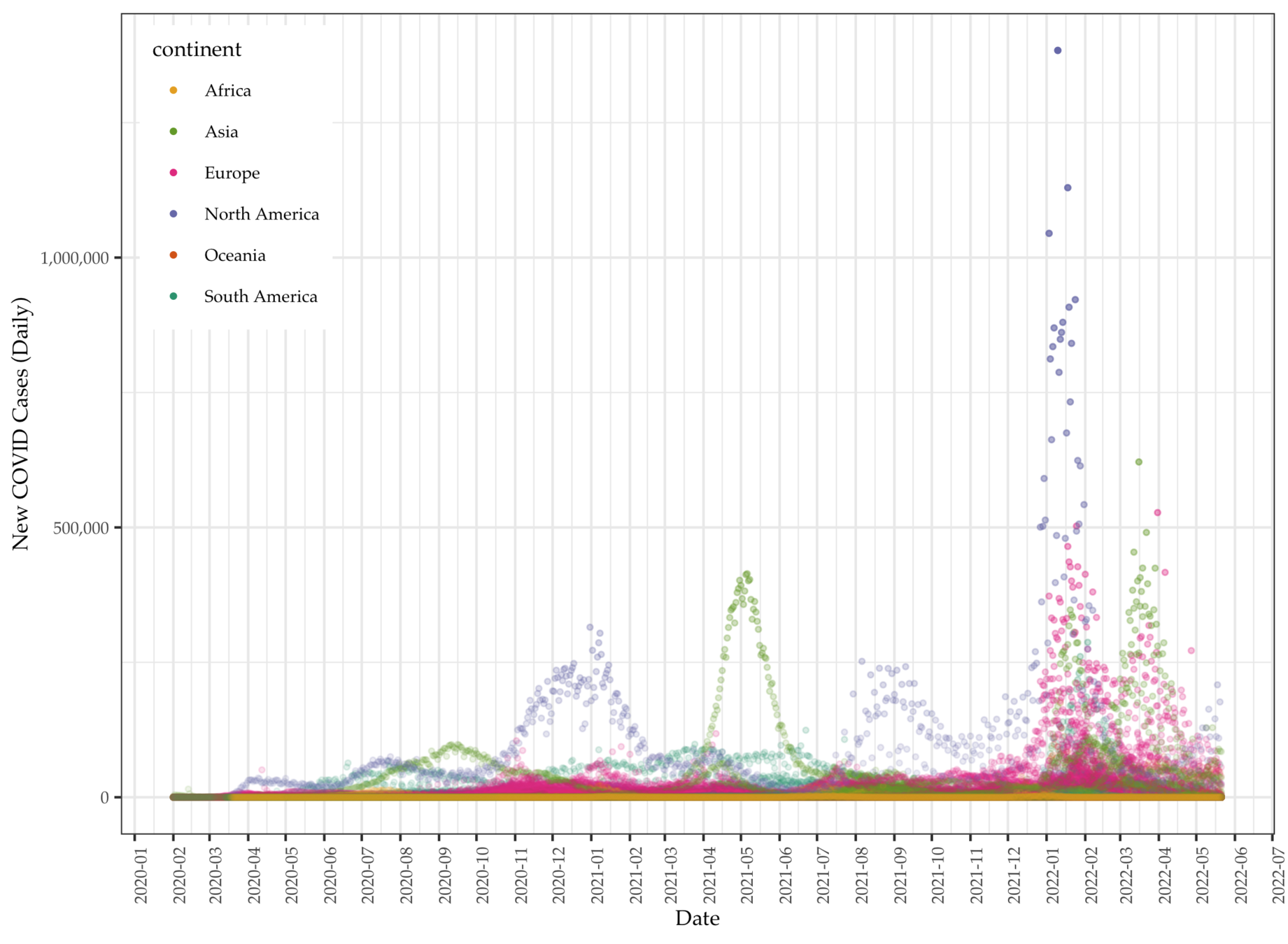

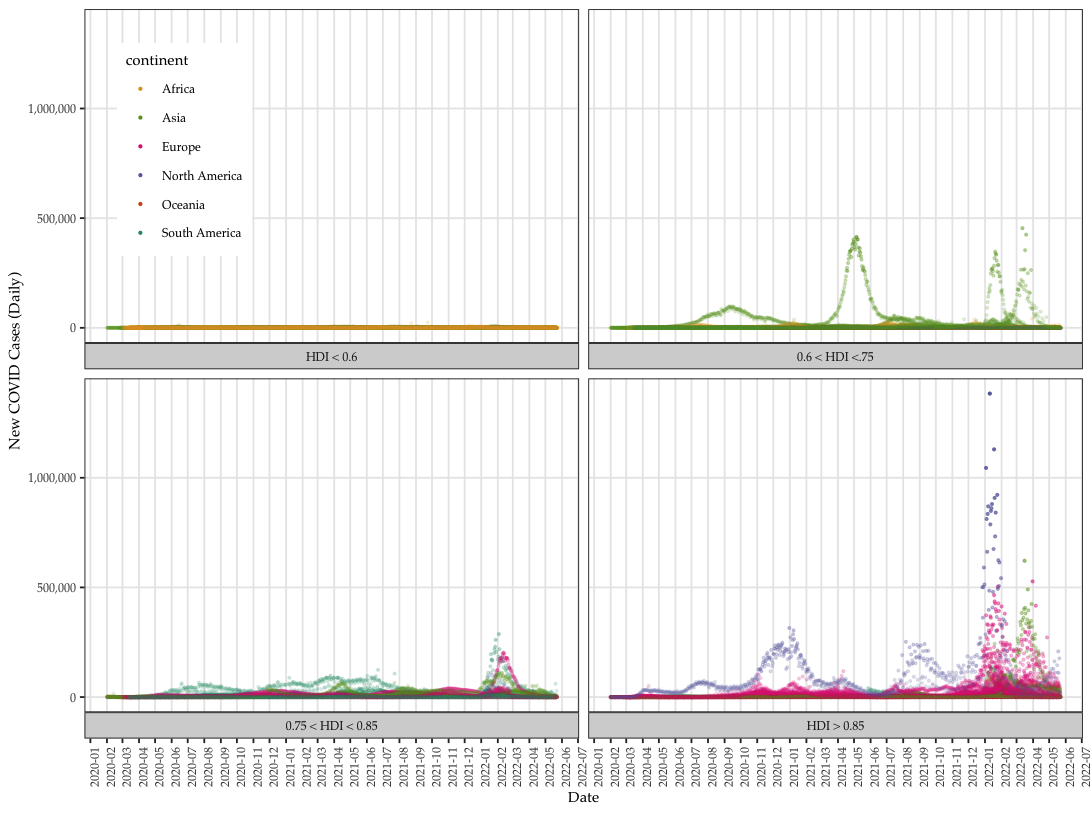

Illustration: Collecting COVID data

Data: Total cases per million

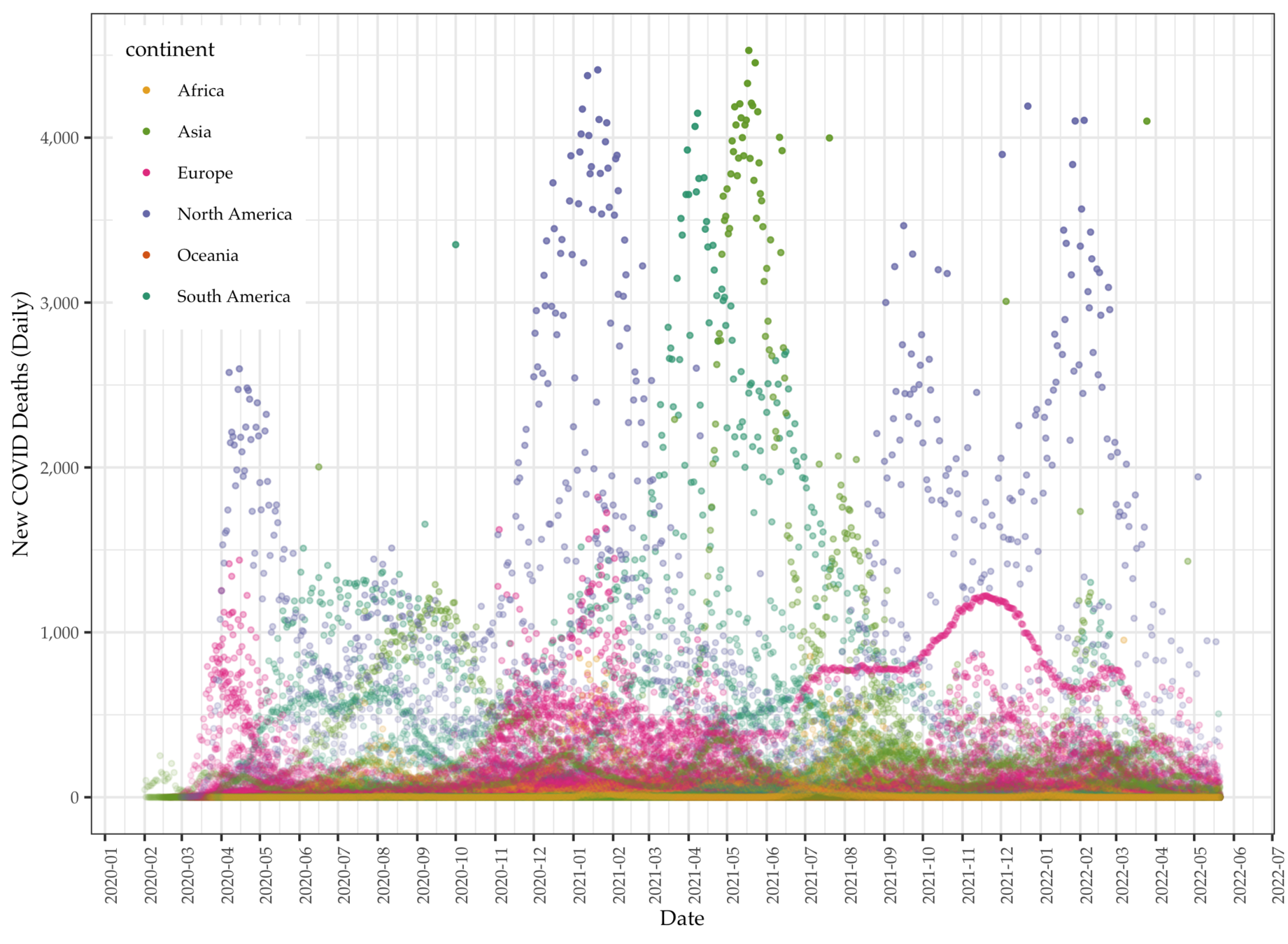

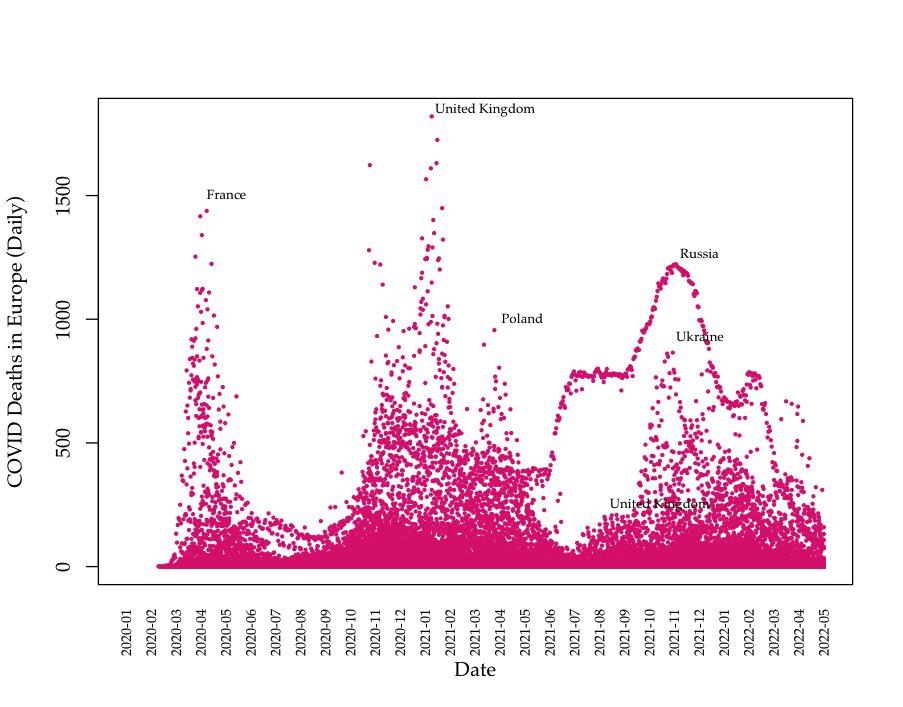

Data: Daily COVID deaths

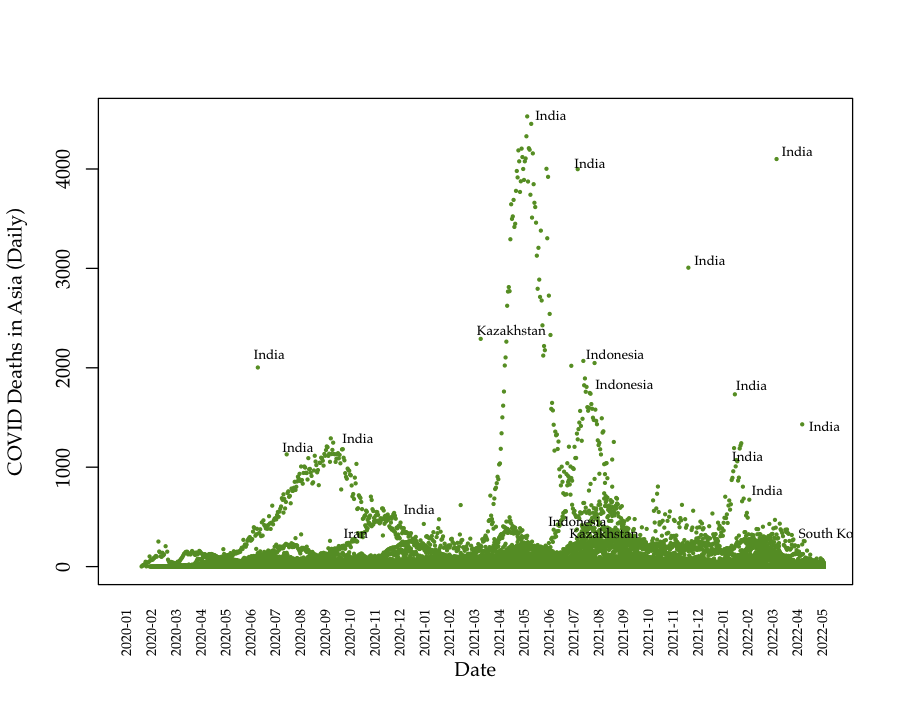

Data: Death data (Asia)

Data: Death data (Europe)

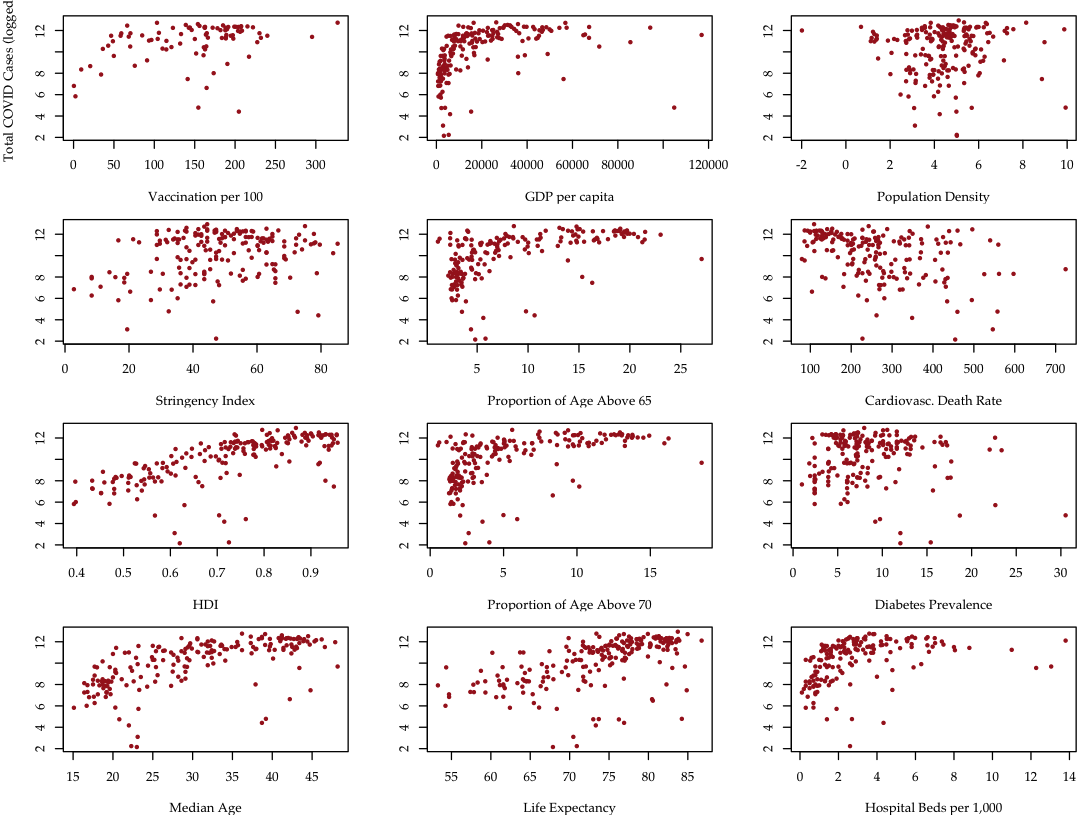

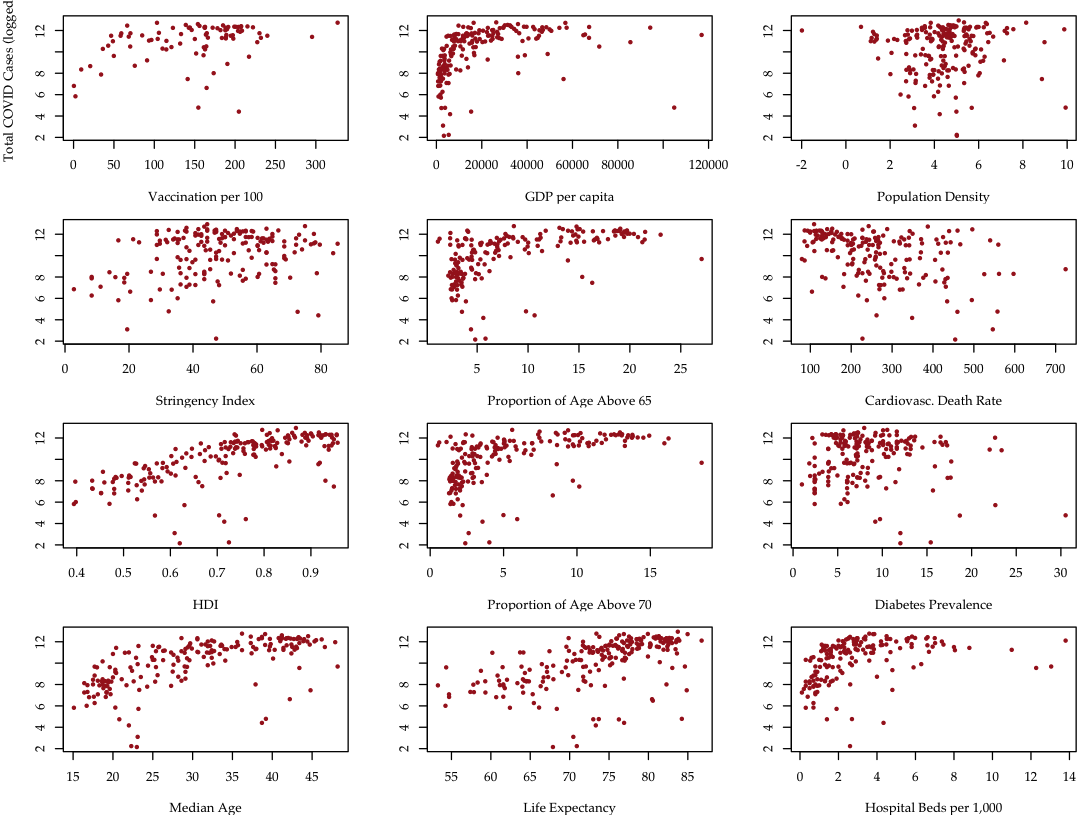

Data: COVID cases~predictors

Data: COVID cases~predictors

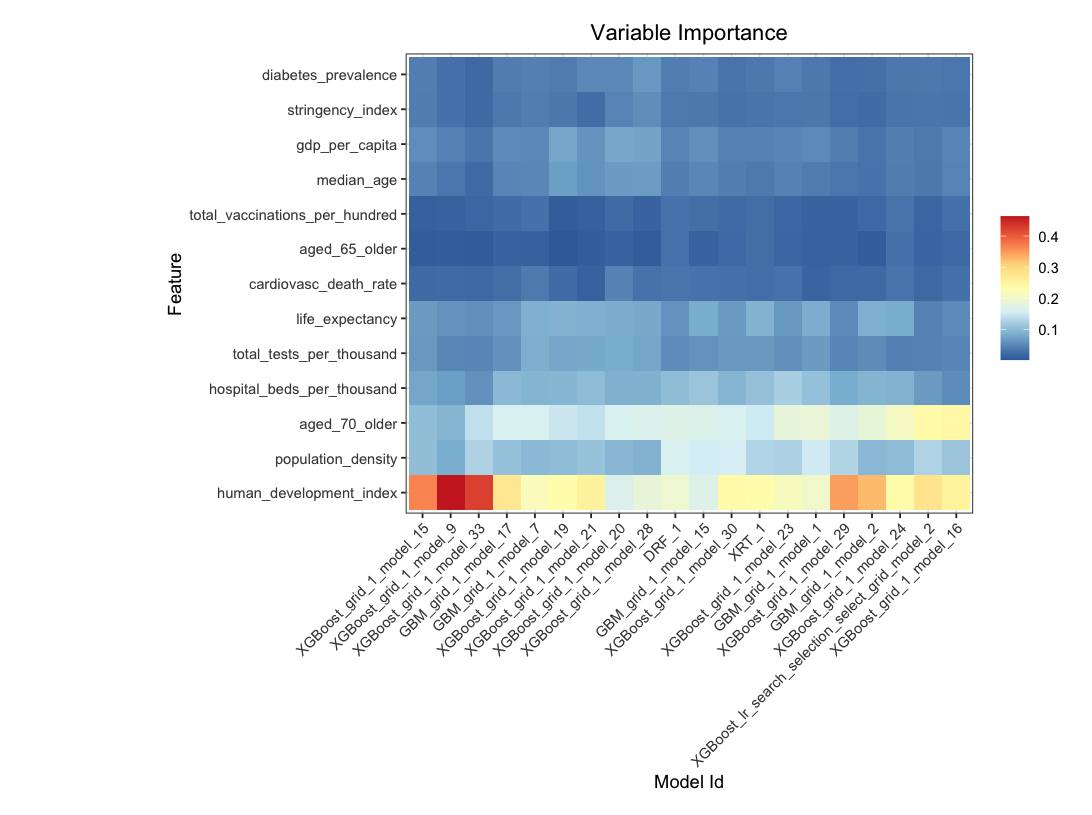

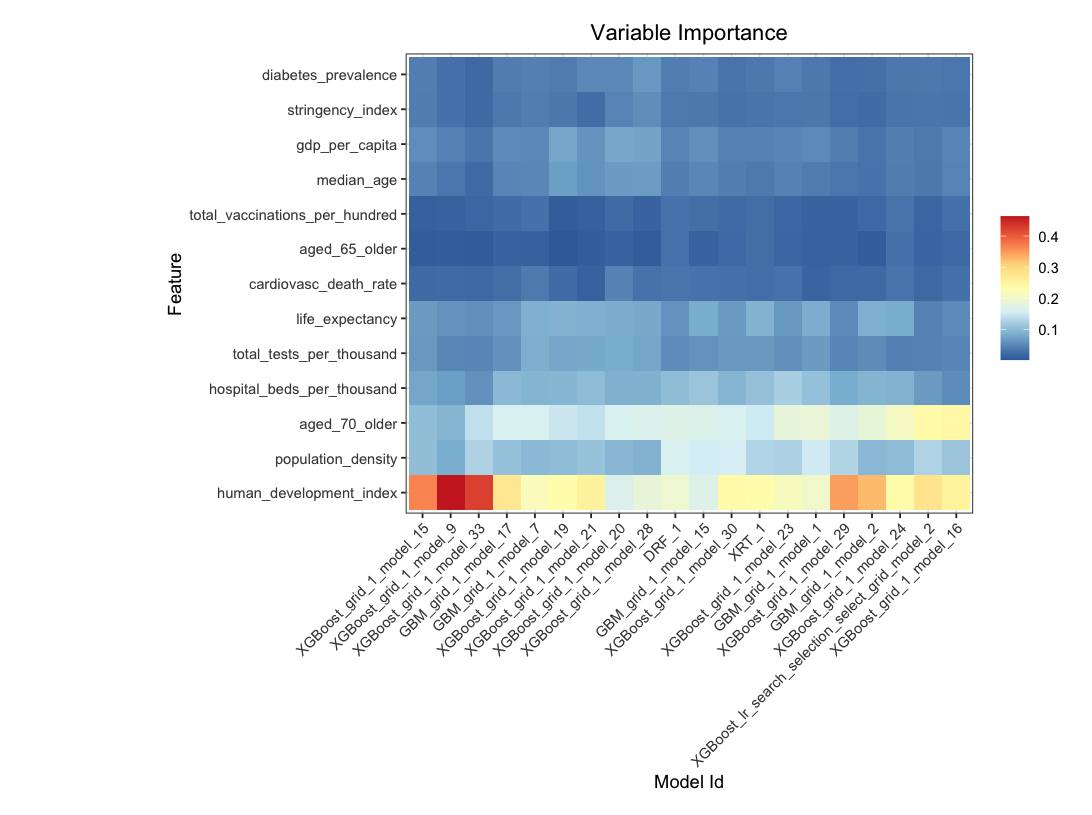

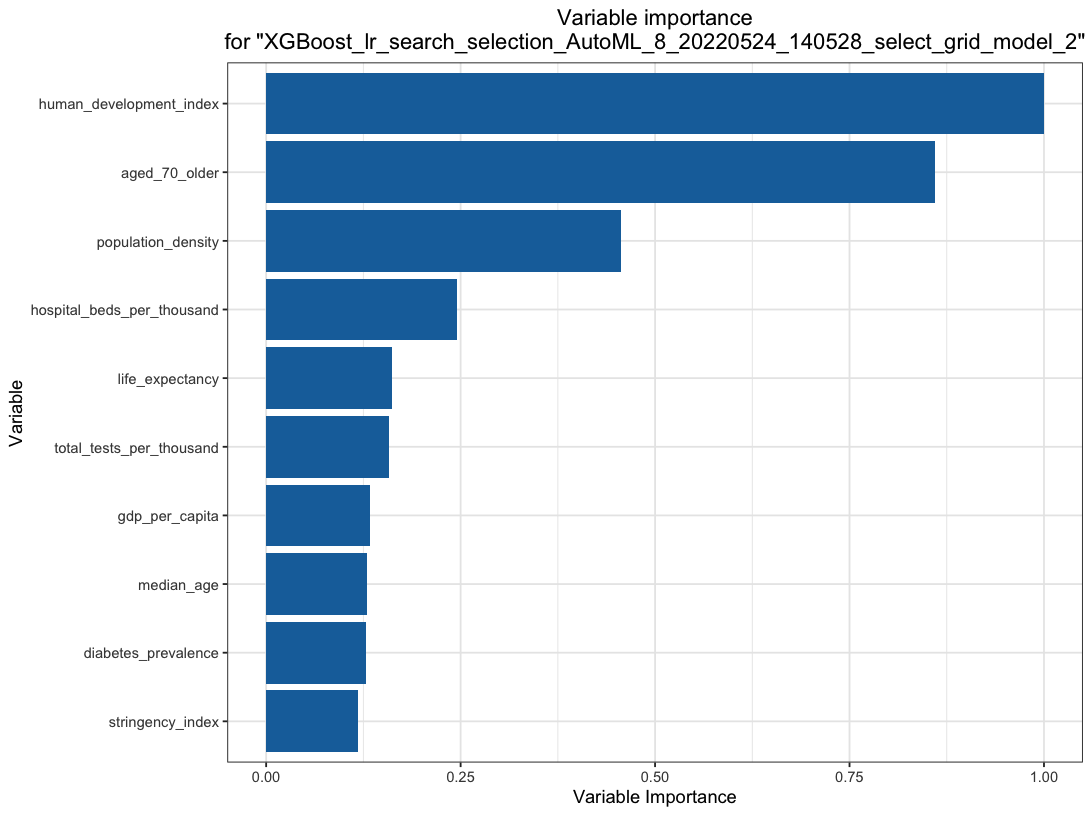

Automated Machine Learning

Automated Machine Learning

Automated Machine Learning

Assignment 2

-

Download the R program in class GitHub (under codes)

-

DPR_coviddata1.R

-

-

Can you download Taiwan and Germany COVID data in last three years?

-

Plot the data using the sample codes (use plot and ggplot2 functions)

Assignment 3

-

Download the R program in class GitHub (under codes)

-

DPR_caret01.R

-

-

Can you predict the chance of Tsai winning including additional variable "indep" (support for Taiwan's independence")?

-

What is the new accuracy? Better or worse?

Assignment 4 (optional for AP)

-

Download the R program in class GitHub (under codes)

-

DPR_tuber01.R

-

-

Can you download channel and video data from “中天新聞” and “關鍵時刻”?

-

Can you create WordClouds for selected video from each channel?

Illustration: Scraping YouTube data

This workshop demonstrates how to collect YouTube data using:

- API method (with Google developer account)

Illustration: Collecting YouTube data

This workshop demonstrates how to collect YouTube data using Google API:

Link to RStudio Cloud:

https://rstudio.cloud/project/4631380

- Need a GitHub and RStudio Account

Link to class GitHub:

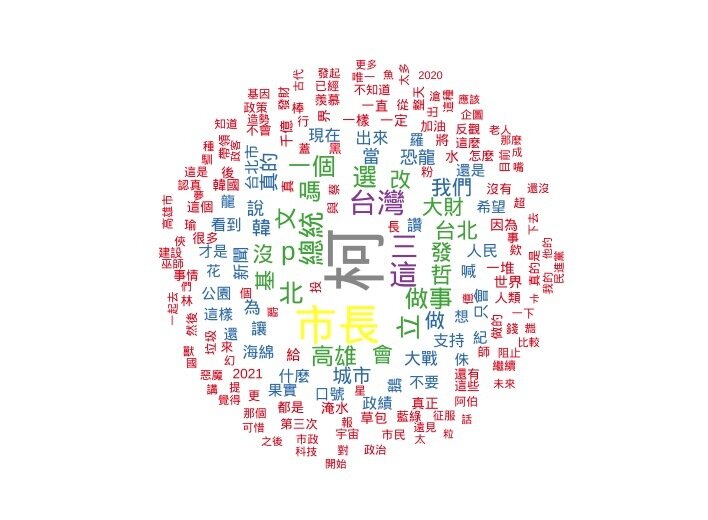

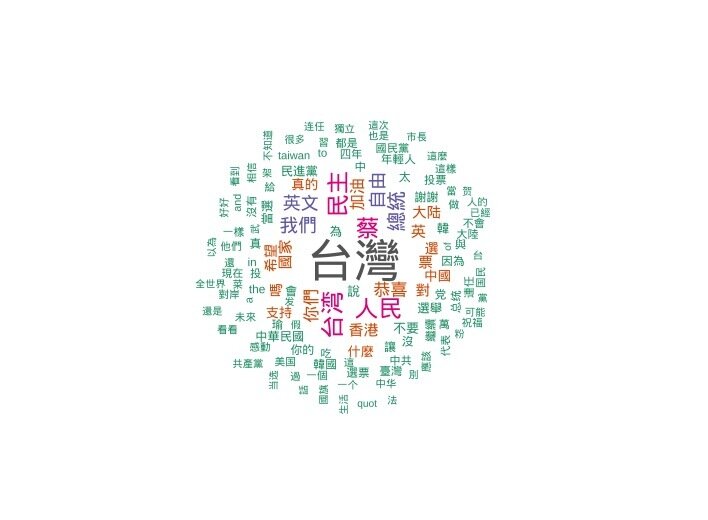

Wordcloud using YouTube data

Wordcloud using YouTube data

Illustration: Scraping Twitter data

This workshop demonstrates how to collect Twitter data using:

- API method (with Twitter developer account)

- Non-API method (using Python-based twint)

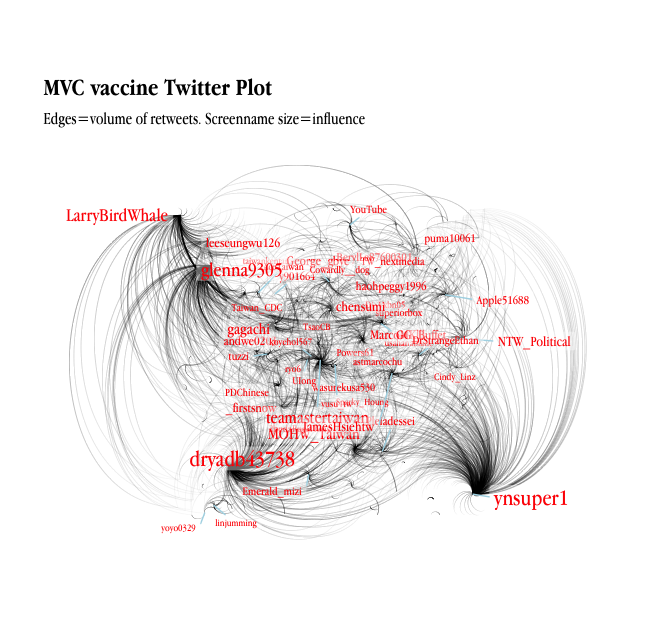

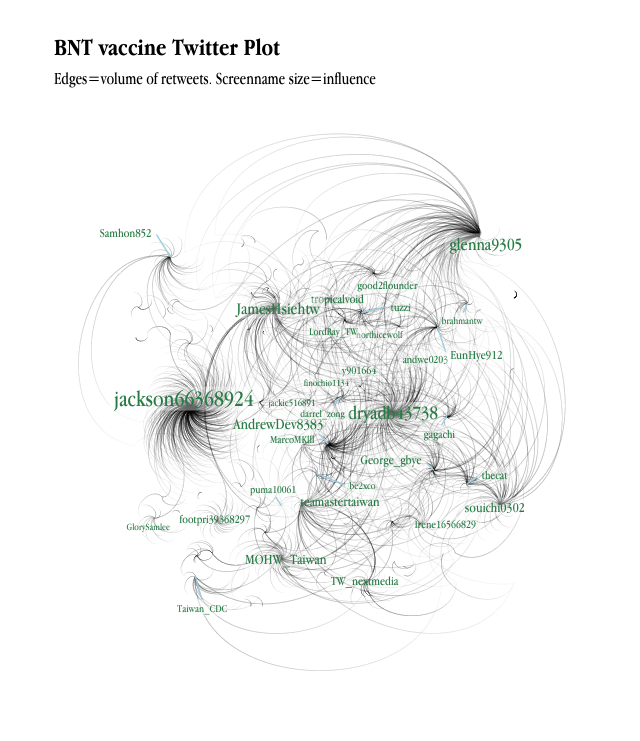

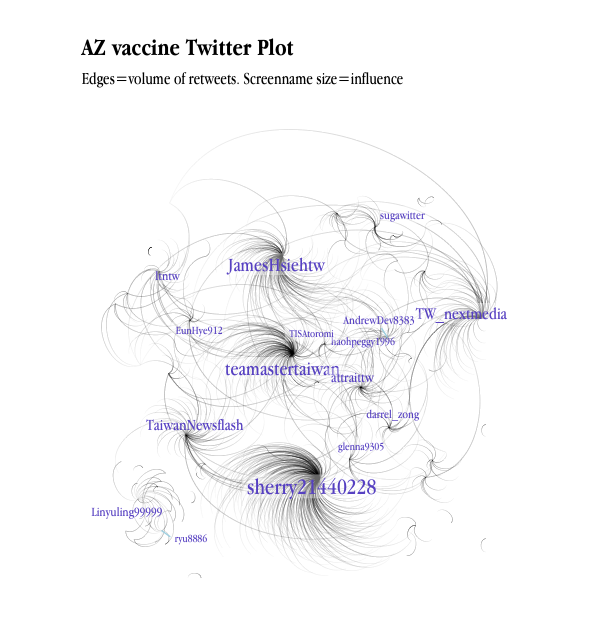

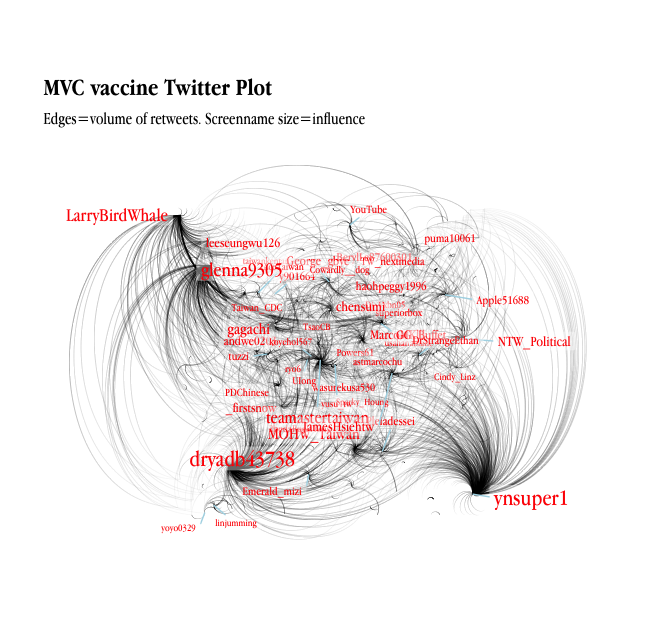

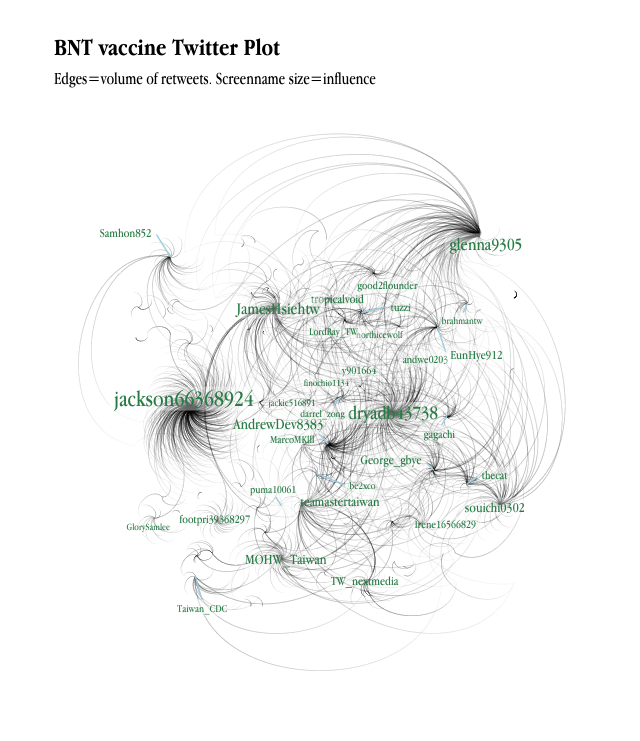

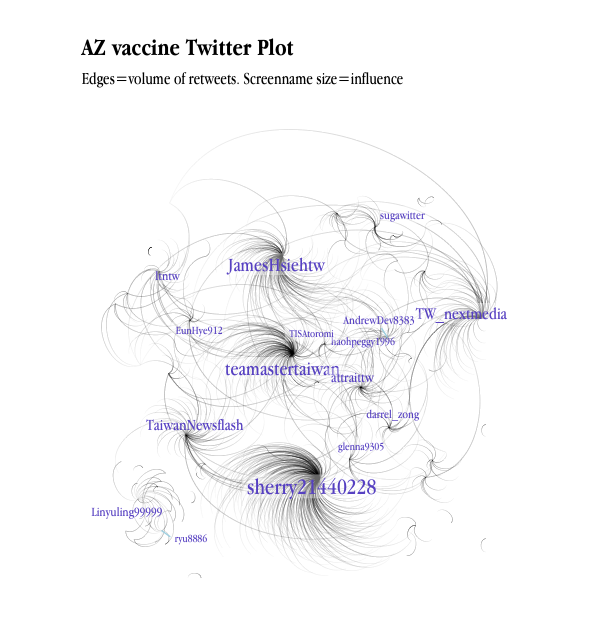

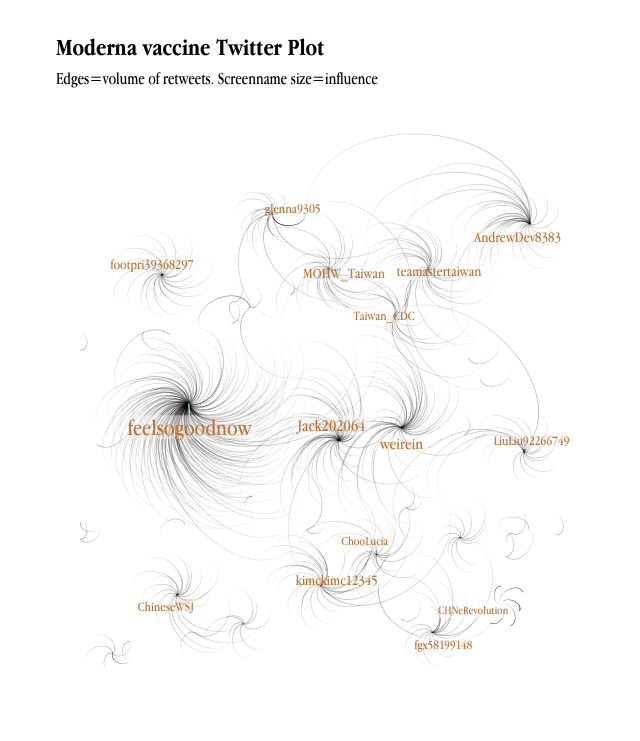

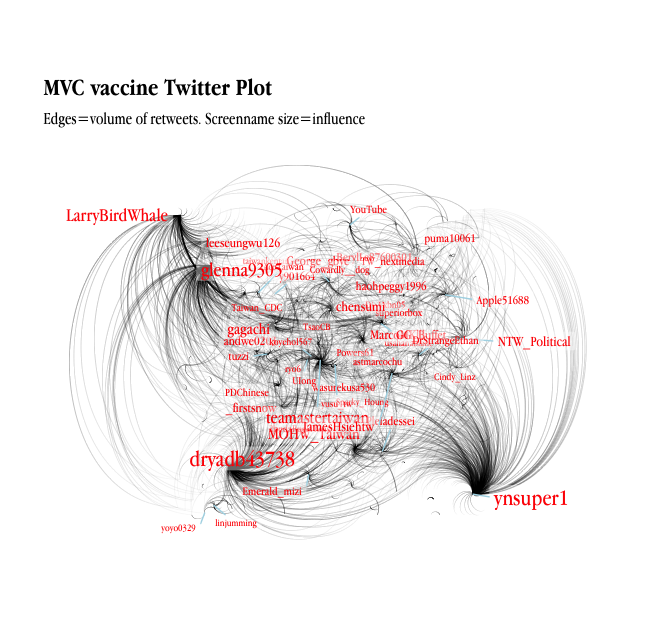

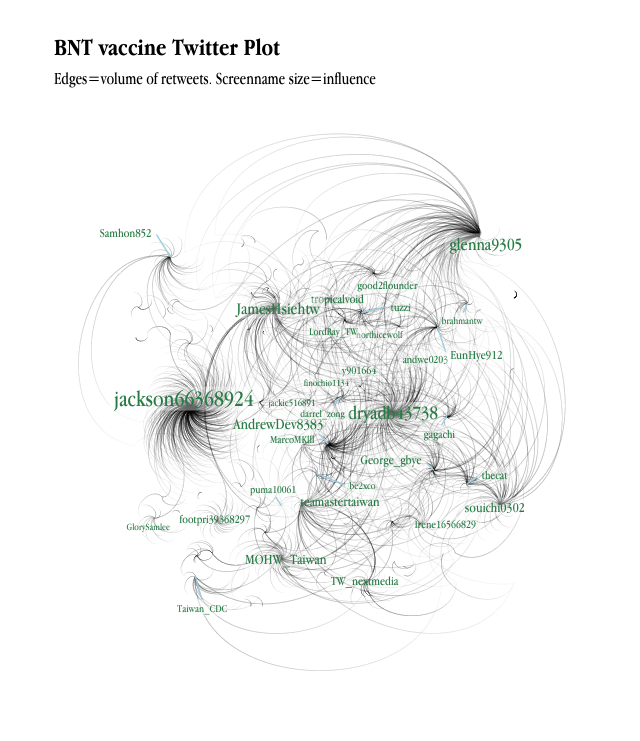

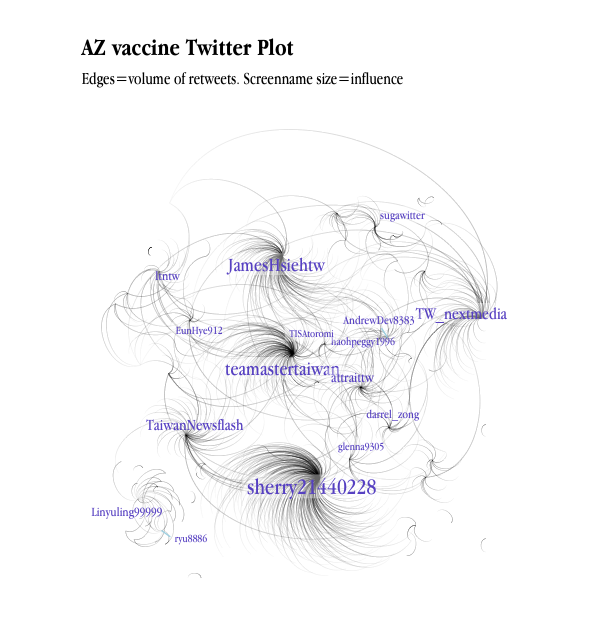

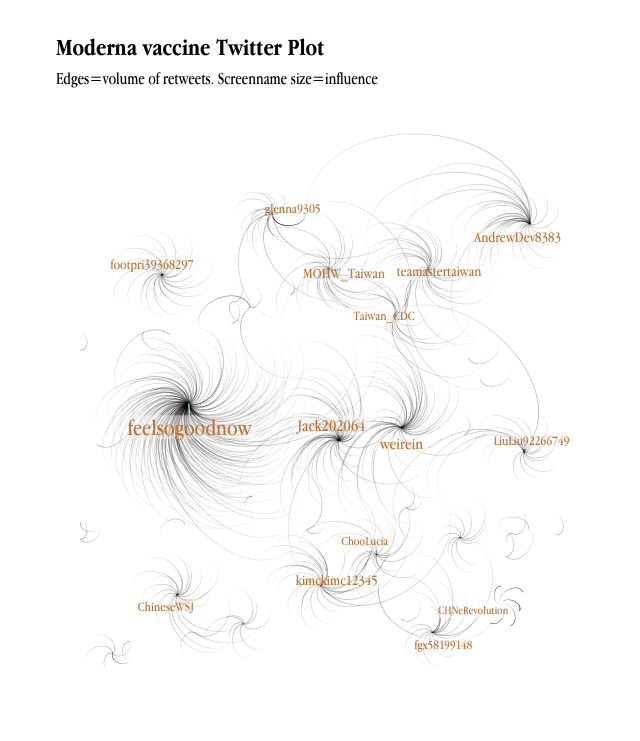

Analytics using Twitter data

Analytics using Twitter data

Ask me anything!